CSC/ECE 506 Spring 2012/1c cl


==Introduction to MISD==

In computing, MISD (multiple instruction, single data) is a type of parallel computing architecture in which multiple processing elements execute different instruction streams, and data is passed from one processing element to the next. Because data must pass from one processing element to the next, MISD suits only certain types of computation and is hard to apply in general. The MISD architecture has several bottlenecks<ref>http://www.cse.unsw.edu.au/~cs4211/04s1/seminars/rompo.ppt</ref>:

1. Low level of parallelism; 2. High synchronization overhead; 3. High bandwidth requirements; 4. CISC bottleneck; 5. High complexity

[[File:Cl11.jpg]]

Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. Fault-tolerant computers that execute the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may also be considered to belong to this type.

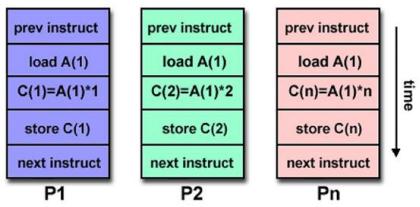

Here is a simple MISD coding sample<ref>http://www.cs.ucf.edu/courses/cot4810/fall04/presentations/Parallel_Computing.ppt</ref>:

[[File:C12.jpg]]

<pre>
Stream #1
Load R0,%1
Add $1,R0
Store R1,%1

Stream #2
Load R0,%1
MUL %1,R0
Store R1,%1

MISD
ADD_MUL_SUB $1,$4,$7,%1

SISD
Load R0,%1
ADD $1,R0
MUL $4,R0
STORE %1,R0
</pre>
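The idea behind the sample above can also be sketched in a high-level language. The following Python fragment is only an illustration, not a translation of the assembly: the function names (`add_one`, `mul_four`, `sub_seven`, `misd_step`) are hypothetical, and the constants 1, 4 and 7 merely echo the operands of `ADD_MUL_SUB`, whose exact semantics the source does not define. Each function stands for one instruction stream, and all of them are applied to the same datum.

```python
from typing import Callable, Dict


# Hypothetical instruction streams: each one performs a different operation.
def add_one(x: int) -> int:
    return x + 1


def mul_four(x: int) -> int:
    return x * 4


def sub_seven(x: int) -> int:
    return x - 7


STREAMS: Dict[str, Callable[[int], int]] = {
    "add": add_one,
    "mul": mul_four,
    "sub": sub_seven,
}


def misd_step(datum: int, streams: Dict[str, Callable[[int], int]]) -> Dict[str, int]:
    """Apply every instruction stream to the same single datum."""
    return {name: op(datum) for name, op in streams.items()}


if __name__ == "__main__":
    print(misd_step(10, STREAMS))
```

On real MISD hardware the streams would run on separate processing elements at the same time; the dictionary comprehension here only models the "many operations, one datum" relationship.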

==Multiprocessing and Classification==

===Multiprocessing===

-------------------------------------------

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor and/or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined.

Multiprocessing sometimes refers to the execution of multiple concurrent software processes in a system as opposed to a single process at any one instant. However, the terms multitasking or multiprogramming are more appropriate to describe this concept, which is implemented mostly in software, whereas multiprocessing is more appropriate to describe the use of multiple hardware CPUs. A system can be both multiprocessing and multiprogramming, only one of the two, or neither of the two.<ref>http://en.wikipedia.org/wiki/Parallel_computing</ref>

===Types of Multiprocessing<ref>http://en.wikipedia.org/wiki/Multiprocessing</ref>===

====Processor Symmetry====

-------------------------------------------

In a multiprocessing system, all CPUs may be equal, or some may be reserved for special purposes. A combination of hardware and operating-system software design considerations determines the symmetry (or lack thereof) in a given system. For example, hardware or software considerations may require that only one CPU respond to all hardware interrupts, whereas all other work in the system may be distributed equally among CPUs; or execution of kernel-mode code may be restricted to only one processor (either a specific processor, or only one processor at a time), whereas user-mode code may be executed in any combination of processors. Multiprocessing systems are often easier to design if such restrictions are imposed, but they tend to be less efficient than systems in which all CPUs are utilized.

Systems that treat all CPUs equally are called symmetric multiprocessing (SMP) systems. In systems where all CPUs are not equal, system resources may be divided in a number of ways, including asymmetric multiprocessing (ASMP), non-uniform memory access (NUMA) multiprocessing, and clustered multiprocessing.

====Processor coupling<ref>https://computing.llnl.gov/tutorials/parallel_comp/</ref>====

-------------------------------------------

Tightly-coupled multiprocessor systems contain multiple CPUs that are connected at the bus level. These CPUs may have access to a central shared memory (SMP or UMA), or may participate in a memory hierarchy with both local and shared memory (NUMA).

Chip multiprocessing, also known as multi-core computing, involves more than one processor placed on a single chip and can be thought of as the most extreme form of tightly-coupled multiprocessing. Mainframe systems with multiple processors are often tightly-coupled.

Loosely-coupled multiprocessor systems (often referred to as clusters) are based on multiple standalone single or dual processor commodity computers interconnected via a high speed communication system (Gigabit Ethernet is common). A Linux Beowulf cluster is an example of a loosely-coupled system.

Tightly-coupled systems perform better and are physically smaller than loosely-coupled systems, but have historically required greater initial investments and may depreciate rapidly; nodes in a loosely-coupled system are usually inexpensive commodity computers and can be recycled as independent machines upon retirement from the cluster.

Power consumption is also a consideration. Tightly-coupled systems tend to be much more energy efficient than clusters. This is because considerable economies can be realized by designing components to work together from the beginning in tightly-coupled systems, whereas loosely-coupled systems use components that were not necessarily intended specifically for use in such systems.

====Flynn's taxonomy for Instruction and Data Streams<ref>http://en.wikipedia.org/wiki/Flynn%27s_taxonomy</ref>==== | |||

------------------------------------------- | |||

[[File:Cl3.jpg]] | |||

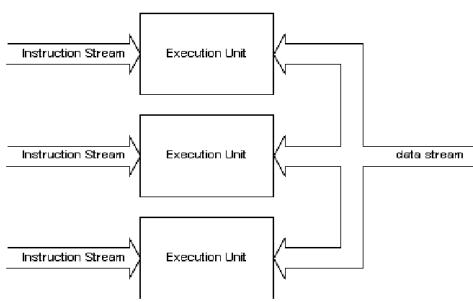

In multiprocessing, the processors can be used to execute a single sequence of instructions in multiple contexts )single-instruction, multiple-data or SIMD, often used in vector processing(, multiple sequences of instructions in a single context )multiple-instruction, single-data or MISD(, or multiple sequences of instructions in multiple | |||

contexts )multiple-instruction, multiple-data or MIMD). Flynn's taxonomy is a classification to describe instruction and data streams for parallel computing, proposed by Michael J. Flynn in 1966. The four classifications defined by Flynn are based upon the number of concurrent instruction (or control) and data streams available in the architecture: | |||

Single Instruction, Single Data stream (SISD) | |||

A sequential computer which exploits no parallelism in either the instruction or data streams. Single control unit (CU) fetches single Instruction Stream (IS) from memory. The CU then generates appropriate control signals to direct single processing element (PE) to operate on single Data Stream (DS) i.e. one operation at a time. Examples of SISD architecture are the traditional uniprocessor machines like a PC (currently manufactured PCs have multiple processors) or old mainframes. | |||

Single Instruction, Multiple Data streams (SIMD) | |||

A computer which exploits multiple data streams against a single instruction stream to perform operations which may be naturally parallelized. For example, an array processor or GPU. | |||

Multiple Instruction, Single Data stream (MISD) | |||

Multiple instructions operate on a single data stream. Uncommon architecture which is generally used for fault tolerance. Heterogeneous systems operate on the same data stream and must agree on the result. Examples include the Space Shuttle flight control computer.<ref>http://www.itswtech.org/Lec/Manal%28system%20programming%29/simeners_A/Multiprocessing_System_Cimenar.pdf</ref> | |||

Multiple Instruction, Multiple Data streams (MIMD) | |||

Multiple autonomous processors simultaneously executing different instructions on different data. Distributed systems are generally recognized to be MIMD architectures; either exploiting a single shared memory space or a distributed memory space. | |||

====MISD multiprocessing <ref>http://en.wikipedia.org/wiki/MISD</ref>==== | |||

------------------------------------------- | |||

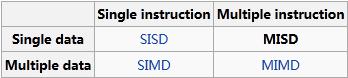

[[File:Cl51.jpg]] | |||

In computing, MISD)Multiple Instruction, Single Data (is a type of parallel computing architecture where many functional units perform different operations on the same data .Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. | |||

MISD multiprocessing offers mainly the advantage of redundancy, since multiple processing units perform the same tasks on the same data, reducing the chances of incorrect results if one of the units fails .MISD architectures may involve comparisons between processing units to detect failures .Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type. | |||

Apart from the redundant and fail-safe character of this type of multiprocessing, it has few advantages, and it is very expensive .It does not improve performance. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques .Specifically, they allow better scaling and use of computational resources than MISD does. | |||
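The comparison between redundant processing units described above can be sketched as a majority vote. This is a minimal illustration under stated assumptions, not a description of any real flight computer: the names `vote`, `run_redundant`, `healthy` and `faulty` are hypothetical, and the "failure" is simulated by a unit that returns an off-by-one result.

```python
from collections import Counter
from typing import Callable, Sequence


def vote(results: Sequence[int]) -> int:
    """Return the value produced by a strict majority of units, or raise."""
    value, count = Counter(results).most_common(1)[0]
    if count * 2 <= len(results):
        raise RuntimeError("no majority among redundant units")
    return value


def run_redundant(units: Sequence[Callable[[int], int]], datum: int) -> int:
    """Feed the same datum to every unit and mask a minority of faulty results."""
    return vote([unit(datum) for unit in units])


def healthy(x: int) -> int:
    return x * x


def faulty(x: int) -> int:
    return x * x + 1  # simulated hardware fault (assumption for illustration)


if __name__ == "__main__":
    print(run_redundant([healthy, healthy, faulty], 3))
```

With three units, one faulty result is outvoted by the two healthy ones; if no value reaches a strict majority, the sketch raises an error instead of guessing, which corresponds to detecting a failure without being able to mask it.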

==MISD Architecture Application==

Few actual examples of this class of parallel computer have ever existed. One example is the systolic array, such as the CMU iWarp [Borkar et al., 1990]. Another prominent example of MISD in computing is the set of Space Shuttle flight control computers. Research on MISD concentrates on a few conceivable uses: multiple frequency filters operating on a single signal stream, and multiple cryptography algorithms attempting to crack a single coded message.

===Systolic Arrays<ref>http://en.wikipedia.org/wiki/Systolic_array</ref>===

----------------------------------------------

====Definition and History====

In computer architecture, a systolic array is a pipe network arrangement of processing units called cells. It is a specialized form of parallel computing, where cells (i.e. processors) compute data and store it independently of each other. The systolic array paradigm, data-stream-driven by data counters, is the counterpart of the von Neumann paradigm, instruction-stream-driven by a program counter. Because a systolic array usually sends and receives multiple data streams, and multiple data counters are needed to generate these data streams, it supports data parallelism. The name derives from analogy with the regular pumping of blood by the heart.

H. T. Kung and Charles E. Leiserson published the first paper describing systolic arrays in 1978; however, the first machine known to have used a similar technique was the Colossus Mark II in 1944.

====Description====

A systolic array is composed of matrix-like rows of data processing units called cells. Data processing units (DPUs) are similar to central processing units (CPUs), except for the usual lack of a program counter, since operation is transport-triggered, i.e. triggered by the arrival of a data object. Each cell shares its information with its neighbours immediately after processing. The systolic array is often rectangular, with data flowing across the array between neighbouring DPUs, often with different data flowing in different directions. The data streams entering and leaving the ports of the array are generated by auto-sequencing memory units (ASMs). Each ASM includes a data counter. In embedded systems a data stream may also be input from and/or output to an external source.

An example of a systolic algorithm is one designed for matrix multiplication. One matrix is fed in a row at a time from the top of the array and is passed down the array; the other matrix is fed in a column at a time from the left-hand side of the array and passes from left to right. Dummy values are then passed in until each processor has seen one whole row and one whole column. At this point, the result of the multiplication is stored in the array and can be output a row or a column at a time, flowing down or across the array.
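The matrix multiplication scheme described above can be simulated in software. The sketch below is one common formulation (each cell keeps its own accumulator, inputs are skewed by one step per row or column, and zeros play the role of the dummy values); it assumes square matrices, and the function name `systolic_matmul` and the register names are hypothetical. It models the data movement step by step rather than any particular hardware.

```python
from typing import List

Matrix = List[List[int]]


def systolic_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Simulate an n x n systolic array computing a @ b for square matrices."""
    n = len(a)
    acc = [[0] * n for _ in range(n)]    # result accumulated inside each cell
    a_reg = [[0] * n for _ in range(n)]  # values travelling left to right
    b_reg = [[0] * n for _ in range(n)]  # values travelling top to bottom

    # 3n - 2 steps are enough for the last operands to reach cell (n-1, n-1).
    for t in range(3 * n - 2):
        # Shift every row one cell to the right and feed the left edge.
        for i in range(n):
            for j in range(n - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
            k = t - i  # row i is delayed by i steps (input skew)
            a_reg[i][0] = a[i][k] if 0 <= k < n else 0  # 0 acts as a dummy value
        # Shift every column one cell down and feed the top edge.
        for j in range(n):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
            k = t - j  # column j is delayed by j steps (input skew)
            b_reg[0][j] = b[k][j] if 0 <= k < n else 0
        # Every cell multiplies the pair it currently holds and accumulates.
        for i in range(n):
            for j in range(n):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Because of the skew, cell (i, j) sees the operand pair a[i][k] and b[k][j] together at step i + j + k, so after the final step each accumulator holds one element of the product.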

Systolic arrays are arrays of DPUs which are connected to a small number of nearest-neighbour DPUs in a mesh-like topology. DPUs perform a sequence of operations on data that flows between them. Because the traditional systolic array synthesis methods are algebraic, only uniform arrays with linear pipes can be obtained, so the architecture is the same in all DPUs. As a consequence, only applications with regular data dependencies can be implemented on classical systolic arrays. Like SIMD machines, clocked systolic arrays compute in "lock-step", with each processor alternating between compute and communicate phases. Systolic arrays with an asynchronous handshake between DPUs are called wavefront arrays. One well-known systolic array is Carnegie Mellon University's iWarp processor, which was manufactured by Intel. An iWarp system has a linear array processor connected by data buses going in both directions.

====Super Systolic Array====

The super systolic array is a generalization of the systolic array. Because the classical synthesis methods (algebraic, i.e. projection-based, synthesis) yield only uniform DPU arrays permitting only linear pipes, systolic arrays could be used only to implement applications with regular data dependencies. By using simulated annealing instead, Rainer Kress introduced the generalized systolic array: the super systolic array. Its use is not restricted to applications with regular data dependencies.

====Application to 2D Convolution<ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=4142414</ref>====

A bit-level super-systolic array can be used for 2D convolution, needing only 1-bit ports for each input or output sequence. In addition to arranging delays for data-flow synchrony, the design adopts a super-systolic array, in which each cell of the systolic array is itself organized as a systolic array, to handle bit-level data flow and operations on bit data. The derived super-systolic array for 2D convolution was synthesized using Synopsys Design Compiler with a Hynix 0.35 µm cell library and compared with a conventional word-level systolic array for 2D convolution. The bit-level super-systolic design is very compact: it needs only 1-bit ports for each I/O sequence instead of the n-bit ports of the word-level design, and it reduces area without a time penalty on the output.
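For reference, the arithmetic that such an array computes can be written as a plain word-level 2D convolution. The sketch below is only the mathematical operation, not the bit-level hardware design from the cited paper; the function name `conv2d_valid` is hypothetical, and it assumes a "valid" convolution (no padding) with the kernel flipped, as in the standard definition.

```python
from typing import List

Matrix = List[List[int]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Word-level 2D convolution without padding (kernel is flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    oh = len(image) - kh + 1
    ow = len(image[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            out[y][x] = sum(
                image[y + i][x + j] * kernel[kh - 1 - i][kw - 1 - j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(conv2d_valid(img, [[1, 0], [0, -1]]))
```

Every output element is an independent sum of products over a small window, which is the kind of regular data dependency that maps well onto a systolic arrangement of multiply-accumulate cells.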

===Network application with MISD architecture<ref>http://wiki.cs.unm.edu/ssl/lib/exe/fetch.php/papers:bridges11exploiting.pdf </ref>=== | |||

------------------------------------------- | |||

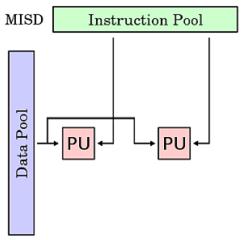

A number of important system services need strong scaling, where a fixed workload is executed more quickly with increased core counts. This is particularly true for data-intensive, network-oriented system services because network interface card (NIC) line rates continue to increase but the individual cores that service them are not getting any faster. Replicated work approach based on a multiple-instruction/single-data (MISD) execution model seems to be a promising solution to take advantage of unexploited parallelization opportunities in multi-core systems. This approach is particularly important in the network stack, as it is needed to enable strongly scalable network services. | A number of important system services need strong scaling, where a fixed workload is executed more quickly with increased core counts. This is particularly true for data-intensive, network-oriented system services because network interface card (NIC) line rates continue to increase but the individual cores that service them are not getting any faster. Replicated work approach based on a multiple-instruction/single-data (MISD) execution model seems to be a promising solution to take advantage of unexploited parallelization opportunities in multi-core systems. This approach is particularly important in the network stack, as it is needed to enable strongly scalable network services. | ||

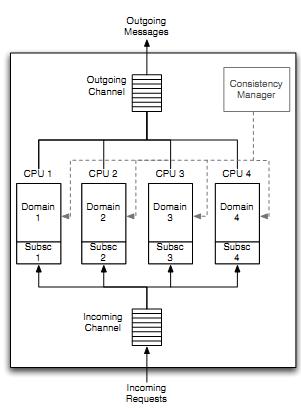

A multiple instruction/single data (MISD) execution model (i.e. replicated work) is used to provide ne-grained data consistency between cores and to eliminate expensive inter-core interactions. In this approach, shown in Figure 1(a), state is replicated into domains on separate cores, and requests that modify shared state are broadcast to every domain using a ringbuffer -based channel abstraction. The | A multiple instruction/single data (MISD) execution model (i.e. replicated work) is used to provide ne-grained data consistency between cores and to eliminate expensive inter-core interactions. In this approach, shown in Figure 1(a), state is replicated into domains on separate cores, and requests that modify shared state are broadcast to every domain using a ringbuffer -based channel abstraction. | ||

The replica that dequeues a request becomes the primary replica for that request and is responsible for fully processing it, including any updates with globally visible side effects (e.g. data delivery, packet reconstruction, acknowledgment generation). Other replicas that process the request will, on the other hand, only partially process each request to maintain state consistency. | |||

MISD approach is particularly appropriate for many network services because it parallelizes expensive per-byte processing (e.g. data copies, encryption/decryption, and error correction) and replicates per-header processing costs that are known to be small. It also retains the reduced locking and caching costs of systems like Barrelfish while adding the ability to perform fine-grained updates to logically shared state. | |||
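The replicated-work scheme described above can be illustrated with a small single-threaded simulation. Everything in it is an assumption made for illustration: the `Domain` class, the `broadcast` and `run` helpers, the use of a sequence number as the replicated state, and the round-robin choice of primary, which merely stands in for "whichever replica dequeues the request first". It is not the implementation from the cited paper.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Sequence, Tuple


@dataclass
class Domain:
    """One replica of the service state, pinned to one (simulated) core."""
    core_id: int
    next_seq: int = 0  # replicated per-header state, kept identical everywhere
    inbox: Deque[Tuple[int, bytes]] = field(default_factory=deque)
    delivered: List[bytes] = field(default_factory=list)

    def process(self, primary_id: int) -> None:
        seq, payload = self.inbox.popleft()
        self.next_seq = seq + 1  # cheap state update, done by every replica
        if self.core_id == primary_id:
            # Expensive per-byte work and visible side effects: primary only.
            self.delivered.append(payload.upper())


def broadcast(domains: Sequence[Domain], seq: int, payload: bytes) -> None:
    """Enqueue the same request on every domain's channel."""
    for domain in domains:
        domain.inbox.append((seq, payload))


def run(payloads: Sequence[bytes], n_cores: int = 3) -> List[Domain]:
    domains = [Domain(core_id=i) for i in range(n_cores)]
    for seq, payload in enumerate(payloads):
        broadcast(domains, seq, payload)
        primary = seq % n_cores  # assumption: round-robin primary selection
        for domain in domains:
            domain.process(primary)
    return domains


if __name__ == "__main__":
    for d in run([b"a", b"b", b"c", b"d"]):
        print(d.core_id, d.next_seq, d.delivered)
```

All replicas end with the same `next_seq`, so the logically shared state stays consistent without locks, while the per-byte work (here, `upper()`) is spread across the cores.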

[[File:Cl4.jpg]] | |||

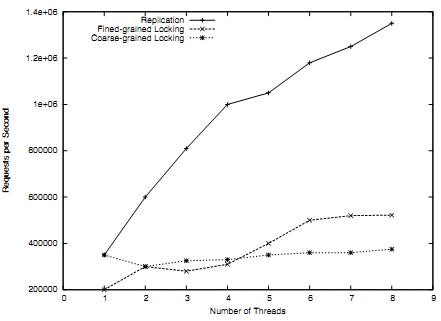

To examine the potential for this approach compared to lock-based and atomic instruction-based MIMD approaches, A simple synthetic test has been constructed. In this test, processing each request requires some amount of work that can be done by one core without synchronization or replication (i.e. parallelizable work), and some work that must be synchronized or replicated. Specially, the parallelizable work is a set of memory updates done on per-core memory, while the synchronized work is the set of memory up-dates that must be performed. Figure 1(b) shows the potential performance advantages of this approach using a 10:1 ratio of parallelizable to replicated work; we chose this ratio based on our studies of production TCP/IP stacks. As can be seen, the lock-based model scales remarkably poorly and the atomic instruction approach is only slightly better. | |||
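A back-of-the-envelope model helps to see why a 10:1 ratio leaves room for scaling. The sketch below is an idealized assumption, not the measurement behind Figure 1(b): it supposes that the parallelizable work of each request is done by exactly one of n cores, that every core repeats the replicated work, and that channel and cache costs are zero. The function name `replicated_speedup` and its parameters are hypothetical.

```python
def replicated_speedup(n_cores: int, parallel: float = 10.0, replicated: float = 1.0) -> float:
    """Idealized speedup of replicated work over one core.

    Per request, a single core spends parallel + replicated units of time.
    With n cores, each core handles 1/n of the parallel work but still
    performs the replicated work for every request.
    """
    single_core = parallel + replicated
    per_request = parallel / n_cores + replicated
    return single_core / per_request


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(n, round(replicated_speedup(n), 2))
```

Under these assumptions the speedup grows with the core count but stays below (parallel + replicated) / replicated, which is 11 for the 10:1 ratio, because the replicated part is never divided among cores.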

[[File:Cl5.jpg]]

===iWarp project by CMU<ref>http://www.cs.cmu.edu/~iwarp/</ref>===

-------------------------------------------

The iWarp project was started in 1988 to investigate issues involved in building and using high-performance computer systems with powerful communication support. The project led to the construction of the iWarp machines, jointly developed by Carnegie Mellon University and Intel Corporation.

[[File:Cl7.jpg]][[File:Cl8.jpg]]

The basic building block of the iWarp system is a full-custom VLSI component integrating a LIW microprocessor, a network interface and a switching node into a single chip of 1.2 cm x 1.2 cm silicon. Intel Corporation announced the iWarp systems as a product in 1989 and has since built iWarp systems with over 1500 nodes. The first iWarp prototype system was delivered to Carnegie Mellon in Summer 1990, and in Fall CMU received the first 64-cell systems. All three full-speed production systems were delivered in 1991. With the creation of the Intel Supercomputing Systems Division in Summer 1992, the iWarp know-how was merged with the iPSC product line.

[[File:Cl91.jpg]][[File:Cl10.jpg]]

Intel kept iWarp as a product but stopped actively marketing it. As of the start of 1995, all three iWarp systems at CMU were still in daily use. Surprisingly, there were still a few applications (e.g. in real-time vision) for which iWarp was the best machine three years after delivery. The high-speed static memory and the high-performance, low-latency communication system make iWarp a well-suited target for research efforts and many "proof of concept" applications.

==References==

<references />

Latest revision as of 02:04, 3 February 2012

Introduction to MISD

In computing, MISD (multiple instruction, single data) is a type of parallel computing architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. The requirement that data is passed from one processing element to the next means that it is restricted to a certain type of computations, but is hard to apply in general. There are some bottle neck of MISD architecture<ref>http://www.cse.unsw.edu.au/~cs4211/04s1/seminars/rompo.ppt</ref>:

1, Low level of parallelism ; 2, High synchronizations; 3, High bandwidth required ; 4, CISC bottleneck ; 5, High complexity

Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type.

Here is a simple MISD coding sample<ref>http://www.cs.ucf.edu/courses/cot4810/fall04/presentations/Parallel_Computing.ppt</ref>

Stream #1 Load R0,%1 Add $1,R0 Store R1,%1 Stream #2 Load R0,%1 MUL %1,R0 Store R1,%1 MISD ADD_MUL_SUB $1,$4,$7,%1 SISD Load R0,%1 ADD $1,R0 MUL $4,R0 STORE %1,R0

Multiprocessing and Classification

Multiprocessing

Multiprocessing is the use of two or more central processing units) CPUs (within a single computer system .The term also refers to the ability of a system to support more than one processor and/or the ability to allocate tasks between them .There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined.

Multiprocessing sometimes refers to the execution of multiple concurrent software processes in a system as opposed to a single process at any one instant .However, the terms multitasking or multiprogramming are more appropriate to describe this concept, which is implemented mostly in software, whereas multiprocessing is more appropriate to describe the use of multiple hardware CPUs .A system can be both multiprocessing and multiprogramming, only one of the two, or neither of the two.<ref>http://en.wikipedia.org/wiki/Parallel_computing</ref>

Types of Multiprocessing<ref>http://en.wikipedia.org/wiki/Multiprocessing</ref>

Processor Symmetry

In a multiprocessing system, all CPUs may be equal, or some may be reserved for special purposes .A combination of hardware and operating-system software design considerations determine the symmetry )or lack thereof (in a given system .For example, hardware or software considerations may require that only one CPU respond to all hardware interrupts, whereas all other work in the system may be distributed equally among CPUs; or execution of kernel-mode code may be restricted to only one processor )either a specific processor, or only one processor at a time(, whereas user-mode code may be executed in any combination of processors .Multiprocessing systems are often easier to design if such restrictions are imposed, but they tend to be less efficient than systems in which all CPUs are utilized.

Systems that treat all CPUs equally are called symmetric multiprocessing)SMP (systems .In systems where all CPUs are not equal, system resources may be divided in a number of ways, including asymmetric multiprocessing )ASMP(, non-uniform memory access)NUMA(multiprocessing, and clustered multiprocessing.

Processor coupling<ref>https://computing.llnl.gov/tutorials/parallel_comp/</ref>

Tightly-coupled multiprocessor systems contain multiple CPUs that are connected at the bus level .These CPUs may have access to a central shared memory )SMP or UMA(, or may participate in a memory hierarchy with both local and shared memory )NUMA.( Chip multiprocessors, also known as multi-core computing, involves more than one processor placed on a single chip and can be thought of the most extreme form of tightly-coupled multiprocessing .Mainframe systems with multiple processors are often tightly-coupled.

Loosely-coupled multiprocessor systems )often referred to as clusters (are based on multiple standalone single or dual processor commodity computers interconnected via a high speed communication system )Gigabit Ethernet is common .(A Linux Beowulf cluster is an example of a loosely-coupled system.

Tightly-coupled systems perform better and are physically smaller than loosely-coupled systems, but have historically required greater initial investments and may depreciate rapidly; nodes in a loosely-coupled system are usually inexpensive commodity computers and can be recycled as independent machines upon retirement from the cluster.

Power consumption is also a consideration .Tightly-coupled systems tend to be much more energy efficient than clusters .This is because considerable economies can be realized by designing components to work together from the beginning in tightly-coupled systems, whereas loosely-coupled systems use components that were not necessarily intended specifically for use in such systems.

Flynn's taxonomy for Instruction and Data Streams<ref>http://en.wikipedia.org/wiki/Flynn%27s_taxonomy</ref>

In multiprocessing, the processors can be used to execute a single sequence of instructions in multiple contexts )single-instruction, multiple-data or SIMD, often used in vector processing(, multiple sequences of instructions in a single context )multiple-instruction, single-data or MISD(, or multiple sequences of instructions in multiple contexts )multiple-instruction, multiple-data or MIMD). Flynn's taxonomy is a classification to describe instruction and data streams for parallel computing, proposed by Michael J. Flynn in 1966. The four classifications defined by Flynn are based upon the number of concurrent instruction (or control) and data streams available in the architecture:

Single Instruction, Single Data stream (SISD) A sequential computer which exploits no parallelism in either the instruction or data streams. Single control unit (CU) fetches single Instruction Stream (IS) from memory. The CU then generates appropriate control signals to direct single processing element (PE) to operate on single Data Stream (DS) i.e. one operation at a time. Examples of SISD architecture are the traditional uniprocessor machines like a PC (currently manufactured PCs have multiple processors) or old mainframes.

Single Instruction, Multiple Data streams (SIMD) A computer which exploits multiple data streams against a single instruction stream to perform operations which may be naturally parallelized. For example, an array processor or GPU.

Multiple Instruction, Single Data stream (MISD) Multiple instructions operate on a single data stream. Uncommon architecture which is generally used for fault tolerance. Heterogeneous systems operate on the same data stream and must agree on the result. Examples include the Space Shuttle flight control computer.<ref>http://www.itswtech.org/Lec/Manal%28system%20programming%29/simeners_A/Multiprocessing_System_Cimenar.pdf</ref>

Multiple Instruction, Multiple Data streams (MIMD) Multiple autonomous processors simultaneously executing different instructions on different data. Distributed systems are generally recognized to be MIMD architectures; either exploiting a single shared memory space or a distributed memory space.

MISD multiprocessing <ref>http://en.wikipedia.org/wiki/MISD</ref>

In computing, MISD)Multiple Instruction, Single Data (is a type of parallel computing architecture where many functional units perform different operations on the same data .Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline.

MISD multiprocessing offers mainly the advantage of redundancy, since multiple processing units perform the same tasks on the same data, reducing the chances of incorrect results if one of the units fails .MISD architectures may involve comparisons between processing units to detect failures .Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type.

Apart from the redundant and fail-safe character of this type of multiprocessing, it has few advantages, and it is very expensive .It does not improve performance. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques .Specifically, they allow better scaling and use of computational resources than MISD does.

MISD Architecture Application

Few actual examples of this class of parallel computer have ever existed. One example of this machine is the systolic array, such as the CMU iWarp[Borkaret al., 1990]. Another prominent example of MISD in computing are the Space Shuttle flight control computers. The research on MISD concentrates on some conceivable usage: Multiple frequency filters operating on a single signal stream, Multiple cryptography algorithms, and Attempting to crack a single coded message.

Systolic Arrays<ref>http://en.wikipedia.org/wiki/Systolic_array</ref>

Definition and History

In computer architecture, a systolic array is a pipe network arrangement of processing units called cells. It is a specialized form of parallel computing, where cells (i.e. processors), compute data and store it independently of each other. The systolic array paradigm, data-stream-driven by data counters, is the counterpart of the von Neumann paradigm, instruction-stream-driven by a program counter. Because a systolic array usually sends and receives multiple data streams, and multiple data counters are needed to generate these data streams, it supports data parallelism. The name derives from analogy with the regular pumping of blood by the heart. H. T. Kung and Charles E. Leiserson published the first paper describing systolic arrays in 1978; however, the first machine known to have used a similar technique was the Colossus Mark II in 1944.

Description

A systolic array is composed of matrix-like rows of data processing units called cells. Data processing units (DPUs) are similar to central processing units (CPU)s, (except for the usual lack of a program counter, since operation is transport-triggered, i.e., by the arrival of a data object). Each cell shares the information with its neighbours immediately after processing. The systolic array is often rectangular where data flows across the array between neighbour DPUs, often with different data flowing in different directions. The data streams entering and leaving the ports of the array are generated by auto-sequencing memory units, ASMs. Each ASM includes a data counter. In embedded systems a data stream may also be input from and/or output to an external source.

Matrix multiplication is a typical example of a systolic algorithm. One matrix is fed in a row at a time from the top of the array and is passed down the array, while the other matrix is fed in a column at a time from the left-hand side of the array and passes from left to right. Dummy values are then passed in until each processor has seen one whole row and one whole column. At this point, the result of the multiplication is stored in the array and can be output a row or a column at a time, flowing down or across the array.
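The matrix multiplication scheme described above can be simulated in software. The following is a minimal sketch, assuming an output-stationary array in which cell (i, j) accumulates one entry of the product and zeros serve as the dummy values. The function name `systolic_matmul` and the register names are hypothetical, and the simulation runs the lock-step communicate and compute phases sequentially rather than on parallel hardware.

```python
def systolic_matmul(A, B):
    """Simulate an n x p systolic array computing A (n x m) times B (m x p).

    Entries of A enter from the left edge and move right; entries of B
    enter from the top edge and move down. Inputs are skewed so that
    cell (i, j) sees A[i][k] and B[k][j] on the same clock tick.
    """
    n, m, p = len(A), len(B), len(B[0])
    acc = [[0] * p for _ in range(n)]    # result held in each cell
    a_reg = [[0] * p for _ in range(n)]  # values travelling rightwards
    b_reg = [[0] * p for _ in range(n)]  # values travelling downwards

    # The last cell (n-1, p-1) receives its final operands at tick m+n+p-3.
    for t in range(m + n + p - 2):
        # Communicate phase: every cell latches its neighbour's old value.
        new_a = [[0] * p for _ in range(n)]
        new_b = [[0] * p for _ in range(n)]
        for i in range(n):
            for j in range(p):
                if j == 0:
                    k = t - i  # row i of the array is delayed by i ticks
                    new_a[i][j] = A[i][k] if 0 <= k < m else 0  # 0 = dummy
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    k = t - j  # column j of the array is delayed by j ticks
                    new_b[i][j] = B[k][j] if 0 <= k < m else 0  # 0 = dummy
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b

        # Compute phase: each cell multiplies its two inputs and accumulates.
        for i in range(n):
            for j in range(p):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc

if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Each cell only ever reads from its left and upper neighbours, which is the nearest-neighbour communication pattern that makes the design systolic.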

Systolic arrays are arrays of DPUs that are connected to a small number of nearest-neighbour DPUs in a mesh-like topology. DPUs perform a sequence of operations on the data that flows between them. Because the traditional systolic array synthesis methods are algebraic, only uniform arrays with linear pipes can be obtained, so the architecture is the same in all DPUs. Consequently, only applications with regular data dependencies can be implemented on classical systolic arrays. Like SIMD machines, clocked systolic arrays compute in "lock-step", with each processor alternating between compute and communicate phases. Systolic arrays with asynchronous handshaking between DPUs are called wavefront arrays. One well-known systolic array is Carnegie Mellon University's iWarp processor, which was manufactured by Intel. An iWarp system has a linear array processor connected by data buses going in both directions.

Super Systolic Array

The super systolic array is a generalization of the systolic array. Because the classical synthesis methods (algebraic, i.e. projection-based, synthesis) yield only uniform DPU arrays permitting only linear pipes, systolic arrays could be used only to implement applications with regular data dependencies. By using simulated annealing instead, Rainer Kress introduced the generalized systolic array: the super systolic array. Its use is not restricted to applications with regular data dependencies.

Application to 2D Convolution <ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=4142414</ref>

A bit-level super-systolic array can be used for 2D convolution, needing only 1-bit ports for each input or output sequence. In addition to arranging delays for data-flow synchrony, the design adopts a super-systolic array, in which each cell of the systolic array is itself organized as a systolic array, to perform the data flow and operations at the bit level. The derived super-systolic array for 2D convolution was synthesized using Synopsys Design Compiler with a Hynix 0.35 µm cell library and compared with a conventional word-level systolic array for 2D convolution. The bit-level super-systolic design is very compact: it needs only 1-bit ports for each I/O sequence instead of the n-bit ports of a word-level design, and it reduces the area requirement without a time penalty on the output.

Network application with MISD architecture<ref>http://wiki.cs.unm.edu/ssl/lib/exe/fetch.php/papers:bridges11exploiting.pdf </ref>

A number of important system services need strong scaling, where a fixed workload is executed more quickly with increased core counts. This is particularly true for data-intensive, network-oriented system services, because network interface card (NIC) line rates continue to increase while the individual cores that service them are not getting any faster. A replicated work approach based on a multiple-instruction/single-data (MISD) execution model seems to be a promising way to take advantage of unexploited parallelization opportunities in multi-core systems. This approach is particularly important in the network stack, where it is needed to enable strongly scalable network services.

A multiple instruction/single data (MISD) execution model (i.e. replicated work) is used to provide fine-grained data consistency between cores and to eliminate expensive inter-core interactions. In this approach, shown in Figure 1(a) of the cited paper, state is replicated into domains on separate cores, and requests that modify shared state are broadcast to every domain using a ring buffer-based channel abstraction.

The replica that dequeues a request becomes the primary replica for that request and is responsible for fully processing it, including any updates with globally visible side effects (e.g. data delivery, packet reconstruction, acknowledgment generation). Other replicas that process the request will, on the other hand, only partially process each request to maintain state consistency.
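The replicated work model described above can be illustrated with a small single-threaded simulation. This is a sketch under stated assumptions, not the implementation from the cited paper: the `Replica` class, the `broadcast` and `run` helpers, and the use of a `deque` in place of the ring-buffer channel are all hypothetical. In particular, the primary replica is chosen here by sequence number modulo the replica count, which is only a stand-in for "the replica that dequeues the request".

```python
from collections import deque

class Replica:
    """One domain holding its own copy of the shared state."""

    def __init__(self, rid):
        self.rid = rid
        self.inbox = deque()   # stands in for the ring-buffer channel
        self.next_seq = 0      # replicated state: next expected sequence number
        self.delivered = []    # globally visible side effects done by this replica

    def process(self, request, is_primary):
        seq, payload = request
        # Cheap per-header work: every replica repeats it so that all
        # copies of the state stay consistent.
        self.next_seq = seq + 1
        if is_primary:
            # Expensive per-byte work and side effects happen only once,
            # on the primary. upper() is a placeholder for that work.
            self.delivered.append(payload.upper())

def broadcast(replicas, request):
    """Send a state-modifying request to every domain."""
    for replica in replicas:
        replica.inbox.append(request)

def run(replicas):
    """Drain all inboxes; assumed primary rule: seq modulo replica count."""
    while any(r.inbox for r in replicas):
        for r in replicas:
            if r.inbox:
                request = r.inbox.popleft()
                is_primary = request[0] % len(replicas) == r.rid
                r.process(request, is_primary)

if __name__ == "__main__":
    replicas = [Replica(0), Replica(1)]
    for seq, payload in enumerate(["syn", "data", "more", "fin"]):
        broadcast(replicas, (seq, payload))
    run(replicas)
    for r in replicas:
        print(r.rid, r.next_seq, r.delivered)
```

After the run, every replica holds the same `next_seq`, while each payload has been fully processed by exactly one replica, which mirrors the split between replicated per-header work and parallelized per-byte work.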

The MISD approach is particularly appropriate for many network services because it parallelizes expensive per-byte processing (e.g. data copies, encryption/decryption, and error correction) and replicates per-header processing costs that are known to be small. It also retains the reduced locking and caching costs of systems like Barrelfish while adding the ability to perform fine-grained updates to logically shared state.

To examine the potential of this approach compared with lock-based and atomic instruction-based MIMD approaches, the authors constructed a simple synthetic test. In this test, processing each request requires some amount of work that can be done by one core without synchronization or replication (i.e. parallelizable work), and some work that must be synchronized or replicated. Specifically, the parallelizable work is a set of memory updates done on per-core memory, while the synchronized work is a set of memory updates that must be synchronized or replicated. Figure 1(b) of the cited paper shows the potential performance advantages of this approach using a 10:1 ratio of parallelizable to replicated work; the authors chose this ratio based on their studies of production TCP/IP stacks. In that test, the lock-based model scales remarkably poorly and the atomic instruction approach is only slightly better.

iWarp project by CMU<ref>http://www.cs.cmu.edu/~iwarp/</ref>

The iWarp project was started in 1988 to investigate issues involved in building and using high-performance computer systems with powerful communication support. The project led to the construction of the iWarp machines, jointly developed by Carnegie Mellon University and Intel Corporation.

The basic building block of the iWarp system is a full custom VLSI component integrating a LIW microprocessor, a network interface and a switching node into a single chip of 1.2 cm x 1.2 cm silicon. Intel Corporation announced the iWarp systems as a product in 1989 and has built iWarp systems with over 1500 nodes since then. The first iWarp prototype system was delivered to Carnegie Mellon in summer 1990, and in the fall CMU received the first 64-cell systems. All three full-speed production systems were delivered in 1991. With the creation of the Intel Supercomputing Systems Division in summer 1992, the iWarp know-how was merged with the iPSC product line.

Intel kept iWarp as a product but stopped actively marketing it. As of the start of 1995, all three iWarp systems at CMU were still in daily use. Surprisingly, there were still a few applications (e.g. in real-time vision) for which iWarp was the best machine three years after it had been delivered. The high-speed static memory and the high-performance, low-latency communication system make iWarp a very well-suited target for research efforts and many "proof of concept" applications.

References

<references />