CSC/ECE 506 Spring 2011/ch11 sw: Difference between revisions

| (33 intermediate revisions by the same user not shown) | |||

| Line 39: | Line 39: | ||

Either SRAM or DRAM can be used for storage of the directory information. Based on these design options, the next section discusses the DSM cache coherence protocols. | Either SRAM or DRAM can be used for storage of the directory information. Based on these design options, the next section discusses the DSM cache coherence protocols. | ||

== DSM Cache Coherence Protocol == | == DSM Cache Coherence Protocol: Scalable Coherent Interface == | ||

There are variants of three major cache coherence protocols that have been implemented in commercial DSM machines, and other protocols have been proposed in the research community. The protocols differ in terms of directory organization (and therefore directory memory overhead), the number and types of messages exchanged between nodes, the direct protocol processing overhead, and inherent scalability features. | There are variants of three major cache coherence protocols that have been implemented in commercial DSM machines, and other protocols have been proposed in the research community. The protocols differ in terms of directory organization (and therefore directory memory overhead), the number and types of messages exchanged between nodes, the direct protocol processing overhead, and inherent scalability features. | ||

| Line 47: | Line 47: | ||

SCI defines a chain-directory based protocol. Memory that can be coherently cached is divided into 64 bytes long lines. For each line, a distributed directory is maintained that maintains the nodes whose caches contain the copy of the line. A doubly-linked list called the ''''' sharing list''''' is used to implement the directory. Each memory line is associated with state information and pointer to the head of the sharing list. Each cache line has a forward and backward pointer. Cache lines also contain information about the cache data and the position (head, mid, tail) in the sharing list. The SCI cache coherence protocol is based on write invalidation. A head line can become exclusive by invalidating the rest of the sharing list. | SCI defines a chain-directory based protocol. Memory that can be coherently cached is divided into 64 bytes long lines. For each line, a distributed directory is maintained that maintains the nodes whose caches contain the copy of the line. A doubly-linked list called the ''''' sharing list''''' is used to implement the directory. Each memory line is associated with state information and pointer to the head of the sharing list. Each cache line has a forward and backward pointer. Cache lines also contain information about the cache data and the position (head, mid, tail) in the sharing list. The SCI cache coherence protocol is based on write invalidation. A head line can become exclusive by invalidating the rest of the sharing list. | ||

The following figure shows the steps involved in acquiring exclusive access to a line, specifically a line previously in exclusive mode: | === Protocol States === | ||

As in any protocol, set of states are defined in SCI for memory and cache line. The transition between these states on any access to memory by the processor is defined using a set of actions. | |||

Different applications (minimal, typical, and full) have different set of attributes. For instance, a '''minimal application''' (trivial but correct) requires that the memory is available in only one cache line and so it should not enable read-sharing. But a'''typical application''' might enable read-sharing of a memory location and provides provisions for efficiency, such as DMA transfers, local caching of data, and error recovery. Thus, it might add an additional stable memory state (FRESH). Similarly, a '''full application''' adds support for pair-wise sharing, QOLB lock bit synchronization etc. | |||

As the name suggests, the '''memory states''' identify the state of the memory block as seen by the home directory. The home directory maintains memory tag along with the pointer to the head of the sharing list. The memory states in SCI are as listed below: | |||

* Home : has no sharing list | |||

* Fresh : its sharing list is the same as that in the memory | |||

* Gone : has a sharing list which may be different from the memory | |||

* Wash : this is the transitional state between Fresh and Gone and, hence, is semi-stable | |||

Each processor's cache-coherency controller maintains the '''cache-line states'''. As stated before, a 7-bit field is stored in each cache line in the sharing list, along with the forward pointer to the next sharing-list node and the back pointer to the previous sharing-list node. Seven bits enable up to 128 possible values, and SCI defines twenty-nine stable-states for use in the minimal, typical, and full sets. | |||

Cache states existing when a memory transaction is not in process are termed as the '''''stable states'''''. Their names are derived from a combination of the position of the node in the sharing list - '''ONLY, HEAD, MID, and TAIL'''- and the state of the data for that node -'''FRESH, CLEAN, DIRTY, VALID, STALE'''- etc. | |||

The table of states below are the states defined for the Typical set: | |||

{|border="1" cellspacing="0" cellpadding="2" | |||

!colspan="5"| Table 1: Cache-line States | |||

|---- | |||

!State | |||

!Number of processors caching the block | |||

!Writable | |||

!Readable | |||

|---- | |||

|Only_Dirty | |||

|1 | |||

|Yes | |||

| | |||

|---- | |||

|Only_Fresh | |||

|1 | |||

|Yes | |||

| | |||

|---- | |||

|Head_Fresh | |||

|>1 | |||

|Yes | |||

| | |||

|---- | |||

|Tail_Valid | |||

|>2 | |||

| | |||

|Yes | |||

|---- | |||

|Mid_Valid | |||

|>2 | |||

| | |||

|Yes | |||

|---- | |||

|} | |||

=== State Diagram === | |||

There are 17 variations of reads, writes, and locks differentiated based on the number of bytes requested and the coherency requirements: | |||

* '''Fetch R''' - memory block with read privileges. | |||

* '''Fetch RW''' - memory block with read/write privileges. | |||

* '''Data Modify''' - a processor which is either the HEAD or the ONLY processor caching the block writes to the cache line. | |||

Based on the states mentioned in the previous section, the state diagrams for the SCI protocol are as follows: | |||

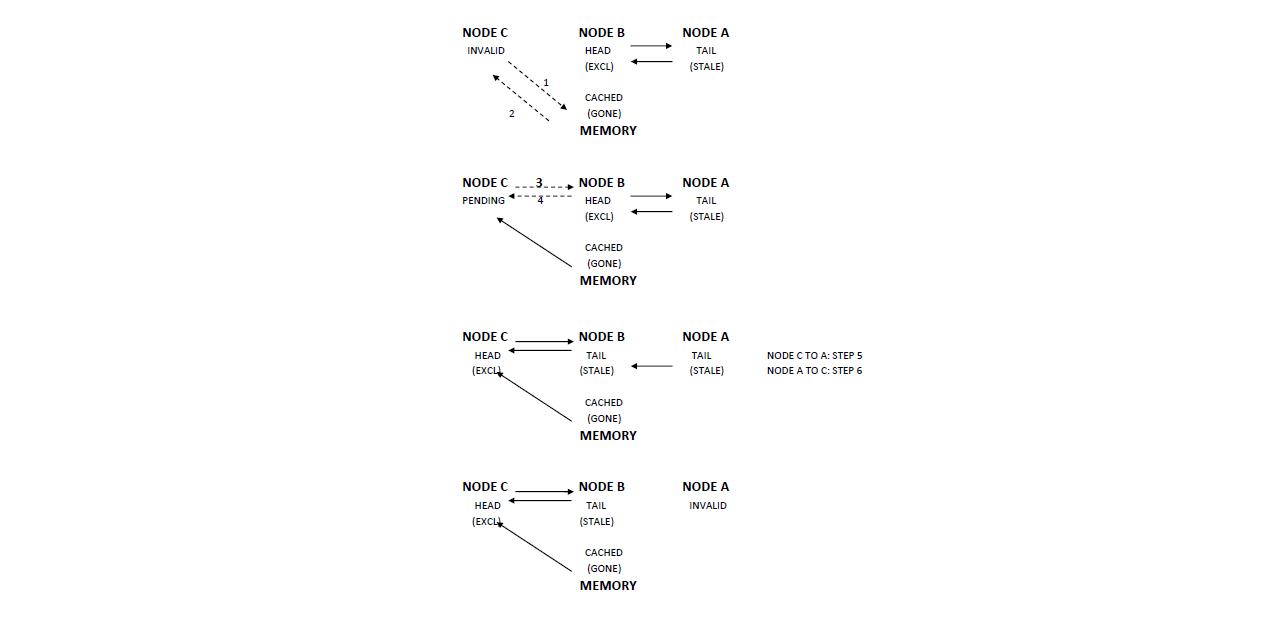

[[Image: MEM.jpg|center|frame|Memory State Diagram]] | |||

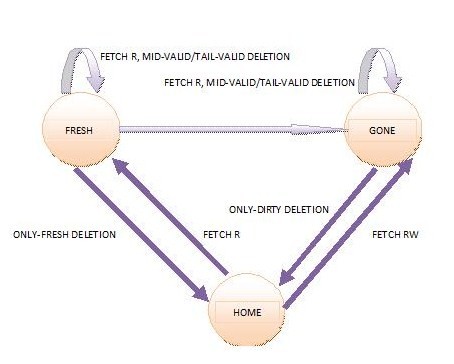

The cache-line state diagram is as shown below: | |||

[[Image: CACHE.jpg|center|frame|Cache Line State Diagram]] | |||

In the above diagram, the first value indicates the request to the directory by a node for memory access and the value in the parenthesis indicates the memory state at the time of the request. | |||

=== Examples of line sharing in SCI === | |||

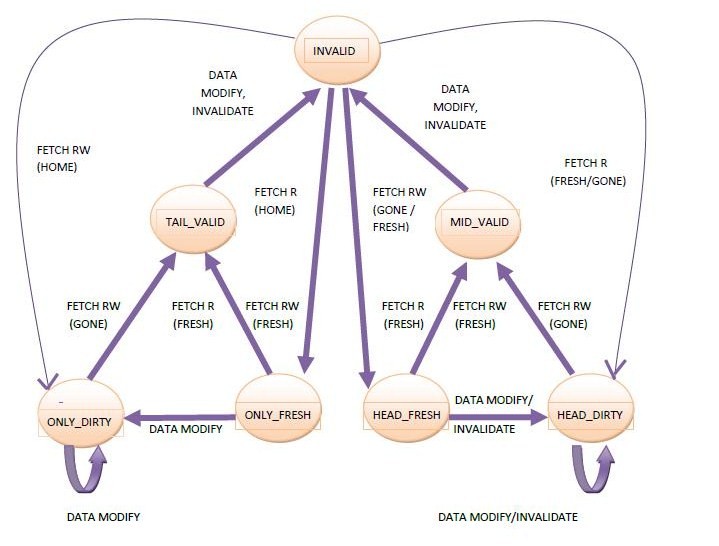

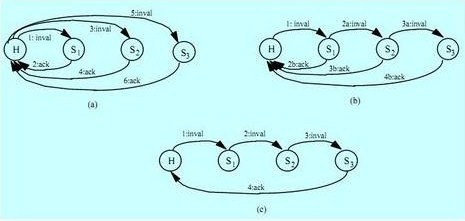

The following figure shows the steps involved in acquiring exclusive access to a line, specifically a line previously in exclusive mode:[2] | |||

[[Image:FIG1SW.jpg]] | [[Image:FIG1SW.jpg]] | ||

| Line 53: | Line 128: | ||

In a pairwise sharing situation, there is substantial benefits in the bandwidth and latency, due to the fewer number of messages exchanged: | In a pairwise sharing situation, there is substantial benefits in the bandwidth and latency, due to the fewer number of messages exchanged: | ||

[[Image: | [[Image:Figsw1.jpg|center|frame|Pairwise sharing scenario]] | ||

=== Forwarding messages === | |||

The following state diagram shows how messages are forwarded between nodes in a cache-based protocol like SCI: | |||

[[Image:figsw2.jpg|center|frame|Cache-based protocol]] | |||

=== How the protocol works === | |||

Initially the memory is uncached and the cached copies are in invalid state. A read request from the processor is directed towards the memory controller and the requested data is sent to the requester's cache, modifying its entry state from invalid to the head state. This results in changing the memory from uncached to the cached state. | |||

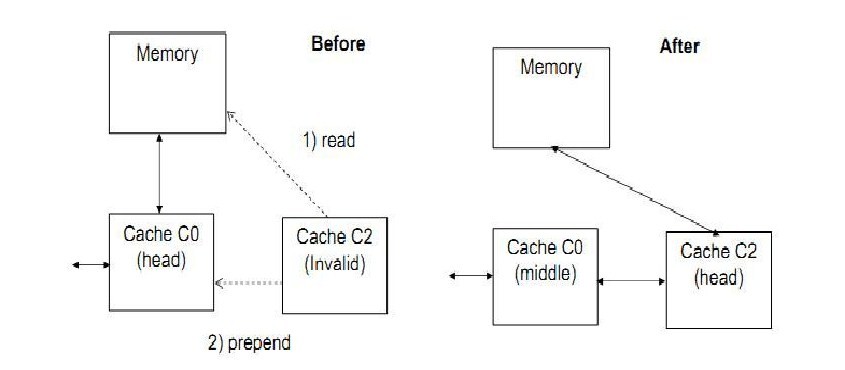

When a new requester directs its request to memory, the requester gets a pointer to the head from the memory. The requester sends a Prepend request (cache-to-cache read request) to the head cache. The head cache on receiving this request sets a backward pointer to the requester's cache. The requester gets the required data and its state is changed from entry state to head state. The following figure depicts this operation: | |||

[[Image:figsw3.jpg|center|frame]] | |||

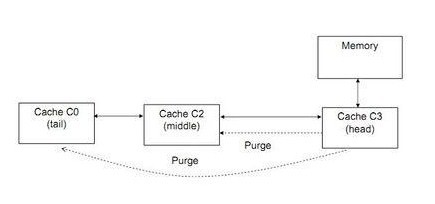

The head of the list can purge other entries in the list to obtain an exclusive RW entry. The figure shown below shows this operation: | |||

[[Image:figsw4.jpg|center|frame]] | |||

Similar to SCI, there is a distributed directory protocol as well. It is known as '''''Scalable Distributed Directory''''' (SDD) protocol. | |||

== Race conditions == | |||

[http://en.wikipedia.org/wiki/Race_condition Race condition] is an undesirable situation that arises when two or more processes or devices try to manipulate the same shared data variable and the output is dependent on the sequence of operations that are carried out on this variable. Race conditions are likely in multi-threaded or distributed programs. Thus, in a DSM which lacks serialization of actions and is prone to network errors and congestion issues, race conditions are inevitable. Thus, it is the responsibility of the cache coherence protocol to avoid such race conditions. | |||

Section 11.4.1 in Solihin discusses race conditions occurring due to out-of-sync directory states. This occurs due to the directory having an inconsistent view of the cache states since updating doesn't happen in a lock step. The cache coherence protocol handles such situations by splitting the EM state as mentioned on page 340 in Solihin book. Thus, with a few modifications in the protocol at the directory and every node's cache coherence controller was enough to handle the race conditions and the out-of-sync view of cache states at the directory. | |||

But handling races arising due to non-instantaneous processing of requests is not this simple. Section 11.4.2 discusses a few scenarios. The following section shows how SCI solves this problem. | |||

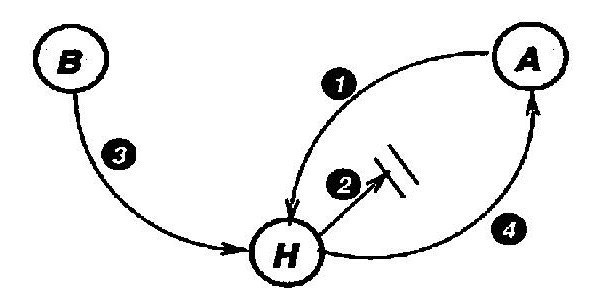

=== Handling Overlapped Processing of Requests === | |||

Figure 11.8 in Solihin depicts an example of race condition occurring due to early invalidation. The figure is redrawn here to explain the situation: | |||

[[Image: race1.jpg|center|frame]] | |||

The following is the sequence of actions taking place: | |||

* Step 1: ''A'' sends a read request to ''Home'' | |||

* Step 2: ''Home'' sends the reply (ReplyD) to ''A'' which is delayed. | |||

* Step 3: ''B'' sends a read exclusive request to ''Home'' | |||

* Step 4: ''Home'' sends an invalidation message to ''A'' thinking ''A'' has cached the block. This request reaches ''A'' sooner than ReplyD. | |||

Thus, ''A'' and directory see different sequence of requests and so, ''A'' doesn't know how to respond to the invalidation message. SCI protocol is designed to combat such situations and its possible to avoid race conditions in SCI. The following section describes how SCI prevents race conditions. | |||

=== How race conditions are prevented === | |||

SCI prevents race conditions based on the following three policies: | |||

* '''Atomic transactions''' | |||

A transaction is a set or sequence of sub-actions necessary to complete some requested action. For instance, querying memory for a data or invalidation message can be treated as transactions. Transactions are primarily a request-response pair, where one node acts as the initiating node by sending a request to another node and waiting for the response. The transaction is said to be complete when the response returns. | |||

[http://en.wikipedia.org/wiki/Atomicity_%28programming%29 Atomic transactions] proceed continuously between the request and the response and should be uninterrupted. So, for example, if node ''X'' is in the middle of a transaction with node ''Y'' and node ''Z'' tries to make a request to node ''X'', node ''X'' should respond to node ''Z'' that it is busy, telling node ''Z'' to try again. | |||

* '''Head Node''' | |||

SCI makes the ''Head'' node of a sharing list perform most of the coherence actions so that only one node is performing actions such as writes and invalidation of other sharers. The possibility of concurrent actions is thus decreased. When another node wishes to write, it can do so by becoming the Head Node of the sharing list for the cache line. | |||

* '''Access to Memory''' | |||

The ''Home'' node is modified in SCI to avoid race conditions. Here, ''Home'' node is only responsible for keeping track of who the current ''Head''. The memory block physically resides in the ''Home'' node. This requirement thus, serializes the order of access, as the ''Home'' node services a new request only after the previous request is finished, and the requests are processed in FIFO order. Also, the ''Head'' node of the sharing list is notified by the new ''Head'' when it is demoted. In this way, all nodes involved in a potential race are thus communicating with each other. This prevents the occurrence of the race condition itself. | |||

Consider the early invalidation example mentioned in the previous section. The atomic transactions property of the SCI protocol would prevent node Y from invalidating node X, as node X will be in the middle of a transaction, after requesting a block of data from memory and waiting for a response. Also, due of the memory access condition, when the Head node is in FRESH state and wants to write while another node wants to read, cannot hold good. This situation is avoided since both nodes must make their request to the Home node. The Head node must in turn request the ability to write, and the other node will have to request the data from the Home node. Either of the requests will reach the Home node first and the other request will thus, be deferred. | |||

=== SCI protocol solution to the Race Condition === | |||

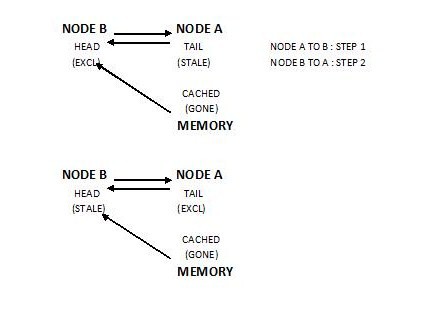

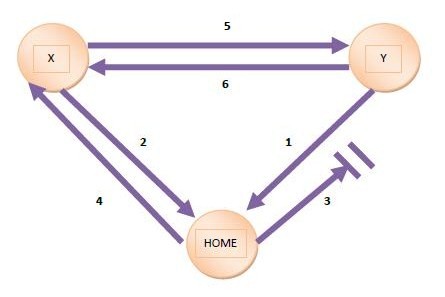

The following picture depicts how the early invalidation situation mentioned above is prevented in SCI protocol: | |||

[[Image: RACE2.jpg|center|frame]] | |||

In this diagram, both X and Y send a request to Home for access to the memory block (indicated by 1 and 2). Suppose Y's request reaches first. Then, Y goes into a busy state and waits for a response. Home responds to Y with the data which is delayed (indicated by 3). Also, Home responds to the request from X and informs it that Y is the current Head node (indicated by 4). X then sends a request to Y to demote itself (indicated by 5). Since Y still hasn't received the response from Home, and is in a transaction, it tells X that it is busy (indicated by 6). X will have to retry the request. | |||

In this way, SCI protocol avoids race conditions. Also, the use of ''PENDING'' state and ''purging'' avoid race conditions arising in case of simultaneous deletion and invalidation or concurrent deletions on a list etc. | |||

== References == | == References == | ||

[1] Ulrich Stern, and David L. Dill. Automatic | [1] Ulrich Stern, and David L. Dill. Automatic Verification of the SCI Cache Coherence Protocol. Department of Computer Science, Stanford University, Stanford. | ||

[2] Nagi Aboulenein, James Goodman, Stein Gjessing and Philip J.Woest. Hardware Support for Synchronization in Scalable Coherent Interface SCI. Technical Report #1117, November 1992. | |||

== See Also == | == See Also == | ||

Latest revision as of 01:33, 24 April 2011

This article discusses the design of scalable shared memory multiprocessors. As we know, bus-based multiprocessor possess the disadvantage of being non-scalable. So, a new system called Distributed Shared Memory was introduced wherein, accessing different parts of memory takes different times. Thus, a DSM is more scalable than a bus-based multiprocessors. But as the size of the DSM increases, so does the cost for the hardware support needed for it.

The wiki chapter introduces the approaches used to scale multiprocessors, the cache coherence protocols for a basic DSM and explains how the race conditions are handled.

Approaches to Large-Scale Multiprocessors

The two main factors limiting the scalability of a bus-based multiprocessor are:

- Physical scalability

- Protocol scalability

As mentioned in section 11.1 of Solihin book, a directory protocol using point-to-point interconnection is the best option. In a directory-based protocol, the directory - a structure - holds the information about which caches have a copy of the block. So it is required to contact the directory to get the list of caches to be requested for the copy of the block (which avoids broadcasting request). The directory-based protocol benefits by saving traffic in cases where data sharing occurs for read-only data.

Design considerations

The following design decisions are chosen to implement a DSM:

- A straight-forward physical address - to - memory mapping function is used.

- Memory is considered to be linear and interleaving is avoided.

- Page allocation policies like the Least Recently Used policy, Round Robin policy etc, can be used.

Also, to implement the directory structure, we have the following options:

- Cache-based or memory-based directory.

- Centralized or distributed directory.

A distributed directory approach is more scalable. In order to implement such a protocol, a directory has the following ways to keep track of which caches hold the copy of a block: [ Solihin, 11.2.1]

- Full-bit vector format

- Coarse-bit vector format

- Limited pointer format

- Sparse directory format

These formats can be either used exclusively or can be used in combinations. The last design issue is where is the directory information physically located, and the following choices exist for this:

- Allocating a part of main memory

- Allocating separate memory

- On the same chip as the processor

Either SRAM or DRAM can be used for storage of the directory information. Based on these design options, the next section discusses the DSM cache coherence protocols.

DSM Cache Coherence Protocol: Scalable Coherent Interface

There are variants of three major cache coherence protocols that have been implemented in commercial DSM machines, and other protocols have been proposed in the research community. The protocols differ in terms of directory organization (and therefore directory memory overhead), the number and types of messages exchanged between nodes, the direct protocol processing overhead, and inherent scalability features.

This section discusses the Scalable Coherent Interface (SCI) protocol for maintaining cache coherency. As we know, a distributed shared-memory multiprocessor provides shared memory at the software level, while the actual hardware implementation is a distributed message passing system. The IEEE Standard for Scalable Coherent Interface (SCI) includes a protocol for maintaining cache coherence among the distributed components in such a distributed shared-memory multiprocessor.[1]

SCI defines a chain-directory based protocol. Memory that can be coherently cached is divided into 64 bytes long lines. For each line, a distributed directory is maintained that maintains the nodes whose caches contain the copy of the line. A doubly-linked list called the sharing list is used to implement the directory. Each memory line is associated with state information and pointer to the head of the sharing list. Each cache line has a forward and backward pointer. Cache lines also contain information about the cache data and the position (head, mid, tail) in the sharing list. The SCI cache coherence protocol is based on write invalidation. A head line can become exclusive by invalidating the rest of the sharing list.

Protocol States

As in any protocol, set of states are defined in SCI for memory and cache line. The transition between these states on any access to memory by the processor is defined using a set of actions.

Different applications (minimal, typical, and full) have different set of attributes. For instance, a minimal application (trivial but correct) requires that the memory is available in only one cache line and so it should not enable read-sharing. But atypical application might enable read-sharing of a memory location and provides provisions for efficiency, such as DMA transfers, local caching of data, and error recovery. Thus, it might add an additional stable memory state (FRESH). Similarly, a full application adds support for pair-wise sharing, QOLB lock bit synchronization etc.

As the name suggests, the memory states identify the state of the memory block as seen by the home directory. The home directory maintains memory tag along with the pointer to the head of the sharing list. The memory states in SCI are as listed below:

- Home : has no sharing list

- Fresh : its sharing list is the same as that in the memory

- Gone : has a sharing list which may be different from the memory

- Wash : this is the transitional state between Fresh and Gone and, hence, is semi-stable

Each processor's cache-coherency controller maintains the cache-line states. As stated before, a 7-bit field is stored in each cache line in the sharing list, along with the forward pointer to the next sharing-list node and the back pointer to the previous sharing-list node. Seven bits enable up to 128 possible values, and SCI defines twenty-nine stable-states for use in the minimal, typical, and full sets.

Cache states existing when a memory transaction is not in process are termed as the stable states. Their names are derived from a combination of the position of the node in the sharing list - ONLY, HEAD, MID, and TAIL- and the state of the data for that node -FRESH, CLEAN, DIRTY, VALID, STALE- etc.

The table of states below are the states defined for the Typical set:

| Table 1: Cache-line States | ||||

|---|---|---|---|---|

| State | Number of processors caching the block | Writable | Readable | |

| Only_Dirty | 1 | Yes | ||

| Only_Fresh | 1 | Yes | ||

| Head_Fresh | >1 | Yes | ||

| Tail_Valid | >2 | Yes | ||

| Mid_Valid | >2 | Yes | ||

State Diagram

There are 17 variations of reads, writes, and locks differentiated based on the number of bytes requested and the coherency requirements:

- Fetch R - memory block with read privileges.

- Fetch RW - memory block with read/write privileges.

- Data Modify - a processor which is either the HEAD or the ONLY processor caching the block writes to the cache line.

Based on the states mentioned in the previous section, the state diagrams for the SCI protocol are as follows:

The cache-line state diagram is as shown below:

In the above diagram, the first value indicates the request to the directory by a node for memory access and the value in the parenthesis indicates the memory state at the time of the request.

Examples of line sharing in SCI

The following figure shows the steps involved in acquiring exclusive access to a line, specifically a line previously in exclusive mode:[2]

In a pairwise sharing situation, there is substantial benefits in the bandwidth and latency, due to the fewer number of messages exchanged:

Forwarding messages

The following state diagram shows how messages are forwarded between nodes in a cache-based protocol like SCI:

How the protocol works

Initially the memory is uncached and the cached copies are in invalid state. A read request from the processor is directed towards the memory controller and the requested data is sent to the requester's cache, modifying its entry state from invalid to the head state. This results in changing the memory from uncached to the cached state.

When a new requester directs its request to memory, the requester gets a pointer to the head from the memory. The requester sends a Prepend request (cache-to-cache read request) to the head cache. The head cache on receiving this request sets a backward pointer to the requester's cache. The requester gets the required data and its state is changed from entry state to head state. The following figure depicts this operation:

The head of the list can purge other entries in the list to obtain an exclusive RW entry. The figure shown below shows this operation:

Similar to SCI, there is a distributed directory protocol as well. It is known as Scalable Distributed Directory (SDD) protocol.

Race conditions

Race condition is an undesirable situation that arises when two or more processes or devices try to manipulate the same shared data variable and the output is dependent on the sequence of operations that are carried out on this variable. Race conditions are likely in multi-threaded or distributed programs. Thus, in a DSM which lacks serialization of actions and is prone to network errors and congestion issues, race conditions are inevitable. Thus, it is the responsibility of the cache coherence protocol to avoid such race conditions.

Section 11.4.1 in Solihin discusses race conditions occurring due to out-of-sync directory states. This occurs due to the directory having an inconsistent view of the cache states since updating doesn't happen in a lock step. The cache coherence protocol handles such situations by splitting the EM state as mentioned on page 340 in Solihin book. Thus, with a few modifications in the protocol at the directory and every node's cache coherence controller was enough to handle the race conditions and the out-of-sync view of cache states at the directory.

But handling races arising due to non-instantaneous processing of requests is not this simple. Section 11.4.2 discusses a few scenarios. The following section shows how SCI solves this problem.

Handling Overlapped Processing of Requests

Figure 11.8 in Solihin depicts an example of race condition occurring due to early invalidation. The figure is redrawn here to explain the situation:

The following is the sequence of actions taking place:

- Step 1: A sends a read request to Home

- Step 2: Home sends the reply (ReplyD) to A which is delayed.

- Step 3: B sends a read exclusive request to Home

- Step 4: Home sends an invalidation message to A thinking A has cached the block. This request reaches A sooner than ReplyD.

Thus, A and directory see different sequence of requests and so, A doesn't know how to respond to the invalidation message. SCI protocol is designed to combat such situations and its possible to avoid race conditions in SCI. The following section describes how SCI prevents race conditions.

How race conditions are prevented

SCI prevents race conditions based on the following three policies:

- Atomic transactions

A transaction is a set or sequence of sub-actions necessary to complete some requested action. For instance, querying memory for a data or invalidation message can be treated as transactions. Transactions are primarily a request-response pair, where one node acts as the initiating node by sending a request to another node and waiting for the response. The transaction is said to be complete when the response returns.

Atomic transactions proceed continuously between the request and the response and should be uninterrupted. So, for example, if node X is in the middle of a transaction with node Y and node Z tries to make a request to node X, node X should respond to node Z that it is busy, telling node Z to try again.

- Head Node

SCI makes the Head node of a sharing list perform most of the coherence actions so that only one node is performing actions such as writes and invalidation of other sharers. The possibility of concurrent actions is thus decreased. When another node wishes to write, it can do so by becoming the Head Node of the sharing list for the cache line.

- Access to Memory

The Home node is modified in SCI to avoid race conditions. Here, Home node is only responsible for keeping track of who the current Head. The memory block physically resides in the Home node. This requirement thus, serializes the order of access, as the Home node services a new request only after the previous request is finished, and the requests are processed in FIFO order. Also, the Head node of the sharing list is notified by the new Head when it is demoted. In this way, all nodes involved in a potential race are thus communicating with each other. This prevents the occurrence of the race condition itself.

Consider the early invalidation example mentioned in the previous section. The atomic transactions property of the SCI protocol would prevent node Y from invalidating node X, as node X will be in the middle of a transaction, after requesting a block of data from memory and waiting for a response. Also, due of the memory access condition, when the Head node is in FRESH state and wants to write while another node wants to read, cannot hold good. This situation is avoided since both nodes must make their request to the Home node. The Head node must in turn request the ability to write, and the other node will have to request the data from the Home node. Either of the requests will reach the Home node first and the other request will thus, be deferred.

SCI protocol solution to the Race Condition

The following picture depicts how the early invalidation situation mentioned above is prevented in SCI protocol:

In this diagram, both X and Y send a request to Home for access to the memory block (indicated by 1 and 2). Suppose Y's request reaches first. Then, Y goes into a busy state and waits for a response. Home responds to Y with the data which is delayed (indicated by 3). Also, Home responds to the request from X and informs it that Y is the current Head node (indicated by 4). X then sends a request to Y to demote itself (indicated by 5). Since Y still hasn't received the response from Home, and is in a transaction, it tells X that it is busy (indicated by 6). X will have to retry the request.

In this way, SCI protocol avoids race conditions. Also, the use of PENDING state and purging avoid race conditions arising in case of simultaneous deletion and invalidation or concurrent deletions on a list etc.

References

[1] Ulrich Stern, and David L. Dill. Automatic Verification of the SCI Cache Coherence Protocol. Department of Computer Science, Stanford University, Stanford.

[2] Nagi Aboulenein, James Goodman, Stein Gjessing and Philip J.Woest. Hardware Support for Synchronization in Scalable Coherent Interface SCI. Technical Report #1117, November 1992.

See Also

[1] http://ntrg.cs.tcd.ie/undergrad/4ba2.05/group12/index.html

[2] http://www.csl.cornell.edu/~heinrich/dissertation/ChapterTwo.pdf

[3] Simoni, Richard. Implementing a Directory-Based Cache Consistency Protocol. Technical Report: CSL-TR-90-423 March 1990.

[4] D. B. Gustavson. The Scalable Coherent Interface and related standards projects. IEEE Micro, 12(1):10{22, 1992.

[5] IEEE Std 1596-1992, IEEE Standard for Scalable Coherent Interface (SCI).

[6] D. V. James, A. T. Laundrie, S. Gjessing, and G. S. Sohi. Distributed-directory scheme: Scalable Coherent Interface. Computer, 23(6):74{7, 1990.