CSC/ECE 506 Spring 2011/ch11 BB EP: Difference between revisions

| (64 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

__TOC__ | __TOC__ | ||

= | |||

(Unless otherwise noted, the contents of this article is derived from the IEEE standardization document titled "IEEE Standard for Scalable Coherent Interface (SCI)"<sup><span class = "plainlinks">[[#References|[1]]]</span></sup> .) | |||

== Background == | == Background == | ||

The Scalable Coherent Interface (SCI) cache coherence protocol defines a set of states for memory, a set of states for cache lines, and a set of actions that transition between these states when processors access memory. | |||

Three sets of these attributes are defined for minimal, typical, and full applications: | |||

* '''Minimal''' - for ‘trivial but correct’ applications that require the presence of the memory in only one cache line. It does not enable read-sharing, and is appropriate for small multi-processors or applications that don’t require significant sharing. | |||

* '''Typical''' - enables read-sharing of a memory location and provisions for efficiency, such as DMA transfers, local caching of data, and error recovery. This set adds an additional stable memory state (FRESH) and multiple cache states. This set will be the focus of this article going forward. | |||

* '''Full''' - adds support for pair-wise sharing, QOLB lock bit synchronization, and cleansing and washing of cache lines. | |||

== State Diagrams == | == State Diagrams == | ||

=== Memory === | === Memory States === | ||

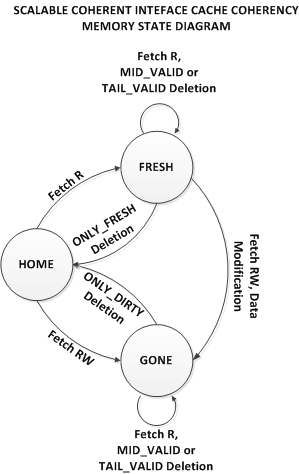

The memory states define the state of the memory block from the perspective of the home directory. This 2-bit field is maintained in the memory tag by the home directory along with the pointer to the head of the sharing list (forwId). This simple state model includes three stable states and one semi-stable state. | |||

{|border="1" cellspacing="0" cellpadding="2" | |||

!colspan="5"| Table 1: Memory States | |||

|---- | |||

!State | |||

!Description | |||

!Minimal | |||

!Typical | |||

!Full | |||

|---- | |||

|HOME | |||

|no sharing list | |||

|Y | |||

|Y | |||

|Y | |||

|---- | |||

|FRESH | |||

|sharing-list copy is the same as memory | |||

| | |||

|Y | |||

|Y | |||

|---- | |||

|GONE | |||

|sharing-list copy may be different from memory | |||

|Y | |||

|Y | |||

|Y | |||

|---- | |||

|WASH* | |||

|transitional state (GONE to FRESH) | |||

| | |||

| | |||

|Y | |||

|---- | |||

|} | |||

These states are used by the coherency model to determine the state of the cache line when memory transactions are performed by individual processors. Likewise, these memory states are impacted when these memory transactions occur. | |||

Below is a state diagram that depicts the transition between these memory states: | |||

[[Image:memory_state.png|center]] | |||

=== Cache States === | === Cache States === | ||

The cache-line states are maintained by each processors cache-coherency controller. This 7-bit field is stored in each cache line in the sharing list, along with the pointer to the next sharing-list node (forwId) and the previous sharing-list node (backID). Seven bits enable up to 128 possible values, and SCI defines twenty-nine stable-states for use in the minimal, typical, and full sets. | |||

Stable states are those cache states that exist when a memory transaction is not in process. Their names are derived from a combination of | |||

the position of the node in the sharing list, such as ONLY, HEAD, MID, and TAIL, and the state of the data for that node, such as FRESH, CLEAN, DIRTY, VALID, STALE, etc. | |||

====States of the Typical Set==== | |||

Following are the states defined for the Typical set. (Note that other states may be supported to provide certain interoperability with nodes implementing the Full set, but are not used when all nodes support the Typical set.) | |||

* '''ONLY_DIRTY''' - only one processor has the memory block in its cache. This block is writable, and the processor has written (or intends) to write to it. This state is set when the processor requests the block with read/write privileges, and no other processor currently caches the block. | |||

* '''ONLY_FRESH''' - only one processor had the memory block in its cache. This block is writeable, but processor has not written to it. This state is set when the processor requests the block with read privileges, and no other processor currently caches the block. | |||

* '''HEAD_DIRTY''' - more than one processor has the memory block in its cache. This block is writable, and the processor has written (or intends) to write to it. This state is set when the processor requests the block with read/write privileges, and another processors already caches the block. | |||

* '''HEAD_FRESH''' - more than one processor had the memory block in its cache. This block is writeable, but processor has not written to it. This state is set when the processor requests the block with read privileges, and another processors already caches the block. | |||

* '''MID_VALID''' - more than two processors have the memory in its cache, and it is readable. The processor cache with this state is neither the Head or the Tail of the of the sharing list. | |||

* '''TAIL_VALID''' - more than two processors have the memory in its cache, and it is readable. The processor cache with this state is the Tail of the of the sharing list. | |||

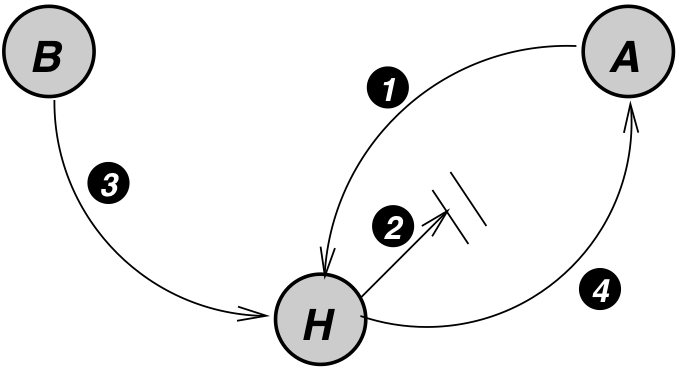

Below is a state diagram that depicts these states: | |||

[[Image:cache_states.png|center]] | [[Image:cache_states.png|center]] | ||

The request sub-actions of memory transactions that expect a response from the directory include 17 variations of reads, writes, and locks based on the number of bytes requested and the coherency requirements. For convenience, the notation on the edges of the above graph is derived from the terms used in the SCI specification to describe the memory transactions. | |||

The first value indicates the request to the directory by a node for memory access: | |||

* '''Fetch R''' - indicates a request for a memory block with read privileges. | |||

* '''Fetch RW''' - indicates a request for a memory block with read/write privileges. | |||

* '''Data Modify''' - indicates that a processor (the HEAD or the ONLY processor caching the block) writes to the cache line. | |||

The value in the parenthesis indicates the memory state at the time of the request. | |||

== Race Conditions == | == Race Conditions == | ||

| Line 30: | Line 113: | ||

Race conditions are almost non-existent in the SCI protocol, due primarily to the protocol's design. A brief discussion of how this is accomplished follows, followed by a discussion showing what happens if this condition arises in the SCI protocol. | Race conditions are almost non-existent in the SCI protocol, due primarily to the protocol's design. A brief discussion of how this is accomplished follows, followed by a discussion showing what happens if this condition arises in the SCI protocol. | ||

==== Atomic Transactions ==== | ==== Atomic Transactions ==== | ||

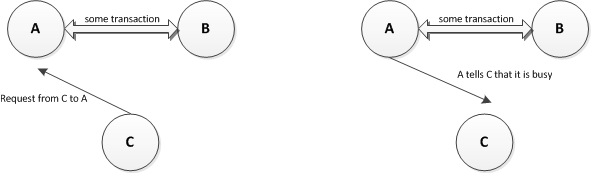

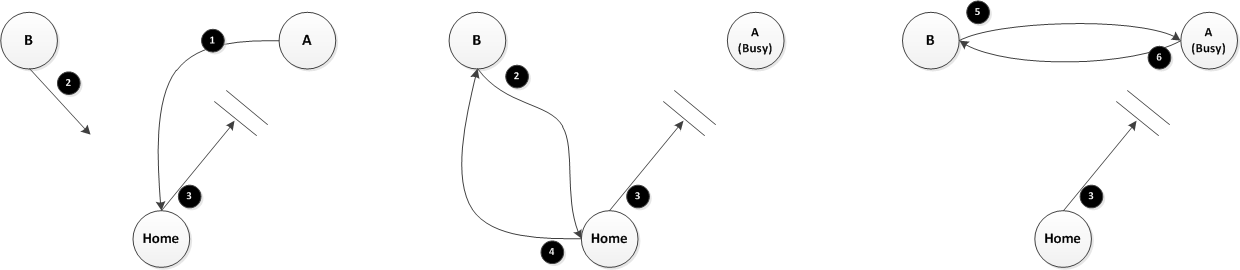

SCI's primary method for preventing race conditions is having atomic transactions. A transaction is defined as a set of sub-actions necessary to complete some requested action, such as | SCI's primary method for preventing race conditions is having atomic transactions. A transaction is defined as a set of sub-actions necessary to complete some requested action, such as querying memory for a data or invalidating a node's cache. This usually takes the form a a request-response pair, where the initiating node sends a request to another node and waits for the response. The transaction is not complete until the response returns. Suppose, for example, that node ''A'' is in the middle of a transaction with node ''B''. Node ''C'' then tries to make a request of node ''A''. Node ''A'' will respond to node ''C'' that it is busy, telling node ''C'' to try again, as shown in the following diagram. | ||

[[Image:AtomicBusy.png|center]] | [[Image:AtomicBusy.png|center]] | ||

Thus, in the Early Invalidation race, node ''A'' would be in the middle of a transaction, which would prevent node ''B'' from invalidating it. | Thus, in the Early Invalidation race, node ''A'' would be in the middle of a transaction, having requested a block of data from memory and not having received a response, which would prevent node ''B'' from invalidating it. Note, however, that nodes will not stay in the busy state indefinitely. Doing so would lead to potential deadlocks, so all transactions have time outs that will eventually cause the transaction to fail, thus moving the node out of the busy state, making it able to respond to requests again. | ||

==== Head Node ==== | ==== Head Node ==== | ||

Another method for preventing race conditions is to have the Head | Another method for preventing race conditions is to have the ''Head'' node of a sharing list perform many of the coherence actions. As a result, only one node is performing actions such as writes and invalidations of other sharers. Since only one node is performing these actions, the possibility of concurrent actions is decreased. If another node wants to write, for instance, it must become the Head Node of the sharing list for the cache line to which it wants to write, displacing the current ''Head'' node as the node that can write. All state changes in the sharing list occur at the behest of the ''Head'' node, with the exception of nodes deleting themselves from the list. | ||

When simply sharing a read-only copy of a node, as in when the memory is a ''FRESH'' state, the Head | When simply sharing a read-only copy of a node, as in when the memory is a ''FRESH'' state, the ''Head'' node is somewhat irrelevant. All the sharing nodes have their own cached value of the cache line. If any node wants to write, including the ''Head'' node, it must perform an additional action in order to do so. Likewise, when a sharing list is sharing a line of data in the dirty, or ''GONE'', memory state, as long as the ''Head'' node has not written to the line, then all the sharing nodes stay in the list with their cached line. However, at the point the ''Head'' node wants to write, it can write its data immediately, but it then must invalidate all other shared copies, via the forward pointers in the sharing list. | ||

This, however, does not protect against a race condition where the memory is ''FRESH'', the Head | This, however, does not protect against a race condition where the memory is ''FRESH'', the ''Head'' node wants to write, and another node also wants to join the list. The Memory Access mechanism prevents this condition. | ||

==== Memory Access ==== | ==== Memory Access ==== | ||

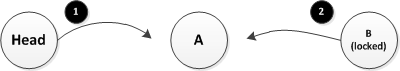

The final way that the SCI protocol minimizes race conditions is by changing the directory structure. In Solihin 11.4, there is a ''Home'' node which keeps track of the cache state and has a certain amount of knowledge about the system as a result. In the SCI protocol, there is still a ''Home'' node of sorts, but this node is only responsible for keeping track of who the current ''Head''. Such a ''Home'' node is usually the node where the memory block physically resides. When a node wants to access a block of memory, it sends a request to the ''Home'' node of that memory block, assuming it isn't already in the sharing list for that block. In the event that the data is already being used, the ''Home'' node will include in its response the address of the ''Head'' node of the sharing list. This requirement necessarily serializes the order of access, as one access request is not serviced at the ''Home'' node until the previous request is finished, and the requests are processed in FIFO order. As well, whichever node is the ''Head'' of the sharing list will be notified by the new ''Head'' when it is demoted. All parties involved in a potential race are thus talking to each other, preventing the race from occurring in the first place. | |||

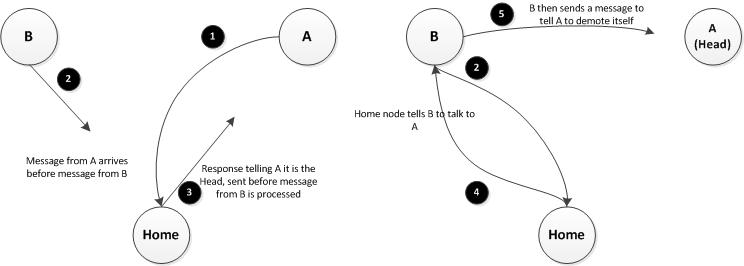

Because of this requirement, the projected race condition from the previous section, where the ''Head'' node is in a ''FRESH'' state and wants to write while another node also wants to read, cannot occur. This is because both nodes must make their request to the ''Home'' node; the ''Head'' node must request the ability to write, and the other node must request the data from the ''Home'' node. One of these requests will make it to the ''Home'' node first, resulting in the second request being deferred to the first node to reach to ''Home'' node. Such a scenario is pictured below. | |||

[[Image:MemoryAccess.png|center]] | |||

==== Solihin 11.4 Resolution ==== | |||

The three previous sections discussed individual parts of how the SCI protocol reduces race conditions. Putting all three of these parts together yields the following scenario which resolves the Early Invalidation race as described in the text. | |||

[[Image:SolihinSCI.png|center]] | |||

# ''A'' sends a request to ''Home'' for access to the memory block. It then goes into a busy state while it waits for a response. | |||

# ''B'' also sends a request to ''Home'' for access to the same memory block. ''A'''s request is received first | |||

# ''Home'' responds to ''A'' with the data, but this response gets caught in network traffic and delayed. This response is sent before ''Home'' processes the request from ''B''. | |||

# ''Home'' responds to the request from ''B'', telling it that ''A'' is the current ''Head'' node. | |||

# ''B'' then sends a request to ''A'' to tell it to demote itself. | |||

# ''A'' still hasn't received the response from ''Home'', so it is still in a transaction, and it tells ''B'' that it is busy. ''B'' will have to retry the request. | |||

=== Possible Race Conditions === | It should be noted that ''B'' will be in the ''PENDING''<sup><span class = "plainlinks">[[#Definitions_and_Terms|[definitions]]]</span></sup> state, so any requests to demote it from the ''Head'' position will be delayed.. This could lead to a chaining of nodes in the ''PENDING'' state, but as soon as node ''A'' receives its response from ''Home'' or times out, the requests in the chain will be successively accepted and processed. | ||

=== Other Possible Race Conditions === | |||

The design of the SCI protocol prevents many race conditions from occurring, as has already been shown. Here are two other conditions that may arise, with a discussion of how the protocol resolves the issue. | |||

==== Concurrent List Deletions ==== | ==== Concurrent List Deletions ==== | ||

Even though the ''Head'' node performs most of the coherence actions for a shard list, the individual nodes are able to invalidate themselves, such as when they evict the block from their cache. This is referred to in the SCI standard as a "deletion" from the sharing list. Deletion is accomplished by having the invalidating node "lock" itself and then inform its forward and back nodes that they should now point to each other. This "locking" is essentially another ''PENDING'' state. A problem could arise, then, when two neighboring nodes try to invalidate themselves at the same time, as they would both be locked and not respond to each other's message. In this case, though, the protocol specifies that the node that is closest to the tail takes priority (IEEE pg156)<sup><span id="1body">[[#1foot|[1]]]</span></sup>. So, the deadlock and/or race condition is averted by ordering the deletion from the tail towards the head. | |||

==== Simultaneous Deletion and Invalidation ==== | ==== Simultaneous Deletion and Invalidation ==== | ||

Another potential race condition occurs when the ''Head'' node tries to purge the list of sharers at the same time as a node in the list tries to remove itself from the list. Suppose that the ''Head'' node is trying to purge node ''A'', which has node ''B'' as its next node in the list, while at the same time, node ''B'' is trying to remove itself from the list. Node ''B'' needs to tell node ''A'' to update its next pointer, but the ''Head'' node will need to access node ''A'''s next pointer to know what the next node in the list is. An illustration is shown below. The ''1'' and the ''2'' represent the messages being sent from the respective node to node ''A'' that have not yet arrived. | |||

= | [[Image:PurgeDelete.png|center]] | ||

What will happen is that one of the messages will reach node ''A'' first. While ''A'' is responding to this message, it will tell other nodes that it is busy, in a similar manner to the notion of the atomic transactions. If node ''B'''s message arrives first, causing the message from the ''Head'' node to be rejected. Node ''A'' will have its forward pointer changed at this point, so when the ''Head'' node resends its request, all will work as expected. | |||

The second option is more difficult. If the ''Head'' node's message arrives first, node ''A'' will be invalidated, so that when the message from node ''B'' is sent again, node ''A'' will simply inform node ''B'' that it is no longer part of the list. As well, the ''Head'' node will now have its forward pointer pointing at node ''B''. Node ''A'' has thus been successfully purged, but we arrive at an impasse, as now the ''Head'' node will try to invalidate the locked node ''B'', while node ''B'' will try to tell the ''Head'' node to change its forward pointer, with both nodes being inside a transaction. This situation is illustrated by the following figure. | |||

[[Image:PurgeDelete2.png|center]] | |||

The protocol does not specify how to handle this situation, but it would make sense for the protocol to allow one of the messages through, presumably the ''Head'' node's message. Node ''B'' could simply respond in the affirmative, telling the ''Head'' node what the following node is, but this would only work if node ''B'' notifies the next node of the deletion after it notifies node ''A''. Otherwise, the node following ''B'' would have its back pointer pointing to the wrong node. The protocol standard seems to suggest that this is the case (IEEE, pg162)<sup><span id="1body">[[#1foot|[1]]]</span></sup>. | |||

== Definitions and Terms == | == Definitions and Terms == | ||

* ''Head Node'' - The node at the beginning of the sharing list | |||

* ''Home Node'' - The node responsible for keeping track of the ''Head'' node for a sharing list for a memory block, usually the node at which the memory block resides. | |||

* ''PENDING state'' - The intermediate state for a node while it is attempting to become the ''Head'' node in the sharing list. This includes the transaction involved in querying the ''Home'' node and the transaction involved in demoting the current ''Head'' node. | |||

* ''SCI'' - Scalable Coherent Interface | |||

== References == | == References == | ||

<span id="1foot">[[#1body|1.]]</span> "IEEE Standard for Scalable Coherent Interface (SCI).," IEEE Std 1596-1992 , vol., no., pp.i, 1993. doi: 10.1109/IEEESTD.1993.120366 | |||

URL: http://ieeexplore.ieee.org.proxied.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=347683&isnumber=8049 <br> | |||

<span id="2foot">[[#2body|2.]]</span> [http://www.scizzl.com/HowSCIcohWorks.html How SCI Coherence Works] <br> | |||

Latest revision as of 20:40, 23 April 2011

(Unless otherwise noted, the contents of this article is derived from the IEEE standardization document titled "IEEE Standard for Scalable Coherent Interface (SCI)"[1] .)

Background

The Scalable Coherent Interface (SCI) cache coherence protocol defines a set of states for memory, a set of states for cache lines, and a set of actions that transition between these states when processors access memory.

Three sets of these attributes are defined for minimal, typical, and full applications:

- Minimal - for ‘trivial but correct’ applications that require the presence of the memory in only one cache line. It does not enable read-sharing, and is appropriate for small multi-processors or applications that don’t require significant sharing.

- Typical - enables read-sharing of a memory location and provisions for efficiency, such as DMA transfers, local caching of data, and error recovery. This set adds an additional stable memory state (FRESH) and multiple cache states. This set will be the focus of this article going forward.

- Full - adds support for pair-wise sharing, QOLB lock bit synchronization, and cleansing and washing of cache lines.

State Diagrams

Memory States

The memory states define the state of the memory block from the perspective of the home directory. This 2-bit field is maintained in the memory tag by the home directory along with the pointer to the head of the sharing list (forwId). This simple state model includes three stable states and one semi-stable state.

| Table 1: Memory States | ||||

|---|---|---|---|---|

| State | Description | Minimal | Typical | Full |

| HOME | no sharing list | Y | Y | Y |

| FRESH | sharing-list copy is the same as memory | Y | Y | |

| GONE | sharing-list copy may be different from memory | Y | Y | Y |

| WASH* | transitional state (GONE to FRESH) | Y | ||

These states are used by the coherency model to determine the state of the cache line when memory transactions are performed by individual processors. Likewise, these memory states are impacted when these memory transactions occur.

Below is a state diagram that depicts the transition between these memory states:

Cache States

The cache-line states are maintained by each processors cache-coherency controller. This 7-bit field is stored in each cache line in the sharing list, along with the pointer to the next sharing-list node (forwId) and the previous sharing-list node (backID). Seven bits enable up to 128 possible values, and SCI defines twenty-nine stable-states for use in the minimal, typical, and full sets.

Stable states are those cache states that exist when a memory transaction is not in process. Their names are derived from a combination of the position of the node in the sharing list, such as ONLY, HEAD, MID, and TAIL, and the state of the data for that node, such as FRESH, CLEAN, DIRTY, VALID, STALE, etc.

States of the Typical Set

Following are the states defined for the Typical set. (Note that other states may be supported to provide certain interoperability with nodes implementing the Full set, but are not used when all nodes support the Typical set.)

- ONLY_DIRTY - only one processor has the memory block in its cache. This block is writable, and the processor has written (or intends) to write to it. This state is set when the processor requests the block with read/write privileges, and no other processor currently caches the block.

- ONLY_FRESH - only one processor had the memory block in its cache. This block is writeable, but processor has not written to it. This state is set when the processor requests the block with read privileges, and no other processor currently caches the block.

- HEAD_DIRTY - more than one processor has the memory block in its cache. This block is writable, and the processor has written (or intends) to write to it. This state is set when the processor requests the block with read/write privileges, and another processors already caches the block.

- HEAD_FRESH - more than one processor had the memory block in its cache. This block is writeable, but processor has not written to it. This state is set when the processor requests the block with read privileges, and another processors already caches the block.

- MID_VALID - more than two processors have the memory in its cache, and it is readable. The processor cache with this state is neither the Head or the Tail of the of the sharing list.

- TAIL_VALID - more than two processors have the memory in its cache, and it is readable. The processor cache with this state is the Tail of the of the sharing list.

Below is a state diagram that depicts these states:

The request sub-actions of memory transactions that expect a response from the directory include 17 variations of reads, writes, and locks based on the number of bytes requested and the coherency requirements. For convenience, the notation on the edges of the above graph is derived from the terms used in the SCI specification to describe the memory transactions.

The first value indicates the request to the directory by a node for memory access:

- Fetch R - indicates a request for a memory block with read privileges.

- Fetch RW - indicates a request for a memory block with read/write privileges.

- Data Modify - indicates that a processor (the HEAD or the ONLY processor caching the block) writes to the cache line.

The value in the parenthesis indicates the memory state at the time of the request.

Race Conditions

In a distributed shared memory system with caching, the emergence of race conditions is extremely likely. This is mainly due to the lack of a bus for serialization of actions. It is further compounded by the problem of network errors and congestion.

The early invalidation case from Section 11.4 in Solihin is an excellent example of a race condition that can arise in a distributed system. Recall the diagram from the text, and the cache coherence actions, as shown below.

The circled actions are as follows:

- A sends a read request to Home.

- Home replies with data (but the message gets delayed).

- B sends a write request to Home.

- Home sends invalidation to A, and it arrives before the ReplyD

The SCI protocol has a way to handle this race condition, as well as many others, and the following sections will discuss how the SCI protocol design can prevent this race condition.

Prevention in the SCI Protocol

Race conditions are almost non-existent in the SCI protocol, due primarily to the protocol's design. A brief discussion of how this is accomplished follows, followed by a discussion showing what happens if this condition arises in the SCI protocol.

Atomic Transactions

SCI's primary method for preventing race conditions is having atomic transactions. A transaction is defined as a set of sub-actions necessary to complete some requested action, such as querying memory for a data or invalidating a node's cache. This usually takes the form a a request-response pair, where the initiating node sends a request to another node and waits for the response. The transaction is not complete until the response returns. Suppose, for example, that node A is in the middle of a transaction with node B. Node C then tries to make a request of node A. Node A will respond to node C that it is busy, telling node C to try again, as shown in the following diagram.

Thus, in the Early Invalidation race, node A would be in the middle of a transaction, having requested a block of data from memory and not having received a response, which would prevent node B from invalidating it. Note, however, that nodes will not stay in the busy state indefinitely. Doing so would lead to potential deadlocks, so all transactions have time outs that will eventually cause the transaction to fail, thus moving the node out of the busy state, making it able to respond to requests again.

Head Node

Another method for preventing race conditions is to have the Head node of a sharing list perform many of the coherence actions. As a result, only one node is performing actions such as writes and invalidations of other sharers. Since only one node is performing these actions, the possibility of concurrent actions is decreased. If another node wants to write, for instance, it must become the Head Node of the sharing list for the cache line to which it wants to write, displacing the current Head node as the node that can write. All state changes in the sharing list occur at the behest of the Head node, with the exception of nodes deleting themselves from the list.

When simply sharing a read-only copy of a node, as in when the memory is a FRESH state, the Head node is somewhat irrelevant. All the sharing nodes have their own cached value of the cache line. If any node wants to write, including the Head node, it must perform an additional action in order to do so. Likewise, when a sharing list is sharing a line of data in the dirty, or GONE, memory state, as long as the Head node has not written to the line, then all the sharing nodes stay in the list with their cached line. However, at the point the Head node wants to write, it can write its data immediately, but it then must invalidate all other shared copies, via the forward pointers in the sharing list.

This, however, does not protect against a race condition where the memory is FRESH, the Head node wants to write, and another node also wants to join the list. The Memory Access mechanism prevents this condition.

Memory Access

The final way that the SCI protocol minimizes race conditions is by changing the directory structure. In Solihin 11.4, there is a Home node which keeps track of the cache state and has a certain amount of knowledge about the system as a result. In the SCI protocol, there is still a Home node of sorts, but this node is only responsible for keeping track of who the current Head. Such a Home node is usually the node where the memory block physically resides. When a node wants to access a block of memory, it sends a request to the Home node of that memory block, assuming it isn't already in the sharing list for that block. In the event that the data is already being used, the Home node will include in its response the address of the Head node of the sharing list. This requirement necessarily serializes the order of access, as one access request is not serviced at the Home node until the previous request is finished, and the requests are processed in FIFO order. As well, whichever node is the Head of the sharing list will be notified by the new Head when it is demoted. All parties involved in a potential race are thus talking to each other, preventing the race from occurring in the first place.

Because of this requirement, the projected race condition from the previous section, where the Head node is in a FRESH state and wants to write while another node also wants to read, cannot occur. This is because both nodes must make their request to the Home node; the Head node must request the ability to write, and the other node must request the data from the Home node. One of these requests will make it to the Home node first, resulting in the second request being deferred to the first node to reach to Home node. Such a scenario is pictured below.

Solihin 11.4 Resolution

The three previous sections discussed individual parts of how the SCI protocol reduces race conditions. Putting all three of these parts together yields the following scenario which resolves the Early Invalidation race as described in the text.

- A sends a request to Home for access to the memory block. It then goes into a busy state while it waits for a response.

- B also sends a request to Home for access to the same memory block. A's request is received first

- Home responds to A with the data, but this response gets caught in network traffic and delayed. This response is sent before Home processes the request from B.

- Home responds to the request from B, telling it that A is the current Head node.

- B then sends a request to A to tell it to demote itself.

- A still hasn't received the response from Home, so it is still in a transaction, and it tells B that it is busy. B will have to retry the request.

It should be noted that B will be in the PENDING[definitions] state, so any requests to demote it from the Head position will be delayed.. This could lead to a chaining of nodes in the PENDING state, but as soon as node A receives its response from Home or times out, the requests in the chain will be successively accepted and processed.

Other Possible Race Conditions

The design of the SCI protocol prevents many race conditions from occurring, as has already been shown. Here are two other conditions that may arise, with a discussion of how the protocol resolves the issue.

Concurrent List Deletions

Even though the Head node performs most of the coherence actions for a shard list, the individual nodes are able to invalidate themselves, such as when they evict the block from their cache. This is referred to in the SCI standard as a "deletion" from the sharing list. Deletion is accomplished by having the invalidating node "lock" itself and then inform its forward and back nodes that they should now point to each other. This "locking" is essentially another PENDING state. A problem could arise, then, when two neighboring nodes try to invalidate themselves at the same time, as they would both be locked and not respond to each other's message. In this case, though, the protocol specifies that the node that is closest to the tail takes priority (IEEE pg156)[1]. So, the deadlock and/or race condition is averted by ordering the deletion from the tail towards the head.

Simultaneous Deletion and Invalidation

Another potential race condition occurs when the Head node tries to purge the list of sharers at the same time as a node in the list tries to remove itself from the list. Suppose that the Head node is trying to purge node A, which has node B as its next node in the list, while at the same time, node B is trying to remove itself from the list. Node B needs to tell node A to update its next pointer, but the Head node will need to access node A's next pointer to know what the next node in the list is. An illustration is shown below. The 1 and the 2 represent the messages being sent from the respective node to node A that have not yet arrived.

What will happen is that one of the messages will reach node A first. While A is responding to this message, it will tell other nodes that it is busy, in a similar manner to the notion of the atomic transactions. If node B's message arrives first, causing the message from the Head node to be rejected. Node A will have its forward pointer changed at this point, so when the Head node resends its request, all will work as expected. The second option is more difficult. If the Head node's message arrives first, node A will be invalidated, so that when the message from node B is sent again, node A will simply inform node B that it is no longer part of the list. As well, the Head node will now have its forward pointer pointing at node B. Node A has thus been successfully purged, but we arrive at an impasse, as now the Head node will try to invalidate the locked node B, while node B will try to tell the Head node to change its forward pointer, with both nodes being inside a transaction. This situation is illustrated by the following figure.

The protocol does not specify how to handle this situation, but it would make sense for the protocol to allow one of the messages through, presumably the Head node's message. Node B could simply respond in the affirmative, telling the Head node what the following node is, but this would only work if node B notifies the next node of the deletion after it notifies node A. Otherwise, the node following B would have its back pointer pointing to the wrong node. The protocol standard seems to suggest that this is the case (IEEE, pg162)[1].

Definitions and Terms

- Head Node - The node at the beginning of the sharing list

- Home Node - The node responsible for keeping track of the Head node for a sharing list for a memory block, usually the node at which the memory block resides.

- PENDING state - The intermediate state for a node while it is attempting to become the Head node in the sharing list. This includes the transaction involved in querying the Home node and the transaction involved in demoting the current Head node.

- SCI - Scalable Coherent Interface

References

1. "IEEE Standard for Scalable Coherent Interface (SCI).," IEEE Std 1596-1992 , vol., no., pp.i, 1993. doi: 10.1109/IEEESTD.1993.120366

URL: http://ieeexplore.ieee.org.proxied.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=347683&isnumber=8049

2. How SCI Coherence Works