CSC/ECE 506 Spring 2011/ch7 jp: Difference between revisions

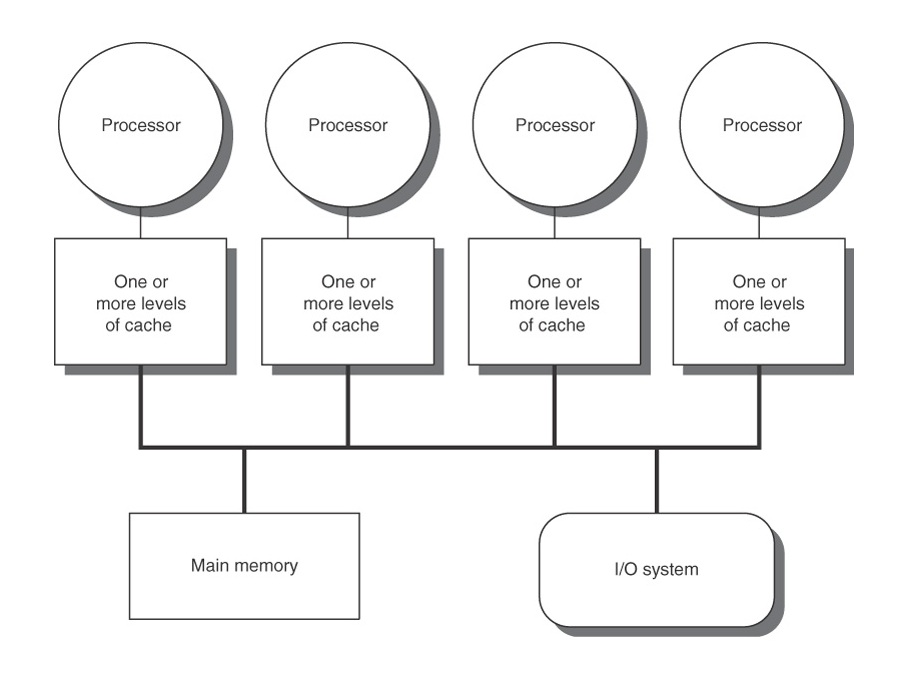

Figure: Shared memory system with a dedicated cache for each processor [4]


Latest revision as of 02:46, 2 May 2011

Introduction

Shared memory architecture is one of the two major classes of large computer system architectures. In a shared memory architecture, the physical memory of all the processors is mapped into a single memory map. Every processor can potentially access all the memory in the system, although access times may differ (e.g., NUMA). Threads or processes communicate by reading and writing shared memory.

Advantages of shared memory system

- Shared memory parallel programs and multi-threaded programs run automatically on a shared memory system.

- A single OS runs on the shared memory system, which simplifies maintenance, memory management, and scheduling.

- The total amount of memory is large, since it is simply the sum of the memory of the individual nodes.

- Communicating data between caches is faster than in the message passing model.

- Sharing can be finer grained than in message passing, which adds the overhead of creating and sending a message for each piece of information.

- Common data can be shared between threads running on different processors (e.g., read-only shared data).

Disadvantages of shared memory system

- The cost of providing shared memory grows superlinearly with the number of processors, whereas the cost of the message passing model grows linearly.

Hardware Support

Shared memory architecture needs some hardware support for its implementation, unlike a message passing model, which can rely on software to send and receive messages. Three things are necessary in hardware for correct execution of a shared memory parallel program on a multiprocessor system:

- Cache Coherence protocol

- Memory consistency model

- Hardware Synchronization
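As an illustration of the third item, hardware synchronization usually means an atomic read-modify-write instruction such as test-and-set. The following is a minimal sketch of a spin lock built on C11's `atomic_flag`, not a description of any particular machine; the function names `spin_lock`, `spin_try_lock`, and `spin_unlock` are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical lock variable; clear means "unlocked". */
static atomic_flag lock_flag = ATOMIC_FLAG_INIT;

/* Try once: test-and-set returns the previous value, so a previous
 * value of false means this caller acquired the lock. */
bool spin_try_lock(void)
{
    return !atomic_flag_test_and_set_explicit(&lock_flag,
                                              memory_order_acquire);
}

/* Spin until the atomic test-and-set succeeds. */
void spin_lock(void)
{
    while (!spin_try_lock()) {
        /* busy wait */
    }
}

/* Release the lock so that another processor's test-and-set can succeed. */
void spin_unlock(void)
{
    atomic_flag_clear_explicit(&lock_flag, memory_order_release);
}
```

The atomicity of the test-and-set is what the hardware has to provide; a plain load followed by a plain store could let two processors both see the lock as free.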

Cache Coherence Problem

In a single-processor (single-core) system, maintaining cache coherence is simple, but in a multiprocessor system it is not. Data can be present in any processor's cache, and the protocol needs to ensure that the data is the same in all caches. If it cannot ensure that all the copies are the same, it needs to flag a cache line to indicate that it is not up to date.

The figure shows a 4-processor shared memory system in which each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. If P2 then reads the same memory location, M1 is also stored in P2’s cache. Now, if P1 changes the value of M1, the two copies of the same data, residing in different caches, become different. When P2 operates on M1, it uses the stale value of M1 stored in its cache. It is the responsibility of the cache coherence protocol to prevent this. Hardware support is needed to provide a coherent view of data in multiple caches. This is known as the write propagation requirement.

One may think that the cache write policy can provide cache coherence, but this is not true. The write policy only controls how a changed value in a cache is propagated to a lower-level cache or to main memory. It is not responsible for propagating changes to other caches.
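The stale-read scenario can be reproduced with a small single-threaded simulation. This is only a sketch under simplifying assumptions: memory consists of the single location M1, each processor has a one-line cache, and the caches are write-through, so any stale copy comes from missing invalidation rather than from the write policy. The names `Cache`, `cache_read`, `cache_write`, and `p2_value_after_p1_write` are hypothetical.

```c
#include <stdbool.h>

#define NUM_CPUS 4

/* Hypothetical model: one memory word M1 and one cache line per processor. */
typedef struct {
    bool valid;   /* does this cache hold a copy of M1? */
    int  value;   /* the cached copy */
} Cache;

static int   memory_m1 = 10;
static Cache caches[NUM_CPUS];

/* Read M1 through processor cpu's cache; a miss fetches from memory. */
int cache_read(int cpu)
{
    if (!caches[cpu].valid) {
        caches[cpu].value = memory_m1;
        caches[cpu].valid = true;
    }
    return caches[cpu].value;
}

/* Write-through store. With invalidate_others set, every other copy is
 * invalidated (write propagation); without it, other copies go stale. */
void cache_write(int cpu, int value, bool invalidate_others)
{
    caches[cpu].value = value;
    caches[cpu].valid = true;
    memory_m1 = value;
    if (invalidate_others) {
        for (int i = 0; i < NUM_CPUS; i++)
            if (i != cpu)
                caches[i].valid = false;
    }
}

/* Reset memory and all caches to the initial state. */
void reset_model(void)
{
    memory_m1 = 10;
    for (int i = 0; i < NUM_CPUS; i++)
        caches[i].valid = false;
}

/* P1 and P2 both read M1, P1 writes 20, then P2 reads again. */
int p2_value_after_p1_write(bool coherent)
{
    reset_model();
    cache_read(1);
    cache_read(2);
    cache_write(1, 20, coherent);
    return cache_read(2);
}
```

In this model `p2_value_after_p1_write(false)` returns the stale value 10 even though memory already holds 20, while `p2_value_after_p1_write(true)` returns 20. The write-through policy updated memory in both cases, which is why the write policy alone does not give coherence.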

Memory Consistency Problem

Memory consistency deals with the ordering of memory operations (loads and stores) to different memory locations. In a single-processor system, code executes correctly if the compiler preserves the order of accesses to synchronization variables and other dependent variables. In a shared memory system with multiple processors, however, two threads could access the same shared data (such as a synchronization variable), and the output could change depending on which thread reaches the shared data first. If this happens, the program output on a uniprocessor system and on a multiprocessor system will differ.

Maintaining program order is very important for memory consistency, but it comes with performance degradation. The various memory consistency models trade off performance against ease of programming.
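The ordering issue can be made concrete with a flag-and-data handoff. The sketch below uses C11 atomics, which are not part of the original text; `data`, `ready`, `produce`, and `try_consume` are hypothetical names. The intent is that `produce` runs on one thread and `try_consume` on another.

```c
#include <stdatomic.h>
#include <stdbool.h>

static int         data;    /* ordinary shared variable */
static atomic_bool ready;   /* synchronization flag, initially false */

/* Producer: write the data, then publish it. The release store keeps
 * the write to data from being reordered after the write to ready. */
void produce(int value)
{
    data = value;
    atomic_store_explicit(&ready, true, memory_order_release);
}

/* Consumer: returns true and fills *out once the data is published.
 * The acquire load keeps the read of data from moving ahead of the
 * read of ready. */
bool try_consume(int *out)
{
    if (!atomic_load_explicit(&ready, memory_order_acquire))
        return false;
    *out = data;
    return true;
}
```

If `ready` were a plain variable, the compiler or the hardware would be free to reorder the two stores in `produce`, and a consumer could observe the flag set while still reading the old value of `data`. Stronger consistency models forbid that reordering at some cost in performance.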

Peterson's Algorithm

Peterson’s algorithm, also known as Peterson’s solution, addresses the critical section problem by meeting its three criteria: mutual exclusion, progress, and bounded waiting[1].

    int turn;            // turn for execution
    int interested[2];   // interested[i] is 1 when process i wants to enter

    void enter_region(int process)   // process is 0 or 1
    {
        int otherProcess;

        otherProcess = 1 - process;
        interested[process] = 1;     // announce the intent to enter
        turn = process;              // the last process to set turn waits
        while (turn == process && interested[otherProcess] == 1)
        {
            // busy wait
        }
    }

    void leave_region(int process)
    {
        interested[process] = 0;     // leaving the critical section
    }

The algorithm uses two variables, interested and turn. Setting interested[i] to 1 indicates that process i wants to enter the critical section. The variable turn breaks ties: when both processes are interested, the process that wrote turn last keeps waiting, so the one that wrote it first enters.

To ensure mutual exclusion, interested and turn are set so that only one process can be in the critical section at a time. To ensure progress, once a process has left the critical section, the other process can determine that it is allowed to enter next. To ensure bounded waiting, a process waits no longer than one turn to enter the critical section: if the other process leaves and tries to re-enter, it sets turn to itself and therefore yields to the waiting process.
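The listing above relies on loads and stores taking effect in program order. On compilers and processors that reorder memory operations, plain `int` variables do not guarantee this. The following is a sketch of the same algorithm using C11 sequentially consistent atomics, which is an assumption added here and not part of the original listing; `shared_counter` and `increment_counter` are hypothetical names used to show a critical section.

```c
#include <stdatomic.h>

static atomic_int turn;            /* last process to request entry */
static atomic_int interested[2];   /* 1 when process i wants to enter */
static long shared_counter;        /* hypothetical data guarded by the lock */

void enter_region(int process)     /* process is 0 or 1 */
{
    int other = 1 - process;

    /* atomic_store and atomic_load default to sequential consistency,
     * so these operations are not reordered with respect to each other. */
    atomic_store(&interested[process], 1);
    atomic_store(&turn, process);
    while (atomic_load(&turn) == process &&
           atomic_load(&interested[other]) == 1) {
        /* busy wait */
    }
}

void leave_region(int process)
{
    atomic_store(&interested[process], 0);
}

/* Example critical section: only one process updates the counter at a time. */
void increment_counter(int process)
{
    enter_region(process);
    shared_counter++;
    leave_region(process);
}
```

Each of the two threads would call `increment_counter` with its own process number (0 or 1). The algorithm as written supports exactly two processes.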

Page tables

An issue with multiprocessor systems is how to enter the critical section. One software solution is to use page tables to ensure mutual exclusion, progress, and bounded waiting, the three criteria that must be met[2].

Memory Coherence

As with other forms of synchronization, page tables for multiprocessor systems must have strategies for handling data. As discussed in Li and Hudak's article, there are two basic approaches to page synchronization: invalidation and write-broadcast. In the invalidation approach, there is only one owner for each page, and the owner has either read or write access to the page. In the write-broadcast approach, as the name implies, a write is applied to all copies of the page before execution returns to the faulting instruction[3].

As mentioned for the invalidation approach, each page must have an owner. There are two basic ownership approaches for page tables: fixed and dynamic. In a fixed ownership approach, one processor always owns the same page. Other processors that want read or write access to the page must negotiate with the owner for access. Dynamic ownership has two subcategories: centralized and distributed management[3].
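The difference between the two approaches can be sketched with a toy model in which a page is a single integer and each processor may hold a copy. This is an illustration only, not code from Li and Hudak's article; `SharedPage`, `write_invalidate`, `write_broadcast`, and `count_copies` are hypothetical names.

```c
#include <stdbool.h>

#define NUM_PROCS 3

/* Hypothetical model of one shared page replicated across processors. */
typedef struct {
    bool has_copy[NUM_PROCS];   /* which processors hold a copy */
    int  copy[NUM_PROCS];       /* page contents, modeled as one int */
} SharedPage;

/* Invalidation: the writer keeps the only valid copy. */
void write_invalidate(SharedPage *pg, int writer, int value)
{
    for (int i = 0; i < NUM_PROCS; i++)
        pg->has_copy[i] = (i == writer);
    pg->copy[writer] = value;
}

/* Write-broadcast: every existing copy receives the new value. */
void write_broadcast(SharedPage *pg, int writer, int value)
{
    pg->has_copy[writer] = true;
    for (int i = 0; i < NUM_PROCS; i++)
        if (pg->has_copy[i])
            pg->copy[i] = value;
}

/* Number of processors currently holding a valid copy. */
int count_copies(const SharedPage *pg)
{
    int n = 0;
    for (int i = 0; i < NUM_PROCS; i++)
        if (pg->has_copy[i])
            n++;
    return n;
}
```

After a broadcast write every processor still holds a valid, updated copy, so later reads are local. After an invalidating write only the writer holds the page, so other processors fault on their next access.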

Centralized Management

In centralized management of dynamically owned pages, a single central processor maintains a page table that has an entry for each page, and each entry has three fields: owner, copy set, and lock. The owner field holds the single processor that owns the page, which can also be thought of as the most recent processor to have write access. The copy set field lists all processors that have a copy of the page, which makes it possible to send invalidations without a broadcast. The lock field is used for synchronizing requests[3].
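The three fields can be sketched as a C structure together with simplified fault handlers. This is a single-threaded illustration under stated assumptions: the copy set is a bitmask, the lock is a plain flag that only marks where competing requests would be serialized, and the names `PageEntry`, `manager_read_fault`, and `manager_write_fault` are hypothetical rather than taken from the article.

```c
#include <stdbool.h>

#define NUM_PAGES 8

/* One entry of the manager's table, with the three fields described above. */
typedef struct {
    int      owner;      /* processor that most recently had write access */
    unsigned copy_set;   /* bit i set: processor i holds a read copy */
    bool     lock;       /* serializes requests for this page */
} PageEntry;

static PageEntry page_table[NUM_PAGES];   /* kept on the central manager */

/* Read fault: record the requester in the copy set and return the owner,
 * which is the processor that must supply the page. */
int manager_read_fault(int page, int requester)
{
    PageEntry *e = &page_table[page];
    e->lock = true;    /* a real manager would block competing requests */
    e->copy_set |= 1u << requester;
    int owner = e->owner;
    e->lock = false;
    return owner;
}

/* Write fault: return the set of processors whose copies must be dropped
 * (old owner plus copy set, minus the requester) and make the requester
 * the new owner. */
unsigned manager_write_fault(int page, int requester)
{
    PageEntry *e = &page_table[page];
    e->lock = true;
    unsigned to_invalidate =
        (e->copy_set | (1u << e->owner)) & ~(1u << requester);
    e->copy_set = 0;
    e->owner = requester;
    e->lock = false;
    return to_invalidate;
}
```

Because the manager knows the copy set, a write fault only contacts the processors named in the returned mask instead of broadcasting to every processor.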

Distributed Management

There are two schemes for distributed management: fixed and dynamic. In fixed distributed management, each processor in the system is given a predetermined subset of pages to manage. A simple, straightforward distribution of responsibility is to divide the pages evenly among the processors, although other mappings could be tailored to the application's needs[3].
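An even division of pages can be expressed as a mapping function from page number to managing processor. The two mappings below are illustrative assumptions (a modulo mapping and a blocked variant); the names `manager_of` and `manager_of_block` and the value of `NUM_PROCS` are hypothetical.

```c
#define NUM_PROCS 4

/* Even, interleaved distribution: page p is managed by processor p mod N. */
int manager_of(int page)
{
    return page % NUM_PROCS;
}

/* Blocked variant: runs of pages_per_block consecutive pages share one
 * manager, which may suit applications with good spatial locality. */
int manager_of_block(int page, int pages_per_block)
{
    return (page / pages_per_block) % NUM_PROCS;
}
```

On a page fault, the faulting processor computes the manager locally from the page number and sends its request there, so no central table lookup is needed.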

In a dynamic distributed management system, each processor keeps track of the ownership of all pages in its own local table. In place of the owner field of the centralized management scheme, each entry has a probOwner field, which holds the probable owner of the page and is used to direct requests on a page fault[3].
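Locating a page through probOwner hints can be sketched as following a chain of forwarding pointers. The model below is a simplification and an assumption, not the article's exact protocol: the true owner is the processor whose hint names itself, hints are assumed never to form a cycle, and only the old owner and the requester update their hints on a transfer. The names `prob_owner`, `find_owner`, and `take_ownership` are hypothetical.

```c
#define NUM_PROCS 4
#define NUM_PAGES 2

/* prob_owner[p][page] is processor p's guess at the owner of the page.
 * The true owner is the processor whose hint points to itself. */
static int prob_owner[NUM_PROCS][NUM_PAGES];

/* Follow the hints from the requester until a processor names itself.
 * Returns the owner and stores the number of forwards in *hops.
 * Assumes the hints contain no cycle. */
int find_owner(int requester, int page, int *hops)
{
    int current = requester;
    *hops = 0;
    while (prob_owner[current][page] != current) {
        current = prob_owner[current][page];
        (*hops)++;
    }
    return current;
}

/* Write fault: locate the owner, then transfer ownership to the requester.
 * The old owner now points at the requester, keeping the chain valid. */
void take_ownership(int requester, int page)
{
    int hops;
    int old_owner = find_owner(requester, page, &hops);
    prob_owner[old_owner][page] = requester;
    prob_owner[requester][page] = requester;
}
```

A stale hint costs extra forwarding hops but still leads to the owner, which is why the field only needs to hold a probable owner rather than an exact one.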

References

1. http://en.wikipedia.org/wiki/Memory_coherence

2. http://en.wikipedia.org/wiki/Peterson%27s_algorithm

3. Li, K. and Hudak, P. Memory Coherence in Shared Virtual Memory Systems. ACM Transactions on Computer Systems, 7(4):321-359, November 1989.

4. http://web.sfc.keio.ac.jp/~rdv/keio/sfc/teaching/architecture/architecture-2007/lec08.html