CSC/ECE 506 Spring 2010/ch 11 maf: Difference between revisions

(→ReadX) |

|||

| (67 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

The cache coherence protocol presented in Chapter 11 of [[#References | Solihin 2008]] is simpler than most real directory-based protocols. This textbook supplement presents the directory-based protocols used by the DASH multiprocessor and the Alewife multiprocessor. It concludes with an argument of why complexity might be undesirable in cache coherence protocols. | |||

=DASH Coherence Protocol= | |||

==Overview== | |||

The DASH multiprocessor uses a two-level coherence protocol, relying on a snoopy bus to ensure cache coherence within cluster and a directory-based protocol to ensure coherence across clusters. The protocol uses a <b>Remote Access Cache (RAC)</b> at each cluster, which essentially consolidates memory blocks from remote clusters into a single cache on the local snoopy bus. When a request is issued for a block from a remote cluster that is not in the RAC, the request is denied but the request is also forwarded to the owner. The owner supplies the block to the RAC. Eventually, when the requestor retries, the block will be waiting in the RAC. | The DASH multiprocessor uses a two-level coherence protocol, relying on a snoopy bus to ensure cache coherence within cluster and a directory-based protocol to ensure coherence across clusters. The protocol uses a <b>Remote Access Cache (RAC)</b> at each cluster, which essentially consolidates memory blocks from remote clusters into a single cache on the local snoopy bus. When a request is issued for a block from a remote cluster that is not in the RAC, the request is denied but the request is also forwarded to the owner. The owner supplies the block to the RAC. Eventually, when the requestor retries, the block will be waiting in the RAC. | ||

Because the local snoopy bus of the home cluster maintains coherence with the home memory, the directory does not keep track of the block's status in the home cluster. Instead, the directory | Because the local snoopy bus of the home cluster maintains coherence with the home memory, the directory does not keep track of the block's status in the home cluster. Instead, the directory keeps track of which remote clusters are holding the block. This is in contrast to the protocols discussed by [[#References | Solihin 2008]] which do not incorporate a local bus and therefore use directories which track the state of the block across all caches. The DASH directories are memory-based and use a full bit vector representation. | ||

DASH uses a three state invalidation protocol for the caches with Dirty, Shared, and Invalid states. This is essentially the Modified-Shared-Invalid (MSI) protocol that [[#References | Solihin 2008]] describes. The three directory states are Dirty-Remote, Shared-Remote, and Uncached-Remote. These correspond roughly to the Exclusive/Modified, Shared, and Uncached directory states discussed in Chapter 11 of [[#References | Solihin 2008]]. However, whereas the Exclusive/Modified state only indicates that a block could be dirty, a block in the Dirty-Remote state is always considered dirty. Furthermore, a block that is dirty in its home cluster will be in state Uncached-Remote in the directory. So the correspondence is inexact. | DASH uses a three state invalidation protocol for the caches with Dirty, Shared, and Invalid states. This is essentially the Modified-Shared-Invalid (MSI) protocol that [[#References | Solihin 2008]] describes. The three directory states are Dirty-Remote, Shared-Remote, and Uncached-Remote. These correspond roughly to the Exclusive/Modified, Shared, and Uncached directory states discussed in Chapter 11 of [[#References | Solihin 2008]]. However, whereas the Exclusive/Modified state only indicates that a block could be dirty, a block in the Dirty-Remote state is always considered dirty. Furthermore, a block that is dirty in its home cluster will be in state Uncached-Remote in the directory. So the correspondence is inexact. | ||

| Line 11: | Line 13: | ||

The DASH coherence protocol supports three different memory requests: Read, Writeback, and Read-Exclusive. Read and Read-Exclusive are equivalent to the Read and ReadX requests present in [[#References | Solihin 2008]]. Writeback is similar to a Flush. However, a writeback is used when a cache wants to evict a dirty block. A writeback from a remote cluster ends with the block entering the Uncached-Remote state in the directory. | The DASH coherence protocol supports three different memory requests: Read, Writeback, and Read-Exclusive. Read and Read-Exclusive are equivalent to the Read and ReadX requests present in [[#References | Solihin 2008]]. Writeback is similar to a Flush. However, a writeback is used when a cache wants to evict a dirty block. A writeback from a remote cluster ends with the block entering the Uncached-Remote state in the directory. | ||

There are too many cases to express the protocol succinctly as a finite state machine. Instead, pseudocode for handling Read and Read-Exclusive requests from the appendix of [[#References | Lenoski <i>et al.</i> 1990]] follows. The pseudocode refers to a number of hardware subsystems that are beyond the scope of this article& | There are too many cases to express the protocol succinctly as a finite state machine. Instead, pseudocode for handling Read and Read-Exclusive requests from the appendix of [[#References | Lenoski <i>et al.</i> 1990]] follows. The pseudocode refers to a number of hardware subsystems that are beyond the scope of this article—the directory controller (DC), pseudo-CPU (PCPU), and reply controller (RC). Descriptions may be found in [[#References | Lenoski <i>et al.</i> 1990]]. | ||

==Read== | |||

When a Read request is issued, it first goes to the snoopy bus of the local cluster. If the block is already in the local RAC or in a local processor's cache, then one of these caches supplies the data. If the block was dirty and the local cluster is the home cluster, then the owner will also have to flush to main memory and transition to the shared state. If the block was dirty and the local cluster is a remote cluster, then the owner flushes to the local RAC which becomes the new owner. In this last case, locally the block will be shared, but remotely it will be considered dirty. | |||

If the data is not already held locally, but the local cluster is the home cluster and the block is not held in the dirty state by a remote cluster, then memory supplies the data. However, if the block is dirty in a remote cluster, the bus request is denied, prompting a retry. Meanwhile, the request is fowarded to the owning cluster. The owner flushes the block and transitions to the shared state. The directory transitions to the shared state as well. | |||

If the data is not already held locally and the local cluster is not the home cluster, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the home cluster. When the block is owned by a remote cluster, the request is forwarded to the owning cluster and the owner flushes the block and transitions to the shared state. The home cluster sends the data to the requestor's RAC and the directory transitions to the shared state. | |||

// " | // "Normal flow of read request bus transaction." | ||

// From Lenoski <i>et al.</i> 1990 (see References). | // From Lenoski <i>et al.</i> 1990 (see References). | ||

| Line 64: | Line 72: | ||

} | } | ||

==ReadX== | |||

When a ReadX request is issued, it first goes to the snoopy bus of the local cluster. If the block is being held dirty in the local RAC or in a local processor's cache, then the owner supplies the data and transitions to the invalid state. | |||

Otherwise, if the local cluster is the home cluster and the block is in the uncached or shared state in the directory, then memory supplies the data and other local caches transistion to the invalid state. If the block is in the shared state in the directory, then the directory must also send out invalidation requests to the remote clusters that are in the sharing vector and transition the block to the uncached state in the directory. However, if the block is in the dirty state in the directory, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the owning cluster. The owner flushes and transitions to the invalid state. The directory transitions the block to the uncached state. | |||

Otherwise, if the local cluster is not the home cluster, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the home cluster. If the block is in the uncached or shared state in the directory, the home cluster will send the data to the requestor's RAC and the directory transitions to the dirty state. If the block is in the shared state in the directory, the directory must also send invalidation requests to the sharing clusters. However, if the block is in the dirty state in the directory, the request is forwarded to the owning cluster. The owner sends the dirty block to the RAC of the requestor and an acknowledgement to home and transitions to the invalid state. | |||

// " | // "Normal flow of read-exclusive request bus transaction." | ||

// From Lenoski <i>et al.</i> 1990 (see References). | // From Lenoski <i>et al.</i> 1990 (see References). | ||

| Line 156: | Line 164: | ||

} | } | ||

== Handling Races == | |||

[[#References | Lenoski <i>et al.</i> 1990]] discuss two race conditions that may arise in the DASH multiprocessor. These are essentially identical to two of the race conditions discussed in Chapter 11 of [[#References | Solihin 2008]]. The first occurs when a Read from requester R is forwarded from home H to owner O, but O sends a Writeback to H before the forwarded Read arrives. This situation is diagrammed below. | |||

R --> Read --> H --> Read (Forward) --> O | |||

O --> Writeback --> H | |||

In this case, O no longer holds a valid copy of the data to send to R. Instead, O can simply deny the request by sending R a NAK (negative acknowledgement) message. R can then resend the Read request to H. Since ownership of the block returned to H while the forwarded Read was in transit, H can respond to R with the data. This is the same solution proposed by [[#References | Solihin 2008]]. It is possible for R to starve if ownership of the block continues to be passed around, but this is unlikely to continue for long. | |||

Another possible race occurs when the home node H replies with data (ReplyD) to a Read from requester R but an invalidation (Inv) arrives first. | |||

R --> Read --> H -----> ReplyD -----> R | |||

A --> ReadX --> H --> Inv --> R | |||

Handled naively, R would invalidate the block in its cache but then write the stale data into its cache when it arrived later violating cache coherence. Instead, DASH uses the snoopy RAC to watch for invalidations of pending Reads. Any reply to a Read that was pending when an Inv of the block was observed is considered to be stale and handled by sending a NAK to R (rather than forwarding the data). | |||

This is subtly different from the remedy proposed by [[#References | Solihin 2008]] which also relies on keeping track of outstanding transactions. Whereas DASH converts state replies to NAKs, [[#References | Solihin 2008]] suggests that the requester delay responding to the Inv until after the response to the pending Read arrives. | |||

While [[#References | Lenoski <i>et al.</i> 1990]] express confidence that the DASH protocol handles all races correctly, they also suggest that the protocol is too complex to validate analytically. Instead, they tested it empirically against parallel programs with known results. This leaves open the possibility that races which violate cache coherence exist. | |||

= LimitLESS: Alewife Coherence Protocol = | |||

==Overview== | |||

LimitLESS is the cache coherence protocol used by the Alewife multiprocessor. It uses a hardware-based limited pointer representation for directory representation, meaning that it maintains a cache of pointers to the nodes that are sharing a memory block. If the number of sharers exceeds some maximum size, then the system switches over to a memory-based full bit vector representation. Unlike the DASH multiprocessor, the Alewife multiprocessor is not organized into clusters of nodes with local buses, and therefore cache coherence through the system is maintain through the directory. | |||

LimitLESS defines three states that a cache block may be in: Invalid, Read-Only, or Read-Write. These correspond to the Invalid, Shared, and Modified states of the MSI protocol described in [[#References | Solihin 2008]] and to the Invalid, Shared, and Dirty states of the DASH. A memory block in a LimitLESS directory may be in one of four states: Read-Only, Read-Write, Read-Transaction, or Write-Transaction. Read-Only is similar to the Shared state from [[#References | Solihin 2008]] and from the DASH. Read-Write is similar to the Exclusive/Modified state from [[#References | Solihin 2008]] and the Dirty-Remote state from the DASH. The other two states, Read-Transaction and Write-Transaction, are transient states that a memory block enters when it is waiting for invalidation acknowledgements. One interesting difference between LimitLESS and the DASH coherence protocol and [[#References | Solihin 2008]] is the absence of a state indicating that the block is uncached. The initial state of a memory block is Read-Only. These states are summarized in the following table. | |||

<br> | |||

{| border="1" align="center" style="width: 625px;" | |||

|+ "Directory states," from [[#References | Chaiken <i>et al.</i> 1991.]] | |||

|- | |||

| Component || Name || Meaning | |||

|- | |||

| Memory || Read-Only <br> Read-Write <br> Read-Transaction <br> Write-Transaction | |||

| Some number of caches have read-only copies of the data. <br> Exactly one cache has a read-write copy of the data. <br> Holding read request, update is in progress. <br> Holding write request, invalidation is in progress. | |||

|- | |||

| Cache || Invalid <br> Read-Only <br> Read-Write | |||

| Cache block may not be read or written. <br> Cache block may be read, but not written. <br> Cache block may be read or written. | |||

|} | |||

<br> | |||

LimitLESS defines three memory requests: RREQ (Read Request), WREQ (Write Request), and REPM (Replace Modified). These correspond to the Read, Read-Exclusive, and Writeback requests on the DASH. RREQ and WREQ are also more or less equivalent to Read and ReadX respectively as defined by [[#References | Solihin 2008]]. Like a Writeback on the DASH, a REPM causes the block to be written back to memory and also clears its sharers list in the directory. LimitLESS also defines two reply messages that a cache may send to the directory: UPDATE and ACKC (Invalidate Acknowledgement). These correspond to the Flush and InvAck messages defined in [[#References | Solihin 2008]]. | |||

LimitLESS defines four messages that the directory may send to the caches: RDATA (Read Data), WDATA (Write Data), INV (Invalidate), and BUSY (Busy Signal). RDATA and WDATA are essentially the same as the ReplyD message from [[#References | Solihin 2008]] with the addition that WDATA indicates that a cache has received exclusive access to the block and may write to it until it receives an INV message. The INV message is equivalent to the Inv message described in [[#References | Solihin 2008]], and the BUSY message correspond to a NAK. Unlike the protocol described in [[#References | Solihin 2008]], the LimitLESS protocol does not support the concept of an intervention which downgrades the state of a cached block to shared. Instead, downgrading is accomplished through invalidation. | |||

The different protocol messages specified by LimitLESS are summarized in the table below. | |||

<br> | |||

{| border="1" align="center" style="width: 625px;" | |||

|+ "Protocol messages for hardware coherence," from [[#References | Chaiken <i>et al.</i> 1991.]] | |||

|- | |||

| Type || Symbol || Name || Data? | |||

|- | |||

| Cache to Memory || RREQ <br> WREQ <br> REPM <br> UPDATE <br> ACKC | |||

| Read Request <br> Write Request <br> Replace Modified <br> Update <br> Invalidate Ack. | |||

| No <br> No <br> Yes <br> Yes <br> No | |||

|- | |||

| Memory to Cache || RDATA <br> WDATA <br> INV <br> BUSY | |||

| Read Data <br> Write Data <br> Invalidate <br> Busy Signal | |||

| Yes <br> Yes <br> No <br> No | |||

|} | |||

<br> | |||

==State Diagram== | |||

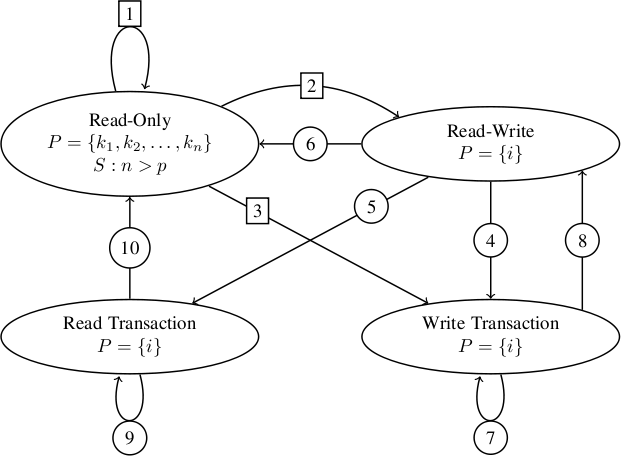

The following state transition diagram and table of annotations represent the LimitLESS protocol. The numbers on the edges in the state diagram correspond to entries in the "Transition Label" column in the table of annotations. <i>P</i> is the set of sharers that is maintained in the directory. The note <i>S</i> : <i>n</i> > <i>p</i> is a reminder that when the number of sharers exceeds the limit of the hardware-based pointer set, the system switches over to a memory-based full bit vector. Some transitions, namely those into and out of the transition states Read-Transaction and Write-Transaction, require an acknowledgement count (AckCtr), which keeps track of how many caches still need to send ACKC messages before the block will be invalidated throughout the system. | |||

<br> | |||

[[Image:Limitless_fsm.png|thumb|center|621px|alt=LimitLESS FSM|"Directory state transition diagram, " from [[#References | Chaiken <i>et al.</i> 1991.]]]] | |||

<br> | |||

{| border="1" align="center" style="text-align: center; font-family: serif; width: 700px;" | |||

|+ "Annotation of the state transition diagram," from [[#References | Chaiken <i>et al.</i> 1991.]] | |||

|- | |||

| Transition Label || Input Message || Precondition || Directory Entry Change || Output Message(s) | |||

|- | |||

| 1 || <i>i</i> → RREQ || — || <i>P</i> = <i>P</i> ∪ {<i>i</i>} || RDATA → <i>i</i> | |||

|- | |||

| 2 || <i>i</i> → WREQ <br> <i>i</i> → WREQ || <i>P</i> = {<i>i</i>} <br> <i>P</i> = {} || — <br> <i>P</i> = {<i>i</i>} || WDATA → <i>i</i> <br> WDATA → <i>i</i> | |||

|- | |||

| 3 || <i>i</i> → WREQ <br> <i>i</i> → WREQ | |||

| <i>P</i> = {<i>k</i><sub>1</sub>, …, <i>k</i><sub><i>n</i></sub>} ∧ <i>i</i> ∉ <i>P</i> <br> <i>P</i> = {<i>k</i><sub>1</sub>, …, <i>k</i><sub><i>n</i></sub>} ∧ <i>i</i> ∈ <i>P</i> | |||

| <i>P</i> = {<i>i</i>}, AckCtr = <i>n</i> <br> <i>P</i> = {<i>i</i>}, AckCtr = <i>n</i> - 1 | |||

| ∀<i>k</i><sub><i>j</i></sub> INV → <i>k</i><sub><i>j</i></sub> <br> ∀<i>k</i><sub><i>j</i></sub> ≠ i INV → <i>k</i><sub><i>j</i></sub> | |||

|- | |||

| 4 || <i>j</i> → WREQ || <i>P</i> = {<i>i</i>} || <i>P</i> = {<i>j</i>}, AckCtr = 1 || INV → <i>i</i> | |||

|- | |||

| 5 || <i>j</i> → RREQ || <i>P</i> = {<i>i</i>} || <i>P</i> = {<i>j</i>}, AckCtr = 1 || INV → <i>i</i> | |||

|- | |||

| 6 || <i>i</i> → REPM || <i>P</i> = {<i>i</i>} || <i>P</i> = {} || — | |||

|- | |||

| 7 || <i>j</i> → RREQ <br> <i>j</i> → WREQ <br> <i>j</i> → ACKC <br> <i>j</i> → REPM | |||

| — <br> — <br> AckCtr ≠ 1 <br> — | |||

| — <br> — <br> AckCtr = AckCtr - 1 <br> — | |||

| BUSY → <i>j</i> <br> BUSY → <i>j</i> <br> — <br> — | |||

|- | |||

| 8 || <i>j</i> → ACKC <br> <i>j</i> → UPDATE | |||

| AckCtr = 1, <i>P</i> = {<i>i</i>} <br> <i>P</i> = {<i>i</i>} | |||

| AckCtr = 0 <br> AckCtr = 0 | |||

| WDATA → <i>i</i> <br> WDATA → <i>i</i> | |||

|- | |||

| 9 || <i>j</i> → RREQ <br> <i>j</i> → WREQ <br> <i>j</i> → REPM | |||

| — <br> — <br> — || — <br> — <br> — | |||

| BUSY → <i>j</i> <br> BUSY → <i>j</i> <br> — | |||

|- | |||

| 10 || <i>j</i> → UPDATE <br> <i>j</i> → ACKC | |||

| <i>P</i> = {<i>i</i>} <br> <i>P</i> = {<i>i</i>} | |||

| AckCtr = 0 <br> AckCtr = 0 | |||

| RDATA → <i>i</i> <br> RDATA → <i>i</i> | |||

|} | |||

<br> | |||

The "Input Message" column indicates the message to the directory that prompts the transition and the cache that sent it. The "Precondition" column indicates what state P and AckCtr must be in prior to the transition. The "Directory Entry Change" column indicates any changes which are made to P and AckCtr during the transition. The "Output Message(s)" column indicates any messages that the directory sends out during the transition. | |||

==Handling Races== | |||

[[#References | Chaiken <i>et al.</i> 1991]] do not discuss how LimitLESS handles race conditions. However, it is possible to infer how LimitLESS would handle the first case discussed for the DASH multiprocessor. This is the case where the owner O of a dirty block writes it back to home H and evicts it before the Read from the requesting processor R can reach it. In the LimitLESS protocol, this race is handled by the transition states. When a block is dirty (in the Read-Write state), a RREQ or WREQ to the directory causes the directory to send an INV to the owner. This requires the owner to send either an UPDATE, thereby providing the data to forward on to the requester, or an ACKC if the owner no longer has the data. However, in order for the owner to have evicted the data, it would have had to issue a REPM, writing the data back to memory. In either case, the data gets written back to memory, which can then reply with an RDATA or WDATA to the requester. This in contrast to the solution used in the DASH and [[#References | Solihin 2008]] which denies the memory request and forces it to retry. In the LimitLESS protocol, R still receives the data, whether or not the race condition occurs. The two cases are illustrated below. | |||

R --> RREQ --> H --> INV --> O --> UPDATE --> H --> RDATA --> R | |||

R --> RREQ --> H -----> INV -----> O --> ACKC --> H --> RDATA --> R | |||

O --> REPM --> H | |||

=Advocating for Simple Coherence Protols= | |||

[[#References | Kunz and Horowitz 2008]] note that there is an ever-growing body of literature dedicated to increasingly complex cache protocols designed to counteract various performance bottlenecks. [[#References | Kunz and Horowitz 2008]] argue that this trend to tune hardware protocols to improve the performance of existing software rather than to tune software to perform well on simpler hardware may not be the best approach. In addition, many of these studies, like [[#References | Dahlgren <i>et al.</i> 1994]], are conducted in simulation and this may obscure details which would hamper performance in a real implementation. | |||

In particular, they use the example of the Remote Access Cache (RAC), which, as discussed in an earlier section, is an important element of the cache coherence protocol of the DASH multiprocessor. RACs are intended to reduce the memory latency of accessing remote memory by allowing copies of blocks of remote memory that are evicted from a local cache to be stored locally in case they are accessed again. Using a FLASH multiprocessor, which allows the cache coherence protocol to be reprogrammed, [[#References | Kunz and Horowitz 2008]] compared the performance of a simple bit vector protocol with the same protocol extended with a RAC. Counter to intuition, the protocol with the RAC actually performs 30% worse on average than the simple protocol. | |||

[[#References | Kunz and Horowitz 2008]] explain that in their tests the inclusion of the RAC introduces a bottleneck. Contention for access to the RAC ends up slowing the system down more than simply requesting blocks directly from remote memory. They point out that is an example of unintended consequences that illustrates how adding a single enhancement to a protocol may actually end up hurting performance. In fact none of the enhancements that [[#References | Kunz and Horowitz 2008]] tested actually improved performance significantly. | |||

[[#References | Kunz and Horowitz 2008]] compare the development of complex cache coherence protocols to that of ever more complex instruction sets during the era of CISC processors. They suggest that a better solution might be to follow the RISC example and develop simple and efficient hardware and focus on optimizing software instead. | |||

=References= | =References= | ||

* David Chaiken, John Kubiatowicz, and Anant Agarwal (1991). [http://groups.csail.mit.edu/cag/papers/pdf/asplos4.pdf "LimitLESS directories: A scalable cache coherence scheme."] <i>ACM SIGPLAN Notices</i>. | |||

* Frederik Dahlgren, Michel Dubois, and Per Stenström (1994) [http://doi.acm.org/10.1145/192007.192028 "Combined performance gains of simple cache protocol extensions."] <i>ACM SIGARCH Computer Architecture News.</i> | |||

* Robert Kunz and Mark Horowitz (2008). [http://doi.acm.org/10.1145/1353522.1353532 "The case for simple, visible cache coherency."] In <i>Proceedings of the 2008 ACM SIGPLAN Workshop on Memory Systems Performance and Correctness</i>. | |||

* Daniel Lenoski, James Laudon, Kourosh Gharachorloo, Anoop Gupta, and John Hennessy (1990). [http://doi.acm.org/10.1145/325164.325132 "The directory-based cache coherence protocol for the DASH multiprocessor."] In <i>Proceedings of the 17th Annual International Symposium on Computer Architecture.</i> | * Daniel Lenoski, James Laudon, Kourosh Gharachorloo, Anoop Gupta, and John Hennessy (1990). [http://doi.acm.org/10.1145/325164.325132 "The directory-based cache coherence protocol for the DASH multiprocessor."] In <i>Proceedings of the 17th Annual International Symposium on Computer Architecture.</i> | ||

* Yan Solihin (2008). <i>Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems.</i> | |||

Latest revision as of 14:36, 26 April 2010

The cache coherence protocol presented in Chapter 11 of Solihin 2008 is simpler than most real directory-based protocols. This textbook supplement presents the directory-based protocols used by the DASH multiprocessor and the Alewife multiprocessor. It concludes with an argument of why complexity might be undesirable in cache coherence protocols.

DASH Coherence Protocol

Overview

The DASH multiprocessor uses a two-level coherence protocol, relying on a snoopy bus to ensure cache coherence within cluster and a directory-based protocol to ensure coherence across clusters. The protocol uses a Remote Access Cache (RAC) at each cluster, which essentially consolidates memory blocks from remote clusters into a single cache on the local snoopy bus. When a request is issued for a block from a remote cluster that is not in the RAC, the request is denied but the request is also forwarded to the owner. The owner supplies the block to the RAC. Eventually, when the requestor retries, the block will be waiting in the RAC.

Because the local snoopy bus of the home cluster maintains coherence with the home memory, the directory does not keep track of the block's status in the home cluster. Instead, the directory keeps track of which remote clusters are holding the block. This is in contrast to the protocols discussed by Solihin 2008 which do not incorporate a local bus and therefore use directories which track the state of the block across all caches. The DASH directories are memory-based and use a full bit vector representation.

DASH uses a three state invalidation protocol for the caches with Dirty, Shared, and Invalid states. This is essentially the Modified-Shared-Invalid (MSI) protocol that Solihin 2008 describes. The three directory states are Dirty-Remote, Shared-Remote, and Uncached-Remote. These correspond roughly to the Exclusive/Modified, Shared, and Uncached directory states discussed in Chapter 11 of Solihin 2008. However, whereas the Exclusive/Modified state only indicates that a block could be dirty, a block in the Dirty-Remote state is always considered dirty. Furthermore, a block that is dirty in its home cluster will be in state Uncached-Remote in the directory. So the correspondence is inexact.

The DASH coherence protocol supports three different memory requests: Read, Writeback, and Read-Exclusive. Read and Read-Exclusive are equivalent to the Read and ReadX requests present in Solihin 2008. Writeback is similar to a Flush. However, a writeback is used when a cache wants to evict a dirty block. A writeback from a remote cluster ends with the block entering the Uncached-Remote state in the directory.

There are too many cases to express the protocol succinctly as a finite state machine. Instead, pseudocode for handling Read and Read-Exclusive requests from the appendix of Lenoski et al. 1990 follows. The pseudocode refers to a number of hardware subsystems that are beyond the scope of this article—the directory controller (DC), pseudo-CPU (PCPU), and reply controller (RC). Descriptions may be found in Lenoski et al. 1990.

Read

When a Read request is issued, it first goes to the snoopy bus of the local cluster. If the block is already in the local RAC or in a local processor's cache, then one of these caches supplies the data. If the block was dirty and the local cluster is the home cluster, then the owner will also have to flush to main memory and transition to the shared state. If the block was dirty and the local cluster is a remote cluster, then the owner flushes to the local RAC which becomes the new owner. In this last case, locally the block will be shared, but remotely it will be considered dirty.

If the data is not already held locally, but the local cluster is the home cluster and the block is not held in the dirty state by a remote cluster, then memory supplies the data. However, if the block is dirty in a remote cluster, the bus request is denied, prompting a retry. Meanwhile, the request is fowarded to the owning cluster. The owner flushes the block and transitions to the shared state. The directory transitions to the shared state as well.

If the data is not already held locally and the local cluster is not the home cluster, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the home cluster. When the block is owned by a remote cluster, the request is forwarded to the owning cluster and the owner flushes the block and transitions to the shared state. The home cluster sends the data to the requestor's RAC and the directory transitions to the shared state.

// "Normal flow of read request bus transaction."

// From Lenoski et al. 1990 (see References).

if (Data held locally in shared state by processor or RAC)

Other cache(s) supply data for fill;

else if (Data held locally in dirty state by processor or RAC) {

Dirty cache supplies data for fill and goes to shared state;

if (Memory Home is Local)

Writeback Data to main memory;

else

RAC takes data in shared-dirty state;

}

else if (Memory home is Local) {

if (Directory entry state != Dirty-Remote)

Memory supplies read data;

else {

Allocate RAC entry, mask arbitration and force retry;

Forward Read Request to Dirty Cluster;

PCPU on Dirty Cluster issues read request;

Dirty cache supplies data and does to shared state;

DC sends shared data reply to local cluster;

Local RC gets reply and unmasks processor arbitration;

Upon local processor read, RC supplies data and the

RAC entry goes to shared state;

Directory entry state = Shared-Remote;

}

}

else /* Memory home is Remote */

Allocate RAC entry, mask arbitration and force retry;

Local DC sends read request to home cluster;

if (Directory entry state != Dirty-Remote) {

Directory entry state = Shared-Remote, update vector;

Home DC sends reply to local RC;

Local RC gets reply and unmasks processor arbitration;

else {

Home DC forwards Read Request to dirty cluster;

PCPU on dirty cluster issues read request and DC sends

reply to local cluster and sharing writeback to home;

Local RC gets reply and unmasks processor arbitration;

Home DC gets sharing writeback, writes back dirty data,

Directory entry state = Shared-Remote, update vector;

}

Upon local processor read, RC supplies the data and the

RAC entry goes to shared state;

}

ReadX

When a ReadX request is issued, it first goes to the snoopy bus of the local cluster. If the block is being held dirty in the local RAC or in a local processor's cache, then the owner supplies the data and transitions to the invalid state.

Otherwise, if the local cluster is the home cluster and the block is in the uncached or shared state in the directory, then memory supplies the data and other local caches transistion to the invalid state. If the block is in the shared state in the directory, then the directory must also send out invalidation requests to the remote clusters that are in the sharing vector and transition the block to the uncached state in the directory. However, if the block is in the dirty state in the directory, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the owning cluster. The owner flushes and transitions to the invalid state. The directory transitions the block to the uncached state.

Otherwise, if the local cluster is not the home cluster, the bus request is denied, prompting a retry. Meanwhile, the request is forwarded to the home cluster. If the block is in the uncached or shared state in the directory, the home cluster will send the data to the requestor's RAC and the directory transitions to the dirty state. If the block is in the shared state in the directory, the directory must also send invalidation requests to the sharing clusters. However, if the block is in the dirty state in the directory, the request is forwarded to the owning cluster. The owner sends the dirty block to the RAC of the requestor and an acknowledgement to home and transitions to the invalid state.

// "Normal flow of read-exclusive request bus transaction."

// From Lenoski et al. 1990 (see References).

if (Data held locally in dirty state by processor or RAC)

Dirty cache supplies Read-Exclusive fill data and

invalidates self;

else if (Memory Home is Local) {

switch (Directory entry state) {

case Uncached-Remote :

Memory supplies data, any locally cached copies

are invalidated;

break;

case Shared-Remote:

RC allocates an entry in RAC with DC specified

invalidate acknowledge count;

Memory supplies data, any locally cached copies are

invalidated;

Local DC sends invalidate request to shared clusters;

Dir. entry state = Uncached-Remote, update vector;

Upon receipt of all acknowledges RC deallocates RAC

entry;

break;

case Dirty-Remote :

Allocate RAC entry, mask arbitration and force retry;

Forward Read-Exclusive Request to dirty cluster;

PCPU at dirty cluster issues Read-Ex request,

Dirty cache supplies data and invalidates self;

DC in dirty cluster sends reply to local RC;

Local RC gets reply from dirty cluster and unmasks

processor arbitration;

Upon local processor re-Read-Ex, RC supplies data,

RAC entry is deallocated and

Dir. entry state = Uncached-Remote, update vector;

}

}

else /* Memory Home is Remote */ {

RC allocates RAC entry, masks arbitration and forces retry;

Local DC sends Read-Exclusive request to home;

switch (Directory entry state) {

case Uncached-Remote :

Home memory supplies data, any locally cached copies

are invalidated, Home DC sends reply to local RC;

Directory entry state = Dirty-Remote, update vector;

Local RC gets Read-Ex reply with zero invalidation

count and unmasks processor for arbitration;

Upon local processor re-Read-Ex, RC supplies data and

RAC entry is deallocated;

break;

case Shared-Remote :

Home memory supplies data, any locally cached copies

are invalidated, Home DC sends reply to local RC;

Home DC sends invalidation requests to sharing

clusters;

Directory entry state = Dirty-Remote, update vector;

Local RC gets reply with data and invalidate acknow-

ledge count and unmasks processor for arbitration;

Upon local processor re-Read-Ex, RC supplies data;

Upon receipt of all acknowledge RC deallocates RAC

entry;

break;

case Dirty-Remote :

Home DC forwards Read-Ex request to dirty cluster;

PCPU at dirty cluster issues Read-Ex request,

Dirty cache supplies data and invalidates self;

DC in dirty cluster sends reply to local RC with

acknowledge count of one and sends Dirty Transfer

request to home;

Local RC gets reply and acknowledge count and unmasks

processor for arbitration;

Upon local processor re-Read-Ex, RC supplies data;

Upon receipt of Dirty Transfer request, Home DC

sends acknowledgement to local RC,

Home Dir. entry state = Dirty-Remote, update vector;

Upon receipt of acknowledge RC deallocates RAC entry;

}

}

Handling Races

Lenoski et al. 1990 discuss two race conditions that may arise in the DASH multiprocessor. These are essentially identical to two of the race conditions discussed in Chapter 11 of Solihin 2008. The first occurs when a Read from requester R is forwarded from home H to owner O, but O sends a Writeback to H before the forwarded Read arrives. This situation is diagrammed below.

R --> Read --> H --> Read (Forward) --> O

O --> Writeback --> H

In this case, O no longer holds a valid copy of the data to send to R. Instead, O can simply deny the request by sending R a NAK (negative acknowledgement) message. R can then resend the Read request to H. Since ownership of the block returned to H while the forwarded Read was in transit, H can respond to R with the data. This is the same solution proposed by Solihin 2008. It is possible for R to starve if ownership of the block continues to be passed around, but this is unlikely to continue for long.

Another possible race occurs when the home node H replies with data (ReplyD) to a Read from requester R but an invalidation (Inv) arrives first.

R --> Read --> H -----> ReplyD -----> R

A --> ReadX --> H --> Inv --> R

Handled naively, R would invalidate the block in its cache but then write the stale data into its cache when it arrived later violating cache coherence. Instead, DASH uses the snoopy RAC to watch for invalidations of pending Reads. Any reply to a Read that was pending when an Inv of the block was observed is considered to be stale and handled by sending a NAK to R (rather than forwarding the data).

This is subtly different from the remedy proposed by Solihin 2008 which also relies on keeping track of outstanding transactions. Whereas DASH converts state replies to NAKs, Solihin 2008 suggests that the requester delay responding to the Inv until after the response to the pending Read arrives.

While Lenoski et al. 1990 express confidence that the DASH protocol handles all races correctly, they also suggest that the protocol is too complex to validate analytically. Instead, they tested it empirically against parallel programs with known results. This leaves open the possibility that races which violate cache coherence exist.

LimitLESS: Alewife Coherence Protocol

Overview

LimitLESS is the cache coherence protocol used by the Alewife multiprocessor. It uses a hardware-based limited pointer representation for directory representation, meaning that it maintains a cache of pointers to the nodes that are sharing a memory block. If the number of sharers exceeds some maximum size, then the system switches over to a memory-based full bit vector representation. Unlike the DASH multiprocessor, the Alewife multiprocessor is not organized into clusters of nodes with local buses, and therefore cache coherence through the system is maintain through the directory.

LimitLESS defines three states that a cache block may be in: Invalid, Read-Only, or Read-Write. These correspond to the Invalid, Shared, and Modified states of the MSI protocol described in Solihin 2008 and to the Invalid, Shared, and Dirty states of the DASH. A memory block in a LimitLESS directory may be in one of four states: Read-Only, Read-Write, Read-Transaction, or Write-Transaction. Read-Only is similar to the Shared state from Solihin 2008 and from the DASH. Read-Write is similar to the Exclusive/Modified state from Solihin 2008 and the Dirty-Remote state from the DASH. The other two states, Read-Transaction and Write-Transaction, are transient states that a memory block enters when it is waiting for invalidation acknowledgements. One interesting difference between LimitLESS and the DASH coherence protocol and Solihin 2008 is the absence of a state indicating that the block is uncached. The initial state of a memory block is Read-Only. These states are summarized in the following table.

| Component | Name | Meaning |

| Memory | Read-Only Read-Write Read-Transaction Write-Transaction |

Some number of caches have read-only copies of the data. Exactly one cache has a read-write copy of the data. Holding read request, update is in progress. Holding write request, invalidation is in progress. |

| Cache | Invalid Read-Only Read-Write |

Cache block may not be read or written. Cache block may be read, but not written. Cache block may be read or written. |

LimitLESS defines three memory requests: RREQ (Read Request), WREQ (Write Request), and REPM (Replace Modified). These correspond to the Read, Read-Exclusive, and Writeback requests on the DASH. RREQ and WREQ are also more or less equivalent to Read and ReadX respectively as defined by Solihin 2008. Like a Writeback on the DASH, a REPM causes the block to be written back to memory and also clears its sharers list in the directory. LimitLESS also defines two reply messages that a cache may send to the directory: UPDATE and ACKC (Invalidate Acknowledgement). These correspond to the Flush and InvAck messages defined in Solihin 2008.

LimitLESS defines four messages that the directory may send to the caches: RDATA (Read Data), WDATA (Write Data), INV (Invalidate), and BUSY (Busy Signal). RDATA and WDATA are essentially the same as the ReplyD message from Solihin 2008 with the addition that WDATA indicates that a cache has received exclusive access to the block and may write to it until it receives an INV message. The INV message is equivalent to the Inv message described in Solihin 2008, and the BUSY message correspond to a NAK. Unlike the protocol described in Solihin 2008, the LimitLESS protocol does not support the concept of an intervention which downgrades the state of a cached block to shared. Instead, downgrading is accomplished through invalidation.

The different protocol messages specified by LimitLESS are summarized in the table below.

| Type | Symbol | Name | Data? |

| Cache to Memory | RREQ WREQ REPM UPDATE ACKC |

Read Request Write Request Replace Modified Update Invalidate Ack. |

No No Yes Yes No |

| Memory to Cache | RDATA WDATA INV BUSY |

Read Data Write Data Invalidate Busy Signal |

Yes Yes No No |

State Diagram

The following state transition diagram and table of annotations represent the LimitLESS protocol. The numbers on the edges in the state diagram correspond to entries in the "Transition Label" column in the table of annotations. P is the set of sharers that is maintained in the directory. The note S : n > p is a reminder that when the number of sharers exceeds the limit of the hardware-based pointer set, the system switches over to a memory-based full bit vector. Some transitions, namely those into and out of the transition states Read-Transaction and Write-Transaction, require an acknowledgement count (AckCtr), which keeps track of how many caches still need to send ACKC messages before the block will be invalidated throughout the system.

| Transition Label | Input Message | Precondition | Directory Entry Change | Output Message(s) |

| 1 | i → RREQ | — | P = P ∪ {i} | RDATA → i |

| 2 | i → WREQ i → WREQ |

P = {i} P = {} |

— P = {i} |

WDATA → i WDATA → i |

| 3 | i → WREQ i → WREQ |

P = {k1, …, kn} ∧ i ∉ P P = {k1, …, kn} ∧ i ∈ P |

P = {i}, AckCtr = n P = {i}, AckCtr = n - 1 |

∀kj INV → kj ∀kj ≠ i INV → kj |

| 4 | j → WREQ | P = {i} | P = {j}, AckCtr = 1 | INV → i |

| 5 | j → RREQ | P = {i} | P = {j}, AckCtr = 1 | INV → i |

| 6 | i → REPM | P = {i} | P = {} | — |

| 7 | j → RREQ j → WREQ j → ACKC j → REPM |

— — AckCtr ≠ 1 — |

— — AckCtr = AckCtr - 1 — |

BUSY → j BUSY → j — — |

| 8 | j → ACKC j → UPDATE |

AckCtr = 1, P = {i} P = {i} |

AckCtr = 0 AckCtr = 0 |

WDATA → i WDATA → i |

| 9 | j → RREQ j → WREQ j → REPM |

— — — |

— — — |

BUSY → j BUSY → j — |

| 10 | j → UPDATE j → ACKC |

P = {i} P = {i} |

AckCtr = 0 AckCtr = 0 |

RDATA → i RDATA → i |

The "Input Message" column indicates the message to the directory that prompts the transition and the cache that sent it. The "Precondition" column indicates what state P and AckCtr must be in prior to the transition. The "Directory Entry Change" column indicates any changes which are made to P and AckCtr during the transition. The "Output Message(s)" column indicates any messages that the directory sends out during the transition.

Handling Races

Chaiken et al. 1991 do not discuss how LimitLESS handles race conditions. However, it is possible to infer how LimitLESS would handle the first case discussed for the DASH multiprocessor. This is the case where the owner O of a dirty block writes it back to home H and evicts it before the Read from the requesting processor R can reach it. In the LimitLESS protocol, this race is handled by the transition states. When a block is dirty (in the Read-Write state), a RREQ or WREQ to the directory causes the directory to send an INV to the owner. This requires the owner to send either an UPDATE, thereby providing the data to forward on to the requester, or an ACKC if the owner no longer has the data. However, in order for the owner to have evicted the data, it would have had to issue a REPM, writing the data back to memory. In either case, the data gets written back to memory, which can then reply with an RDATA or WDATA to the requester. This in contrast to the solution used in the DASH and Solihin 2008 which denies the memory request and forces it to retry. In the LimitLESS protocol, R still receives the data, whether or not the race condition occurs. The two cases are illustrated below.

R --> RREQ --> H --> INV --> O --> UPDATE --> H --> RDATA --> R

R --> RREQ --> H -----> INV -----> O --> ACKC --> H --> RDATA --> R

O --> REPM --> H

Advocating for Simple Coherence Protols

Kunz and Horowitz 2008 note that there is an ever-growing body of literature dedicated to increasingly complex cache protocols designed to counteract various performance bottlenecks. Kunz and Horowitz 2008 argue that this trend to tune hardware protocols to improve the performance of existing software rather than to tune software to perform well on simpler hardware may not be the best approach. In addition, many of these studies, like Dahlgren et al. 1994, are conducted in simulation and this may obscure details which would hamper performance in a real implementation.

In particular, they use the example of the Remote Access Cache (RAC), which, as discussed in an earlier section, is an important element of the cache coherence protocol of the DASH multiprocessor. RACs are intended to reduce the memory latency of accessing remote memory by allowing copies of blocks of remote memory that are evicted from a local cache to be stored locally in case they are accessed again. Using a FLASH multiprocessor, which allows the cache coherence protocol to be reprogrammed, Kunz and Horowitz 2008 compared the performance of a simple bit vector protocol with the same protocol extended with a RAC. Counter to intuition, the protocol with the RAC actually performs 30% worse on average than the simple protocol.

Kunz and Horowitz 2008 explain that in their tests the inclusion of the RAC introduces a bottleneck. Contention for access to the RAC ends up slowing the system down more than simply requesting blocks directly from remote memory. They point out that is an example of unintended consequences that illustrates how adding a single enhancement to a protocol may actually end up hurting performance. In fact none of the enhancements that Kunz and Horowitz 2008 tested actually improved performance significantly.

Kunz and Horowitz 2008 compare the development of complex cache coherence protocols to that of ever more complex instruction sets during the era of CISC processors. They suggest that a better solution might be to follow the RISC example and develop simple and efficient hardware and focus on optimizing software instead.

References

- David Chaiken, John Kubiatowicz, and Anant Agarwal (1991). "LimitLESS directories: A scalable cache coherence scheme." ACM SIGPLAN Notices.

- Frederik Dahlgren, Michel Dubois, and Per Stenström (1994) "Combined performance gains of simple cache protocol extensions." ACM SIGARCH Computer Architecture News.

- Robert Kunz and Mark Horowitz (2008). "The case for simple, visible cache coherency." In Proceedings of the 2008 ACM SIGPLAN Workshop on Memory Systems Performance and Correctness.

- Daniel Lenoski, James Laudon, Kourosh Gharachorloo, Anoop Gupta, and John Hennessy (1990). "The directory-based cache coherence protocol for the DASH multiprocessor." In Proceedings of the 17th Annual International Symposium on Computer Architecture.

- Yan Solihin (2008). Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems.