CSC/ECE 506 Spring 2010/chapter 10: Difference between revisions

| (130 intermediate revisions by 3 users not shown) | |||

| Line 3: | Line 3: | ||

The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. Effectively, the consistency model places restrictions on the values that can be returned by a read in a shared-memory program execution. | The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. Effectively, the consistency model places restrictions on the values that can be returned by a read in a shared-memory program execution. | ||

This material gives a brief explanation about the intuition behind using relaxed memory consistency models for scalable design of multiprocessors. It also explains about the consistency models in real multiprocessor systems like Digital Alpha, Sparc V9 | This material gives a brief explanation about the intuition behind using relaxed memory consistency models for scalable design of multiprocessors. It also explains about the consistency models in real multiprocessor systems like Digital Alpha, Sparc V9 , IBM Power PC and processors from Sun Microsystems. | ||

= '''Sequential Consistency Model (SC)''' = | = '''Sequential Consistency Model (SC)''' = | ||

To write correct and efficient shared memory programs, programmers need a precise notion of shared memory semantics. To ensure correct execution a programmer expects that the data value read should be the same as the latest value written to it in the system. | =='''Introduction to Sequential Consistency Model'''== | ||

However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior | To write correct and efficient shared memory programs, programmers need a precise notion of shared memory semantics. To ensure correct execution, a programmer expects that the data value read should be the same as the latest value written to it in the system. | ||

However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior. Intuitively, a read should return the value of the "last" write to the same memory location. In uniprocessors, "last" is precisely defined by the sequential order specified by the program called '''program order'''. This is not the case in multiprocessors. A write and read of a variable, are not related by program order because they reside on two different processors. | |||

The uniprocessors model, however can be extented to apply to multiprocessors in a natural way. The resulting model is called '''Sequential consistency'''. In brief, sequential consistency requires that all memory operations | The uniprocessors model, however can be extented to apply to multiprocessors in a natural way. The resulting model is called '''Sequential consistency'''. In brief, sequential consistency requires that all memory operations | ||

| Line 14: | Line 15: | ||

[[Image:SeqC.jpg]] <br /> | [[Image:SeqC.jpg]] <br /> | ||

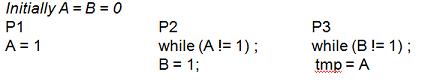

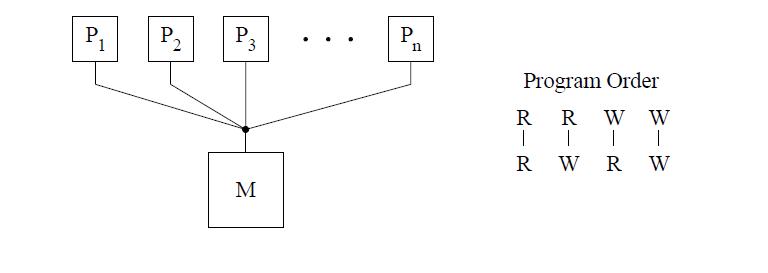

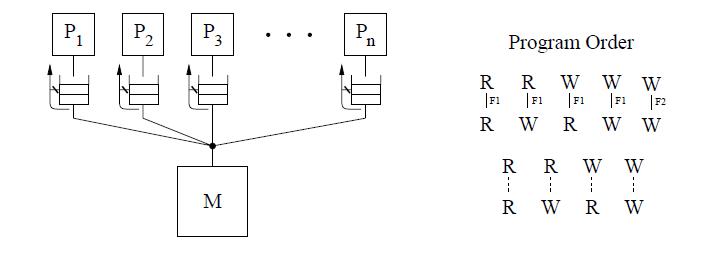

The figure above shows the basic representation for sequential consistency. This conceptual system for SC consists of n processors sharing a single logical memory. Though the figure does not show caches, an SC implementation may still cache data as long as the memory system appears as a single copy memory(i.e the writes should appear atomic). As SC requires program order to be maintained among all operation types, the pictorial representation of the program order shows all combinations of reads and writes, the line between them telling that the operations are required to complete in program order. | The figure above shows the basic representation for sequential consistency. This conceptual system for SC consists of n processors sharing a single logical memory. Though the figure does not show caches, an SC implementation may still cache data as long as the memory system appears as a single copy memory(i.e the writes should appear atomic). As SC requires program order to be maintained among all operation types, the pictorial representation of the program order shows all combinations of reads and writes, the line between them telling that the operations are required to complete in program order. | ||

This model ensures that the reads of a variable | This model ensures that the reads of a variable, will return the new values written to it by a processor. Sequential consistency provides a simple, intuitive programming model. Because of its strict consistency requirements, sequential consistency, many of the architecture and compiler optimizations used in uniprocessors are not safely applicable to sequentially consistent multiprocessors.[ For more details on sequential consistency model and its advantages/disadvantages refer to '''[http://www.cesr.ncsu.edu/solihin/Main.html Fundamentals of Parallel Computer Architecture]''' textbook by Yan Solihin , page 284 through 292]. For this reason, many '''Relaxed consistency models''' have been proposed, most of which | ||

are supported by commercial architectures. | are supported by commercial architectures. | ||

| Line 20: | Line 21: | ||

Sequential Consistency (SC) is the most intuitive programming interface for shared memory multiprocessors. A system implementing SC appears to execute memory operations one at a time and in program order. A program written for an SC system requires and | Sequential Consistency (SC) is the most intuitive programming interface for shared memory multiprocessors. A system implementing SC appears to execute memory operations one at a time and in program order. A program written for an SC system requires and | ||

relies on a specified memory behavior to execute correctly. Implementing memory accesses according to the SC model constraints, however, would adversely impact performance because memory accesses in shared-memory multiprocessors often incur prohibitively long | relies on a specified memory behavior to execute correctly. Implementing memory accesses according to the SC model constraints, however, would adversely impact performance because memory accesses in shared-memory multiprocessors often incur prohibitively long | ||

latencies (tens of times longer than in uniprocessor systems). To enforce sequential consistency, illegal reordering caused by hardware optimizations like [http://en.wikipedia.org/wiki/Write_buffer Write buffers], [http://www.pcguide.com/ref/mbsys/cache/charTransactional-c.html Non-blocking caches] etc and compiler optimizations like [http://en.wikipedia.org/wiki/Loop-invariant_code_motion code motion], [http://en.wikipedia.org/wiki/Register_allocation register allocation],[http://en.wikipedia.org/wiki/Common_subexpression_elimination eliminating common subexpressions], [http://www.cs.cmu.edu/afs/cs/academic/class/15828-s98/lectures/0318/index.htm loop transformations] etc resulting in reordering are not allowed. These are the optimizations which are implemented for better performance and are valid in uniprocessors. But in case of multiprocessors, these do not allow to satisfy the sequential consistency requirements and hence are not allowed. This affects the performance. | latencies (tens of times longer than in uniprocessor systems). To enforce sequential consistency, illegal reordering caused by hardware optimizations like '''[http://en.wikipedia.org/wiki/Write_buffer Write buffers]''', '''[http://www.pcguide.com/ref/mbsys/cache/charTransactional-c.html Non-blocking caches]''' etc and compiler optimizations like '''[http://en.wikipedia.org/wiki/Loop-invariant_code_motion code motion]''', '''[http://en.wikipedia.org/wiki/Register_allocation register allocation]''','''[http://en.wikipedia.org/wiki/Common_subexpression_elimination eliminating common subexpressions]''', '''[http://www.cs.cmu.edu/afs/cs/academic/class/15828-s98/lectures/0318/index.htm loop transformations]''' etc resulting in reordering are not allowed. These are the optimizations which are implemented for better performance and are valid in uniprocessors. But in case of multiprocessors, these do not allow to satisfy the sequential consistency requirements and hence are not allowed. This affects the performance. | ||

A number of techniques have been proposed to enable the use of certain optimizations by the hardware and compiler without violating sequential consistency, those having the potential to substantially boost performance. Some of them are mentioned below: | A number of techniques have been proposed to enable the use of certain optimizations by the hardware and compiler without violating sequential consistency, those having the potential to substantially boost performance. Some of them are mentioned below: | ||

| Line 26: | Line 27: | ||

'''Hardware optimization techniques:''' | '''Hardware optimization techniques:''' | ||

* '''Prefetching''' : | * '''Prefetching''' : A hardware optimization technique in which the processor automatically prefetches ownership for any write operations that are delayed due to the program order requirement (e.g., by issuing prefetch-exclusive requests for any writes delayed | ||

in the write buffer), thus partially overlapping the service of the delayed writes with the operations preceding them | in the write buffer), thus partially overlapping the service of the delayed writes with the operations preceding them | ||

in program order. This technique is only applicable to cache-based systems that use an invalidation-based protocol. This technique is suitable for statically scheduled processors. | in program order. This technique is only applicable to cache-based systems that use an invalidation-based protocol. This technique is suitable for statically scheduled processors. | ||

* '''Speculative Reads''' : | * '''Speculative Reads''' : A hardware optimization technique in which read operations that are delayed due to the program order requirement are serviced speculatively ahead of time. Sequential consistency is guaranteed by simply rolling back and reissuing the read and subsequent operations in the infrequent case that the read line gets invalidated or updated before the read could have been issued in a more straightforward implementation. This is suitable for dynamically scheduled processors since much | ||

of the roll back machinery is already present to deal with branch mispredictions. | of the roll back machinery is already present to deal with branch mispredictions. | ||

| Line 38: | Line 39: | ||

'''Software Optimization techniques''' | '''Software Optimization techniques''' | ||

*'''Shasha and Snir's agorithm ''' : | *'''Shasha and Snir's agorithm ''' : A compiler algorithm proposed by Dennis Shasha and Marc Snir is used to detect when memory operations can be reordered without violating sequential consistency. It uses the technique where Sequential consistency can be enforced by delaying each access to shared memory until the previous access of the same processor has terminated. For performance reasons, it allows several accesses by the same processor to proceed concurrently. It performs an analysis to find a minimal set of delays that enforces sequential consistency. The analysis extends to interprocessor synchronization constraints and to code where blocks of operations have to execute atomically thus providing a new compiler optimization techniques for parallel languages that support shared variables. | ||

In detail implementation of this algorithm can be studied from the paper : '''[http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory]''' | In detail implementation of this algorithm can be studied from the paper : '''[http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory]''' | ||

| Line 54: | Line 55: | ||

= '''Relaxed Consistency Models''' = | = '''Relaxed Consistency Models''' = | ||

Uniprocessor optimizations such as write buffers and overlapped execution can violate sequential consistency. Therefore, general implementations of sequential consistency require a processor to execute its memory operations one at a time in program order, and writes are executed atomically. Many schemes discussed in the previous section do not require memory operations of a processor to be executed one at a time or atomically. However, these schemes either require restricting the network, or using complex hardware, or aggressive compiler technology. Even so, the performance gains possible are not known. For these reasons, there seems to be an emerging consensus in the industry to support relaxed memory models. Many commercially available architectures such as Digital Alpha, SPARC V8 and V9, and IBM PowerPC are based on this model. Relaxed memory consistency models allow the processors in the system to see different orderings between all or selected categories of memory operations that are strictly enforced in SC. Relaxed consistency models perform better than SC, but impose extra burden on the programmer to ensure that their programs conform to the consistency models that the hardware provides. | Uniprocessor optimizations such as write buffers and overlapped execution can violate sequential consistency. Therefore, general implementations of sequential consistency require a processor to execute its memory operations one at a time in program order, and writes are executed atomically. Many schemes discussed in the previous section do not require memory operations of a processor to be executed one at a time or atomically. However, these schemes either require restricting the network, or using complex hardware, or aggressive compiler technology. Even so, the performance gains possible are not known. For these reasons, there seems to be an emerging consensus in the industry to support relaxed memory models. Many commercially available architectures such as '''Digital Alpha''', '''SPARC V8 and V9''', and '''IBM PowerPC''' are based on this model. Relaxed memory consistency models allow the processors in the system to see different orderings between all or selected categories of memory operations that are strictly enforced in SC. Relaxed consistency models perform better than SC, but impose extra burden on the programmer to ensure that their programs conform to the consistency models that the hardware provides. | ||

=='''Different Relaxed consistency Models'''== | =='''Different Relaxed consistency Models'''== | ||

| Line 64: | Line 65: | ||

Apart from the program order relaxation, some of these models also '''relax the write atomicity''' requirement. <br /> | Apart from the program order relaxation, some of these models also '''relax the write atomicity''' requirement. <br /> | ||

#'''Read others’ write early''': allow a read to return the value of another processor’s write before the write is made visible to all other processors. This relaxation only applies to cache-based systems. <br /> | #'''Read others’ write early''': allow a read to return the value of another processor’s write before the write is made visible to all other processors. This relaxation only applies to cache-based systems. <br /> | ||

#'''Read own write early''': a processor is allowed to read the value of its own previous write before the write is made visible to other processors.<br /> | Let us consider an example from [http://www.cs.cornell.edu/Courses/cs717/2001fa/lectures/sarita.ppt Memory Consistency Models, by Sarita Adve] to illustrate this: <br /> | ||

[[Image:ro0.jpg]]<br /> | |||

Let us see what happens if read returns new value before all copies see it. Below, (Write, A, 1) means the operation write on A returns 1 and (Read, A, 1) means the operation Read on A returns 1. | |||

[[Image:ro1.jpg]]<br /> | |||

We see that in P3, Read A returns zero while it should have been 1. This is because A was read before the P1's write to A had propagated to P3.<br /> | |||

#'''Read own write early''': a processor is allowed to read the value of its own previous write before the write is made visible to other processors. <br /> | |||

Example from [http://www.cs.cornell.edu/Courses/cs717/2001fa/lectures/sarita.ppt Memory Consistency Models, by Sarita Adve]: <br /> | |||

[[Image:rb1.jpg]] <br /> | |||

This can happen if Flag1 is read early from write buffer, when read is re-ordered with respect to write.<br /> | |||

All of the above models provide safety net that allow programmers to enforce the required constraints for achieving correctness. All models also maintain uniprocessor data and control dependences, and write serialization. | All of the above models provide safety net that allow programmers to enforce the required constraints for achieving correctness. All models also maintain uniprocessor data and control dependences, and write serialization. | ||

Let us now see the consistency models in some of the real multiprocessor systems listed above. | Let us now see the consistency models in some of the real multiprocessor systems listed above. We will not introduce those topics that are already covered in Solihin's textbook, like Weak ordering and Processor consistency models. '''RCsc''' and '''RCpc'''. RCsc maintains sequential consistency among synchronization operations, while RCpc maintains processor consistency among synchronization operations. | ||

==='''Relax Write to Read program order'''=== | ==='''Relax Write to Read program order'''=== | ||

Program order optimization is enabled by allowing a read to be reordered with respect to previous writes from the same processor and sequential consistency is maintained through the enforcement of the remaining program order constraints. Though relaxing the program order from a write followed by a read can improve performance, this reordering is not sufficient for compiler optimizations. The reason being most compiler optimizations require the full flexibility of reordering any two operations in program, and not just write to read. | Program order optimization is enabled by allowing a read to be reordered with respect to previous writes from the same processor and sequential consistency is maintained through the enforcement of the remaining program order constraints. Though relaxing the program order from a write followed by a read can improve performance, this reordering is not sufficient for compiler optimizations. The reason being most compiler optimizations require the full flexibility of reordering any two operations in program, and not just write to read. | ||

The models to be discussed under this type of relaxation differ in when they allow a read to return the value of a write. | '''IBM-370''', '''TSO''', and '''PC''' models relax Write to Read program order.The models to be discussed under this type of relaxation differ in when they allow a read to return the value of a write. | ||

====''IBM-370''==== | ====''IBM-370''==== | ||

The IBM-370 model relaxes certain writes to reads program order but maintains the atomicity requirements of SC. This model enforces the program order constraint from a write to a following read, by using the safety net ''serialization instructions'' that may be placed between the two operations. This model is the most stringent only next to SC because the program order from a write to a read still needs to be maintained in the below three cases: <br /> | The IBM-370 model relaxes certain writes to reads program order but maintains the atomicity requirements of SC. This model enforces the program order constraint from a write to a following read, by using the safety net ''serialization instructions'' that may be placed between the two operations. This model is the most stringent, only next to SC because the program order from a write to a read still needs to be maintained in the below three cases: <br /> | ||

- A write followed by a read to the same location [depicted by dashed line in between W and R in the figure]. <br /> | - A write followed by a read to the same location [depicted by dashed line in between W and R in the figure]. <br /> | ||

- If either write or read are generated by a serialization instruction[ WS , RS]. <br /> | - If either write or read are generated by a serialization instruction[ WS , RS]. <br /> | ||

| Line 91: | Line 108: | ||

For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes. | For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes. | ||

'''Difference between | |||

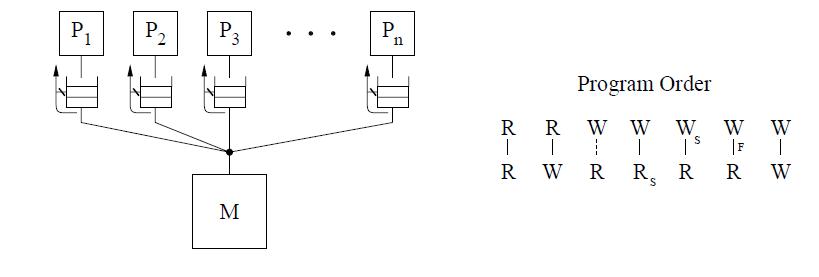

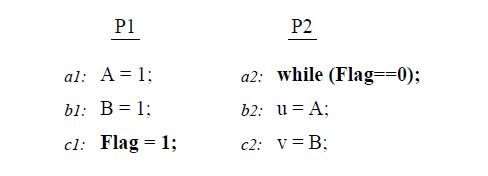

Let us consider the program segment below, taken from | '''Difference between IBM370 and TSO:''' <br /> | ||

Let us consider the program segment below, taken from '''[http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors]''', to demonstrate the difference between TSO and IBM 370 models. | |||

[[Image:Code_1.jpg]]<br /> | [[Image:Code_1.jpg]]<br /> | ||

| Line 101: | Line 119: | ||

==='''Relax Write to Write program order'''=== | ==='''Relax Write to Write program order'''=== | ||

This category of relaxed models allows <br /> | |||

- Two writes to execute out of program order. <br /> | |||

- A write followed by a read execute out of program order. <br /> | |||

This relaxation enables a number of hardware optimizations, including write merging in a write buffer and overlapping multiple write misses, thus servicing, the writes at a much faster rate. But this relaxation is not sufficiently flexible to be useful to a compiler. The SPARC V8 Partial Store Ordering model (PSO) is an example of such a model that we describe below. | |||

==== ''SPARC V8 Partial Store Ordering'' ==== | |||

The '''Partial Store Ordering'''('''PSO''') model is very much similar to the TSO model for SPARC V8. The figure below shows an identical conceptual system. There is only a slight difference in the program order, where writes to locations can be overlapped only if they are not to same locations. This is represented by the dashed line between the W's in the program order figure. Safety net for the program order is provided through a fence instruction, called the store barrier or STBAR, that may be used to enforce the program order between writes.<br /> | |||

[[Image:PSO.jpg]] <br /> | |||

Let us consider an example from '''[http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors]''' to demonstrate the working of PSO.<br /> | |||

[[Image:code2.jpg]]<br /> | |||

With relaxed Write to Read models, the only value for (u,v) is (1,1). With PSO, since it allows writes to different locations to complete out of program order, it also allows the outcomes (0,0) or (0,1) or (1,0) for (u,v). In this example, STBAR instruction needs to be placed immediately before ''c1'' on P1 in order to disallow all outcomes except (1,1). | |||

==='''Relax Read to Read and Read to Write program orders'''=== | ==='''Relax Read to Read and Read to Write program orders'''=== | ||

This model sheds the restriction on program order between all operations, including read to read and read followed by write to different locations . This flexibility provides the possibility of hiding the latency of read operations by implementing true non-blocking reads in the context of either static (in-order) or dynamic (out-of-order) scheduling processors, supported by techniques such as non-blocking (lockup-free) caches and speculative execution. The compiler also has full flexibility to reorder operations.<br /> | |||

'''Weak ordering (WO)''', '''Release consistency (RC)''', '''DEC Alpha''', '''Relaxed Memory Order (RMO)''', and '''PowerPC''' are examples of this model, with the last three models for commercial architectures. Except for Alpha,all the other models allow reordering of two reads to the same location. <br /> | |||

All of the models in this group allow a processor to read its own write early with the exception of RCpc, a flavor of Release consistency (RC) and PowerPC. All the three commercial architectures provide explicit fence instructions to ensure program order. Frequent use of fence instructions can incur a significant overhead due to an increase in the number of instructions and the extra delay that may be associated with executing fence instructions. | |||

==== ''DEC Alpha''==== | |||

The program order constraints allow reads and writes to different locations to complete out of program order | |||

unless there is a fence instruction between them. Memory operations to the same location, including reads, are required to complete in program order. Safety net for program order is provided through the fence instructions, the memory barrier (MB) and the write memory barrier (WMB). The MB instruction can be used to maintain program order between any memory operations, while WMB instruction provides this guarantee only among write operations. The Alpha model does not require a safety net for write atomicity. | |||

[[Image:alpha.jpg]] <br /> | |||

The conceptual system shown above is the same same as IBM 370 which requires a read to return the value of the last write operation to the same location. But since we can relax the program order from a write to a read, we can safely exploit optimizations such as read forwarding. | |||

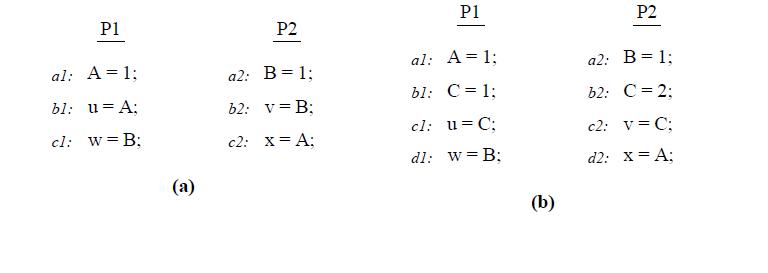

==== ''Relaxed Memory Order (RMO)''==== | |||

The SPARC V9 architecture uses RMO which is an extension of the TSO and PSO models used in SPARC V8. | |||

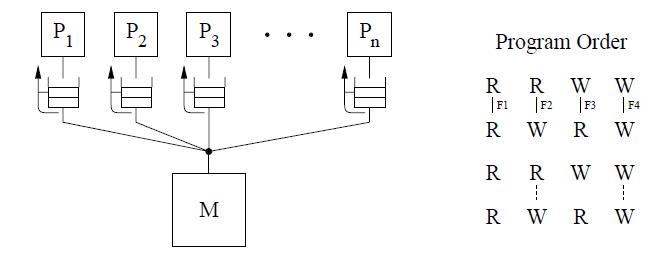

The Read to Read, Read to write program order is relaxed in this model, like in PSO. But a Read to Write or the order between two writes to the same location are not relaxed. This is shown in the figure for program order below. | |||

[[Image:rmo1.jpg]] <br /> | |||

RMO provides four types of fences[F1 through F4] that allow program order to be selectively maintained between any two types of operations. A single fence instruction, MEMBAR, can specify a combination of the above fence types by setting the appropriate bits in a four-bit opcode. No safety net for write atomicity is required. | |||

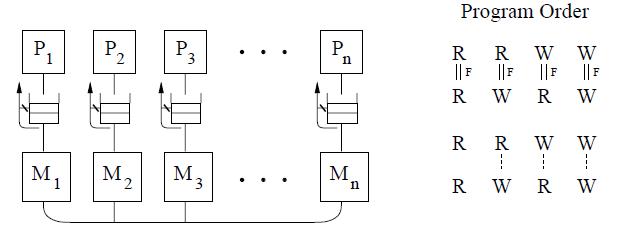

==== ''IBM PowerPC'' ==== | |||

This model constrains the program order <br /> | |||

-Between sub-operations. That is each operation may consist of multiple sub-operations and that all sub-operations of the first operation must complete before any sub-operations of the second operation.[represented by the double lines between operations in the figure below]. <br /> | |||

-Writes to the same location.<br /> | |||

-Among conflicting operations. <br /> | |||

[[Image:powerpc.jpg]] <br /> | |||

The IBM PowerPC model exposes multiple-copy semantics of memory to the programmer as shown in the conceptual system above. Safety net fence instruction is called SYNC which is similar to the MB fence instruction of the Alpha systems. However, SYNC between two reads allows to occur out of program order. Also PowerPC allows a write to be seen early by another processor’s read. Hence a read-modify-write operation may be needed to enforce program order between two reads to the same location as well as to make writes appear atomic. | |||

The table below summarizes the program constraint under each model we have described so far. | |||

{| class="wikitable" border="1" | |||

|- | |||

| Relaxation | |||

| W-> R order | |||

| W -> W order | |||

| R -> RW order | |||

| Read Others Write Early Order | |||

| Read Own Write Early Order | |||

| Safety net | |||

|- | |||

| SC | |||

| | |||

| | |||

| | |||

| | |||

| Yes | |||

| | |||

|- | |||

| IBM 370 | |||

| Yes | |||

| | |||

| | |||

| | |||

| | |||

| serialization instructions | |||

|- | |||

| Total Store Ordering | |||

| Yes | |||

| | |||

| | |||

| | |||

| Yes | |||

| RMW | |||

|- | |||

| PC | |||

| Yes | |||

| | |||

| | |||

| Yes | |||

| Yes | |||

| RMW | |||

|- | |||

| PSO | |||

| Yes | |||

| Yes | |||

| | |||

| | |||

| Yes | |||

| RMW, STBAR | |||

|- | |||

| WO | |||

| Yes | |||

| Yes | |||

| Yes | |||

| | |||

| Yes | |||

| synchronization | |||

|- | |||

| RCsc | |||

| Yes | |||

| Yes | |||

| Yes | |||

| | |||

| Yes | |||

| release, acquire, nsync, RMW | |||

|- | |||

| RCpc | |||

| Yes | |||

| Yes | |||

| Yes | |||

| Yes | |||

| Yes | |||

| release, acquire, nsync, RMW | |||

|- | |||

| Alpha | |||

| Yes | |||

| Yes | |||

| Yes | |||

| | |||

| Yes | |||

| MB, WMB | |||

|- | |||

| RMO | |||

| Yes | |||

| Yes | |||

| Yes | |||

| | |||

| Yes | |||

| MEMBARs | |||

|- | |||

|PowerPC | |||

| Yes | |||

| Yes | |||

| Yes | |||

| Yes | |||

| Yes | |||

| Sync | |||

|} | |||

RMW : read-modify-write instruction <br /> | |||

nsyncs : asynchronous data operations or special operations that are not used for synchronization.<br /> | |||

More information on Release Consistency models is presented in the next section. | |||

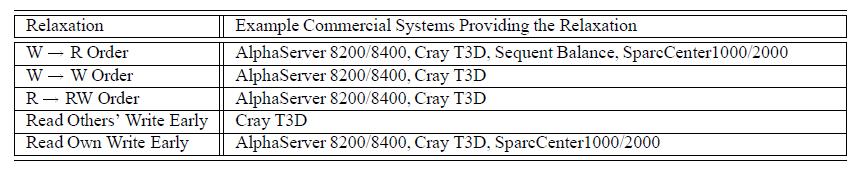

The table below shows some of the other commercial systems that relax sequential consistency.<br /> | |||

[[Image:table_new.jpg]] | |||

=='''Release Consistency Related Models'''== | |||

Release consistency provides these two kinds. '''Acquire''' accesses are used to tell the memory system that a critical region is about to be entered. '''Release''' accesses say that a critical region has just been exited. These accesses can be implemented either as ordinary operations on special variables or as special operations. | |||

==='''Release Consistency Models'''=== | |||

===='''Eager Release Consistency Model'''==== | |||

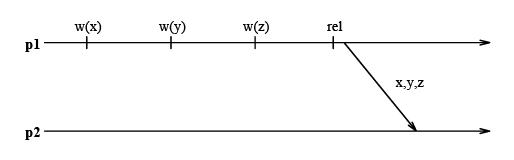

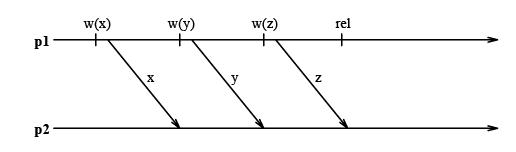

In Eager Release Consistency Model , the invalidations (or write notices) are propagated at release points. '''Munin's write shared protocol''' proposed by '''John K. Bennett, John B. Carter, and WiUy Zwaenepoel''' of Rice University implemented this Eager Release Consistency Model. It is a software implementation of Release consistency Model which buffers writes until a release, instead of pipelining them as in the DASH implementation. At the release all writes going to the same destination are merged into a single message. This is illustrated in the following diagram: | |||

<center> [[Image:munin.jpg]] </center> | |||

This approach is conservative because the system does not know when the next acquire by another processor will occur or whether a given process will even perform an acquire and need to see those write notices. | |||

More information about the implementation of this Eager Release Consistency Model can be studied from these two papers: '''[http://infoscience.epfl.ch/record/55790/files/sosp91.ps.pdf Implementation and Performance of Munin]''' and '''[http://www.springerlink.com/content/mg141q86k2112788/fulltext.pdf?page=1 Munin: Distributed Shared Memory Using Multi-Protocol Release Consistency]''' | |||

===='''Lazy Release Consistency Model'''==== | |||

Lazy release consistency (LRC) is consistency model most frequently used in Software Distributed Shared Memory. It is used for implementing release consistency that lazily pulls modifications across the interconnect only when necessary. The basic concept behind the protocol is to allow processors to continue referencing cache blocks that have been written by other processors. Although write notices are sent as soon as a processor writes a shared block, invalidations occur only at acquire operations. This is sufficient to ensure that true sharing dependencies are observed. Lazy algorithms such as LRC do not make modications globally visible at the time of a release. Instead, LRC guarantees only that a processor that acquires a lock will see all modications that precede the lock acquire. | |||

<center> [[Image: LRC.jpg]] </center> | |||

<br> | |||

More information about Lazy Release Consistency Model can be obtained from these two papers : '''[http://delivery.acm.org.www.lib.ncsu.edu:2048/10.1145/230000/224398/a61-kontothanassis.html?key1=224398&key2=6529911721&coll=ACM&dl=ACM&CFID=140829&CFTOKEN=60032635 Lazy Release Consistency for Hardware-Coherent Multiprocessors]''' and '''[http://infoscience.epfl.ch/record/55789/files/isca92.ps.pdf Lazy Release Consistency for Software Distributed Shared Memory]''' | |||

===='''Comparison between Lazy and Eager Release Consistency Models'''==== | |||

Eager release consistency represents the state of the art in release consistent protocols for hardware-coherent multiprocessors, while lazy release consistency has been shown to provide better performance for software distributed shared memory (DSM). Several of the optimizations performed by lazy protocols have the potential to improve the performance of hardware-coherent multiprocessors as well, but their complexity has precluded a hardware implementation. | |||

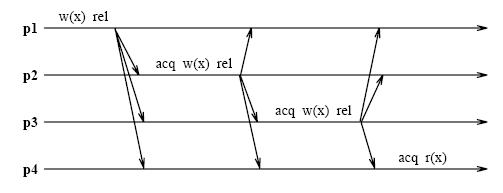

An Eager Release Consistency Model like Munin's write shared protocol may send more messages than a message passing implementation of the same application. The following figure shows an example where processors p1 through p4 repeatedly acquire the lock l, write the shared variable x, and then release l. | |||

<center> [[Image:Eager2.jpg]] </center> | |||

<br> | |||

If an update policy is used in conjunction with Munin's write shared protocol and x is present in all caches, then all of these cached copies are updated at every release. Logically, however it suffices to update each processor's copy only when it acquires l. This results in a single message exchange per acquire as in a message passing implementation. | |||

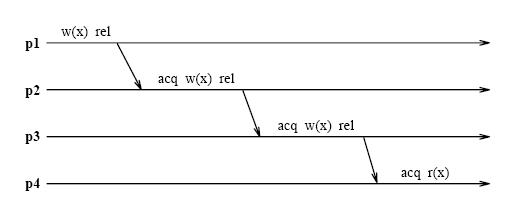

Unlike eager algorithms such as Munin's write shared protocol, lazy algorithms such as LRC (Lazy Release Consistency) do not make modications globally visible at the time of a release. Instead LRC guarantees only that a processor that acquires a lock will see all modications that precede the lock acquire. As indicated in the above figure , all modications that occur in program order before any of the releases in p1 through p4 precede the lock acquisition in p4. With LRC, modications are propagated at the time of an acquire. Only the modications that precede the acquire are sent to the acquiring processor. The modifications can be piggybacked on the message that grants the lock, further reducing message traffic. The following figure shows the message traffic in LRC for the same shared data accesses as in Figure under lazy consistency model section. l and x are sent in a single message at each acquire. | |||

<center> [[Image:lazy2.jpg]] </center> | |||

More information about differences between Eager and Lazy consistency Models can be found here : '''[http://www.cs.rochester.edu/research/cashmere/SC95/lazeag.html Lazy v. Eager Release Consistency]''' and | |||

'''[http://infoscience.epfl.ch/record/55789/files/isca92.ps.pdf Lazy Release Consistency for Software Distributed Shared Memory]''' | |||

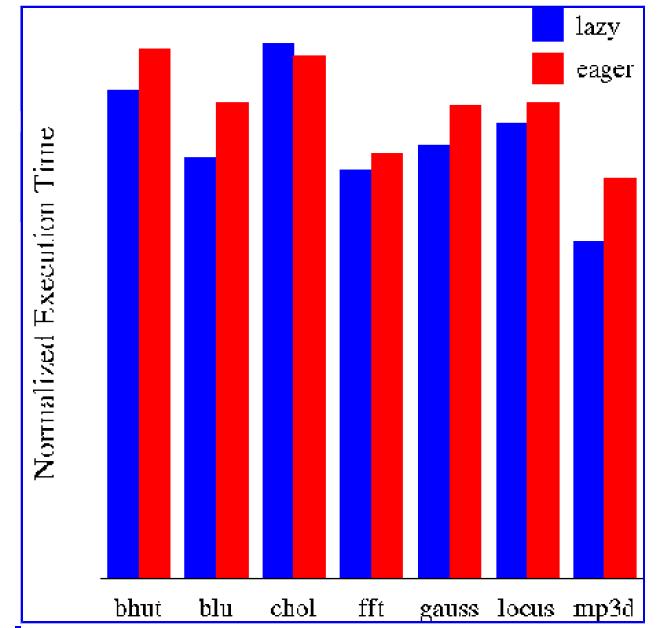

====='''Performance Analysis of Lazy and Eager Consistency Models'''===== | |||

Leonidas I. Kontothanassis, Michael L. Scott, and Ricardo Bianchini from University of Rochester have performed experimentations to evaluate a lazy release-consistent protocol suitable for machines with dedicated protocol processors. Their results indicate that the first protocol outperforms eager release consistency by as much as 20% across a variety of applications. The lazier protocol, on | |||

the other hand, is unable to recoup its high synchronization overhead. This represents a qualitative shift from the DSM world, where lazier protocols always yield performance improvements. Based on their results, they conclude that machines with flexible hardware | |||

support for coherence should use protocols based on lazy release consistency, but in a less ''aggressively lazy'' form than is appropriate for DSM. | |||

These are the results they show from their experimentations to compare Lazy and Eager Consistency Models: | |||

<br> | |||

<center>[[Image:Perf.jpg]]</center> | |||

<br> | |||

More information about this experimentation and results can be obtained from their paper : '''[http://delivery.acm.org.www.lib.ncsu.edu:2048/10.1145/230000/224398/a61-kontothanassis.html?key1=224398&key2=6529911721&coll=ACM&dl=ACM&CFID=140829&CFTOKEN=60032635 Lazy Release Consistency for Hardware-Coherent Multiprocessors]''' | |||

==='''Other Release Consistency Related Models'''=== | |||

===='''Entry Consistency Model'''==== | |||

Like the two variants of release consistency, entry consistency model too requires the programmer to use acquire and release at the start and end of each critical section, respectively. However, unlike release consistency, entry consistency requires each ordinary shared variable to be associated with some synchronization variable such as a lock or barrier. If it is desired that elements of an array be accessed independently in parallel, then different array elements must be associated with different locks. When an acquire is done on a synchronization variable, only those ordinary shared variables guarded by that synchronization variable are made consistent. | |||

Formally, a memory exhibits entry consistency if it meets all the following conditions : | |||

* An acquire access of a synchronization variable is not allowed to perform with respect to a process until all updates to the guarded shared data have been performed with respect to that process. | |||

* Before an exclusive mode access to a synchronization variable by a process is allowed to perform with respect to that process, no other process may hold the synchronization variable, not even in nonexclusive mode. | |||

* After an exclusive mode access to a synchronization variable has been performed, any other process next nonexclusive mode access to that synchronization variable may not be performed until it has performed with respect to that variable's owner. | |||

Entry consistency differs from lazy release consistency in that the latter does not associate shared variables with locks or barriers and at acquire time has to determine empirically which variables it needs. | |||

===='''Delayed consistency Model'''==== | |||

In a cache-based system, a '''store''' may cause an invalidation (write-invalidate protocol) or updates to other caches (writebroadcast | |||

protocol). In present systems, when a such coherence update must be sent, the cache controller is blocked while the update signals propagate and are acknowledged. If these update signals are buffered so that the cache is not blocked during the propagation of the | |||

signals, we say that coherence is send delayed. This buffering is different from the usual Store buffer needed in systems with write-through caches. Coherence can also be receive delayed. Namely, if a coherence update signal reaches a cache, the effect of the update can be temporarily buffered. By delaying the sending of updates, update propagation can be overlapped with cache activity. | |||

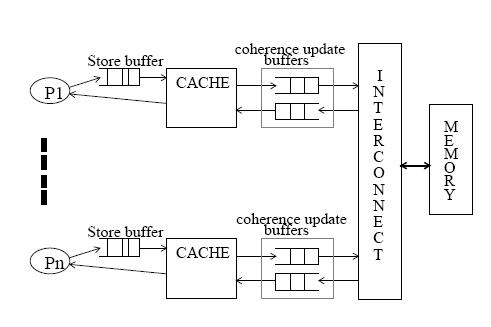

The figure below shows the system structure with store buffers and coherence update buffers used for delayed consistency models. | |||

<center>[[Image:Delayed.jpg]] </center> | |||

In a system with delayed consistency, synchronization variables must be stored in different regions of shared memory than other shared data. Accesses to the region of memory reserved for synchronization variables are not subject to delays, so that an On-the-Fly protocol is enforced on these variables. Therefore, the processor must have the ability to distinguish between synchronization variables and other variables. The state transition diagram for the On-the fly protocol is as shown below : | |||

<center>[[Image:On_fly.jpg]]</center> | |||

The different states are (a) Cache states and (b) System Directory states. | |||

* '''Cache States''' : If a cache block is Valid, then the cache may be an Owner (i.e. the copy is unique in the system and it is dirty) or a Keeper (i.e. there may be copies in other caches and all copies are clean). | |||

* '''System Directory states''' : There is one Modified bit and P Presence bits (one per processor) per block in memory. A Presence bit is set only if the corresponding cache is an Owner or a Keeper of the block. If a cache is the Owner of the block (in which case | |||

only one P bit is set), then the Modified bit is also set. | |||

The commands issued by cache controller are : | |||

* '''ReqO (Request Ownership)''': the memory controller sends an Inv command to all caches with a copy and the requesting cache becomes the Owner. | |||

* '''ReqOC (Request Owner Copy)''': same as ReqO, but the memory copy of the block is also sent to the requesting cache. | |||

* '''ReqKC (Request Keeper Copy)''': if there is an Owner, the memory controller sends an UpdM command to the Owner. The memory copy of the block is then sent to the requesting cache (which becomes a Keeper). | |||

* '''WB (Write Back)''': the block is written back to memory. | |||

Thus delayed consistency models allow the overlapping of cache activity and sending of invalidations. Delayed consistency also reduces the number of false sharing transitions. The only restriction is that parallel programs must access shared writable | |||

data in critical or semi-critical sections. | |||

More information about delayed consistency and the various protocols used to implement this can be obtained from this paper : '''[http://www.barroso.org/publications/delayed.pdf Delayed Consistency and Its Effects on the Miss Rate of Parallel Programs]''' | |||

='''Performance of Consistency Models'''= | |||

The advantage of the less strict models is increased performance potential. The disadvantage is increased hardware complexity and a more complex programming model. To make an informed decision on the above tradeoff requires performance data for the various models. | |||

There were many experiments conducted to examine systems with non-bus interconnection networks and that use real programs as workloads. | |||

Kourosh Gharachorloo et al. at Stanford University compared the models of sequential consistency, processor consistency, weak ordering, and release consistency for a DASH-like architecture '''([http://ra.ziti.uni-heidelberg.de/pages/lectures/hws06/ra2/script_pdf/dash.pdf Directory Architecture for Shared Memory])'''. The results show that in an environment where processor reads are blocking and writes are buffered, a significant performance increase is achieved from allowing reads to bypass previous writes. Pipelining of writes, which determines the rate at which writes are retired from the write buffer, is of secondary importance. As a result, they have showed that the sequential consistency model performs poorly relative to all other models, while the processor consistency model provides most of the benefits of the weak and release consistency models. It showed that the relaxed models can hide most of the write latency and can perform upto 41% better than sequential consistency. It also showed that with blocking reads, processor consistency performed as well as weak ordering and release consistency for most cases. This result is surprising because processor consistency only allows reads to bypass writes, whereas weak ordering and release consistency also allow writes to be pipelined. Thus, potentially with processor consistency, the write buffer could get full faster and the processor could have to block | |||

often on writes. However, with blocking reads, much of the latency of writes is hidden behind the reads, and the write buffer does not block often. In one case, processor consistency even does better than weak ordering because weak ordering requires its synchronization reads to stall for previous write operations, whereas in processor consistency, reads are never stalled for previous writes. More information about the experimentation and the evaluation results can be found in this paper : '''[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.52.9935&rep=rep1&type=pdf Performance Evaluation of Memory Consistency Models for Shared-Memory Multiprocessors]''' | |||

Ronald Bos et al. investigated the performance benefits of relaxed consistency models on multiprocessors executing a process network application. They used a trace-driven simulator, developed using SystemC, to model the distributed shared memory system of a | |||

prototype multiprocessor developed at Philips called Philips '''[http://www.es.ele.tue.nl/epicurus/files/report_jvrijnsen.pdf CAKE]''' (a nonuniform, distributed shared memory multiprocessor prototype). The simulator offers two consistency models: Sequential Consistency (SC) and a generalized Relaxed Consistency (RC) model. Input traces were generated by running a process network application in a cycle-accurate simulator of the prototype multiprocessor. The results showed that relaxed consistency has marginal performance benefits over sequential consistency. The advantage of relaxed memory consistency decreases for increasing network latency. More information about this study can be found in this paper '''[http://ce.et.tudelft.nl/publicationfiles/915_463_bos.pdf Performance Benefits of Relaxed Memory Consistency for Process Network Applications]''' | |||

Most of the above studies evaluate runtime optimizations. These clearly show that there have not been any studies evaluating performance gains for the relaxed models due to compiler optimizations. Many researchers argue that the performance boost from implementing models in the class relaxing all orders is enough to justify the additional intellectual burden the relaxed | |||

models place on the middleware authors of complex commercial systems. The performance gained by using relaxed models does | |||

not justify their complexity. More information about this can be found here : '''[http://www.cs.wisc.edu/multifacet/papers/computer98_sccase.pdf Multiprocessors Should Support Simple Memory-Consistency Models]''' | |||

='''References'''= | ='''References'''= | ||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.5.7132&rep=rep1&type=pdf Speculative Sequential Consistency with Little Custom Storage] | # [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.5.7132&rep=rep1&type=pdf Speculative Sequential Consistency with Little Custom Storage] <br /> | ||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf Two techniques to enhance the performance of memory consistency models] | # [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf Two techniques to enhance the performance of memory consistency models] <br /> | ||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8185&rep=rep1&type=pdf Optimizing parallel SPMD programs] | # [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8185&rep=rep1&type=pdf Optimizing parallel SPMD programs]<br /> | ||

# [http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory] | # [http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory]<br /> | ||

# [http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf Shared Memory Consistency Models] | # [http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf Shared Memory Consistency Models]<br /> | ||

# [http://portal.acm.org/citation.cfm?id=193889&dl=GUIDE&coll=GUIDE&CFID=84028355&CFTOKEN=32262273 Designing Memory Consistency Models For Shared-Memory Multiprocessors] | # [http://portal.acm.org/citation.cfm?id=193889&dl=GUIDE&coll=GUIDE&CFID=84028355&CFTOKEN=32262273 Designing Memory Consistency Models For Shared-Memory Multiprocessors]<br /> | ||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.52.9935&rep=rep1&type=pdf Performance Evaluation of Memory Consistency Models for Shared-Memory Multiprocessors]<br /> | |||

# [http://ce.et.tudelft.nl/publicationfiles/915_463_bos.pdf Performance Benefits of Relaxed Memory Consistency for Process Network Applications]<br /> | |||

# [http://www.cesr.ncsu.edu/solihin/Main.html Fundamentals of Parallel Computer Architecture by Yan Solihin]<br /> | |||

# [http://www.cs.wisc.edu/multifacet/papers/computer98_sccase.pdf Multiprocessors Should Support Simple Memory-Consistency Models]<br /> | |||

# [http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors]<br /> | |||

# [http://cs.gmu.edu/cne/modules/dsm/green/memcohe.html Consistency Models]<br /> | |||

# [http://www.barroso.org/publications/delayed.pdf Delayed Consistency and Its Effects on the Miss Rate of Parallel Programs]<br /> | |||

# [http://delivery.acm.org.www.lib.ncsu.edu:2048/10.1145/230000/224398/a61-kontothanassis.html?key1=224398&key2=6529911721&coll=ACM&dl=ACM&CFID=140829&CFTOKEN=60032635 Lazy Release Consistency for Hardware-Coherent Multiprocessors]<br /> | |||

# [http://infoscience.epfl.ch/record/55790/files/sosp91.ps.pdf Implementation and Performance of Munin]<br /> | |||

# [http://www.springerlink.com/content/mg141q86k2112788/fulltext.pdf?page=1 Munin: Distributed Shared Memory Using Multi-Protocol Release Consistency]<br /> | |||

# [http://www.cs.rochester.edu/research/cashmere/SC95/lazeag.html Lazy v. Eager Release Consistency]<br /> | |||

# [http://infoscience.epfl.ch/record/55789/files/isca92.ps.pdf Lazy Release Consistency for Software Distributed Shared Memory]<br /> | |||

# [http://www.cs.cornell.edu/Courses/cs717/2001fa/lectures/sarita.ppt Memory Consistency Models, by Sarita Adve]<br /> | |||

Latest revision as of 20:16, 4 May 2010

Memory Consistency models

Introduction

The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. Effectively, the consistency model places restrictions on the values that can be returned by a read in a shared-memory program execution.

This material gives a brief explanation about the intuition behind using relaxed memory consistency models for scalable design of multiprocessors. It also explains about the consistency models in real multiprocessor systems like Digital Alpha, Sparc V9 , IBM Power PC and processors from Sun Microsystems.

Sequential Consistency Model (SC)

Introduction to Sequential Consistency Model

To write correct and efficient shared memory programs, programmers need a precise notion of shared memory semantics. To ensure correct execution, a programmer expects that the data value read should be the same as the latest value written to it in the system. However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior. Intuitively, a read should return the value of the "last" write to the same memory location. In uniprocessors, "last" is precisely defined by the sequential order specified by the program called program order. This is not the case in multiprocessors. A write and read of a variable, are not related by program order because they reside on two different processors.

The uniprocessors model, however can be extented to apply to multiprocessors in a natural way. The resulting model is called Sequential consistency. In brief, sequential consistency requires that all memory operations

- appear to execute one at a time,

- all memory operations of a single processor appear to execute in the order described by that processor's program.

The figure above shows the basic representation for sequential consistency. This conceptual system for SC consists of n processors sharing a single logical memory. Though the figure does not show caches, an SC implementation may still cache data as long as the memory system appears as a single copy memory(i.e the writes should appear atomic). As SC requires program order to be maintained among all operation types, the pictorial representation of the program order shows all combinations of reads and writes, the line between them telling that the operations are required to complete in program order.

This model ensures that the reads of a variable, will return the new values written to it by a processor. Sequential consistency provides a simple, intuitive programming model. Because of its strict consistency requirements, sequential consistency, many of the architecture and compiler optimizations used in uniprocessors are not safely applicable to sequentially consistent multiprocessors.[ For more details on sequential consistency model and its advantages/disadvantages refer to Fundamentals of Parallel Computer Architecture textbook by Yan Solihin , page 284 through 292]. For this reason, many Relaxed consistency models have been proposed, most of which

are supported by commercial architectures.

Performance of Sequential Consistency on multiprocessors

Sequential Consistency (SC) is the most intuitive programming interface for shared memory multiprocessors. A system implementing SC appears to execute memory operations one at a time and in program order. A program written for an SC system requires and relies on a specified memory behavior to execute correctly. Implementing memory accesses according to the SC model constraints, however, would adversely impact performance because memory accesses in shared-memory multiprocessors often incur prohibitively long latencies (tens of times longer than in uniprocessor systems). To enforce sequential consistency, illegal reordering caused by hardware optimizations like Write buffers, Non-blocking caches etc and compiler optimizations like code motion, register allocation,eliminating common subexpressions, loop transformations etc resulting in reordering are not allowed. These are the optimizations which are implemented for better performance and are valid in uniprocessors. But in case of multiprocessors, these do not allow to satisfy the sequential consistency requirements and hence are not allowed. This affects the performance.

A number of techniques have been proposed to enable the use of certain optimizations by the hardware and compiler without violating sequential consistency, those having the potential to substantially boost performance. Some of them are mentioned below:

Hardware optimization techniques:

- Prefetching : A hardware optimization technique in which the processor automatically prefetches ownership for any write operations that are delayed due to the program order requirement (e.g., by issuing prefetch-exclusive requests for any writes delayed

in the write buffer), thus partially overlapping the service of the delayed writes with the operations preceding them in program order. This technique is only applicable to cache-based systems that use an invalidation-based protocol. This technique is suitable for statically scheduled processors.

- Speculative Reads : A hardware optimization technique in which read operations that are delayed due to the program order requirement are serviced speculatively ahead of time. Sequential consistency is guaranteed by simply rolling back and reissuing the read and subsequent operations in the infrequent case that the read line gets invalidated or updated before the read could have been issued in a more straightforward implementation. This is suitable for dynamically scheduled processors since much

of the roll back machinery is already present to deal with branch mispredictions.

More information about these two techniques can be found in this paper presented by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy of Stanford University at International Conference on Parallel Processing, Two techniques to enhance the performance of memory consistency models

These two techniques of Prefetching and Speculative Reads are expected to be supported by several next generation microprocessors like MIPS R10000 and Intel P6, thus enabling more efficient hardware implementations of sequential consistency.

Software Optimization techniques

- Shasha and Snir's agorithm : A compiler algorithm proposed by Dennis Shasha and Marc Snir is used to detect when memory operations can be reordered without violating sequential consistency. It uses the technique where Sequential consistency can be enforced by delaying each access to shared memory until the previous access of the same processor has terminated. For performance reasons, it allows several accesses by the same processor to proceed concurrently. It performs an analysis to find a minimal set of delays that enforces sequential consistency. The analysis extends to interprocessor synchronization constraints and to code where blocks of operations have to execute atomically thus providing a new compiler optimization techniques for parallel languages that support shared variables.

In detail implementation of this algorithm can be studied from the paper : Efficient and correct execution of parallel programs that share memory

- Compiler algorithm for SPMD (Single Program multiple data) programs : The algorithm proposed by Sasha and Snir has exponential complexity. This new algorithm simplified the cycle detection analysis used in their algorithm to achieve the job in polynomial time.

More information about this can be found in this paper by Arvind Krishnamurthy and Katherine Yelick presented at 7th International Workshop on Languages and Compilers for Parallel Computing Optimizing parallel SPMD programs

In general the performance of sequential consistency model on multiprocessors with shared memory is low. But with the use of above techniques, better performance can be achieved.

All of the above schemes mentioned to improve performance of SC allow a processor to overlap or reorder its memory accesses without software support, however, either require complex or restricted hardware (e.g., hardware prefetching and rollback) or the gains are expected to be small. Further, the optimizations of these schemes can be exploited by hardware (or the runtime system software), but cannot be exploited by compilers. Work related to compiler optimizations including that by Shasha and Snir motivate their work for hardware optimizations. Hardware costs are thus high to implement these techniques.

Hence researchers and vendors have alternatively relied on relaxed memory consistency models that embed the shared-address space programming interface with directives enabling software to inform hardware when memory ordering is necessary.

Relaxed Consistency Models

Uniprocessor optimizations such as write buffers and overlapped execution can violate sequential consistency. Therefore, general implementations of sequential consistency require a processor to execute its memory operations one at a time in program order, and writes are executed atomically. Many schemes discussed in the previous section do not require memory operations of a processor to be executed one at a time or atomically. However, these schemes either require restricting the network, or using complex hardware, or aggressive compiler technology. Even so, the performance gains possible are not known. For these reasons, there seems to be an emerging consensus in the industry to support relaxed memory models. Many commercially available architectures such as Digital Alpha, SPARC V8 and V9, and IBM PowerPC are based on this model. Relaxed memory consistency models allow the processors in the system to see different orderings between all or selected categories of memory operations that are strictly enforced in SC. Relaxed consistency models perform better than SC, but impose extra burden on the programmer to ensure that their programs conform to the consistency models that the hardware provides.

Different Relaxed consistency Models

The various relaxed models can be categorized based on how they relax the program order constraint:

- Write to Read relaxation: allow a write followed by a read to execute out of program order . Examples of models that provide this relaxation includes the IBM-370 , Sun SPARC V8 Total Store Ordering (TSO), and Processor Consistency (PC) that we have seen in class.

- Write to Write relaxation: allows two writes to execute out of program order. The Sun SPARC V8 Partial Store Ordering (PSO) model relaxes two writes.

- Read to Read, Write: allows reads to execute out of program order with respect to their following reads and writes. The models that implement this relaxation include Digital Equipment Alpha (Alpha), Sun SPARC V9 Relaxed Memory Order (RMO), IBM PowerPC (PowerPC) and the weak ordering (WO) and release consistency (RC) models that we have seen in class.

Apart from the program order relaxation, some of these models also relax the write atomicity requirement.

- Read others’ write early: allow a read to return the value of another processor’s write before the write is made visible to all other processors. This relaxation only applies to cache-based systems.

Let us consider an example from Memory Consistency Models, by Sarita Adve to illustrate this:

Let us see what happens if read returns new value before all copies see it. Below, (Write, A, 1) means the operation write on A returns 1 and (Read, A, 1) means the operation Read on A returns 1.

We see that in P3, Read A returns zero while it should have been 1. This is because A was read before the P1's write to A had propagated to P3.

- Read own write early: a processor is allowed to read the value of its own previous write before the write is made visible to other processors.

Example from Memory Consistency Models, by Sarita Adve:

This can happen if Flag1 is read early from write buffer, when read is re-ordered with respect to write.

All of the above models provide safety net that allow programmers to enforce the required constraints for achieving correctness. All models also maintain uniprocessor data and control dependences, and write serialization.

Let us now see the consistency models in some of the real multiprocessor systems listed above. We will not introduce those topics that are already covered in Solihin's textbook, like Weak ordering and Processor consistency models. RCsc and RCpc. RCsc maintains sequential consistency among synchronization operations, while RCpc maintains processor consistency among synchronization operations.

Relax Write to Read program order

Program order optimization is enabled by allowing a read to be reordered with respect to previous writes from the same processor and sequential consistency is maintained through the enforcement of the remaining program order constraints. Though relaxing the program order from a write followed by a read can improve performance, this reordering is not sufficient for compiler optimizations. The reason being most compiler optimizations require the full flexibility of reordering any two operations in program, and not just write to read. IBM-370, TSO, and PC models relax Write to Read program order.The models to be discussed under this type of relaxation differ in when they allow a read to return the value of a write.

IBM-370

The IBM-370 model relaxes certain writes to reads program order but maintains the atomicity requirements of SC. This model enforces the program order constraint from a write to a following read, by using the safety net serialization instructions that may be placed between the two operations. This model is the most stringent, only next to SC because the program order from a write to a read still needs to be maintained in the below three cases:

- A write followed by a read to the same location [depicted by dashed line in between W and R in the figure].

- If either write or read are generated by a serialization instruction[ WS , RS].

- If there is a non-memory serialization instruction such as fence instruction[F] between the write and read.

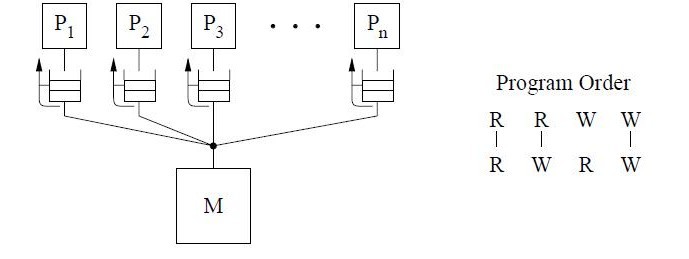

The conceptual system shown in the above figure is similar to that used for representing SC. The main difference is the presence of a buffer between each processor and the memory. Since we assume that each processor issues its operations in program order, we use the buffer to model the fact that the operations are not necessarily issued in the same order to memory. The cancelled reply path from the buffer to the processor implies that a read is not allowed to return the value of a writeto the same location from the buffer.

The IBM-370 model has two types of serialization instructions: special instructions that generate memory operations (e.g., compare-and-swap) and special non-memory instructions (e.g., a special branch).

SPARC V8 Total Store Ordering (TSO)

The total store ordering (TSO) model is one of the models proposed for the SPARC V8 architecture. TSO always allows a write followed by a read to complete out of program order, i.e reads issued to the same pending writes are allowed to be reordered. Read returns a previous write value if present, else fetches the value from the memory. All other program orders are maintained. This is shown in the conceptual system below by the reply path from the buffer to a read that is no longer blocked.

For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes.

Difference between IBM370 and TSO:

Let us consider the program segment below, taken from Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors, to demonstrate the difference between TSO and IBM 370 models.

First consider the program segment in (a). Under the SC or IBM-370 model, the outcome (u,v,w,x)=(1,1,0,0) is disallowed. However, this outcome is possible under TSO because reads are allowed to bypass all previous writes, even if they are to the same location; therefore the sequence (b1,b2,c1,c2,a1,a2) is a valid total order for TSO. Of course, consistency value requirement still requires b1 and b2 to return the values of a1 and a2, respectively, even though the reads occur earlier in the sequence than the writes. This maintains the intuition that a read observes all the writes issued from the same processor as the read. Consider the program segment in (b). In this case, the outcome (u,v,w,x)=(1,2,0,0)is not allowed under SC or IBM-370, but is possible under TSO.

Relax Write to Write program order

This category of relaxed models allows

- Two writes to execute out of program order.

- A write followed by a read execute out of program order.

This relaxation enables a number of hardware optimizations, including write merging in a write buffer and overlapping multiple write misses, thus servicing, the writes at a much faster rate. But this relaxation is not sufficiently flexible to be useful to a compiler. The SPARC V8 Partial Store Ordering model (PSO) is an example of such a model that we describe below.

SPARC V8 Partial Store Ordering

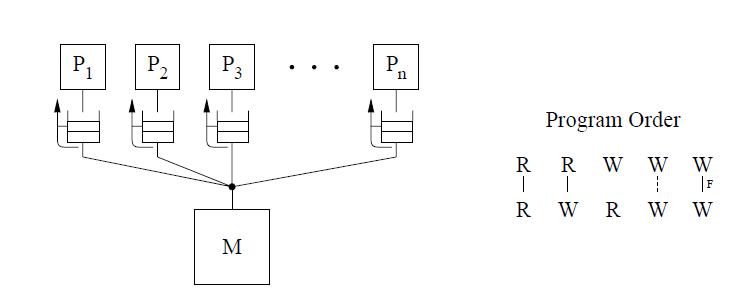

The Partial Store Ordering(PSO) model is very much similar to the TSO model for SPARC V8. The figure below shows an identical conceptual system. There is only a slight difference in the program order, where writes to locations can be overlapped only if they are not to same locations. This is represented by the dashed line between the W's in the program order figure. Safety net for the program order is provided through a fence instruction, called the store barrier or STBAR, that may be used to enforce the program order between writes.

Let us consider an example from Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors to demonstrate the working of PSO.

With relaxed Write to Read models, the only value for (u,v) is (1,1). With PSO, since it allows writes to different locations to complete out of program order, it also allows the outcomes (0,0) or (0,1) or (1,0) for (u,v). In this example, STBAR instruction needs to be placed immediately before c1 on P1 in order to disallow all outcomes except (1,1).

Relax Read to Read and Read to Write program orders

This model sheds the restriction on program order between all operations, including read to read and read followed by write to different locations . This flexibility provides the possibility of hiding the latency of read operations by implementing true non-blocking reads in the context of either static (in-order) or dynamic (out-of-order) scheduling processors, supported by techniques such as non-blocking (lockup-free) caches and speculative execution. The compiler also has full flexibility to reorder operations.

Weak ordering (WO), Release consistency (RC), DEC Alpha, Relaxed Memory Order (RMO), and PowerPC are examples of this model, with the last three models for commercial architectures. Except for Alpha,all the other models allow reordering of two reads to the same location.

All of the models in this group allow a processor to read its own write early with the exception of RCpc, a flavor of Release consistency (RC) and PowerPC. All the three commercial architectures provide explicit fence instructions to ensure program order. Frequent use of fence instructions can incur a significant overhead due to an increase in the number of instructions and the extra delay that may be associated with executing fence instructions.

DEC Alpha

The program order constraints allow reads and writes to different locations to complete out of program order

unless there is a fence instruction between them. Memory operations to the same location, including reads, are required to complete in program order. Safety net for program order is provided through the fence instructions, the memory barrier (MB) and the write memory barrier (WMB). The MB instruction can be used to maintain program order between any memory operations, while WMB instruction provides this guarantee only among write operations. The Alpha model does not require a safety net for write atomicity.

The conceptual system shown above is the same same as IBM 370 which requires a read to return the value of the last write operation to the same location. But since we can relax the program order from a write to a read, we can safely exploit optimizations such as read forwarding.

Relaxed Memory Order (RMO)

The SPARC V9 architecture uses RMO which is an extension of the TSO and PSO models used in SPARC V8.

The Read to Read, Read to write program order is relaxed in this model, like in PSO. But a Read to Write or the order between two writes to the same location are not relaxed. This is shown in the figure for program order below.

RMO provides four types of fences[F1 through F4] that allow program order to be selectively maintained between any two types of operations. A single fence instruction, MEMBAR, can specify a combination of the above fence types by setting the appropriate bits in a four-bit opcode. No safety net for write atomicity is required.

IBM PowerPC

This model constrains the program order

-Between sub-operations. That is each operation may consist of multiple sub-operations and that all sub-operations of the first operation must complete before any sub-operations of the second operation.[represented by the double lines between operations in the figure below].

-Writes to the same location.

-Among conflicting operations.

The IBM PowerPC model exposes multiple-copy semantics of memory to the programmer as shown in the conceptual system above. Safety net fence instruction is called SYNC which is similar to the MB fence instruction of the Alpha systems. However, SYNC between two reads allows to occur out of program order. Also PowerPC allows a write to be seen early by another processor’s read. Hence a read-modify-write operation may be needed to enforce program order between two reads to the same location as well as to make writes appear atomic.

The table below summarizes the program constraint under each model we have described so far.

| Relaxation | W-> R order | W -> W order | R -> RW order | Read Others Write Early Order | Read Own Write Early Order | Safety net |

| SC | Yes | |||||

| IBM 370 | Yes | serialization instructions | ||||

| Total Store Ordering | Yes | Yes | RMW | |||

| PC | Yes | Yes | Yes | RMW | ||

| PSO | Yes | Yes | Yes | RMW, STBAR | ||

| WO | Yes | Yes | Yes | Yes | synchronization | |

| RCsc | Yes | Yes | Yes | Yes | release, acquire, nsync, RMW | |

| RCpc | Yes | Yes | Yes | Yes | Yes | release, acquire, nsync, RMW |

| Alpha | Yes | Yes | Yes | Yes | MB, WMB | |

| RMO | Yes | Yes | Yes | Yes | MEMBARs | |

| PowerPC | Yes | Yes | Yes | Yes | Yes | Sync |

RMW : read-modify-write instruction

nsyncs : asynchronous data operations or special operations that are not used for synchronization.

More information on Release Consistency models is presented in the next section.

The table below shows some of the other commercial systems that relax sequential consistency.

Release Consistency Related Models

Release consistency provides these two kinds. Acquire accesses are used to tell the memory system that a critical region is about to be entered. Release accesses say that a critical region has just been exited. These accesses can be implemented either as ordinary operations on special variables or as special operations.

Release Consistency Models

Eager Release Consistency Model

In Eager Release Consistency Model , the invalidations (or write notices) are propagated at release points. Munin's write shared protocol proposed by John K. Bennett, John B. Carter, and WiUy Zwaenepoel of Rice University implemented this Eager Release Consistency Model. It is a software implementation of Release consistency Model which buffers writes until a release, instead of pipelining them as in the DASH implementation. At the release all writes going to the same destination are merged into a single message. This is illustrated in the following diagram:

This approach is conservative because the system does not know when the next acquire by another processor will occur or whether a given process will even perform an acquire and need to see those write notices.

More information about the implementation of this Eager Release Consistency Model can be studied from these two papers: Implementation and Performance of Munin and Munin: Distributed Shared Memory Using Multi-Protocol Release Consistency

Lazy Release Consistency Model

Lazy release consistency (LRC) is consistency model most frequently used in Software Distributed Shared Memory. It is used for implementing release consistency that lazily pulls modifications across the interconnect only when necessary. The basic concept behind the protocol is to allow processors to continue referencing cache blocks that have been written by other processors. Although write notices are sent as soon as a processor writes a shared block, invalidations occur only at acquire operations. This is sufficient to ensure that true sharing dependencies are observed. Lazy algorithms such as LRC do not make modications globally visible at the time of a release. Instead, LRC guarantees only that a processor that acquires a lock will see all modications that precede the lock acquire.

More information about Lazy Release Consistency Model can be obtained from these two papers : Lazy Release Consistency for Hardware-Coherent Multiprocessors and Lazy Release Consistency for Software Distributed Shared Memory

Comparison between Lazy and Eager Release Consistency Models

Eager release consistency represents the state of the art in release consistent protocols for hardware-coherent multiprocessors, while lazy release consistency has been shown to provide better performance for software distributed shared memory (DSM). Several of the optimizations performed by lazy protocols have the potential to improve the performance of hardware-coherent multiprocessors as well, but their complexity has precluded a hardware implementation.

An Eager Release Consistency Model like Munin's write shared protocol may send more messages than a message passing implementation of the same application. The following figure shows an example where processors p1 through p4 repeatedly acquire the lock l, write the shared variable x, and then release l.

If an update policy is used in conjunction with Munin's write shared protocol and x is present in all caches, then all of these cached copies are updated at every release. Logically, however it suffices to update each processor's copy only when it acquires l. This results in a single message exchange per acquire as in a message passing implementation.