CSC/ECE 506 Spring 2010/10 DJ: Difference between revisions

No edit summary |

|||

| (80 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

=='''MEMORY CONSISTENCY MODELS'''== | =='''MEMORY CONSISTENCY MODELS'''== | ||

The interface<sup>[1]</sup> for memory in a shared memory multiprocessor is called | The interface<sup>[1]</sup> for memory in a shared memory multiprocessor is called a '''memory consistency model'''. The memory consistency model of a shared-memory multiprocessor provides a formal specification<sup>[3]</sup> of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. | ||

=='''SEQUENTIAL CONSISTENCY'''== | =='''SEQUENTIAL CONSISTENCY'''== | ||

Lamport<sup>[1]</sup> defined a multiprocessor to be Sequentially consistent [SC] if: | Lamport<sup>[1]</sup> defined a multiprocessor to be '''Sequentially consistent [SC]''' if: <br /> | ||

the result of any execution is the same as if the operations of all the processors were executed in some sequential order and the operations of each individual processor appear in this sequence in the order specified by the program. <br /> | 'the result of any execution is the same as if the operations of all the processors were executed in some sequential order and the operations of each individual processor appear in this sequence in the order specified by the program'. <br /> | ||

The main advantage of selecting SC as the interface to shared memory hardware is that it is programmer’s intuition. SC permits coherence caching, prefetching and multithreading and yet keeps the software simple. In particular, SC enables middleware authors to use the same target as a multiprogrammed uniprocessor. Thus, SC should be a preferable choice for hardware architects. | The main advantage of selecting SC as the interface to shared memory hardware is that it is programmer’s intuition. SC permits coherence caching, prefetching and multithreading and yet keeps the software simple. In particular, SC enables middleware authors to use the same target as a multiprogrammed uniprocessor. Thus, SC should be a preferable choice for hardware architects. | ||

| Line 17: | Line 17: | ||

1. A processor <sup>[3]</sup> must ensure that its previous memory operation is complete before proceeding with its next memory operation in program order. This requirement is called the '''program order''' requirement. <br /> | 1. A processor <sup>[3]</sup> must ensure that its previous memory operation is complete before proceeding with its next memory operation in program order. This requirement is called the '''program order''' requirement. <br /> | ||

2. Determining <sup>[3]</sup> the completion of a write requires an explicit | 2. Determining <sup>[3]</sup> the completion of a write requires an explicit acknowledgment message from memory. Additionally, in a cache-based system, a write must generate invalidate or update messages for all cached copies. <br /> | ||

3, SC makes it hard to use write buffers <sup>[1]</sup>,because write buffers cause operations to be presented to the cache coherence protocol out of program order. <br /> | 3, SC makes it hard to use write buffers <sup>[1]</sup>,because write buffers cause operations to be presented to the cache coherence protocol out of program order. <br /> | ||

4. Some processors are precluded from overlapping multiple reads and writes in the memory system. This restriction is crippling in systems without caches. <br /> | 4. 'Some processors are precluded from overlapping multiple reads and writes in the memory system. This restriction is crippling in systems without caches'. <br /> | ||

5. Out of order execution <sup>[4]</sup> and instruction level parallelism can’t be used under SC. <br /> | 5. Out of order execution <sup>[4]</sup> and instruction level parallelism can’t be used under SC. <br /> | ||

| Line 27: | Line 27: | ||

6. Bypassing <sup>[4]</sup> a store to a younger load value is not allowed under SC since it violates atomicity. <br /> | 6. Bypassing <sup>[4]</sup> a store to a younger load value is not allowed under SC since it violates atomicity. <br /> | ||

7. Also, non blocking caches are not allowed. <br /> | 7. 'Also, non blocking caches are not allowed'. <br /> | ||

8. For compilers <sup>[3]</sup>, an analog of the program order requirement applies to straightforward implementations. | 8. For compilers <sup>[3]</sup>, an analog of the program order requirement applies to straightforward implementations. | ||

=='''INTUITION behind relaxed memory-consistency models'''== | =='''INTUITION behind relaxed memory-consistency models'''== | ||

| Line 39: | Line 39: | ||

So, prefetches are useless because the load will suffer a cache miss and store with still need to go the bus. They also incur unnecessary traffic. hence, it is not a perfect solution. <br /> | So, prefetches are useless because the load will suffer a cache miss and store with still need to go the bus. They also incur unnecessary traffic. hence, it is not a perfect solution. <br /> | ||

Next possible solution is '''speculation'''<sup>[4]</sup> With speculation, a younger (later) load is allowed to access the cache, but marked as speculative. If, by the time the first load completes and the block read by the second load has not been invalidated or naturally evicted, then the value obtained by the second load would be same, if it had to wait for the first load to be executed atomically. So, speculation is successful, if it fails, cancel the younger load and re execute it. Also, a younger load can be speculative to an older pending store. <br /> | Next possible solution is '''speculation'''<sup>[4]</sup> With speculation, a younger (later) load is allowed to access the cache, but marked as speculative. If, by the time the first load completes and the block read by the second load has not been invalidated or naturally evicted, then the value obtained by the second load would be same, if it had to wait for the first load to be executed atomically. So, speculation is successful, if it fails, cancel the younger load and re-execute it. Also, a younger load can be speculative to an older pending store. <br /> | ||

However, applying it to store is harder since it cannot be | However, applying it to store is harder since it cannot be canceled easily. <br /> | ||

Both these techniques have been used in MIPS R10000 and Intel Pentium architecture. <br /> | Both these techniques have been used in MIPS R10000 and Intel Pentium architecture. <br /> | ||

| Line 50: | Line 50: | ||

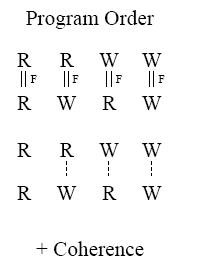

Relaxed memory consistency models<sup>[3]</sup> can be categorized based on three key characteristics: (1) how they relax the program order requirement, i.e.: whether they relax the order from a write to a following read, between two writes, and finally from a read to a following read or write and (2) how they relax the write atomicity requirement, i.e: whether they allow a read to return the value of another processor’s write before the write is made visible to all other processors.(3) A relaxation to both program order and write atomicity, where a processor is allowed to read the value of its own previous write before the write is made visible to other processors. In a cache-based system, this relaxation allows the read to return the value of the write before the write is serialized with respect to other writes to the same location and before the invalidations/updates of the write reach any other processor. | Relaxed memory consistency models<sup>[3]</sup> can be categorized based on three key characteristics: (1) how they relax the program order requirement, i.e.: whether they relax the order from a write to a following read, between two writes, and finally from a read to a following read or write and (2) how they relax the write atomicity requirement, i.e: whether they allow a read to return the value of another processor’s write before the write is made visible to all other processors.(3) A relaxation to both program order and write atomicity, where a processor is allowed to read the value of its own previous write before the write is made visible to other processors. In a cache-based system, this relaxation allows the read to return the value of the write before the write is serialized with respect to other writes to the same location and before the invalidations/updates of the write reach any other processor. | ||

<center> | |||

{| class="wikitable" border="1" | |||

|- | |||

| Relaxation | |||

| Commercial Systems Providing the Relaxation | |||

|- | |||

| W -> R Order | |||

| AlphaServer 8200/8400, Cray T3D, Sequent Balance, SparcCenter1000/2000 | |||

|- | |||

| W -> W Order | |||

| AlphaServer 8200/8400, Cray T3D | |||

|- | |||

| R -> RW Order | |||

| AlphaServer 8200/8400, Cray T3D | |||

|- | |||

| Read Others’ Write Early | |||

| Cray T3D | |||

|- | |||

| Read Own Write Early | |||

| AlphaServer 8200/8400, Cray T3D, SparcCenter1000/2000 | |||

|} | |||

'''Some commercial systems that relax sequential consistency'''<sup>[3]</sup> </center> | |||

<br /> | |||

=='''Relaxing the WRITE to READ Program Order'''== | |||

Here, the program orders are relaxed for a write followed by a read to a different location. These models <sup>[3]</sup> include the '''IBM370model''', the '''SPARC V8 TSO''' and the '''processor consistency''' model (PC) .These models allow a read to be reordered with respect to previous writes from the same processor. <br /> | |||

==='''IBM 370'''=== | |||

It is the strictest because it does not allow a read from returning the value of a write before the write is made visible to all processors. Therefore, even if a processor issues a read to the same address as a previous pending write from itself, the read must be delayed until the write is made visible to all processors. <br /> | |||

<center>[[Image:ibm370model.jpg]] </center> | |||

<center>'''IBM 370 model'''<sup>[6]</sup></center> | |||

Here, Rs & Ws mean read and write generated by serialization instructions. <br /> | |||

Dashed lines indicate in program order. <br /> | |||

To ensure the program order constraint, it provides special serialization instructions that may be placed between the two operations. eg. compare&swap. <br /> | |||

<center>[[Image:ibm2.jpg|120p]] </center> | |||

<br /> | |||

Placing a serialization instruction after the write on each processor provides sequentially consistent results. <br /> | |||

It does not need a safety net to ensure atomicity since it does not relax atomicity. <br /> | |||

==='''TSO model'''=== | |||

The total store ordering (TSO) model is one of the models proposed for the '''SPARC V8 architecture'''. <br /> | |||

It allows a read to return the value of its own processor’s write even before the write is serialized with respect to other writes to the same location. <br /> | |||

It requires that no other writes to any location appear to occur between the read and the write of the read-modify-write. <br /> | |||

It does not provide explicit safety nets. <br /> | |||

The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes. <br /> | |||

The disadvantages to rely on a read-modify write as a safety net: <br /> | |||

A system may not implement a general read-modify-write that can be used to appropriately replace any read or write and replacing a read by a read-modify-write needs invalidating other copies of the line. <br /> | |||

==='''PC model'''=== | |||

<center>[[Image:pcmodel2.jpg]]</center> | |||

<center>'''PC model'''<sup>[6]</sup></center> | |||

Double lines[6] between reads/writes denote the fact that operations may no longer be atomic and that all sub-operations of the first operation must complete before any sub-operations of the second operation. | |||

PC relaxes both constraints, such that a read can return the value of any write before the write is serialized or made visible to other processors. <br /> | |||

It requires that no other writes to the same location appear to occur between the read and the write of the read-modify-write. <br /> | |||

The program order between a write and a following read appears to be maintained if the read is replaced by or is already part of a read-modify-write. <br /> | |||

They do not provide explicit safety nets. <br /> | |||

A write is guaranteed to appear atomic if every read that may return the value of the write is part of, or replaced with, a read-modify-write. <br /> | |||

However, it has the same disadvantage of TSO for relying on read-modify-write. | |||

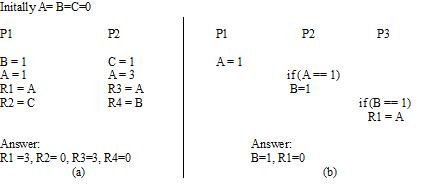

<center>[[Image:pcmodel3.jpg|180p]]</center> | |||

<center>'''PC model'''<sup>[6]</sup></center> | |||

<center>(a) is possible with PC and TSO, not with IBM 370 (b) is possible with PC only.</center> | |||

PC is less useful than the others, because it does not guarantee a property called '''causality'''. Causality requires that all other processors see the effect of a processor’s operation when any other processor sees it. Without causality, processor consistency can fail to look like SC in important cases involving three or more processors. | |||

=='''Relaxing the WRITE to READ and WRITE to WRITE Program Orders'''== | |||

The '''partial store ordering model''' (PSO)<sup>[3]</sup> is an extension of the TSO model and is the second model provided by the SPARC V8 architecture. | |||

As far as atomicity is concerned, it is same as TSO. | |||

PSO provides an explicit '''STBAR''' instruction for imposing program order between two writes. | |||

However, PSO is still not good enough to be useful to a compiler. <br /> | |||

=='''Relaxing all program orders'''== | |||

The program order <sup>[3]</sup> between all operations to different locations is relaxed. Thus, a read or write operation may be reordered with respect to a following read or write to a different location. <br /> | |||

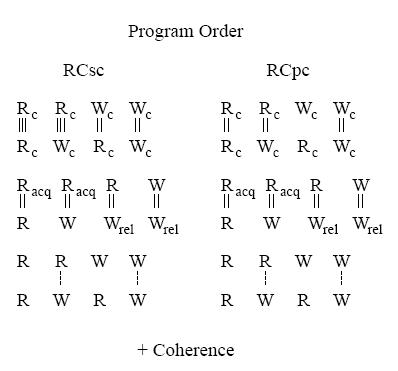

Examples of such models are: '''weak ordering(WO)''' model, '''release consistency models (RCsc/RCpc)''', and three models proposed for commercial architectures: the '''Digital Alpha''', '''SPARC V9 relaxed memory order (RMO)''', and '''IBM PowerPC''' models. <br /> | |||

All the above models allow memory operations following a read operation to be overlapped or reordered with respect to the read operation.In hardware, this flexibility provides the possibility of hiding the latency of read operations. | |||

All of the models in this group allow a processor to read its own write early. <br /> | |||

RCpc and PowerPC are the only models whose straightforward implementations allow a read to return the value of another processor’s write early.Except for Alpha, all the above models allow the reordering of two reads to the same location. | |||

Complex implementations of RCsc can potentially violate atomicity such that it can affect the result of a program. <br /> | |||

The WO, RCsc, and RCpc models distinguish memory operations based on their type, and provide stricter ordering constraints for some types of operations. On the other hand, the Alpha, RMO, and PowerPC models provide explicit fence instructions for imposing program orders between various memory operations. | |||

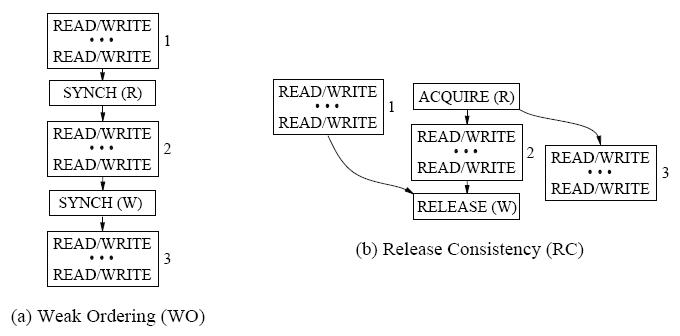

==='''WEAK ORDERING'''=== | |||

The weak ordering model (also known as weak consistency) was proposed by '''Dubois et al. [DSB86]'''<sup>[6]</sup> and relies on maintaining program order only at the synchronization points in the program. <br /> | |||

Many programs use synchronization to make sure data is up-to-date and programs to do not exhibit nondeterministic behavior. The synchronization primitive will ensure that all previous instructions before the synchronization primitive have executed and committed to memory before the current instruction is executed. <br /> | |||

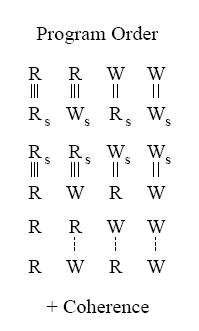

<center>[[Image:wo3.jpg]]</center> | |||

<center>'''Weak ordering model'''<sup>[6]</sup></center> | |||

<br /> | |||

Rs and Ws denote read and write identified as synchronization. <br /> | |||

Triple lines denotes that the read sub-operation and the sub-operations of the write (possibly from a different processor) whose value is returned by the read should complete before any of the sub-operations of the operation that follows the read in program order. | |||

The weak ordering model assumes that 1) programs are properly synchronized and that 2) synchronization is supported by the hardware. | |||

Also, assumptions about the synchronization primitives are that 1) before synchronization, all prior loads, stores, and synchronizations must have been completed and 2) all loads, stores, synchronizations following must not have been issued yet. | |||

With these assumptions, the weak ordering model can be used. The weak ordering model allows memory accesses between synchronization points to be reordered. <br /> | |||

The following illustration shows how this model is used.This model is modified from class notes | |||

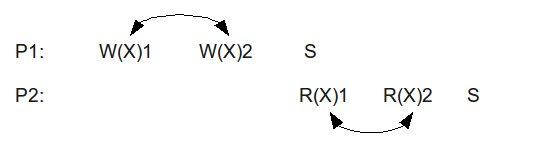

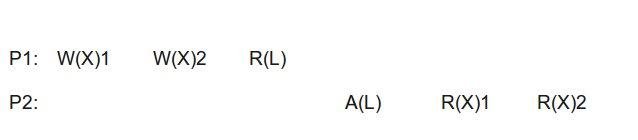

<center>[[Image:try1.jpg]]</center> | |||

<center>'''Memory operations with WO model'''<sup>[8]</sup></center> | |||

With weak ordering memory operations between synchronization points can be reordered. This means that W(X)1 and W(X)2 can be reordered before the synchronization point. In fact, W(X)1 and W(X)2 may not even have been fully committed to memory prior to the synchronization point. As long as all of the operations executed and commit to memory before the synchronization, weak ordering is satisfied. Similarly, the read operations of P2 can also be reordered. Weak ordering, however, does not allow reordering to occur across synchronization points, as illustrated in the figure below. | |||

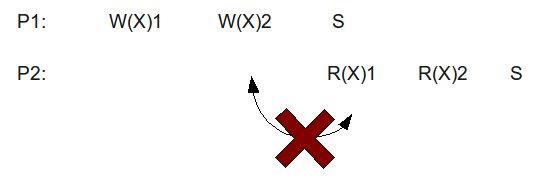

<center>[[Image:wo2.jpg]]</center> | |||

<center>'''Memory operation across synchronization points cannot be reordered'''<sup>[8]</sup></center> | |||

The R(X)1 and W(X)2 across synchronization points cannot be reordered, otherwise it would violate weak ordering and would lead to non-deterministic results. | |||

Several synchronization<sup>[4]</sup> mechanisms can be used including barriers, fences, fetch-and-op, test-and-set, load linked and store conditional, and so on. The synchronization mechanisms must ensure that instructions stall until all prior loads and stores complete and propagate.Compared to SC, WO provides better performance since memory operations can be reordered between synchronization points. Compared to PC, WO typically gives better performance. However, when there are several synchronization points, limiting reordering potential, PC may achieve better performance. | |||

==='''RELEASE CONSISTENCY'''=== | |||

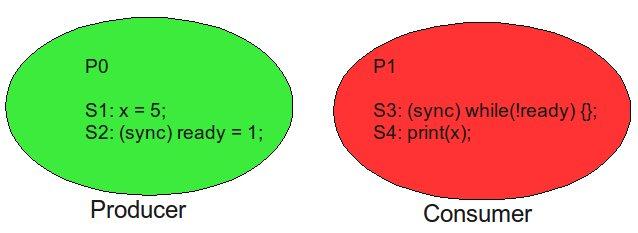

Release consistency is similar to weak ordering, but it can relax the model even further by distinguishing between the two types of synchronization: acquisition and release. In programs, some thread will produce some data, and another thread will consume it. The consuming thread cannot run before the producing thread. Looking at the same code from before, it is clear that P0 is the producer and P1 is the consumer. | |||

<center>[[Image:newrc1.jpg|120p]]</center> | |||

<center>'''Producer and Consumer process'''<sup>[8]</sup></center> | |||

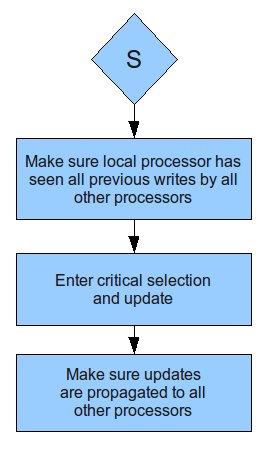

From the weak ordering model, when we encounter an 'S' instruction, or synchronization point, here is what happens. | |||

<center>[[Image:newrc2.jpg|120p]]</center> | |||

<center>'''Actions upon encountering synchronization point in WO'''</center> | |||

Looking at the code above the producer P0 upon encountering the synchronization instruction will make sure to see previous writes by other processors, enter critical selection and update ready, then propagate to other processors.The consumer P1, upon encountering the synchronization point will make sure to see previous by other processors, enter critical selection and read ready, then propagate to other processors. | |||

Notice that both the consumer and producer perform redundant operations. The producer does not need to see previous writes from other processors because it doesn't read any values! Similarly, the consumer does not need to propagate updates to other processors because it never made any updates. <br /> | |||

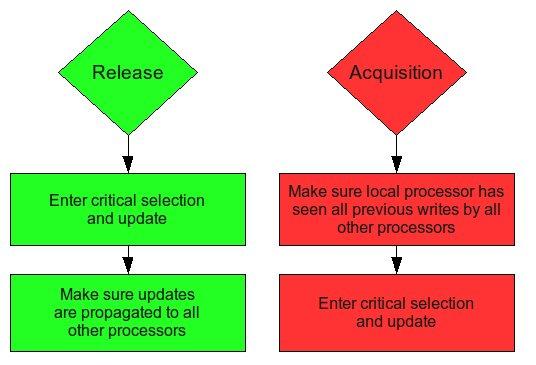

The release consistency model addresses this problem by distinguishing synchronization points for both producer and consumer. The producer synchronization is called a release and the consumer synchronization is called an acquisition. | |||

Here are the procedures for release and acquisition | |||

<center>[[Image:newrc3.jpg|120p]]</center> | |||

<center>'''Release and acquisition procedure for RC'''</center> | |||

By definition of release consistency, an acquisition must ensure that following load and stores must not executed until acquisition is complete. Also, a release must ensure that previous load and stores must complete before release. | |||

The following diagram shows release consistency in action. | |||

<center>[[Image:newrc7.jpg|120p]]</center> | |||

<center>'''RC'''<sup>[8]</sup></center> | |||

</center> | |||

There are two flavors<sup>[6]</sup> of release consistency that differ in the order that is maintained among synchronization operations. The first flavor maintains sequential consistency among synchronization operations and is referred to RCsc, while the second flavor maintains processor consistency among such operations and is called RCpc. Except for the program order requirements, these two models are identical. | |||

<center>[[Image:rc6.jpg|120p]]</center> | |||

<center>'''RC models'''<sup>[6]</sup></center> | |||

Similar to WO, RC requires that programs are synchronized correctly. In addition, the synchronizations must be labeled as either an acquisition or release. This obviously increases programming complexity. By reducing redundant operations, the performance of release is better than WO. This performance increase, however, may be overshadowed by the additional cost of programming complexity in identifying which synchronizations are release or acquisition. | |||

==='''Comparing RC and WO'''=== | |||

<center>[[Image:compareworc.jpg|120p]]</center> | |||

<center>'''Comparison of RC and WO'''<sup>[6]</sup></center> | |||

RC allows for extra reordering and overlap of memory operations across acquires and releases. | |||

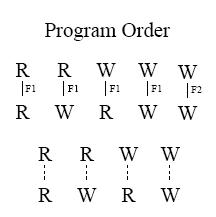

==='''Alpha, RMO, and PowerPC'''=== | |||

The Alpha, RMO, and PowerPC models all provide explicit fence instructions as their safety nets.<sup>[3]</sup> | |||

===='''Alpha model'''==== | |||

The Alpha model provides two different fence instructions, the '''memory barrier (MB)''' and the '''write memory barrier (WMB)'''. The memory barrier instruction is used to maintain program order and memory operations both before and after the instruction for both read and writes. In constrast, the write memory barrier instruction only maintains order among write operations. | |||

<center>[[Image:alpha1.jpg|120p]]</center> | |||

<center>'''Alpha model (F1: MB,F2: WMB)'''<sup>[6]</sup></center> | |||

SC can be guaranteed<sup>[6]</sup> by placing a fence instruction between any pair of memory operations. Weak ordering can be guaranteed by placing a fence instruction before and after every memory operation for a synchronization. | |||

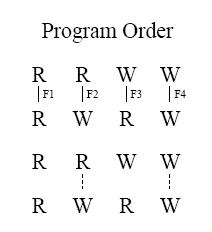

===='''SPARC V9 Relaxed Memory Order (RMO)'''==== | |||

The SPARC V9 architecture uses the relaxed memory order model (RMO)<sup>[6]</sup>. RMO is an extension of the TSO and PSO models. RMO extends the PSO and allows reads to complete out of order with respect to the following reads and writes. | |||

<center>[[Image:rmo.jpg|120p]]</center> | |||

<center>'''RMO model'''<sup>[6]</sup></center> | |||

RMO provides four types of fences that can be used to preserve program order between any two types of operations. The fence type can be selected using the appropriate opcode. | |||

A '''MEMBAR'''<sup>[3]</sup> instruction can be used to order a group of previous reads and writes with respect to future reads and writes. The MEMBAR instruction can eliminate the need to use read-modify-writes used in the SPARC V8 TSO and PSO models. | |||

===='''IBM PowerPC model'''==== | |||

<center>[[Image:ibmpc.jpg|120p]]</center> | |||

<center>'''IBM PowerPC model'''<sup>[6]</sup></center> | |||

The IBM PowerPC model exposes the multiple-copy semantics<sup>[6]</sup> of memory to the programmer. The IBM PowerPC model provides a single fence instruction called the SYNC. The SYNC instruction is similar<sup>[3]</sup> to the MB instruction of the Alpha model. However, if a SYNC instruction is placed between two reads from the same location, the second read may return an older value, resulting from an older write. This behavior can cause non-deterministic outcomes and may force the use of read-modify-write operations to ensure correctness. In other words, the IBM PowerPC allows writes to be seen earlier by another read, thus read-modify-writes may be needed to ensure correctness. | |||

Read forwarding from the buffer are actually safe. | |||

Here is a summary of relaxed models | |||

{| class="wikitable" border="1" | {| class="wikitable" border="1" | ||

| Line 150: | Line 350: | ||

|} | |} | ||

<center>Simple categorization of relaxed models <sup>[5]</sup></center> <br /> | <center>'''Simple categorization of relaxed models''' <sup>[5]</sup></center> <br /> | ||

Y: corresponding relaxation is allowed by straightforward implementations of the corresponding model & can be detected by the programmer <br /> | Y: corresponding relaxation is allowed by straightforward implementations of the corresponding model & can be detected by the programmer <br /> | ||

=='''Lazy Consistency'''== | |||

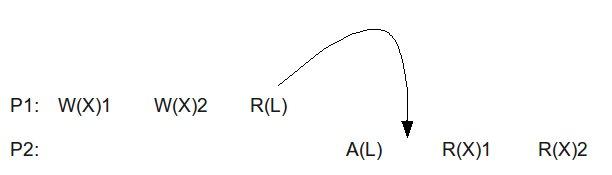

Further optimizations to the RC model are possible. When an A(L) or acquisition instruction is encountered we don't need the updated values from other processors immediately until sometime after the A(L) instruction. This allows the release to be delayed or "lazy." The release values are also clumped together so all of the modified values needed are sent in one pack. The following is illustrated below. | |||

<center> | <center>[[Image:lazy.jpg|120p]]</center> | ||

<center>'''Lazy consistency'''</center> | |||

< | |||

Instead of acquiring at the A(L) instruction, it is delayed until after. Also, changes to memory addresses 1 and 2 are sent together. This model allows more time for release and reduces memory traffic by propagating changes together in one group. | |||

Changes in RC model to support delaying the release are necessary. LRC is not employed in hardware supported cache coherent systems. | |||

Delaying the release and grouping the releases together may cause additional latency on the subsequent acquire. However, with lazy consistency the number of writes is reduced and performance may improve on systems that high memory overheads. | |||

< | One system that implements lazy consistency is an experimental system from '''Kontothanassis et. al.''' <sup>[7]</sup>from the University of Rochester. The system uses a modified version of lazy consistency, where release transactions are delayed and grouped and transactions are performed in the background.Konotothanassis states that the performance of the their modified lazy release protocol outperforms eager release by approximately 20%. | ||

=='''Eager Consistency'''== | |||

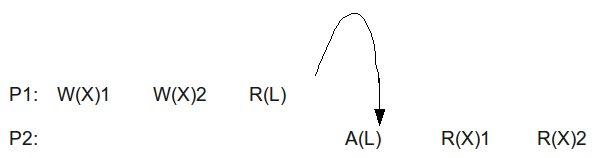

The difference between eager release and lazy release, is that eager release performs updates immediately upon release. Unlike lazy consistency, which delays updates until after subsequent acquire, the eager release will perform updates immediately. The following illustrates the procedure. | |||

<center>[[Image:eager.jpg|120p]]</center> | |||

<center>'''Eager consistency'''</center> | |||

Similar to lazy consistency, changes needed to be made to release consistency to support eager consistency. | |||

One type of system that implements eager consistency is the '''DASH'''<sup>[7]</sup> multiprocessor from Stanford. The write operations trigger coherence synchronization transactions immediately, and the transactions execute concurrently as the application continues executing. The processor will only stall when the write buffer overflows, or another release operation is encountered and other previous transactions have not completed. The DASH approach attempts to mask the latency of writes by performing them in the background. | |||

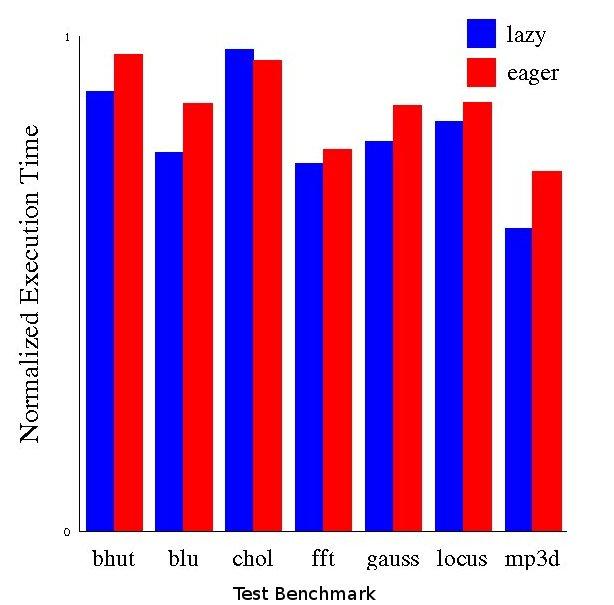

==='''Performance of Eager vs Lazy Consistency'''=== | |||

Lazy consistency bundles memory operations together after release, so that unnecessary memory operations such as invalidations can be avoided. This can improve the performance of a program by reducing the memory contention and number of transactions. On the other hand, by delaying the release, the latency of the synchronization may increase. Eager release consistency performs the synchronization immediately, reducing the synchronization delay time. However, eager release may | |||

cause higher memory contention. The following graph depicts an experiment run by Kontothanassis et. al. at the University of Rochester, where the performance of eager and lazy consistencies were compared on different sets of data. | |||

The | |||

<center>[[Image:compare2.jpg|120p]]</center> | |||

<center>'''Eager vs Lazy consistencies'''<sup>[7]</sup></center> | |||

The performance <sup>[8]</sup>of lazy consistency ranged from 5% to 17% better than eager consistency on these tests. The lazy consistency is based upon a software algorithm. Lazy consistency may delay the synchronization process since it delays the release operation. On programs where the delay can be masked by performing other useful computations, performance can be improved. However, on systems or programs that cannot mask the delay, performance may be decreased. <br /> | |||

The results show that lazy release consistency has better performance in nearly all of the tests. The advantage of the lazy protocol here stems from the reduction of memory contention and the elimination of redundant memory hops, which leads to overall better performance. | |||

=='''Compiler Optimizations'''== | |||

In '''WO, RCsc and RCpc''' models, the compiler<sup>[3]</sup> has the flexibility to reorder memory operations between two consecutive synchronization or special operations. Similarly, in the '''Alpha, RMO, and PowerPC''' models, the compiler has full flexibility to reorder operations between consecutive fence instructions. Since most programs use these operations or instructions infrequently, the compiler gets large regions of code where virtually all optimizations that are used for uniprocessor programs can be safely applied. | |||

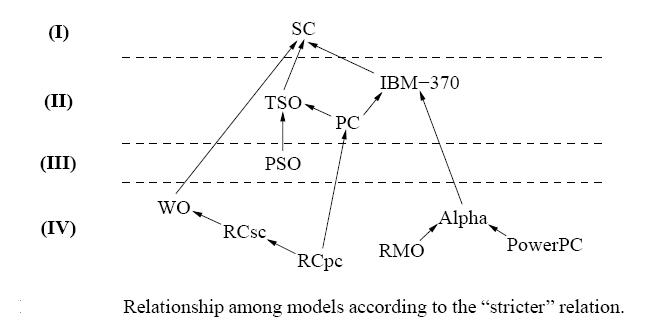

Below is the comparison of the relaxed consistency models. Level I being the strictest and Level IV being the most relaxed | |||

<center>[[Image:compare.jpg|120p]]</center> | |||

<center>'''Comparison of Relaxed models'''<sup>[6]</sup></center> | |||

=='''References'''== | |||

[1]: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.39.4728&rep=rep1&type=pdf <br /> | |||

[2]: Fundamentals of Parallel Computer Architecture-Lectures slides by Prof.Edward Gehringer <br /> | |||

[3]: http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf <br /> | |||

[4]: Fundamentals of Parallel Computer Architecture by Prof.Yan Solihin <br /> | |||

[5]: http://rsim.cs.illinois.edu/~sadve/JavaWorkshop00/talk.pdf <br /> | |||

[6]: http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf <br /> | |||

[7]: http://www.cs.rochester.edu/research/cashmere/SC95/lazeag.html <br /> | |||

[8]: http://courses.ncsu.edu/csc506/lec/001/lectures/notes/lec17.pdf | |||

< | |||

Latest revision as of 20:57, 4 May 2010

MEMORY CONSISTENCY MODELS

The interface[1] for memory in a shared memory multiprocessor is called a memory consistency model. The memory consistency model of a shared-memory multiprocessor provides a formal specification[3] of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system.

SEQUENTIAL CONSISTENCY

Lamport[1] defined a multiprocessor to be Sequentially consistent [SC] if:

'the result of any execution is the same as if the operations of all the processors were executed in some sequential order and the operations of each individual processor appear in this sequence in the order specified by the program'.

The main advantage of selecting SC as the interface to shared memory hardware is that it is programmer’s intuition. SC permits coherence caching, prefetching and multithreading and yet keeps the software simple. In particular, SC enables middleware authors to use the same target as a multiprogrammed uniprocessor. Thus, SC should be a preferable choice for hardware architects.

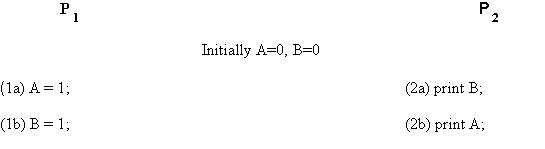

Limitations of SC

Under SC, 1a -> 1b and 2a -> 2b. [2] So, in the above example A= 0 and B=0 never possible under SC.

1. A processor [3] must ensure that its previous memory operation is complete before proceeding with its next memory operation in program order. This requirement is called the program order requirement.

2. Determining [3] the completion of a write requires an explicit acknowledgment message from memory. Additionally, in a cache-based system, a write must generate invalidate or update messages for all cached copies.

3, SC makes it hard to use write buffers [1],because write buffers cause operations to be presented to the cache coherence protocol out of program order.

4. 'Some processors are precluded from overlapping multiple reads and writes in the memory system. This restriction is crippling in systems without caches'.

5. Out of order execution [4] and instruction level parallelism can’t be used under SC.

6. Bypassing [4] a store to a younger load value is not allowed under SC since it violates atomicity.

7. 'Also, non blocking caches are not allowed'.

8. For compilers [3], an analog of the program order requirement applies to straightforward implementations.

INTUITION behind relaxed memory-consistency models

After observing the limitations of SC, we conclude that the performance suffers greatly. The key idea will be to make execution of memory accesses faster and allow overlapping.

One possible solution is prefetching [4] When the older (previous) load/store is completed the load which has already issued a prefetch can access the cache and complete sooner because of cache hit. Also, when a store’s address is generated, a prefetch exclusive can be issued even though there are older pending load/stores. When these older loads/stores complete, the store can access the cache and complete without going to the bus.

After a block is been prefetched into the cache, it can be invalidated or after a block is prefetched in exclusive state, another processor may read, downgrading state to shared.

So, prefetches are useless because the load will suffer a cache miss and store with still need to go the bus. They also incur unnecessary traffic. hence, it is not a perfect solution.

Next possible solution is speculation[4] With speculation, a younger (later) load is allowed to access the cache, but marked as speculative. If, by the time the first load completes and the block read by the second load has not been invalidated or naturally evicted, then the value obtained by the second load would be same, if it had to wait for the first load to be executed atomically. So, speculation is successful, if it fails, cancel the younger load and re-execute it. Also, a younger load can be speculative to an older pending store.

However, applying it to store is harder since it cannot be canceled easily.

Both these techniques have been used in MIPS R10000 and Intel Pentium architecture.

Still, the compiler cannot re order memory accesses when compiling the program. Welcome relaxed memory consistency models.

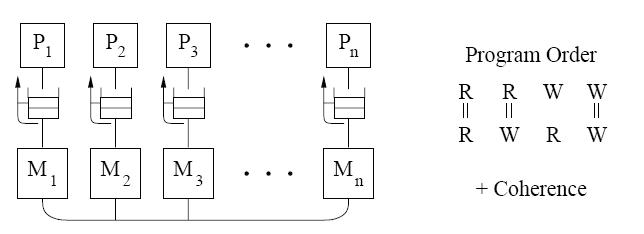

RELAXED CONSISTENCY MODELS

Relaxed memory consistency models[3] can be categorized based on three key characteristics: (1) how they relax the program order requirement, i.e.: whether they relax the order from a write to a following read, between two writes, and finally from a read to a following read or write and (2) how they relax the write atomicity requirement, i.e: whether they allow a read to return the value of another processor’s write before the write is made visible to all other processors.(3) A relaxation to both program order and write atomicity, where a processor is allowed to read the value of its own previous write before the write is made visible to other processors. In a cache-based system, this relaxation allows the read to return the value of the write before the write is serialized with respect to other writes to the same location and before the invalidations/updates of the write reach any other processor.

| Relaxation | Commercial Systems Providing the Relaxation |

| W -> R Order | AlphaServer 8200/8400, Cray T3D, Sequent Balance, SparcCenter1000/2000 |

| W -> W Order | AlphaServer 8200/8400, Cray T3D |

| R -> RW Order | AlphaServer 8200/8400, Cray T3D |

| Read Others’ Write Early | Cray T3D |

| Read Own Write Early | AlphaServer 8200/8400, Cray T3D, SparcCenter1000/2000 |

Relaxing the WRITE to READ Program Order

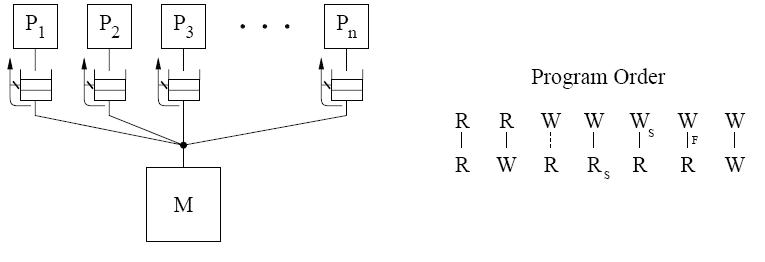

Here, the program orders are relaxed for a write followed by a read to a different location. These models [3] include the IBM370model, the SPARC V8 TSO and the processor consistency model (PC) .These models allow a read to be reordered with respect to previous writes from the same processor.

IBM 370

It is the strictest because it does not allow a read from returning the value of a write before the write is made visible to all processors. Therefore, even if a processor issues a read to the same address as a previous pending write from itself, the read must be delayed until the write is made visible to all processors.

Here, Rs & Ws mean read and write generated by serialization instructions.

Dashed lines indicate in program order.

To ensure the program order constraint, it provides special serialization instructions that may be placed between the two operations. eg. compare&swap.

Placing a serialization instruction after the write on each processor provides sequentially consistent results.

It does not need a safety net to ensure atomicity since it does not relax atomicity.

TSO model

The total store ordering (TSO) model is one of the models proposed for the SPARC V8 architecture.

It allows a read to return the value of its own processor’s write even before the write is serialized with respect to other writes to the same location.

It requires that no other writes to any location appear to occur between the read and the write of the read-modify-write.

It does not provide explicit safety nets.

The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes.

The disadvantages to rely on a read-modify write as a safety net:

A system may not implement a general read-modify-write that can be used to appropriately replace any read or write and replacing a read by a read-modify-write needs invalidating other copies of the line.

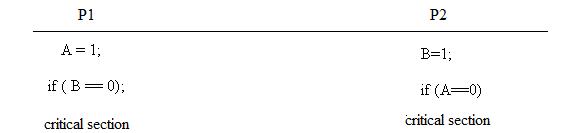

PC model

Double lines[6] between reads/writes denote the fact that operations may no longer be atomic and that all sub-operations of the first operation must complete before any sub-operations of the second operation.

PC relaxes both constraints, such that a read can return the value of any write before the write is serialized or made visible to other processors.

It requires that no other writes to the same location appear to occur between the read and the write of the read-modify-write.

The program order between a write and a following read appears to be maintained if the read is replaced by or is already part of a read-modify-write.

They do not provide explicit safety nets.

A write is guaranteed to appear atomic if every read that may return the value of the write is part of, or replaced with, a read-modify-write.

However, it has the same disadvantage of TSO for relying on read-modify-write.

PC is less useful than the others, because it does not guarantee a property called causality. Causality requires that all other processors see the effect of a processor’s operation when any other processor sees it. Without causality, processor consistency can fail to look like SC in important cases involving three or more processors.

Relaxing the WRITE to READ and WRITE to WRITE Program Orders

The partial store ordering model (PSO)[3] is an extension of the TSO model and is the second model provided by the SPARC V8 architecture.

As far as atomicity is concerned, it is same as TSO.

PSO provides an explicit STBAR instruction for imposing program order between two writes.

However, PSO is still not good enough to be useful to a compiler.

Relaxing all program orders

The program order [3] between all operations to different locations is relaxed. Thus, a read or write operation may be reordered with respect to a following read or write to a different location.

Examples of such models are: weak ordering(WO) model, release consistency models (RCsc/RCpc), and three models proposed for commercial architectures: the Digital Alpha, SPARC V9 relaxed memory order (RMO), and IBM PowerPC models.

All the above models allow memory operations following a read operation to be overlapped or reordered with respect to the read operation.In hardware, this flexibility provides the possibility of hiding the latency of read operations.

All of the models in this group allow a processor to read its own write early.

RCpc and PowerPC are the only models whose straightforward implementations allow a read to return the value of another processor’s write early.Except for Alpha, all the above models allow the reordering of two reads to the same location.

Complex implementations of RCsc can potentially violate atomicity such that it can affect the result of a program.

The WO, RCsc, and RCpc models distinguish memory operations based on their type, and provide stricter ordering constraints for some types of operations. On the other hand, the Alpha, RMO, and PowerPC models provide explicit fence instructions for imposing program orders between various memory operations.

WEAK ORDERING

The weak ordering model (also known as weak consistency) was proposed by Dubois et al. [DSB86][6] and relies on maintaining program order only at the synchronization points in the program.

Many programs use synchronization to make sure data is up-to-date and programs to do not exhibit nondeterministic behavior. The synchronization primitive will ensure that all previous instructions before the synchronization primitive have executed and committed to memory before the current instruction is executed.

Rs and Ws denote read and write identified as synchronization.

Triple lines denotes that the read sub-operation and the sub-operations of the write (possibly from a different processor) whose value is returned by the read should complete before any of the sub-operations of the operation that follows the read in program order.

The weak ordering model assumes that 1) programs are properly synchronized and that 2) synchronization is supported by the hardware.

Also, assumptions about the synchronization primitives are that 1) before synchronization, all prior loads, stores, and synchronizations must have been completed and 2) all loads, stores, synchronizations following must not have been issued yet.

With these assumptions, the weak ordering model can be used. The weak ordering model allows memory accesses between synchronization points to be reordered.

The following illustration shows how this model is used.This model is modified from class notes

With weak ordering memory operations between synchronization points can be reordered. This means that W(X)1 and W(X)2 can be reordered before the synchronization point. In fact, W(X)1 and W(X)2 may not even have been fully committed to memory prior to the synchronization point. As long as all of the operations executed and commit to memory before the synchronization, weak ordering is satisfied. Similarly, the read operations of P2 can also be reordered. Weak ordering, however, does not allow reordering to occur across synchronization points, as illustrated in the figure below.

The R(X)1 and W(X)2 across synchronization points cannot be reordered, otherwise it would violate weak ordering and would lead to non-deterministic results.

Several synchronization[4] mechanisms can be used including barriers, fences, fetch-and-op, test-and-set, load linked and store conditional, and so on. The synchronization mechanisms must ensure that instructions stall until all prior loads and stores complete and propagate.Compared to SC, WO provides better performance since memory operations can be reordered between synchronization points. Compared to PC, WO typically gives better performance. However, when there are several synchronization points, limiting reordering potential, PC may achieve better performance.

RELEASE CONSISTENCY

Release consistency is similar to weak ordering, but it can relax the model even further by distinguishing between the two types of synchronization: acquisition and release. In programs, some thread will produce some data, and another thread will consume it. The consuming thread cannot run before the producing thread. Looking at the same code from before, it is clear that P0 is the producer and P1 is the consumer.

From the weak ordering model, when we encounter an 'S' instruction, or synchronization point, here is what happens.

Looking at the code above the producer P0 upon encountering the synchronization instruction will make sure to see previous writes by other processors, enter critical selection and update ready, then propagate to other processors.The consumer P1, upon encountering the synchronization point will make sure to see previous by other processors, enter critical selection and read ready, then propagate to other processors.

Notice that both the consumer and producer perform redundant operations. The producer does not need to see previous writes from other processors because it doesn't read any values! Similarly, the consumer does not need to propagate updates to other processors because it never made any updates.

The release consistency model addresses this problem by distinguishing synchronization points for both producer and consumer. The producer synchronization is called a release and the consumer synchronization is called an acquisition.

Here are the procedures for release and acquisition

By definition of release consistency, an acquisition must ensure that following load and stores must not executed until acquisition is complete. Also, a release must ensure that previous load and stores must complete before release. The following diagram shows release consistency in action.

There are two flavors[6] of release consistency that differ in the order that is maintained among synchronization operations. The first flavor maintains sequential consistency among synchronization operations and is referred to RCsc, while the second flavor maintains processor consistency among such operations and is called RCpc. Except for the program order requirements, these two models are identical.

Similar to WO, RC requires that programs are synchronized correctly. In addition, the synchronizations must be labeled as either an acquisition or release. This obviously increases programming complexity. By reducing redundant operations, the performance of release is better than WO. This performance increase, however, may be overshadowed by the additional cost of programming complexity in identifying which synchronizations are release or acquisition.

Comparing RC and WO

RC allows for extra reordering and overlap of memory operations across acquires and releases.

Alpha, RMO, and PowerPC

The Alpha, RMO, and PowerPC models all provide explicit fence instructions as their safety nets.[3]

Alpha model

The Alpha model provides two different fence instructions, the memory barrier (MB) and the write memory barrier (WMB). The memory barrier instruction is used to maintain program order and memory operations both before and after the instruction for both read and writes. In constrast, the write memory barrier instruction only maintains order among write operations.

SC can be guaranteed[6] by placing a fence instruction between any pair of memory operations. Weak ordering can be guaranteed by placing a fence instruction before and after every memory operation for a synchronization.

SPARC V9 Relaxed Memory Order (RMO)

The SPARC V9 architecture uses the relaxed memory order model (RMO)[6]. RMO is an extension of the TSO and PSO models. RMO extends the PSO and allows reads to complete out of order with respect to the following reads and writes.

RMO provides four types of fences that can be used to preserve program order between any two types of operations. The fence type can be selected using the appropriate opcode.

A MEMBAR[3] instruction can be used to order a group of previous reads and writes with respect to future reads and writes. The MEMBAR instruction can eliminate the need to use read-modify-writes used in the SPARC V8 TSO and PSO models.

IBM PowerPC model

The IBM PowerPC model exposes the multiple-copy semantics[6] of memory to the programmer. The IBM PowerPC model provides a single fence instruction called the SYNC. The SYNC instruction is similar[3] to the MB instruction of the Alpha model. However, if a SYNC instruction is placed between two reads from the same location, the second read may return an older value, resulting from an older write. This behavior can cause non-deterministic outcomes and may force the use of read-modify-write operations to ensure correctness. In other words, the IBM PowerPC allows writes to be seen earlier by another read, thus read-modify-writes may be needed to ensure correctness. Read forwarding from the buffer are actually safe.

Here is a summary of relaxed models

| Relaxation | W-> R order | W -> W order | R -> RW order | Read Others Write Early Order | Read Own Write Early Order | Safety net |

| SC | Y | |||||

| IBM 370 | Y | serialization instructions | ||||

| Total Store Ordering | Y | Y | RMW | |||

| PC | Y | Y | Y | RMW | ||

| PSO | Y | Y | Y | RMW, STBAR | ||

| WO | Y | Y | Y | Y | synchronization | |

| RCsc | Y | Y | Y | Y | release, acquire, nsync, RMW | |

| RCpc | Y | Y | Y | Y | Y | release, acquire, nsync, RMW |

| Alpha | Y | Y | Y | Y | MB, WMB | |

| RMO | Y | Y | Y | Y | MEMBARs | |

| PowerPC | Y | Y | Y | Y | Y | Sync |

Y: corresponding relaxation is allowed by straightforward implementations of the corresponding model & can be detected by the programmer

Lazy Consistency

Further optimizations to the RC model are possible. When an A(L) or acquisition instruction is encountered we don't need the updated values from other processors immediately until sometime after the A(L) instruction. This allows the release to be delayed or "lazy." The release values are also clumped together so all of the modified values needed are sent in one pack. The following is illustrated below.

Instead of acquiring at the A(L) instruction, it is delayed until after. Also, changes to memory addresses 1 and 2 are sent together. This model allows more time for release and reduces memory traffic by propagating changes together in one group. Changes in RC model to support delaying the release are necessary. LRC is not employed in hardware supported cache coherent systems.

Delaying the release and grouping the releases together may cause additional latency on the subsequent acquire. However, with lazy consistency the number of writes is reduced and performance may improve on systems that high memory overheads.

One system that implements lazy consistency is an experimental system from Kontothanassis et. al. [7]from the University of Rochester. The system uses a modified version of lazy consistency, where release transactions are delayed and grouped and transactions are performed in the background.Konotothanassis states that the performance of the their modified lazy release protocol outperforms eager release by approximately 20%.

Eager Consistency

The difference between eager release and lazy release, is that eager release performs updates immediately upon release. Unlike lazy consistency, which delays updates until after subsequent acquire, the eager release will perform updates immediately. The following illustrates the procedure.

Similar to lazy consistency, changes needed to be made to release consistency to support eager consistency.

One type of system that implements eager consistency is the DASH[7] multiprocessor from Stanford. The write operations trigger coherence synchronization transactions immediately, and the transactions execute concurrently as the application continues executing. The processor will only stall when the write buffer overflows, or another release operation is encountered and other previous transactions have not completed. The DASH approach attempts to mask the latency of writes by performing them in the background.

Performance of Eager vs Lazy Consistency

Lazy consistency bundles memory operations together after release, so that unnecessary memory operations such as invalidations can be avoided. This can improve the performance of a program by reducing the memory contention and number of transactions. On the other hand, by delaying the release, the latency of the synchronization may increase. Eager release consistency performs the synchronization immediately, reducing the synchronization delay time. However, eager release may cause higher memory contention. The following graph depicts an experiment run by Kontothanassis et. al. at the University of Rochester, where the performance of eager and lazy consistencies were compared on different sets of data.

The performance [8]of lazy consistency ranged from 5% to 17% better than eager consistency on these tests. The lazy consistency is based upon a software algorithm. Lazy consistency may delay the synchronization process since it delays the release operation. On programs where the delay can be masked by performing other useful computations, performance can be improved. However, on systems or programs that cannot mask the delay, performance may be decreased.

The results show that lazy release consistency has better performance in nearly all of the tests. The advantage of the lazy protocol here stems from the reduction of memory contention and the elimination of redundant memory hops, which leads to overall better performance.

Compiler Optimizations

In WO, RCsc and RCpc models, the compiler[3] has the flexibility to reorder memory operations between two consecutive synchronization or special operations. Similarly, in the Alpha, RMO, and PowerPC models, the compiler has full flexibility to reorder operations between consecutive fence instructions. Since most programs use these operations or instructions infrequently, the compiler gets large regions of code where virtually all optimizations that are used for uniprocessor programs can be safely applied.

Below is the comparison of the relaxed consistency models. Level I being the strictest and Level IV being the most relaxed

References

[1]: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.39.4728&rep=rep1&type=pdf

[2]: Fundamentals of Parallel Computer Architecture-Lectures slides by Prof.Edward Gehringer

[3]: http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf

[4]: Fundamentals of Parallel Computer Architecture by Prof.Yan Solihin

[5]: http://rsim.cs.illinois.edu/~sadve/JavaWorkshop00/talk.pdf

[6]: http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf

[7]: http://www.cs.rochester.edu/research/cashmere/SC95/lazeag.html

[8]: http://courses.ncsu.edu/csc506/lec/001/lectures/notes/lec17.pdf