CSC/ECE 506 Spring 2010/ch 6 PP: Difference between revisions


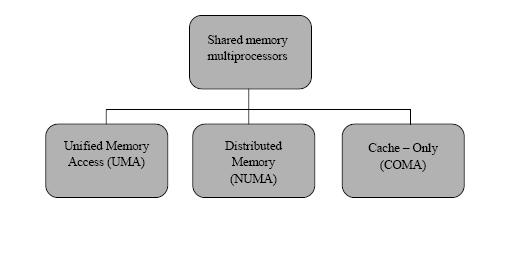


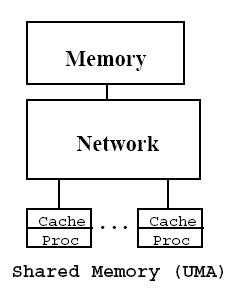


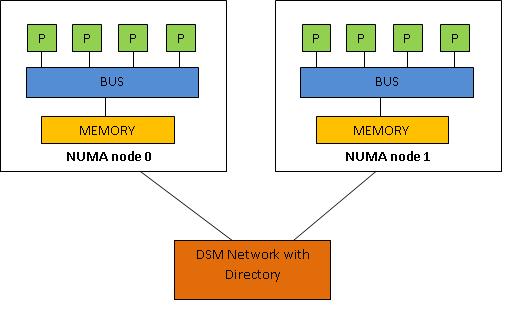


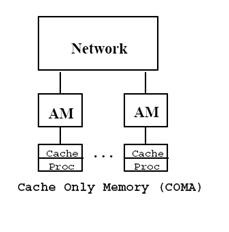


Latest revision as of 22:51, 26 February 2010

Cache Structures of Multi-Core Architectures

Overview

With the advent of multicore and many-core architectures, we face a problem that is relatively new to parallel computing: the management of hierarchical parallel caches. This chapter describes some of the mainstream memory organizations in multiprocessor architectures. It also describes common placement, write, and replacement policies, and introduces cache coherence.

Scalable shared-memory multiprocessors are emerging as attractive platforms for applications with high-performance demands. What makes these machines attractive is the shared address space, which allows processors in a multiprocessor to share data the same way it is shared by multiple processes in a sequential machine. The shared-memory paradigm makes it easier to write parallel programs, but tuning the application to reduce the impact of frequent long-latency memory accesses still requires substantial programmer effort.

From the perspective of system architecture, current mainstream shared-memory multiprocessors fall into three categories, as shown in Figure 1:

UMA (Uniform Memory Access), NUMA (Non-Uniform Memory Access), and COMA (Cache-Only Memory Architecture).

Figure 1: Shared Memory Multiprocessors

UMA (Uniform Memory Access)

All the processors in the UMA model share the physical memory uniformly. In a UMA architecture, access time to a memory location is independent of which processor makes the request or which memory chip contains the transferred data. Each processor may use a private cache. Peripherals are also shared in some fashion. The UMA model is suitable for general-purpose and time-sharing applications by multiple users. It can also be used to speed up the execution of a single large program in time-critical applications.

Figure 2: UMA--Uniform Memory Access

SMP (symmetric multiprocessing) is a common UMA architecture in which multiple processors are connected to the system memory symmetrically and access it equally and uniformly. Because all processors in an SMP system share the bus, contention for it rises sharply as the number of processors increases.

Due to the performance bottleneck of the system bus, current SMP systems usually have only tens of processors, so their scalability is limited. This architecture provides almost identical memory access latencies for every processor. On the other hand, the common system bus is a potential bandwidth bottleneck for the entire memory system. If a multi-threaded application is sensitive to memory bandwidth, its performance will be limited by this memory organization.

NUMA (Non-Uniform Memory Access)

NUMA is a specialized memory architecture for multiprocessor systems in which one set of CPUs sits on one system memory bus, other sets of CPUs sit on different memory buses, and the processing nodes are connected by a high-speed interconnect. This is in contrast to SMP, where all memory access goes through a single shared memory bus. The whole system is logically divided into multiple nodes, each of which can access both local and remote memory. Accessing local memory is faster than accessing remote memory, which is the reason for the name non-uniform memory access.

Consider Figure 3. Each group of processors has its own memory and possibly its own I/O channels, but each CPU can access memory associated with other groups in a coherent way. Each group is called a NUMA node. The number of CPUs within a NUMA node depends on the hardware vendor.

Figure 3: NUMA-Non Uniform Memory Access

Within a node, the processors share a common memory space, or “local” memory. For example, an SMP system’s shared memory would make up a node. This memory provides the fastest non-cache memory access for the node’s CPUs. Multiple nodes are then combined to form a NUMA machine. Memory transfers between nodes are handled by routers. Memory that can only be accessed via a router is called “remote” memory.

NUMA is easy to manage and expand, but accessing remote memory is slow. The main benefit of NUMA is scalability: the architecture was designed to surpass the scalability limits of SMP. With SMP, all memory accesses are posted to the same shared memory bus. This works well for a relatively small number of CPUs, but not when dozens or even hundreds of CPUs compete for the shared bus. NUMA alleviates this bottleneck by limiting the number of CPUs on any one memory bus and connecting the nodes by means of a high-speed interconnect.
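The cost of remote accesses can be made concrete with a weighted average. The sketch below is illustrative only: the function name and the latencies of 100 ns (local) and 300 ns (remote) are assumed values chosen for the example, not measurements from any real system.

```python
def average_memory_latency(local_ns, remote_ns, local_fraction):
    """Weighted average latency of one memory access on a NUMA node.

    local_fraction is the share of accesses served by the node's own memory;
    the remaining accesses go to remote memory through the interconnect.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Hypothetical latencies: 100 ns local, 300 ns remote.
for fraction in (1.0, 0.9, 0.5):
    latency = average_memory_latency(100.0, 300.0, fraction)
    print(f"{fraction:.0%} local accesses: about {latency:.0f} ns on average")
```

Under these assumed numbers, even a modest share of remote accesses raises the average noticeably, which is why data placement matters on NUMA machines.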

Cache-Only Memory Architectures (COMA)

In a cache-only memory architecture, memory organization is similar to that of NUMA in that each processor holds a portion of the address space. However, the partitioning of data among the memories does not have to be static, since all distributed memories are organized like large (second-level) caches. The task of such a memory is twofold. Besides being a large cache for the processor, it may also contain data from the shared address space that the processor has never accessed. In other words, it is both a cache and a virtual part of the shared memory. Such a memory is called an attraction memory.

COMA increases the chances of data being available locally because the hardware transparently replicates the data and migrates it to the memory module of the node that is currently accessing it. Each memory module acts as a huge cache memory in which each block has a tag with the address and the state.

Figure 4: COMA- Cache-Only Memory Architectures

When a processor requests a block from a remote memory, the block is inserted in both the processor’s cache and the node’s attraction memory (AM). A block can be evicted from an AM if another block needs the space. Ideally, with this support, the processor dynamically attracts its working set into its local memory module. Data that the processor is not accessing overflows and is sent to other memories. Because a large AM is better able to hold the node’s current working set than a cache is, more cache misses are satisfied locally within the node.

Placement Policies

Direct Mapped Cache: A block in memory can be stored at only one particular location in the cache. This organization is called direct-mapped and has an associativity of one. These caches suffer badly from conflict misses.

Fully Associative Cache: A block in memory can be stored in any block of the cache. This is effectively an N-way set-associative cache with only one set containing N blocks.

Set Associative Cache: In a set-associative cache, a block maps to a single set determined by its address and can be kept in any way of that set. A set-associative organization is much more resistant to conflicts than a direct-mapped cache, yet much cheaper to implement than a fully associative cache.
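The three organizations differ only in how many sets the cache has, which a short sketch can show. The function names, the 1 KiB cache size, and the 64-byte block size below are assumptions made for illustration.

```python
def sets_in_cache(cache_bytes, block_size, associativity):
    """Number of sets: total blocks divided by the ways per set."""
    return cache_bytes // (block_size * associativity)


def split_address(address, block_size, num_sets):
    """Split a byte address into (tag, set_index, block_offset).

    Hardware uses power-of-two sizes so that these are plain bit fields;
    the arithmetic below works for any positive sizes.
    """
    offset = address % block_size
    block_number = address // block_size
    set_index = block_number % num_sets
    tag = block_number // num_sets
    return tag, set_index, offset


CACHE_BYTES, BLOCK_SIZE = 1024, 64          # hypothetical cache: 16 blocks
for name, ways in (("direct-mapped", 1), ("4-way", 4), ("fully associative", 16)):
    n = sets_in_cache(CACHE_BYTES, BLOCK_SIZE, ways)
    a = split_address(0x0000, BLOCK_SIZE, n)
    b = split_address(0x0400, BLOCK_SIZE, n)
    print(f"{name}: {n} sets, 0x0000 -> {a}, 0x0400 -> {b}")
```

In this example the addresses 0x0000 and 0x0400 map to the same set in every organization. With one way they evict each other (a conflict miss), while with four or sixteen ways both blocks can reside in the set at once.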

Write Policies

Write-back

A write changes data only in the cache block and is not propagated to the lower level of the memory hierarchy. Only when the block is evicted is it “written back”, that is, propagated to the lower level, where it overwrites the stale version.

Write-through

Every time the processor writes to a cached memory location, both the cache and all the underlying memory components are updated.

Write-Allocate

On a write miss, the block is brought into the cache before it is written.

Write-No Allocate

On a write miss, the write is propagated down to the lower-level memory hierarchy component without bringing the block into the cache.
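How these four policies combine can be seen by counting traffic to the lower level. The following is a minimal sketch, not a model of any real cache: the class name TinyCache is hypothetical, capacity is unlimited, and eviction happens only when flush() is called.

```python
class TinyCache:
    """Toy single-level cache that counts traffic to the lower level."""

    def __init__(self, write_back, write_allocate):
        self.write_back = write_back
        self.write_allocate = write_allocate
        self.blocks = {}        # block address -> dirty flag
        self.lower_writes = 0   # writes propagated to the lower level
        self.lower_reads = 0    # blocks fetched from the lower level

    def write(self, block):
        if block not in self.blocks:            # write miss
            if not self.write_allocate:
                self.lower_writes += 1          # write-no-allocate: bypass the cache
                return
            self.lower_reads += 1               # write-allocate: fetch the block first
            self.blocks[block] = False
        if self.write_back:
            self.blocks[block] = True           # write-back: mark dirty, defer the write
        else:
            self.lower_writes += 1              # write-through: propagate immediately

    def flush(self):
        """Evict every block, writing back the dirty ones."""
        self.lower_writes += sum(1 for dirty in self.blocks.values() if dirty)
        self.blocks.clear()


for write_back, write_allocate in ((True, True), (False, False)):
    cache = TinyCache(write_back, write_allocate)
    for _ in range(10):
        cache.write(0)          # ten writes to the same block
    cache.flush()
    print(write_back, write_allocate, cache.lower_reads, cache.lower_writes)
```

For ten writes to one block, the write-back/write-allocate combination fetches the block once and writes it back once, while write-through/write-no-allocate sends all ten writes to the lower level.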

Replacement Policies

The cache replacement policy has a strong influence on the cache hit rate and on the predictability of cache behavior. The following are some of the replacement policies used in caches today:

Least Recently Used (LRU)

The cache block that was accessed farthest back in the past is selected for eviction to make room for a new block. This policy exploits temporal locality: data accessed recently is more likely to be accessed again.
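A single cache set under LRU can be sketched in a few lines. This is a software illustration only; the class name LRUSet is hypothetical, and real hardware tracks recency with status bits rather than an ordered dictionary.

```python
from collections import OrderedDict


class LRUSet:
    """One cache set with LRU replacement."""

    def __init__(self, ways):
        self.ways = ways
        self.blocks = OrderedDict()   # least recently used entry comes first

    def access(self, tag):
        """Return (hit, evicted_tag); evicted_tag is None if nothing was evicted."""
        if tag in self.blocks:
            self.blocks.move_to_end(tag)          # refresh recency on a hit
            return True, None
        evicted = None
        if len(self.blocks) == self.ways:
            evicted, _ = self.blocks.popitem(last=False)   # drop the LRU block
        self.blocks[tag] = True
        return False, evicted


lru = LRUSet(ways=2)
for tag in ("A", "B", "A", "C"):
    print(tag, lru.access(tag))
```

In the trace A, B, A, C, the second access to A makes B the least recently used block, so C evicts B.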

First-In First-Out (FIFO)

FIFO can be seen as a queue: new blocks are inserted at the front, and the block at the end of the queue is evicted. The disadvantage of this policy is that the oldest block is evicted even if it has been accessed more often than blocks that were brought in later.
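The disadvantage described above shows up in a very short trace. The sketch below models one cache set; the class name FIFOSet is hypothetical.

```python
from collections import deque


class FIFOSet:
    """One cache set with FIFO replacement."""

    def __init__(self, ways):
        self.ways = ways
        self.queue = deque()   # oldest insertion sits at the left end

    def access(self, tag):
        """Return (hit, evicted_tag); evicted_tag is None if nothing was evicted."""
        if tag in self.queue:
            return True, None                 # a hit does not change the order
        evicted = None
        if len(self.queue) == self.ways:
            evicted = self.queue.popleft()    # evict the oldest insertion
        self.queue.append(tag)
        return False, evicted


fifo = FIFOSet(ways=2)
for tag in ("A", "B", "A", "C"):
    print(tag, fifo.access(tag))
```

In the trace A, B, A, C, the block A is evicted when C arrives even though A was reused just before, because only insertion order counts.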

Pseudo-LRU (PLRU)

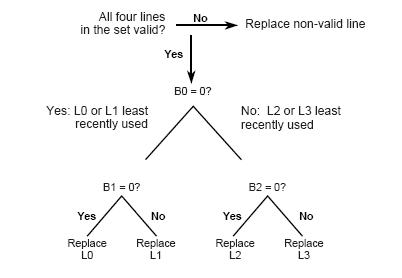

For caches with large associativity (generally more than 4 ways), the implementation cost of LRU becomes prohibitive. If a scheme that almost always discards one of the least recently used items is sufficient, the PLRU algorithm can be used, which needs only about one bit per cache item.

Two-way set associative - one bit - indicates which of the two lines has been referenced more recently

Four-way set associative - three bits

Each bit represents one branch point in a binary decision tree; let 1 represent that the left side has been referenced more recently than the right side, and 0 vice versa. When a line must be replaced, the cache first selects which of the pairs L0:L1 and L2:L3 was less recently used, then selects the less recently used line within that pair and marks it for replacement. This decision tree is shown in Figure 5.

Figure 5: Decision tree for Pseudo-LRU Replacement Strategy
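The four-way decision tree can be sketched as follows. The assignment of the three bits to tree nodes is an assumption made for this example (the figure may order them differently), and the class name TreePLRU4 is hypothetical. The bit convention follows the text: 1 means the left side was referenced more recently.

```python
class TreePLRU4:
    """Tree pseudo-LRU state for one 4-way set (lines L0..L3)."""

    def __init__(self):
        self.root = 0    # 1: pair L0:L1 referenced more recently than pair L2:L3
        self.left = 0    # 1: L0 referenced more recently than L1
        self.right = 0   # 1: L2 referenced more recently than L3

    def touch(self, line):
        """Record a reference to the given line (0..3)."""
        if line in (0, 1):
            self.root = 1
            self.left = 1 if line == 0 else 0
        elif line in (2, 3):
            self.root = 0
            self.right = 1 if line == 2 else 0
        else:
            raise ValueError("line must be in 0..3")

    def victim(self):
        """Follow the bits toward the less recently used side at each branch."""
        if self.root == 1:                       # left pair is newer: evict on the right
            return 3 if self.right == 1 else 2
        return 1 if self.left == 1 else 0        # right pair is newer: evict on the left


plru = TreePLRU4()
for line in (0, 1, 2, 3):
    plru.touch(line)
print(plru.victim())     # L0, which is also the true LRU line here
plru.touch(0)
print(plru.victim())     # L2, although the true LRU line is now L1
```

The second result shows why the scheme is only pseudo-LRU: three bits cannot record the full access order, so the chosen victim is sometimes not the least recently used line.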

Most-Recently Used (MRU)

Evicting the most-recently used (MRU) page does very well on LRU’s worst case, such as a cyclic scan over a working set slightly larger than the cache. In general, however, MRU is a poor choice, since many programs exhibit temporal locality in their memory accesses, and MRU effectively assumes that they do not.

Least Frequently Used (LFU)

LFU counts how often an item is needed; the items used least often are discarded first. LFU requires that a reference count be maintained for each block in the cache. A block's reference count is incremented on each reference. When a replacement is necessary, LFU replaces the block with the lowest reference count.
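The reference counting described above can be sketched for a single set. The class name LFUSet is hypothetical, and the tie-breaking rule (the earliest-inserted block among those with the lowest count) is an assumption of this example rather than part of the policy's definition.

```python
class LFUSet:
    """One cache set with LFU replacement."""

    def __init__(self, ways):
        self.ways = ways
        self.counts = {}   # tag -> reference count

    def access(self, tag):
        """Return (hit, evicted_tag); evicted_tag is None if nothing was evicted."""
        if tag in self.counts:
            self.counts[tag] += 1                 # count every reference
            return True, None
        evicted = None
        if len(self.counts) == self.ways:
            # Lowest count loses; min() keeps the earliest-inserted tag on ties.
            evicted = min(self.counts, key=self.counts.get)
            del self.counts[evicted]
        self.counts[tag] = 1
        return False, evicted


lfu = LFUSet(ways=2)
for tag in ("A", "A", "A", "B", "C", "D"):
    print(tag, lfu.access(tag))
```

Here A accumulates three references, so it survives while B and then C are evicted. This also hints at a known weakness of LFU: a block with a high count from the past can occupy the cache long after it stops being useful.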

Random

To spread allocation uniformly, the blocks to be evicted are selected at random. Some systems generate pseudorandom block numbers to get reproducible behavior, which is useful when debugging hardware.
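Reproducibility comes from seeding the pseudorandom generator. The sketch below models one cache set in software; the class name RandomSet and the seed value are arbitrary choices for illustration.

```python
import random


class RandomSet:
    """One cache set with pseudorandom replacement."""

    def __init__(self, ways, seed=None):
        self.ways = ways
        self.blocks = []                   # tag stored in each way
        self.rng = random.Random(seed)     # private generator, seeded for repeatability

    def access(self, tag):
        """Return (hit, evicted_tag); evicted_tag is None if nothing was evicted."""
        if tag in self.blocks:
            return True, None
        if len(self.blocks) < self.ways:
            self.blocks.append(tag)        # fill an empty way first
            return False, None
        way = self.rng.randrange(self.ways)    # pick the victim way at random
        evicted = self.blocks[way]
        self.blocks[way] = tag
        return False, evicted


trace = ["A", "B", "C", "D", "E", "A", "F"]
first = [RandomSet(2, seed=42).access(t) for t in trace[:0]]   # empty; see runs below
run1, run2 = RandomSet(2, seed=42), RandomSet(2, seed=42)
print([run1.access(t) for t in trace] == [run2.access(t) for t in trace])
```

Two sets created with the same seed make identical eviction decisions on the same trace, so a failing run can be replayed exactly.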

Priority Cache (PC)

Priority Cache uses both run-time and compile-time information to select a block for replacement. PC associates a data priority bit with each cache block. The compiler assigns priorities through two additional bits associated with each memory access instruction. These two bits indicate whether the data priority bit should be set and what the priority of the block is (low or high). The cache block with the lowest priority is the one to be replaced.

Greedy Dual Size (GDS)

This policy combines temporal locality, size, and other cost information. The algorithm assigns a cost/size value to each cache block. In the simplest case the cost is set to 1 to maximize the hit ratio, but other costs, such as latency or network bandwidth, can be explored. GDS assigns a key value to each object, computed as the object's reference count plus its cost divided by its size. There are several variations of the GDS algorithm. One takes into account coherency information and the expiration time of cached objects (GDSlifetime). A second uses the observation that different types of applications change their references at different rates (GDStype). A last variation (GDSlatency) uses latency/size as the key value for an object, where latency is the measured delay of the last retrieval of the object.

Cache Coherence

In a multiprocessing system, suppose a task running on processor P requests the data in global memory location X, so the contents of X are copied to P’s local cache. Now suppose processor Q also accesses X. What happens if Q wants to write a new value over the old value of X?

There are two fundamental cache coherence policies:

- Write-invalidate - On a write to a block by a cache, all other cached copies are invalidated. An invalidated copy is updated only when it is next accessed, by fetching the new block.

- Write-update - On a write to a block by any cache, the updated value is propagated to all other caches.
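The difference between the two policies can be sketched with the P, Q, and X example from above. This is a deliberately simplified model: each cache is a plain dictionary, the function names are hypothetical, and bus transactions, memory updates, and block states are left out.

```python
def write_invalidate(caches, writer, block, value):
    """The writer keeps the new value; every other cached copy is dropped."""
    for name, cache in caches.items():
        if name != writer:
            cache.pop(block, None)       # invalidate the remote copy, if any
    caches[writer][block] = value


def write_update(caches, writer, block, value):
    """The writer keeps the new value; other holders of the block receive it."""
    caches[writer][block] = value
    for name, cache in caches.items():
        if name != writer and block in cache:
            cache[block] = value         # push the new value to the remote copy


# P and Q both hold X = 1; Q then writes X = 2 under each policy.
inv = {"P": {"X": 1}, "Q": {"X": 1}}
write_invalidate(inv, "Q", "X", 2)
print(inv)     # P no longer holds X and must fetch it again on its next access

upd = {"P": {"X": 1}, "Q": {"X": 1}}
write_update(upd, "Q", "X", 2)
print(upd)     # P's copy already contains the new value
```

Write-invalidate pays a miss on P's next access, while write-update pays for a transfer on every write, whether or not P ever reads X again.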

These two policies, combined with write-back or write-through caches, form the basis of many cache coherence protocols for multiprocessor architectures. Some of the coherence protocols are:

- MSI protocol

- MESI protocol

- MOSI protocol

- MOESI protocol

- MERSI protocol

- MESIF protocol

- Write-once protocol

- Synapse protocol

- Berkeley protocol

- Illinois protocol

- Firefly protocol

- Dragon protocol

The choice of the coherence model is crucial to designing a cache-coherent system. Coherence models differ in performance and scalability, so each must be evaluated for every system design.
