CSC/ECE 517 Fall 2009/wiki2 14 san: Difference between revisions

No edit summary |

|||

| (23 intermediate revisions by the same user not shown) | |||

| Line 2: | Line 2: | ||

==Introduction to Thread-Safe Programming== | ==Introduction to Thread-Safe Programming== | ||

In computer programming, thread-safe describes a program portion or routine that can be called from multiple programming threads without unwanted interaction between the threads [1]. A piece of code is thread-safe if it functions correctly during simultaneous execution by multiple threads and in particular, it must satisfy the need for multiple threads to access the same shared data, and the need for a shared piece of data to be accessed by only one thread at any given time [2]. | In computer programming, [http://en.wikipedia.org/wiki/Thread_safety thread-safe] describes a program portion or routine that can be called from multiple programming threads without unwanted interaction between the threads [1]. A piece of code is thread-safe if it functions correctly during simultaneous execution by multiple threads and in particular, it must satisfy the need for multiple threads to access the same shared data, and the need for a shared piece of data to be accessed by only one thread at any given time [2]. | ||

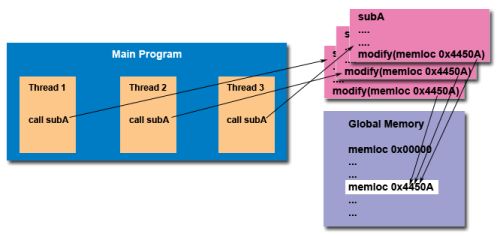

Here's an illustration of a thread unsafe scenario. Suppose that your application creates several threads, each of which makes a call to the same library routine such that this library routine accesses/modifies a global structure or location in memory then as each thread calls this routine it is possible that they may try to modify this global structure/memory location at the same time [4]. | Here's an illustration of a thread unsafe scenario. Suppose that your application creates several threads, each of which makes a call to the same library routine such that this library routine accesses/modifies a global structure or location in memory then as each thread calls this routine it is possible that they may try to modify this global structure/memory location at the same time [4]. | ||

[[Image:thread-unsafe.jpg|frame|Typical thread-unsafe scenario [4]]] | [[Image:thread-unsafe.jpg|frame|Typical thread-unsafe scenario [4]]] | ||

'''Two important questions arise:''' | '''Two important questions arise:''' | ||

* What are the consequences of running a thread-unsafe program? Thread programming errors may lead to unpredictable results like data errors possibly followed by fatal application exceptions | * What are the consequences of running a thread-unsafe program? Thread programming errors may lead to unpredictable results like data errors possibly followed by fatal application exceptions. There may also be the problem of endless blocked threads due to [http://en.wikipedia.org/wiki/Race_condition race-conditions] or [http://en.wikipedia.org/wiki/Deadlock deadlocks] and all these errors are especially difficult to find but easy to introduce by inexperienced programmers [3]. | ||

* How do we ensure that a particular multi-threaded code is thread safe? Some ways to unsure thread safety are that concurrent threads use | * How do we ensure that a particular multi-threaded code is thread safe? Some ways to unsure thread safety are that concurrent threads use algorithms that cooperate with each other and confine the address of a shared object to one thread whenever an unasynchronized event is active [1]. | ||

Here's an example of some thread safe code. In C language, local variables are dynamically allocated on the stack. Therefore, any function that does not use static data or other shared resources is trivially thread-safe, as in the following example [5]: | Here's an example of some thread safe code. In the C language, local variables are dynamically allocated on the stack [5]. Therefore, any function that does not use static data or other shared resources is trivially thread-safe, as in the following example [5]: | ||

/* thread-safe function */ | /* thread-safe function */ | ||

int diff(int x, int y) | int diff(int x, int y) | ||

| Line 25: | Line 24: | ||

} | } | ||

Another approach employed to make the multi-threaded code safer is the use of the Barrier. In parallel computing, a barrier is a type of [http://en.wikipedia.org/wiki/Synchronization_%28computer_science%29 synchronization] method. A barrier for a group of threads or processes in the source code means any thread/process must stop at this point and cannot proceed until all other threads/processes reach this barrier [22]. For example, a parallel do loop in [http://openmp.org/wp/openmp-specifications/ OpenMP] will not be allowed to continue on any thread until the last iteration is completed. This is in case the program relies on the result of the loop immediately after its completion [22]. | |||

[[Image:thread_barrier.jpg| Thread Barrier [23]]] | |||

==Concurrency Patterns== | ==Concurrency Patterns== | ||

| Line 34: | Line 36: | ||

* Concurrency Pattern | * Concurrency Pattern | ||

*:To ensure the above discussed thread safe programming in a multi-threaded programming paradigm where the focus is on the interactions between tasks that execute in parallel, we need to design applications using design patterns called concurrency patterns [7]. | *:To ensure the above discussed thread safe programming in a multi-threaded programming paradigm where the focus is on the interactions between tasks that execute in parallel, we need to design applications using design patterns called concurrency patterns [7]. | ||

===Some Important Concurrency Patterns=== | ===Some Important Concurrency Patterns=== | ||

====Balking Pattern==== | ====Balking Pattern==== | ||

The balking pattern only executes an action on an object when the object is in a particular state so if we are trying to access an object in a | The balking pattern only executes an action on an object when the object is in a particular state so if we are trying to access an object in a state which differs from the expected state, the object will "balk" (ie. refuse to proceed) [10]. | ||

For example, in Java, an IllegalStateException might be thrown in this case [10]. To avoid such an | For example, in Java, an [http://java.sun.com/j2se/1.4.2/docs/api/java/lang/IllegalStateException.html IllegalStateException] might be thrown in this case [10]. To avoid such an exception from occurring, the object can attempt to acquire write [http://en.wikipedia.org/wiki/Lock_%28computer_science%29 locks] which will time out if the object is not in the right state and if such an object’s method is called then the method should return without doing anything [11]. | ||

An example of the Balking pattern in Java [10]: | An example of the Balking pattern in Java [10]: | ||

| Line 58: | Line 60: | ||

====Guarded Suspension Pattern==== | ====Guarded Suspension Pattern==== | ||

In this pattern, an operation on an object requires not only a lock but also a condition to be met before the operation is executed [14]. So as the name suggests, the execution is "suspended" until the "guard" or condition is satisfied. | In this pattern, an operation on an object requires not only a lock but also a condition to be met before the operation is executed [14]. So as the name suggests, the execution is "suspended" until the "guard" or condition is satisfied [14]. | ||

Because it is blocking, there is a limitation here such that this pattern is only used when the developer knows that a method call will only be suspended for a finite and reasonable period of time because if a method call is suspended for too long, then the overall program will slow down or stop, waiting for the precondition to be satisfied [14]. In case the method in question is expected to run for long period of time then | Because it is blocking, there is a limitation here such that this pattern is only used when the developer knows that a method call will only be suspended for a finite and reasonable period of time because if a method call is suspended for too long, then the overall program will slow down or stop, waiting for the precondition to be satisfied [14]. In case the method in question is expected to run for a long period of time then the balking pattern may be preferred here [14]. | ||

An example of Guarded Suspension [15]: | An example of Guarded Suspension [15]: | ||

| Line 67: | Line 69: | ||

} | } | ||

Another example of this pattern may be explained with the help of the common data structure, a queue. A queue has get() and put() methods such that the get() methods will read the existing nodes of the queue and the put() method will add a node into the queue. Here, the get() method can have a guard with a condition that the method will only run if the queue is not empty. The put() method when called can notify the get() so that the guard may be removed since the queue is not empty [14]. | Another example of this pattern may be explained with the help of the common data structure, a [http://en.wikipedia.org/wiki/Queue_%28data_structure%29 queue] [14]. A queue has get() and put() methods such that the get() methods will read the existing nodes of the queue and the put() method will add a node into the queue. Here, the get() method can have a guard with a condition that the method will only run if the queue is not empty. The put() method when called can notify the get() so that the guard may be removed since the queue is not empty [14]. | ||

====Read/Write Lock Pattern==== | ====Read/Write Lock Pattern==== | ||

This pattern allows concurrent read access to an object but requires exclusive access for write operations [11]. This means that when an object is being written to, the object access will be blocked for all other read operations. | This pattern allows concurrent read access to an object but requires exclusive access for write operations [11]. This means that when an object is being written to, the object access will be blocked for all other read operations. | ||

The Java module ReadWriteLock is a good example of this pattern. It maintains a pair of associated locks, one for the read-only operations and one for writing so that the read lock may be held simultaneously by multiple reader threads as long as there are no writers however, the write lock is exclusive [12]. | The Java module [http://java.sun.com/j2se/1.5.0/docs/api/java/util/concurrent/locks/ReadWriteLock.html ReadWriteLock] is a good example of this pattern [12]. It maintains a pair of associated locks, one for the read-only operations and one for writing so that the read lock may be held simultaneously by multiple reader threads as long as there are no writers however, the write lock is exclusive [12]. | ||

An example of the read/write locking in Java [13]: | An example of the read/write locking in Java [13]: | ||

| Line 88: | Line 90: | ||

====Monitor Pattern==== | ====Monitor Pattern==== | ||

In this pattern, an object may be used by multiple threads using mutual exclusion such that at any time only a single thread will be executing on that object [16]. This simplifies the code complexity by removing the need to parallelize the code between the multiple threads. Monitors also provide a mechanism for threads to temporarily give up exclusive access, in order to wait for some condition to be met, before regaining exclusive access and resuming their task or have a mechanism for signaling other threads that such conditions have been met [16]. | In this pattern, an object may be used by multiple threads using [http://en.wikipedia.org/wiki/Mutual_exclusion mutual exclusion] such that at any time only a single thread will be executing on that object [16]. This simplifies the code complexity by removing the need to parallelize the code between the multiple threads. Monitors also provide a mechanism for threads to temporarily give up exclusive access, in order to wait for some condition to be met, before regaining exclusive access and resuming their task or have a mechanism for signaling other threads that such conditions have been met [16]. | ||

An example of a monitor for performing bank transactions [16]: | An example of a monitor for performing bank transactions [16]: | ||

| Line 106: | Line 108: | ||

} | } | ||

} | } | ||

In the above example, even when multiple threads are executing, only one thread has control of the monitor. So before running any of the above class methods, a thread must wait for any other thread to finish its execution of a method. Without this mutual exclusion mechanism in this case, the transactions between threads will cause the severe errors in the bank account data [16]. | In the above example, even when multiple threads are executing, only one thread has control of the monitor. So before running any of the above class methods, a thread must wait for any other thread to finish its execution of a method. Without this mutual exclusion mechanism in this case, the transactions between threads will cause the severe errors in the bank account data [16]. | ||

====Active Object==== | ====Active Object==== | ||

The Active Object pattern decouples method execution from method invocation in order to simplify synchronized access to an object that resides in its own thread of control so that one or more independent threads can access data modeled as a single object [8]. | The Active Object pattern decouples method execution from method invocation in order to simplify synchronized access to an object that resides in its own thread of control so that one or more independent threads can access data modeled as a single object [8]. | ||

This pattern is commonly used in distributed systems requiring multi-threaded servers and in client applications, such as windowing systems and network browsers which employ active objects to simplify concurrent, asynchronous network operations [8]. | This pattern is commonly used in distributed systems requiring multi-threaded servers and in client applications, such as [http://en.wikipedia.org/wiki/Windowing_system windowing systems] and [http://www2.krellinst.org/AiS/textbook/manual/lplans/unit4/lp_netres4.1.1.html network browsers] which employ active objects to simplify concurrent, asynchronous network operations [8]. | ||

This pattern consists of certain important components and describes the interactions between these components [8]: | This pattern consists of certain important components and describes the interactions between these components [8]: | ||

The Proxy component provides interface for clients with publicly accessible methods. The Scheduler component schedules requests to decide which request will be executed next. There is another interface which defines the method request on an active object. There also exists a list of pending requests from clients along with the implementation of the active object method. These inter-component interactions can be seen in the diagram below. | The ''Proxy'' component provides interface for clients with publicly accessible methods. The ''Scheduler'' component schedules requests to decide which request will be executed next. There is another interface which defines the ''method request'' on an active object. There also exists a list of pending requests from clients along with the implementation of the active object method. These inter-component interactions can be seen in the diagram below. | ||

[[Image:active.jpg|Class Diagram for Active Object [9]]] | [[Image:active.jpg|Class Diagram for Active Object [9]]] | ||

| Line 126: | Line 129: | ||

} | } | ||

====Thread Pool Pattern==== | |||

In this pattern, several different threads are created to execute tasks which are organized in the form of a queue such that the number of threads created is typically smaller than the number of tasks [19]. The threads will execute tasks from the queue independently such that as soon as a thread finishes a task it will request the next task in the queue so that multiple threads work on different tasks [19]. Once all the tasks are completed, the threads can either terminate or sleep until new tasks are added to the queue [19]. Now, the number of threads can be dynamic based on the number of waiting tasks and this number is a parameter that can be tuned to provide the best performance [20]. A typical thread pool is shown in the figure below: | |||

[[Image:thread_pool.png|Thread Pool [21]]] | |||

Consider the following example that uses this pattern: <br /> | |||

A web server can add threads if numerous web page requests come in and can remove threads when those requests taper down so that the cost of having a larger thread pool is increased resource usage [20]. | |||

==== | ====Scheduler Pattern==== | ||

As its name suggests, this pattern acts as a scheduler when multiple threads may want access to a single resource [17]. In such a case it becomes necessary to schedule these threads so that each gets access to the resource depending on some [http://oreilly.com/catalog/linuxkernel/chapter/ch10.html scheduling policy] such as [http://en.wikipedia.org/wiki/Round-robin_scheduling round-robin], [http://en.wikipedia.org/wiki/FIFO first come first served (FCFS)] or based on some other priority [17]. The right scheduling policy is very important so that none of the threads are starved (ie. each thread gets its share of access of the resource). | |||

The scheduler pattern uses an object that explicitly sequences waiting threads and provides a mechanism to implement a scheduling policy, but is independent of any specific scheduling policy — the policy is encapsulated in its own class and is reusable [18]. However, this adds significant overhead beyond that required to call a [http://mindprod.com/jgloss/synchronized.html synchronized method] [18]. | |||

| Line 159: | Line 159: | ||

[14] http://en.wikipedia.org/wiki/Guarded_suspension <br /> | [14] http://en.wikipedia.org/wiki/Guarded_suspension <br /> | ||

[15] http://web.cecs.pdx.edu/~antoy/Courses/Patterns/slides/GuardedSuspension.html <br /> | [15] http://web.cecs.pdx.edu/~antoy/Courses/Patterns/slides/GuardedSuspension.html <br /> | ||

[16] http://en.wikipedia.org/wiki/Monitor_%28synchronization%29 | [16] http://en.wikipedia.org/wiki/Monitor_%28synchronization%29 <br /> | ||

[17] http://c2.com/cgi/wiki?SchedulerPattern | [17] http://c2.com/cgi/wiki?SchedulerPattern <br /> | ||

[18] http://en.wikipedia.org/wiki/Scheduler_pattern | [18] http://en.wikipedia.org/wiki/Scheduler_pattern <br /> | ||

[19] http://threadpool.sourceforge.net/design/pattern.html | [19] http://threadpool.sourceforge.net/design/pattern.html <br /> | ||

[20] http://www.bookrags.com/wiki/Thread_pool_pattern | [20] http://www.bookrags.com/wiki/Thread_pool_pattern <br /> | ||

[21] http:// | [21] http://en.wikipedia.org/wiki/Thread_pool_pattern <br /> | ||

[22] http://en.wikipedia.org/wiki/Barrier_%28computer_science%29 <br /> | |||

[23] http://www.cs.usfca.edu/~parrt/course/601/lectures/threads.html | |||

Latest revision as of 21:21, 14 October 2009

Thread-Safe Programming and Concurrency Patterns

Introduction to Thread-Safe Programming

In computer programming, thread-safe describes a program portion or routine that can be called from multiple programming threads without unwanted interaction between the threads [1]. A piece of code is thread-safe if it functions correctly during simultaneous execution by multiple threads and in particular, it must satisfy the need for multiple threads to access the same shared data, and the need for a shared piece of data to be accessed by only one thread at any given time [2].

Here's an illustration of a thread unsafe scenario. Suppose that your application creates several threads, each of which makes a call to the same library routine such that this library routine accesses/modifies a global structure or location in memory then as each thread calls this routine it is possible that they may try to modify this global structure/memory location at the same time [4].

Two important questions arise:

- What are the consequences of running a thread-unsafe program? Thread programming errors may lead to unpredictable results like data errors possibly followed by fatal application exceptions. There may also be the problem of endless blocked threads due to race-conditions or deadlocks and all these errors are especially difficult to find but easy to introduce by inexperienced programmers [3].

- How do we ensure that a particular multi-threaded code is thread safe? Some ways to unsure thread safety are that concurrent threads use algorithms that cooperate with each other and confine the address of a shared object to one thread whenever an unasynchronized event is active [1].

Here's an example of some thread safe code. In the C language, local variables are dynamically allocated on the stack [5]. Therefore, any function that does not use static data or other shared resources is trivially thread-safe, as in the following example [5]:

/* thread-safe function */

int diff(int x, int y)

{

int delta;

delta = y - x;

if (delta < 0)

delta = -delta;

return delta;

}

Another approach employed to make the multi-threaded code safer is the use of the Barrier. In parallel computing, a barrier is a type of synchronization method. A barrier for a group of threads or processes in the source code means any thread/process must stop at this point and cannot proceed until all other threads/processes reach this barrier [22]. For example, a parallel do loop in OpenMP will not be allowed to continue on any thread until the last iteration is completed. This is in case the program relies on the result of the loop immediately after its completion [22].

Concurrency Patterns

Definitions

- Design Patterns

- In software engineering, a design pattern is a general reusable solution to a commonly occurring problem in software design and not a finished design that can be transformed directly into code [6]. It is a description or template for how to solve a problem that can be used in many different situations and typically shows relationships and interactions between classes or objects, without specifying the final application classes or objects that are involved [6].

- Concurrency Pattern

- To ensure the above discussed thread safe programming in a multi-threaded programming paradigm where the focus is on the interactions between tasks that execute in parallel, we need to design applications using design patterns called concurrency patterns [7].

Some Important Concurrency Patterns

Balking Pattern

The balking pattern only executes an action on an object when the object is in a particular state so if we are trying to access an object in a state which differs from the expected state, the object will "balk" (ie. refuse to proceed) [10].

For example, in Java, an IllegalStateException might be thrown in this case [10]. To avoid such an exception from occurring, the object can attempt to acquire write locks which will time out if the object is not in the right state and if such an object’s method is called then the method should return without doing anything [11].

An example of the Balking pattern in Java [10]:

public class Example{

private boolean jobInProgress = false;

public void job(){

synchronized(this){

if(jobInProgress){

return;

}

jobInProgress = true;

}

}

}

In the above code, "synchronized" is used to check the state so that if multiple threads call the "job" method, only one of those will be able to proceed and all others will return as one job is already in progress.

Guarded Suspension Pattern

In this pattern, an operation on an object requires not only a lock but also a condition to be met before the operation is executed [14]. So as the name suggests, the execution is "suspended" until the "guard" or condition is satisfied [14]. Because it is blocking, there is a limitation here such that this pattern is only used when the developer knows that a method call will only be suspended for a finite and reasonable period of time because if a method call is suspended for too long, then the overall program will slow down or stop, waiting for the precondition to be satisfied [14]. In case the method in question is expected to run for a long period of time then the balking pattern may be preferred here [14].

An example of Guarded Suspension [15]:

synchronized void guardedMethod () {

while (! precondition) { wait (); }

... // code requiring precondition

}

Another example of this pattern may be explained with the help of the common data structure, a queue [14]. A queue has get() and put() methods such that the get() methods will read the existing nodes of the queue and the put() method will add a node into the queue. Here, the get() method can have a guard with a condition that the method will only run if the queue is not empty. The put() method when called can notify the get() so that the guard may be removed since the queue is not empty [14].

Read/Write Lock Pattern

This pattern allows concurrent read access to an object but requires exclusive access for write operations [11]. This means that when an object is being written to, the object access will be blocked for all other read operations.

The Java module ReadWriteLock is a good example of this pattern [12]. It maintains a pair of associated locks, one for the read-only operations and one for writing so that the read lock may be held simultaneously by multiple reader threads as long as there are no writers however, the write lock is exclusive [12].

An example of the read/write locking in Java [13]:

public void setWriteLock(boolean lock) {

if (lock) {

rwLock.writeLock().lock();

WaitTimerTask job = new WaitTimerTask(Thread.currentThread(),false);

waitTimer.schedule(job, maxWait);

} else {

rwLock.writeLock().unlock();

WaitTimerTask job = lockTaskStack.get().pop();

job.cancel();

}

}

Monitor Pattern

In this pattern, an object may be used by multiple threads using mutual exclusion such that at any time only a single thread will be executing on that object [16]. This simplifies the code complexity by removing the need to parallelize the code between the multiple threads. Monitors also provide a mechanism for threads to temporarily give up exclusive access, in order to wait for some condition to be met, before regaining exclusive access and resuming their task or have a mechanism for signaling other threads that such conditions have been met [16].

An example of a monitor for performing bank transactions [16]:

monitor class Account {

private int balance := 0

invariant balance >= 0

public method boolean withdraw(int amount)

{

if amount < 0 then error "Amount may not be negative"

else if balance < amount then return false

else { balance := balance - amount ; return true }

}

public method deposit(int amount) {

if amount < 0 then error "Amount may not be negative"

else balance := balance + amount

}

}

In the above example, even when multiple threads are executing, only one thread has control of the monitor. So before running any of the above class methods, a thread must wait for any other thread to finish its execution of a method. Without this mutual exclusion mechanism in this case, the transactions between threads will cause the severe errors in the bank account data [16].

Active Object

The Active Object pattern decouples method execution from method invocation in order to simplify synchronized access to an object that resides in its own thread of control so that one or more independent threads can access data modeled as a single object [8]. This pattern is commonly used in distributed systems requiring multi-threaded servers and in client applications, such as windowing systems and network browsers which employ active objects to simplify concurrent, asynchronous network operations [8].

This pattern consists of certain important components and describes the interactions between these components [8]: The Proxy component provides interface for clients with publicly accessible methods. The Scheduler component schedules requests to decide which request will be executed next. There is another interface which defines the method request on an active object. There also exists a list of pending requests from clients along with the implementation of the active object method. These inter-component interactions can be seen in the diagram below.

A java example of the Scheduler component of this pattern [9]:

public class Scheduler

{

private List act_list = new ArrayList();

private synchronized IMethodRequest get()

public synchronized void insert(IMethodRequest mr)

private class Dispatcher extends Thread

}

Thread Pool Pattern

In this pattern, several different threads are created to execute tasks which are organized in the form of a queue such that the number of threads created is typically smaller than the number of tasks [19]. The threads will execute tasks from the queue independently such that as soon as a thread finishes a task it will request the next task in the queue so that multiple threads work on different tasks [19]. Once all the tasks are completed, the threads can either terminate or sleep until new tasks are added to the queue [19]. Now, the number of threads can be dynamic based on the number of waiting tasks and this number is a parameter that can be tuned to provide the best performance [20]. A typical thread pool is shown in the figure below:

Consider the following example that uses this pattern:

A web server can add threads if numerous web page requests come in and can remove threads when those requests taper down so that the cost of having a larger thread pool is increased resource usage [20].

Scheduler Pattern

As its name suggests, this pattern acts as a scheduler when multiple threads may want access to a single resource [17]. In such a case it becomes necessary to schedule these threads so that each gets access to the resource depending on some scheduling policy such as round-robin, first come first served (FCFS) or based on some other priority [17]. The right scheduling policy is very important so that none of the threads are starved (ie. each thread gets its share of access of the resource).

The scheduler pattern uses an object that explicitly sequences waiting threads and provides a mechanism to implement a scheduling policy, but is independent of any specific scheduling policy — the policy is encapsulated in its own class and is reusable [18]. However, this adds significant overhead beyond that required to call a synchronized method [18].

References

[1] http://searchcio-midmarket.techtarget.com/sDefinition/0,,sid183_gci331590,00.html

[2] http://en.wikipedia.org/wiki/Thread_safety

[3] http://www.multicoreinfo.com/2009/03/thread-safe/

[4] http://www.cdac.in/html/events/beta-test/PEEP-2008-web-page/peep-2008-handson-webpage-mode-1/pthreads-peep-2008/pthreads-peep-2008-overview.html

[5] http://publib.boulder.ibm.com/infocenter/systems/index.jsp?topic=/com.ibm.aix.genprogc/doc/genprogc/writing_reentrant_thread_safe_code.htm

[6] http://en.wikipedia.org/wiki/Design_pattern_%28computer_science%29

[7] http://en.wikipedia.org/wiki/Concurrency_pattern

[8] http://www.cs.wustl.edu/~schmidt/PDF/Act-Obj.pdf

[9] http://www.cs.uu.nl/docs/vakken/no/active_object_pattern.pdf

[10] http://en.wikipedia.org/wiki/Balking_pattern

[11] http://www.mindspring.com/~mgrand/pattern_synopses.htm

[12] http://java.sun.com/j2se/1.5.0/docs/api/java/util/concurrent/locks/ReadWriteLock.html

[13] http://today.java.net/pub/a/today/2007/06/28/extending-reentrantreadwritelock.html

[14] http://en.wikipedia.org/wiki/Guarded_suspension

[15] http://web.cecs.pdx.edu/~antoy/Courses/Patterns/slides/GuardedSuspension.html

[16] http://en.wikipedia.org/wiki/Monitor_%28synchronization%29

[17] http://c2.com/cgi/wiki?SchedulerPattern

[18] http://en.wikipedia.org/wiki/Scheduler_pattern

[19] http://threadpool.sourceforge.net/design/pattern.html

[20] http://www.bookrags.com/wiki/Thread_pool_pattern

[21] http://en.wikipedia.org/wiki/Thread_pool_pattern

[22] http://en.wikipedia.org/wiki/Barrier_%28computer_science%29

[23] http://www.cs.usfca.edu/~parrt/course/601/lectures/threads.html

![Thread Barrier [23]](/images/e/ed/Thread_barrier.jpg)

![Class Diagram for Active Object [9]](/images/f/f0/Active.jpg)

![Thread Pool [21]](/images/5/5e/Thread_pool.png)