CSC/ECE 506 Fall 2007/wiki1 4 a1



== Architectural Trends ==

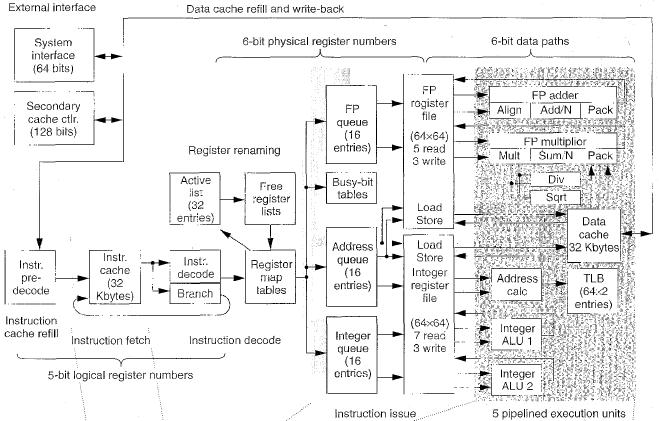

[[Image:MIPSR10000.jpg|thumb|right|300px|Fig.1 MIPS R10000 Block Diagram (From Fig. 2 of [3])]]

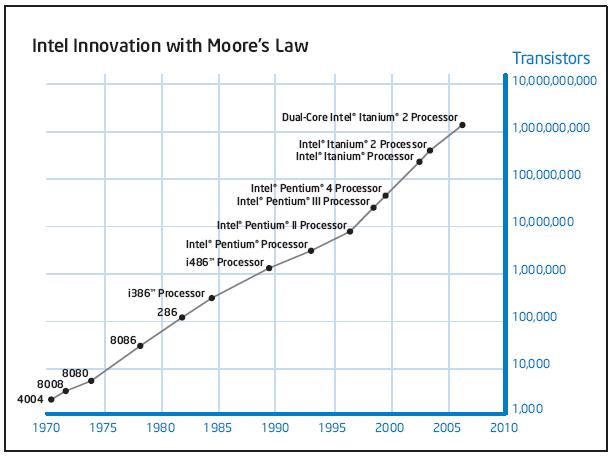

[[Image:IntelMoorsLaw.jpg|thumb|right|300px|Fig.2 The number of transistors on Intel chips]]

Feature size is the minimum size of a transistor, or the width of a wire used to connect transistors and other circuit components. Feature sizes decreased dramatically, from 10 microns in 1971 to 0.18 microns in 2001. These advances in integrated-circuit processes have allowed one billion transistors to be integrated on a single chip and have enabled more complex and faster microprocessor architectures, which have evolved toward increasing parallelism: [http://en.wikipedia.org/wiki/Instruction_level_parallelism ILP] and [http://en.wikipedia.org/wiki/Thread_level_parallelism TLP]. While superscalar processors have become dominant, several other architectures have been proposed over the past 10 years, as in earlier decades. Building on the superscalar design, VLIW, superspeculative processors, simultaneous multithreading, chip multiprocessors, and other approaches have been proposed and explored. These techniques aim to overcome the control and data hazards that become overwhelming with deep pipelining and multiple issue, and to maximize computing throughput by exploiting TLP.
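The scaling described above can be checked with a little arithmetic. This is a minimal sketch that assumes idealized scaling, in which transistor density grows with the inverse square of the feature size; real chips deviate from this because wiring, design style, and die size also change.

```python
# Feature sizes quoted in the text, in microns
old_um, new_um = 10.0, 0.18

# Linear shrink factor of a feature
linear_shrink = old_um / new_um        # about 55.6x

# Idealized density gain: a feature's area shrinks with the square of its size
density_gain = linear_shrink ** 2      # about 3,086x more transistors per unit area

print(f"linear shrink: {linear_shrink:.1f}x, ideal density gain: {density_gain:.0f}x")
```

Under this idealized assumption, the same die area holds roughly three thousand times as many transistors in 2001 as in 1971.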

For example, the MIPS R10000 is an out-of-order superscalar processor with 6.8 million transistors on a 16.64 mm x 17.934 mm (298 mm<sup>2</sup>) die, fabricated in a 0.35 µm process. It fetches four instructions per cycle and has six pipelines in total: five execution pipelines and one pipeline for instruction fetch and decode. The execution pipelines fall into three kinds: integer, floating-point, and load/store.
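As a quick sanity check of the R10000 figures above, the die area follows from its two dimensions, and dividing the transistor count by that area gives an average transistor density. Treating the die as a simple rectangle is an assumption of this sketch.

```python
# R10000 figures quoted in the text
width_mm, height_mm = 16.64, 17.934
transistors = 6.8e6

# Die area, assuming a rectangular die
area_mm2 = width_mm * height_mm           # about 298.4 mm^2, matching the quoted 298 mm^2

# Average density over the whole die (logic and caches together)
density_per_mm2 = transistors / area_mm2  # roughly 22,800 transistors per mm^2

print(f"area = {area_mm2:.1f} mm^2, density = {density_per_mm2:,.0f} transistors/mm^2")
```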

----

=== VLIW ===

VLIW (Very Long Instruction Word) is one way to exploit ILP in multiple-issue processors. Multiple-issue processors come in two basic flavors: superscalar and VLIW. The key difference between them lies in how instructions are scheduled. Superscalar processors issue a varying number of instructions per clock, scheduled either statically or dynamically, whereas VLIW processors issue instructions that have been statically scheduled by the compiler. Both superscalar and VLIW processors have multiple, independent functional units.

A VLIW compiler analyzes the program and groups multiple independent operations into one large instruction. A VLIW processor issues a fixed number of operations, formatted either as one large instruction or as a fixed instruction packet in which the parallelism among the operations is explicitly indicated.
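The compiler's job of packing independent operations into fixed-width instruction words can be sketched as a greedy scheduler. This is a minimal, hypothetical sketch, not how any real VLIW compiler works: it assumes every operation takes one cycle, any slot can hold any operation, and each register is written at most once, so only read-after-write dependences matter. The `Op` type, the `bundle` function, and the register names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Op:
    name: str
    dest: Optional[str]   # register written, or None (e.g. a store)
    srcs: tuple = ()      # registers read


def bundle(ops, width):
    """Greedily pack independent ops into VLIW words of `width` slots."""
    produced = {op.dest for op in ops if op.dest}
    available = set()     # registers written by ops in earlier words
    pending = list(ops)
    words = []
    while pending:
        word = []
        for op in pending:
            if len(word) == width:
                break
            # An op may join this word only if all its inputs were produced earlier
            if all(src in available or src not in produced for src in op.srcs):
                word.append(op)
        if not word:
            raise ValueError("no op is ready; dependence cycle?")
        for op in word:
            pending.remove(op)
        available.update(op.dest for op in word if op.dest)
        # Unused slots are filled with no-ops, as in a fixed-format VLIW word
        words.append([op.name for op in word] + ["nop"] * (width - len(word)))
    return words


# Two copies of the x[i] = x[i] + s body (load, add, store), registers renamed
body = [
    Op("L.D F0", "F0", ("R1",)),
    Op("L.D F6", "F6", ("R1",)),
    Op("ADD.D F4", "F4", ("F0", "F2")),
    Op("ADD.D F8", "F8", ("F6", "F2")),
    Op("S.D F4", None, ("F4", "R1")),
    Op("S.D F8", None, ("F8", "R1")),
]
for word in bundle(body, 3):
    print(word)
```

With three slots per word, the two loads share the first word, the two adds the second, and the two stores the third, each word carrying one `nop`; the empty slots illustrate why VLIW compilers unroll loops aggressively to find enough independent work.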

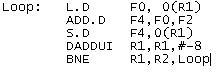

To look inside VLIW operation, consider the following example loop from [1], which adds a scalar s to each element of the array x:

for (i=1000; i>0; i=i-1) x[i] = x[i] + s;

The straightforward MIPS code for this loop looks like this:

[[Image:simpleMIPS.jpg]] | |||

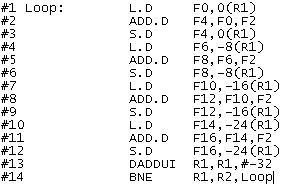

If the loop is unrolled and the code is scheduled, the result is:

[[Image:loopunrollMIPS.jpg]]

The scheduled loop body, which now processes four array elements per iteration, takes 14 cycles (3.5 cycles per element).
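The loop-unrolling transformation itself can be sketched in Python. Python will not run faster for it; the point is the shape of the code. This minimal sketch assumes an unroll factor of four and adds a clean-up loop for trip counts that are not a multiple of four; the function names are hypothetical.

```python
def add_scalar(x, s, n):
    """Original loop: for (i = n; i > 0; i = i - 1) x[i] = x[i] + s;"""
    i = n
    while i > 0:
        x[i] = x[i] + s
        i = i - 1


def add_scalar_unrolled4(x, s, n):
    """Same loop unrolled by four: one counter update and one loop test per four elements."""
    i = n
    while i >= 4:
        # The four updates are independent, so a scheduler could overlap them
        x[i] = x[i] + s
        x[i - 1] = x[i - 1] + s
        x[i - 2] = x[i - 2] + s
        x[i - 3] = x[i - 3] + s
        i = i - 4
    while i > 0:  # clean-up loop when n is not divisible by four
        x[i] = x[i] + s
        i = i - 1


# x[1]..x[1000] are updated, as in the C loop; x[0] is untouched
a = [float(k) for k in range(1001)]
b = list(a)
add_scalar(a, 2.5, 1000)
add_scalar_unrolled4(b, 2.5, 1000)
assert a == b
```

Unrolling removes three of every four loop-overhead instructions and exposes four independent load/add/store chains, which is what allows the scheduled version to hide latencies.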

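If VLIW instructions are used instead, the result is:

[[Image:VLIW.jpg]]

The VLIW loop, unrolled to process seven elements per iteration, takes 9 cycles (about 1.29 cycles per element), assuming five functional units: two for memory references, two for floating-point operations, and one for integer operations or branches.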
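A fair comparison of the two schedules normalizes the cycle counts by the number of array elements each loop iteration handles. This sketch assumes, as in the textbook version of this example [1], four elements per iteration for the unrolled-and-scheduled code and seven for the VLIW code; exact fractions avoid rounding in the comparison.

```python
from fractions import Fraction


def cycles_per_element(cycles, elements_per_iteration):
    """Cycles spent per array element for one pass through the loop body."""
    return Fraction(cycles, elements_per_iteration)


unrolled = cycles_per_element(14, 4)  # 7/2 = 3.5 cycles per element
vliw = cycles_per_element(9, 7)       # 9/7, about 1.29 cycles per element
speedup = unrolled / vliw             # 49/18, about 2.72x

print(float(unrolled), round(float(vliw), 2), round(float(speedup), 2))
```

Comparing raw cycle counts (14 versus 9) understates the VLIW advantage, because the VLIW loop body also does more work per iteration.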

The MIPS R10000 offers a useful contrast. It also has multiple integer functional units (two), but as a dynamically scheduled superscalar processor it finds independent instructions in hardware at run time, rather than receiving them already grouped into one instruction by the compiler. Examples of processors with VLIW-style instruction issue include the Intel i860 and the Philips TriMedia.

----

=== Multi-threading ===

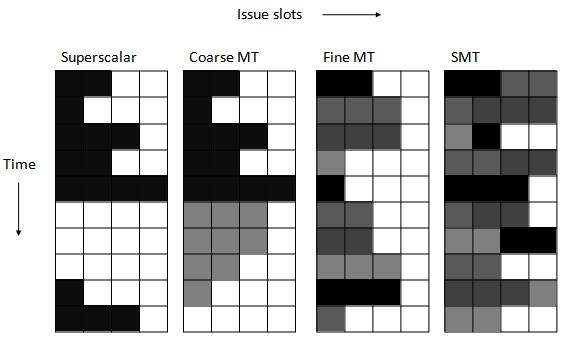

[[Image:SMTEx.jpg|thumb|right|300px|Fig.3 Four different approaches of using issue slots in superscalar processor (Redrawn from Fig 6.44 of [1])]]

Multithreading exploits thread-level parallelism (TLP) within a single processor. It allows multiple threads to share the functional units of a single processor in an overlapping fashion. To support this sharing, the processor must duplicate the independent state of each thread: a separate register file, a separate PC, a separate page table, and so on. In addition, the processor must be able to switch between threads quickly.
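The replicated per-thread state can be pictured as one context record per hardware thread, with a thread switch reduced to selecting a different record. This is a simplified, hypothetical model: the class names, the 32-entry register file, and the single page-table base field are assumptions for illustration, and the shared functional units are deliberately not part of the context.

```python
from dataclasses import dataclass, field


@dataclass
class ThreadContext:
    """State replicated for each hardware thread (simplified)."""
    pc: int = 0
    registers: list = field(default_factory=lambda: [0] * 32)  # assumed 32 registers
    page_table_base: int = 0


class MultithreadedCore:
    """Functional units are shared; only the contexts are duplicated."""

    def __init__(self, num_threads):
        self.contexts = [ThreadContext() for _ in range(num_threads)]
        self.active = 0

    def switch_to(self, tid):
        # A fast switch just selects another context; nothing is saved to memory
        self.active = tid

    @property
    def ctx(self):
        return self.contexts[self.active]


core = MultithreadedCore(2)
core.ctx.pc, core.ctx.registers[1] = 100, 42   # thread 0 runs
core.switch_to(1)
core.ctx.registers[1] = 7                      # thread 1 writes its own copy of register 1
core.switch_to(0)
print(core.ctx.pc, core.ctx.registers[1])
```

Because each thread keeps its own register file and PC, switching back to thread 0 finds its state intact, which is what makes a quick hardware thread switch possible.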

There are two basic approaches to multithreading: fine-grained and coarse-grained. Fine-grained multithreading switches between threads on each instruction, interleaving the execution of multiple threads; to do so, the processor must be able to switch threads on every clock cycle. Its advantage is that it can hide stalls, because instructions from other threads can execute while one thread is stalled. Its disadvantage is that it slows down individual threads: a thread whose next instruction is ready to execute may still be delayed by instructions from other threads.
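Fine-grained interleaving can be illustrated with a tiny cycle-by-cycle simulation. In this hypothetical model, each thread is a list of `(label, stall)` pairs, where `stall` is the number of extra cycles the thread must wait after issuing that instruction; each cycle the processor issues from the next thread in round-robin order that is ready, skipping stalled threads.

```python
def fine_grained(threads):
    """Issue one instruction per cycle, rotating among ready threads."""
    n = len(threads)
    pos = [0] * n        # next instruction index per thread
    ready_at = [0] * n   # first cycle at which each thread may issue again
    timeline = []
    cycle, last = 0, -1
    while any(pos[t] < len(threads[t]) for t in range(n)):
        issued = None
        for k in range(1, n + 1):
            t = (last + k) % n   # round-robin starting after the last issuer
            if pos[t] < len(threads[t]) and ready_at[t] <= cycle:
                label, stall = threads[t][pos[t]]
                pos[t] += 1
                ready_at[t] = cycle + 1 + stall
                issued, last = f"T{t}:{label}", t
                break
        timeline.append(issued or "idle")
        cycle += 1
    return timeline


# Thread 1's first instruction stalls for two extra cycles
print(fine_grained([[("a", 0), ("b", 0)], [("x", 2), ("y", 0)]]))
```

Thread 0 issues in cycles 0 and 2 instead of 0 and 1, showing the slowdown of an individual thread, while thread 1's stall is partly covered by thread 0's work, so both threads finish in 5 cycles instead of the 6 they would need back to back.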

Coarse-grained multithreading switches threads only on costly stalls, such as level 2 cache misses. This policy avoids unnecessary thread switches, so individual threads are much less likely to be slowed down than with fine-grained multithreading. However, every switch has a cost: because the processor issues instructions from only one thread at a time, the pipeline must be emptied or frozen when a stall occurs, and the new thread that replaces the stalled one must fill the pipeline before its instructions can complete. This pipeline start-up time is the cost of switching.
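The coarse-grained policy can be sketched with the same hypothetical `(label, stall)` thread model. Here the processor stays on one thread, simply waiting out short stalls, and switches only when an instruction causes a stall of at least `threshold` cycles; each such switch inserts `refill` bubble cycles for the pipeline start-up cost. For simplicity, switching away from a finished thread is treated as free.

```python
def coarse_grained(threads, threshold, refill):
    """Run one thread until a costly stall, then switch and pay a refill penalty."""
    n = len(threads)
    pos = [0] * n
    ready_at = [0] * n
    timeline = []
    cycle, cur = 0, 0

    def other_with_work(t):
        for k in range(1, n):
            u = (t + k) % n
            if pos[u] < len(threads[u]):
                return u
        return None

    while any(pos[t] < len(threads[t]) for t in range(n)):
        if pos[cur] == len(threads[cur]):
            cur = other_with_work(cur)      # current thread finished
            continue
        if ready_at[cur] > cycle:
            timeline.append("stall")        # short stall: wait rather than switch
            cycle += 1
            continue
        label, stall = threads[cur][pos[cur]]
        pos[cur] += 1
        timeline.append(f"T{cur}:{label}")
        cycle += 1
        ready_at[cur] = cycle + stall
        nxt = other_with_work(cur)
        if stall >= threshold and nxt is not None:
            cur = nxt
            timeline.extend(["refill"] * refill)  # pipeline start-up cost
            cycle += refill
    return timeline


# Instruction b causes a costly (5-cycle) stall, so the core switches to thread 1
print(coarse_grained([[("a", 0), ("b", 5), ("c", 0)], [("x", 0), ("y", 0)]], threshold=3, refill=2))
```

The two `refill` entries are the switching cost described above; in exchange, thread 1's instructions run back to back without being interleaved with thread 0.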

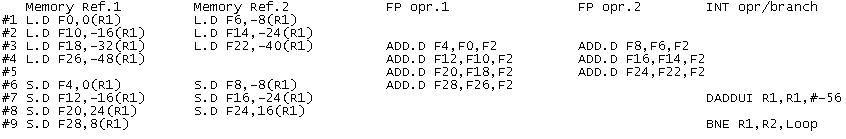

Simultaneous multithreading (SMT) is a variation on multithreading that uses the resources of a multiple-issue, dynamically scheduled processor to exploit TLP while also exploiting ILP: instructions from several threads can be issued in the same clock cycle, filling issue slots that a single thread would leave empty. Figure 3 compares how issue slots are used in a superscalar processor without multithreading and with coarse-grained multithreading, fine-grained multithreading, and SMT.
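The issue-slot argument of Figure 3 can be made concrete with a little counting. In this hypothetical model, `ready` lists how many independent instructions a thread has available in each cycle; a superscalar processor fills slots from one thread only, while SMT fills the same slots from all threads at once. The issue width and the per-cycle numbers are made up for illustration, and instructions that do not fit in a cycle are simply ignored.

```python
def slots_used_superscalar(ready, width):
    """Issue slots filled when only one thread can issue."""
    return sum(min(width, r) for r in ready)


def slots_used_smt(ready_per_thread, width):
    """Issue slots filled when every thread can issue in the same cycle."""
    return sum(min(width, sum(cycle)) for cycle in zip(*ready_per_thread))


width = 4
thread0 = [2, 0, 1, 3]   # independent instructions available per cycle
thread1 = [1, 2, 0, 1]
total_slots = width * len(thread0)

print(slots_used_superscalar(thread0, width), "of", total_slots)       # single thread
print(slots_used_smt([thread0, thread1], width), "of", total_slots)   # SMT
```

With these numbers, the single-threaded superscalar fills 6 of 16 slots, while SMT fills 10, because thread 1's instructions occupy slots that thread 0 leaves empty, including the cycle in which thread 0 has nothing to issue.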

----

=== Multi-core ===

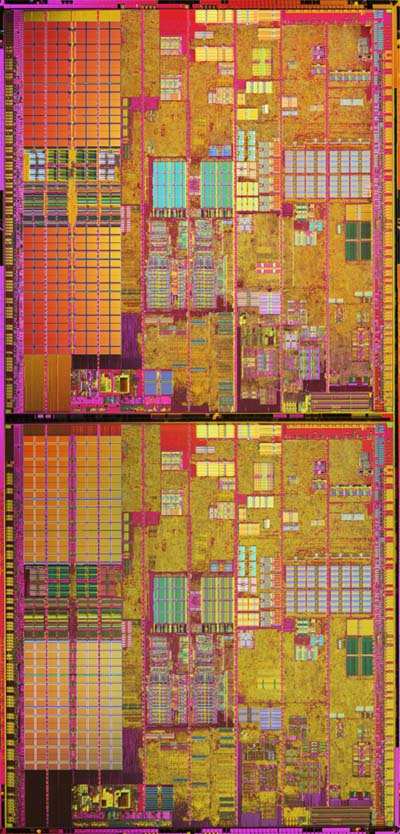

[[Image:Smithfield_die_med.jpg|thumb|right|80px|Fig.4 Intel® Pentium® processor Extreme Edition die [7]]]

Multi-core CPUs have multiple CPU cores on a single die. The cores are connected to each other through a shared L2 or L3 cache, or through on-die glue logic such as a switch or bus. All the cores on a die share the interconnect components used to interface with other processors and the rest of the system. These components include the FSB (front-side bus), the memory controller, a cache-coherent link to other processors, and a non-coherent link to the southbridge and I/O devices. The main advantages of multi-core chips are power efficiency and simpler supporting circuitry. Because multiple processors are packed into a single die, the glue logic required to connect them is packed onto the same die. This saves power and simplifies the auxiliary circuitry compared with separately packaged processors coupled through circuits on a printed circuit board (PCB).

The Intel Pentium Extreme Edition, Core Duo, and Core 2 Duo are examples of multi-core processors.

The Intel Xeon X7300 series has four cores in a single package and is built on a 65 nm process.

----

=== Speculative Execution ===

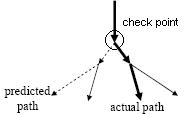

As processors try to extract more ILP, managing control dependences becomes both more important and more burdensome. To reduce stalls caused by branches, branch prediction is applied at the instruction fetch stage. However, a processor that executes multiple instructions per clock needs more than accurate prediction alone. To speculate is to act on these predictions: to fetch and execute instructions from the predicted path.[12]
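The predictions that speculation acts on come from a branch predictor. As one common example (not a scheme this article specifies), a 2-bit saturating counter predicts "taken" in its two upper states and needs two consecutive mispredictions to change its prediction. This is a minimal sketch of a single counter; a real predictor keeps a table of such counters indexed by branch address.

```python
class TwoBitPredictor:
    """2-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, state=2):   # start in the weakly-taken state
        self.state = state

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        # Move one step toward the actual outcome, saturating at 0 and 3
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def count_correct(outcomes, predictor):
    """Predict each branch, then train the counter with the real outcome."""
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct


# A loop branch taken three times, then not taken on loop exit, run twice
outcomes = [True, True, True, False] * 2
print(count_correct(outcomes, TwoBitPredictor()), "of", len(outcomes))
```

The counter mispredicts only the loop-exit branch each time (6 of 8 correct), because a single not-taken outcome moves it from strongly taken to weakly taken without flipping its prediction.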

[[Image:speculative.jpg]] | |||

Under speculative execution, instructions are fetched, issued, and executed as if branch predictions were always correct; when a misprediction occurs, a recovery mechanism handles it. When the processor encounters a branch, it predicts the branch target, follows the predicted path, and takes a checkpoint. The checkpoint saves a copy of the information needed for recovery, such as the register file, control information, and the alternative branch target. If the prediction turns out to be correct, the processor reclaims the checkpoint storage for use by later predicted branches. If the prediction is incorrect, the processor restores the execution state from the corresponding checkpoint. Examples of processors that use speculative execution include the PowerPC 603/604/G3/G4, MIPS R10000/R12000, Intel Pentium II/III/4, Alpha 21264, and AMD K5/K6/Athlon.
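The checkpoint-and-recover sequence described above can be sketched as a toy model in which the only architectural state is a register dictionary. The class and method names and the branch identifiers are hypothetical. On a misprediction, checkpoints taken after the mispredicted branch are discarded too, since they belong to the wrong path.

```python
class SpeculativeCore:
    """Toy model of checkpoint-based recovery; state is just a register dict."""

    def __init__(self):
        self.regs = {}
        self.checkpoints = {}   # branch id -> (saved registers, alternate target), in program order

    def branch(self, branch_id, alternate_target):
        # Predict, keep going down the predicted path, and save a checkpoint
        self.checkpoints[branch_id] = (dict(self.regs), alternate_target)

    def execute(self, reg, value):
        self.regs[reg] = value   # speculative update

    def resolve(self, branch_id, predicted_correctly):
        """Return None if the prediction was right, else the target to refetch from."""
        if predicted_correctly:
            del self.checkpoints[branch_id]   # reclaim the checkpoint for later branches
            return None
        ids = list(self.checkpoints)
        for later in ids[ids.index(branch_id) + 1:]:
            del self.checkpoints[later]       # younger checkpoints are on the wrong path
        saved, alternate = self.checkpoints.pop(branch_id)
        self.regs = saved                     # restore state from the checkpoint
        return alternate


core = SpeculativeCore()
core.execute("r1", 1)
core.branch("b1", alternate_target="L2")
core.execute("r1", 5)                  # executed speculatively past b1
print(core.resolve("b1", False), core.regs)
```

Resolving `b1` as mispredicted returns the alternate target `L2` and restores `r1` to its pre-branch value of 1.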

----

== Updated Figures 1.8 & 1.9 ==

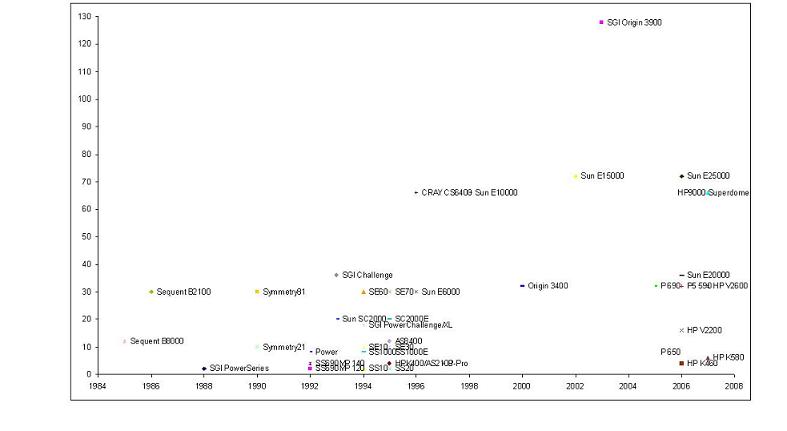

[[Image:fig18.jpg|frame|Figure 1.8 Number of processors in fully configured commercial bus-based shared memory multiprocessors]]

Figure 1.8 of our textbook has been updated to include trends from 2000 to the present. The SGI Origin 3000 series was reintroduced as the Origin 3400 and Origin 3900 in 2000 and 2003, respectively. Sun introduced enterprise servers even more powerful than the E10000: the E15000 in 2002, and the E20000 and E25000 in 2006. HP's high-end HP 9000 Superdome servers, with 16, 32, and 64 processors, were released this year (2007).

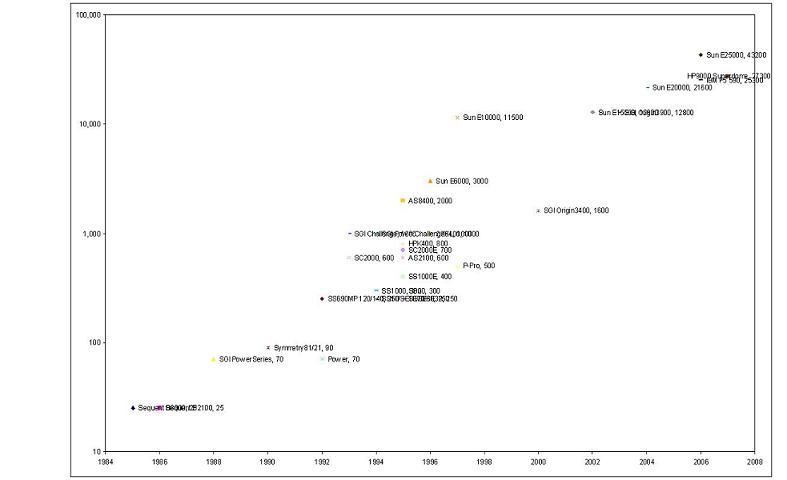

[[Image:fig19.jpg|frame|Figure 1.9 Bandwidth of the shared memory bus in commercial multiprocessors (y-axis on a log scale)]]

Figure 1.9 shows the shared-memory bus bandwidth of the servers introduced in Figure 1.8: the SGI Origin 3000 series, the Sun Enterprise 15K, 20K, and 25K, the IBM p5 590, and the HP 9000 Superdome. For the Sun E25K, the available bandwidth is 43.2 GB/s, and the aggregate bandwidth exceeds 100 GB/s. The Origin 3900 has 12.8 GB/s of bandwidth and an aggregate bandwidth of 172.8 GB/s.

----

== References ==

[1] John L. Hennessy and David A. Patterson, "Computer Architecture: A Quantitative Approach," 3rd ed., Morgan Kaufmann, CA, USA.

[2] C. E. Kozyrakis and D. A. Patterson, "A new direction for computer architecture research," Computer, vol. 31, no. 11, IEEE, Nov. 1998, pp. 24-32.

[3] K. C. Yeager, "The MIPS R10000 Superscalar Microprocessor," IEEE Micro, vol. 16, no. 2, Apr. 1996, pp. 28-41.

[4] Geoff Koch, "Discovering Multi-Core: Extending the Benefits of Moore’s Law," Technology@Intel Magazine, Jul. 2005, pp. 1-6.

[5] Richard Low, "Microprocessor trends: multicore, memory, and power developments," Embedded Computing Design, Sep. 2005.

[6] Artur Klauser, "Trends in High-Performance Microprocessor Design," Telematik 1, 2001.

[7] http://www.intel.com and http://www.intel.com/pressroom/kits/pentiumee

[8] http://www.alimartech.com/9000_servers.htm

[9] http://www.sun.com/servers/index.jsp?gr0=cpu&fl0=cpu4&gr1=

[10] http://www.sgi.com/pdfs/3867.pdf

[11] http://www-03.ibm.com/systems/p/hardware/highend/590/index.html

[12] Eric Rotenberg, ECE721 Advanced Microarchitecture lecture notes, NCSU, 2007.

Latest revision as of 02:28, 11 September 2007
