E1929 Visualizations for Instructors

(→Team) |

No edit summary |

||

| (42 intermediate revisions by 3 users not shown) | |||

| Line 10: | Line 10: | ||

__TOC__ | __TOC__ | ||

== Problem Statement == | == Problem Statement == | ||

| Line 21: | Line 22: | ||

=== Old Charts === | === Old Charts === | ||

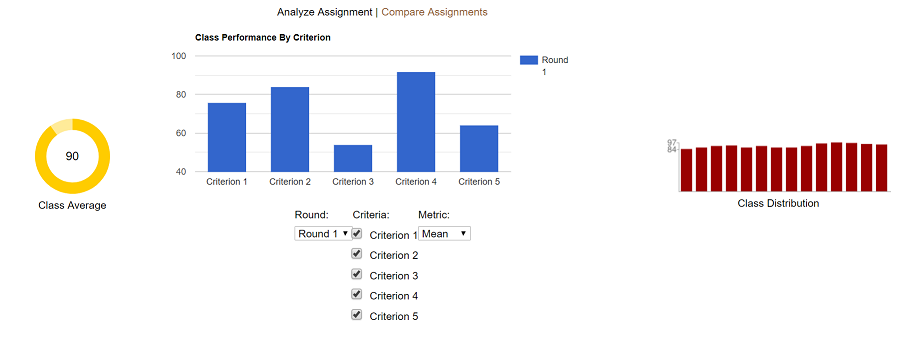

[[File:Existing_Assignment_Grades_Wed.png|frame|centre|'''Figure 1: Existing assignment grade charts - '''The existing assignment grade charts show the average grade for the assignment and the grade distribution. During this project, we aimed to add a third chart which shows the distribution for specific rubric criteria within the assignment.]]

== Accomplished == | == Accomplished == | ||

=== Executed on Plans === | === Executed on Plans === | ||

We successfully executed all of the ideas we had during the planning phase: | |||

* Add new feature to show mean and median data for rubric criteria in a given assignment | |||

* Add new feature to show comparison | |||

* Performed UI testing to ensure features were operational, including edge cases | |||

* Added and ran RSpec and Capybara automated tests | |||

=== New Charts Inserted === | === New Charts Inserted === | ||

| Line 31: | Line 39: | ||

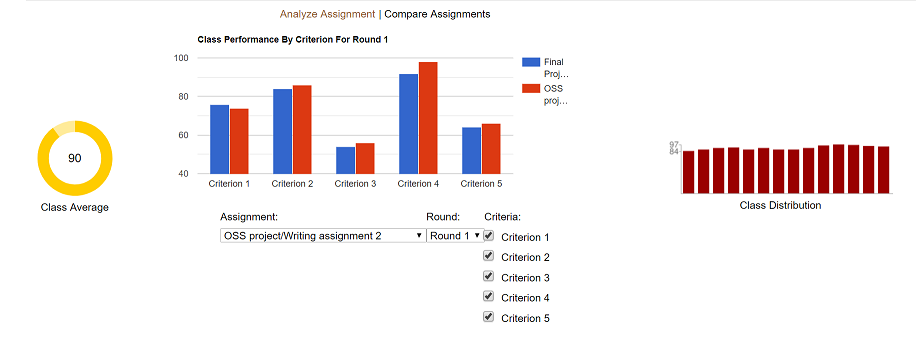

[[File:CompareAssignmentsWed.png|frame|centre|'''Figure 3: New Rubric Cross-Assignment Comparison Visualization - '''The instructor can see a comparison of selected criteria between the current assignment and the selected assignment. The rubric criteria must be compatible for an assignment to show up in the selection list. This comparison visualization is on the "Compare Assignments" tab, which must be selected after the "View Scores" page has loaded.]]

* Refactored [https://github.com/gshugh/expertiza/blob/OSS-HW4/app/views/grades/_team_charts.html.erb _team_charts.html.erb] partial view to use partials for each graphic. Previously both charts were defined together in _team_charts.html.erb, but now each graphic has its own partial view ([https://github.com/gshugh/expertiza/blob/OSS-HW4/app/views/grades/_team_charts_averages.html.erb _team_charts_averages.html.erb], [https://github.com/gshugh/expertiza/blob/OSS-HW4/app/views/grades/_team_charts_distribution.html.erb _team_charts_distribution.html.erb], [https://github.com/gshugh/expertiza/blob/OSS-HW4/app/views/grades/_team_charts_rubric_stats.html.erb _team_charts_rubric_stats.html.erb]). This allows more flexibility going forward, if it's decided one chart should move to a new place on the page, or to a new page.

[https://github.com/expertiza/expertiza/pull/1440/files Files Added/Modified]


[https://github.com/gshugh/expertiza/tree/OSS-HW4 GitHub Repo]

[https://drive.google.com/file/d/1jmOYfbNOlXQObtsK4MoIti-LsjJ_Y88P/view Demo Video]

[https://docs.google.com/document/d/1Ozw2Bj2u_LyeKUdDqAyQtz1stEIKrxkmRnwmNyUgmzw/edit#heading=h.5kwrelqdnewh Problem Statement]


Latest revision as of 03:21, 3 May 2019

Introduction

This Expertiza project, completed in the Spring of 2019, aimed to improve the charts instructors could view to see the grade statistics for a given assignment. Two charts already existed, one showing the grade distribution for all teams, and another simply showing the class average grade. Our goal for this project was to add a third chart, using Google Charts, which would show grade statistics for the various rubric grades within that assignment. The chart is interactive so the user (instructor) can toggle which rubric criteria, and which statistics, to display.

Along with displaying the rubric criteria for a single assignment, we aimed to add a feature which would allow the comparison of compatible rubric grades from two different assignments. Again, this functionality is interactive, allowing the instructor to choose which statistics will be populated in the chart.

By viewing our new visualization of rubric grades, an instructor can better judge which aspects of the course are well-understood, and which may need a bit more attention.

Problem Statement

E1929 - Visualizations for Instructors - Class performance on specific rubrics

An interactive visualization or table that shows how a class performed on selected rubric criteria would be immensely helpful. It would show the instructor what he / she will need to focus more attention on. For example, could you create a graph showing the range and clustering of scores for the 5 main rubric criteria? And, if these same 5 criteria are used in the preliminary and final assignments, it would be nice to be able to compare performance between assignments in a visualization that showed the class results on 3 separate artifacts.

Webpage: login as instructor -> Manage -> Assignments -> View scores

Old Charts

Accomplished

Executed on Plans

We successfully executed all of the ideas we had during the planning phase:

- Added a new feature to show mean and median data for rubric criteria in a given assignment

- Added a new feature to compare compatible rubric criteria between two assignments

- Performed UI testing to ensure the features were operational, including edge cases

- Added and ran RSpec and Capybara automated tests

New Charts Inserted

Extra Issues Fixed

- Refactored _team_charts.html.erb partial view to use partials for each graphic. Previously both charts were defined together in _team_charts.html.erb, but now each graphic has its own partial view (_team_charts_averages.html.erb, _team_charts_distribution.html.erb, _team_charts_rubric_stats.html.erb). This allows more flexibility going forward, if it's decided one chart should move to a new place on the page, or to a new page.

Code Changes

Added: 10 + Modified: 11 = Total: 21

- app/assets/javascripts/rubric_stats.js (new)

- app/controllers/grades_controller.rb

- app/helpers/assignment_stats_helper.rb (new)

- app/models/assignment.rb

- app/models/assignment_questionnaire.rb

- app/models/assignment_stats.rb (new)

- app/models/criterion_stats.rb (new)

- app/models/question.rb

- app/models/questionnaire.rb

- app/models/review_round_stats.rb (new)

- app/models/score_view.rb

- app/views/grades/_team_charts.html.erb

- app/views/grades/_team_charts_averages.html.erb (new)

- app/views/grades/_team_charts_distribution.html.erb (new)

- app/views/grades/_team_charts_rubric_stats.html.erb (new)

- spec/controllers/grades_controller_spec.rb

- spec/factories/factories.rb

- spec/features/grade_interface_spec.rb (new)

- spec/features/helpers/grade_interface_helper.rb (new)

- spec/models/assignment_spec.rb

- spec/models/questionnaire_spec.rb

Testing

We performed automated tests using RSpec Framework and Capybara. In addition, we performed manual tests of the user interface (UI), by using the app.

RSpec Framework Tests

RSpec, an automated testing framework, was used to verify the models behind this Expertiza feature. Since the feature deals with visualizations (charts) that are closely tied to Active Record models, we seeded the test database with known data via the FactoryBot gem. These changes were automatically rolled back once testing was complete.

RSpec was also used to maintain and increase automated test coverage for the methods that we added to the assignment and questionnaire models. Mocks and stubs were used to decouple the model under test from its dependencies on other models, which makes it easier to tell whether a failure is caused by our model updates.

Capybara Tests

Capybara, run through RSpec, provided integration testing of the charts. The test database was seeded with the data needed to render the charts. An additional gem, webdrivers, was needed to use Selenium's predefined drivers. The default Capybara driver, Rack::Test, does not execute JavaScript. Since our graphs require JavaScript, we used Selenium with its predefined driver, selenium_chrome_headless. This lets the tests execute the JavaScript and automatically verify that the expected elements exist in the DOM.

To use the headless web driver with the seeded test database, a different database strategy was required, so for this test only the strategy was changed to truncation. The current version of Expertiza uses Ruby 2.2.7. This version does not allow the database thread to be shared, so the data must be written to the database outside of a transaction block. The headless web driver can then see that the data is persisted in the test database and run as normal. Once the test is completed, the database tables are truncated and all data is removed.
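A setup along the following lines is commonly used for this situation. It is a sketch rather than the project's actual configuration: it assumes the database_cleaner gem performs the strategy switch, that JavaScript-dependent examples are tagged `js: true`, and that the fragment lives in a file such as spec/rails_helper.rb. None of these details are stated above.

```ruby
# spec/rails_helper.rb (fragment) - assumed layout, not copied from Expertiza
require 'capybara/rspec'
require 'database_cleaner'
require 'webdrivers'

# Rack::Test cannot run JavaScript, so JS-tagged examples use headless Chrome.
Capybara.javascript_driver = :selenium_chrome_headless

RSpec.configure do |config|
  # Let DatabaseCleaner, not rspec-rails, decide how each example is cleaned up.
  config.use_transactional_fixtures = false

  # Default: wrap each example in a transaction and roll it back afterwards.
  config.before(:each) { DatabaseCleaner.strategy = :transaction }

  # JS examples: the browser talks to a separate server thread, so seeded data
  # must be committed; truncation empties the tables once the example ends.
  config.before(:each, js: true) { DatabaseCleaner.strategy = :truncation }

  config.before(:each) { DatabaseCleaner.start }
  config.after(:each) { DatabaseCleaner.clean }
end
```

The order of the hooks matters in this sketch: the strategy is chosen before `DatabaseCleaner.start` runs, so a JS-tagged example overrides the transaction default.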

UI Tests

In addition to the automated tests above we also performed manual testing of the newly added features to include:

- The new feature properly initializes

   1. Analyze Assignment tab is loaded by default

   2. Chart is in between the other two existing charts

   3. Round 1, all Criteria selected, and Mean are selected by default

- Analyze Assignment tab operating correctly

   1. Bars are showing up where expected

   2. Bar annotations are showing the expected value

   3. Criteria labels are for the correct bar and displaying correct values

   4. Hover text is displaying the correct values

   5. Null values are not present on the chart

   6. Round dropdown menu shows all rounds for the assignment

   7. Selecting a round changes the criteria checkboxes

   8. All checkboxes are displayed with appropriate text

   9. Checkboxes correctly remove or add criterion bars to the chart

- Compare Assignments tab operating correctly

   1. Compare Assignments tab is only displayed if compatible assignments exist

   2. Clicking Compare Assignments tab loads that tab

   3. (All tests from Analyze Assignment tab apply to this tab as well)

   4. The two colors for assignment comparison are correct

Testing Issues

Using the Ubuntu image, we discovered one issue with the ScoreView view. The migration files build ScoreView as a table instead of a view, as can be seen in the schema file. The development database shows ScoreView as a view, but the test database shows it as a table. It appears that the development database was altered outside of migrations to force ScoreView to be a view instead of a table. As a result, the automated integration tests had to seed ScoreView as a table. Gems are available that could alleviate this issue.

Shortcomings

- We were unable to find two compatible assignments in the Expertiza test database to fully test our Compare Assignments feature. We did test all the functionality by seeding the database, but it technically wasn't using real data.

Decisions

1. Put our new chart on the View Scores page, rather than on a new page

- Since it was able to fit on the existing page while displaying all the necessary info, we figured it'd be beneficial to have all grade statistics charts together.

2. Use GoogleCharts for our new charts

- The group that previously attempted this project chose GoogleCharts because it is highly compatible and its charts are similar in quality to those already in Expertiza. We agreed, and determined that GoogleCharts had all of the features we would need.

3. Added the AssignmentStats, CriterionStats, and ReviewRoundStats models

- This was a logical, structured way to organize the various data and methods we needed.

4. Encapsulation of the data within the new models

- Since the various statistics we needed to form our chart were stored in multiple models, there would be no good place to put the new methods we'd need. The way we did it promotes cohesion, since the new classes have the sole focus of computing statistics. This also allows for future refactoring of other sporadic statistics methods.

5. Placed AssignmentStats methods in assignment_stats_helper.rb rather than in the model or controller.

- The methods of interest were not really business logic, but more like massaging of data, so they belonged more in the controller than in the model. However, to keep the controller neat and clean, the methods were moved out to the helper file.

6. The exposure of mean and median as the metric_names in grades_controller.rb

- Since we are explicitly calling the avg_data and med_data methods in the controller, we thought it made sense to name the metric_names nearby, within the controller, rather than hidden off in the helper file.

7. Moved most of the JavaScript code in _team_charts_rubric_stats.html.erb into app/assets/javascripts/rubric_stats.js. We had to keep our dynamic references to Ruby code in the view.

- This keeps the view cleaner and more concise. Also, in the previous semester, one of the reasons the team's pull request was rejected was that they had too much JavaScript code in their views.
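Decisions 3 through 6 can be pictured with a minimal, self-contained sketch. It is not the code in the pull request: `avg_data`, `med_data`, and `metric_names` are names taken from the text, but their signatures are not given here, so the class and module names ending in `Sketch`, the hash-of-scores input, and the treatment of nil scores as unanswered are all assumptions made for illustration.

```ruby
# Hypothetical sketch of decisions 3-6; none of this is the actual Expertiza code.

# Decisions 3-4: a class whose sole focus is statistics for one rubric criterion.
class CriterionStatsSketch
  def initialize(scores)
    @scores = scores.compact.sort # nil (assumed: unanswered) scores are dropped
  end

  # Arithmetic mean, or nil when no scores remain
  def mean
    return nil if @scores.empty?
    @scores.reduce(0, :+).to_f / @scores.size
  end

  # Middle value, or the average of the two middle values for an even count
  def median
    return nil if @scores.empty?
    mid = @scores.size / 2
    @scores.size.odd? ? @scores[mid].to_f : (@scores[mid - 1] + @scores[mid]) / 2.0
  end
end

# Decision 5: data-massaging methods live in a helper module.
module AssignmentStatsHelperSketch
  # criteria: { "criterion label" => [scores] }; returns one value per criterion
  def avg_data(criteria)
    criteria.values.map { |scores| CriterionStatsSketch.new(scores).mean }
  end

  def med_data(criteria)
    criteria.values.map { |scores| CriterionStatsSketch.new(scores).median }
  end
end

# Decision 6: the metric names are declared next to the calls they label.
class GradesControllerSketch
  include AssignmentStatsHelperSketch

  def rubric_chart_data(criteria)
    metric_names = %w[Mean Median]
    { metric_names: metric_names, series: [avg_data(criteria), med_data(criteria)] }
  end
end

criteria = { 'Clarity' => [4, 5, 3], 'Tests' => [2, nil, 4] }
p GradesControllerSketch.new.rubric_chart_data(criteria)
```

In this sketch the controller stays thin: it only pairs each metric name with the series the helper returns, which is the arrangement decision 6 argues for.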

Documentation

Team

- Akshay Ravichandran (Mentor)

- Chris Adkins

- Bobby DeMarco

- George Hugh

- John Warren