CSC/ECE 517 Fall 2018/E1876 Completion/Progress view

| (31 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

= Problem Statement = | ='''Problem Statement'''= | ||

A key component of Expertiza is peer reviews, which provide feedback to authors so that they can improve their work. Expertiza also supports grading of these reviews to ensure students write quality reviews, helping them learn more about the assignment by looking at their peers' work. In addition, Expertiza allows for metareviews, which are reviews the authors of the original work write for the reviews of their original work. This author feedback is useful for grading the reviews because it indicates how helpful this review was to the authors of the original work. The objective of this project is to add metareview or author feedback information to the review report page, which shows a summary of all the reviews written by the students for an assignment. | |||

='''Goal'''= | |||

= Goal = | |||

The aim of this project is to build this into the system. We need an additional column in the 'Review Report' page for reviews which shows the calculation of the author feedback. This will help instructor's to know how the reviews proved useful to the authors/team. The aim of this project is to integrate the author feedback column in the summary page | The aim of this project is to build this into the system. We need an additional column in the 'Review Report' page for reviews which shows the calculation of the author feedback. This will help instructor's to know how the reviews proved useful to the authors/team. The aim of this project is to integrate the author feedback column in the summary page | ||

= Design = | ='''Design'''= | ||

== User Interface Enhancements == | |||

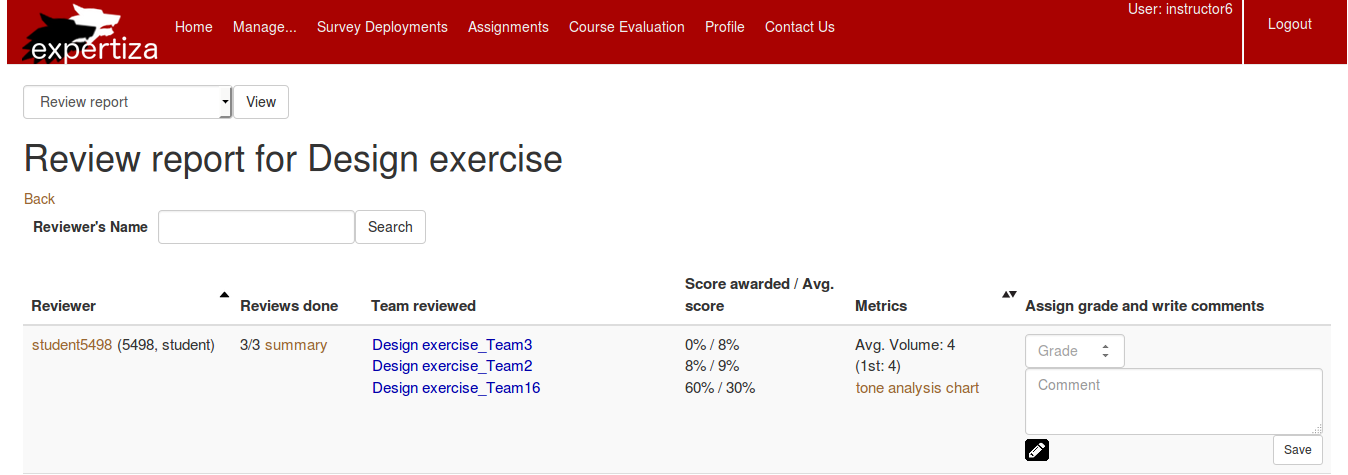

In the page "Review report for Design exercise" (Log in as an instructor then go to Manage -> Assignments -> View review report.), we are planning to add one more column to show the average ratings for the author feedback for a student's review of a particular assignment. The logic for calculating the average score for the metareviews would be similar to already implemented logic for the "Score Awarded/Average Score" column. Below is the page we are planning to edit. | |||

[[File:Feedback_new.png]] | |||

== Controller-level Logic == | |||

== Questions Table Structure== | The following method shows the code logic we are planning to write for calculating the average scores for the feedback given by authors for the reviews of their work. | ||

<pre style="color: black; border:1px;"> | |||

def calculate_avg_score_by_feedback(question_answers, q_max_score) | |||

# get score and summary of answers for each question | |||

# only include divide the valid_answer_sum with the number of valid answers | |||

valid_answer_counter = 0 | |||

question_score = 0.0 | |||

question_answers.each do |ans| | |||

# calculate score per question | |||

unless ans.answer.nil? | |||

question_score += ans.answer | |||

valid_answer_counter += 1 | |||

end | |||

end | |||

if valid_answer_counter > 0 and q_max_score > 0 | |||

# convert the score in percentage | |||

question_score /= (valid_answer_counter * q_max_score) | |||

question_score = question_score.round(2) * 100 | |||

end | |||

question_score | |||

end | |||

</pre> | |||

== Relevant Database Tables == | |||

The following are the table structures we will need for this feature. First, the questions table has all the questions based on the questionnaire. We will be only concerned with the questions in the feedback questionnaire. The answers for each question in the feedback questionnaire are saved in the Answers table below based on the Question ID. Now, in order to know if the answer is a feedback by team members or a review by reviewer, the mapping for the Answers table is done by the response_id which is a foreign key to the Response table. This Response table gives us the map_id which maps to a response map table. Now, the response map table gives us information on the reviewer_id, reviewee_id, reviewed_object_id (which is the ID for the assignment being reviewed) and the type (whether it's a teammate review, author feedback, a regular review, etc.). We will have to fetch the answers from the Answer table based on response_id because in our case, the response is from a previous reviewee and not a reviewer. So, we will fetch those answers whose response type is FeedbackResponseMap and calculate scores for those questions for the corresponding ReviewScores table. Below are excerpts from the [http://wiki.expertiza.ncsu.edu/index.php/Documentation_on_Database_Tables Expertiza database documentation] which describe the database tables relevant to our design. | |||

=== Questions Table Structure === | |||

<!-- Questions page already exists,so created a page with the name Questions table and gave an external link on the tables page--> | <!-- Questions page already exists,so created a page with the name Questions table and gave an external link on the tables page--> | ||

| Line 62: | Line 97: | ||

|} | |} | ||

== Answer Table Structure == | === Answer Table Structure === | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 88: | Line 123: | ||

|} | |} | ||

== Response Table Structure == | === Response Table Structure === | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 126: | Line 161: | ||

|} | |} | ||

== Response Map Table == | === Response Map Table === | ||

{| class="wikitable" | {| class="wikitable" | ||

| Line 163: | Line 198: | ||

|} | |} | ||

''' | == UML Flow-Chart == | ||

[[ | |||

[[File:UML-progress.png]] | |||

== Files That Will be Changed == | |||

1. To calculate the average author feedback score: https://github.com/jainmohit1/expertiza/blob/master/app/models/on_the_fly_calc.rb | |||

2. To populate the average author feedback score for the view: https://github.com/jainmohit1/expertiza/blob/master/app/controllers/review_mapping_controller.rb | |||

3. To add a field in the view: https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/response_report.html.haml | |||

4. To add a field in the partial : https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/_review_report.html.erb | |||

5. To add a field in the partial: https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/_team_score.html.erb | |||

= Implementation = | |||

We added functionality in the on_the_fly_calc.rb file in Models. The methods in consideration are:<br> | |||

1. compute_author_feedback_scores<br> | |||

2. calc_avg_feedback_score(response)<br> | |||

3. calc_feedback_scores_sum<br> | |||

The first method fetches the Feedback Response from ResponseMaps table based on the responses given earlier. Responses means the reviews given. Each feedback is saved to ResponseMap table with type = 'FeedbackResponseMap'. The feedback is fetched according to the assignment id, the reviewer who gave the review for that assignment and the reviews in each round. For each feedback response, we get the weight of the answer(feedback) submitted by author from Answer table and the weight of each answer from Questions table for the FeedbackQuestionnaire in consideration which we fetched from Questionnaire table. We calculate by taking an average of all feedbacks given by all team members to the reviews that they got. Following is the code snippet: | |||

<pre style="color: black; border:1px;"> | |||

def compute_author_feedback_scores | |||

@author_feedback_scores = {} | |||

@response_maps = ResponseMap.where('reviewed_object_id = ? && type = ?', self.id, 'ReviewResponseMap') | |||

rounds = self.rounds_of_reviews | |||

(1..rounds).each do |round| | |||

@response_maps.each do |response_map| | |||

response = Response.where('map_id = ?', response_map.id) | |||

response = response.select {|response| response.round == round } | |||

@round = round | |||

@response_map = response_map | |||

calc_avg_feedback_score(response) unless response.empty? | |||

end | |||

end | |||

@author_feedback_scores | |||

end | |||

</pre> | |||

<pre style="color: black; border:1px;"> | |||

def calc_avg_feedback_score(response) | |||

# Retrieve the author feedback response maps for the teammates reviewing the review of their work. | |||

author_feedback_response_maps = ResponseMap.where('reviewed_object_id = ? && type = ?', response.first.id, 'FeedbackResponseMap') | |||

author_feedback_response_maps.each do |author_feedback_response_map| | |||

@corresponding_response = Response.where('map_id = ?', author_feedback_response_map.id) | |||

next if @corresponding_response.empty? | |||

calc_feedback_scores_sum | |||

end | |||

# Divide the sum of the author feedback scores for this review by their number to get the | |||

# average. | |||

if !@author_feedback_scores[@response_map.reviewer_id].nil? && | |||

!@author_feedback_scores[@response_map.reviewer_id][@round].nil? && | |||

!@author_feedback_scores[@response_map.reviewer_id][@round][@response_map.reviewee_id].nil? && | |||

!author_feedback_response_maps.empty? | |||

@author_feedback_scores[@response_map.reviewer_id][@round][@response_map.reviewee_id] /= author_feedback_response_maps.count | |||

end | |||

end | |||

</pre> | |||

<pre style="color: black; border:1px;"> | |||

def calc_feedback_scores_sum | |||

@respective_scores = {} | |||

if !@author_feedback_scores[@response_map.reviewer_id].nil? && !@author_feedback_scores[@response_map.reviewer_id][@round].nil? | |||

@respective_scores = @author_feedback_scores[@response_map.reviewer_id][@round] | |||

end | |||

author_feedback_questionnaire_id = feedback_questionnaire_id(@corresponding_response) | |||

@questions = Question.where('questionnaire_id = ?', author_feedback_questionnaire_id) | |||

# Calculate the score of the author feedback review. | |||

calc_review_score | |||

# Compute the sum of the author feedback scores for this review. | |||

@respective_scores[@response_map.reviewee_id] = 0 if @respective_scores[@response_map.reviewee_id].nil? | |||

@respective_scores[@response_map.reviewee_id] += @this_review_score | |||

# The reviewer is the metareviewee whose review the authors or teammates are reviewing. | |||

@author_feedback_scores[@response_map.reviewer_id] = {} if @author_feedback_scores[@response_map.reviewer_id].nil? | |||

@author_feedback_scores[@response_map.reviewer_id][@round] = {} if @author_feedback_scores[@response_map.reviewer_id][@round].nil? | |||

@author_feedback_scores[@response_map.reviewer_id][@round] = @respective_scores | |||

end | |||

</pre> | |||

== View == | |||

We added another column to show the average author feedback score for each student who reviewed and for each team the student reviewed. | |||

<pre style="color: black; border:1px;"> | |||

<td align = 'left' > | |||

<% @response_maps.each_with_index do |ri, index| %> | |||

<% if Team.where(id: ri.reviewee_id).length > 0 %> | |||

<%@team = Team.find(ri.reviewee_id) %> | |||

<%= render partial: 'team_feedback_score', locals: {bgcolor: @bgcolor, team_id: @team.id, reviewer_id: r.id} %> | |||

<%end %> | |||

<%end %> | |||

</td> | |||

</pre> | |||

= Test Plan = | = Test Plan = | ||

We plan to test the response report page (/review_mapping/response_report?id={:assignmentID}) to make sure the new field (average author feedback) exists. | |||

Using [http://rspec.info/ RSpec] we will add a test case to review_mapping_controller_spec.rb. | |||

<pre> | |||

context 'when type is FeedbackResponseMap' do | |||

context 'when assignment has author feedback feature' do | |||

it 'renders response_report page with average author feedback data' do | |||

allow(assignment).to receive(:varying_rubrics_by_round?).and_return(true) | |||

allow(FeedbackResponseMap).to receive(:feedback_response_report).with('1', 'FeedbackResponseMap') | |||

.and_return([participant, participant1], [1, 2], [3, 4], []) | |||

params = { | |||

id: 1, | |||

report: {type: 'FeedbackResponseMap'}, | |||

} | |||

get :response_report, params | |||

expect(response).to render_template(:response_report) | |||

expect(response).to have(:avg_author_feedback) | |||

end | |||

end | |||

end | |||

</pre> | |||

We also plan to manually test the response report page to make sure the new field is aligning well in the UI in the expected place. We will attach the screenshot of the UI as the test result. We will test the cases of one and multiple reviews by a reviewer and verify the number and average scores of the metareviews for those reviews are rendered correctly. | |||

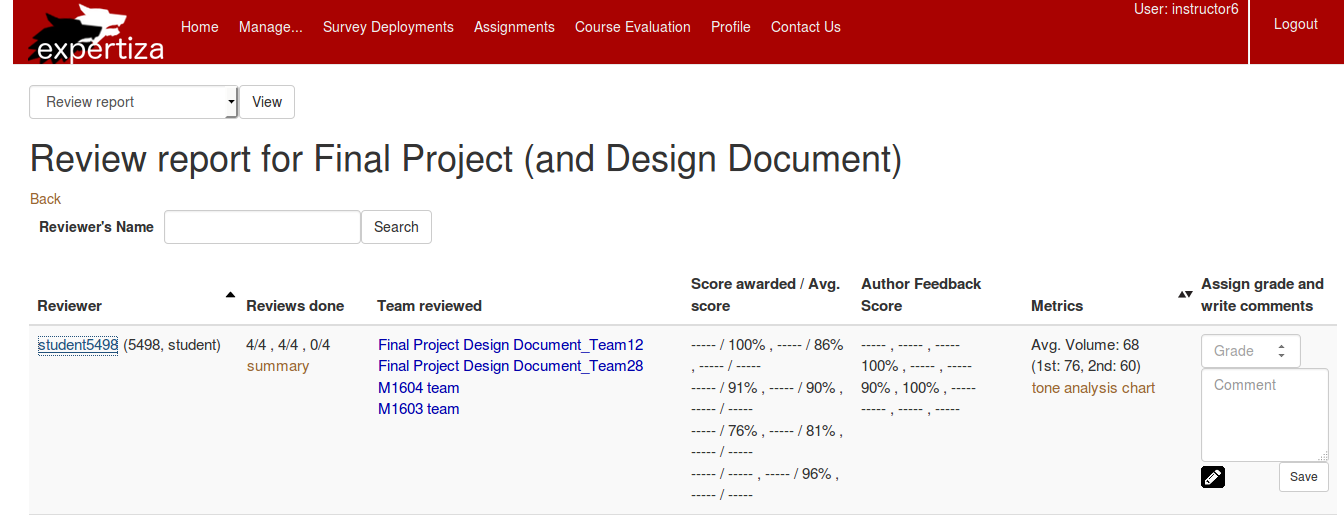

== Review Report Page == | |||

Below is the screenshot of review report page which now shows a new column for Author Feedback Score. <br> | |||

[[File:impl_screenshot.png]] | |||

== Test Implementation == | |||

We added a rspec test in on_the_fly_calc_spec.rb which tests our method: 'compute_author_feedback_scores' that calculates the average feedback score. Below is the snippet: | |||

<pre style="color: black; border:1px;"> | |||

describe '#compute_author_feedback_score' do | |||

let(:reviewer) { build(:participant, id: 1) } | |||

let(:feedback) { Answer.new(answer: 2, response_id: 1, comments: 'Feedback Text', question_id: 2) } | |||

let(:feedback_question) { build(:question, questionnaire: questionnaire2, weight: 1, id: 2) } | |||

let(:questionnaire2) { build(:questionnaire, name: "feedback", private: 0, min_question_score: 0, max_question_score: 10, instructor_id: 1234) } | |||

let(:reviewer1) { build(:participant, id: 2) } | |||

let score = {} | |||

let(:team_user) { build(:team_user, team: 2, user: 2) } | |||

let(:feedback_response) { build(:response, id: 2, map_id: 2, scores: [feedback]) } | |||

let(:feedback_response_map) { build(:response_map, id: 2, reviewed_object_id: 1, reviewer_id: 2, reviewee_id: 1) } | |||

before(:each) do | |||

allow(on_the_fly_calc).to receive(:rounds_of_reviews).and_return(1) | |||

allow(on_the_fly_calc).to receive(:review_questionnaire_id).and_return(1) | |||

end | |||

context 'verifies feedback score' do | |||

it 'computes feedback score based on reviews' do | |||

expect(assignment.compute_author_feedback_scores).to eq(score) | |||

end | |||

end | |||

end | |||

</pre> | |||

= Project Walk-through = | |||

https://www.youtube.com/watch?v=14bx6vD3dOE&feature=youtu.be | |||

= References = | = References = | ||

1 | 1. http://wiki.expertiza.ncsu.edu/index.php/Documentation_on_Database_Tables | ||

2. https://github.com/jainmohit1/expertiza | |||

3. https://github.com/expertiza/expertiza/pull/1290 | |||

Latest revision as of 18:39, 6 December 2019

Problem Statement

A key component of Expertiza is peer review, which gives authors feedback so that they can improve their work. Expertiza also supports grading these reviews to ensure that students write quality reviews, which helps them learn more about the assignment by looking at their peers' work. In addition, Expertiza allows for metareviews, which are reviews that the authors of the original work write about the reviews of their work. This author feedback is useful for grading the reviews because it indicates how helpful each review was to the authors. The objective of this project is to add metareview or author feedback information to the review report page, which shows a summary of all the reviews written by the students for an assignment.

Goal

The aim of this project is to build this into the system. The 'Review Report' page needs an additional column that shows the calculated author feedback score for each review. This will help instructors see how useful the reviews were to the authors or team.

Design

User Interface Enhancements

On the page "Review report for Design exercise" (log in as an instructor, then go to Manage -> Assignments -> View review report), we plan to add one more column that shows the average author feedback rating for a student's reviews on a particular assignment. The logic for calculating the average metareview score will be similar to the logic already implemented for the "Score Awarded/Average Score" column. Below is the page we are planning to edit.

Controller-level Logic

The following method shows the code logic we are planning to write for calculating the average scores for the feedback given by authors for the reviews of their work.

    def calculate_avg_score_by_feedback(question_answers, q_max_score)
      # Sum the answered scores for one question. Unanswered (nil) entries are
      # skipped, so the sum is divided only by the number of valid answers.
      valid_answer_counter = 0
      question_score = 0.0
      question_answers.each do |ans|
        next if ans.answer.nil?

        question_score += ans.answer
        valid_answer_counter += 1
      end

      if valid_answer_counter > 0 && q_max_score > 0
        # Convert the average into a percentage of the maximum score.
        question_score /= (valid_answer_counter * q_max_score)
        question_score = question_score.round(2) * 100
      end
      question_score
    end
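As a worked example of the arithmetic in this planned method, here is a minimal, self-contained sketch. `FeedbackAnswer` and `avg_feedback_percentage` are hypothetical names used only for illustration, and returning 0.0 when there is nothing to average is an assumption of this sketch rather than confirmed Expertiza behavior.

```ruby
# Hypothetical stand-in for an Answer record; only the `answer` field matters here.
FeedbackAnswer = Struct.new(:answer)

# Averages the non-nil answers and expresses the result as a percentage of
# the maximum score. Returns 0.0 when there is nothing to average
# (an assumption made for this sketch).
def avg_feedback_percentage(question_answers, q_max_score)
  scores = question_answers.map(&:answer).compact
  return 0.0 if scores.empty? || q_max_score <= 0

  average_fraction = scores.sum.to_f / (scores.size * q_max_score)
  average_fraction.round(2) * 100
end

answers = [FeedbackAnswer.new(4), FeedbackAnswer.new(5), FeedbackAnswer.new(nil)]
# (4 + 5) / (2 valid answers * maximum score 5) = 0.9, i.e. roughly 90 percent.
puts avg_feedback_percentage(answers, 5)
```

The unanswered entry does not count toward the divisor, which matches the intent stated in the method's comments.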

Relevant Database Tables

The following table structures are needed for this feature. First, the Questions table holds all the questions of each questionnaire; we are only concerned with the questions in the feedback questionnaire. The answers to each of those questions are saved in the Answers table, keyed by the question ID. To tell whether an answer is feedback from team members or a review from a reviewer, each Answers record carries a response_id, which is a foreign key to the Response table. The Response table gives us the map_id, which points to a response map. The response map in turn provides the reviewer_id, the reviewee_id, the reviewed_object_id (the ID of the assignment being reviewed) and the type (teammate review, author feedback, regular review, etc.). We have to fetch the answers from the Answers table by response_id because, in our case, the response comes from a previous reviewee and not from a reviewer. So we will fetch the answers whose response map type is FeedbackResponseMap and calculate the scores for those questions for the corresponding ReviewScores table. Below are excerpts from the Expertiza database documentation which describe the database tables relevant to our design.

Questions Table Structure

| Field Name | Type | Description |
|---|---|---|
| id | int(11) | unique identifier for the record |
| txt | text | the question string |
| weight | int(11) | specifies the weighting of the question |
| questionnaire_id | int(11) | the id of the questionnaire that this question belongs to |
| seq | DECIMAL | |
| type | VARCHAR(255) | Type of question |
| size | VARCHAR(255) | Size of the question |
| alternatives | VARCHAR(255) | Other question which means the same |
| break_before | BIT | |
| max_label | VARCHAR(255) | |
| min_label | VARCHAR(255) | |

Answer Table Structure

| Field Name | Type | Description |
|---|---|---|
| id | int(11) | Unique ID for each Answers record. |
| question_id | int(11) | ID of Question. |
| answer | int(11) | Value of each of the answer. |
| comments | text | Comment given to the answer. |
| response_id | int(11) | ID of the response associated with this Answer. |

Response Table Structure

| Field Name | Type | Description |
|---|---|---|
| id | int(11) | The unique record id |
| map_id | int(11) | The ID of the response map defining the relationship that this response applies to |
| additional_comment | text | An additional comment provided by the reviewer to support his/her response |
| updated_at | datetime | The timestamp indicating when this response was last modified |
| created_at | datetime | The timestamp indicating when this response was created |
| version_num | int(11) | The version of the review. |
| round | int(11) | The round the review is connected to. |
| is_submitted | tinyint(1) | Boolean Field to indicate whether the review is submitted. |

Response Map Table

| Field Name | Type | Description |
|---|---|---|
| id | int(11) | The unique record id |
| reviewed_object_id | int(11) | The object being reviewed in the response. Possible objects include other ResponseMaps or assignments |
| reviewer_id | int(11) | The participant (actually AssignmentParticipant) providing the response |
| reviewee_id | int(11) | The team (AssignmentTeam) receiving the response |
| type | varchar(255) | Used for subclassing the response map. Available subclasses are ReviewResponseMap, MetareviewResponseMap, FeedbackResponseMap, TeammateReviewResponseMap |
| created_at | DATETIME | Date and Time for when the record was created |
| updated_at | DATETIME | Date and Time when the last update was made |
| calibrate_to | BIT | |
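The lookup chain described in this section (Answers -> Response -> Response Map) can be traced with a small in-memory sketch. The `*Row` structs and the `feedback_answers` helper are hypothetical stand-ins for the real tables, and the sample rows are made up purely for illustration; this is not the Expertiza implementation.

```ruby
# Hypothetical in-memory rows that mirror a subset of the columns listed above.
ResponseMapRow = Struct.new(:id, :reviewed_object_id, :reviewer_id, :reviewee_id, :type)
ResponseRow    = Struct.new(:id, :map_id, :round)
AnswerRow      = Struct.new(:id, :question_id, :answer, :response_id)

maps = [
  ResponseMapRow.new(1, 10, 101, 201, 'ReviewResponseMap'),
  # Illustrative feedback map that points back at response 1.
  ResponseMapRow.new(2, 1, 301, 101, 'FeedbackResponseMap')
]
responses = [ResponseRow.new(1, 1, 1), ResponseRow.new(2, 2, 1)]
answers = [
  AnswerRow.new(1, 7, 3, 1), # answer belonging to a regular review
  AnswerRow.new(2, 8, 4, 2), # answers belonging to author feedback
  AnswerRow.new(3, 9, 5, 2)
]

# Follow answer.response_id -> response.map_id -> map.type and keep only
# the answers whose response map is a FeedbackResponseMap.
def feedback_answers(answers, responses, maps)
  feedback_map_ids = maps.select { |m| m.type == 'FeedbackResponseMap' }.map(&:id)
  feedback_response_ids = responses.select { |r| feedback_map_ids.include?(r.map_id) }.map(&:id)
  answers.select { |a| feedback_response_ids.include?(a.response_id) }
end

selected = feedback_answers(answers, responses, maps)
puts selected.map(&:answer).inspect
```

In the application itself the same filtering would be done with database queries; the sketch only shows which keys connect the three tables.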

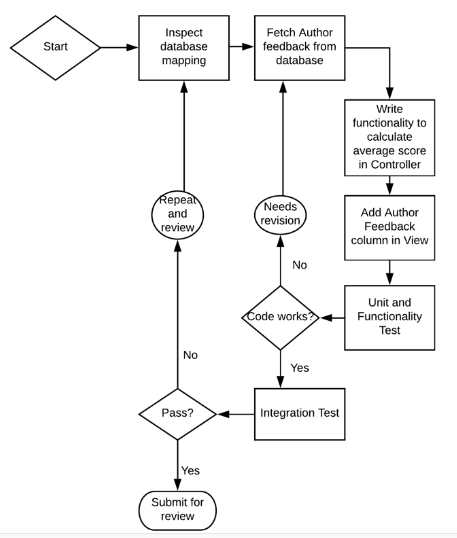

UML Flow-Chart

Files That Will be Changed

1. To calculate the average author feedback score: https://github.com/jainmohit1/expertiza/blob/master/app/models/on_the_fly_calc.rb

2. To populate the average author feedback score for the view: https://github.com/jainmohit1/expertiza/blob/master/app/controllers/review_mapping_controller.rb

3. To add a field in the view: https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/response_report.html.haml

4. To add a field in the partial : https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/_review_report.html.erb

5. To add a field in the partial: https://github.com/jainmohit1/expertiza/blob/master/app/views/review_mapping/_team_score.html.erb

Implementation

We added the functionality to the on_the_fly_calc.rb file under app/models. The methods involved are:

1. compute_author_feedback_scores

2. calc_avg_feedback_score(response)

3. calc_feedback_scores_sum

The first method fetches the review response maps for the assignment and, for each round, the responses (that is, the reviews) submitted under each map. Each piece of author feedback is saved in the ResponseMap table with type = 'FeedbackResponseMap', so the second method looks up the feedback response maps that point at a given review response. The feedback is therefore fetched by assignment id, by the reviewer who wrote the review and by review round. For each feedback response, we take the answers submitted by the authors from the Answer table and the weight of each question from the Questions table for the FeedbackQuestionnaire, which we look up in the Questionnaire table. The final score is the average of all feedback given by the team members on a review they received. The code is as follows:

    def compute_author_feedback_scores
      @author_feedback_scores = {}
      # All review response maps that belong to this assignment.
      @response_maps = ResponseMap.where('reviewed_object_id = ? AND type = ?', self.id, 'ReviewResponseMap')
      rounds = self.rounds_of_reviews
      (1..rounds).each do |round|
        @response_maps.each do |response_map|
          # Responses submitted under this map in the current round.
          response = Response.where('map_id = ?', response_map.id)
          response = response.select {|r| r.round == round }
          @round = round
          @response_map = response_map
          calc_avg_feedback_score(response) unless response.empty?
        end
      end
      @author_feedback_scores
    end

    def calc_avg_feedback_score(response)
      # Retrieve the author feedback response maps for the teammates reviewing the review of their work.
      author_feedback_response_maps = ResponseMap.where('reviewed_object_id = ? AND type = ?', response.first.id, 'FeedbackResponseMap')
      author_feedback_response_maps.each do |author_feedback_response_map|
        @corresponding_response = Response.where('map_id = ?', author_feedback_response_map.id)
        next if @corresponding_response.empty?
        calc_feedback_scores_sum
      end
      # Divide the sum of the author feedback scores for this review by their number to get the
      # average.
      if !@author_feedback_scores[@response_map.reviewer_id].nil? &&
         !@author_feedback_scores[@response_map.reviewer_id][@round].nil? &&
         !@author_feedback_scores[@response_map.reviewer_id][@round][@response_map.reviewee_id].nil? &&
         !author_feedback_response_maps.empty?
        @author_feedback_scores[@response_map.reviewer_id][@round][@response_map.reviewee_id] /= author_feedback_response_maps.count
      end
    end

    def calc_feedback_scores_sum
      @respective_scores = {}
      if !@author_feedback_scores[@response_map.reviewer_id].nil? && !@author_feedback_scores[@response_map.reviewer_id][@round].nil?
        @respective_scores = @author_feedback_scores[@response_map.reviewer_id][@round]
      end
      author_feedback_questionnaire_id = feedback_questionnaire_id(@corresponding_response)
      @questions = Question.where('questionnaire_id = ?', author_feedback_questionnaire_id)
      # Calculate the score of the author feedback review.
      calc_review_score
      # Compute the sum of the author feedback scores for this review.
      @respective_scores[@response_map.reviewee_id] = 0 if @respective_scores[@response_map.reviewee_id].nil?
      @respective_scores[@response_map.reviewee_id] += @this_review_score
      # The reviewer is the metareviewee whose review the authors or teammates are reviewing.
      @author_feedback_scores[@response_map.reviewer_id] = {} if @author_feedback_scores[@response_map.reviewer_id].nil?
      @author_feedback_scores[@response_map.reviewer_id][@round] = {} if @author_feedback_scores[@response_map.reviewer_id][@round].nil?
      @author_feedback_scores[@response_map.reviewer_id][@round] = @respective_scores
    end
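The three methods above build a nested hash keyed by reviewer, then round, then reviewee team. The following minimal sketch shows that shape and the averaging step in isolation. `FeedbackScore` and `average_feedback_scores` are hypothetical names, the sample scores are invented, and the sketch deliberately leaves out the database queries and `calc_review_score`.

```ruby
# Hypothetical record: one author's feedback score for one review.
FeedbackScore = Struct.new(:reviewer_id, :round, :reviewee_id, :score)

# Builds { reviewer_id => { round => { reviewee_id => average score } } }.
def average_feedback_scores(entries)
  result = {}
  grouped = entries.group_by { |e| [e.reviewer_id, e.round, e.reviewee_id] }
  grouped.each do |(reviewer, round, team), group|
    average = group.sum(&:score).to_f / group.size
    result[reviewer] ||= {}
    result[reviewer][round] ||= {}
    result[reviewer][round][team] = average
  end
  result
end

entries = [
  FeedbackScore.new(101, 1, 201, 80), # two teammates rate the same review
  FeedbackScore.new(101, 1, 201, 90),
  FeedbackScore.new(101, 2, 201, 70),
  FeedbackScore.new(102, 1, 202, 60)
]
scores = average_feedback_scores(entries)
puts scores.dig(101, 1, 201)
```

Grouping first and dividing once per group avoids having to track partial sums in instance variables, at the cost of holding all scores in memory.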

View

We added another column to show the average author feedback score for each student who reviewed and for each team the student reviewed.

    <td align='left'>
      <% @response_maps.each_with_index do |ri, index| %>
        <% if Team.where(id: ri.reviewee_id).length > 0 %>
          <% @team = Team.find(ri.reviewee_id) %>
          <%= render partial: 'team_feedback_score', locals: {bgcolor: @bgcolor, team_id: @team.id, reviewer_id: r.id} %>
        <% end %>
      <% end %>
    </td>

Test Plan

We plan to test the response report page (/review_mapping/response_report?id={:assignmentID}) to make sure the new field (average author feedback) exists.

Using RSpec we will add a test case to review_mapping_controller_spec.rb.

    context 'when type is FeedbackResponseMap' do
      context 'when assignment has author feedback feature' do
        it 'renders response_report page with average author feedback data' do
          allow(assignment).to receive(:varying_rubrics_by_round?).and_return(true)
          allow(FeedbackResponseMap).to receive(:feedback_response_report).with('1', 'FeedbackResponseMap')
            .and_return([participant, participant1], [1, 2], [3, 4], [])
          params = {
            id: 1,
            report: {type: 'FeedbackResponseMap'},
          }
          get :response_report, params
          expect(response).to render_template(:response_report)
          expect(response).to have(:avg_author_feedback)
        end
      end
    end

We also plan to test the response report page manually to make sure the new field is rendered in the expected place and is aligned with the rest of the UI. We will attach a screenshot of the UI as the test result. We will test the cases of one review and of multiple reviews by a reviewer, and verify that the number and the average scores of the metareviews for those reviews are rendered correctly.

Review Report Page

Below is a screenshot of the review report page, which now shows a new column for the Author Feedback Score.

Test Implementation

We added an RSpec test to on_the_fly_calc_spec.rb that tests our method 'compute_author_feedback_scores', which calculates the average feedback score. Below is the snippet:

    describe '#compute_author_feedback_scores' do
      let(:reviewer) { build(:participant, id: 1) }
      let(:feedback) { Answer.new(answer: 2, response_id: 1, comments: 'Feedback Text', question_id: 2) }
      let(:feedback_question) { build(:question, questionnaire: questionnaire2, weight: 1, id: 2) }
      let(:questionnaire2) { build(:questionnaire, name: "feedback", private: 0, min_question_score: 0, max_question_score: 10, instructor_id: 1234) }
      let(:reviewer1) { build(:participant, id: 2) }
      # Expected result: an empty hash, since no response maps are persisted here.
      let(:score) { {} }
      let(:team_user) { build(:team_user, team: 2, user: 2) }
      let(:feedback_response) { build(:response, id: 2, map_id: 2, scores: [feedback]) }
      let(:feedback_response_map) { build(:response_map, id: 2, reviewed_object_id: 1, reviewer_id: 2, reviewee_id: 1) }

      before(:each) do
        allow(on_the_fly_calc).to receive(:rounds_of_reviews).and_return(1)
        allow(on_the_fly_calc).to receive(:review_questionnaire_id).and_return(1)
      end

      context 'verifies feedback score' do
        it 'computes feedback score based on reviews' do
          expect(assignment.compute_author_feedback_scores).to eq(score)
        end
      end
    end

Project Walk-through

https://www.youtube.com/watch?v=14bx6vD3dOE&feature=youtu.be

References

1. http://wiki.expertiza.ncsu.edu/index.php/Documentation_on_Database_Tables

2. https://github.com/jainmohit1/expertiza

3. https://github.com/expertiza/expertiza/pull/1290