CSC/ECE 517 Fall 2017/E1790 Text metrics

==Introduction==

In this final project, “Text Metrics”, we will first integrate external sources such as GitHub and Trello to fetch information. Second, we will introduce the idea of "readability." To measure the readability of write-ups submitted by students, we will import their content, split it into sentences and words to count the sentences, words, and so on, and then calculate the readability indices from these counts using standard formulas.

Our primary task for the final project is to design tables that allow the Expertiza app to store data fetched from external sources, such as GitHub, Trello, and write-ups. As a next step, we would like to use this raw data for visualized charts and grading metrics.

==Current Design==

Currently, three models store the raw data fetched from metric sources: Metrics, Metric_data_points, and Metric_data_point_types.

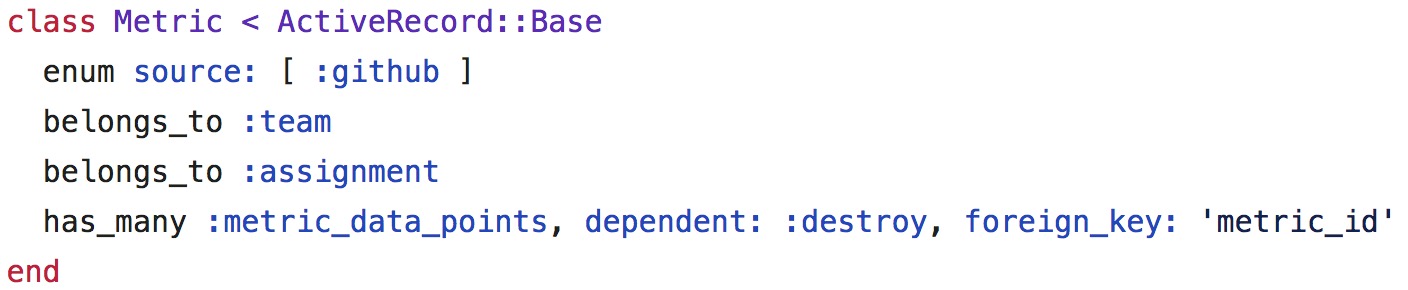

===<span style="color: blue">Metric</span>===

====Model:====

[[File:Metrics_models.jpg]]

<br/>

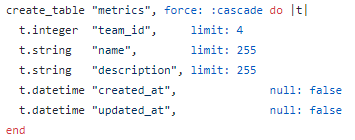

====Schema:====

[[File: metrics_schema.png]]

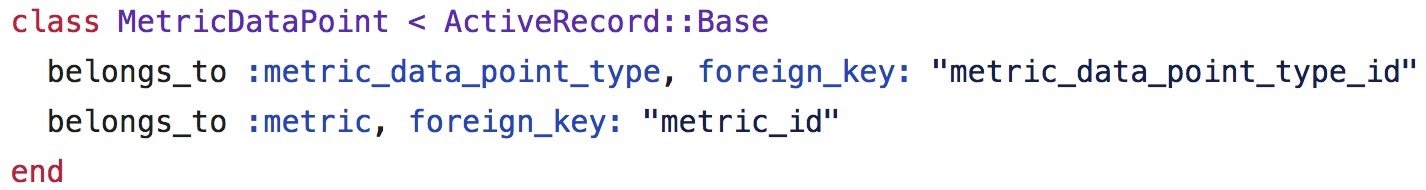

===<span style="color: blue">Metric_data_points</span>===

====Model:====

[[File:metric_data_points_model.jpg]]

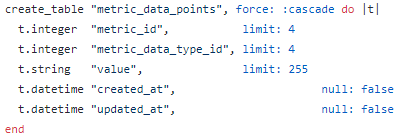

====Schema:====

[[File:metric_data_points_schema.png]]

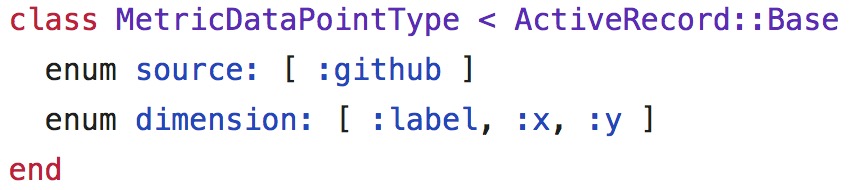

===<span style="color: blue">Metric_data_point_types</span>===

====Model:====

[[File:metric_data_point_types_model.jpg]]

====Schema:====

[[File:metric_data_points_schema.png]]

The current framework defines only the schema: the models are still empty, and the data parser methods have not been implemented yet.

This schema is a good design because it follows the open/closed principle ("open to extension, closed to modification"). When a new kind of data is added to the database, developers do not have to change the metric_data_point_types and metric_data_points tables; they only need to add two methods that convert the data type to and from a string. From browsing the code, the most basic types already have such methods, which meets our requirements. The design is not flawless, however, and we discuss its problems in the next section.

In addition, GitHub is currently our only data source, so we also need to find other data sources that can serve as grading metrics.

==Analysis of the Problem==

<li>The metric_data_points model declares that each metric_data_point belongs to a metric and a metric_data_point_type. However, neither the metric model nor the metric_data_point_type model declares has_many :metric_data_points, so we cannot query all the metric_data_points of a metric or of a metric_data_point_type.</li>

<li>In the metric_data_points schema, value is defined as a string to accommodate different data types. This forces the program to convert data to strings when storing it and to parse the strings back when reading it. Worse, storing values such as Float or Time as strings either loses precision or takes more storage space than the native type.</li>

<li>More external data sources are needed.</li>
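The precision and space costs of a string-typed value column can be seen in plain Ruby. This small demonstration (not Expertiza code) round-trips a Time and a Float through strings: Time#to_s drops fractional seconds, while Float#to_s round-trips exactly but needs more bytes than the 8-byte native double.

```ruby
require "time"

# A Time value with a fractional-second part (123456 microseconds).
original_time = Time.at(1_510_800_360, 123_456)

# Round-trip it through a String, as a string-typed `value` column would.
stored_time = original_time.to_s      # Time#to_s omits fractional seconds
restored_time = Time.parse(stored_time)

puts restored_time == original_time            # false: the microseconds are gone
puts restored_time.to_i == original_time.to_i  # true: only whole seconds survive

# A Float survives the round trip in Ruby, but its text form is longer
# than the 8 bytes of a native double.
original_float = 0.1 + 0.2
stored_float = original_float.to_s    # "0.30000000000000004"
puts stored_float.to_f == original_float       # true
puts stored_float.bytesize                     # 19
```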

==Proposed Design==

==='''Current source: GitHub'''===

Our first and current source for grading metrics is GitHub: Zach and Tyler have implemented an integration with the GitHub API that fetches commit data. However, the integration is still at an early stage. The app can fetch the data and store it in the database, but there is not yet any implementation that extracts the valid data or uses it as a grading metric.

From the GitHub API, we can fetch commit information that includes the number of additions and deletions made by each contributor to the repository. We can use these numbers directly to measure contributions, or combine them in a formula to compute an impact factor that represents each group member's contribution.
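A minimal sketch of the simplest option above, treating each contributor's share of all added plus deleted lines as the contribution. It assumes the response shape of GitHub's contributor statistics endpoint (GET /repos/{owner}/{repo}/stats/contributors), which reports weekly additions (a), deletions (d), and commits (c) per author; the sample data and method names are made up, and the existing Expertiza integration may use a different endpoint.

```ruby
require "json"

# Made-up sample in the shape returned by GitHub's
# GET /repos/{owner}/{repo}/stats/contributors endpoint:
# one entry per author, with weekly additions (a), deletions (d) and commits (c).
SAMPLE_RESPONSE = <<~SAMPLE
  [
    {"author": {"login": "alice"}, "total": 5,
     "weeks": [{"w": 1509840000, "a": 300, "d": 50, "c": 3},
               {"w": 1510444800, "a": 100, "d": 50, "c": 2}]},
    {"author": {"login": "bob"}, "total": 2,
     "weeks": [{"w": 1510444800, "a": 80, "d": 20, "c": 2}]}
  ]
SAMPLE

# Hypothetical helper: sum additions and deletions per contributor.
def line_changes_by_author(contributors)
  contributors.each_with_object({}) do |entry, totals|
    login = entry.dig("author", "login")
    totals[login] = entry["weeks"].sum { |week| week["a"] + week["d"] }
  end
end

# Hypothetical helper: each contributor's changed lines as a fraction of the team total.
def contribution_shares(changes)
  team_total = changes.values.sum
  return changes.transform_values { 0.0 } if team_total.zero?

  changes.transform_values { |lines| lines.fdiv(team_total) }
end

changes = line_changes_by_author(JSON.parse(SAMPLE_RESPONSE))
shares = contribution_shares(changes)
p changes  # alice: 500, bob: 100
p shares   # alice: about 0.833, bob: about 0.167
```

A plain line-count share is only a starting point; the weighting in an impact-factor formula is still an open design decision.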

==='''Proposed sources for metrics'''===

===='''1. [https://www.webpagefx.com/tools/read-able/ Readability]'''====

Sometimes students only have a project write-up to submit (for example, at this stage of the final project), so there may be no GitHub commits whose additions and deletions we could count. For such cases, we introduce several formulas that calculate the readability of the write-ups as a grading metric.

The readability indices include:

====='''Flesch Kincaid Reading Ease'''=====

This index uses a 0-100 scale: a high score means the text is easy to read, while a low score suggests the text is difficult to understand.

206.835 - 1.015 x (words/sentences) - 84.6 x (syllables/words)

A value between 60 and 80 should be easy for a 12- to 15-year-old to understand.

====='''Flesch Kincaid Grade Level'''=====

0.39 x (words/sentences) + 11.8 x (syllables/words) - 15.59

====='''Gunning Fog Score'''=====

0.4 x ( (words/sentences) + 100 x (complexWords/words) )

====='''SMOG Index'''=====

1.0430 x sqrt( 30 x complexWords/sentences ) + 3.1291

====='''Coleman Liau Index'''=====

5.89 x (characters/words) - 0.3 x (100 x sentences/words) - 15.8

Here 100 x sentences/words is the average number of sentences per 100 words.

====='''Automated Readability Index (ARI)'''=====

4.71 x (characters/words) + 0.5 x (words/sentences) - 21.43
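The formulas above translate directly into code. The following Ruby sketch takes the counts as input; the TextStats struct and the method names are hypothetical, the Coleman-Liau term uses sentences per 100 words, and the counts in the usage lines are invented for illustration.

```ruby
# Counts taken from a write-up; all counts are assumed to be positive.
TextStats = Struct.new(:sentences, :words, :syllables, :characters, :complex_words,
                       keyword_init: true)

def flesch_reading_ease(s)
  206.835 - 1.015 * s.words.fdiv(s.sentences) - 84.6 * s.syllables.fdiv(s.words)
end

def flesch_kincaid_grade(s)
  0.39 * s.words.fdiv(s.sentences) + 11.8 * s.syllables.fdiv(s.words) - 15.59
end

def gunning_fog(s)
  0.4 * (s.words.fdiv(s.sentences) + 100 * s.complex_words.fdiv(s.words))
end

def smog_index(s)
  1.0430 * Math.sqrt(30 * s.complex_words.fdiv(s.sentences)) + 3.1291
end

def coleman_liau(s)
  # 100 * sentences / words = sentences per 100 words.
  5.89 * s.characters.fdiv(s.words) - 0.3 * (100 * s.sentences.fdiv(s.words)) - 15.8
end

def automated_readability_index(s)
  4.71 * s.characters.fdiv(s.words) + 0.5 * s.words.fdiv(s.sentences) - 21.43
end

stats = TextStats.new(sentences: 5, words: 100, syllables: 150,
                      characters: 450, complex_words: 10)

puts flesch_reading_ease(stats).round(1)          # 59.6
puts flesch_kincaid_grade(stats).round(1)         # 9.9
puts gunning_fog(stats).round(1)                  # 12.0
puts smog_index(stats).round(1)                   # 11.2
puts coleman_liau(stats).round(1)                 # 9.2
puts automated_readability_index(stats).round(1)  # 9.8
```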

To calculate these indices, we need to fetch the write-up and count its sentences, words, syllables, characters, and complex words (words with three or more syllables); we can then apply the formulas above to these counts to get the readability level.
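Counting the inputs is the harder part, since English syllables follow no exact rule. A minimal sketch, assuming a simple vowel-group heuristic for syllables and splitting sentences on ".", "!", and "?"; this is not the algorithm used by the Read-Able tool, and the method names are hypothetical.

```ruby
# Rough syllable heuristic: count groups of consecutive vowels, drop a silent
# final "e" (but not "-le"), and give every word at least one syllable.
def count_syllables(word)
  w = word.downcase.gsub(/[^a-z]/, "")
  return 0 if w.empty?

  groups = w.scan(/[aeiouy]+/).length
  groups -= 1 if groups > 1 && w.end_with?("e") && !w.end_with?("le")
  [groups, 1].max
end

# Counts needed by the readability formulas. Splitting on . ! ? is naive and
# will, for example, also split after abbreviations such as "e.g.".
def text_stats(text)
  sentences = text.split(/[.!?]+/).count { |s| s.match?(/[A-Za-z]/) }
  words = text.scan(/[A-Za-z']+/)
  syllables = words.map { |w| count_syllables(w) }
  {
    sentences: sentences,
    words: words.length,
    syllables: syllables.sum,
    characters: words.sum { |w| w.count("A-Za-z") },
    complex_words: syllables.count { |n| n >= 3 }
  }
end

sample = text_stats("The cat sat on the mat. Readability formulas are surprisingly helpful!")
p sample
```

A dictionary-based syllable counter would be more accurate, at the cost of an external word list.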

===='''2. [https://developers.trello.com/reference#tokens Trello]'''====

Trello is a web-based project management application. By adding cards and writing to-do lists on them, students can see which tasks have been completed, which are in progress, and which have not started yet; instructors can follow how students work by looking at these cards and lists.

Trello is useful for both coding and writing projects: from its activity records we can calculate each group member's share of the workload, and this result can serve as a grading metric.

For example, suppose a group's to-do list contains eight tasks. Student A finishes two tasks, Student B finishes one, and Student C finishes one as well. Using the project activity fetched from the Trello REST API, we can calculate the percentage of finished tasks (4 of 8, or 50%) and each student's share of the finished tasks (50%, 25%, and 25%, respectively).
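The arithmetic in this example can be sketched in Ruby. The Card struct, and the assumption that each finished card has already been attributed to one student, are hypothetical; the Trello REST API exposes boards, lists, cards, and actions, and mapping them into this shape is left out.

```ruby
# Hypothetical card record, assumed to be already extracted from Trello data.
Card = Struct.new(:name, :done, :completed_by)

# Fraction of all cards that are finished.
def completion_rate(cards)
  return 0.0 if cards.empty?

  cards.count(&:done).fdiv(cards.length)
end

# Each student's share of the finished cards.
def task_shares(cards)
  finished = cards.select(&:done)
  return {} if finished.empty?

  finished.group_by(&:completed_by)
          .transform_values { |done_cards| done_cards.length.fdiv(finished.length) }
end

finished_cards = [
  Card.new("Write intro", true, "A"),
  Card.new("Design schema", true, "A"),
  Card.new("Add Trello parser", true, "B"),
  Card.new("Write tests", true, "C")
]
open_cards = (1..4).map { |i| Card.new("Open task #{i}", false, nil) }
cards = finished_cards + open_cards

puts completion_rate(cards)  # 0.5
p task_shares(cards)         # A: 0.5, B: 0.25, C: 0.25
```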

Trello data may be a good grading metric because contributions cannot be judged merely by counting additions and deletions. Suppose one student spends an hour re-indenting every file in the project, while another spends days or even weeks working out a complicated algorithm that produces the correct answer: the first student may well show more changed lines, even though the second contributed more.

Trello data, in contrast, offers a different perspective for the grading rubric: it measures contributions by counting how many tasks each student completed. It can also serve as a grading metric for both coding and writing projects, because only the to-do lists matter.

==='''Model Improvement'''===

1. Since each metric and each metric_data_point_type can have many metric_data_points, we need to add has_many :metric_data_points to both models.

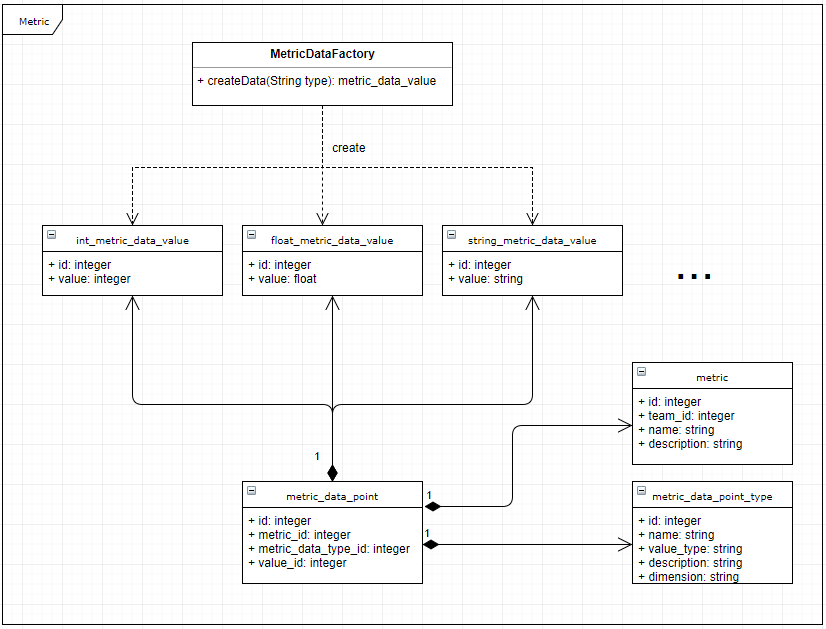

To store each data type in its native form, we can create a separate metric_data_value table for each data type. We then replace the value field of the metric_data_points table with a value_id field, which identifies the row in the corresponding metric_data_value table.

When storing data, we can create the new value using the <span style="font-weight:bold">factory pattern</span>, as shown in the figure below, where a string parameter “type” specifies which type of data to create. Each combination of metric and metric_data_point_type can have only one metric_data_point, so if one already exists, the new data replaces the old. In effect, each metric_data_point_type row represents one field of a metric: if two or more metric_data_point_type rows have the same value_type, their names must differ. Each metric_data_point_type row therefore acts like a column header of a table, which makes the database more extensible.
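A minimal in-memory sketch of the storage path, assuming one Ruby class per metric_data_value table. The factory picks the class from the “type” string, and writing a second value for the same (metric, metric_data_point_type) pair reuses the existing value id and replaces the value. All class and method names here (MetricDataValueFactory, MetricStore, and so on) are hypothetical; in the real app these would be ActiveRecord models.

```ruby
require "time"

# Hypothetical value models, one per metric_data_value table.
IntegerDataValue = Struct.new(:id, :value)
FloatDataValue   = Struct.new(:id, :value)
TimeDataValue    = Struct.new(:id, :value)

# Factory: the string "type" selects the value class and how to coerce input.
module MetricDataValueFactory
  BUILDERS = {
    "integer" => [IntegerDataValue, ->(raw) { Integer(raw) }],
    "float"   => [FloatDataValue,   ->(raw) { Float(raw) }],
    "time"    => [TimeDataValue,    ->(raw) { raw.is_a?(Time) ? raw : Time.parse(raw) }]
  }.freeze

  def self.create(type, id, raw)
    klass, coerce = BUILDERS.fetch(type) { raise ArgumentError, "unknown value type: #{type}" }
    klass.new(id, coerce.call(raw))
  end
end

# In-memory stand-in for metric_data_points: at most one value per
# (metric, metric_data_point_type) pair; writing again replaces the old value.
class MetricStore
  def initialize
    @points = {}  # [metric_id, point_type_id] => value object
    @next_id = 0
  end

  def write(metric_id, point_type_id, value_type, raw)
    key = [metric_id, point_type_id]
    id = @points.key?(key) ? @points[key].id : (@next_id += 1)
    @points[key] = MetricDataValueFactory.create(value_type, id, raw)
  end

  def read(metric_id, point_type_id)
    @points[[metric_id, point_type_id]]
  end

  def size
    @points.size
  end
end

store = MetricStore.new
store.write(1, 10, "integer", "42")
store.write(1, 10, "integer", 57)    # same metric and type: replaces 42
store.write(1, 11, "float", "0.75")
p store.read(1, 10)  # id 1, value 57
p store.size         # 2
```

Keeping the type-to-class mapping in one hash means a new value type needs one new entry and one new value class, and no change to the storing code.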

To query the data, we first use the has_many relationship between metric and metric_data_point to get all of the metric's metric_data_points. For each data point, we then select the metric_data_value table from the value_type field of its metric_data_point_type, and finally use the data point's value_id to look up the value in that table.
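The three-step query can be sketched with plain Ruby structs and hashes standing in for the tables; data_points_for plays the role of the has_many association. The table contents and names are hypothetical.

```ruby
# Hypothetical in-memory stand-ins for the tables in the proposed design.
MetricDataPointType = Struct.new(:id, :name, :value_type, :dimension)
MetricDataPoint     = Struct.new(:id, :metric_id, :metric_data_point_type_id, :value_id)

POINT_TYPES = {
  1 => MetricDataPointType.new(1, "additions", "integer", 1),
  2 => MetricDataPointType.new(2, "readability", "float", 1)
}.freeze

DATA_POINTS = [
  MetricDataPoint.new(1, 7, 1, 100),
  MetricDataPoint.new(2, 7, 2, 5),
  MetricDataPoint.new(3, 8, 1, 101)
].freeze

# One value table per data type: id => native value.
VALUE_TABLES = {
  "integer" => { 100 => 350, 101 => 12 },
  "float"   => { 5 => 59.6 }
}.freeze

# Plays the role of metric.metric_data_points (the has_many association).
def data_points_for(metric_id)
  DATA_POINTS.select { |point| point.metric_id == metric_id }
end

# Metric -> data points -> point type -> value table -> value.
def values_for(metric_id)
  data_points_for(metric_id).map do |point|
    type = POINT_TYPES.fetch(point.metric_data_point_type_id)
    table = VALUE_TABLES.fetch(type.value_type)
    [type.name, table.fetch(point.value_id)]
  end.to_h
end

p values_for(7)  # additions: 350, readability: 59.6
```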

[[File:Metric_data_factory.png]]

This design solves the problem discussed above: each value is stored in its original data type, so we no longer need to convert data to and from strings, which can lose precision or waste space.

This design also follows the open/closed principle: whenever we need a new data type, we do not modify the existing schema; we only add a new model and its table.

For example, even if the new data type is an array, we can use the dimension field of metric_data_point_type to specify the length of the array and the value_id field of metric_data_point to specify the id of its first element. Assuming the elements are stored with consecutive ids, the start id and the dimension then identify all the elements of the array in the corresponding metric_data_value table.
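A sketch of the array lookup, assuming the elements were inserted with consecutive ids into a hypothetical float value table.

```ruby
# Hypothetical float value table; ids 20-22 hold one three-element array.
FLOAT_VALUES = { 20 => 0.5, 21 => 0.25, 22 => 0.25, 23 => 9.9 }.freeze

# value_id gives the id of the first element; dimension gives the length.
def read_array(table, start_id, dimension)
  (start_id...(start_id + dimension)).map { |id| table.fetch(id) }
end

p read_array(FLOAT_VALUES, 20, 3)  # [0.5, 0.25, 0.25]
```

With ActiveRecord, the same lookup could be a range condition such as where(id: start_id...(start_id + dimension)) on the corresponding value model. Databases do not guarantee consecutive ids when inserts from different requests interleave, so the code that writes an array would have to ensure its rows get consecutive ids.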

This design has drawbacks too. First, the logic becomes more complex and harder for programmers to understand. Second, each data-type table must store an id for every value, which costs extra space. We expect this overhead to be smaller than that of string storage and frequent type conversion.

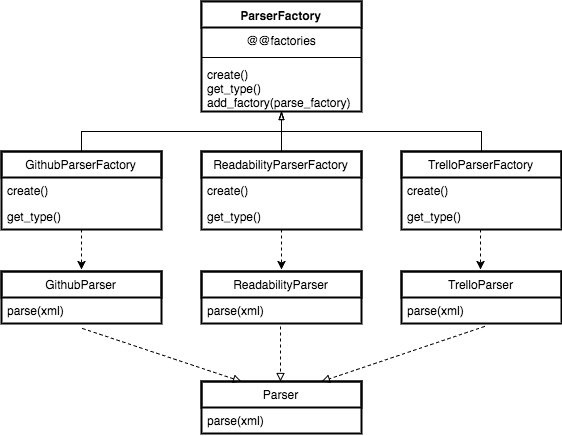

2. We also need to implement the data parser methods, which will live in the data source model classes. Ruby's duck typing gives us polymorphism: when the application receives a data source object, it can invoke the parser method without knowing which kind of data source it is. This keeps the calling code closed to modification while leaving the set of data sources open to extension.
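A minimal sketch of this duck typing, with hypothetical source classes that share no superclass and only agree on a parse method returning a hash of metric field names to values.

```ruby
# Hypothetical data source classes; neither inherits from a common base.
class GithubSource
  def initialize(commits)
    @commits = commits
  end

  def parse
    { "additions" => @commits.sum { |c| c[:additions] },
      "deletions" => @commits.sum { |c| c[:deletions] } }
  end
end

class TrelloSource
  def initialize(cards)
    @cards = cards
  end

  def parse
    { "finished_tasks" => @cards.count { |c| c[:done] },
      "total_tasks" => @cards.length }
  end
end

# The caller relies only on #parse, so adding a new source class
# requires no change here.
def collect_metrics(sources)
  sources.map(&:parse)
end

sources = [
  GithubSource.new([{ additions: 30, deletions: 5 }, { additions: 10, deletions: 1 }]),
  TrelloSource.new([{ done: true }, { done: false }])
]
p collect_metrics(sources)  # additions 40, deletions 6; 1 of 2 tasks finished
```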

==Design Schema==

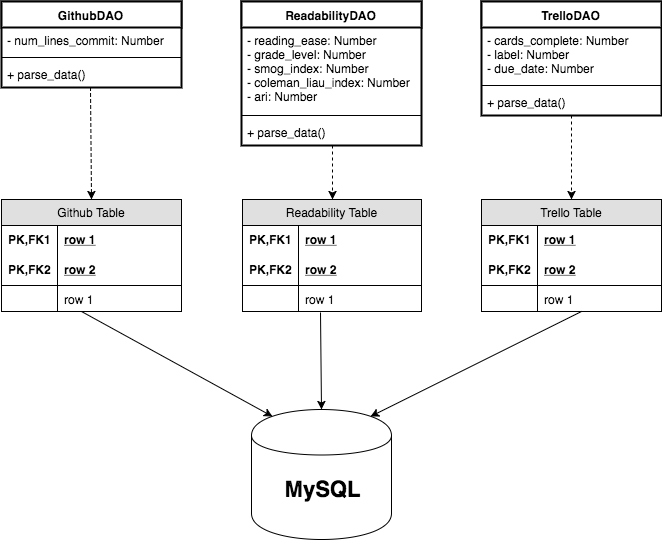

[[File: CSC517_Design_Schema_factory.png]]

To implement the parsers, we plan to use a factory pattern, as shown in the diagram above, which allows new metric sources to be added later. Every parser class defines a parse method with the same signature, so duck typing works without a superclass or interface. The caller can execute the parse method without knowing which parser the factory created, so the caller code is closed to modification and the design follows the open/closed principle.
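One way to keep the factory itself closed to modification is a registry: each parser class registers under a source name, and the factory looks it up. This is a sketch, not the design in the diagram; GithubParser, ReadabilityParser, and ParserFactory.register are hypothetical names, and the parse bodies are placeholders.

```ruby
# Hypothetical parser factory backed by a registry of source name => class.
module ParserFactory
  @registry = {}

  def self.register(source_name, parser_class)
    @registry[source_name] = parser_class
  end

  def self.create(source_name)
    parser_class = @registry.fetch(source_name) do
      raise ArgumentError, "no parser registered for #{source_name}"
    end
    parser_class.new
  end
end

class GithubParser
  def parse(input)
    { source: "github", lines_changed: input.fetch(:additions) + input.fetch(:deletions) }
  end
end
ParserFactory.register("github", GithubParser)

class ReadabilityParser
  def parse(input)
    { source: "readability", words: input.fetch(:text).split.length }
  end
end
ParserFactory.register("readability", ReadabilityParser)

# Caller code: the same call works for any registered parser.
def run_parser(source_name, input)
  ParserFactory.create(source_name).parse(input)
end

p run_parser("github", { additions: 40, deletions: 6 })
p run_parser("readability", { text: "Short and simple text." })
```

A case statement inside the factory would also work, but it would have to be edited for every new source; with a registry, adding a Trello parser means adding a class and one register call.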

[[File:CSC517_Design_Schema_database.png]]

Each parser parses the given data source, uses the metric data factory to create a model for each piece of data, and then uses these data models to create the metric model.

==Test Plan==

<ol>

<li>Given a valid GitHub URL, the GitHub parser correctly fetches all the data.

<li>Given a valid URL of a write-up, the Readability parser correctly fetches all the data.

<li>Given a valid Trello URL, the Trello parser correctly fetches all the data.

<li>Test that the parser correctly creates a metric_data_point_type if it does not exist.

<li>Test that the parser creates the correct type of metric_data_value model, according to the type specified by the metric_data_point_type.

<li>Test that the parser correctly creates metric_data_value models if they do not exist.

<li>Test that the parser correctly updates metric_data_value models if they already exist.

<li>Test that the values stored in the metric_data_value tables can be correctly queried through a given metric.

</ol>

==References==

#[https://www.webpagefx.com/tools/read-able/ Read-Able readability test tool (WebpageFX)]

#[https://raventools.com/blog/ultimate-list-of-online-content-readability-tests/ Ultimate list of online content readability tests (Raven Tools)]

#[https://trello.com/ Trello]

#[https://developers.trello.com/reference#tokens Trello developer API reference: tokens]

Latest revision as of 21:46, 15 November 2017
