CSC/ECE 517 Fall 2017/M1754 Mutation Testing on Servo

Latest revision as of 23:47, 2 November 2017

Servo uses the Web Platform Test (WPT) suite for testing, but does not perform an evaluation of the breadth of the tests. The goal of this project is to use techniques from mutation testing to evaluate the performance of the WPT suite when bugs are deliberately introduced into the code base.

This project was implemented as a set of Python scripts that modify the source code to generate mutants and then run tests on them, expecting failures. The scripts temporarily modify the source code, call the corresponding tests, and revert to the original code by reversing the changes made earlier. This process was repeated for multiple iterations, modifying various parts of the source code in random order.

Introduction

Servo

Servo is a modern, high-performance browser engine designed for both application and embedded use. It is a web browser layout engine written in Rust and is currently being developed by Mozilla. The aim of the project is not to create a full browser but rather to create a highly parallel environment that allows many components to be handled by fine-grained, isolated tasks. [1]

Rust

Rust is an open source systems programming language sponsored by Mozilla Research. Rust performs the majority of its safety checks and memory management decisions at compile time, so that a program's runtime performance is not impacted. This makes it useful for programs with predictable space and time requirements, for embedding in other languages, and for writing low-level code such as device drivers and operating systems.

Web-platform-tests

The web-platform-tests Project is a W3C-coordinated attempt to build a cross-browser test suite for the Web-platform stack. Writing tests in a way that allows them to be run in all browsers gives browser projects confidence that they are shipping software that is compatible with other implementations, and that later implementations will be compatible with their implementations. This, in turn, gives Web authors/developers confidence that they can actually rely on the Web platform to deliver on the promise of working across browsers and devices without needing extra layers of abstraction to paper over the gaps left by specification editors and implementors.

Mutation Testing

Mutation testing is a type of software testing in which we mutate (change) certain statements in the source code and check whether the test cases are able to find the errors. The goal of mutation testing is to assess the quality of the test cases, which should be robust enough to fail on mutant code. This method is also called a fault-based testing strategy, as it involves creating faults in the program. Faults are introduced into the source code by creating many versions of the program, called mutants. Each mutant should contain a single fault, and the goal is to cause the mutant version to fail, which demonstrates the effectiveness of the test cases. [2]

Project description

Environment Setup

- Clone the repository from https://github.com/dsandeephegde/servo

- The steps to set up the environment for each OS are given in the README of the Servo repository: https://github.com/servo/servo

Install packages

For example, on OS X (Homebrew):

brew install automake pkg-config python cmake yasm

pip install virtualenv

Running test after setup

After the setup, make sure that everything works on your machine by building Servo and running the tests.

Build in release mode:

./mach build --release

Run the WPT tests:

./mach test-wpt --release

Implemented steps

The approach adopted was based on the requirements mentioned here. The steps below implement the initial steps from the project description.

- Step 1: A Python script was written to mutate one source file, and the corresponding WPT was run on it to check whether the mutant was killed.

- Step 2: A test framework was defined to identify the source files that require mutation testing along with their corresponding WPTs. This framework is implemented in /servo/components/script/dom.

- Step 3: The script was expanded to include the test framework and to automate generating mutations for multiple source files and running their corresponding WPTs based on test_mapping.json. The script also traverses the subfolders of the parsed path to check for the .json file, and it logs to the terminal any mutants that the WPT failed to kill.

- Step 4: Integrated the script so that it can be invoked from the CI tool.

Execution

- The mutation test can be run using either of the following commands from the servo directory:

python python/servo/mutation/init.py components/script/dom

or

python etc/ci/mutation_test.py

Execution Flow

Given a path:

- mutation test(path)
  - Look for the test mapping file in that path
  - For each file in the test mapping
    - Mutate a random line in the file using some strategy
    - Build the project and run the WPT tests corresponding to it
    - If the WPT tests pass
      - Report mutation test failure
    - Else
      - Report mutation test success
  - For each subfolder in the path
    - mutation test(subfolder)
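The flow above can be sketched in Python roughly as follows. This is an illustrative sketch, not the project's actual script: the function name `mutation_test` and the three callbacks (`mutate`, `run_tests`, `restore`) are hypothetical, and the layout of test_mapping.json (a JSON object mapping a source file name to a list of WPT paths) is an assumption. Passing the build-and-test step in as a callback keeps the traversal logic separate from the slow Servo build.

```python
import json
import os


def mutation_test(path, mutate, run_tests, restore):
    """Walk `path` recursively and return (killed, survived) mutant counts.

    mutate(source_file)  -> applies one mutation to the file (hypothetical helper)
    run_tests(wpt_paths) -> True if the build and all listed WPT tests pass
    restore(source_file) -> puts the original source back
    """
    killed = survived = 0
    mapping_path = os.path.join(path, "test_mapping.json")
    if os.path.isfile(mapping_path):
        # Assumed layout: {"file.rs": ["wpt/path", ...], ...}
        with open(mapping_path) as mapping_file:
            mapping = json.load(mapping_file)
        for file_name, wpt_paths in mapping.items():
            source_file = os.path.join(path, file_name)
            mutate(source_file)
            try:
                if run_tests(wpt_paths):
                    # Tests still pass, so the mutant survived.
                    survived += 1
                    print("Mutation test failed: mutant survived in " + source_file)
                else:
                    killed += 1
                    print("Mutation test succeeded: mutant killed in " + source_file)
            finally:
                # Always revert, even if the build or test run raises.
                restore(source_file)
    # Recurse into subfolders, each of which may carry its own mapping file.
    for entry in sorted(os.listdir(path)):
        subfolder = os.path.join(path, entry)
        if os.path.isdir(subfolder):
            sub_killed, sub_survived = mutation_test(subfolder, mutate, run_tests, restore)
            killed += sub_killed
            survived += sub_survived
    return killed, survived
```

Note that a passing test run counts as a mutation test failure, because it means the tests did not detect the injected fault.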

Output

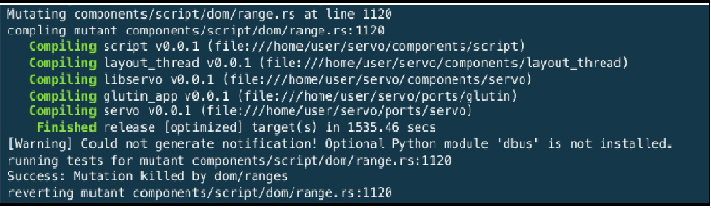

The log for a mutant that was successfully killed is shown below:

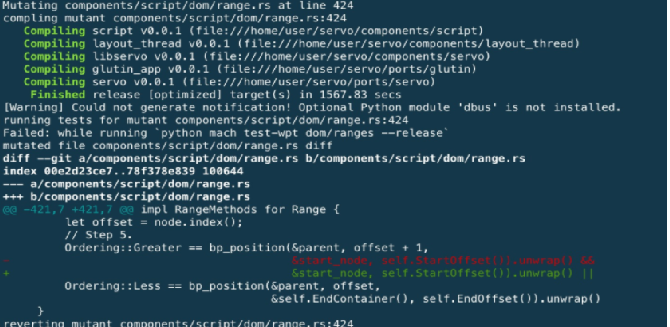

The log for a mutant that the tests failed to kill is shown below:

Testing

The project consists of a Python script that changes the source code and runs tests. It does not add any functionality to Servo, so there is no scope for testing in this project.

Subsequent steps

The following steps will be taken next to meet the project requirements as per this [3].

- implement mutations such as replacing if statements by if true/if false, duplicating statements, reordering statements, and changing arithmetic and atomic string constants.

- improve the performance of the testing, for example by randomizing the test order, fast-failing, and running tests with faster builds (e.g. ./mach build -d).

- find heuristics for identifying false positives, that is mutations which are expected to have no effect, for example removing logging.

- find search heuristics for identifying mutations that cause no test failures.
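As an illustration of the first planned mutation (replacing an if condition by if true or if false), a line-based strategy could look something like the sketch below. The helper name `mutate_if_condition` and the regular expression are hypothetical and are not taken from the project. The sketch assumes the whole `if <condition> {` header sits on one line and that the condition contains no `{`. It skips `if let`, since swapping its pattern for a literal would not compile.

```python
import re

# Matches a Rust `if <condition> {` header on a single line.
# The lookahead skips `if let ...`, which cannot take a boolean literal.
IF_HEADER = re.compile(r"\bif(?!\s+let\b)\s+[^{]+\{")


def mutate_if_condition(line, replacement="true"):
    """Return `line` with its first `if` condition replaced, or None if none is found."""
    mutated, count = IF_HEADER.subn("if " + replacement + " {", line, count=1)
    return mutated if count else None
```

For example, `mutate_if_condition("    if a && b {")` yields `"    if true {"`, while a line without an `if` header yields `None` so the caller can pick another line.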

Challenges

1. Setting up the environment on the local machine:

- Building Servo and running the WPT tests on the local machine took a long time because of limited machine memory. We also ran into intermittent WPT test failures. We expect any reviewer to face a similar challenge.

We overcame this challenge by discussing the possibilities with the Mozilla team, who suggested that we use Janitor Technology. The Servo image there is already set up with the latest code, and we can edit the code in Cloud9 and execute it. Using this platform for our testing made the project independent of the environment on the local machine and of any hassle associated with it.

2. Defining the test mapping framework:

- Servo consists of multiple components, and many WPT tests are written for each component. The test cases are organized according to the functionality they test, whereas the Servo source code is organized according to the specific components. Because of the large number of source files and WPT tests in the project, it was difficult for one individual to know the mapping of components to their corresponding WPTs.

Hence, we needed a mapping to run the corresponding tests for a source file. The mapping framework enabled us to run tests on a few components and provides a base that other members of the Mozilla team can extend and replicate as per their requirements. We still need to find out whether the mapping can be populated in an automated way.

3. The extent of mutation testing to be performed:

- There are standard techniques that define what a mutant is, for example replacing '&&' with '||'. However, there is no specification for the number of replacements that must be made within a single source file, nor for the permutations of possible mutations for each technique. This is an ambiguity in the project. Our initial implementation replaced all instances of '&&' with '||' to generate a single type of mutant for each source file. Our current implementation instead mutates random lines using a strategy. The code needs to evolve based on how it performs, and there is no predefined answer for this.
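A minimal sketch of the "mutate a random line" approach, using the '&&' to '||' replacement as the strategy, is shown below. The function names `mutate_random_line` and `restore`, and the use of a `.orig` backup file to revert the change, are assumptions made for illustration. The source does not say how the actual script reverts its changes.

```python
import random
import shutil


def mutate_random_line(file_path, rng=random):
    """Replace '&&' with '||' on one randomly chosen line.

    Returns the 1-based number of the mutated line, or None if the file
    contains no '&&' and was left untouched.
    """
    with open(file_path) as source:
        lines = source.readlines()
    candidates = [i for i, line in enumerate(lines) if "&&" in line]
    if not candidates:
        return None
    index = rng.choice(candidates)
    # Keep a backup so the mutation can be reverted after the tests run.
    shutil.copyfile(file_path, file_path + ".orig")
    # Mutate only the first occurrence so the mutant contains a single fault.
    lines[index] = lines[index].replace("&&", "||", 1)
    with open(file_path, "w") as source:
        source.writelines(lines)
    return index + 1


def restore(file_path):
    """Put the original file back from the backup made by mutate_random_line."""
    shutil.move(file_path + ".orig", file_path)
```

This sketch does not distinguish code from comments or string literals, so a mutation that lands in a comment would produce a mutant that no test can kill. Filtering out such lines is one of the false-positive heuristics a fuller implementation would need.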

Pull Request

Here is our pull request link.

References

1. https://en.wikipedia.org/wiki/Servo_(layout_engine)

2. https://www.guru99.com/mutation-testing.html

3. https://github.com/servo/servo

4. https://github.com/servo/servo/wiki/Mutation-testing-project