CSC/ECE 506 Fall 2007/wiki4 2 helperThreads: Difference between revisions

No edit summary |

No edit summary |

||

| (3 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

'''What is a helper thread?''' | '''What is a helper thread?''' | ||

The potential of chip multiprocessors (CMPs) | It has been experienced that enhancing the speed of microprocessors is becoming an increasingly difficult | ||

divided into semi - independent parts, or threads that can operate simultaneously across the processors | task. It is because of various reasons of which few are stated below: | ||

within a system. The simplest way to use parallel threads within a CMP to increase the performance of a | |||

single thread is to have helper threads. A 'helper' thread performs work on behalf of main thread in an | 1) Typical instruction streams have only limited parallelism. So a superscalar pipeline which can issue more than four instruction per cycle is of little benefit compared to the cost it incurs. | ||

effort to accelerate its performance. | |||

2) As the depth of the pipeline increases, the cost of pipeline flushes during branch mispredictions becomes intolerable. | |||

3) As the speed and number of transistors on chip increase, the power required to drive complex logic circuits at high clock rate also increases beyond tolerable limit. | |||

So, processor manufacturers are switching to a new design paradigm: the chip microprocessors, which is also known as multicore microprocessors or manycore microprocessors. As the name implies, a chip multiprocessor is simply a group of uniprocessors integrated onto the same processor chip so that they may act as a team, filling the area that would have originally been filled with a single large processors with several smaller cores. | |||

The depth of the pipeline of the uniprocessor cores used will be just enough to exploit the limited instruction level parallelism present in programs inorder to keep power utilization low.So to better exploit the potential of chip multiprocessors (CMPs)the applications are divided into semi - independent parts, or threads that can operate simultaneously across the processors within a system. | |||

The simplest way to use parallel threads within a CMP to increase the performance of a single thread is to have helper threads. A 'helper' thread performs work on behalf of main thread in an effort to accelerate its performance. Helper threads run ahead of main thread because all the instructions that are not absolutely necessary for performing the function that the helper thread is meant for is removed from the program that the helper thread executes. Hence they can accuarately predict branches, start the long latency operations early, prefect the data needed by the main thread etc which are explained in detail below. | |||

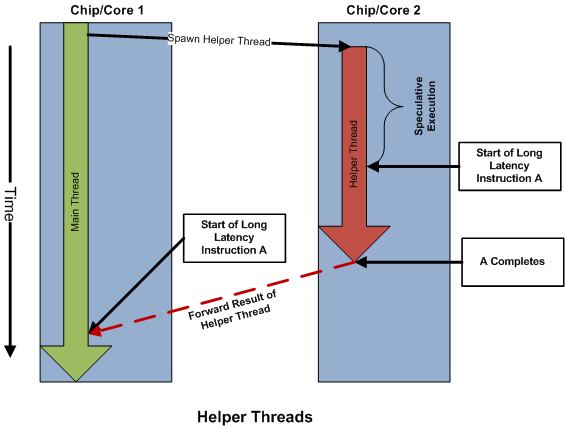

The following figure shows the main thread and helper thread in a two core chip multiprocessor.Main thread spawns the helper thread. Helper thread has the instructions that form a subset of the instructions in the main thread. As a result helper thread executes less instructions than the main thread and hence effectively executes ahead of main thread. As a result, it can start a long latency operations early and provide the result to the main thread by the time main thread starts executing that long latency operation. | |||

[[Image:Helper threads.png]] | [[Image:Helper threads.png]] | ||

| Line 16: | Line 28: | ||

'''Predicting branch at early stages:''' | '''Predicting branch at early stages:''' | ||

As mentioned earlier, helper threads are made up of program copies of the main thread stripped of all unessential parts which are not of absolute necessity for the helper thread to achieve it tasks. Hence helper threads runs ahead of main thread. Hence helper threads can do computations that are necessary to decide the direction of the branch. As the branch direction is decided using the result of actual computation and not just prediction, the branch predictor will be trained in favour to the actual branch direction taken by the helper thread. So, branch predictions will be more accuarate. This will inturn remove branch mispredictions which may occur when branch predictions are used. | |||

which are not of absolute necessity for the helper thread to achieve it tasks. Hence helper threads runs | |||

computations that are necessary to decide the direction of the branch. This will inturn remove branch | |||

mispredictions which may occur when branch predictions are used. | |||

'''Prefetching of Data:''' | '''Prefetching of Data:''' | ||

Since helper threads run ahead of main thread, they can predict | Since helper threads run ahead of main thread, they can predict which data in memory is needed in | ||

future by the main thread.they prefetch the data required by the main thread. The helper thread prefetches | future by the main thread.they prefetch the data required by the main thread. The helper thread prefetches | ||

the data and places it in the nearest cache accessible to the main thread. This avoids most of the L1 cache | the data and places it in the nearest cache accessible to the main thread. This avoids most of the L1 cache | ||

| Line 51: | Line 59: | ||

The speculative reduced program is called A-stream (Advanced Stream) and runs ahead of the unreduced program (main thread or Redundant stream).The R-stream exploits the A-stream to get an accurate picture of the future. For example, the A-stream | The speculative reduced program is called A-stream (Advanced Stream) and runs ahead of the unreduced program (main thread or Redundant stream).The R-stream exploits the A-stream to get an accurate picture of the future. For example, the A-stream | ||

provides very accurate branch and value predictions. The predictions are more accurate than predictions made by conventional history-based predictors because they are produced by future program computation. | provides very accurate branch and value predictions. The predictions are more accurate than predictions made by conventional history-based predictors because they are produced by future program computation. speculative A-stream occasionally (but | ||

infrequently) goes astray. The R-stream serves as a checker of the speculative A-stream and redirects it when needed. | infrequently) goes astray. The R-stream serves as a checker of the speculative A-stream and redirects it when needed. | ||

Synchronization between an R-stream and its corresponding A-stream is required for two reasons.First, an A-stream that has taken a completely wrong control path and is generating useless data access predictions has to be | Synchronization between an R-stream and its corresponding A-stream is required for two reasons.First, an A-stream that has taken a completely wrong control path and is generating useless data access predictions has to be corrected. Second, limit how far the A-stream gets ahead, so that its prefetches are not issued so early that the prefetched lines are often replaced or invalidated in the L2 cache before the R-stream uses them. A single semaphore is required between each A-stream/ | ||

R-stream pair to control their synchronization. It can be a shared hardware register, but any shared location that supports an atomic read-modify-write operation is sufficient. Using this semaphore, the following are controlled<br/> | R-stream pair to control their synchronization. It can be a shared hardware register, but any shared location that supports an atomic read-modify-write operation is sufficient. Using this semaphore, the following are controlled<br/> | ||

(a) how many synchronization events the A-stream can skip without waiting for the | (a) how many synchronization events the A-stream can skip without waiting for the R-stream<br/> | ||

(b) whether the synchronization is local (involving only the companion R-stream) or global (involving all R-streams). The initial value of the semaphore indicates how many sessions the A-stream may proceed ahead of the Rstream. <br/> | (b) whether the synchronization is local (involving only the companion R-stream) or global (involving all R-streams). The initial value of the semaphore indicates how many sessions the A-stream may proceed ahead of the Rstream. <br/> | ||

This can be viewed as creating an initial pool of tokens that are consumed as the A-stream enters a new session. When there are no tokens, the | This can be viewed as creating an initial pool of tokens that are consumed as the A-stream enters a new session. When there are no tokens, the A-stream may not proceed. The R-stream issues tokens by incrementing the semaphore counter.Once the A thread gets the semaphore, A threads advances and gets past the R thread. | ||

'''Disadvantages of helper threads:''' | '''Disadvantages of helper threads:''' | ||

| Line 64: | Line 72: | ||

A very tight synchronisation is needed between the main thread and the helper threads in oder to keep them the proper distance ahead of main thread. If the helper threads are too far ahead, then they will cause cache thrashing by prefetching data and then replaing it with subsequent prefetches beofre the main thread can even use it. If they are not far enough ahead, they might not be able to prefetch cache lines in time. | A very tight synchronisation is needed between the main thread and the helper threads in oder to keep them the proper distance ahead of main thread. If the helper threads are too far ahead, then they will cause cache thrashing by prefetching data and then replaing it with subsequent prefetches beofre the main thread can even use it. If they are not far enough ahead, they might not be able to prefetch cache lines in time. | ||

Only certain types of single threaded programs can be | Only certain types of single threaded programs can be speeded up with these techniques. Most integer applications have regular data access patterns and hence there will be only few misses to eliminate. The applications with larger memory footprints are easily parellelisable and hence doesn't run on a single main thread. Most floating point applications the data access patterns are fairly regular and hence can be prefetched easily using hardware or software prefetch mechanisms. Hence the range of programs that can be accelerated using helper threads is fairly limited. | ||

'''References''' | '''References''' | ||

| Line 75: | Line 83: | ||

4)http://www.tinker.ncsu.edu/ericro/research/slipstream.htm | 4)http://www.tinker.ncsu.edu/ericro/research/slipstream.htm | ||

5)A Survey of Helper Threads And Their Implementation by Ahren Studer | |||

6)Design and Implementation of a Compiler Framework for Helper Threading on Multicore Processors http://pact05.ce.ucsc.edu/datacentric.pdf | |||

Latest revision as of 01:31, 4 December 2007

A helper thread is a thread that does some of the work of the main thread in advance of the main thread so that the main thread can work more quickly. The Olukotun text only scratches the surface on all the different ways that helper threads can be used. Survey these ways, making sure to include the Slipstream approach developed at NCSU.

What is a helper thread?

It has been experienced that enhancing the speed of microprocessors is becoming an increasingly difficult task. It is because of various reasons of which few are stated below:

1) Typical instruction streams have only limited parallelism. So a superscalar pipeline which can issue more than four instruction per cycle is of little benefit compared to the cost it incurs.

2) As the depth of the pipeline increases, the cost of pipeline flushes during branch mispredictions becomes intolerable.

3) As the speed and number of transistors on chip increase, the power required to drive complex logic circuits at high clock rate also increases beyond tolerable limit.

So, processor manufacturers are switching to a new design paradigm: the chip microprocessors, which is also known as multicore microprocessors or manycore microprocessors. As the name implies, a chip multiprocessor is simply a group of uniprocessors integrated onto the same processor chip so that they may act as a team, filling the area that would have originally been filled with a single large processors with several smaller cores.

The depth of the pipeline of the uniprocessor cores used will be just enough to exploit the limited instruction level parallelism present in programs inorder to keep power utilization low.So to better exploit the potential of chip multiprocessors (CMPs)the applications are divided into semi - independent parts, or threads that can operate simultaneously across the processors within a system.

The simplest way to use parallel threads within a CMP to increase the performance of a single thread is to have helper threads. A 'helper' thread performs work on behalf of main thread in an effort to accelerate its performance. Helper threads run ahead of main thread because all the instructions that are not absolutely necessary for performing the function that the helper thread is meant for is removed from the program that the helper thread executes. Hence they can accuarately predict branches, start the long latency operations early, prefect the data needed by the main thread etc which are explained in detail below.

The following figure shows the main thread and helper thread in a two core chip multiprocessor.Main thread spawns the helper thread. Helper thread has the instructions that form a subset of the instructions in the main thread. As a result helper thread executes less instructions than the main thread and hence effectively executes ahead of main thread. As a result, it can start a long latency operations early and provide the result to the main thread by the time main thread starts executing that long latency operation.

Uses of helper threads

Predicting branch at early stages:

As mentioned earlier, helper threads are made up of program copies of the main thread stripped of all unessential parts which are not of absolute necessity for the helper thread to achieve it tasks. Hence helper threads runs ahead of main thread. Hence helper threads can do computations that are necessary to decide the direction of the branch. As the branch direction is decided using the result of actual computation and not just prediction, the branch predictor will be trained in favour to the actual branch direction taken by the helper thread. So, branch predictions will be more accuarate. This will inturn remove branch mispredictions which may occur when branch predictions are used.

Prefetching of Data:

Since helper threads run ahead of main thread, they can predict which data in memory is needed in future by the main thread.they prefetch the data required by the main thread. The helper thread prefetches the data and places it in the nearest cache accessible to the main thread. This avoids most of the L1 cache misses that would have been encountered by the main thread had if helper thread was not present. As the memory latency is reduced, the execution of main thread speeds up.

Memory Bug Reduction:

Memory related bugs such as reads from unitialised memory, read or writes using dangling pointers or memory leaks are difficult to detect by code inspection because they may invoive different code fragments and exist in differnt modules or source code files. Compilers are of little help becuase it fails to disambiguate pointers. Hence, in practice memory bug detection relies on run time checkers that insert monitor code to the application during testing. In CMPs, the program which detects the memory bugs can run as a helper thread.

Ex: Heapmon, a memory bug checker monitors application heap space to detect heap memory bugs. The memory access events in the application thread are automatically forwarded to helper thread using appropriate hardware mechanisms. Also the redundant and unnecessary memory accessess are filtered out. Hence the helper thread approach completely decouples bug monitoring from appication execution and the filtering mechanism reduces bug - check frequency. Conseqently, HeapMon achieves a very low performance overhead.

Slipstream approach for helper threads:

Slipstream approach is developed at North Carolina State University. Slipstream execution provides a simple means to use a second processor in a CMP to accelerate a single program.Slipstream execution involves running two redundant copies of the program. A significant number of dynamic instructions are speculatively removed from one of the program copies, without sacrificing its ability to make correct forward progress. The second program verifies the forward progress of the first and is also sped up in the process.

The speculative reduced program is called A-stream (Advanced Stream) and runs ahead of the unreduced program (main thread or Redundant stream).The R-stream exploits the A-stream to get an accurate picture of the future. For example, the A-stream provides very accurate branch and value predictions. The predictions are more accurate than predictions made by conventional history-based predictors because they are produced by future program computation. speculative A-stream occasionally (but infrequently) goes astray. The R-stream serves as a checker of the speculative A-stream and redirects it when needed.

Synchronization between an R-stream and its corresponding A-stream is required for two reasons.First, an A-stream that has taken a completely wrong control path and is generating useless data access predictions has to be corrected. Second, limit how far the A-stream gets ahead, so that its prefetches are not issued so early that the prefetched lines are often replaced or invalidated in the L2 cache before the R-stream uses them. A single semaphore is required between each A-stream/

R-stream pair to control their synchronization. It can be a shared hardware register, but any shared location that supports an atomic read-modify-write operation is sufficient. Using this semaphore, the following are controlled

(a) how many synchronization events the A-stream can skip without waiting for the R-stream

(b) whether the synchronization is local (involving only the companion R-stream) or global (involving all R-streams). The initial value of the semaphore indicates how many sessions the A-stream may proceed ahead of the Rstream.

This can be viewed as creating an initial pool of tokens that are consumed as the A-stream enters a new session. When there are no tokens, the A-stream may not proceed. The R-stream issues tokens by incrementing the semaphore counter.Once the A thread gets the semaphore, A threads advances and gets past the R thread.

Disadvantages of helper threads:

A very tight synchronisation is needed between the main thread and the helper threads in oder to keep them the proper distance ahead of main thread. If the helper threads are too far ahead, then they will cause cache thrashing by prefetching data and then replaing it with subsequent prefetches beofre the main thread can even use it. If they are not far enough ahead, they might not be able to prefetch cache lines in time.

Only certain types of single threaded programs can be speeded up with these techniques. Most integer applications have regular data access patterns and hence there will be only few misses to eliminate. The applications with larger memory footprints are easily parellelisable and hence doesn't run on a single main thread. Most floating point applications the data access patterns are fairly regular and hence can be prefetched easily using hardware or software prefetch mechanisms. Hence the range of programs that can be accelerated using helper threads is fairly limited.

References

1)ChipMultiprocessor Architecture:Techniques to Improve Throughput and Latency by Kunle Olukotun, Lance Hammond, and James Laudon

2)Slipstream Execution Mode for CMP-Based Multiprocessors by Khaled Z. Ibrahim, Gregory T. Byrd, and Eric Rotenberg

3)http://www.research.ibm.com/journal/rd/502/shetty.html

4)http://www.tinker.ncsu.edu/ericro/research/slipstream.htm

5)A Survey of Helper Threads And Their Implementation by Ahren Studer

6)Design and Implementation of a Compiler Framework for Helper Threading on Multicore Processors http://pact05.ce.ucsc.edu/datacentric.pdf