CSC/ECE 506 Spring 2014/2b hd: Difference between revisions

| (55 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

2b. Overall, this chapter is not so much about GPUs in general as about CUDA, OpenGL, and OpenCL. There should be a section describing GPUs in general prefacing everything else. Read up on other GPU architectures as well, so you can write a good, non-vendor-specific, introduction to data parallelism in GPUs. It may or may not be helpful to consult the two 2011 pages, one on NVIDIA's Fermi architecture, and the other on the AMD HD 6900 series. | |||

The section on thread management CUDA doesn’t indicate what threads and blocks are used for. It’s necessary to study the following section before the reader can understand the section on thread management. See if you are confused when you start to read, and fix it so it’s clearer. | |||

Format variables and code correctly. Use italics for variable names appearing in running text. Use a typewriter font for code snippets in running text. Don’t use such a small font size for displayed examples; they are hard to read. It would be good if you could juxtapose the description of a section of code in the right column with the actual code in the left column. That is, create another column to the right of the code where you describe its workings in prose. | |||

==Introduction to GPU== | ==Introduction to GPU== | ||

Graphical Processing Unit (GPU) is | Graphical Processing Unit (GPU) is an hardware accelerator used for graphical computation, necessary to display the image on the screen. The GPUs were designed initially to alleviate the CPU work load. GPUs are characterized by high programmability, fast floating point parallel computation, minimal dependency on a CPU, large data sets and cost efficiency. The immense capability of the GPU to perform high speed computations involving vectors and matrices has made them suitable for non-graphical applications as well. <ref name= GPU vs CPU by NVIDIA> GPU vs CPU by NVIDIA. URL http://cs.utsa.edu/~qitian/seminar/Spring11/03_04_11/GPU.pdf </ref> | ||

The various functionalities carried out by a GPU include computations related to 3D computer graphics, texture mapping, geometric and vertex calculations and Rasterization. This makes it a best choice processor for applications such as Video decoding, Molecular dynamics, Quantum Chemistry, Material Science, Visualization and Docking Software, Bioinformatics, Numerical Analytics, Physics, Defense and Intelligence, Computational Finance, Computational Fluid Dynamics, Computational Structural mechanics, Computer Aided Design, Animation and Modeling, Color correction and grain management, Oil and Gas. | The various functionalities carried out by a GPU include computations related to 3D computer graphics, texture mapping, geometric and vertex calculations and Rasterization. This makes it a best choice processor for applications such as Video decoding, Molecular dynamics, Quantum Chemistry, Material Science, Visualization and Docking Software, Bioinformatics, Numerical Analytics, Physics, Defense and Intelligence, Computational Finance, Computational Fluid Dynamics, Computational Structural mechanics, Computer Aided Design, Animation and Modeling, Color correction and grain management, Oil and Gas. | ||

<ref name= GPU Applications by NVIDIA> GPU Applications by NVIDIA. URL http://www.nvidia.com/content/tesla/pdf/gpu-accelerated-applications-for-hpc.pdf </ref> | |||

GPU is the mainstream movement in the world of processors and currently has many vendors like Nvidia, AMD , Intel , PowerVR, Qualcomm. | GPU is the mainstream movement in the world of processors and currently has many vendors like Nvidia, AMD , Intel , PowerVR, Qualcomm. | ||

===GPU VS CPU=== | ===GPU VS CPU=== | ||

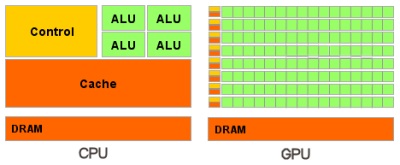

A GPU is made up of many tiny processing cores which are very efficient, easily programmable and highly suitable for parallel computation. In contrast, a CPU has few cores optimized for sequential serial processing. The diagram below shows how the processing cores are arranged in CPU and GPU | A GPU is made up of many tiny processing cores which are very efficient, easily programmable and highly suitable for parallel computation. In contrast, a CPU has few cores optimized for sequential serial processing. The cache size and flow control units are however of smaller size when compared to the CPU. This indicates that during the fabrication, bulk of the transistors are devoted for data processing. The diagram below shows how the processing cores are arranged in CPU and GPU <ref name= GPU vs CPU by NVIDIA> GPU vs CPU by NVIDIA. URL http://www.nvidia.com/object/what-is-gpu-computing.html </ref> | ||

[[File:GPUvsCPU.jpg|center| | [[File:GPUvsCPU.jpg|center|Figure1. GPU vs CPU ]] | ||

<center>Figure 1. GPU vs CPU</center> | <center>Figure 1. GPU vs CPU <ref name= GPUCPU> GPU vs CPU. URL http://docs.nvidia.com/cuda/cuda-c-programming-guide/ </ref> </center> | ||

==GPU Architecture== | ==GPU Architecture== | ||

The internal architecture of a GPU can be segregated as below.<br /> | The internal architecture of a GPU can be segregated as below.<br /> | ||

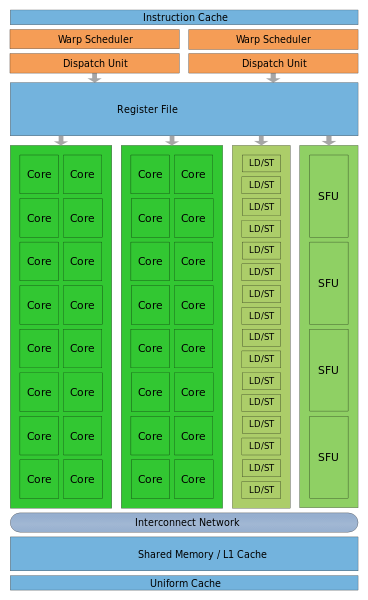

'''''Multithreaded Single Instruction Multiple Data Multiprocessors (SIMD):''''' A GPU is made up of multiple SIMD multiprocessors which are characterized by its capability to handle a stream of instructions which can perform parallel computation on multiple data stream. These processors are often known as Stream Multiprocessors (NVIDIA) and Compute Unit (AMD). Each SIMD multiprocessor comprises of a very large number of cores. The latest NVIDIA GEFORCE GTX 780 TI is made up of 2880 cores | '''''Multithreaded Single Instruction Multiple Data Multiprocessors (SIMD):''''' A GPU is made up of multiple SIMD multiprocessors which are characterized by its capability to handle a stream of instructions which can perform parallel computation on multiple data stream. These processors are often known as Stream Multiprocessors (NVIDIA) and Compute Unit (AMD)[Figure 2 & Figure 3]. Each SIMD multiprocessor comprises of a very large number of cores. The latest NVIDIA GEFORCE GTX 780 TI is made up of 2880 cores | ||

<ref name= GTX> GTX 700 GPU NVIDIA. URL http://www.nvidia.com/gtx-700-graphics-cards/gtx-780ti </ref> | |||

whereas AMD R9 290X has cores up to 2816 | |||

<ref name= AMD> AMD GPU. URL http://www.amd.com/us/products/desktop/graphics/r9/Pages/amd-radeon-hd-r9-series.aspx </ref> | |||

[[File:A2.png|center]] | [[File:A2.png|center]] | ||

| Line 28: | Line 38: | ||

'''''L2 Cache:''''' The L2 provides efficient, high speed data sharing across the GPU. It also facilitates atomic operation. | '''''L2 Cache:''''' The L2 provides efficient, high speed data sharing across the GPU. It also facilitates atomic operation. | ||

'''''Memory Controller:''''' The memory controllers tie the GPU together and provide data to nearly every part of the system. | '''''Memory Controller:''''' The memory controllers tie the GPU together and provide data to nearly every part of the system. <ref> GCN WhitePaper. URL http://www.amd.com/us/Documents/GCN_Architecture_whitepaper.pdf </ref> | ||

'''''Thread Block Scheduler:''''' This is responsible for scheduling of the SM threads and context switches between the threads. It is often referred to as GIGA thread engine (NVIDIA) and Ultra Threaded dispatch engine (AMD). | '''''Thread Block Scheduler:''''' This is responsible for scheduling of the SM threads and context switches between the threads. It is often referred to as GIGA thread engine (NVIDIA) and Ultra Threaded dispatch engine (AMD). | ||

'''''Host Interface:''''' The GPU is connected to the CPU through a powerful interface bus called as PCI. The transfer rate in NVIDIA GEFORCE GTX 780 TI and AMD R9 290X both use PCI express 3.0 | '''''Host Interface:''''' The GPU is connected to the CPU through a powerful interface bus called as PCI. The transfer rate in NVIDIA GEFORCE GTX 780 TI and AMD R9 290X both use PCI express 3.0 <ref name= GTX> GTX 700 GPU NVIDIA. </ref> <ref name= AMD> AMD GPU. </ref> whose peak transfer rate is 32GB/Sec | ||

==Data parallelism== | ==Data parallelism== | ||

| Line 50: | Line 60: | ||

===Hardware Aspect=== | ===Hardware Aspect=== | ||

<hr size=2> | |||

The GPU comprises of multiple SIMD multiprocessors which is in turn made up of many cores. Each core internally has multiple fully pipelined Arithmetic Logic Unit and Floating Point Unit (ALU and FPU). The core is also equipped with features like fused multiply add (FMA), wherein the multiplication and addition is done in a single final rounding step, with no loss of precision in the addition | The GPU comprises of multiple SIMD multiprocessors which is in turn made up of many cores. Each core internally has multiple fully pipelined Arithmetic Logic Unit and Floating Point Unit (ALU and FPU). The core is also equipped with features like fused multiply add (FMA), wherein the multiplication and addition is done in a single final rounding step, with no loss of precision in the addition <ref name= Fermi> NVIDIA Fermi Architecture. URL http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf </ref> | ||

. GPU also has multiple load and store units which help to calculate the addresses quickly. Thread scheduler helps in managing the available resources and efficiently channeling the threads to the cores exhibiting an efficient context switching. Shared memory enables threads within the same thread block to cooperate, facilitates extensive reuse of on-chip data, and greatly reduces off-chip traffic. The distributed L2 cache is the central point of coherency in the GPU. It acts as a backstop for the read-only L1 instruction and scalar caches that are shared by a cluster of cores, as well as the L1 data caches in every SIMD multiprocessor. The L2 cache is physically partitioned into slices that are coupled to each memory channel, and accesses flow through a crossbar fabric from the SIMD multiprocessors to the cache and memory partitions <ref name= AMD> AMD GCN Architecture. URL http://www.amd.com/us/Documents/GCN_Architecture_whitepaper.pdf </ref>. All these hardware features of the GPU helps to achieve data parallelism. | |||

===Software Aspect=== | ===Software Aspect=== | ||

<hr size=2> | |||

====CUDA==== | ====CUDA==== | ||

| Line 61: | Line 73: | ||

CUDA provides the programmers with direct access to the virtual instruction set and memory for the CUDA GPU's. CUDA is used along with GPU's for general purpose processing(other than the graphics intensive applications), this approach is now popularly known as GPGPU (General Purpose Graphical Processing Units). GPU's are harnessed using CUDA to dramatically improve computation performance. The CUDA programming interface is available to programmers through extension of various languages like : C, C++, Fortran. Along with these things, CUDA also supports a few other standard computational interfaces such as Khronos Group's OpenCL, Microsoft's DirectCompute and C++ AMP(Accelerated Massive Parallelism built on DirectX11). | CUDA provides the programmers with direct access to the virtual instruction set and memory for the CUDA GPU's. CUDA is used along with GPU's for general purpose processing(other than the graphics intensive applications), this approach is now popularly known as GPGPU (General Purpose Graphical Processing Units). GPU's are harnessed using CUDA to dramatically improve computation performance. The CUDA programming interface is available to programmers through extension of various languages like : C, C++, Fortran. Along with these things, CUDA also supports a few other standard computational interfaces such as Khronos Group's OpenCL, Microsoft's DirectCompute and C++ AMP(Accelerated Massive Parallelism built on DirectX11). | ||

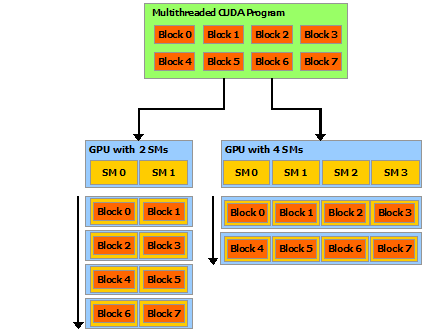

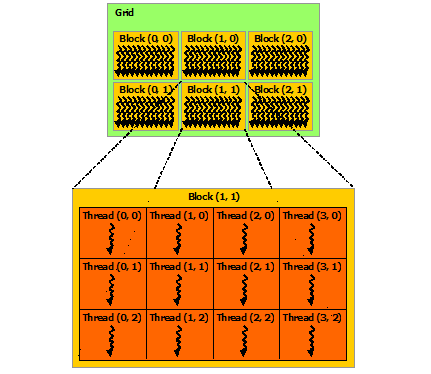

With the advent of multi-core CPU's and the many core GPU's, writing programs that can scale and leverage this increased parallel computational power which is available is a challenge. The CUDA programming model is designed to overcome this challenge by providing the programmers with minimal extensions to the programming languages. CUDA provides programmers with constructs such as threads, shared memories and synchronization techniques. The programmer need to analyze his program, carve out sub-problems which can be executed in parallel(task level parallelism or coarse grained parallelism). Each of these sub-problems is further executed by blocks of threads. Inside each of these blocks we need to further identify pieces of code which by themselves can execute in parallel in each of the threads present in the block of threads. CUDA enables us to schedule and run each block of thread in any of the available multiprocessor within the GPU and this model makes the scalability problem a lot easier to handle. This can be observed from the | With the advent of multi-core CPU's and the many core GPU's, writing programs that can scale and leverage this increased parallel computational power which is available is a challenge. The CUDA programming model is designed to overcome this challenge by providing the programmers with minimal extensions to the programming languages. CUDA provides programmers with constructs such as threads, shared memories and synchronization techniques. The programmer need to analyze his program, carve out sub-problems which can be executed in parallel(task level parallelism or coarse grained parallelism). Each of these sub-problems is further executed by blocks of threads. Inside each of these blocks we need to further identify pieces of code which by themselves can execute in parallel in each of the threads present in the block of threads. CUDA enables us to schedule and run each block of thread in any of the available multiprocessor within the GPU and this model makes the scalability problem a lot easier to handle. This can be observed from the figure given below : | ||

[[File:GPU_Scale.png|center| Scalability in GPU's]] | [[File:GPU_Scale.png|center| Scalability in GPU's]] | ||

<center> Figure 4. Scalability in GPU's </center> | <center> Figure 4. Scalability in GPU's <ref name= Scalability> Scalability in GPU. URL http://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html </ref> </center> | ||

=====Programming Model===== | =====Programming Model===== | ||

| Line 71: | Line 83: | ||

'''''i) Kernels :''''' | '''''i) Kernels :''''' | ||

CUDA extends C by allowing the programmer to declare functions called kernel which are identified by the __global__ identifier. These functions when called would be called N times on N different CUDA threads in contrast with a single execution thread for normal C code. The number of CUDA threads that need to be spawned to execute this kernel function in parallel is specified by new<<<. . . . >>> syntax. Each thread that executes this kernel code is given | CUDA extends C by allowing the programmer to declare functions called kernel which are identified by the __global__ identifier. These functions when called would be called N times on N different CUDA threads in contrast with a single execution thread for normal C code. The number of CUDA threads that need to be spawned to execute this kernel function in parallel is specified by new<<<. . . . >>> syntax. Each thread that executes this kernel code is given an unique thread-id which can accessed with the function by threadIdX variable. Code snippet below : | ||

'''''ii) Thread Hierarchy:''''' | '''''ii) Thread Hierarchy:''''' | ||

| Line 80: | Line 92: | ||

[[File : Thread.png | center | Grid of Thread Blocks.]] | [[File : Thread.png | center | Grid of Thread Blocks.]] | ||

<center> Figure 5. Grid of Thread Blocks </center> | <center> Figure 5. Grid of Thread Blocks <ref name= Scalability> Scalability in GPU. </ref> </center> | ||

Thread blocks are required to execute independently of others, i.e. they must be allowed to be executed in any order. Having this restriction allows the hardware to schedule thread blocks onto any processor that is available. | Thread blocks are required to execute independently of others, i.e. they must be allowed to be executed in any order. Having this restriction allows the hardware to schedule thread blocks onto any processor that is available. | ||

| Line 89: | Line 101: | ||

[[File : mem.png | center | Memory Hierarchy in CUDA.]] | [[File : mem.png | center | Memory Hierarchy in CUDA.]] | ||

<center> FIgure 6. Memory Hierarchy </center> | <center> FIgure 6. Memory Hierarchy <ref name= Memory Hierarchy in CUDA> Memory Hierarchy in CUDA. URL http://www.sdsc.edu/us/training/assets/docs/NVIDIA-02-BasicsOfCUDA.pdf </ref> </center> | ||

=====Data parallelism in CUDA: Example of a vector sum on a GPU using CUDA C===== | =====Data parallelism in CUDA: Example of a vector sum on a GPU using CUDA C===== | ||

The following code<ref name=CUDA by example>CUDA by example. URL http://developer.download.nvidia.com/books/cuda-by-example/cuda-by-example-sample.pdf | The following code<ref name=CUDA by example>CUDA by example. URL http://developer.download.nvidia.com/books/cuda-by-example/cuda-by-example-sample.pdf </ref> illustrates data parallelism in a GPU by using CUDA C. CUDA C is an extension to C language. In CUDA C parallel execution of code is implemented by defining the code as a parallel kernel. This kernel is similar to a function in C except that the compiler executes the code in parallel on N processors by creating N threads. Each thread within a thread block executes an instance of the kernel, and has a thread ID within its thread block, program counter, registers, per-thread private memory, inputs, and output results. | ||

<pre> | <pre> | ||

#include "../common/book.h" | #include "../common/book.h" | ||

| Line 156: | Line 168: | ||

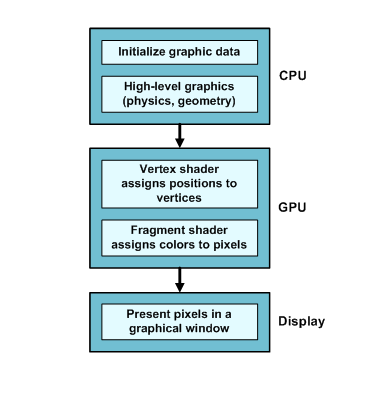

OpenGL<ref name= OpenGL1></ref>(Open Graphics Library) is one of the most popular toolsets available for graphical processing, and many computer games and CAD tools rely on it for 3-Drendering. Originally developed by Silicon Graphics in the early 1990s, OpenGL has been ported to Windows, Linux, Mac OS. It is supported by NVidia and AMD on their GPU’s. On desktop computers, a modern OpenGL application consists of two parts: a host application that runs on the CPU and special-purpose routines called shaders that execute on the graphics processing unit, or GPU. In general, the CPU handles complex graphical routines such as physics and geometry and the GPU performs simple tasks like assigning positions to vertices and colors to pixels. Figure 1.3 depicts the relationship between the host and the shaders in an OpenGL application. | OpenGL<ref name= OpenGL1></ref>(Open Graphics Library) is one of the most popular toolsets available for graphical processing, and many computer games and CAD tools rely on it for 3-Drendering. Originally developed by Silicon Graphics in the early 1990s, OpenGL has been ported to Windows, Linux, Mac OS. It is supported by NVidia and AMD on their GPU’s. On desktop computers, a modern OpenGL application consists of two parts: a host application that runs on the CPU and special-purpose routines called shaders that execute on the graphics processing unit, or GPU. In general, the CPU handles complex graphical routines such as physics and geometry and the GPU performs simple tasks like assigning positions to vertices and colors to pixels. Figure 1.3 depicts the relationship between the host and the shaders in an OpenGL application. | ||

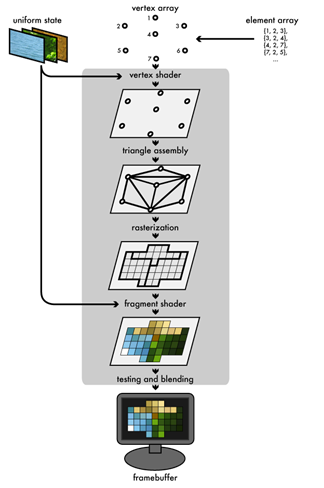

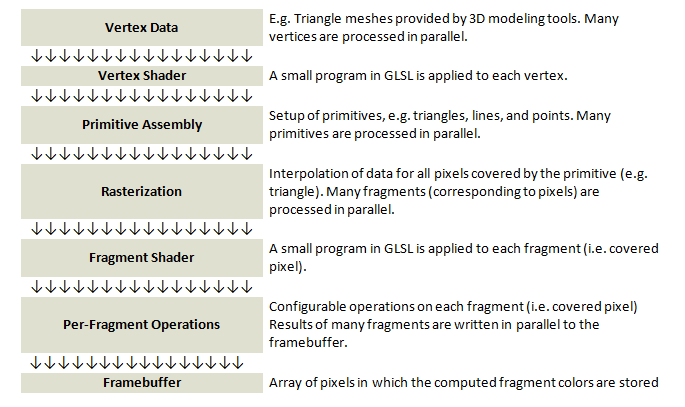

===The graphics pipeline of OpenGL=== | =====The graphics pipeline of OpenGL===== | ||

[[Image:OpenGL2.png|thumb|right|450px|Figure 4:<ref name = OpenGL2>Graphics pipeline in OpenGL. URL http://duriansoftware.com/joe/An-intro-to-modern-OpenGL.-Chapter-1:-The-Graphics-Pipeline.html></ref> Graphics pipeline of OpenGL]] | [[Image:OpenGL2.png|thumb|right|450px|Figure 4:<ref name = OpenGL2>Graphics pipeline in OpenGL. URL http://duriansoftware.com/joe/An-intro-to-modern-OpenGL.-Chapter-1:-The-Graphics-Pipeline.html></ref> Graphics pipeline of OpenGL]] | ||

The graphics pipeline<ref name=OpenGL2></ref> is a sequence followed for rendering an image on the screen (frame buffer). Here, the scene which is to be rendered contains multiple vertices arranged in an array. The attributes of these vertices are stored in a vertex buffer. Attributes include the position of the vertex in the real world, etc. These attributes are given to a vertex shader. This shader maps all the vertices in 3D space. A vertex shader can also assign some color information to the vertices. The triangle assembly module connects the vertices to form triangles. The rasterization module converts the triangles into discrete regions consisting of pixels. If the vertices contained color data, this data will be filled in the discrete regions enclosed the boundaries that were previously the edges of the triangles. The fragment shader combines the data from the rasterization module as well as the uniform data which is passed to the frame buffer. | The graphics pipeline<ref name=OpenGL2></ref> is a sequence followed for rendering an image on the screen (frame buffer). Here, the scene which is to be rendered contains multiple vertices arranged in an array. The attributes of these vertices are stored in a vertex buffer. Attributes include the position of the vertex in the real world, etc. These attributes are given to a vertex shader. This shader maps all the vertices in 3D space. A vertex shader can also assign some color information to the vertices. The triangle assembly module connects the vertices to form triangles. The rasterization module converts the triangles into discrete regions consisting of pixels. If the vertices contained color data, this data will be filled in the discrete regions enclosed the boundaries that were previously the edges of the triangles. The fragment shader combines the data from the rasterization module as well as the uniform data which is passed to the frame buffer. | ||

===Data parallelism in OpenGL=== | |||

=====Data parallelism in OpenGL===== | |||

[[Image:OpenGL3.png|thumb|center|450px|Figure 5:<ref name = OpenGL3>GLSL programming URL http://en.wikibooks.org/wiki/GLSL_Programming/OpenGL_ES_2.0_Pipeline></ref> Parallel implementation of graphics pipeline of OpenGL]] | [[Image:OpenGL3.png|thumb|center|450px|Figure 5:<ref name = OpenGL3>GLSL programming URL http://en.wikibooks.org/wiki/GLSL_Programming/OpenGL_ES_2.0_Pipeline></ref> Parallel implementation of graphics pipeline of OpenGL]] | ||

Parallelism in OpenGL<ref name= OpenGL3></ref> with respect to the graphics pipeline can be accomplished using vertical parallelism and horizontal parallelism methods. | Parallelism in OpenGL<ref name= OpenGL3></ref> with respect to the graphics pipeline can be accomplished using vertical parallelism and horizontal parallelism methods. | ||

'''Vertical parallelism''' | |||

Vertical parallelism is achieved by assigning the different stages of the pipeline to different processors. Strictly speaking this type of parallelism is more of functional parallelism than data parallelism. | Vertical parallelism is achieved by assigning the different stages of the pipeline to different processors. Strictly speaking this type of parallelism is more of functional parallelism than data parallelism. | ||

'''Horizontal parallelism''' | |||

Horizontal parallelism resembles data parallelism. It calls for multiple pipelines working on different sets of data. | Horizontal parallelism resembles data parallelism. It calls for multiple pipelines working on different sets of data. | ||

The figure illustrates the graphics pipeline parallelism. Vertical parallelism is denoted by the various stages which are executed in parallel. Horizontal parallelism is denoted by the arrows, denoting that multiple pipelines are executed in parallel. | The figure illustrates the graphics pipeline parallelism. Vertical parallelism is denoted by the various stages which are executed in parallel. Horizontal parallelism is denoted by the arrows, denoting that multiple pipelines are executed in parallel. | ||

| Line 177: | Line 191: | ||

{ | { | ||

int id = get_global_id (0); | int id = get_global_id (0); | ||

if (id < N) | if (id < N) | ||

c[id] = a[id] * b[id]; | c[id] = a[id] * b[id]; | ||

} | } | ||

//In main() | //In main() | ||

{ | { | ||

// create a compute context with GPU device | ... | ||

context = clCreateContextFromType(NULL, CL_DEVICE_TYPE_GPU, NULL, NULL, NULL); | // create a compute context with GPU device | ||

context = clCreateContextFromType(NULL, CL_DEVICE_TYPE_GPU, NULL, NULL, NULL); | |||

// create a command queue | // create a command queue | ||

clGetDeviceIDs( NULL, CL_DEVICE_TYPE_DEFAULT, 1, &device_id, NULL ); | clGetDeviceIDs( NULL, CL_DEVICE_TYPE_DEFAULT, 1, &device_id, NULL ); | ||

cl_command_queue queue = clCreateCommandQueue(context, device_id, 0, NULL); | cl_command_queue queue = clCreateCommandQueue(context, device_id, 0, NULL); | ||

// Build program object | // Build program object | ||

cl_program program = clCreateProgramWithSource(context, 1, &dp_mul_kernel_src, NULL, NULL); | cl_program program = clCreateProgramWithSource(context, 1, &dp_mul_kernel_src, NULL, NULL); | ||

// Build the compute program executable | // Build the compute program executable | ||

clBuildProgram(program, 0, NULL, NULL, NULL, NULL); | clBuildProgram(program, 0, NULL, NULL, NULL, NULL); | ||

// create the compute kernel | // create the compute kernel | ||

cl_kernel kernel = clCreateKernel(program, “dp_mul", NULL); | cl_kernel kernel = clCreateKernel(program, “dp_mul", NULL); | ||

// Create buffers on host and device | // Create buffers on host and device | ||

size_t size = 100000 * sizeof(int); | size_t size = 100000 * sizeof(int); | ||

int* h_buffer = (int*)malloc(size); | int* h_buffer = (int*)malloc(size); | ||

cl_mem a_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | cl_mem a_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | ||

cl_mem b_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | cl_mem b_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | ||

cl_mem c_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | cl_mem c_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL); | ||

// Set up kernel arguments | // Set up kernel arguments | ||

clSetKernelArg(kernel, 0, sizeof(cl_mem), (void*)&a_buffer); | clSetKernelArg(kernel, 0, sizeof(cl_mem), (void*)&a_buffer); | ||

clSetKernelArg(kernel, 1, sizeof(cl_mem), (void*)&b_buffer); | clSetKernelArg(kernel, 1, sizeof(cl_mem), (void*)&b_buffer); | ||

clSetKernelArg(kernel, 2, sizeof(cl_mem), (void*)&c_buffer); | clSetKernelArg(kernel, 2, sizeof(cl_mem), (void*)&c_buffer); | ||

clSetKernelArg(kernel, 3, sizeof(int), (void*)&N); | clSetKernelArg(kernel, 3, sizeof(int), (void*)&N); | ||

// Write to buffer object from host memory | // Write to buffer object from host memory | ||

clEnqueueWriteBuffer(cmd_queue, a_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL); | clEnqueueWriteBuffer(cmd_queue, a_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL); | ||

clEnqueueWriteBuffer(cmd_queue, b_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL); | clEnqueueWriteBuffer(cmd_queue, b_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL); | ||

// Set number of work-items in a work-group | // Set number of work-items in a work-group | ||

size_t localWorkSize = 256; | size_t localWorkSize = 256; | ||

int numWorkGroups = (N + localWorkSize – 1) / localWorkSize; // round up | int numWorkGroups = (N + localWorkSize – 1) / localWorkSize; // round up | ||

size_t globalWorkSize = numWorkGroups * localWorkSize; // must be evenly divisible by localWorkSize | size_t globalWorkSize = numWorkGroups * localWorkSize; // must be evenly divisible by localWorkSize | ||

//Sequence of commands scheduled for execution on queue. This command basically invokes the kernel over the global workspace. | //Sequence of commands scheduled for execution on queue. This command basically invokes the kernel over the global workspace. | ||

clEnqueueNDRangeKernel(cmd_queue, kernel, 1, NULL, &globalWorkSize, &localWorkSize, 0, NULL, NULL); | clEnqueueNDRangeKernel(cmd_queue, kernel, 1, NULL, &globalWorkSize, &localWorkSize, 0, NULL, NULL); | ||

// Read from buffer object to host memory | // Read from buffer object to host memory | ||

clEnqueueReadBuffer(cmd_queue, c_buffer , CL_TRUE, 0, size, h_buffer , 0, NULL, NULL); | clEnqueueReadBuffer(cmd_queue, c_buffer , CL_TRUE, 0, size, h_buffer , 0, NULL, NULL); | ||

} | } | ||

</pre> | </pre> | ||

| Line 241: | Line 256: | ||

==Further readings== | ==Further readings== | ||

More information on OpenCL can be obtained at OpenGL documentation by Apple. <ref name=OpenGLApple> Documentation on OpenGl by Apple URL http://developer.apple.com/library/mac/documentation/Performance/Conceptual/OpenCL_MacProgGuide/Introduction/Introduction.html></ref> Also refer to the tutorial by Khronos group.<ref name= OpenCLEx> </ref>. Real world technologies is a good resource for more information on GPU's and GPU Architecture.<ref name= | More information on OpenCL can be obtained at OpenGL documentation by Apple. <ref name=OpenGLApple> Documentation on OpenGl by Apple URL http://developer.apple.com/library/mac/documentation/Performance/Conceptual/OpenCL_MacProgGuide/Introduction/Introduction.html></ref> Also refer to the tutorial by Khronos group.<ref name= OpenCLEx> </ref>. Real world technologies is a good resource for more information on GPU's and GPU Architecture. <ref name=Real World Technologies> Real World Technologies URL http://www.realworldtech.com/gpu/</ref> NVIDIA website provides an excellent resource on CUDA, NVIDIA’s GPU parallel platform. | ||

==Questions== | ==Questions== | ||

*Q1. Suppose a GPU from AMD supporting the Cayman architecture has 24 SIMD cores and 1728 processing elements in total. How many processing elements are present in 1 core? | *Q1. What makes GPU a best choice for graphical operations? | ||

*Q2. What is the difference between a CPU and a GPU? | |||

*Q3. Suppose a GPU from AMD supporting the Cayman architecture has 24 SIMD cores and 1728 processing elements in total. How many processing elements are present in 1 core? | |||

*Q4. Explain how the GPU hardware is supportive in achieving data parallelism. | |||

*Q5. Name the programming model developed by NVidia | |||

*Q6. What is the importance of CUDA language? | |||

*Q7. What is the syntax used to create N kernels in CUDA C | |||

* | *Q8. In CUDA, what directive is used to denote that the code is a parallel kernel? | ||

* | *Q9. How is parallel programming achieved using CUDA language? | ||

* | *Q10. Who was responsible for the initial development of OpenGL? | ||

* | *Q11. What is the primary difference between OpenCL and OpenGL application operations? | ||

* | *Q12. What other types of parallelism are present in GPU architecture? | ||

* | *Q13. What advantages do GPUs have over CPUs? | ||

* | *Q14. In OpenGL, which tasks are assigned to CPUs and which tasks are handled by GPUs? | ||

* | *Q15. What are the main functions of kernels in OpenCL? | ||

==References== | ==References== | ||

<references/> | <references/> | ||

Latest revision as of 04:19, 11 February 2014

2b. Overall, this chapter is not so much about GPUs in general as about CUDA, OpenGL, and OpenCL. There should be a section describing GPUs in general prefacing everything else. Read up on other GPU architectures as well, so you can write a good, non-vendor-specific, introduction to data parallelism in GPUs. It may or may not be helpful to consult the two 2011 pages, one on NVIDIA's Fermi architecture, and the other on the AMD HD 6900 series. The section on thread management CUDA doesn’t indicate what threads and blocks are used for. It’s necessary to study the following section before the reader can understand the section on thread management. See if you are confused when you start to read, and fix it so it’s clearer. Format variables and code correctly. Use italics for variable names appearing in running text. Use a typewriter font for code snippets in running text. Don’t use such a small font size for displayed examples; they are hard to read. It would be good if you could juxtapose the description of a section of code in the right column with the actual code in the left column. That is, create another column to the right of the code where you describe its workings in prose.

Introduction to GPU

Graphical Processing Unit (GPU) is an hardware accelerator used for graphical computation, necessary to display the image on the screen. The GPUs were designed initially to alleviate the CPU work load. GPUs are characterized by high programmability, fast floating point parallel computation, minimal dependency on a CPU, large data sets and cost efficiency. The immense capability of the GPU to perform high speed computations involving vectors and matrices has made them suitable for non-graphical applications as well. <ref name= GPU vs CPU by NVIDIA> GPU vs CPU by NVIDIA. URL http://cs.utsa.edu/~qitian/seminar/Spring11/03_04_11/GPU.pdf </ref>

The various functionalities carried out by a GPU include computations related to 3D computer graphics, texture mapping, geometric and vertex calculations and Rasterization. This makes it a best choice processor for applications such as Video decoding, Molecular dynamics, Quantum Chemistry, Material Science, Visualization and Docking Software, Bioinformatics, Numerical Analytics, Physics, Defense and Intelligence, Computational Finance, Computational Fluid Dynamics, Computational Structural mechanics, Computer Aided Design, Animation and Modeling, Color correction and grain management, Oil and Gas. <ref name= GPU Applications by NVIDIA> GPU Applications by NVIDIA. URL http://www.nvidia.com/content/tesla/pdf/gpu-accelerated-applications-for-hpc.pdf </ref>

GPU is the mainstream movement in the world of processors and currently has many vendors like Nvidia, AMD , Intel , PowerVR, Qualcomm.

GPU VS CPU

A GPU is made up of many tiny processing cores which are very efficient, easily programmable and highly suitable for parallel computation. In contrast, a CPU has few cores optimized for sequential serial processing. The cache size and flow control units are however of smaller size when compared to the CPU. This indicates that during the fabrication, bulk of the transistors are devoted for data processing. The diagram below shows how the processing cores are arranged in CPU and GPU <ref name= GPU vs CPU by NVIDIA> GPU vs CPU by NVIDIA. URL http://www.nvidia.com/object/what-is-gpu-computing.html </ref>

GPU Architecture

The internal architecture of a GPU can be segregated as below.

Multithreaded Single Instruction Multiple Data Multiprocessors (SIMD): A GPU is made up of multiple SIMD multiprocessors which are characterized by its capability to handle a stream of instructions which can perform parallel computation on multiple data stream. These processors are often known as Stream Multiprocessors (NVIDIA) and Compute Unit (AMD)[Figure 2 & Figure 3]. Each SIMD multiprocessor comprises of a very large number of cores. The latest NVIDIA GEFORCE GTX 780 TI is made up of 2880 cores <ref name= GTX> GTX 700 GPU NVIDIA. URL http://www.nvidia.com/gtx-700-graphics-cards/gtx-780ti </ref> whereas AMD R9 290X has cores up to 2816 <ref name= AMD> AMD GPU. URL http://www.amd.com/us/products/desktop/graphics/r9/Pages/amd-radeon-hd-r9-series.aspx </ref>

L2 Cache: The L2 provides efficient, high speed data sharing across the GPU. It also facilitates atomic operation.

Memory Controller: The memory controllers tie the GPU together and provide data to nearly every part of the system. <ref> GCN WhitePaper. URL http://www.amd.com/us/Documents/GCN_Architecture_whitepaper.pdf </ref>

Thread Block Scheduler: This is responsible for scheduling of the SM threads and context switches between the threads. It is often referred to as GIGA thread engine (NVIDIA) and Ultra Threaded dispatch engine (AMD).

Host Interface: The GPU is connected to the CPU through a powerful interface bus called as PCI. The transfer rate in NVIDIA GEFORCE GTX 780 TI and AMD R9 290X both use PCI express 3.0 <ref name= GTX> GTX 700 GPU NVIDIA. </ref> <ref name= AMD> AMD GPU. </ref> whose peak transfer rate is 32GB/Sec

Data parallelism

Parallel execution of code is defined as distributing the computation of code amongst the parallel processing elements (PE’s). There are various levels at which we can exploit parallelism which include bit, instruction, data and task level parallelism. Data parallelism (also called as loop level parallelism) is a type of parallelism where the data is distributed among the P.E’s. Here, the PE’s perform the same task on different subsets of data.

Example of data parallelism

for (i = 0; i < N ; i++)

{

a[i] = b[i] + c[i];

}

This is a highly simplified example of a code that can support data parallelism. Here, the array a[] contains the sum of arrays b[] and c[]. If we have N PE’s, we can allocate the computation of each element in array a[] to a processing element. In practice however, data dependencies exist that prevent data parallelism.

Hardware Aspect

The GPU comprises of multiple SIMD multiprocessors which is in turn made up of many cores. Each core internally has multiple fully pipelined Arithmetic Logic Unit and Floating Point Unit (ALU and FPU). The core is also equipped with features like fused multiply add (FMA), wherein the multiplication and addition is done in a single final rounding step, with no loss of precision in the addition <ref name= Fermi> NVIDIA Fermi Architecture. URL http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf </ref> . GPU also has multiple load and store units which help to calculate the addresses quickly. Thread scheduler helps in managing the available resources and efficiently channeling the threads to the cores exhibiting an efficient context switching. Shared memory enables threads within the same thread block to cooperate, facilitates extensive reuse of on-chip data, and greatly reduces off-chip traffic. The distributed L2 cache is the central point of coherency in the GPU. It acts as a backstop for the read-only L1 instruction and scalar caches that are shared by a cluster of cores, as well as the L1 data caches in every SIMD multiprocessor. The L2 cache is physically partitioned into slices that are coupled to each memory channel, and accesses flow through a crossbar fabric from the SIMD multiprocessors to the cache and memory partitions <ref name= AMD> AMD GCN Architecture. URL http://www.amd.com/us/Documents/GCN_Architecture_whitepaper.pdf </ref>. All these hardware features of the GPU helps to achieve data parallelism.

Software Aspect

CUDA

CUDA(Compute Unified Device Architecture) is a parallel computing platform and programming model that was created by NVDIA for the GPU's that they produce. It leverages the parallel computational engine that is present in the modern GPU's to solve several complex computational problems quickly which would take a considerably large amount of time when executed on a normal CPU.

CUDA provides the programmers with direct access to the virtual instruction set and memory for the CUDA GPU's. CUDA is used along with GPU's for general purpose processing(other than the graphics intensive applications), this approach is now popularly known as GPGPU (General Purpose Graphical Processing Units). GPU's are harnessed using CUDA to dramatically improve computation performance. The CUDA programming interface is available to programmers through extension of various languages like : C, C++, Fortran. Along with these things, CUDA also supports a few other standard computational interfaces such as Khronos Group's OpenCL, Microsoft's DirectCompute and C++ AMP(Accelerated Massive Parallelism built on DirectX11).

With the advent of multi-core CPU's and the many core GPU's, writing programs that can scale and leverage this increased parallel computational power which is available is a challenge. The CUDA programming model is designed to overcome this challenge by providing the programmers with minimal extensions to the programming languages. CUDA provides programmers with constructs such as threads, shared memories and synchronization techniques. The programmer need to analyze his program, carve out sub-problems which can be executed in parallel(task level parallelism or coarse grained parallelism). Each of these sub-problems is further executed by blocks of threads. Inside each of these blocks we need to further identify pieces of code which by themselves can execute in parallel in each of the threads present in the block of threads. CUDA enables us to schedule and run each block of thread in any of the available multiprocessor within the GPU and this model makes the scalability problem a lot easier to handle. This can be observed from the figure given below :

Programming Model

There are 3 main parts in the CUDA programming which are very important to understand : kernel, thread hierarchy and memory hierarchy. A brief description of each of these is given below :

i) Kernels :

CUDA extends C by allowing the programmer to declare functions called kernel which are identified by the __global__ identifier. These functions when called would be called N times on N different CUDA threads in contrast with a single execution thread for normal C code. The number of CUDA threads that need to be spawned to execute this kernel function in parallel is specified by new<<<. . . . >>> syntax. Each thread that executes this kernel code is given an unique thread-id which can accessed with the function by threadIdX variable. Code snippet below :

ii) Thread Hierarchy:

Threads in CUDA referred by threadIdX in the code is a 3-D component so that threads can be identified using 1-dimensional, 2-dimensional or 3-dimensional thread index which can be used to reference 1-dimensional, 2-dimensional or 3-dimensional thread block. Having this structure makes it easier to perform computation for Array, Matrix or Volume objects. The number of threads in a block are limited to 1024 as all the threads of a block are expected to reside on the same GPU processor and share the memory resources which are limited.

CUDA threads are very lightweight and they use 1000's of thread to achieve efficiency as opposed to multi-core CPU's which can use only a few. Co-operation is needed among threads to synchronize on memory accesses and to share results among themselves to avoid redundant computation. Threads within a block co-operate via shared memory, and threads within different blocks cannot co-operate. Blocks are further organized as one-dimensional, two-dimensional, or three-dimensional grid of thread blocks as illustrated in the Figure below.

Thread blocks are required to execute independently of others, i.e. they must be allowed to be executed in any order. Having this restriction allows the hardware to schedule thread blocks onto any processor that is available.

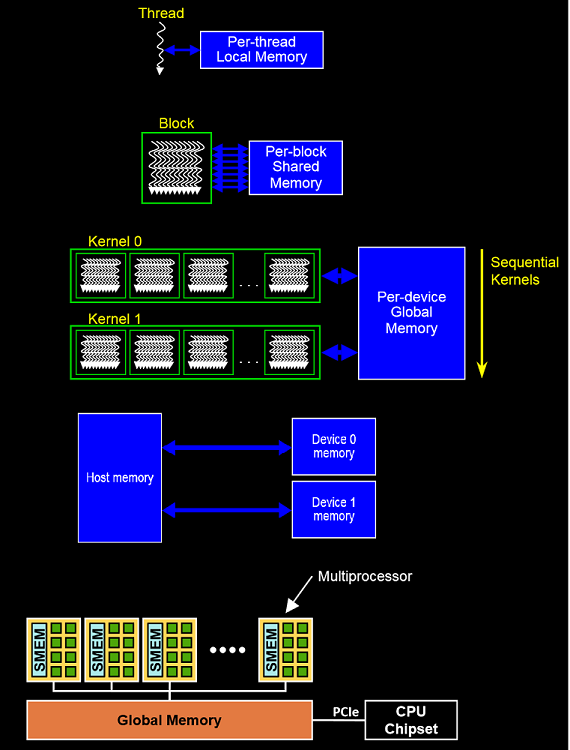

iii)Memory Hierarchy:

In CUDA, each thread has access to several levels of memory. It has access to local memory which is specific to each thread, which has the same lifetime as the thread. Each thread also has access to a super fast shared memory which is visible to all threads within that block, which has the same lifetime as that of the block. Each thread also has registers specific to the thread, Along with these memory, each thread also has access to the Global(device) memory which can be accessed by all threads/blocks/grids as well as the host(CPU). This memory would be present to the lifetime between allocation to de-allocation.

Data parallelism in CUDA: Example of a vector sum on a GPU using CUDA C

The following code<ref name=CUDA by example>CUDA by example. URL http://developer.download.nvidia.com/books/cuda-by-example/cuda-by-example-sample.pdf </ref> illustrates data parallelism in a GPU by using CUDA C. CUDA C is an extension to C language. In CUDA C parallel execution of code is implemented by defining the code as a parallel kernel. This kernel is similar to a function in C except that the compiler executes the code in parallel on N processors by creating N threads. Each thread within a thread block executes an instance of the kernel, and has a thread ID within its thread block, program counter, registers, per-thread private memory, inputs, and output results.

#include "../common/book.h"

#define N 10

// add function

//__global__ denotes parallel kernel.

__global__ void add ( int*a, int*b, int*c )

{

int tid = blockIdx.x; // handle the data at this index

if (tid < N)

{

c[tid] = a[tid] + b[tid];

}

int main( void)

{

int a[N], b[N], c[N];

int *dev_a, *dev_b, *dev_c;

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c, N * sizeof(int) ) );

// fill the arrays 'a' and 'b' on the CPU

for(int i=0; i<N; i++)

{

a[i] = -i;

b[i] = i * i;

}

// copy the arrays 'a' and 'b' to the GPU

HANDLE_ERROR( cudaMemcpy( dev_a, a, N * sizeof(int),cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMemcpy( dev_b, b, N * sizeof(int),cudaMemcpyHostToDevice ) );

add<<<N,1>>>( dev_a, dev_b, dev_c ); //N denotes the number of threads to execute.

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( c, dev_c, N * sizeof(int),cudaMemcpyDeviceToHost ) );

// display the results

for(int i=0; i<N; i++)

{

printf( "%d + %d = %d\n", a[i], b[i], c[i] );

}

// free the memory allocated on the GPU

cudaFree( dev_a );

cudaFree( dev_b );

cudaFree( dev_c );

return 0;

}

The code above takes the sum of 2 arrays and stores the result in the third array. Before beginning computations on the GPU, we need to allocate memory on the GPU corresponding to the 3 arrays using (cudaMalloc) and copy the data in the 2 arrays using (cudaMemcpy). The function add which actually does the addition has a directive __global__ to denote that it is a parallel kernel. The syntax << N, 1>> creates N threads corresponding to the kernel. The important part in add function is blockId.x. This is a built-in variable of CUDA which is available at runtime. This variable basically contains the id of the block in the GPU which is running the routine.

OpenGL

OpenGL<ref name= OpenGL1></ref>(Open Graphics Library) is one of the most popular toolsets available for graphical processing, and many computer games and CAD tools rely on it for 3-Drendering. Originally developed by Silicon Graphics in the early 1990s, OpenGL has been ported to Windows, Linux, Mac OS. It is supported by NVidia and AMD on their GPU’s. On desktop computers, a modern OpenGL application consists of two parts: a host application that runs on the CPU and special-purpose routines called shaders that execute on the graphics processing unit, or GPU. In general, the CPU handles complex graphical routines such as physics and geometry and the GPU performs simple tasks like assigning positions to vertices and colors to pixels. Figure 1.3 depicts the relationship between the host and the shaders in an OpenGL application.

The graphics pipeline of OpenGL

The graphics pipeline<ref name=OpenGL2></ref> is a sequence followed for rendering an image on the screen (frame buffer). Here, the scene which is to be rendered contains multiple vertices arranged in an array. The attributes of these vertices are stored in a vertex buffer. Attributes include the position of the vertex in the real world, etc. These attributes are given to a vertex shader. This shader maps all the vertices in 3D space. A vertex shader can also assign some color information to the vertices. The triangle assembly module connects the vertices to form triangles. The rasterization module converts the triangles into discrete regions consisting of pixels. If the vertices contained color data, this data will be filled in the discrete regions enclosed the boundaries that were previously the edges of the triangles. The fragment shader combines the data from the rasterization module as well as the uniform data which is passed to the frame buffer.

Data parallelism in OpenGL

Parallelism in OpenGL<ref name= OpenGL3></ref> with respect to the graphics pipeline can be accomplished using vertical parallelism and horizontal parallelism methods. Vertical parallelism Vertical parallelism is achieved by assigning the different stages of the pipeline to different processors. Strictly speaking this type of parallelism is more of functional parallelism than data parallelism.

Horizontal parallelism Horizontal parallelism resembles data parallelism. It calls for multiple pipelines working on different sets of data. The figure illustrates the graphics pipeline parallelism. Vertical parallelism is denoted by the various stages which are executed in parallel. Horizontal parallelism is denoted by the arrows, denoting that multiple pipelines are executed in parallel.

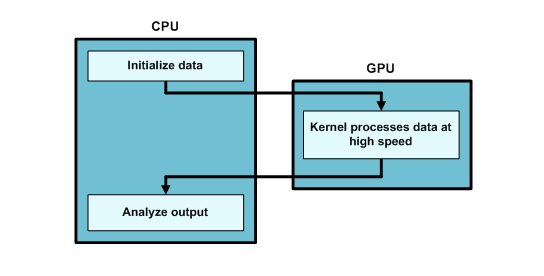

OpenCL

OpenCL<ref name= OpenGL1></ref> (Open Compute Language) is only a few years old and isn't nearly as well-known as OpenGL. However, it allows developers to access GPUs (and many other devices) for purposes other than graphics. Because of this general-purpose GPU (GPGPU) processing, OpenCL is frequently employed to crunch numbers at high speed, and common OpenCL applications include data sorting, statistical computation, and frequency analysis. An OpenCL application consists of a host application that runs on the CPU and general-purpose routines called kernels that can execute on any OpenCL-compliant device, including a GPU. Figure 1.4 shows how an OpenCL application combines CPU and GPU processing.

Data parallelism in OpenCL

The following partial code<ref name= OpenCLEx>OpenCL tutorial URL http://www.cc.gatech.edu/~vetter/keeneland/tutorial-2011-04-14/06-intro_to_opencl.pdf></ref> is used to illustrate data parallelism in OpenCL. Here we multiply two arrays and store the result in the 3rd array. The directive __kernel before the multiplication function denotes that this function (kernel) will be executed by “N” number of PE’s, N being an argument to the function. Kernels are executed across a global domain of work-items. A program can contain multiple work-items. The kernel will get the id of the processing element in the GPU which will perform the multiplication using get_global_id(0) .

__kernel void dp_mul (__global const float *a, __global const float *b, __global float *c, int N)

{

int id = get_global_id (0);

if (id < N)

c[id] = a[id] * b[id];

}

//In main()

{

...

// create a compute context with GPU device

context = clCreateContextFromType(NULL, CL_DEVICE_TYPE_GPU, NULL, NULL, NULL);

// create a command queue

clGetDeviceIDs( NULL, CL_DEVICE_TYPE_DEFAULT, 1, &device_id, NULL );

cl_command_queue queue = clCreateCommandQueue(context, device_id, 0, NULL);

// Build program object

cl_program program = clCreateProgramWithSource(context, 1, &dp_mul_kernel_src, NULL, NULL);

// Build the compute program executable

clBuildProgram(program, 0, NULL, NULL, NULL, NULL);

// create the compute kernel

cl_kernel kernel = clCreateKernel(program, “dp_mul", NULL);

// Create buffers on host and device

size_t size = 100000 * sizeof(int);

int* h_buffer = (int*)malloc(size);

cl_mem a_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL);

cl_mem b_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL);

cl_mem c_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE, size, NULL, NULL);

// Set up kernel arguments

clSetKernelArg(kernel, 0, sizeof(cl_mem), (void*)&a_buffer);

clSetKernelArg(kernel, 1, sizeof(cl_mem), (void*)&b_buffer);

clSetKernelArg(kernel, 2, sizeof(cl_mem), (void*)&c_buffer);

clSetKernelArg(kernel, 3, sizeof(int), (void*)&N);

// Write to buffer object from host memory

clEnqueueWriteBuffer(cmd_queue, a_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL);

clEnqueueWriteBuffer(cmd_queue, b_buffer, CL_FALSE, 0, size, h_buffer, 0, NULL, NULL);

// Set number of work-items in a work-group

size_t localWorkSize = 256;

int numWorkGroups = (N + localWorkSize – 1) / localWorkSize; // round up

size_t globalWorkSize = numWorkGroups * localWorkSize; // must be evenly divisible by localWorkSize

//Sequence of commands scheduled for execution on queue. This command basically invokes the kernel over the global workspace.

clEnqueueNDRangeKernel(cmd_queue, kernel, 1, NULL, &globalWorkSize, &localWorkSize, 0, NULL, NULL);

// Read from buffer object to host memory

clEnqueueReadBuffer(cmd_queue, c_buffer , CL_TRUE, 0, size, h_buffer , 0, NULL, NULL);

}

The main function contains code for performing the following steps:

- Creating a context with the GPU device: This means creating an environment within which the work-items operate.

- Creating a command queue: All the work that is to be submitted to the GPU is in the form of a command queue.

- Creating a program of the source code which is to be executed in parallel and building the program: With regards to the code, this is the dp_mul function which we want to parallelize.

- Creating a kernel of the program which will be executed simultaneously across N PE’s.

- Creating buffers for reading and writing data.

- Setting up the arguments which are to be passed to the kernel.

- Work items can be grouped together to form a work group. We need to set up the number of work groups and the number of work items per work group.

- Invoking the kernel

Further readings

More information on OpenCL can be obtained at OpenGL documentation by Apple. <ref name=OpenGLApple> Documentation on OpenGl by Apple URL http://developer.apple.com/library/mac/documentation/Performance/Conceptual/OpenCL_MacProgGuide/Introduction/Introduction.html></ref> Also refer to the tutorial by Khronos group.<ref name= OpenCLEx> </ref>. Real world technologies is a good resource for more information on GPU's and GPU Architecture. <ref name=Real World Technologies> Real World Technologies URL http://www.realworldtech.com/gpu/</ref> NVIDIA website provides an excellent resource on CUDA, NVIDIA’s GPU parallel platform.

Questions

- Q1. What makes GPU a best choice for graphical operations?

- Q2. What is the difference between a CPU and a GPU?

- Q3. Suppose a GPU from AMD supporting the Cayman architecture has 24 SIMD cores and 1728 processing elements in total. How many processing elements are present in 1 core?

- Q4. Explain how the GPU hardware is supportive in achieving data parallelism.

- Q5. Name the programming model developed by NVidia

- Q6. What is the importance of CUDA language?

- Q7. What is the syntax used to create N kernels in CUDA C

- Q8. In CUDA, what directive is used to denote that the code is a parallel kernel?

- Q9. How is parallel programming achieved using CUDA language?

- Q10. Who was responsible for the initial development of OpenGL?

- Q11. What is the primary difference between OpenCL and OpenGL application operations?

- Q12. What other types of parallelism are present in GPU architecture?

- Q13. What advantages do GPUs have over CPUs?

- Q14. In OpenGL, which tasks are assigned to CPUs and which tasks are handled by GPUs?

- Q15. What are the main functions of kernels in OpenCL?

References

<references/>