CSC 456 Fall 2013/8a wa

(→MOESI) |

|||

| (52 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

==Original Article== | |||

This article is a revision of http://wiki.expertiza.ncsu.edu/index.php/CSC_456_Spring_2012/ch7_MN. | |||

==Introduction== | ==Introduction== | ||

Though the migration from [http://en.wikipedia.org/wiki/Uniprocessor_system uniprocessor system] to [http://en.wikipedia.org/wiki/Multiprocessing multiprocessing] systems is not new, the world of parallel computers is undergoing a continuous change. Parallel computers, which started as high-end super-computing systems for carrying out huge calculations, are now ubiquitous and are present in all mainstream architectures for servers, desktops, and embedded systems. In order to design parallel architectures to meet programmer's needs and expectations more closely, exciting and challenging changes exist. The three main areas which are being considered by scientists today are: [http://en.wikipedia.org/wiki/Cache_coherence cache coherence], memory consistency and [http://en.wikipedia.org/wiki/Synchronization_%28computer_science%29 synchronization]. | Though the migration from [http://en.wikipedia.org/wiki/Uniprocessor_system uniprocessor system] to [http://en.wikipedia.org/wiki/Multiprocessing multiprocessing] systems is not new, the world of parallel computers is undergoing a continuous change. Parallel computers, which started as high-end super-computing systems for carrying out huge calculations, are now ubiquitous and are present in all mainstream architectures for servers, desktops, and embedded systems. In order to design parallel architectures to meet programmer's needs and expectations more closely, exciting and challenging changes exist. The three main areas which are being considered by scientists today are: [http://en.wikipedia.org/wiki/Cache_coherence cache coherence], memory consistency and [http://en.wikipedia.org/wiki/Synchronization_%28computer_science%29 synchronization]. | ||

| Line 9: | Line 12: | ||

In a system with single processor (single core), maintaining cache coherence is simple and easy but in a multiprocessor system, it is much more complicated. Data can be present in any processor's cache and the protocol needs to ensure that the data is same in all caches. If it cannot ensure that all the caches are same, then it needs to flag a cache line indicating that it is not updated. | In a system with single processor (single core), maintaining cache coherence is simple and easy but in a multiprocessor system, it is much more complicated. Data can be present in any processor's cache and the protocol needs to ensure that the data is same in all caches. If it cannot ensure that all the caches are same, then it needs to flag a cache line indicating that it is not updated. | ||

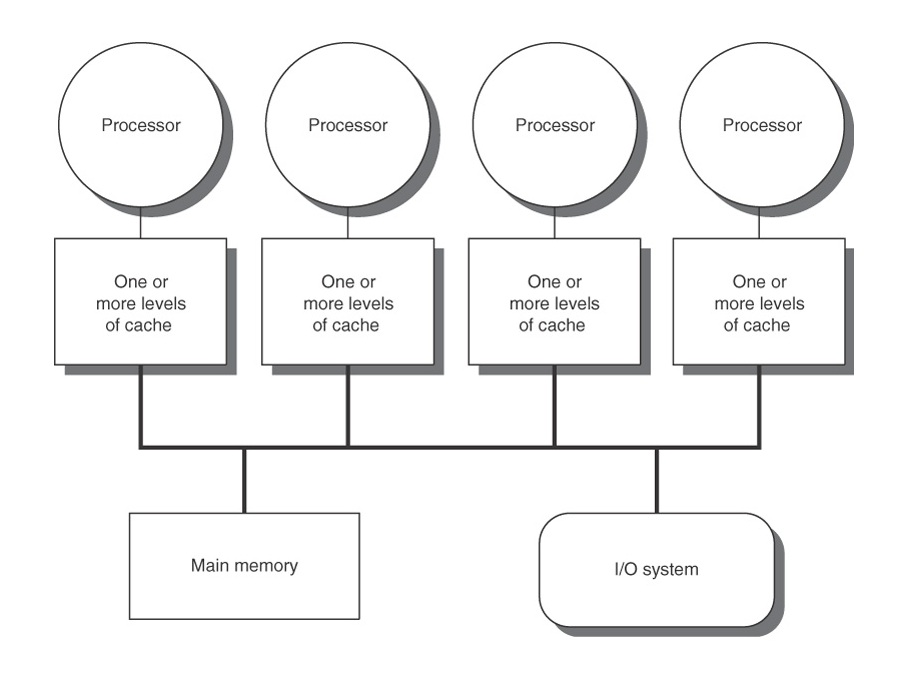

In Figure 1 shown here, there is a 4 processor shared memory system where each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. Then, if P2 reads same location memory location then M1 gets stored in P2’s cache. Now, if P1 changes value of M1, two copies of same data, residing in different caches will become different. When P2 operates on M1, it uses the stale value of M1 that was stored in its cache. It is the responsibility of cache coherence protocol to prevent this. Hardware support is needed to provide a coherent view of data in multiple caches. This is known as write propagation requirement. | In Figure 1 shown here, there is a 4 processor shared memory system where each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. Then, if P2 reads same location memory location then M1 gets stored in P2’s cache. Now, if P1 changes value of M1, two copies of same data, residing in different caches will become different. When P2 operates on M1, it uses the stale value of M1 that was stored in its cache. It is the responsibility of cache coherence protocol to prevent this. Hardware support is needed to provide a coherent view of data in multiple caches. This is known as write propagation requirement.<ref name="neu01">Neupane, Mahesh. http://cse.csusb.edu/schubert/tutorials/csci610/w04/MN_Cache_Coherence.pdf{{cite web | ||

<ref name="neu01">Neupane, Mahesh. http://cse.csusb.edu/schubert/tutorials/csci610/w04/MN_Cache_Coherence.pdf{{cite web | |||

| url = http://cse.csusb.edu/schubert/tutorials/csci610/w04/MN_Cache_Coherence.pdf | | url = http://cse.csusb.edu/schubert/tutorials/csci610/w04/MN_Cache_Coherence.pdf | ||

| title = Cache Coherence | | title = Cache Coherence | ||

| Line 26: | Line 28: | ||

==Cache Coherence Protocols== | ==Cache Coherence Protocols== | ||

In order to maintain coherence across caches, there must be a way to identify whether or not caches contain stale data. The Snooping Protocol exists on the inter-processor bus (shown in Figure 1 as the line connecting the caches to memory) to determine this and to instigate the change of data in caches if necessary. The two basic methods that utilize Snooping Protocol to notify other processors when a processor changes something in its cache are | In order to maintain coherence across caches, there must be a way to identify whether or not caches contain stale data. The Snooping Protocol exists on the inter-processor bus (shown in Figure 1 as the line connecting the caches to memory) to determine this and to instigate the change of data in caches if necessary. The two basic methods that utilize Snooping Protocol to notify other processors when a processor changes something in its cache are the ''update'' and ''invalidate'' methods. In the update method, if variable 'x' is modified by processor 1, processor 1 has to notify the Snooper to send the updated value of 'x' onto the inter-processor bus. Each cache listens to the Snooper and if a cache sees a variable on the bus which it has a copy of, it will read the updated value. This ensures that all caches have the most up-to-date value of the variable.<ref name="hit01">Hitchcock, Russell. http://www.windowsnetworking.com/articles-tutorials/common/Cache-Coherency.html{{cite web | ||

<ref name="hit01">Hitchcock, Russell. http://www.windowsnetworking.com/articles-tutorials/common/Cache-Coherency.html{{cite web | |||

| url = http://www.windowsnetworking.com/articles-tutorials/common/Cache-Coherency.html | | url = http://www.windowsnetworking.com/articles-tutorials/common/Cache-Coherency.html | ||

| title = Cache Coherency | | title = Cache Coherency | ||

| Line 40: | Line 41: | ||

In case of invalidation, an invalidation message is sent onto the inter-processor bus when a variable is changed. The other caches will read this invalidation signal and if its processor attempts to access that variable, it will result in a cache miss and the variable will be read from main memory.<ref name="neu01" /> | In case of invalidation, an invalidation message is sent onto the inter-processor bus when a variable is changed. The other caches will read this invalidation signal and if its processor attempts to access that variable, it will result in a cache miss and the variable will be read from main memory.<ref name="neu01" /> | ||

The update method results in significant amount of traffic on the inter-processor bus as the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method. | The update method results in significant amount of traffic on the inter-processor bus as the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method. In order to improve cache coherence performance over the years, several protocols have been proposed.<ref name="hit01" /> | ||

In order to improve cache coherence performance over the years, several protocols have been proposed. | |||

===MSI=== | ===MSI=== | ||

[[Image:MSI.jpg|thumbnail|right|150px|MSI State Diagram]] | [[Image:MSI.jpg|thumbnail|right|150px|MSI State Diagram]] | ||

MSI stands for Modified, Shared, and Invalid, based on the three states that a line of cache can be in. The modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The invalid state means that the value of the variable has been modified by another cache and this value is invalid; the cache must read a new value from main memory (or another cache). | ''MSI'' stands for '''Modified''', '''Shared''', and '''Invalid''', based on the three states that a line of cache can be in. The modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The invalid state means that the value of the variable has been modified by another cache and this value is invalid; the cache must read a new value from main memory (or another cache).<ref name="hit01" /> ''MSI'' is also sometimes referred to as ''Synapse'', an equivalent four-state protocol with the states '''Not-In''', '''Valid''', '''Dirty''', and '''Invalid'''.<ref name="yan1143">Yang, Quing. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=30868&tag=1.{{cite journal | ||

| url = http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=30868&tag=1 | |||

| title = Analysis and comparison of cache coherence protocols for a packet-switched multiprocessor | |||

| last1 = Yang | |||

| first1 = Quing | |||

| last2 = Bhuyan | |||

| first2 = Laxmi | |||

| last3 = Liu | |||

| first3 = Bao-Chyn | |||

| date = August 1989 | |||

| journal = IEEE Transactions on Computers | |||

| volume = 38 | |||

| issue = 8 | |||

| pages = 1143-1153 | |||

| doi = 10.1109/12.30868 | |||

| accessdate = November 8, 2013 | |||

| separator = , | |||

}} | |||

</ref> | |||

A drawback to this protocol occurs when a single processor wants to read blocks and then write to them without another processor sharing that block. After reading the block, a bus transaction places the block into a shared state. The write then occurs and another bus transaction is sent to invalidate the shared copy. This second transaction is useless as no other processors are sharing the block, but the MSI protocol has no way to specify this. | A drawback to this protocol occurs when a single processor wants to read blocks and then write to them without another processor sharing that block. After reading the block, a bus transaction places the block into a shared state. The write then occurs and another bus transaction is sent to invalidate the shared copy. This second transaction is useless as no other processors are sharing the block, but the MSI protocol has no way to specify this. | ||

| Line 53: | Line 69: | ||

===MESI=== | ===MESI=== | ||

[[Image:MESI.jpg|thumbnail|right|150px|MESI State Diagram]] | [[Image:MESI.jpg|thumbnail|right|150px|MESI State Diagram]] | ||

MESI stands for Modified, Exclusive, Shared, and Invalid. The modified and invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state; the exclusive state. The exclusive state means that the variable is in only this cache and the value of it matches the value within the main memory. This now means that the shared state indicates that the variable is contained in more than one cache. | ''MESI'' stands for '''Modified''', '''Exclusive''', '''Shared''', and '''Invalid'''. The modified and invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state; the exclusive state. The exclusive state means that the variable is in only this cache and the value of it matches the value within the main memory. This now means that the shared state indicates that the variable is contained in more than one cache.<ref name="hit01" /> ''MESI'' is also sometimes referred to as ''Illinois'', an equivalent five-state protocol with the states '''Not-In''', '''Valid-Only''', '''Shared''', '''Dirty''', and '''Invalid'''.<ref name="yan1143" /> | ||

===MOSI=== | ===MOSI=== | ||

The ''MOSI'' protocol is identical to the ''MSI'' protocol except that it adds an owned state. The '''Owned''' state means that the processor "owns" the variable and will provide the current value to other caches when requested (or at least it will decide if it will provide it when asked). This is useful because another cache will not have to read the value from main memory and will receive it from the owning cache much, much, faster.<ref name="hit01" /> ''MOSI'' is sometimes also referred to as ''Berkeley'', an equivalent five-state protocol with the states '''Not-In''', '''Shared-Dirty''', '''Shared''', '''Dirty''', and '''Invalid'''.<ref name="yan1143" /> | |||

The MOSI protocol is identical to the MSI protocol except that it adds an owned state. The | |||

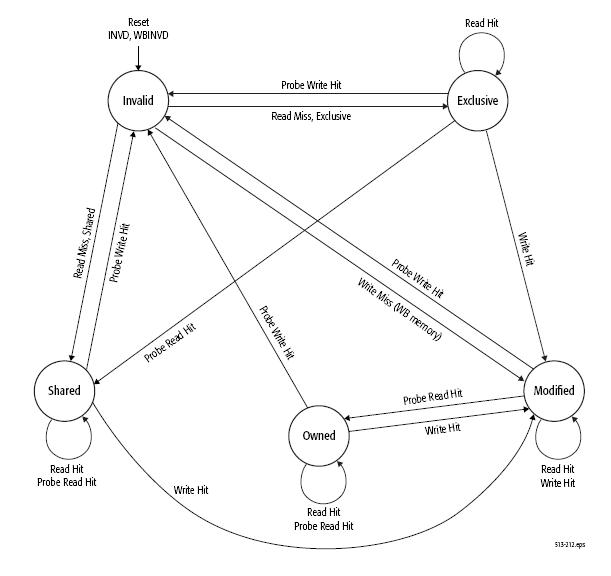

===MOESI=== | ===MOESI=== | ||

[[Image:MOESI.jpg|thumbnail|right|150px|MOESI State Diagram]] | [[Image:MOESI.jpg|thumbnail|right|150px|MOESI State Diagram]] | ||

The MOESI protocol is a combination of the MESI and MOSI protocols. | The ''MOESI'' protocol is a combination of the ''MESI'' and ''MOSI'' protocols, having separate a '''Owned''' state as well as a '''Exclusive''' state.<ref name="hit01" /> According to the AMD64 Architecture Programmer's Manual, | ||

<blockquote> | |||

The specific operation of external-bus signals and transactions and how they influence a cache MOESI state are implementation dependent. For example, an implementation could convert a write miss to a WB [write-back] memory type into two separate MOESI-state changes. The first would be a read-miss placing the cache line in the exclusive state. This would be followed by a write hit into the exclusive cache line, changing the cache-line state to modified.<ref name="amd169">Advanced Micro Devices. http://web.archive.org/web/20110719185356/http://support.amd.com/us/Embedded_TechDocs/24593.pdf.{{cite manual | |||

| url = http://web.archive.org/web/20110719185356/http://support.amd.com/us/Embedded_TechDocs/24593.pdf | |||

| title = AMD64 Architecture Programmer’s Manual | |||

| author = Advanced Micro Devices | |||

| date = May 2011 | |||

| series = AMD64 Technology | |||

| volume = Volume 2: System Programming | |||

| publication = 24593 | |||

| revision = 3.18 | |||

| pages = 169-172 | |||

| accessdate = November 19, 2013 | |||

| separator = , | |||

}} | |||

</ref> | |||

</blockquote> | |||

As with ''MOSI'', ''MOESI'' achieves better performance in situations where there is a read miss, and there is a more up-to-date copy of data '''Owned''' by a separate cache than the data in memory. In this case, the data can be transferred directly from the owner to the requesting processor's cache without having to flush it back to memory.<ref name="hit01" /> If, however, the copy of data in memory is already up-to-date, then the transfer must be made from memory since no processor "owns" the data and that is a pre-requisite for bus transfers between caches.<ref name="amd169" /> | |||

===MESIF(new)=== | ===MESIF (new)=== | ||

[[Image:Mesif.jpg|right|150px|MESIF State Diagram]] | [[Image:Mesif.jpg|right|150px|MESIF State Diagram]] | ||

MESIF is the same as MESI with the only difference being the addition of the | ''MESIF'' is the same as ''MESI'' with the only difference being the addition of the '''Forward''' state. This state signifies a go-to state for a line in cache. When this state is set, the cache in the F state (housing cache) is the first place where the line will be looked up. When the same line in cache is in the S state in other caches, only one cache can have the line set in the F state.<br> | ||

MESIF was designed as a point to point protocol, with a 2 hop latency for common operations. This eliminates the hop to the bus, creating faster communication to the receiving cache. Because of the speed, MESIF can out perform a bus based protocol.<br> | |||

[[File:2-3hop.png]]<br> | |||

we can see in this performance graph from [https://researchspace.auckland.ac.nz/bitstream/handle/2292/11594/MESIF-2009.pdf?sequence=6 Auckland], that under different loads the 2 hop always has better performance than the standard 3 hop baseline.<br> | |||

This 2 hop method, allowed the number of caches to increase. Allowing the benefits of having even more processors, to again out weigh the slow down that plagued a 3 hop cache coherence protocol. | |||

<br> | |||

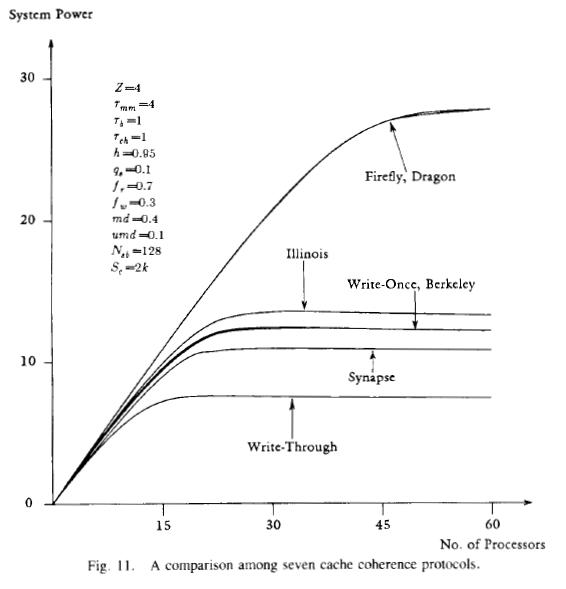

===Performance Comparison(new)=== | |||

[[Image:CacheCoherenceProtocolComparison.jpg|thumbnail|right|150px|Protocol performance comparison]] | |||

[[Image:2-3hop.png|thumbnail|right|150px|MESIF comparison]] | |||

The ''MESIF'' protocol was designed to perform better with lots of cache sharing. It is also highly efficient for a small number of nodes. The system is scale-able to a larger system. It is scale-able by a hierarchical manner. By being hierarchical, a cluster can interact as if it was a single node. MESIF was designed as a point to point protocol, with a 2 hop latency for common operations. Because of the speed, MESIF can out perform a bus based protocol. Common bus based protocols use 3 hops. <br> | |||

With the hierarchical scaling, This protocol will out perform any bus based protocol on big tasks by cutting down the hops to keep processors in sync. this small gain can greatly improve performance on multicore machines, bettered by the speed of the processor. <br> | |||

By having the F state in the designated processor, it cuts down on the bus traffic to keep the caches updated. The bus protocol provides assurance that the order of messages will be kept. | |||

Performance is gained on a Exclusive hit or a modified hit, saving a step in traffic to propagate the other caches. | |||

=Memory Consistency Problem= | =Memory Consistency Problem= | ||

| Line 181: | Line 229: | ||

<span id="8foot">[[#8body|8.]]</span> | <span id="8foot">[[#8body|8.]]</span> | ||

http://people.freebsd.org/~lstewart/articles/cache-performance-x86-2009.pdf<br> | http://people.freebsd.org/~lstewart/articles/cache-performance-x86-2009.pdf<br> | ||

<span id="9foot">[[#9body|9.]]</span> | |||

https://researchspace.auckland.ac.nz/bitstream/handle/2292/11594/MESIF-2009.pdf?sequence=6<br> | |||

Latest revision as of 17:27, 19 November 2013

Original Article

This article is a revision of http://wiki.expertiza.ncsu.edu/index.php/CSC_456_Spring_2012/ch7_MN.

Introduction

Though the migration from uniprocessor systems to multiprocessor systems is not new, the world of parallel computers is undergoing continuous change. Parallel computers, which started as high-end supercomputing systems for carrying out huge calculations, are now ubiquitous and present in all mainstream architectures for servers, desktops, and embedded systems. Designing parallel architectures that more closely meet programmers' needs and expectations involves exciting and challenging changes. The three main areas being studied by researchers today are cache coherence, memory consistency, and synchronization.

Cache Coherence Problem

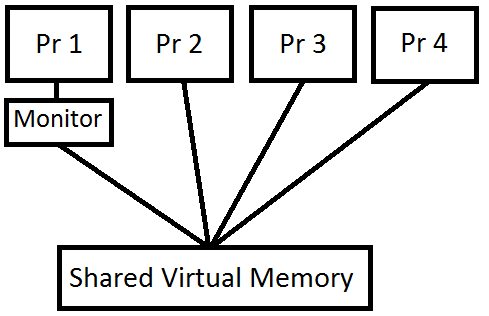

In a system with a single processor (single core), maintaining cache coherence is simple, but in a multiprocessor system it is much more complicated. Data can be present in any processor's cache, and the protocol needs to ensure that the data is the same in all caches. If it cannot ensure that all the caches are the same, it needs to flag the cache line to indicate that it is not up to date.

In Figure 1, there is a four-processor shared-memory system in which each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. If P2 then reads the same memory location, M1 also gets stored in P2's cache. Now, if P1 changes the value of M1, the two copies of the same data, residing in different caches, become different. When P2 then operates on M1, it uses the stale value of M1 stored in its cache. It is the responsibility of the cache coherence protocol to prevent this. Hardware support is needed to provide a coherent view of data in multiple caches, so that a write made in one cache propagates to the other copies. This is known as the write propagation requirement.<ref name="neu01">Neupane, Mahesh. "Cache Coherence." http://cse.csusb.edu/schubert/tutorials/csci610/w04/MN_Cache_Coherence.pdf </ref>

One may think that a cache write policy can provide cache coherence, but this is incorrect. A write policy (such as write-through or write-back) only controls how a change to a cached value is propagated to a lower-level cache or to main memory; it is not responsible for propagating the change to other caches.
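The stale-value scenario above, and why a write policy alone does not prevent it, can be sketched in a few lines of Python. This is a minimal, hypothetical model (the `Cache` class and the dictionary standing in for main memory are illustrative assumptions): each cache writes through to memory, but nothing propagates the write to the other cache.

```python
# Hypothetical model: private write-through caches with NO coherence protocol.
# The class name and the address "M1" are illustrative, not from a real simulator.

class Cache:
    def __init__(self, memory):
        self.memory = memory     # shared dict: address -> value
        self.lines = {}          # private copies: address -> value

    def read(self, addr):
        if addr not in self.lines:                 # miss: fetch from memory
            self.lines[addr] = self.memory.get(addr, 0)
        return self.lines[addr]                    # hit: use the local copy

    def write(self, addr, value):
        self.lines[addr] = value                   # update own copy
        self.memory[addr] = value                  # write-through to memory
        # Nothing here notifies the other caches: no write propagation.

memory = {"M1": 10}
p1, p2 = Cache(memory), Cache(memory)

p1.read("M1")            # P1 caches M1 = 10
p2.read("M1")            # P2 caches M1 = 10
p1.write("M1", 42)       # memory is updated to 42 ...
print(memory["M1"], p2.read("M1"))   # ... but P2 still reads 10: prints "42 10"
```

Memory is correct after the write-through, yet P2 keeps using its stale copy, which is exactly the gap a coherence protocol has to close.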

Cache Coherence Protocols

In order to maintain coherence across caches, there must be a way to identify whether caches contain stale data. A snooping protocol operates on the inter-processor bus (shown in Figure 1 as the line connecting the caches to memory) to detect this and, if necessary, to trigger changes to the data in the caches. The two basic methods a snooping protocol uses to notify other processors when a processor changes something in its cache are the update and invalidate methods. In the update method, if variable 'x' is modified by processor 1, processor 1 has its snooper send the updated value of 'x' onto the inter-processor bus. Each cache's snooper listens to the bus, and if it sees a variable it holds a copy of, the cache reads the updated value. This ensures that all caches have the most up-to-date value of the variable.<ref name="hit01">Hitchcock, Russell. "Cache Coherency." http://www.windowsnetworking.com/articles-tutorials/common/Cache-Coherency.html </ref>

In the invalidate method, an invalidation message is sent onto the inter-processor bus when a variable is changed. Every other cache that holds a copy of the variable marks it invalid; if its processor later attempts to access that variable, the access results in a cache miss and the variable is read again from main memory.<ref name="neu01" />

The update method generates a significant amount of traffic on the inter-processor bus, because an update is sent onto the bus every time the variable is written. The invalidation method only requires an invalidation signal the first time the variable is altered: once the other copies are invalid, later writes by the same processor hit in its own cache and need no bus traffic. This is why invalidation is the preferred method. Over the years, several protocols have been proposed to improve cache coherence performance.<ref name="hit01" />
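A small Python sketch can make the traffic argument concrete. It models a snooping bus under either policy and counts only the update or invalidate broadcasts. The `Bus` and `SnoopingCache` classes and the `run` helper are hypothetical; the model assumes caches never evict lines, that memory also snoops update broadcasts, and that under invalidation a cache holding the only copy of a line supplies it (and writes it back) when another cache misses on it.

```python
class Bus:
    def __init__(self, policy):
        assert policy in ("update", "invalidate")
        self.policy = policy
        self.caches = []
        self.memory = {}
        self.coherence_msgs = 0        # update/invalidate broadcasts only

class SnoopingCache:
    def __init__(self, bus):
        self.bus = bus
        self.lines = {}                # address -> value
        self.sole_copy = set()         # addresses no other cache holds
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:
            value = self.bus.memory.get(addr, 0)
            for other in self.bus.caches:
                if other is not self and addr in other.sole_copy:
                    # The holder of the only (newest) copy supplies it,
                    # writes it back, and is no longer the sole holder.
                    value = other.lines[addr]
                    self.bus.memory[addr] = value
                    other.sole_copy.discard(addr)
            self.lines[addr] = value
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        if self.bus.policy == "invalidate" and addr in self.sole_copy:
            return                     # nobody else has a copy: no bus traffic
        self.bus.coherence_msgs += 1
        for other in self.bus.caches:
            if other is not self and addr in other.lines:
                if self.bus.policy == "update":
                    other.lines[addr] = value      # snooper takes the new value
                else:
                    del other.lines[addr]          # snooper drops its stale copy
                    other.sole_copy.discard(addr)
        if self.bus.policy == "update":
            self.bus.memory[addr] = value          # memory snoops the update too
        else:
            self.sole_copy.add(addr)

def run(policy, writes=5):
    bus = Bus(policy)
    p0, p1 = SnoopingCache(bus), SnoopingCache(bus)
    p0.read("x")
    p1.read("x")                       # both caches now hold x
    for i in range(1, writes + 1):
        p0.write("x", i)               # P0 writes x repeatedly
    return bus.coherence_msgs, p1.read("x")

print(run("update"))       # (5, 5)
print(run("invalidate"))   # (1, 5)
```

With five writes by P0 to a line that P1 also caches, the update policy broadcasts five times while the invalidate policy broadcasts once, yet P1 reads the final value in both cases.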

MSI

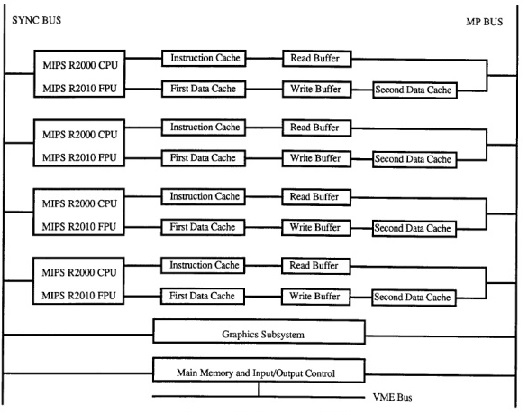

MSI stands for Modified, Shared, and Invalid, the three states that a cache line can be in. The Modified state means that a variable in the cache has been modified and therefore has a different value from the one in main memory; the cache is responsible for writing the variable back to main memory. The Shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to main memory. The Invalid state means that the value of the variable has been modified by another cache and this copy is invalid; the cache must read a new value from main memory (or from another cache).<ref name="hit01" /> MSI is also sometimes referred to as Synapse, an equivalent four-state protocol with the states Not-In, Valid, Dirty, and Invalid.<ref name="yan1143">Yang, Quing; Bhuyan, Laxmi; Liu, Bao-Chyn. "Analysis and comparison of cache coherence protocols for a packet-switched multiprocessor." IEEE Transactions on Computers 38(8):1143-1153, August 1989. doi:10.1109/12.30868. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=30868&tag=1 </ref>

A drawback of this protocol appears when a single processor reads a block and then writes it while no other processor shares the block. The read miss issues a bus transaction that brings the block into the Shared state. The write then issues a second bus transaction to invalidate other shared copies. This second transaction is useless, since no other processor holds the block, but MSI has no state that records that a block is unshared.
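The MSI transitions and the drawback above can be sketched as a pair of Python functions. The state names come from the text; the bus transaction names (`BusRd`, `BusRdX`, `BusUpgr`, `Flush`) follow common textbook usage and are an assumption here, as are the function names.

```python
M, S, I = "M", "S", "I"

def msi_processor(state, op):
    """Next state and bus transaction (or None) for a local PrRd/PrWr."""
    if op == "PrRd":
        return (S, "BusRd") if state == I else (state, None)
    if op == "PrWr":
        if state == I:
            return M, "BusRdX"       # fetch the block with intent to modify
        if state == S:
            return M, "BusUpgr"      # invalidate sharers, even if there are none
        return M, None               # already Modified
    raise ValueError(f"unknown op {op!r}")

def msi_snoop(state, bus_op):
    """Next state and action (or None) when another cache's transaction is observed."""
    if state == M and bus_op == "BusRd":
        return S, "Flush"            # supply the dirty block and write it back
    if state == M and bus_op == "BusRdX":
        return I, "Flush"
    if state == S and bus_op in ("BusRdX", "BusUpgr"):
        return I, None
    return state, None

def trace(ops, state=I):
    """Apply local operations in order and collect the bus transactions issued."""
    txns = []
    for op in ops:
        state, txn = msi_processor(state, op)
        if txn:
            txns.append(txn)
    return state, txns

print(trace(["PrRd", "PrWr"]))   # ('M', ['BusRd', 'BusUpgr'])
```

A read followed by a write on an unshared block costs two bus transactions, because the Shared state cannot distinguish "one copy" from "many copies".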

MESI

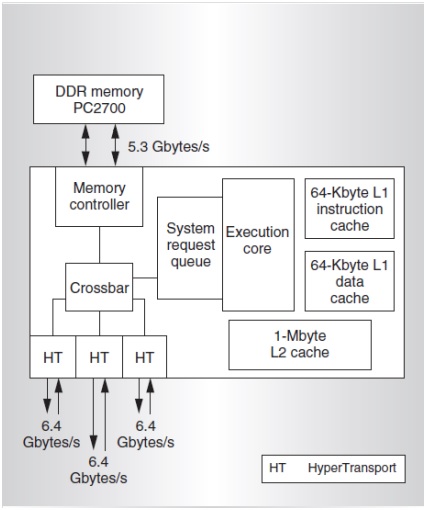

MESI stands for Modified, Exclusive, Shared, and Invalid. The Modified and Invalid states are the same as in the MSI protocol. This protocol introduces a new state, the Exclusive state, which means that the variable is in this cache only and its value matches the value in main memory. The Shared state now indicates that the variable may be contained in more than one cache.<ref name="hit01" /> Because a line in the Exclusive state is known to be unshared, a write to it can move the line to Modified without a bus transaction, which removes the MSI drawback described above. MESI is also sometimes referred to as Illinois, an equivalent five-state protocol with the states Not-In, Valid-Only, Shared, Dirty, and Invalid.<ref name="yan1143" />
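The same kind of sketch for MESI shows how the Exclusive state avoids the extra transaction. It assumes, as textbook MESI designs commonly do, that a read miss can tell whether any other cache holds the block (modeled here by the hypothetical `others_have_copy` flag); function and transaction names are illustrative.

```python
M, E, S, I = "M", "E", "S", "I"

def mesi_processor(state, op, others_have_copy=False):
    """Next state and bus transaction (or None) for a local PrRd/PrWr.
    others_have_copy models an assumed 'shared' signal raised during BusRd."""
    if op == "PrRd":
        if state == I:
            return (S if others_have_copy else E), "BusRd"
        return state, None
    if op == "PrWr":
        if state == I:
            return M, "BusRdX"
        if state == S:
            return M, "BusUpgr"      # other copies may exist: invalidate them
        return M, None               # E or M: silent upgrade, no bus transaction
    raise ValueError(f"unknown op {op!r}")

def mesi_snoop(state, bus_op):
    """Next state and action (or None) when another cache's transaction is observed."""
    if bus_op == "BusRd":
        if state == M:
            return S, "Flush"        # supply dirty data and write it back
        if state == E:
            return S, None           # the line is no longer exclusive
        return state, None
    if bus_op in ("BusRdX", "BusUpgr"):
        return I, ("Flush" if state == M else None)
    return state, None

def trace(ops, others_have_copy=False, state=I):
    """Apply local operations in order and collect the bus transactions issued."""
    txns = []
    for op in ops:
        state, txn = mesi_processor(state, op, others_have_copy)
        if txn:
            txns.append(txn)
    return state, txns

print(trace(["PrRd", "PrWr"]))                         # ('M', ['BusRd'])
print(trace(["PrRd", "PrWr"], others_have_copy=True))  # ('M', ['BusRd', 'BusUpgr'])
```

When no other cache holds the block, the read lands in E and the write upgrades silently, so the sequence costs one bus transaction instead of MSI's two.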

MOSI

The MOSI protocol is identical to the MSI protocol except that it adds an Owned state. The Owned state means that the processor "owns" the variable and will supply the current value to other caches when they request it (or at least decides whether to supply it when asked). This is useful because another cache then receives the value from the owning cache, which is much faster than reading it from main memory.<ref name="hit01" /> MOSI is also sometimes referred to as Berkeley, an equivalent five-state protocol with the states Not-In, Shared-Dirty, Shared, Dirty, and Invalid.<ref name="yan1143" />
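The benefit of the Owned state can be sketched as a snoop function that decides who supplies data on another cache's miss. This assumes the usual interpretation that an Owned line is dirty with respect to memory and that its owner supplies the data instead of memory; the function names and the `BusRd`/`BusRdX`/`BusUpgr` transaction names are illustrative assumptions.

```python
M, O, S, I = "M", "O", "S", "I"

def mosi_snoop(state, bus_op):
    """Next state and whether this cache supplies the data."""
    if bus_op == "BusRd":
        if state in (M, O):
            return O, True           # owner forwards the block; memory stays stale
        return state, False
    if bus_op == "BusRdX":
        return I, state in (M, O)    # owner hands the block to the new writer
    if bus_op == "BusUpgr":
        return I, False              # requester already has the data
    return state, False

def serve_read_miss(other_states):
    """Resolve a read miss; return (data source, other caches' new states)."""
    source, new_states = "memory", []
    for st in other_states:
        nxt, supplied = mosi_snoop(st, "BusRd")
        if supplied:
            source = "owner cache"
        new_states.append(nxt)
    return source, new_states

print(serve_read_miss([M, I]))   # ('owner cache', ['O', 'I'])
print(serve_read_miss([O, S]))   # ('owner cache', ['O', 'S'])
print(serve_read_miss([S, I]))   # ('memory', ['S', 'I'])
```

A Modified line that is read by another cache becomes Owned rather than being written back, so later misses keep being served cache-to-cache.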

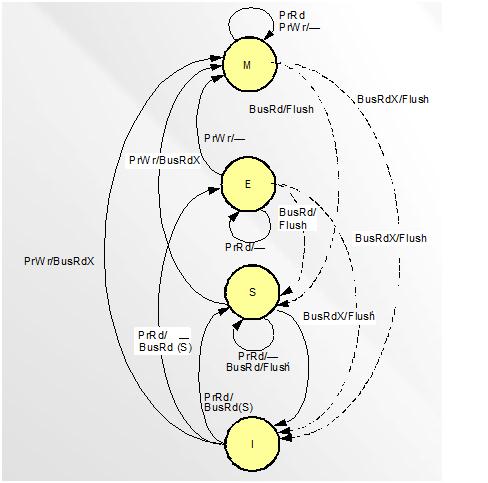

MOESI

The MOESI protocol is a combination of the MESI and MOSI protocols, having both an Owned state and an Exclusive state.<ref name="hit01" /> According to the AMD64 Architecture Programmer's Manual:

The specific operation of external-bus signals and transactions and how they influence a cache MOESI state are implementation dependent. For example, an implementation could convert a write miss to a WB [write-back] memory type into two separate MOESI-state changes. The first would be a read-miss placing the cache line in the exclusive state. This would be followed by a write hit into the exclusive cache line, changing the cache-line state to modified.<ref name="amd169">Advanced Micro Devices. AMD64 Architecture Programmer's Manual, Volume 2: System Programming. Publication 24593, revision 3.18, May 2011, pp. 169-172. http://web.archive.org/web/20110719185356/http://support.amd.com/us/Embedded_TechDocs/24593.pdf </ref>
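The implementation choice described in the manual excerpt can be sketched as two ordinary state changes. This is only one possible implementation (the manual stresses that the behavior is implementation dependent); it assumes the read miss fills the line in the Exclusive state only when no other cache holds it, and all function names are hypothetical.

```python
def read_miss_fill(others_have_copy):
    """State a read miss leaves the line in (assumed: E when no other cache holds it)."""
    return "S" if others_have_copy else "E"

def write_hit(state):
    """Next state for a write hit, plus any bus work it still needs."""
    if state in ("E", "M"):
        return "M", []                           # no other copies: purely local
    if state in ("O", "S"):
        return "M", ["invalidate other copies"]  # sharers may exist
    raise ValueError("a write hit needs a valid line")

def write_miss_as_two_steps(others_have_copy=False):
    """One possible WB write-miss implementation: a read miss, then a write hit."""
    states = ["I"]
    states.append(read_miss_fill(others_have_copy))
    final, extra = write_hit(states[-1])
    states.append(final)
    return states, extra

print(write_miss_as_two_steps())                       # (['I', 'E', 'M'], [])
print(write_miss_as_two_steps(others_have_copy=True))  # (['I', 'S', 'M'], ['invalidate other copies'])
```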

As with MOSI, MOESI achieves better performance on a read miss when another cache Owns a copy of the data that is more up to date than the copy in memory. In this case, the data can be transferred directly from the owner to the requesting processor's cache without first being flushed back to memory.<ref name="hit01" /> If, however, the copy in memory is already up to date, the transfer must be made from memory, because no cache owns the data and ownership is a prerequisite for cache-to-cache transfers.<ref name="amd169" />
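The read-miss case for MOESI can be sketched as follows, following the text above: a cache holding the line in Modified or Owned supplies the data, and otherwise memory does. The transitions M to O and E to S on another cache's read, and the requester filling in E when no other copy exists, are assumptions based on common MOESI descriptions; the function name is hypothetical.

```python
def moesi_read_miss(other_states):
    """Return (data source, other caches' new states, requester's new state)."""
    has_owner = any(st in ("M", "O") for st in other_states)
    transitions = {"M": "O", "E": "S"}   # O, S and I are unchanged
    new_states = [transitions.get(st, st) for st in other_states]
    source = "owner cache" if has_owner else "memory"   # clean data comes from memory
    requester = "S" if any(st != "I" for st in other_states) else "E"
    return source, new_states, requester

print(moesi_read_miss(["M", "I"]))   # ('owner cache', ['O', 'I'], 'S')
print(moesi_read_miss(["E", "I"]))   # ('memory', ['S', 'I'], 'S')
print(moesi_read_miss(["I", "I"]))   # ('memory', ['I', 'I'], 'E')
```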

MESIF

MESIF is the same as MESI except for the addition of the Forward (F) state, which designates a go-to cache for a line. When a line is held by several caches, at most one of them holds it in the F state while the others hold it in the S state, and the cache in the F state is the first place the line is looked up when it is requested.
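The Forward state's role can be sketched as a function that picks the single responder for a read miss on a shared line. It only covers lines that other caches hold in F, S, or I. It assumes, as MESIF is commonly described, that the forwarding duty moves to the newest requester (the old F copy drops to S), and it further assumes that memory responds when no F copy exists; the function name is hypothetical.

```python
def mesif_read_miss(other_states):
    """Return (responder, other caches' new states, requester's new state).
    responder is the index of the forwarding cache, or "memory"."""
    assert all(st in ("F", "S", "I") for st in other_states), "sketch covers F/S/I only"
    assert other_states.count("F") <= 1, "at most one cache may hold the line in F"
    responder, new_states = "memory", list(other_states)
    for i, st in enumerate(other_states):
        if st == "F":
            responder = i          # only the F cache answers; S holders stay silent
            new_states[i] = "S"    # assumed: forwarding duty passes to the requester
    # Assumed: the requester becomes the new forwarder if anyone else holds the line.
    requester = "F" if any(st != "I" for st in other_states) else "E"
    return responder, new_states, requester

print(mesif_read_miss(["S", "F", "I"]))   # (1, ['S', 'S', 'I'], 'F')
print(mesif_read_miss(["S", "S"]))        # ('memory', ['S', 'S'], 'F')
print(mesif_read_miss(["I", "I"]))        # ('memory', ['I', 'I'], 'E')
```

However the line is requested, at most one cache ends up in F, so a request is answered by a single cache rather than by every sharer.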

MESIF was designed as a point-to-point protocol with a two-hop latency for common operations. This eliminates the hop to the bus, which speeds up communication with the receiving cache. Because of this lower latency, MESIF can outperform a bus-based protocol.

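The performance graph in the Auckland study (https://researchspace.auckland.ac.nz/bitstream/handle/2292/11594/MESIF-2009.pdf?sequence=6) shows that, under each of the loads tested, the two-hop protocol performs better than the standard three-hop baseline.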

This 2 hop method, allowed the number of caches to increase. Allowing the benefits of having even more processors, to again out weigh the slow down that plagued a 3 hop cache coherence protocol.
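The hop-count argument can be made concrete with simple arithmetic. The sketch assumes every hop costs the same and uses a hypothetical 40 ns per hop; real per-hop latencies vary, so only the ratio is meaningful here.

```python
from fractions import Fraction

def transfer_latency(hops, per_hop_ns):
    """Latency of a cache-to-cache transfer if every hop costs the same (assumption)."""
    return hops * per_hop_ns

PER_HOP_NS = 40                                  # hypothetical, for illustration only
baseline = transfer_latency(3, PER_HOP_NS)       # three-hop protocol: 120 ns
mesif = transfer_latency(2, PER_HOP_NS)          # two-hop MESIF path: 80 ns
saving = Fraction(baseline - mesif, baseline)    # fraction of latency removed
print(baseline, mesif, saving)                   # 120 80 1/3
```

Under this equal-cost assumption the two-hop path always removes one third of the transfer latency, whatever the per-hop value.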

Performance Comparison

The MESIF protocol was designed to perform well when there is a lot of cache sharing, and it is also highly efficient for a small number of nodes. It scales to larger systems hierarchically: a cluster of nodes can interact with the rest of the system as if it were a single node. Because it is a point-to-point protocol with a two-hop latency for common operations, MESIF can outperform common bus-based protocols, which use three hops.

With hierarchical scaling, the protocol can outperform bus-based protocols on large tasks by reducing the number of hops needed to keep processors in sync. This small per-access gain can greatly improve performance on multicore machines, and the benefit grows with processor speed.

Designating a single cache to hold a shared line in the F state reduces the bus traffic needed to keep the caches updated, since only that cache responds to a request for the line. The bus protocol guarantees that the order of messages is preserved. Performance is also gained on a hit to an Exclusive or Modified line, which saves the traffic otherwise needed to update the other caches.

Memory Consistency Problem

Memory consistency deals with the ordering of memory operations (loads and stores) to different memory locations. In a single-processor system, code executes correctly if the compiler preserves the order of accesses to synchronization variables and other dependent variables. In a shared-memory model with multiple processors, however, two threads may access a shared data element, such as a synchronization variable, and the threads' output then depends on which thread accesses that element first. As a result, the program may not produce the expected value. Maintaining program order is very important for memory consistency, but it comes at a cost in performance.
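The ordering problem can be illustrated by enumerating every interleaving of two threads that keeps each thread's own program order, run against a single shared memory. The writer stores `data` and then sets `flag`; the reader loads `flag` and then `data`. The variable names and the tuple encoding of operations are illustrative assumptions.

```python
from itertools import combinations

def interleavings(a, b):
    """Yield every merge of a and b that keeps each thread's program order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        chosen, ia, ib, merged = set(slots), 0, 0, []
        for k in range(n):
            if k in chosen:
                merged.append(a[ia])
                ia += 1
            else:
                merged.append(b[ib])
                ib += 1
        yield merged

def run(schedule):
    """Execute one interleaving against a single shared memory."""
    mem, regs = {"data": 0, "flag": 0}, {}
    for op, var, arg in schedule:
        if op == "st":
            mem[var] = arg
        else:                      # "ld": load var into register arg
            regs[arg] = mem[var]
    return regs["r1"], regs["r2"]

writer = [("st", "data", 42), ("st", "flag", 1)]      # produce, then publish
reader = [("ld", "flag", "r1"), ("ld", "data", "r2")]

outcomes = {run(s) for s in interleavings(writer, reader)}
print(sorted(outcomes))   # [(0, 0), (0, 42), (1, 42)]
```

The reader's result depends on which thread reaches the shared variables first, but as long as program order is kept, a reader that sees `flag == 1` always sees `data == 42`: the outcome `(1, 0)` never appears.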

Memory Consistency Models

The memory consistency model of a shared-memory multiprocessor is a formal specification of how the memory system appears to the programmer. It closes the gap between the behavior the programmer expects and the behavior the system actually supports. Effectively, the consistency model restricts the values that a read can return in a shared-memory program execution.

In a single-processor system, maintaining memory consistency only requires that the compiler preserve program order when accessing synchronization variables. In a multiprocessor system, however, the accesses of each processor must also appear, at least partially, to execute in program order to all other processors.

Memory semantics in Uniprocessor systems

Uniprocessor languages use simple sequential semantics for memory operations, which allow the programmer to assume that all memory operations occur one at a time in the sequential order specified by the program. Thus, a read of a location can be expected to return the value of the last write to that location in program order. It is sufficient to maintain only uniprocessor data and control dependences: the compiler and hardware can freely reorder operations to different locations as long as these dependences are respected. This enables compiler optimizations such as register allocation, code motion, and loop transformations, and hardware optimizations such as pipelining, multiple issue, write-buffer bypassing and forwarding, and lockup-free caches, all of which lead to overlapping and reordering of memory operations.[4]
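The rule that only uniprocessor dependences need to be kept can be sketched as follows. `may_swap` is a hypothetical helper that allows two adjacent memory operations to be reordered when they touch different addresses (or are both loads); this toy model ignores register and control dependences.

```python
def execute(program):
    """Run straight-line ('st', addr, value) / ('ld', addr, reg) ops in order."""
    mem, regs = {}, {}
    for op, addr, arg in program:
        if op == "st":
            mem[addr] = arg
        else:
            regs[arg] = mem.get(addr, 0)
    return mem, regs

def may_swap(op1, op2):
    """Hypothetical rule: adjacent ops may be reordered if they touch different
    addresses or are both loads (register and control dependences are ignored)."""
    return op1[1] != op2[1] or (op1[0] == "ld" and op2[0] == "ld")

program = [("st", "a", 1), ("ld", "a", "r1"), ("st", "b", 2)]
reordered = [program[0], program[2], program[1]]   # swap independent ld a / st b
broken = [program[1], program[0], program[2]]      # swap dependent st a / ld a

print(may_swap(program[1], program[2]), may_swap(program[0], program[1]))  # True False
print(execute(program) == execute(reordered))      # True: same result
print(execute(program) == execute(broken))         # False: r1 reads 0
```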

Memory semantics in multiprocessor systems

Programmers implicitly expect that:

- Memory accesses of each processor take place in program order.

- Each memory access is performed atomically.

A strong consistency model that attempts uniprocessor-like consistency could create a global bottleneck and cost performance. Weak consistency models are therefore deployed to improve performance. The advantages of such models are:

- They support out-of-order execution within individual CPUs.

- They ease the latency issues caused by near-simultaneous accesses by different CPUs.
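One common way hardware relaxes ordering is a per-CPU store buffer that later loads may bypass (the write-buffer bypassing mentioned earlier); this is roughly the behavior usually described for x86 processors as total store order (TSO). The following minimal sketch, under that assumption, exhaustively explores a two-processor program in which each CPU stores 1 to its own variable and then loads the other's. The machine model and the function name `explore` are hypothetical.

```python
# Hypothetical TSO-like machine: each CPU has a FIFO store buffer.
# Program (x = y = 0 initially):  CPU0: x = 1; r0 = y   CPU1: y = 1; r1 = x
PROGS = (
    (("store", "x", 1), ("load", "y", "r0")),
    (("store", "y", 1), ("load", "x", "r1")),
)


def explore():
    """Return every (r0, r1) reachable when stores are buffered.

    State = (pcs, buffers, memory, registers), all tuples so states are
    hashable. A CPU may execute its next instruction or drain the oldest
    entry of its buffer to memory, in any order.
    """
    start = ((0, 0), ((), ()), (("x", 0), ("y", 0)), ())
    seen, stack, outcomes = set(), [start], set()
    while stack:
        state = stack.pop()
        if state in seen:
            continue
        seen.add(state)
        pcs, bufs, mem, regs = state
        finished = True
        for cpu in (0, 1):
            if pcs[cpu] < len(PROGS[cpu]):          # execute next instruction
                finished = False
                op, loc, arg = PROGS[cpu][pcs[cpu]]
                new_pcs = list(pcs)
                new_pcs[cpu] += 1
                new_bufs, new_regs = list(bufs), dict(regs)
                if op == "store":                   # goes into the buffer only
                    new_bufs[cpu] = bufs[cpu] + ((loc, arg),)
                else:                               # own buffer first, then memory
                    own = [v for where, v in bufs[cpu] if where == loc]
                    new_regs[arg] = own[-1] if own else dict(mem)[loc]
                stack.append((tuple(new_pcs), tuple(new_bufs), mem,
                              tuple(sorted(new_regs.items()))))
            if bufs[cpu]:                           # drain oldest buffered store
                finished = False
                (loc, val), rest = bufs[cpu][0], bufs[cpu][1:]
                new_mem = dict(mem)
                new_mem[loc] = val
                new_bufs = list(bufs)
                new_bufs[cpu] = rest
                stack.append((pcs, tuple(new_bufs),
                              tuple(sorted(new_mem.items())), regs))
        if finished:
            r = dict(regs)
            outcomes.add((r["r0"], r["r1"]))
    return outcomes


tso_outcomes = explore()
print(sorted(tso_outcomes))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

The outcome (0, 0), which no sequentially consistent interleaving of this program can produce, is reachable here: both stores can still be sitting in their buffers when the loads read memory.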

The following table lists various consistency models. It is the programmer who must take the memory consistency model into account to create correct software.

| Type of consistency model | Strictness rank, 1-10 (lowest to highest) |
|---|---|
| linearizability (also known as strict or atomic consistency) | 3 |
| sequential consistency | 3 |
| causal consistency | 1 |
| release consistency | 3 |
| eventual consistency | 2 |
| delta consistency | 1 |
| PRAM consistency (also known as FIFO consistency) | 1 |
| weak consistency | 1 |
| vector-field consistency | 3 |
| fork consistency | 2 |
| serializability | 3 |
| one-copy serializability | 3 |
| entry consistency | 3 |

The memory coherence problem in a shared virtual memory system differs from that in a multicache system. In a multicache multiprocessor, processors share a physical memory through their private caches. The relatively small size of a cache and the fast bus connection to the shared memory enable a sophisticated coherence protocol for the multicache hardware, such that the time delay of conflicting writes to a memory location is small.

In contrast, a shared virtual memory on a loosely coupled multiprocessor has no physically shared memory and a nontrivial communication cost between processors, so conflicts are not likely to be resolved with negligible delay; they resemble much more a “page fault” in a traditional virtual memory system. Thus, two design choices greatly influence the implementation of a shared virtual memory: the granularity of the memory units (i.e., the “page size”) and the strategy for maintaining coherence.

Memory coherence strategies are classified based on how they deal with page synchronization and page ownership. The algorithms for memory coherence depend on the page fault handlers, their servers, and the data structures used, so the page table becomes an important part of these protocols.

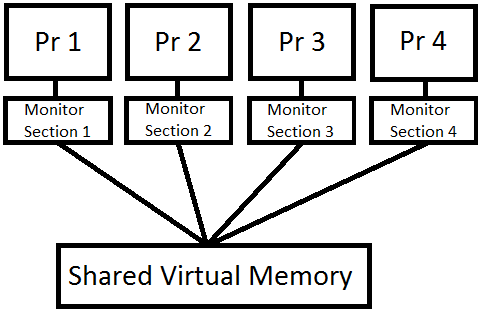

Two classes of algorithms for solving the memory coherence problem are the centralized manager (figure 2) and the distributed manager (figure 3). In the centralized scheme, one processor is designated the "monitor". This processor keeps a list of information for each page. The list includes the owner of the page (the processor that most recently had write access to it) and all the processors that have copies of the page. When a page is invalidated, the invalidation message is sent only to the processors that have copies of the page. This differs from a bus-based system, in which the invalidation message is broadcast to all processors. A drawback of the centralized manager is that the monitor processor becomes a bottleneck. This bottleneck is alleviated by using a distributed manager.
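The monitor's bookkeeping can be sketched as follows, under simplifying assumptions: page contents, the transfer of data from the owner, and locking at the monitor are omitted, messages are modeled as return values, and the class and method names are hypothetical.

```python
# Hypothetical model of the centralized manager ("monitor") bookkeeping.
class CentralManager:
    def __init__(self, n_pages, initial_owner=0):
        # owner[p]: processor that most recently had write access to page p
        self.owner = {p: initial_owner for p in range(n_pages)}
        # copy_set[p]: processors currently holding a copy of page p
        self.copy_set = {p: {initial_owner} for p in range(n_pages)}

    def read_fault(self, page, proc):
        """proc needs a read copy; returns the processor that supplies it."""
        self.copy_set[page].add(proc)
        return self.owner[page]

    def write_fault(self, page, proc):
        """proc needs write access; returns who must be invalidated."""
        targets = sorted(self.copy_set[page] - {proc})
        self.owner[page] = proc
        self.copy_set[page] = {proc}
        return targets


N_PROCS = 8
mgr = CentralManager(n_pages=4)
mgr.read_fault(3, 1)
mgr.read_fault(3, 2)
invalidated = mgr.write_fault(3, 4)
print(invalidated)   # [0, 1, 2]
print(N_PROCS - 1)   # 7: processors a bus broadcast would reach
```

In this run only processors 0, 1, and 2 receive invalidations, whereas a bus broadcast would reach every other processor in the system.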

The distributed manager is similar to the centralized manager, but instead of one processor monitoring all pages, each processor is given a subset of the pages. For example, with two processors and n pages, processor 0 would monitor only pages 1 through i, and processor 1 would monitor only pages i+1 through n.[5]
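With such a fixed distribution, any processor can compute locally which processor manages a page, without consulting a central node. A minimal sketch, assuming pages numbered 1 through n and contiguous, equal-sized ranges as in the example above (the function name is hypothetical):

```python
import math


def manager_of(page, n_pages, n_procs):
    """Processor that manages `page` when pages 1..n_pages are split into
    contiguous, equal-sized ranges (a hypothetical fixed distribution)."""
    if not 1 <= page <= n_pages:
        raise ValueError("page out of range")
    pages_per_proc = math.ceil(n_pages / n_procs)
    return (page - 1) // pages_per_proc


print([manager_of(p, n_pages=8, n_procs=2) for p in range(1, 9)])
# [0, 0, 0, 0, 1, 1, 1, 1]
```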

Synchronization

Two related concepts dealing with synchronization are process synchronization and data synchronization. Process synchronization is concerned with different processes committing to a certain sequence of actions. Data synchronization deals with maintaining data integrity across various copies of a dataset. Process synchronization primitives can be used to implement data synchronization.

Mutual exclusion is the main requirement to be fulfilled in order to synchronize processes, and is needed in both single-processor and multiprocessor systems. There are various approaches to provide mutual exclusion in a system:

- Disabling interrupts

- Locks

- Mutex

- Semaphores

- Barriers

- Test and Set
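As one example of the lock approach, Python's standard `threading.Lock` can make a shared counter update a critical section. Without the lock, `counter += 1` is a read-modify-write sequence that another thread could interleave with. The worker function and iteration counts are illustrative.

```python
import threading

# Illustrative shared state; names and iteration counts are arbitrary.
counter = 0
counter_lock = threading.Lock()


def worker(iterations):
    """Increment the shared counter; the lock makes each update exclusive."""
    global counter
    for _ in range(iterations):
        with counter_lock:      # critical section: one thread at a time
            counter += 1


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```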

Mutual exclusion can also be implemented without any hardware support. Peterson's algorithm is one such software solution for guaranteeing mutual exclusion.
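A minimal sketch of Peterson's algorithm for two threads follows. It assumes that plain loads and stores are sequentially consistent: in the standard CPython interpreter the global interpreter lock effectively serializes these simple reads and writes, but on hardware with a weaker memory model a real implementation would need atomic operations or memory fences. The function names and iteration counts are illustrative.

```python
import threading
import time

# Shared state for two threads, numbered 0 and 1 (names are illustrative).
# Assumes sequentially consistent loads/stores (standard CPython with a GIL).
flag = [False, False]   # flag[i] is True while thread i wants to enter
turn = 0                # the thread that must wait if both want to enter
counter = 0


def lock(i):
    global turn
    other = 1 - i
    flag[i] = True
    turn = other                            # give priority to the other thread
    while flag[other] and turn == other:    # wait while it wants in and has priority
        time.sleep(0)                       # yield instead of spinning hard


def unlock(i):
    flag[i] = False


def worker(i, iterations):
    global counter
    for _ in range(iterations):
        lock(i)
        counter += 1                        # critical section
        unlock(i)


threads = [threading.Thread(target=worker, args=(i, 2_000)) for i in (0, 1)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 4000
```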

Hardware support

Exclusive locking assumes the worst and proceeds only after acquiring all locks, so that no other thread can interfere. This is a pessimistic approach. In contrast, the optimistic approach proceeds with an update, hoping that it can be completed without any interference; this requires detecting collisions during the update. The optimistic approach is thus more efficient for fine-grained operations, where conflicts are rare.
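The optimistic approach can be sketched as a compare-and-swap (CAS) retry loop: read the current value, compute the new one, and publish it only if nothing changed in between. Python has no CAS instruction, so this sketch assumes a hypothetical `AtomicInt` class that emulates one with an internal lock; the collision detection is the failed CAS that triggers a retry.

```python
import threading


class AtomicInt:
    """Hypothetical stand-in for a word supporting hardware CAS.

    Python has no CAS instruction, so an internal lock emulates the
    atomicity of compare_and_swap; callers never hold it across an update.
    """

    def __init__(self, value=0):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        """Store `new` only if the value still equals `expected`."""
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False


def optimistic_add(cell, delta):
    """Optimistic update: compute without locking, retry on collision.

    Returns how many attempts had to be retried.
    """
    retries = 0
    while True:
        old = cell.load()
        if cell.compare_and_swap(old, old + delta):
            return retries
        retries += 1     # another thread changed the value first


cell = AtomicInt()


def worker():
    for _ in range(5_000):
        optimistic_add(cell, 1)


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(cell.load())  # 20000
```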

Processors designed for multiprocessor operation provide special instructions to manage concurrent access to shared variables. Atomic instructions like test-and-set, fetch-and-increment, and swap were sufficient for early processors to implement mutexes for concurrent objects. Today, virtually every modern processor relies on some form of read-modify-write atomic instruction, such as compare-and-swap (CAS) or load-linked/store-conditional (LL/SC), for the same purpose.
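As an illustration of how a mutex can be built from one of these instructions, the sketch below implements a spin lock on top of test-and-set. Because Python exposes no such instruction, the hypothetical `TestAndSetFlag` class emulates its atomicity with an internal lock; the structure of `SpinLock` is the part meant to mirror a hardware-based implementation.

```python
import threading
import time


class TestAndSetFlag:
    """Hypothetical stand-in for a hardware test-and-set bit; an internal
    lock emulates the atomicity that the instruction would provide."""

    def __init__(self):
        self._is_set = False
        self._guard = threading.Lock()

    def test_and_set(self):
        """Atomically set the flag and return its previous value."""
        with self._guard:
            previous = self._is_set
            self._is_set = True
            return previous

    def clear(self):
        with self._guard:
            self._is_set = False


class SpinLock:
    """Mutex built on test-and-set: spin until the previous value was False."""

    def __init__(self):
        self._flag = TestAndSetFlag()

    def acquire(self):
        while self._flag.test_and_set():   # True means another thread holds it
            time.sleep(0)                  # yield before retrying

    def release(self):
        self._flag.clear()


spin = SpinLock()
total = 0


def worker():
    global total
    for _ in range(2_000):
        spin.acquire()
        total += 1                         # critical section
        spin.release()


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(total)  # 8000
```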

References

Resources

1. http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2011/ch7_jp#Memory_Consistency_Problem

2. http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2011/ch7_ss#Cache_Coherence

3. http://www.windowsnetworking.com/articles_tutorials/Cache-Coherency.html

4. http://web.sfc.keio.ac.jp/~rdv/keio/sfc/teaching/architecture/architecture-2007/lec08.html

5. http://dl.acm.org/citation.cfm?id=75105

6. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=30868

7. http://www.realworldtech.com/common-system-interface/5/

8. http://people.freebsd.org/~lstewart/articles/cache-performance-x86-2009.pdf

9. https://researchspace.auckland.ac.nz/bitstream/handle/2292/11594/MESIF-2009.pdf?sequence=6