CSC/ECE 517 Fall 2013/oss E814 vd: Difference between revisions

| (19 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

Expertiza is a web application that supports peer-review for a variety of entities. As it is still evolving, expertiza has several areas that require work. Among these include areas that require refactoring existing code to improve code quality. The graph_generator.rb file is one such area. This write-up describes our refactoring decisions for this file. | Expertiza is a web application that supports peer-review for a variety of entities. As it is still evolving, expertiza has several areas that require work. Among these include areas that require refactoring existing code to improve code quality. The graph_generator.rb file is one such area. This write-up describes our refactoring decisions for this file. | ||

==Background== | ==Background== | ||

The graph_generator.rb file an automated metareview feature responsible for parsing the | The graph_generator.rb file is an automated metareview feature responsible for parsing the text from a review and creating a graph of the review's content. This file was plagued with several cases of duplication and a very high code complexity. We attempted to identify areas in need of refactoring and fix as many of these areas as possible. In the rest of this page, we go method-by-method to describe our changes to the original file. After doing so, we describe the improvements (based on Code Climate's metrics) we achieved. Finally, we identify areas that proved quite problematic for our assignment. | ||

==Changes by Method== | ==Changes by Method== | ||

===generate_graph=== | ===generate_graph=== | ||

====tagged_token_check==== | ====tagged_token_check==== | ||

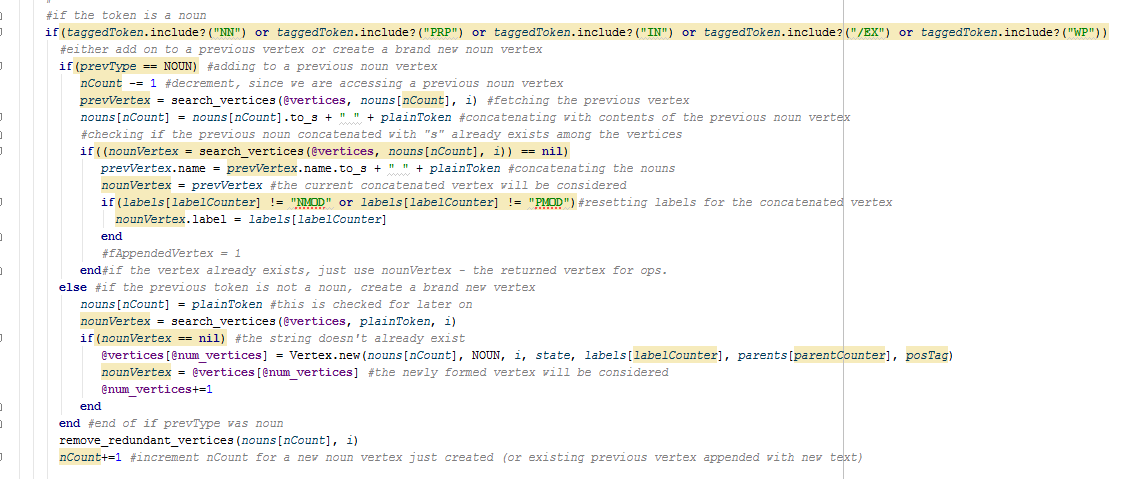

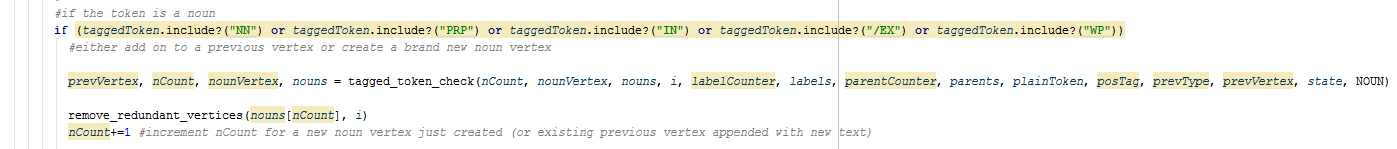

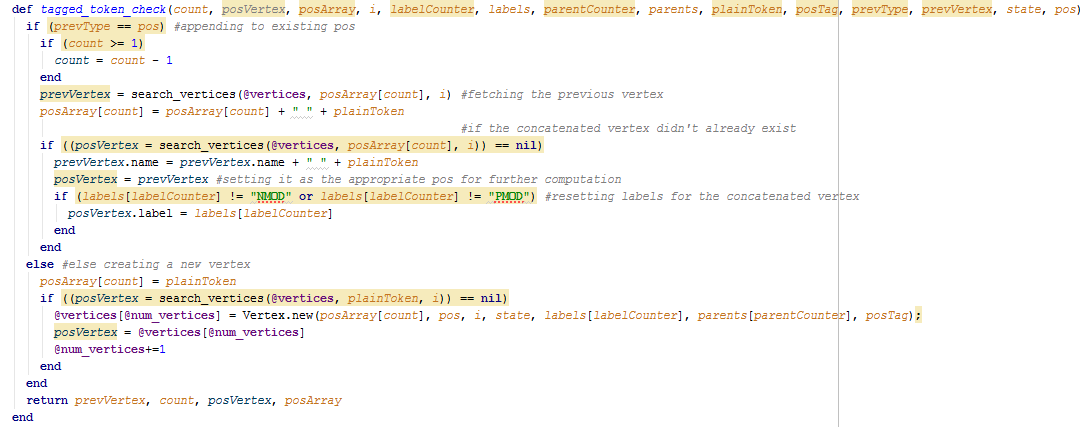

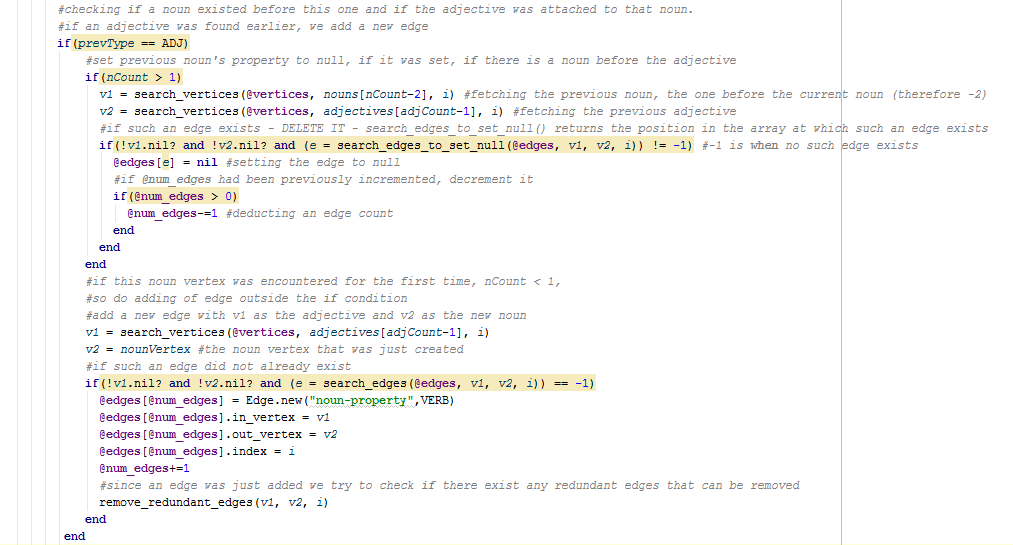

The first change that can be seen within this method is the extracting of code that adds on to a previous vertex or creates a new one, all based upon the part of speech defined of the tokens encountered while parsing the review. We called our extracted method tagged_token_check. | The first change that can be seen within this method is the extracting of code that adds on to a previous vertex or creates a new one, all based upon the part of speech defined of the tokens encountered while parsing the review. We called our extracted method tagged_token_check. By extracting this method, we were able to reduce duplication (since such similar code occurred four times in the method for tokens that are nouns, adjectives, adverbs, and verbs). Since the code contained nested if statements, we also were able to reduce the complexity of the method. Screenshots of the code before and after refactoring are included below. The top picture is the code before refactoring, the middle is the code after, and the bottom is the code within the extracted method. | ||

---- | ---- | ||

---- | ---- | ||

| Line 18: | Line 18: | ||

---- | ---- | ||

---- | ---- | ||

====update_pos_property==== | ====update_pos_property==== | ||

The next extracted method in generate_graph is called update_pos_property, and it helps reduce duplication (previously, there were two occurrences of the extracted code) and complexity (the extracted code removes a nested if statement). The pictures below, from top to bottom, show the code in the original file, the generate_graph code changed by extracting the method, and the extracted code for the extracted method. | The next extracted method in generate_graph is called update_pos_property, and it helps reduce duplication (previously, there were two occurrences of the extracted code) and complexity (the extracted code removes a nested if statement). The pictures below, from top to bottom, show the code in the original file, the generate_graph code changed by extracting the method, and the extracted code for the extracted method. | ||

| Line 40: | Line 41: | ||

===remove_redundant_edges and remove_redundant_vertices=== | ===remove_redundant_edges and remove_redundant_vertices=== | ||

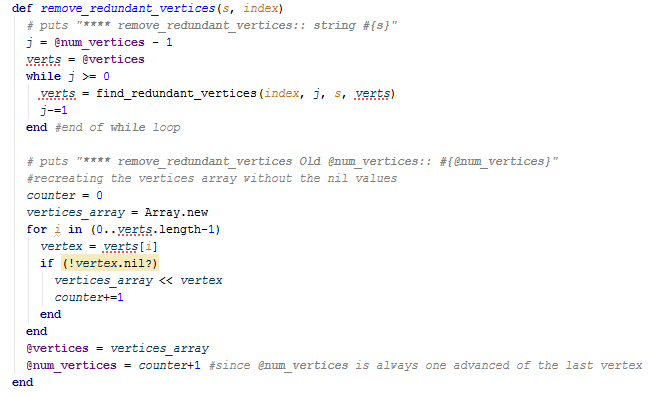

The remove_redundant_edges method also suffered from high code complexity, so we extracted a new method called find_redundant_edges to reduce such complexity | The remove_redundant_edges method also suffered from high code complexity, so we extracted a new method called find_redundant_edges to reduce such complexity. A find_redundant_vertices method was also extracted from remove_redundant_vertices. It should be noted that in the future, the edges and vertices methods should probably be combined, but such was outside the scope of our project (given the many other refactoring needs). The screenshots below show the contents of the original code of the remove_redundant_vertices method (on top), the changed code with the extracted method, and the extracted method code (on the bottom). | ||

---- | |||

---- | |||

[[File:find_redundant_vertices_0.png]] | |||

---- | |||

---- | |||

[[File:find_redundant_vertices_1.png]] | |||

---- | |||

---- | |||

[[File:find_redundant_vertices_2.png]] | |||

---- | |||

---- | |||

===search_edges=== | ===search_edges=== | ||

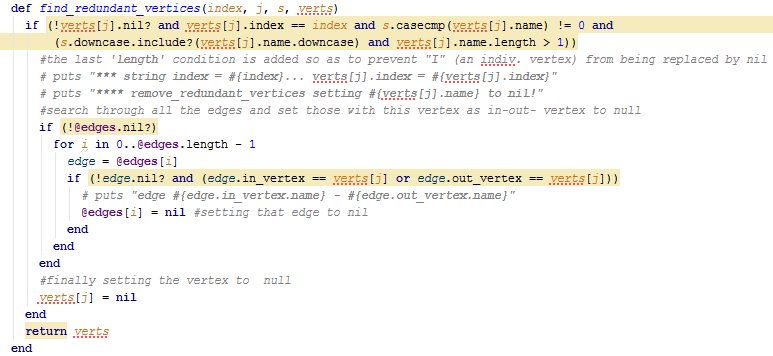

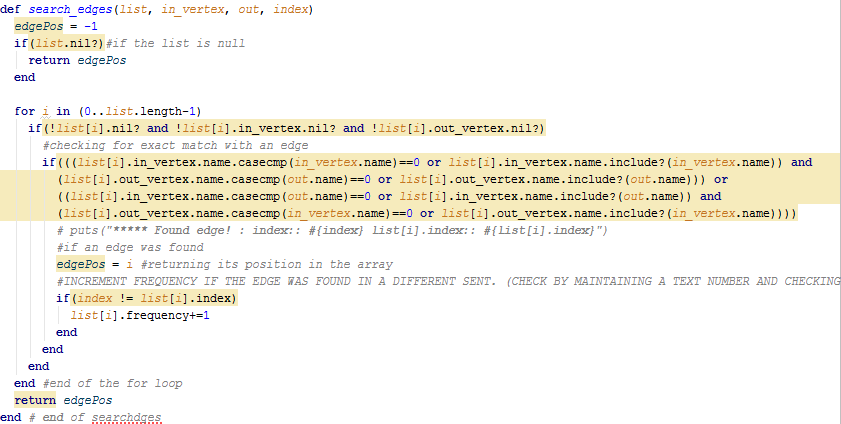

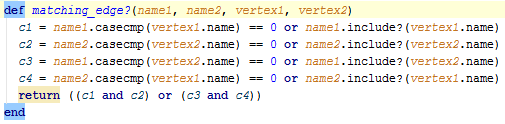

For the search_edges method, there were two long if statements whose contents we both extracted and simplified. In the screenshots below can be seen the original method, the refactored method, and the two extracted methods. In the case of the first method, edge_not_nil?, duplication is also lessened (similar code occurs in two other methods). In the case of the second extracted method, matching_edge?, an incredibly long if statement is rewritten, improving complexity. In a similar method called search_edges_to_set_null, a similar method to matching_edge? (called null_matching_edge?) was extracted. Again, this is an area where search_edges and search_edges_to_set_null could be extracted into a similar method. The original code, however, was different enough to dissuade us from trying to do this right away. | |||

---- | |||

---- | |||

[[File:search_edges_0.png]] | |||

---- | |||

---- | |||

[[File:search_edges_1.png]] | |||

---- | |||

---- | |||

[[File:search_edges_2.png]] | |||

---- | |||

---- | |||

[[File:search_edges_3.png]] | |||

---- | |||

---- | |||

===print_graph=== | ===print_graph=== | ||

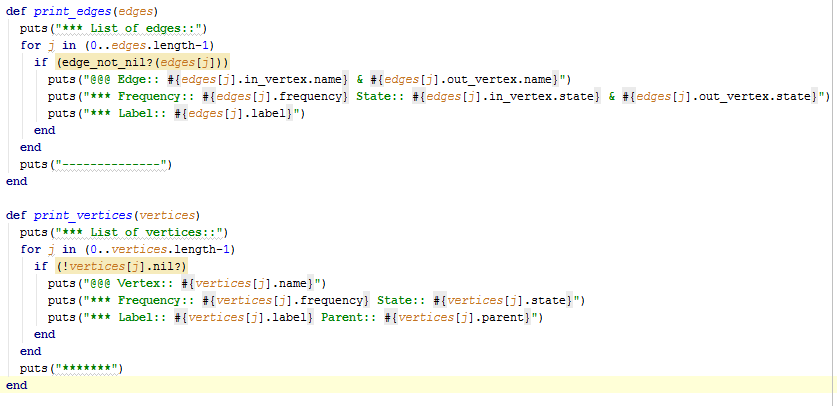

The original print_graph method contained two separate loop statements: one for vertices and one for edges. As such, we extracted the two into print_vertices and print_edges methods. The code for the two is shown below. | |||

---- | |||

---- | |||

[[File:print_graph.png]] | |||

---- | |||

---- | |||

===find_parents=== | ===find_parents=== | ||

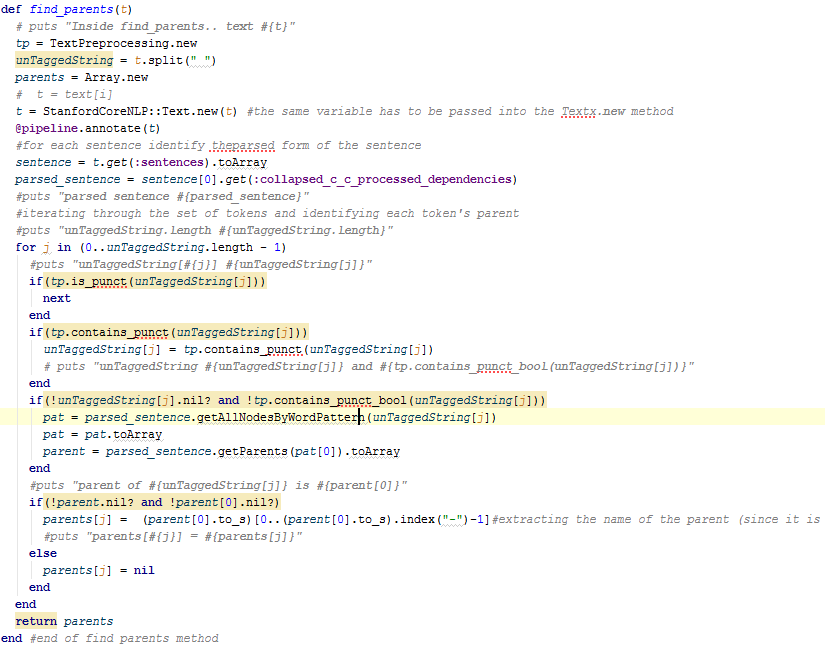

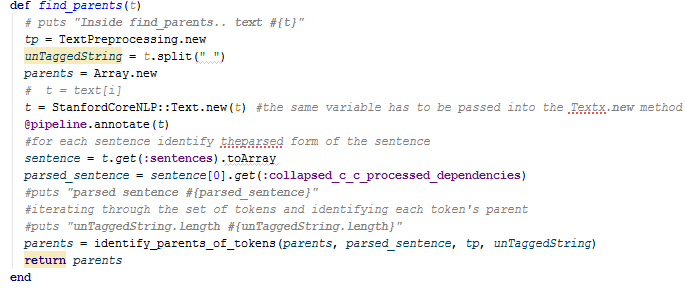

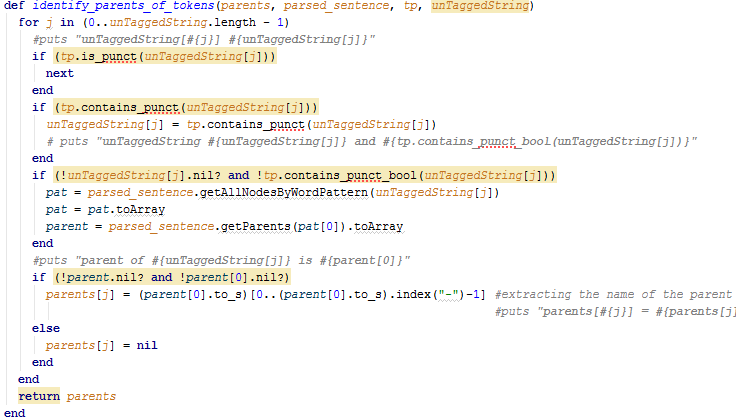

For this method, we extracted a series of if statements into a new identify_parents_of_tokens method. This reduced complexity of the original method. The original method, the original method after the extraction, and the extracted method are shown below. | |||

---- | |||

---- | |||

[[File:find_parents_0.png]] | |||

---- | |||

---- | |||

[[File:find_parents_1.png]] | |||

---- | |||

---- | |||

[[File:find_parents_2.png]] | |||

---- | |||

---- | |||

===set_semantic_labels_for_edges=== | ===set_semantic_labels_for_edges=== | ||

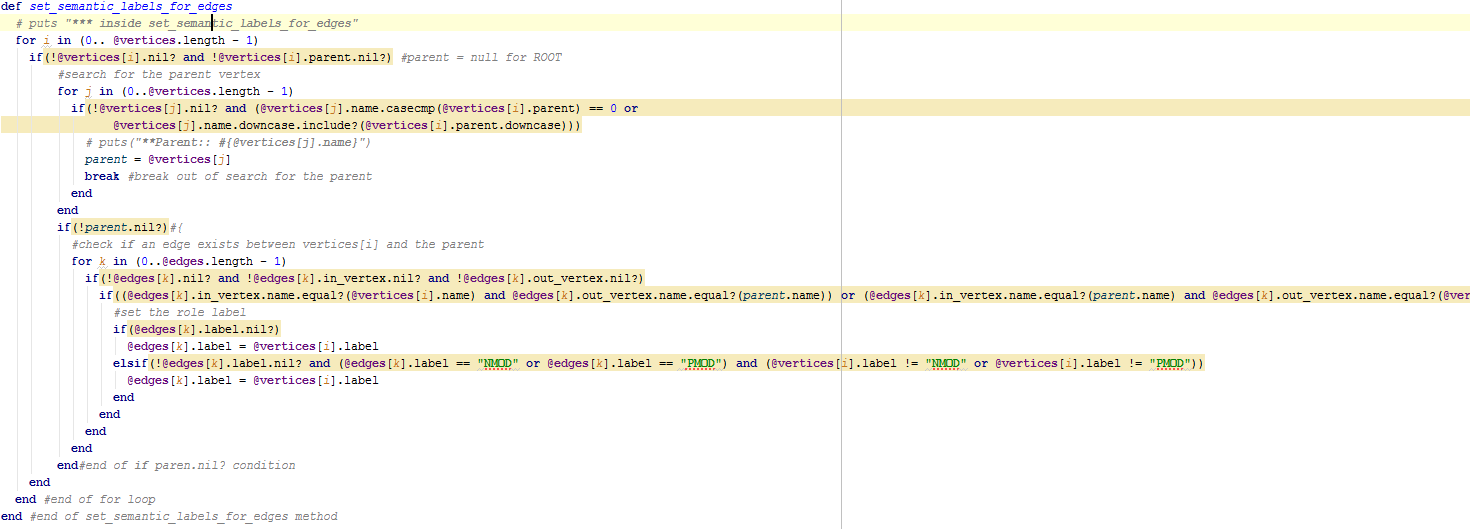

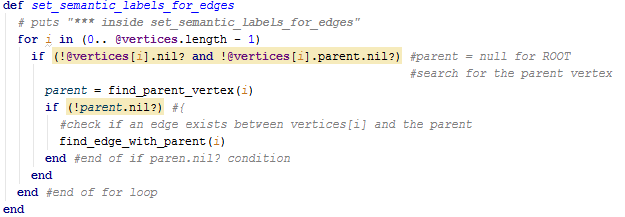

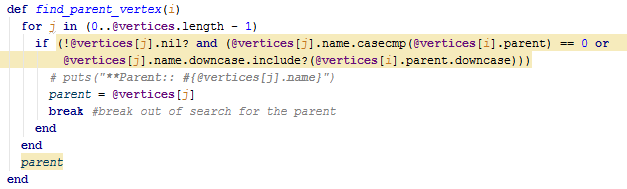

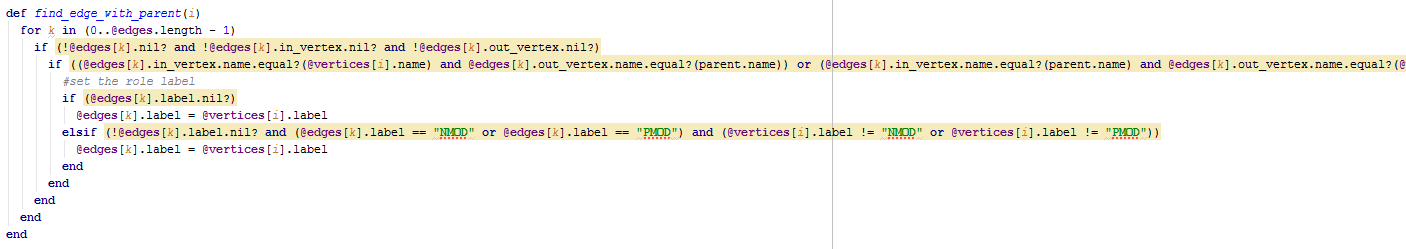

In this method, we extracted two methods (find_edge_with_parent and find_parent_vertex) to reduce the complexity of the set_semantic_labels_for_edges method. Again, the original method, the refactored method, and the extracted methods are shown below. | |||

---- | |||

---- | |||

[[File:semantic_labels_0.png]] | |||

---- | |||

---- | |||

[[File:semantic_labels_1.png]] | |||

---- | |||

---- | |||

[[File:semantic_labels_2.png]] | |||

---- | |||

---- | |||

[[File:semantic_labels_3.png]] | |||

---- | |||

---- | |||

==Results== | ==Results== | ||

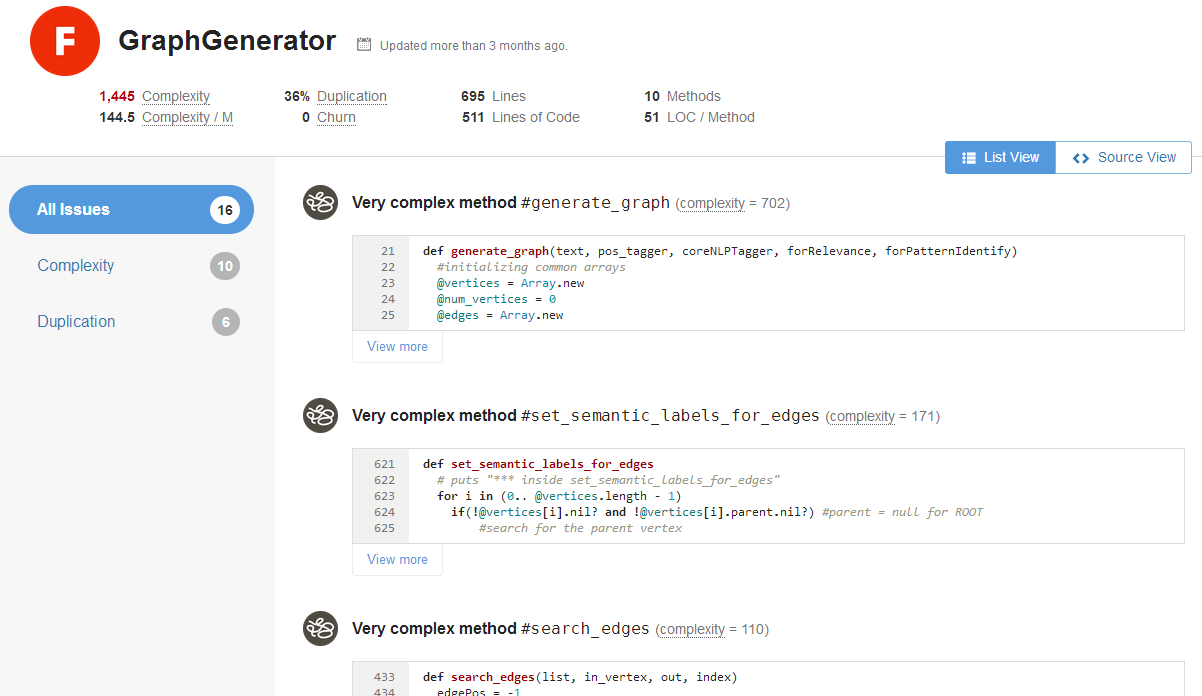

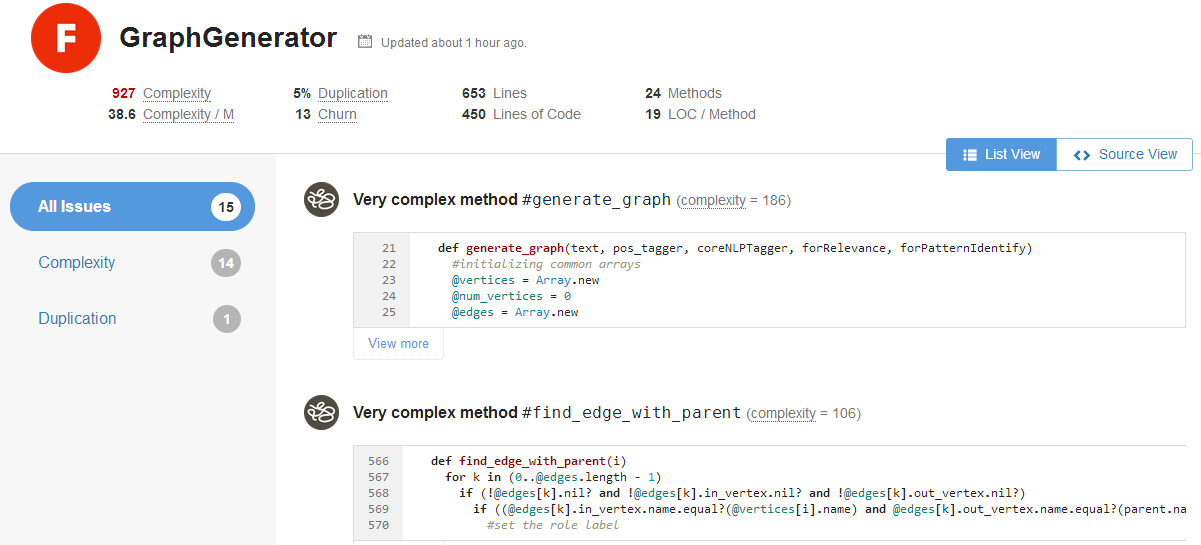

Overall, the graph_generator.rb class still receives a failing grade on Code Climate, so there is much work to be done still. Through our refactoring, we improved the code significantly. By extracting 14 methods, we reduced overall complexity from 1,445 to 927, duplication from 36% to 5%, lines of code from 511 to 450, and complexity per method from 144.5 to 38.6. The data showing these figures, as provided by Code Climate, is shown below. The top picture represents the original file and the bottom represents the refactored file. | |||

---- | ---- | ||

---- | ---- | ||

| Line 65: | Line 123: | ||

==Appendix== | ==Appendix== | ||

===Issues=== | ===Issues=== | ||

We encountered many issues while working on this project, and we know that many others have had similar problems. Although we were responsible for refactoring the graph_generator.rb file, we had to modify many others to get the project running. Even at this point, we have not been able to reach successful review submission in order to make sure our code is executed properly. | |||

Running tests for this class (and in general) also proved problematic. For example, some fixtures were not properly coded syntactically. Fixing these issues, it still appears that fixture parameters do not match up with database columns. Finally, in removing fixtures (and therefore default loading of fixtures before testing in RubyMine) altogether, we still encountered the issue with the stanford-nlp jar file. Although we downloaded and placed the necessary file into the appropriate bin folder of the gem, something still appears to be missing. As such, testing was not possible for us at this stage. | |||

==Resources== | ==Resources== | ||

| Line 71: | Line 132: | ||

*[https://github.com/vermavikrant/expertiza Github repository for refactored project] | *[https://github.com/vermavikrant/expertiza Github repository for refactored project] | ||

*[http://152.46.18.78:3000/ VCL location] | *[http://152.46.18.78:3000/ VCL location] | ||

Latest revision as of 03:29, 31 October 2013

Expertiza is a web application that supports peer-review for a variety of entities. As it is still evolving, expertiza has several areas that require work. Among these include areas that require refactoring existing code to improve code quality. The graph_generator.rb file is one such area. This write-up describes our refactoring decisions for this file.

Background

The graph_generator.rb file is an automated metareview feature responsible for parsing the text from a review and creating a graph of the review's content. This file was plagued with several cases of duplication and a very high code complexity. We attempted to identify areas in need of refactoring and fix as many of these areas as possible. In the rest of this page, we go method-by-method to describe our changes to the original file. After doing so, we describe the improvements (based on Code Climate's metrics) we achieved. Finally, we identify areas that proved quite problematic for our assignment.

Changes by Method

generate_graph

tagged_token_check

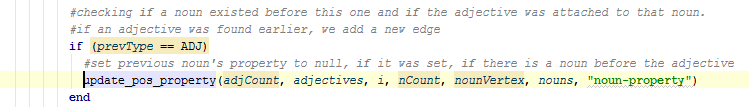

The first change that can be seen within this method is the extracting of code that adds on to a previous vertex or creates a new one, all based upon the part of speech defined of the tokens encountered while parsing the review. We called our extracted method tagged_token_check. By extracting this method, we were able to reduce duplication (since such similar code occurred four times in the method for tokens that are nouns, adjectives, adverbs, and verbs). Since the code contained nested if statements, we also were able to reduce the complexity of the method. Screenshots of the code before and after refactoring are included below. The top picture is the code before refactoring, the middle is the code after, and the bottom is the code within the extracted method.

update_pos_property

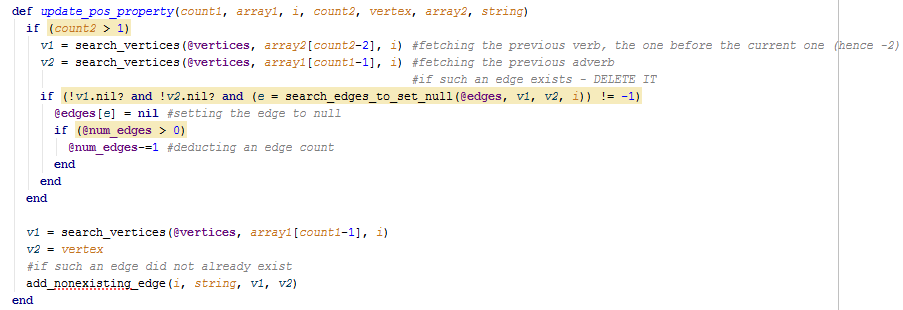

The next extracted method in generate_graph is called update_pos_property, and it helps reduce duplication (previously, there were two occurrences of the extracted code) and complexity (the extracted code removes a nested if statement). The pictures below, from top to bottom, show the code in the original file, the generate_graph code changed by extracting the method, and the extracted code for the extracted method.

add_nonexisting_edge

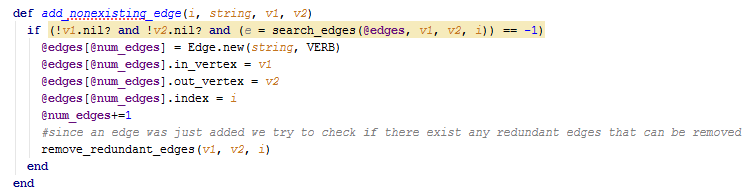

As seen in the code for update_pos_property, add_nonexisting_edge was further extracted from update_pos_property to reduce complexity.

remove_redundant_edges and remove_redundant_vertices

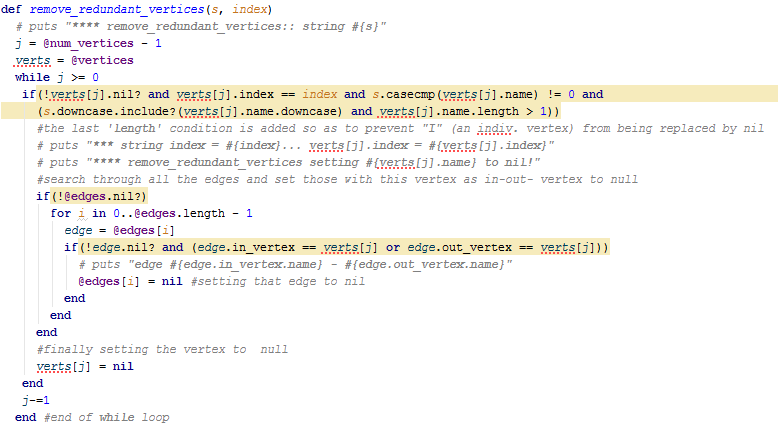

The remove_redundant_edges method also suffered from high code complexity, so we extracted a new method called find_redundant_edges to reduce such complexity. A find_redundant_vertices method was also extracted from remove_redundant_vertices. It should be noted that in the future, the edges and vertices methods should probably be combined, but such was outside the scope of our project (given the many other refactoring needs). The screenshots below show the contents of the original code of the remove_redundant_vertices method (on top), the changed code with the extracted method, and the extracted method code (on the bottom).

search_edges

For the search_edges method, there were two long if statements whose contents we both extracted and simplified. In the screenshots below can be seen the original method, the refactored method, and the two extracted methods. In the case of the first method, edge_not_nil?, duplication is also lessened (similar code occurs in two other methods). In the case of the second extracted method, matching_edge?, an incredibly long if statement is rewritten, improving complexity. In a similar method called search_edges_to_set_null, a similar method to matching_edge? (called null_matching_edge?) was extracted. Again, this is an area where search_edges and search_edges_to_set_null could be extracted into a similar method. The original code, however, was different enough to dissuade us from trying to do this right away.

print_graph

The original print_graph method contained two separate loop statements: one for vertices and one for edges. As such, we extracted the two into print_vertices and print_edges methods. The code for the two is shown below.

find_parents

For this method, we extracted a series of if statements into a new identify_parents_of_tokens method. This reduced complexity of the original method. The original method, the original method after the extraction, and the extracted method are shown below.

set_semantic_labels_for_edges

In this method, we extracted two methods (find_edge_with_parent and find_parent_vertex) to reduce the complexity of the set_semantic_labels_for_edges method. Again, the original method, the refactored method, and the extracted methods are shown below.

Results

Overall, the graph_generator.rb class still receives a failing grade on Code Climate, so there is much work to be done still. Through our refactoring, we improved the code significantly. By extracting 14 methods, we reduced overall complexity from 1,445 to 927, duplication from 36% to 5%, lines of code from 511 to 450, and complexity per method from 144.5 to 38.6. The data showing these figures, as provided by Code Climate, is shown below. The top picture represents the original file and the bottom represents the refactored file.

Appendix

Issues

We encountered many issues while working on this project, and we know that many others have had similar problems. Although we were responsible for refactoring the graph_generator.rb file, we had to modify many others to get the project running. Even at this point, we have not been able to reach successful review submission in order to make sure our code is executed properly.

Running tests for this class (and in general) also proved problematic. For example, some fixtures were not properly coded syntactically. Fixing these issues, it still appears that fixture parameters do not match up with database columns. Finally, in removing fixtures (and therefore default loading of fixtures before testing in RubyMine) altogether, we still encountered the issue with the stanford-nlp jar file. Although we downloaded and placed the necessary file into the appropriate bin folder of the gem, something still appears to be missing. As such, testing was not possible for us at this stage.