CSC 456 Fall 2013/1b ra: Difference between revisions


== | ==Sectored Cache== | ||

Sectored caching was one type of early CPU cache | Sectored caching was one type of early CPU cache architecture that has fallen out of common use since its first implementation due to inferior performance against other more common cache architectures. Sectored caching involves dividing a cache up into sectors and subsectors to allow for faster searching and more efficient use of space than some other cache architectures. | ||

===Background=== | ===Background=== | ||

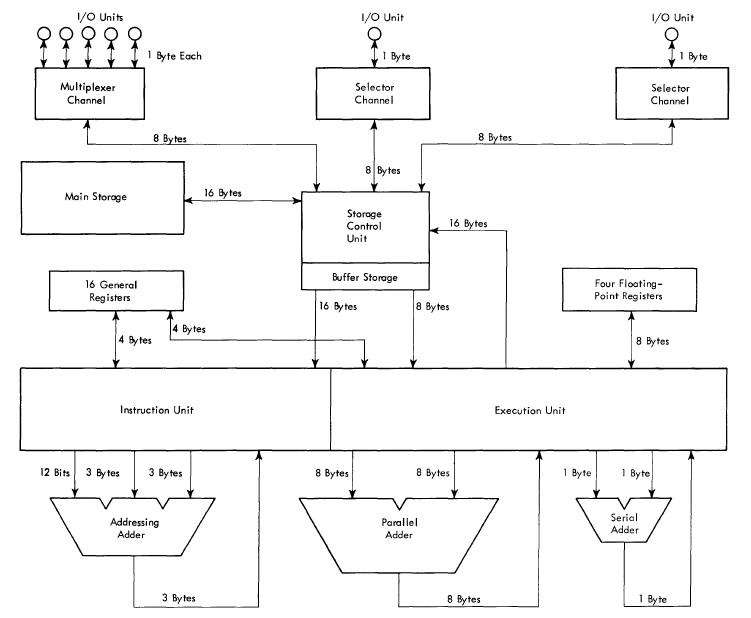

====IBM Model 85==== | [[File:Model85Organization.jpg|thumb|500px|left|link=http://wiki.expertiza.ncsu.edu/index.php/File:Model85Organization.jpg|alt=Model 85 Organization|The layout of an IBM System/360 Model 85 machine. Here the buffer storage represents the sectored cache.]] | ||

====IBM System/360 Model 85==== | |||

Sectored caching was first used on the IBM System/360 Model 85, which was the earliest commercial system that used a CPU data cache of any kind. Sectored caching allowed for smaller tag sizes in the addresses, which made searching in cache easier and quicker, without requiring cache lines to be excessively long; and it was easier to build than other kinds of cache organizations with the circuit technology of the time. | Sectored caching was first used on the IBM System/360 Model 85, which was the earliest commercial system that used a CPU data cache of any kind. Sectored caching allowed for smaller tag sizes in the addresses, which made searching in cache easier and quicker, without requiring cache lines to be excessively long; and it was easier to build than other kinds of cache organizations with the circuit technology of the time. | ||

<ref name=" | <ref name="rot99">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance. http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf | ||

{{cite web | {{cite web | ||

| url = http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf | | url = http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf | ||

| Line 21: | Line 22: | ||

}} | }} | ||

</ref> | </ref> | ||

Released in 1968, the Model 85 was one of the first computers to have a CPU data cache, which at the time was known as "buffer storage", come as a standard feature. As with most caches, the cache on the Model 85 attempts to fetch data from the cache first before fetching from main memory. This allowed for much faster data fetching and storage, reducing the average system storage cycle time to about one third or one quarter what it would be if using main memory alone. | Released in 1968, the Model 85 was one of the first computers to have a CPU data cache, which at the time was known as "buffer storage", come as a standard feature. The cache on the Model 85 could store approximately 16 kilobytes of data divided into 16 one-kilobyte sectors and 16 sixty-four-byte subsectors (6 bits long), had a bus width of 16 bytes, and an average cycle time of 80 nanoseconds. As with most caches, the cache on the Model 85 attempts to fetch data from the cache first before fetching from main memory. This allowed for much faster data fetching and storage, reducing the average system storage cycle time to about one third or one quarter what it would be if using main memory alone. | ||

<ref name=" | <ref name="ibm68">IBM. IBM System/360 Model 85 Functional Characteristics. http://www.bitsavers.org/pdf/ibm/360/funcChar/A22-6916-1_360-85_funcChar_Jun68.pdf | ||

{{cite manual | {{cite manual | ||

| author = IBM | | author = IBM | ||

| Line 35: | Line 36: | ||

</ref> | </ref> | ||

This new type of cache organization represented a significant departure from cache organizations used previously in the IBM System/360 line of computers. In testing during the design of the Model 85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers. | This new type of cache organization represented a significant departure from cache organizations used previously in the IBM System/360 line of computers. In testing during the design of the Model 85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers. | ||

<ref name=" | <ref name="lip68">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5388402&isnumber=5388400 | ||

{{cite journal | {{cite journal | ||

| url = http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5388402&isnumber=5388400 | | url = http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5388402&isnumber=5388400 | ||

| Line 53: | Line 54: | ||

}} | }} | ||

</ref> | </ref> | ||

After the release of the Model 85, however, it soon became clear that sectored caching was less effective than set-associative caching for computer and chip architectures at that time and fell out of common use.<ref name=" | |||

After the release of the Model 85, however, it soon became clear that sectored caching was less effective than set-associative caching for computer and chip architectures at that time and fell out of common use.<ref name="rot99"/> | |||

{{Clear}} | |||

{{Break}} | |||

====Reappearance==== | ====Reappearance==== | ||

In a 1999 article, Alan Jay Smith and Jeffrey B. Rothman revisited the idea of sectored caching to determine if changes in technology from 1968 had made sectored caching useful. They looked at whether there would be any performance gain if sectored caching was used in multi-level cache architectures. | In a 1999 article, Alan Jay Smith and Jeffrey B. Rothman revisited the idea of sectored caching to determine if changes in technology from 1968 had made sectored caching useful. They looked at whether there would be any performance gain if sectored caching was used in multi-level cache architectures, a relatively new technology of the time. What they discovered was that, while there was still no performance gain for the majority of multi-level cache designs, there could be a significant performance gain in one specific case. Since sectored caching allows for fairly small tag sizes, the tags themselves could be stored in a very small and fast first-level cache while the sectored data they reference could be stored on a much larger but slower second-level cache. In their analysis, this design allowed for a much higher hit ratio (and thus performance level) compared to Smith and Rothman's theoretical targets than any other cache architecture. For most modern computers, however, CPU cache size was not enough of a concern to merit this type of architecture and sectored caching is still not widely used.<ref name="rot99"/> | ||

===Implementation=== | |||

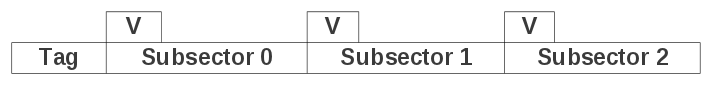

[[Image:Sectorcache.png|thumb|right|400px|link=http://wiki.expertiza.ncsu.edu/images/1/15/Sectorcache.png|alt=Generalized sectored cache address|Sectors in cache are made of subsectors. Each subsector has a validity bit indicating whether or not it has been loaded with data.]] | |||

The cache is divided into sectors, which correspond to logical sectors on the main storage device. The assignment and update of the sectors in the cache to sectors in main memory functions on a least-recently-used basis.<ref name="ibm68"/> When sectors are needed, they are not loaded into cache all at once, but in smaller pieces known as subsectors. Subsectors are similar to the lines in a direct mapped cache, and are only loaded into cache when needed. This prevents large amounts of data from having to be transferred for every memory reference. Subsectors have a validity bit that indicates whether they are loaded with data or not.<ref name="lip68"/> | |||
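The sector and subsector split described above can be illustrated with a small address-decomposition sketch. It assumes the Model 85 figures quoted in the Background section (one-kilobyte sectors made of sixty-four-byte subsectors); the function name <code>split_address</code> and the constant names are hypothetical and are not taken from the IBM documentation.

```python
# Illustrative sketch only; sizes follow the Model 85 figures quoted in the text.
SECTOR_BYTES = 1024       # one-kilobyte sectors
SUBSECTOR_BYTES = 64      # sixty-four-byte subsectors
SUBSECTORS_PER_SECTOR = SECTOR_BYTES // SUBSECTOR_BYTES  # 16 subsectors per sector


def split_address(addr):
    """Split a byte address into (sector_tag, subsector_index, byte_offset)."""
    byte_offset = addr % SUBSECTOR_BYTES
    subsector_index = (addr // SUBSECTOR_BYTES) % SUBSECTORS_PER_SECTOR
    sector_tag = addr // SECTOR_BYTES
    return sector_tag, subsector_index, byte_offset
```

Only the sector tag has to be compared during a lookup, which is why one tag can cover a whole kilobyte of data while transfers still happen in sixty-four-byte pieces.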

===Miss Handling=== | |||

When a process requests data from a main-storage sector that is not in the cache, a cache sector is assigned to that sector. Then the subsector where the data is located is loaded into the cache, and its validity bit is set to reflect that it has been loaded from main storage. When data from other subsectors within a loaded sector are requested, the system loads those subsectors into the cache sector and sets their validity bits. Sectors are not removed from the cache until the system needs to reclaim the space to process another request.<ref name="lip68"/> | |||
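The miss-handling steps above can be sketched as a small simulation. This is a minimal model under stated assumptions, not the Model 85 hardware logic: the class name <code>SectoredCache</code>, its parameters, and the result strings are hypothetical, and least-recently-used replacement is modeled with an ordered dictionary.

```python
from collections import OrderedDict


class SectoredCache:
    """Toy sectored cache: one tag per sector, one validity bit per subsector."""

    def __init__(self, num_sectors=16, sector_bytes=1024, subsector_bytes=64):
        self.num_sectors = num_sectors
        self.sector_bytes = sector_bytes
        self.subsector_bytes = subsector_bytes
        self.subsectors_per_sector = sector_bytes // subsector_bytes
        # Maps sector tag -> list of validity bits; least recently used first.
        self.sectors = OrderedDict()

    def access(self, addr):
        tag = addr // self.sector_bytes
        sub = (addr % self.sector_bytes) // self.subsector_bytes
        if tag not in self.sectors:
            # Sector miss: reclaim the least recently used sector if the cache is full,
            # then assign a cache sector with every validity bit cleared.
            if len(self.sectors) >= self.num_sectors:
                self.sectors.popitem(last=False)
            self.sectors[tag] = [False] * self.subsectors_per_sector
            result = "sector miss"
        elif not self.sectors[tag][sub]:
            # The sector is present but this subsector has not been loaded yet.
            result = "subsector miss"
        else:
            result = "hit"
        self.sectors[tag][sub] = True   # load the subsector and set its validity bit
        self.sectors.move_to_end(tag)   # mark the sector as most recently used
        return result
```

In this model a sector stays resident until a new sector needs its slot, and only the subsectors that were actually referenced are ever marked valid.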

===Advantages/Disadvantages=== | |||

After the implementation of sectored caching in the Model 85, the designers quickly realized that a set-associative cache would be more efficient since most memory references required fetching of sequential blocks. In a sectored cache, this would require multiple fetches of subsectors/blocks, increasing the possibility of cache misses.<ref name="lip68"/> | |||

As discovered by Smith and Rothman, however, sectored caching can work well in a specific situation. If cache size is a concern, then the cache can be divided into a first-level cache that is small and fast, can reside on the CPU chip, and stores only the tags for each sector; and a second-level cache that is larger and slower, can reside off-chip, and stores the sectored data of the cache. In this design, there can be a significant performance gain using sectored caches as opposed to other cache architectures. In most modern computers, CPU cache size is not constrained enough for this kind of implementation to be beneficial.<ref name="rot99"/> | |||

==Victim Cache== | |||

===Background=== | |||

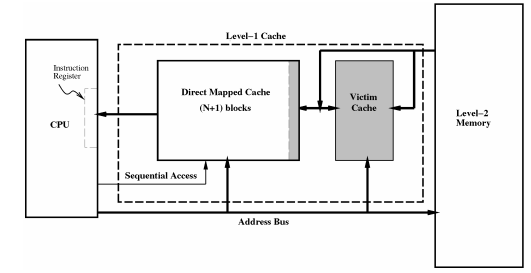

Victim caches were first proposed by Norman P. Jouppi in 1990. Victim caching implements a small, fully-associative cache between direct-mapped L1 memory and the next level of memory. The cache allows lines evicted from the L1 cache a “second-chance” by loading them into the victim cache. Victim caches decrease the overall conflict miss rate | |||

<ref name="Jouppi">Jouppi, Norman P. Improving direct-mapped cache performance by the addition of a small fully-associative cache and prefetch buffers. http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547 | |||

{{cite web | |||

| url = http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547 | |||

| title = Improving direct-mapped cache performance by the addition of a small fully-associative cache and prefetch buffers | |||

| last1 = Jouppi | |||

| first1 = Norman | |||

| middle1 = P. | |||

| location = Seattle, WA | |||

| date =May 1990 | |||

| accessdate = September 22, 2013 | |||

| separator = , | |||

}} | |||

</ref>. | |||

Direct-mapped caches can especially benefit from victim caching due to their large miss rates. Victim caching allows direct-mapped caches to still be used in order to take advantage of their speed while decreasing the miss rate to an even lower rate than the miss rate found in set-associative caches <ref name = "Jouppi"/>. | |||
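The "second chance" behavior described above can be sketched with a toy model of a direct-mapped cache backed by a small fully-associative victim cache. This is an illustrative sketch, not Jouppi's exact design: the class name <code>DirectMappedWithVictim</code>, the sizes, the result strings, and the least-recently-used replacement of victim entries are all assumptions made for the example.

```python
from collections import OrderedDict


class DirectMappedWithVictim:
    """Toy direct-mapped L1 cache with a small fully-associative victim cache."""

    def __init__(self, num_lines=4, victim_entries=2):
        self.num_lines = num_lines
        self.victim_entries = victim_entries
        self.lines = [None] * num_lines   # block address held by each L1 line
        self.victim = OrderedDict()       # evicted block addresses, oldest first

    def access(self, block):
        index = block % self.num_lines
        if self.lines[index] == block:
            return "L1 hit"
        evicted = self.lines[index]
        if block in self.victim:
            # The block was evicted earlier: take it back out of the victim cache.
            del self.victim[block]
            result = "victim hit"
        else:
            result = "miss"               # would be fetched from the next level
        self.lines[index] = block
        if evicted is not None:
            self._insert_victim(evicted)  # the displaced line gets a second chance
        return result

    def _insert_victim(self, block):
        if len(self.victim) >= self.victim_entries:
            self.victim.popitem(last=False)   # drop the oldest victim entry
        self.victim[block] = None
```

With four lines, blocks 0 and 4 map to the same line. Alternating between them would miss every time in a plain direct-mapped cache, while in this model every access after the first two is served by either the L1 cache or the victim cache.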

[ | Victim caches were first proposed by Norman P. Jouppi in 1990 at the 17th Annual International Symposium on Computer Architecture. At the time, processor performance was increasing drastically, but memory hierarchies had not advanced at the same pace. Jouppi observed that if this trend continued, newer machines would easily lose half of their potential performance to the shortcomings of the memory hierarchy. Figure 2.1 below, taken from Jouppi's 1990 paper, shows how the cost of cache misses was increasing dramatically<ref name = "Jouppi"/>. | ||

[[File:Cost_of_cache_misses_from_Jouppi.png|center]] | |||

<div style = "text-align: center;">'''Figure 2.1 Cache Miss'''</div> | |||

---- | ---- | ||

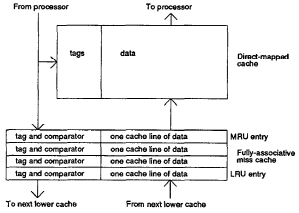

===Organization=== | ===Organization=== | ||

====Victim Cache==== | |||

In order for a system to use a victim cache, it needs to use a direct-mapped cache. Victim caching is an optimization of the miss cache<ref name = "Jouppi"/>. A miss cache is a small on-chip cache between the first-level cache and the access point to the second-level cache<ref name = "Jouppi"/>. The miss cache typically stores two to five lines. When a miss occurs, the data is loaded not only into the direct-mapped cache but also into the miss cache, replacing the least recently used item<ref name = "Jouppi"/>. While this helped reduce the number of conflict misses, it made poor use of the small cache, since many of its lines duplicated lines already held in the direct-mapped cache. A victim cache avoids this duplication by holding only the lines evicted from the direct-mapped cache. The organization of the miss cache can be seen in Figure 2.2 below, taken from Jouppi's 1990 paper<ref name = "Jouppi"/>. | |||

- | [[File:Miss_Cache_Organization.png|center]] | ||

<div style = "text-align:center">'''Figure 2.2 Miss Cache Organization'''</div> | |||

====Selective Victim Cache==== | |||

An improvement on the victim caching technique came from Stiliadis and Verma in 1997 with their introduction of selective victim caching <ref name="Verma">Dimitrios Stiliadis and Anujan Verma. Selective Victim Caching: A Method to Improve the Performance of Direct-Mapped Caches. http://ieeexplore.ieee.org.prox.lib.ncsu.edu/xpl/articleDetails.jsp?arnumber=589235&tag=1 | |||

{{cite web | |||

| url = http://ieeexplore.ieee.org.prox.lib.ncsu.edu/xpl/articleDetails.jsp?arnumber=589235&tag=1 | |||

| title = Selective Victim Caching: A Method to Improve the Performance of Direct-Mapped Caches. | |||

| last1 = Stiliadis | |||

| first1 = Dimitrios | |||

| last2 = Verma | |||

| first2 = Anujan | |||

| location = AT&T Bell Labs, Holmdel, NJ | |||

| date = May 1997 | |||

| accessdate = September 20, 2013 | |||

| separator = , | |||

}} | |||

</ref>. | |||

This cache management organization is similar to the victim cache system proposed by Jouppi. However, instead of always pushing the replaced block into the victim cache and automatically interchanging blocks between the two caches when the main cache needs a block, it uses a prediction algorithm based on the past usage of the block<ref name = "Verma"/>. This is accomplished by adding an additional block to the main cache called the "transitory block"<ref name="Verma"/>. This block is used both to predict sequential references by detecting repeated patterns of access and to act as a buffer between the main and victim caches while the algorithm predicts whether an interchange is necessary<ref name = "Verma"/>. Figure 2.3 below shows a sequential state machine that switches between the normal state, in which accesses are handled by the main cache, and the special state, in which accesses are directed to the transitory block<ref name="Verma"/>. | |||

- | [[File:Transitory_Block_Sequential_Predictor.png|center]] | ||

<div style = "text-align:center">'''Figure 2.3 Sequential State Machine for Selective Victim Caching'''</div> | |||

=== | The other important function of the algorithm is predicting, based on past usage, whether cache blocks should be swapped. This is used when a miss occurs in the main cache but the block is found in the victim cache. The first step is to load the block into the transitory block<ref name = "Verma"/>. The algorithm then compares the prediction bits of the transitory block with those of the conflicting block stored in the main cache<ref name="Verma"/>. If the block in the main cache is more likely to be used again than the buffered block, the block in the transitory block is accessed and then sent back to the victim cache<ref name = "Verma"/>. If the buffered block is the more likely of the two to be accessed again, the normal victim cache swap occurs<ref name = "Verma"/>. This process can be seen in Figure 2.4 below, which illustrates the movement of memory blocks between the level-one cache, the victim cache, the CPU, and the level-two cache<ref name = "Verma"/>. Stiliadis and Verma showed that this modification to victim caching improved performance for both smaller and larger cache sizes. For example, a simulation using ten instruction traces showed an average improvement of 21% in miss rate and a 70% reduction in exchanges between the main and victim caches<ref name="Verma"/>. | ||

= | [[File:Selective_Victim_Cache_Organization.png|center]] | ||

<div style = "text-align:center">'''Figure 2.4 Selective Victim Cache Organization'''</div> | |||

----- | |||

=== | ===Evolution=== | ||

Since Jouppi first introduced the idea of victim caching in 1990, there have been many modifications and adaptations of this caching technique. [[CSC 456 Fall 2013/1b ra#Selective Victim Cache|Selective Victim Caching]], described above, is one of the variations that changes the least about how the victim cache works. This section describes a few others that depart further from the original design to show how this caching scheme has evolved. | |||

====XOR-Based Placement==== | |||

Not long after the article on Selective Victim Caching was published, another article appeared in July of 1997 detailing how XOR mapping techniques could improve many different caching techniques, victim caching included. The XOR-mapping scheme for victim caching uses several XOR operations to obtain a cache index. Since the XOR operations can be completed in parallel, the delay associated with this is that of one XOR gate | |||

<ref name="XOR">Antonio González, Mateo Valero, Nigel Topham and Joan M. Parcerisa. Eliminating Cache Conflict Misses Through XOR-Based Placement Functions. http://people.ac.upc.edu/jmanel/papers/ics97.pdf | |||

{{cite web | |||

| url = http://people.ac.upc.edu/jmanel/papers/ics97.pdf | |||

| title = Eliminating Cache Conflict Misses Through XOR-Based Placement Functions. | |||

| last1 = González | |||

| first1 = Antonio | |||

| last2 = Valero | |||

| first2 = Mateo | |||

| last3 = Topham | |||

| first3 = Nigel | |||

| last4 = Parcerisa | |||

| first4 = Joan | |||

| location = Barcelona, Spain and Edinburgh, UK | |||

| date =July 1997 | |||

| accessdate = September 21, 2013 | |||

| separator = , | |||

}} | |||

</ref>. | |||

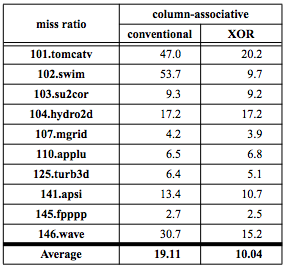

These XOR operations typically take place at the end of the address computation stage, which is usually not a critical point in the pipeline cycle, so they add little delay. This allows for quicker access to memory blocks and reduces the chance of hitting issues later in the pipeline<ref name = "XOR"/>. XOR mapping also uses a bit to record whether a block has been used since its last miss and swaps blocks accordingly, similar to the Selective Victim Cache method<ref name = "XOR"/>. Combining the XOR mapping technique with the victim cache scheme produced a miss ratio similar to that of a two-way skewed-associative cache<ref name = "XOR"/>. The only cases in their tests where it made almost no difference were those where the miss ratios were already low, as seen in Figure 2.5 below. | |||

- | [[File: XOR_Miss_Ratio_Table.png|center]] | ||

<div style="text-align:center">'''Figure 2.5 XOR Miss Ratio Comparison Table'''</div> | |||
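The idea of computing a cache index with XOR operations can be illustrated with a short sketch. The text above does not give the exact bit fields used by González et al., so the example assumes a generic placement function that XORs the low index bits of a block address with the next group of bits; <code>INDEX_BITS</code>, <code>conventional_index</code>, and <code>xor_index</code> are hypothetical names chosen for illustration.

```python
# Illustrative sketch only; the bit fields are an assumption, not the paper's function.
INDEX_BITS = 3                      # hypothetical 8-line cache
MASK = (1 << INDEX_BITS) - 1


def conventional_index(block):
    """Conventional placement: use the low-order bits of the block address."""
    return block & MASK


def xor_index(block):
    """XOR-based placement: XOR the low index bits with the next-higher bit field."""
    low = block & MASK
    high = (block >> INDEX_BITS) & MASK
    return low ^ high
```

Blocks 0, 8, 16, and 24 all share conventional index 0 and would conflict in a direct-mapped cache, while this XOR function spreads them over indices 0, 1, 2, and 3. Each index bit needs only one two-input XOR, and the bits can be computed in parallel.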

=== | ====Victim Replication==== | ||

=== | Victim Replication is a newer cache management scheme, first presented in 2005 by Zhang and Asanović, for use in level-two caches within a tiled chip multiprocessor <ref name="Zhang1">Michael Zhang and Krste Asanović. Victim Replication: Maximizing Capacity while Hiding Wire Delay in Tiled Chip Multiprocessors. http://people.cs.pitt.edu/~cho/cs2410/papers/zhang-isca05.pdf | ||

{{cite web | |||

| url = http://people.cs.pitt.edu/~cho/cs2410/papers/zhang-isca05.pdf | |||

| title = Victim Replication: Maximizing Capacity while Hiding Wire Delay in Tiled Chip Multiprocessors. | |||

| last1 = Zhang | |||

| first1 = Michael | |||

| last2 = Asanovic | |||

| first2 = Krste | |||

| location = MIT Cambridge, Mass. | |||

| date = June 2005 | |||

| accessdate = September 23, 2013 | |||

| separator = , | |||

}} | |||

</ref>. | |||

This variation focuses on combining the positive qualities of private and shared level-two caching schemes. The private scheme uses a slice of the level-two cache as a private cache so that the level-one cache can communicate directly with it<ref name = "Zhang1"/>. The benefit of this strategy is that, since the level-one and level-two caches communicate directly with each other, it reduces the need for off-chip requests<ref name = "Zhang1"/>. This choice can be costly, however, if an off-chip request is needed, since it becomes a three-way communication. The shared scheme uses all the level-two slices as a shared cache, which can be useful because a level-one request does not have to rely on only one slice of the level-two cache; however, it can experience latency issues depending on the number of requests it is handling at one time<ref name = "Zhang1"/>. The Victim Replication method combines the two by creating a shared level-two cache and some private level-two slices. When a victim is brought in, a replica of it is stored in the private slices from the shared cache<ref name = "Zhang1"/>. If there is a miss in the level-two cache, the request is sent to the primary cache; if that does not provide the needed data, the private level-two slices are then inspected. Using victim replication, the latency of level-two caches decreased by 16% in multi-threaded benchmarks and by 21% in single-threaded benchmarks<ref name = "Zhang1"/>. | |||

---- | |||

=== | ===Advantages/Disadvantages=== | ||

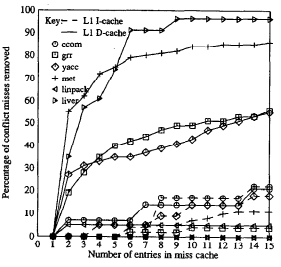

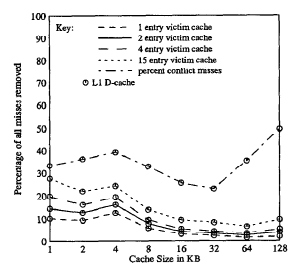

== | Some of the biggest advantages of simple victim caching can be seen in the work that Jouppi did in 1990 (see [[CSC 456 Fall 2013/1b ra#Victim Cache|Victim Cache Background]]). One of the biggest advantages of this cache scheme is the number of conflict misses that it removes. This breakthrough in memory management helped pave the way for more sophisticated processors that are not hampered by the memory organizations that were previously used<ref name="Jouppi"/>. Figures 2.6 and 2.7, from Jouppi's paper, show the conflict miss reduction rates of the miss cache and the victim cache, respectively<ref name="Jouppi"/>. | ||

[[File:Miss_Cache_Miss_Ratios.png|center]] | |||

<div style="text-align:center">'''Figure 2.6 Graph of conflict misses avoided by miss cache'''</div> | |||

[[File:Victim_Cache_Miss_Ratios.png|center]] | |||

<div style="text-align:center">'''Figure 2.7 Graph of conflict misses avoided by victim cache''' </div> | |||

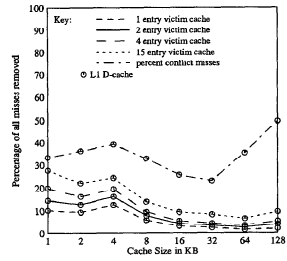

== | Another advantage shown by Jouppi is that the victim cache's performance improves as technology improves. As computers advance, caches and line sizes will only get bigger, and some memory organizations will either maintain the same miss ratio or even get worse the more data they have to handle. Jouppi tested how increasing the line size affected the victim cache's abilities<ref name = "Jouppi"/>. In his article he showed that the victim cache only improves with increased line size, as illustrated in Figure 2.8<ref name = "Jouppi"/>. | ||

[[File:Victim_Cache_Line_Size.png|center]] | |||

<div style="text-align:center">'''Figure 2.8 Graph of victim cache performance in respect to line size'''</div> | |||

In contrast, however, Jouppi discovered that the original form of the victim cache does not improve when only the cache size is increased<ref name ="Jouppi"/>. As the graph in Figure 2.9 demonstrates, the victim cache starts off strong but loses its performance advantage as more space is simply added to the cache. This shows that while this type of memory management was needed at the time, methods of memory hierarchy management must continue to evolve as computers continue to develop. | |||

[ | [[File:Victim_Cache_Cache_Size.png|center]] | ||

<div style="text-align:center">'''Figure 2.9 Graph of victim cache performance in respect to primary cache size'''</div> | |||

---- | |||

Victim | ===Architectures=== | ||

Victim caching can be found in AMD's Opteron processor series produced specifically for servers and workstations. <ref>http://en.wikipedia.org/wiki/Opteron</ref> Opteron processors use a victim cache that is capable of holding eight victim blocks. <ref> Hennessy, John L., and David A. Patterson. ''Computer Architecture: A Quantitative Approach''. Elsevier, Inc., 2012, p. B-14.</ref> | |||

=== | Victim caching is also gaining popularity in mobile systems as a way to decrease latency. One example is the NAND XIP system, which is used in mobile embedded systems as a low-cost, high-density memory architecture. <ref name="NAND">Chanik Park, Jaeyu Seo, Sunghwan Bae, Hyojun Kim, Shinhan Kim and Bumsoo Kim. A Low-cost Memory Architecture with NAND XIP for Mobile Embedded Systems. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.160.1129&rep=rep1&type=pdf | ||

==== | {{cite web | ||

| url = http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.160.1129&rep=rep1&type=pdf | |||

| title = A Low-cost Memory Architecture with NAND XIP for Mobile Embedded Systems. | |||

| last1 = Park | |||

| first1 = Chanik | |||

| location = Seoul, Korea | |||

| date =October 2003 | |||

| accessdate = September 23, 2013 | |||

| separator = , | |||

}} | |||

</ref>. | |||

This system uses the victim cache to store high-priority pages from the system's main flash memory so that the latency of requesting a page again is decreased dramatically<ref name = "NAND"/>. The other use the system gets from having a victim cache comes with its XIP (execute-in-place) functionality, where the victim cache is used to prefetch information based on profiling information gathered earlier<ref name="NAND"/>. This again allows the system to reduce the latency of obtaining the data it needs to execute a piece of code. Figure 2.10 shows the organization of the NAND structure that includes the victim cache<ref name="NAND"/>. | |||

[[File:NAND_Organization.png|center]] | |||

<div style="text-align:center">'''Figure 2.10 The NAND Architecture cache organization.'''</div> | |||

---- | |||

== | ==Quiz== | ||

1. What was the first system to use sector caching? | |||

A) Macbook | |||

B) Pentium 4 | |||

C) IBM Model 85 | |||

D) Commodore 64 | |||

2. On the system from the previous question, what percentage of the maximum ideal efficiency calculated by the designers did it achieve? | |||

A) 63% | |||

B) 81% | |||

C) 92% | |||

D) 11% | |||

3. In sectored caching, what bit indicates whether a subsector has data loaded or not? | |||

A) Validity | |||

B) Dirty | |||

C) The Second One | |||

D) Parity | |||

4. When are sectors removed from the cache in sectored caching? | |||

A) When the system needs the space | |||

B) When the data it has is used | |||

C) Whenever it wants to | |||

D) When its parity bit is set to 0 | |||

5. Who first introduced victim caching? | |||

A) Verma | |||

B) Zhang | |||

C) Gates | |||

D) Jouppi | |||

6. In selective victim caching, what extra block of memory is integral to its cache scheme? | |||

A) Transitory | |||

B) Transistor | |||

C) Miss Cache | |||

D) Level-Two Cache | |||

==References== | ==References== | ||

Latest revision as of 16:23, 1 October 2013

Sectored Cache

Sectored caching was one type of early CPU cache architecture that has fallen out of common use since its first implementation due to inferior performance against other more common cache architectures. Sectored caching involves dividing a cache up into sectors and subsectors to allow for faster searching and more efficient use of space than some other cache architectures.

Background

IBM System/360 Model 85

Sectored caching was first used on the IBM System/360 Model 85, which was the earliest commercial system that used a CPU data cache of any kind. Sectored caching allowed for smaller tag sizes in the addresses, which made searching in cache easier and quicker, without requiring cache lines to be excessively long; and it was easier to build than other kinds of cache organizations with the circuit technology of the time. <ref name="rot99">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance. http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf

</ref> Released in 1968, the Model 85 was one of the first computers to have a CPU data cache, which at the time was known as "buffer storage", come as a standard feature. The cache on the Model 85 could store approximately 16 kilobytes of data divided into 16 one-kilobyte sectors and 16 sixty-four-byte subsectors (6 bits long), had a bus width of 16 bytes, and an average cycle time of 80 nanoseconds. As with most caches, the cache on the Model 85 attempts to fetch data from the cache first before fetching from main memory. This allowed for much faster data fetching and storage, reducing the average system storage cycle time to about one third or one quarter what it would be if using main memory alone. <ref name="ibm68">IBM. IBM System/360 Model 85 Functional Characteristics. http://www.bitsavers.org/pdf/ibm/360/funcChar/A22-6916-1_360-85_funcChar_Jun68.pdf </ref> This new type of cache organization represented a significant departure from cache organizations used previously in the IBM System/360 line of computers. In testing during the design of the Model 85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers. <ref name="lip68">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5388402&isnumber=5388400

</ref>

After the release of the Model 85, however, it soon became clear that sectored caching was less effective than set-associative caching for computer and chip architectures at that time and fell out of common use.<ref name="rot99"/>

Reappearance

In a 1999 article, Alan Jay Smith and Jeffrey B. Rothman revisited the idea of sectored caching to determine if changes in technology from 1968 had made sectored caching useful. They looked at whether there would be any performance gain if sectored caching was used in multi-level cache architectures, a relatively new technology of the time. What they discovered was that, while there was still no performance gain for the majority of multi-level cache designs, there could be a significant performance gain in one specific case. Since sectored caching allows for fairly small tag sizes, the tags themselves could be stored in a very small and fast first-level cache while the sectored data they reference could be stored on a much larger but slower second-level cache. In their analysis, this design allowed for a much higher hit ratio (and thus performance level) compared to Smith and Rothman's theoretical targets than any other cache architecture. For most modern computers, however, CPU cache size was not enough of a concern to merit this type of architecture and sectored caching is still not widely used.<ref name="rot99"/>

Implementation

The cache is divided into sectors, which correspond to logical sectors on the main storage device. The assignment and update of the sectors in the cache to sectors in main memory functions on a least-recently-used basis.<ref name="ibm68"/> When sectors are needed, they are not loaded into cache all at once, but in smaller pieces known as subsectors. Subsectors are similar to the lines in a direct mapped cache, and are only loaded into cache when needed. This prevents large amounts of data from having to be transferred for every memory reference. Subsectors have a validity bit that indicates whether they are loaded with data or not.<ref name="lip68"/>

Miss Handling

When a process requests data from a main-storage sector that is not in the cache, a cache sector is assigned to the sector on the main storage device where the requested data is stored. Then the subsector where the data is located is loaded into the cache, and its validity bit is set to reflect that it has been loaded from main storage. When data from other subsectors within a loaded sector is requested, the system loads those subsectors into the cache sector and sets their validity bits. Sectors are not removed from the cache until the system needs to reclaim the space to process another request.<ref name="lip68"/>
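The miss-handling steps above can be sketched as a small Python model. This is an illustrative sketch only, not the Model 85's actual logic: the class name `SectoredCache`, its parameters, and the sizes used in the example are assumptions chosen for readability.

```python
from collections import OrderedDict


class SectoredCache:
    """Toy sectored cache: one tag per sector, one validity bit per subsector."""

    def __init__(self, num_sectors, subsectors_per_sector, subsector_bytes):
        self.num_sectors = num_sectors
        self.subsectors_per_sector = subsectors_per_sector
        self.subsector_bytes = subsector_bytes
        # Maps a main-storage sector number to its list of validity bits.
        # Insertion order tracks recency: the first entry is least recently used.
        self.sectors = OrderedDict()
        self.subsector_loads = 0  # number of subsector transfers from main storage

    def access(self, address):
        sector_bytes = self.subsectors_per_sector * self.subsector_bytes
        sector = address // sector_bytes
        subsector = (address % sector_bytes) // self.subsector_bytes

        if sector not in self.sectors:
            # Sector miss: reclaim the least recently used cache sector if the
            # cache is full, then assign a sector with all validity bits clear.
            if len(self.sectors) >= self.num_sectors:
                self.sectors.popitem(last=False)
            self.sectors[sector] = [False] * self.subsectors_per_sector
            outcome = "sector miss"
        elif not self.sectors[sector][subsector]:
            outcome = "subsector miss"
        else:
            outcome = "hit"

        if outcome != "hit":
            # Load only the referenced subsector and set its validity bit.
            self.sectors[sector][subsector] = True
            self.subsector_loads += 1

        self.sectors.move_to_end(sector)  # mark as most recently used
        return outcome


# Hypothetical geometry: 2 sectors of 4 subsectors, 16 bytes per subsector.
cache = SectoredCache(num_sectors=2, subsectors_per_sector=4, subsector_bytes=16)
for address in (0, 4, 16, 64):
    print(address, cache.access(address))
```

In this model, a reference to a new subsector of an already assigned sector costs one subsector transfer rather than a whole-sector transfer, which is the behavior the validity bits exist to support.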

Advantages/Disadvantages

After the implementation of sectored caching in the Model 85, the designers quickly realized that a set-associative cache would be more efficient since most memory references required fetching of sequential blocks. In a sectored cache, this would require multiple fetches of subsectors/blocks, increasing the possibility of cache misses.<ref name="lip68"/>

As discovered by Smith and Rothman, however, sectored caching can work well in a specific situation. If cache size is a concern, then the cache can be divided into a first-level cache that is small and fast, can reside on the CPU chip, and stores only the tags for each sector; and a second-level cache that is larger and slower, can reside off-chip, and stores the sectored data. In this design, sectored caches can offer a significant performance gain over other cache architectures. In most modern computers, CPU cache size is not limited enough for this kind of implementation to be beneficial.<ref name="rot99"/>
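To see why sector tags are small enough to keep in a tiny first-level store, the tag storage of two organizations can be compared with some back-of-the-envelope arithmetic. The sketch below assumes a fully associative placement, 24-bit addresses, a 16 KiB data store, 64-byte lines, and 1 KiB sectors with 16 validity bits each; all of these numbers and the function name `tag_store_bits` are illustrative assumptions, not figures from Smith and Rothman's article.

```python
def tag_store_bits(cache_bytes, tagged_unit_bytes, valid_bits_per_unit, address_bits=24):
    """Bits of tag and validity storage, assuming fully associative placement
    and power-of-two sizes."""
    units = cache_bytes // tagged_unit_bytes           # number of tags needed
    offset_bits = tagged_unit_bytes.bit_length() - 1   # log2 of the tagged unit size
    tag_bits = address_bits - offset_bits
    return units * (tag_bits + valid_bits_per_unit)


# One tag and one validity bit per 64-byte line.
conventional = tag_store_bits(16 * 1024, 64, valid_bits_per_unit=1)
# One tag per 1 KiB sector, plus one validity bit per 64-byte subsector.
sectored = tag_store_bits(16 * 1024, 1024, valid_bits_per_unit=16)

print(conventional, sectored)  # 4864 480
```

Under these assumptions the sectored organization needs roughly a tenth of the tag storage, which is what makes keeping the tags on-chip and the data off-chip plausible.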

Victim Cache

Background

Victim caches were first proposed by Norman P. Jouppi in 1990. Victim caching places a small, fully-associative cache between a direct-mapped L1 cache and the next level of memory. Lines evicted from the L1 cache get a “second chance” by being loaded into the victim cache. Victim caches decrease the overall conflict miss rate<ref name="Jouppi">Jouppi, Norman P. Improving direct-mapped cache performance by the addition of a small fully-associative cache and prefetch buffers. http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547</ref>. Direct-mapped caches can especially benefit from victim caching because of their high miss rates. Victim caching lets a system keep the speed of a direct-mapped cache while reducing its miss rate to below the miss rate found in set-associative caches<ref name = "Jouppi"/>.

Jouppi presented the proposal at the 17th Annual International Symposium on Computer Architecture. At the time, processor performance was increasing drastically, but memory hierarchies had not advanced at the same pace. Jouppi observed that if this trend continued, newer machines would easily lose half of their potential performance to the shortcomings of the memory hierarchy of the time. This is illustrated in Figure 2.1 below, taken from Jouppi's 1990 paper, which shows how the cost of cache misses was increasing dramatically<ref name = "Jouppi"/>.

Organization

Victim Cache

For a system to use a victim cache, it needs to use a direct-mapped cache. Victim caching is a technique for optimizing the use of a miss cache<ref name = "Jouppi"/>. A miss cache is a small on-chip cache between the first-level cache and the access point to the second-level cache<ref name = "Jouppi"/>. The miss cache typically stores two to five lines. When a miss occurs, the data is loaded not only into the direct-mapped cache but also into the miss cache, replacing the least recently used item<ref name = "Jouppi"/>. While this helped reduce the number of conflict misses, it used the small cache poorly, since many of its lines could duplicate lines already in the direct-mapped cache. A victim cache avoids this duplication by holding only the lines evicted from the direct-mapped cache<ref name = "Jouppi"/>. The organization of the miss cache can be seen below in Figure 2.2, which is taken from Jouppi's 1990 paper<ref name = "Jouppi"/>.
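The interaction between a direct-mapped cache and a victim cache can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the class name `VictimCachedL1`, the default sizes, and the least-recently-used replacement in the victim cache are illustrative choices, and the next level of memory is not modeled.

```python
from collections import OrderedDict


class VictimCachedL1:
    """Toy direct-mapped L1 cache backed by a small fully-associative victim cache."""

    def __init__(self, num_lines, victim_entries=4, line_bytes=16):
        self.num_lines = num_lines
        self.victim_entries = victim_entries
        self.line_bytes = line_bytes
        self.l1 = [None] * num_lines  # each slot holds a line number or None
        self.victim = OrderedDict()   # line number -> True, least recently used first

    def access(self, address):
        line = address // self.line_bytes
        index = line % self.num_lines  # direct-mapped placement
        resident = self.l1[index]

        if resident == line:
            return "l1 hit"

        if line in self.victim:
            # Victim hit: the requested line moves back into L1 and the line
            # it displaces takes its place in the victim cache (a swap).
            del self.victim[line]
            outcome = "victim hit"
        else:
            # Full miss: the line would be fetched from the next level.
            outcome = "miss"

        self.l1[index] = line
        if resident is not None:
            self._insert_victim(resident)
        return outcome

    def _insert_victim(self, line):
        # Only lines evicted from L1 ever enter the victim cache.
        if len(self.victim) >= self.victim_entries:
            self.victim.popitem(last=False)  # drop the least recently used victim
        self.victim[line] = True


# Addresses 0 and 64 conflict in a 4-line cache with 16-byte lines.
cache = VictimCachedL1(num_lines=4, victim_entries=2)
print([cache.access(a) for a in (0, 64, 0, 64, 0, 0)])
```

In the example, the two conflicting addresses would ping-pong in a plain direct-mapped cache; after the first two misses, the model serves each conflict from the victim cache instead of the next level.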

Selective Victim Cache

An improvement on the victim caching technique came from Stiliadis and Varma in 1997 with their introduction of selective victim caching<ref name="Verma">Dimitrios Stiliadis and Anujan Varma. Selective Victim Caching: A Method to Improve the Performance of Direct-Mapped Caches. http://ieeexplore.ieee.org.prox.lib.ncsu.edu/xpl/articleDetails.jsp?arnumber=589235&tag=1</ref>. This cache management scheme is similar to the victim cache proposed by Jouppi, but instead of always pushing the replaced block into the victim cache and automatically interchanging blocks between the two caches whenever the main cache needs a block, it uses a prediction algorithm based on the past usage of the block<ref name = "Verma"/>. This is accomplished by adding an additional block to the main cache called the "transitory block"<ref name="Verma"/>. The transitory block is used both to predict sequential references by detecting repeated patterns of access and to act as a buffer between the main and victim caches during an interchange while the algorithm predicts whether the interchange is necessary<ref name = "Verma"/>. Figure 2.3 below shows a sequential state machine that switches between the normal state, in which references are served from the main cache, and the special state, in which access is directed towards the transitory block<ref name="Verma"/>.

The other important function of the algorithm is predicting, based on past usage, whether cache blocks should be swapped. This is used when a miss occurs in the main cache but the block is found in the victim cache. The first step is to load the block into the transitory block<ref name = "Verma"/>. The algorithm then compares the prediction bits of the transitory block with those of the conflicting block stored in the main cache<ref name="Verma"/>. If the block in the main cache is more likely to be used again before the buffered block, the block in the transitory section is accessed and then sent back to the victim cache<ref name = "Verma"/>. If the buffered block is more likely to be accessed again than the block in the main cache, the normal victim cache swap occurs<ref name = "Verma"/>. This process can be seen in Figure 2.4 below, which illustrates the movement of memory blocks between the level-one cache, the victim cache, the CPU, and the level-two cache<ref name = "Verma"/>. In their paper, Stiliadis and Varma showed that this modification to victim caching improved performance for both smaller and larger cache sizes. For example, a simulation run on ten instruction traces showed an average improvement of 21% in miss rates and a 70% reduction in exchanges between the main and victim caches<ref name="Verma"/>.
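The swap decision on a victim-cache hit can be sketched as follows. This is only a schematic of the control flow described above: the `reuse_score` field is a hypothetical stand-in for the paper's prediction bits, the names `Block` and `resolve_victim_hit` are invented for illustration, and treating a tie as an interchange is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Block:
    address: int
    reuse_score: int  # hypothetical stand-in for the prediction bits


def resolve_victim_hit(main_block, victim_block):
    """Decide what happens when the main cache misses but the victim cache hits.

    Returns (block kept in main cache, block kept in victim cache, action taken).
    """
    # Step 1: the requested block is staged in the transitory block.
    transitory = victim_block

    # Step 2: compare predictions for the resident and the staged block.
    if main_block.reuse_score > transitory.reuse_score:
        # The resident block is predicted to be reused sooner: serve the
        # request from the transitory block and return it to the victim cache.
        return main_block, transitory, "served from transitory block"

    # Otherwise perform the normal victim-cache interchange (ties assumed here).
    return transitory, main_block, "interchanged"


resident = Block(address=0x100, reuse_score=3)
requested = Block(address=0x200, reuse_score=1)
kept, victim, action = resolve_victim_hit(resident, requested)
print(hex(kept.address), hex(victim.address), action)
```

The point of the sketch is that an exchange happens only when the prediction favors it, which is how the scheme reduces traffic between the main and victim caches.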

Evolution

Since Jouppi first introduced victim caching in 1990, there have been many modifications and adaptations of the technique. Selective victim caching, described in the section above, is one of the variations that changes the least about how the victim cache works. This section describes a few others that depart further from the original, to show how the scheme has evolved.

XOR-Based Placement

Not long after the article on selective victim caching was published, another article appeared in July 1997 detailing how XOR mapping techniques could improve many different caching techniques, victim caching included. The XOR-mapping scheme for victim caching uses several XOR operations to obtain a cache index. Since the XOR operations can be completed in parallel, the added delay is that of one XOR gate<ref name="XOR">Antonio González, Mateo Valero, Nigel Topham and Joan M. Parcerisa. Eliminating Cache Conflict Misses Through XOR-Based Placement Functions. http://people.ac.upc.edu/jmanel/papers/ics97.pdf</ref>. These XOR operations typically take place at the end of the address computation stage, which is usually not a critical point in the pipeline cycle, so they add little delay. This allows quicker access to memory blocks and reduces the chance of problems later in the pipeline<ref name = "XOR"/>. XOR mapping also uses a bit to record whether a block has been used since its last miss and swaps blocks accordingly, similar to the selective victim cache method<ref name = "XOR"/>. When the XOR mapping technique was combined with the victim cache scheme, the result had a miss ratio similar to that of a two-way skewed-associative cache<ref name = "XOR"/>. The only points in the tests where it made almost no difference were those where the miss ratios were already lower than normal, as seen in Figure 2.5 below.
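The idea of an XOR-based placement function can be illustrated with a short Python sketch. The choice of which address bits are combined is an assumption made for illustration (the low index bits XORed with the next group of bits above them); the function names are hypothetical and the paper's exact functions may differ.

```python
def conventional_index(line_address, index_bits):
    """Index taken directly from the low-order bits of the line address."""
    return line_address & ((1 << index_bits) - 1)


def xor_index(line_address, index_bits):
    """Index formed by XORing two adjacent bit fields of the line address.

    Each index bit depends on one pair of address bits, so in hardware all
    of the XORs can be evaluated in parallel.
    """
    mask = (1 << index_bits) - 1
    low = line_address & mask
    high = (line_address >> index_bits) & mask
    return low ^ high


# Line addresses with a stride equal to the number of sets (8) all collide
# under conventional indexing but spread out under the XOR function.
lines = [0, 8, 16, 24]
print([conventional_index(a, 3) for a in lines])  # [0, 0, 0, 0]
print([xor_index(a, 3) for a in lines])           # [0, 1, 2, 3]
```

Strided access patterns like this one are a common source of conflict misses in direct-mapped caches, which is why mixing higher address bits into the index can reduce them.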

Victim Replication

Victim replication is a newer cache management scheme, first presented in 2005 by Zhang and Asanović for use in level-two caches within a tiled chip multiprocessor<ref name="Zhang1">Michael Zhang and Krste Asanović. Victim Replication: Maximizing Capacity while Hiding Wire Delay in Tiled Chip Multiprocessors. http://people.cs.pitt.edu/~cho/cs2410/papers/zhang-isca05.pdf</ref>. This variation focuses on combining the positive qualities of private and shared level-two caching schemes. The private scheme uses a slice of the level-two cache as a private cache so that the level-one cache can communicate with it directly<ref name = "Zhang1"/>. The benefit of this strategy is that, because the level-one and level-two caches communicate directly, the need for off-chip requests is reduced<ref name = "Zhang1"/>. This choice can be costly, however, if an off-chip request is needed, since it becomes a three-way communication. The shared scheme uses all of the level-two slices as one shared cache, which is useful because a level-one request does not have to rely on a single slice of the level-two cache; however, it can experience latency issues depending on the number of requests it is handling at one time<ref name = "Zhang1"/>. The victim replication method combines the two by creating a shared level-two cache and some private level-two slices. When a victim is brought in, a replica of it is stored in the private slices from the shared cache<ref name = "Zhang1"/>. If there is a miss in the level-two cache, the request is sent to the primary cache; if that does not provide the needed data, the private level-two slices are then inspected. Using victim replication, the latency of level-two caches decreased by 16% in multi-threaded benchmarks and by 21% in single-threaded benchmarks<ref name = "Zhang1"/>.

Advantages/Disadvantages

Some of the biggest advantages of simple victim caching can be seen in the work that Jouppi did in 1990 (see the Victim Cache Background section). One of the biggest advantages of this cache scheme is the number of conflict misses that it removes. This breakthrough in memory management helped pave the way for more sophisticated processors that were not hampered by the memory organizations previously used<ref name="Jouppi"/>. Figures 2.6 and 2.7 are from Jouppi's paper and show the conflict miss reduction rates of the miss cache and the victim cache, respectively<ref name="Jouppi"/>.

Another advantage identified by Jouppi is that the victim cache's performance improves as technology improves. As computers advance, caches and line sizes will only get bigger, and some memory organizations will either keep the same miss ratio or get worse as they handle more data. Jouppi tested how increasing the line size affected the victim cache<ref name = "Jouppi"/>. In his article he showed that the victim cache only improves as line size increases, as illustrated in Figure 2.8<ref name = "Jouppi"/>.

In contrast, however, Jouppi discovered that the original form of the victim cache does not improve when only the cache size is increased<ref name ="Jouppi"/>. As the graph in Figure 2.9 demonstrates, the victim cache starts off strong but loses its benefit as more space is simply added to the cache. This shows that, while this type of memory management was needed at the time, methods of memory hierarchy management must continue to evolve as computers develop.

Architectures

Victim caching can be found in AMD's Opteron processor series produced specifically for servers and workstations. <ref>http://en.wikipedia.org/wiki/Opteron</ref> Opteron processors use a victim cache that is capable of holding eight victim blocks. <ref> Hennessy, John L., and David A. Patterson. Computer Architecture: A Quantitative Approach. Elsevier, Inc., 2012, p. B-14.</ref>

Victim caching is also gaining popularity in mobile systems as a way to decrease latency. One example is the NAND XIP system, a low-cost, high-density memory architecture for mobile embedded systems<ref name="NAND">Chanik Park, Jaeyu Seo, Sunghwan Bae, Hyojun Kim, Shinhan Kim and Bumsoo Kim. A Low-cost Memory Architecture with NAND XIP for Mobile Embedded Systems. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.160.1129&rep=rep1&type=pdf</ref>. This system uses the victim cache to store high-priority pages from the system's main flash memory so that the latency of requesting the page again is decreased dramatically<ref name = "NAND"/>. The other use the system gets from the victim cache arises with its XIP (execute-in-place) functionality: the victim cache is used to prefetch information based on profiling information gathered earlier<ref name="NAND"/>. This again reduces the latency of obtaining the data the system needs to execute a piece of code. Figure 2.10 shows the organization of the NAND structure that includes the victim cache<ref name="NAND"/>.

Quiz

1. What was the first system to use sector caching? A) Macbook B) Pentium 4 C) IBM Model 85 D) Commodore 64

2. On the system from the previous question, what percentage of maximum efficiency did it achieve compared to what the designers expected? A) 63% B) 81% C) 92% D) 11%

3. In sectored caching, which bit indicates whether a subsector has data loaded or not? A) Validity B) Dirty C) The Second One D) Parity

4. When are sectors removed from the cache in sectored caching? A) When the system needs the space B) When the data it has is used C) Whenever it wants to D) When its parity bit is set to 0

5. Who first introduced victim caching? A) Varma B) Zhang C) Gates D) Jouppi

6. In selective victim caching, what extra block of memory is integral to its cache scheme? A) Transitory B) Transistor C) Miss Cache D) Level-Two Cache

References

<references />
