CSC/ECE 506 Spring 2013/1c ad: Difference between revisions

No edit summary |

|||

| (57 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=Multiple Instruction Single Data architecture= | |||

==Overview== | ==Overview== | ||

This wiki article explores the Multiple Instruction Single Data architecture of | This wiki article explores the Multiple Instruction Single Data architecture(MISD) of parallel architecture as classified by Flynn’s Taxonomy. The article starts with a description of Flynn’s Taxonomy and classification followed by [http://en.wikipedia.org/wiki/MISD MISD architecture] and its realization. It also talks about the authors' and researchers' works on MISD and ends by providing implementations and examples of the architecture. | ||

==Parallel Architecture== | [Note: This topic relates to copy and update of previous [http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/1c_dm wiki ] | ||

==Parallel Architecture<ref>http://en.wikipedia.org/wiki/Parallel_computing</ref>== | |||

"Parallel operation has many different forms within a computer | "Parallel operation has many different forms within a computer | ||

| Line 28: | Line 30: | ||

data stream. These are typically | data stream. These are typically | ||

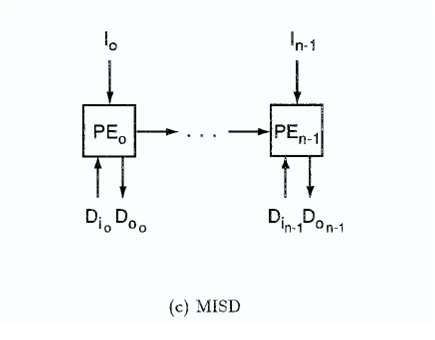

systolic arrays | systolic arrays | ||

[[File:Flynn%27s_MISD.jpg|200px|thumb|right|Flynn's MISD]] | |||

(4) MIMD: multiple instruction, multiple | (4) MIMD: multiple instruction, multiple | ||

| Line 37: | Line 40: | ||

a class of architectures and a corresponding | a class of architectures and a corresponding | ||

type of parallelism." | type of parallelism." | ||

[http://public.callutheran.edu/~reinhart/CSC521MSCS/Week5/FlynnTaxonomies.pdf | [http://public.callutheran.edu/~reinhart/CSC521MSCS/Week5/FlynnTaxonomies.pdf Flynn's Taxonomy] | ||

==Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>== | ===Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>=== | ||

Flynn defined the taxonomy of parallel computers [[http://en.wikipedia.org/wiki/Michael_J._Flynn Flynn], 1972] based on the number of instruction streams and data streams. | Flynn defined the taxonomy of parallel computers [[http://en.wikipedia.org/wiki/Michael_J._Flynn Flynn], 1972] based on the number of instruction streams and data streams. | ||

| Line 54: | Line 57: | ||

==Multiple Instructions Single Data (MISD)== | |||

[[Image:MISD.PNG|thumb|right|100px|Figure 4. MISD Architecture [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | [[Image:MISD.PNG|thumb|right|100px|Figure 4. MISD Architecture [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data. | MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data. | ||

===What actually MISD architecture means === | |||

As explained in detail by Flynn <ref>http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1447203&url=http%3A%2F%2Fieeexplore.ieee.org%2Fiel5%2F5%2F31091%2F01447203 Flynn's paper</ref>: | |||

"It basically employs the high bandwidth dedicated execution | |||

unit. This unit is then shared by n virtual machines operating on | |||

program sequences independent of one another. Each | |||

virtual machine has access to the execution hardware once | |||

per cycle. Each virtual machine, of course, has its own | |||

private instruction memory and interaction between instruction | |||

streams occurs only via the common data memory." | |||

"Presumably, if there are N instruction units then the bandwidth | |||

of the common data storage must be N times greater | |||

than the individual instruction storage. This requirement | |||

could be substantially reduced by use of a modified version | |||

of Tomasulo’s tag-forwarding algorithm and a separate | |||

common forwarding bus." | |||

[[File:MISD_architecture.jpg|200px|thumb|right|MISD architecture]] | |||

"Another version of this would force forwarding of operands. Thus the data stream presented to Execution | |||

Unit 2 is the resultant of Execution Unit 1 operating its | |||

instruction on the source data stream. The instruction that | |||

any unit performs may be fixed (specialized such that the | |||

interconnection (or setup) of units must be flexible), semifixed | |||

(such that the function of any unit is fixed for one | |||

pass of a data file) or variable (so that the streamof instructions | |||

operates at any point on thes ingle data stream). Under | |||

such an arrangement, only the first execution unit sees the | |||

source data stream and while it is processing the ith operand | |||

the ith execution unit is processing the ith derivation of the | |||

first operand of the source stream." | |||

== | ===Realizing MISD<ref>http://public.callutheran.edu/~reinhart/CSC521MSCS/Week5/FlynnTaxonomies.pdf</ref>=== | ||

"Although it is easy to both envision and design MISD processors, there has been | |||

little interest in this type of parallel | |||

architecture. The reason, so far anyway, | |||

is that no ready programming constructs | |||

easily map programs into the | |||

MISD organization. | |||

Abstractly, the MISD is a pipeline of | |||

multiple independently executing functional | |||

units operating on a single | |||

stream of data, forwarding results from | |||

one functional unit to the next. On the | |||

microarchitecture level, this is exactly | |||

what the vector processor does. However, | |||

in the vector pipeline the operations | |||

are simply fragments of an assembly- | |||

level operation, as distinct from | |||

being a complete operation in themselves. | |||

Surprisingly, some of the earliest | |||

attempts at computers in the 1940s | |||

could be seen as the MISD concept. | |||

They used plug boards for programs, | |||

where data in the form of a punched | |||

card was introduced into the first stage | |||

of a multistage processor. A sequential | |||

series of actions was taken in which the | |||

intermediate results were forwarded | |||

from stage to stage until at the final | |||

stage a result was punched into a new | |||

card." | |||

==Implementations of MISD architecture== | |||

Currrently the applications for MISD are limited and commercial implementation scarce due to cost factors.However,it is a research interest in many fields.Describe below are areas where MISD architecture has been implemented. | |||

== | |||

===== | ===VLSI : A recursive MISD architecture for pattern matching<ref>http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1308207&url=http%3A%2F%2Fieeexplore.ieee.org%2Fiel5%2F92%2F29030%2F01308207.pdf%3Farnumber%3D1308207</ref>=== | ||

"Many applications require searching for multiple patterns in large data streams for which there is no preprocessed index to rely on for efficient lookups. An multiple instruction stream-single data stream (MISD) VLSI architecture that is based on a recursive divide and conquer approach to pattern matching is proposed. This architecture allows searching for multiple patterns simultaneously. " | |||

The hardware in the above implementation is suitable for searching an online data stream using | |||

queries expressed in many languages. | |||

It is primarily designed | |||

for search problems where one would rapidly find the occurrences | |||

of one or more patterns in a large database. This means | |||

that once a query is supplied by a user, both the delay before the | |||

query is evaluated and the time needed to search the database | |||

should be as small as possible. An important factor for reaching | |||

the former goal, is that the architecture is able to evaluate queries | |||

in parallel whenever possible. | |||

===Acoustics, Speech and Signal Processing<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=1164924&contentType=Journals+%26+Magazines&queryText%3DA+multimicroprocessor+architecture+for+real-time+computation+of+a+class+of+DFT+algorithms</ref>=== | |||

"Design of a multi microprocessor architecture for real-time computation of a class of [http://en.wikipedia.org/wiki/Discrete_Fourier_transform DFT ]algorithms | |||

The applications considered require the computation of a subset of an N-input | |||

Fourier transform under the assumption of a serial data input.The | |||

suitability of two different algorithms and the corresponding parallel | |||

architectures SIMD (single instruction | |||

multiple data) machine to a MISD (multiple instruction single data) machine.The | |||

results indicate that the latter is better suited for the targeted applications. | |||

An actual operational MISD computer, implemented with offthe- | |||

shelf microprocessors intended for one of the targeted applications, | |||

is described." | |||

For some applications it is more efficient to use an MISD architecture | |||

and than the generally accepted SIMD computer. | |||

===Communictions and Networking<ref> | |||

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=592193&contentType=Conference+Publications&queryText%3DHorizontal+architecture+for+high+performance+parallel+communication+systems</ref>=== | |||

"Horizontal architecture for high performance parallel communication systems are in use | |||

with the advent of high-speed networking technologies such as fiber optics.Now, the speed at which a processor can execute a communication protocol is the most significant limiting factor in protocol performance. The question is, how can a multimedia application take best advantage of these high-speed networks? By adopting an optimized implementation approach that allows efficient use of the bandwidth available on today's high-speed networks. This means processing data as soon as it arrives from the network. This model increases the processing speed by using a multiple-instruction, single-data (MISD) scheme." | |||

The technique applied a MISD scheme to the [http://en.wikipedia.org/wiki/OSI_protocols OSI protocol] | |||

to improve the performance of the communication | |||

subsystem. | |||

Early prototyping shows that the model has | |||

performance improvement up to 60% more than the | |||

conventional approach. | |||

===Systems,Circuits and Devices<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=5681487&contentType=Conference+Publications&queryText%3DTowards+an+embedded+biologically-inspired+machine+vision+processor | |||

</ref>=== | |||

= | "An embedded biologically-inspired machine [http://www.visiononline.org/market-data.cfm?id=73 vision processor] | ||

that attempts to capture aspects of the computational | |||

architecture of the brain.Meanwhile, | |||

the increasing ubiquity of inexpensive cameras in a wide array | |||

of embedded devices presents an enormous opportunity for the | |||

deployment of embedded machine vision systems. As a first | |||

step towards an embedded implementation, considering the | |||

main requirements for the design of an embedded processor | |||

for biologically-inspired object recognition a | |||

multiple instruction, single data (MISD) pipeline implementation | |||

offers good performance per unit silicon area and power dissipation in comparison | |||

to traditional CPU and GPU implementations." | |||

===Information Technology Computing<ref> | |||

http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=844198&contentType=Conference+Publications&queryText%3DEmbedded+zero+wavelet+coefficient+coding+method+for+FPGA+implementation+of+video+codec+in+real-time+systems</ref>=== | |||

[http://ws2.binghamton.edu/fowler/fowler%20personal%20page/EE523_files/Ch_15_4%20EZW%20(PPT)%20revised.pdf Embedded zero wavelet]coefficient coding method for FPGA implementation of video codec in real-time systems. | |||

"The MISD | |||

architecture is proposed as a solution of the | |||

video coding problem. The architecture is characterised by high speed | |||

execution,simplicity and performance . | |||

The important advantages of MISD architectures in real time video systems is | |||

enlarged by the fact that fast computing units that has been | |||

designed that can be re-programmed." | |||

===Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>=== | ===Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>=== | ||

[http://en.wikipedia.org/wiki/Fault-tolerant_system Fault tolerant systems ]are designed to handle the possible failures in software, hardware or interfaces.Hardware faults include hard disk failures, input or output device failures, etc.Software and interface faults include driver failures; operator errors, installing unexpected software etc.Hardware faults can be detected and identified by implementing redundant hardware and multiple backups.Software faults can be tolerable by removing the program errors by executing the software redundantly or by implementing small programs that take over the tasks that crash or generate errors. | |||

===MISD image processing systems<ref>Pattern Recognition and Image Analysis, v 6, n 2, p 414-15, April-June 1996</ref>=== | |||

"Frame memory assignment is | |||

one of the tasks arising in the design of problem-oriented image processing systems. The hardware of most problem-oriented image processing systems with MISD architecture known to date permits operation in a mode where the images being processed have a format that is a fraction of a frame memory page. " | |||

=== Supercomputing machine based on MISD architecture<ref>ICS 88. Third International Conference on Supercomputing. Proceedings, Supercomputing '88, p 377-82 vol.3, 1988</ref>=== | |||

"Today's successful supercomputers have mostly been developed in the restricted area of numerical computations. Conventional pipelining is adopted to realize high-speed [http://www.cs.berkeley.edu/~pattrsn/252S98/Lec06-vector.pdf vector processing]. From the point of evolution of applications requiring more computational power, however, coming supercomputers should be used even for symbolic computations.The pipelined supercomputing of function-level programs dealing with list-structured data can be performed on the general-purpose pipeline designed to be an MISD architecture. Effectiveness of the approach is demonstrated by the simulation results of benchmark programs." | |||

====The Flight Control System – MISD Example for fault tolerance==== | ====The Flight Control System – MISD Example for fault tolerance==== | ||

| Line 150: | Line 219: | ||

There are redundant flight control computers present in the flight control system. If one of the flight-control computers crashes, gets damaged or is affected by electromagnetic pulses, the other computer can overrule the faulty one and hence the flight of the aircraft is unharmed. [[Image:fig13.png|thumb|right|250px|Figure 13: Architecture of triple redundant 777 primary flight computer [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-7 6]]]The number of redundant flight control computers is generally more than two, so that any computer whose results disagree with the others is ruled out to be faulty and is either ignored or rebooted.[[Image:fig14.png|thumb|right|250px|Figure 14: PFC with instruction and data streams]] | There are redundant flight control computers present in the flight control system. If one of the flight-control computers crashes, gets damaged or is affected by electromagnetic pulses, the other computer can overrule the faulty one and hence the flight of the aircraft is unharmed. [[Image:fig13.png|thumb|right|250px|Figure 13: Architecture of triple redundant 777 primary flight computer [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-7 6]]]The number of redundant flight control computers is generally more than two, so that any computer whose results disagree with the others is ruled out to be faulty and is either ignored or rebooted.[[Image:fig14.png|thumb|right|250px|Figure 14: PFC with instruction and data streams]] | ||

====Multiple Processors Implementation in Boeing 777<ref>http://www. | ====Multiple Processors Implementation in Boeing 777<ref>http://www.coe.pku.edu.cn/tpic/20119263710178.pdf Y.C. (Bob) Yeh, Boeing Commercial Airplane Group, "Triple-Triple Redundant 777 Primary Flight Computer" </ref>==== | ||

In modern computers, the redundant flight control computations are carried out by multiprocessor systems. The triple redundant 777 primary flight computer, has the architecture as shown in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Fig13.png Figure 13]. | In modern computers, the redundant flight control computations are carried out by multiprocessor systems. The triple redundant 777 primary flight computer, has the architecture as shown in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Fig13.png Figure 13]. | ||

| Line 164: | Line 233: | ||

=='''Glossary'''== | =='''Glossary'''== | ||

*'''CU''': Control Unit | *'''CU''': Control Unit | ||

| Line 173: | Line 241: | ||

*'''FPGA''': Field Programmable Gate Array | *'''FPGA''': Field Programmable Gate Array | ||

*'''ILP''': Instruction Level Parallelism | *'''ILP''': Instruction Level Parallelism | ||

| Line 189: | Line 253: | ||

*'''PFC''': Primary Flight Computer | *'''PFC''': Primary Flight Computer | ||

*'''SIMD''': Single Instruction Multiple Data | *'''SIMD''': Single Instruction Multiple Data | ||

Latest revision as of 06:15, 14 February 2013

Multiple Instruction Single Data architecture

Overview

This wiki article explores the Multiple Instruction Single Data (MISD) class of parallel architecture as classified by Flynn’s Taxonomy. The article starts with a description of Flynn’s Taxonomy and its classification, followed by the MISD architecture and its realization. It also covers researchers' published work on MISD and ends with implementations and examples of the architecture.

[Note: This article copies and updates a previous wiki article, CSC/ECE 506 Spring 2012/1c dm.]

Parallel Architecture<ref>http://en.wikipedia.org/wiki/Parallel_computing</ref>

"Parallel operation has many different forms within a computer system. Using a model based on the different streams used in the computation process, we represent some of the different kinds of parallelism available. A stream is a sequence of objects such as data, or of actions such as instructions. Each stream is independent of all other streams, and each element of a stream can consist of one or more objects or actions. We thus have four combinations that describe most familiar parallel architectures:

(1) SISD: single instruction, single data stream. This is the traditional uniprocessor

(2) SIMD: single instruction, multiple data stream. This includes vector processors as well as massively parallel processors

(3) MISD: multiple instruction, single data stream. These are typically systolic arrays

(4) MIMD: multiple instruction, multiple data stream. This includes traditional multiprocessors as well as the newer networks of workstations

Each of these combinations characterizes a class of architectures and a corresponding type of parallelism." (Source: Flynn's Taxonomy)

Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>

Flynn defined the taxonomy of parallel computers [Flynn, 1972] based on the number of instruction streams and data streams.

• An instruction stream is a sequence of instructions followed from a single program counter.

• A data stream is the sequence of data, located at addresses in memory, on which the instructions operate.

A control unit fetches instructions from a single program counter, decodes them, and issues them to the processing element. The processing element is assumed to be a functional unit. Instructions and data are both supplied from memory.

The four classifications defined by Flynn, based upon the number of concurrent instruction (or control) and data streams available in the architecture, are SISD, SIMD, MISD and MIMD.<ref>https://computing.llnl.gov/tutorials/parallel_comp/#Flynn</ref>
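The classification rule can be written down directly. The following is a minimal sketch in Python; the function name `classify` and its parameters are hypothetical and chosen only for illustration, and the only rule assumed is the one stated above (one stream means "single", more than one means "multiple").

```python
def classify(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn class name for the given numbers of concurrent streams."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("each stream count must be at least 1")
    # "S" for a single stream, "M" for multiple streams.
    instr = "S" if instruction_streams == 1 else "M"
    data = "S" if data_streams == 1 else "M"
    return f"{instr}I{data}D"


if __name__ == "__main__":
    # Three instruction streams operating on one data stream.
    print(classify(3, 1))
```

Under this sketch, a machine with several instruction streams and exactly one data stream falls into the MISD class discussed in the rest of this article.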

Multiple Instructions Single Data (MISD)

MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data.

What MISD architecture actually means

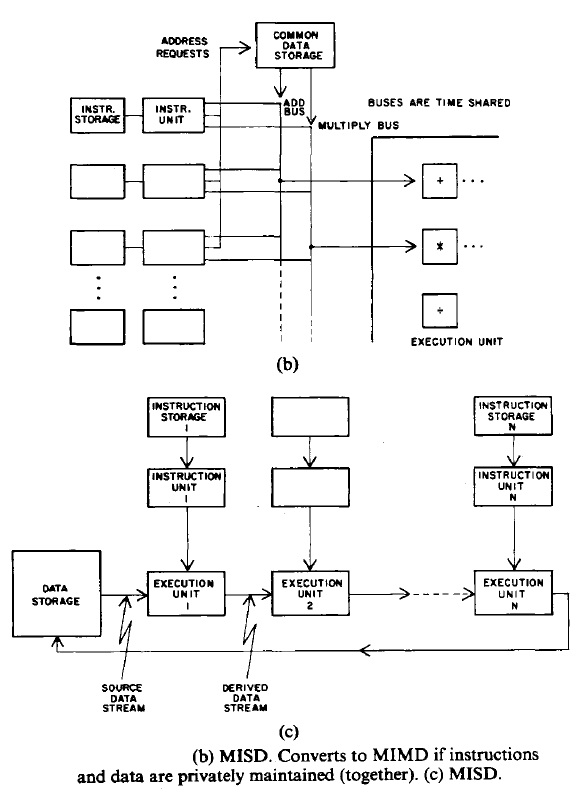

As explained in detail by Flynn <ref>http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1447203&url=http%3A%2F%2Fieeexplore.ieee.org%2Fiel5%2F5%2F31091%2F01447203 Flynn's paper</ref>: "It basically employs the high bandwidth dedicated execution unit. This unit is then shared by n virtual machines operating on program sequences independent of one another. Each virtual machine has access to the execution hardware once per cycle. Each virtual machine, of course, has its own private instruction memory and interaction between instruction streams occurs only via the common data memory."

"Presumably, if there are N instruction units then the bandwidth of the common data storage must be N times greater than the individual instruction storage. This requirement could be substantially reduced by use of a modified version of Tomasulo’s tag-forwarding algorithm and a separate common forwarding bus."

"Another version of this would force forwarding of operands. Thus the data stream presented to Execution Unit 2 is the resultant of Execution Unit 1 operating its instruction on the source data stream. The instruction that any unit performs may be fixed (specialized such that the interconnection (or setup) of units must be flexible), semifixed (such that the function of any unit is fixed for one pass of a data file) or variable (so that the stream of instructions operates at any point on the single data stream). Under such an arrangement, only the first execution unit sees the source data stream and while it is processing the ith operand the ith execution unit is processing the ith derivation of the first operand of the source stream."
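The forwarding arrangement described in the last quotation can be illustrated with a small cycle-by-cycle simulation. This is only a sketch under stated assumptions: the function `run_forwarding_pipeline`, the latch model and the example operations are hypothetical and are not taken from Flynn's paper. Each execution unit applies its own fixed operation, only the first unit reads the source stream, and every other unit reads the result forwarded by its predecessor.

```python
from typing import Callable, Iterable, List, Optional

Operation = Callable[[int], int]


def run_forwarding_pipeline(units: List[Operation], source: Iterable[int]) -> List[int]:
    """Simulate execution units chained by operand forwarding, one cycle at a time."""
    pending = list(source)
    if not units:
        return pending
    # latches[i] holds the result unit i produced in the previous cycle.
    latches: List[Optional[int]] = [None] * len(units)
    results: List[int] = []
    # The last operand leaves the last unit after len(pending) + len(units) - 1 cycles.
    for cycle in range(len(pending) + len(units) - 1):
        # Update from the last unit backwards so each unit reads the value
        # its predecessor produced in the previous cycle.
        for i in range(len(units) - 1, 0, -1):
            forwarded = latches[i - 1]
            latches[i] = units[i](forwarded) if forwarded is not None else None
        # Only the first unit sees the source data stream.
        latches[0] = units[0](pending[cycle]) if cycle < len(pending) else None
        if latches[-1] is not None:
            results.append(latches[-1])
    return results


if __name__ == "__main__":
    # Three units, each with a different (hypothetical) instruction.
    units = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
    print(run_forwarding_pipeline(units, [1, 2, 3]))
```

In this sketch the units are "fixed" in Flynn's sense: the operation of each unit never changes. A "semifixed" variant would swap the list of operations between passes over the data.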

Realizing MISD<ref>http://public.callutheran.edu/~reinhart/CSC521MSCS/Week5/FlynnTaxonomies.pdf</ref>

"Although it is easy to both envision and design MISD processors, there has been little interest in this type of parallel architecture. The reason, so far anyway, is that no ready programming constructs easily map programs into the MISD organization.

Abstractly, the MISD is a pipeline of multiple independently executing functional units operating on a single stream of data, forwarding results from one functional unit to the next. On the microarchitecture level, this is exactly what the vector processor does. However, in the vector pipeline the operations are simply fragments of an assembly-level operation, as distinct from being a complete operation in themselves.

Surprisingly, some of the earliest attempts at computers in the 1940s could be seen as the MISD concept. They used plug boards for programs, where data in the form of a punched card was introduced into the first stage of a multistage processor. A sequential series of actions was taken in which the intermediate results were forwarded from stage to stage until at the final stage a result was punched into a new card."

Implementations of MISD architecture

Currently the applications for MISD are limited, and commercial implementations are scarce due to cost factors. However, it is a research interest in many fields. Described below are areas where MISD architecture has been implemented.

VLSI : A recursive MISD architecture for pattern matching<ref>http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1308207&url=http%3A%2F%2Fieeexplore.ieee.org%2Fiel5%2F92%2F29030%2F01308207.pdf%3Farnumber%3D1308207</ref>

"Many applications require searching for multiple patterns in large data streams for which there is no preprocessed index to rely on for efficient lookups. A multiple instruction stream-single data stream (MISD) VLSI architecture that is based on a recursive divide and conquer approach to pattern matching is proposed. This architecture allows searching for multiple patterns simultaneously."

The hardware in the above implementation is suitable for searching an online data stream using queries expressed in many languages. It is primarily designed for search problems where one wants to find rapidly the occurrences of one or more patterns in a large database. This means that once a query is supplied by a user, both the delay before the query is evaluated and the time needed to search the database should be as small as possible. An important factor in reaching the former goal is that the architecture is able to evaluate queries in parallel whenever possible.
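The idea of searching one data stream for several patterns at once can be sketched in software. The example below is an assumption-laden illustration, not the recursive divide-and-conquer design of the cited paper: it uses a naive sliding-window comparison, and the names `PatternMatcher` and `search_stream` are hypothetical. Each matcher plays the role of one processing element with its own "program" (its pattern), and every matcher sees each symbol of the single stream exactly once.

```python
from typing import Dict, Iterable, List


class PatternMatcher:
    """One processing element: holds its own pattern and a sliding window."""

    def __init__(self, pattern: str) -> None:
        if not pattern:
            raise ValueError("pattern must not be empty")
        self.pattern = pattern
        self.window = ""
        self.hits: List[int] = []

    def feed(self, position: int, symbol: str) -> None:
        # Keep only the most recent len(pattern) symbols of the stream.
        self.window = (self.window + symbol)[-len(self.pattern):]
        if self.window == self.pattern:
            # Record the position at which the match starts.
            self.hits.append(position - len(self.pattern) + 1)


def search_stream(patterns: List[str], stream: Iterable[str]) -> Dict[str, List[int]]:
    """Feed a single symbol stream to all matchers and collect their hits."""
    matchers = [PatternMatcher(p) for p in patterns]
    for position, symbol in enumerate(stream):
        # The same symbol is presented to every matcher.
        for matcher in matchers:
            matcher.feed(position, symbol)
    return {m.pattern: m.hits for m in matchers}


if __name__ == "__main__":
    print(search_stream(["ab", "bc"], "abcab"))
```

In hardware the inner loop over matchers would run in parallel; the sequential loop here only models that every unit receives the same data item.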

Acoustics, Speech and Signal Processing<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=1164924&contentType=Journals+%26+Magazines&queryText%3DA+multimicroprocessor+architecture+for+real-time+computation+of+a+class+of+DFT+algorithms</ref>

"Design of a multi microprocessor architecture for real-time computation of a class of DFT algorithms. The applications considered require the computation of a subset of an N-input Fourier transform under the assumption of a serial data input. The suitability of two different algorithms and the corresponding parallel architectures [is compared:] a SIMD (single instruction multiple data) machine [and] a MISD (multiple instruction single data) machine. The results indicate that the latter is better suited for the targeted applications. An actual operational MISD computer, implemented with off-the-shelf microprocessors intended for one of the targeted applications, is described."

For some applications, an MISD architecture is more efficient than the generally accepted SIMD computer.
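To see why a serial input and a small subset of output bins suit an MISD-style organization, consider the following sketch. It assumes direct evaluation of the DFT sum, which may differ from the algorithm used in the cited paper, and the names `BinUnit` and `dft_subset` are hypothetical. Each unit is responsible for one frequency bin k and accumulates x[n]·exp(-2πikn/N) as each sample arrives, so all units consume the same sample at the same time but apply different twiddle factors.

```python
import cmath
from typing import Dict, List, Sequence


class BinUnit:
    """One processing element that accumulates a single DFT bin."""

    def __init__(self, k: int, n_points: int) -> None:
        self.k = k
        self.n_points = n_points
        self.acc = 0j

    def consume(self, n: int, sample: complex) -> None:
        # Multiply the incoming sample by this unit's own twiddle factor.
        twiddle = cmath.exp(-2j * cmath.pi * self.k * n / self.n_points)
        self.acc += sample * twiddle


def dft_subset(samples: Sequence[complex], bins: List[int]) -> Dict[int, complex]:
    """Compute only the requested DFT bins from a serially delivered input."""
    units = [BinUnit(k, len(samples)) for k in bins]
    for n, sample in enumerate(samples):
        # Every unit receives the same sample as it arrives.
        for unit in units:
            unit.consume(n, sample)
    return {unit.k: unit.acc for unit in units}


if __name__ == "__main__":
    # A constant signal has all of its energy in bin 0.
    print(dft_subset([1, 1, 1, 1], [0, 1]))
```

Because each bin costs N multiply-accumulate steps regardless of how many other bins are computed, this approach is attractive only when the required subset is small relative to N.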

Communications and Networking<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=592193&contentType=Conference+Publications&queryText%3DHorizontal+architecture+for+high+performance+parallel+communication+systems</ref>

"Horizontal architecture for high performance parallel communication systems are in use with the advent of high-speed networking technologies such as fiber optics. Now, the speed at which a processor can execute a communication protocol is the most significant limiting factor in protocol performance. The question is, how can a multimedia application take best advantage of these high-speed networks? By adopting an optimized implementation approach that allows efficient use of the bandwidth available on today's high-speed networks. This means processing data as soon as it arrives from the network. This model increases the processing speed by using a multiple-instruction, single-data (MISD) scheme."

The technique applied an MISD scheme to the OSI protocol to improve the performance of the communication subsystem. Early prototyping shows a performance improvement of up to 60% over the conventional approach.

Systems, Circuits and Devices<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=5681487&contentType=Conference+Publications&queryText%3DTowards+an+embedded+biologically-inspired+machine+vision+processor</ref>

"An embedded biologically-inspired machine vision processor that attempts to capture aspects of the computational architecture of the brain. Meanwhile, the increasing ubiquity of inexpensive cameras in a wide array of embedded devices presents an enormous opportunity for the deployment of embedded machine vision systems. As a first step towards an embedded implementation, considering the main requirements for the design of an embedded processor for biologically-inspired object recognition, a multiple instruction, single data (MISD) pipeline implementation offers good performance per unit silicon area and power dissipation in comparison to traditional CPU and GPU implementations."

Information Technology Computing<ref>http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=844198&contentType=Conference+Publications&queryText%3DEmbedded+zero+wavelet+coefficient+coding+method+for+FPGA+implementation+of+video+codec+in+real-time+systems</ref>

Embedded zero wavelet coefficient coding method for FPGA implementation of video codec in real-time systems.

"The MISD architecture is proposed as a solution of the video coding problem. The architecture is characterised by high speed execution, simplicity and performance. The important advantage of MISD architectures in real time video systems is enlarged by the fact that fast computing units have been designed that can be re-programmed."

Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>

Fault tolerant systems are designed to handle possible failures in software, hardware or interfaces. Hardware faults include hard disk failures, input or output device failures, etc. Software and interface faults include driver failures, operator errors, installation of unexpected software, etc. Hardware faults can be detected and identified by implementing redundant hardware and multiple backups. Software faults can be tolerated by removing program errors, by executing the software redundantly, or by implementing small programs that take over the tasks that crash or generate errors.

MISD image processing systems<ref>Pattern Recognition and Image Analysis, v 6, n 2, p 414-15, April-June 1996</ref>

"Frame memory assignment is one of the tasks arising in the design of problem-oriented image processing systems. The hardware of most problem-oriented image processing systems with MISD architecture known to date permits operation in a mode where the images being processed have a format that is a fraction of a frame memory page."

Supercomputing machine based on MISD architecture<ref>ICS 88. Third International Conference on Supercomputing. Proceedings, Supercomputing '88, p 377-82 vol.3, 1988</ref>

"Today's successful supercomputers have mostly been developed in the restricted area of numerical computations. Conventional pipelining is adopted to realize high-speed vector processing. From the point of evolution of applications requiring more computational power, however, coming supercomputers should be used even for symbolic computations. The pipelined supercomputing of function-level programs dealing with list-structured data can be performed on the general-purpose pipeline designed to be an MISD architecture. Effectiveness of the approach is demonstrated by the simulation results of benchmark programs."

The Flight Control System – MISD Example for fault tolerance

A Fly-By-Wire system replaces manual flight control with an electronic control interface. The movements of the flight controls in the cockpit are converted to electronic signals and transmitted to the actuators by wires. The control computers use feedback from the sensors to compute and control the movement of the actuators to provide the expected response. These computers also stabilize the aircraft and perform other tasks without the knowledge of the pilot. Flight control systems must meet extremely high levels of accuracy and functional integrity.

There are redundant flight control computers present in the flight control system. If one of the flight-control computers crashes, gets damaged or is affected by electromagnetic pulses, the other computer can overrule the faulty one and hence the flight of the aircraft is unharmed.

The number of redundant flight control computers is generally more than two, so that any computer whose results disagree with the others is judged to be faulty and is either ignored or rebooted.

Multiple Processors Implementation in Boeing 777<ref>http://www.coe.pku.edu.cn/tpic/20119263710178.pdf Y.C. (Bob) Yeh, Boeing Commercial Airplane Group, "Triple-Triple Redundant 777 Primary Flight Computer" </ref>

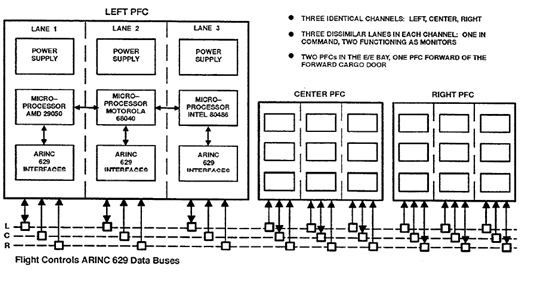

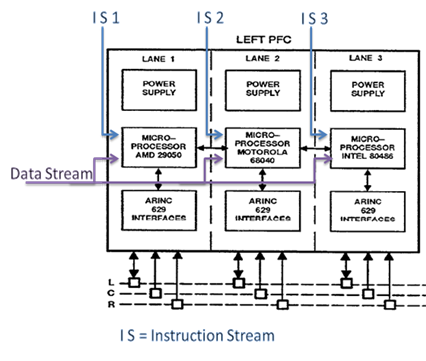

In modern computers, the redundant flight control computations are carried out by multiprocessor systems. The triple redundant 777 primary flight computer, has the architecture as shown in Figure 13.

The system has three primary flight control computers, each of them having three lanes with different processors. The flight control program is compiled for each of the processors which get the input data from the same data bus but drive the output on their individual control bus. Thus each processor executes different instructions but they process the same data. Thus, it is the best suited example of Multiple Instruction Single Data (MISD) architecture.

The three processors selected for the flight control system of the Boeing 777 were the Intel 80486, Motorola 68040 and AMD 29050. The dissimilar processors lead to dissimilar interface hardware circuits and compilers. Each lane of the flight control computer is data synchronized with the other lanes so that all of the lanes read the same frame of data from the flight sensors. As the outputs of each lane can be different, the median value of the outputs is used to select the output of the lane to be considered. The lane whose median value select hardware is selected is said to be in “command mode”, whereas the other lanes are said to be in “monitoring mode”. The command lane receives the data from the other Primary Flight Computer (PFC) lanes and performs a median select of the outputs. This provides a fault blocking mechanism before the fault detection and identification by the cross-lane monitoring system. Thus, the MISD based multi computer architecture is capable of detecting generic errors in compilers or in complex hardware devices, providing assurance beyond reasonable doubt of the dependability of the Fly-By-Wire system.

The above mentioned system clearly has individual instruction streams: because the architecture of each processor is different, each has a different instruction set and therefore a different instruction stream. These processors have frame synchronized input data, which means they work on the same set of data, fed from a single data stream. Thus the flight control system can be classified under MISD architecture.
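The median select step can be illustrated with a short sketch. It is not the Boeing implementation: the function names `median_select` and `flag_disagreeing_lanes`, the `tolerance` parameter and the sample values are all hypothetical. The sketch assumes three lanes that each compute one numeric output from the same frame of input data.

```python
from statistics import median
from typing import List, Sequence


def median_select(lane_outputs: Sequence[float]) -> float:
    """Return the median of the lane outputs, which blocks a single outlier."""
    return median(lane_outputs)


def flag_disagreeing_lanes(lane_outputs: Sequence[float], tolerance: float) -> List[int]:
    """Return indices of lanes whose output differs from the median by more than tolerance."""
    selected = median(lane_outputs)
    return [i for i, value in enumerate(lane_outputs) if abs(value - selected) > tolerance]


if __name__ == "__main__":
    # Hypothetical actuator commands from three dissimilar lanes; lane 2 is faulty.
    outputs = [10.0, 10.2, 55.0]
    print(median_select(outputs))
    print(flag_disagreeing_lanes(outputs, tolerance=0.5))
```

With three lanes, a single faulty output can never be the median, so the selected value always comes from a lane that agrees with at least one other lane. This is the fault blocking property described above; identifying which lane failed is left to a separate monitoring step, modeled here by `flag_disagreeing_lanes`.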

References

<references/>

Glossary

- CU: Control Unit

- DS: Data Stream

- Fly-By-Wire: System that replaces the conventional manual flight controls of an aircraft with an electronic interface

- FPGA: Field Programmable Gate Array

- ILP: Instruction Level Parallelism

- IS: Instruction Stream

- MIMD: Multiple Instruction Multiple Data

- MISD: Multiple Instruction Single Data

- PE: Processing Element

- PFC: Primary Flight Computer

- SIMD: Single Instruction Multiple Data

- SISD: Single Instruction Single Data

- VLSI: Very Large Scale Integration