CSC/ECE 506 Spring 2013/1b dj

| (81 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= The Supercomputer Landscape Today = | |||

= | == Introduction == | ||

<p> | <p>[http://dictionary.reference.com/browse/supercomputer Supercomputers] are extremely capable computers and can solve complex tasks in a relatively small amount of time. Their ability to massively outperform typical home and office computers is made possible normally either by an abundance of processor cores or smaller computers working in conjunction together. Supercomputers are generally specialized computers that tend to be very expensive and used for computations where large amounts of numerical processing is required. They are used in scientific, military, graphics applications and for other number or data intensive computations <ref>http://dictionary.reference.com/browse/supercomputer Definition of supercomputer</ref>, <ref>http://www.webopedia.com/TERM/S/supercomputer.html Definition of supercomputer</ref>.</p> | ||

<p>As technology has advanced, supercomputers have continued to be surpassed by one another. This has lead to a drive for engineers and scientists to design and create supercomputers that continue to outperform others<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. This article talks about the landscape of today's modern supercomputers by describing the benchmarks and characteristics of today's top supercomputers, and compares the different supercomputer architecture approaches. </p> | |||

<p> | <p>For the history and development of supercomputers, please refer to these [http://wiki.expertiza.ncsu.edu/index.php/Main_Page wiki articles].</p> | ||

= Characteristics = | == Benchmarks == | ||

<p>Supercomputers are originally compared qualitatively using floating point [http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html FLOPS] - operations per second, or KiloFLOPS – thousands of FLOPS. As the computing power of the supercomputers increases, FLOPS can no longer satisfy the measurement system, and MegaFLOPS, GigaFLOPS, or even teraFLOPS starts to become the primary measurement of the system. For example, Titan, the world’s fastest supercomputer as November 2012, can go up to 17590.0 teraFLOPS per second. In addition, a software package called [http://www.top500.org/project/linpack LINPACK] is a standard approach to testing or benchmarking supercomputers by solving a dense system of linear equations using the Gauss method. <ref>http://www.top500.org/project/linpack LINPACK defined</ref>. However, LINPACK benchmarking software is not only used to benchmark supercomputers, it can also be used to benchmark a typical user computer <ref>http://www.xtremesystems.org/forums/showthread.php?197835-IntelBurnTest-The-new-stress-testing-program Intel Benchmark Software</ref>.</p> | |||

<p>Furthermore, starting in 1993, [http://www.TOP500.org TOP500.org] began collecting performance data on computers and update their list every six months <ref>http://top500.org/faq/what_top500 What is the TOP500</ref>. This appears to be an excellent online source of information that collects benchmark data submitted by users of computers and readily provides performance statistics by Vendor, Application, Architecture and nine (9) other areas <ref name="t500stats">http://i.top500.org/stats TOP500 Stats</ref>. This article, in order to be vendor neutral, is providing the comparison by architecture. However, there are many ways to compare supercomputers and the user interface at [http://www.TOP500.org TOP500.org] makes these comparisons easy to do.</p> | |||

== Characteristics == | |||

<p>Based on the [http://www.TOP500.org TOP500.org] data as of November 2012, today’s supercomputers share the following key characteristics:</p> | <p>Based on the [http://www.TOP500.org TOP500.org] data as of November 2012, today’s supercomputers share the following key characteristics:</p> | ||

*'''High Processing Speed''' is the primary feature of supercomputer. As the technology grows, more and more calculation-intensive tasks such as weather forecasting and molecular modeling needed to be performed and resolved in a | *'''High Processing Speed''' is the primary feature of supercomputer. As the technology grows, more and more calculation-intensive tasks such as weather forecasting and molecular modeling needed to be performed and resolved in a small time frame. These tasks used to take years to complete, but a supercomputer can now solve the same problem in minutes. As November 2012, the world’s #1 supercomputer, [http://top500.org/system/177975 Cray Titan], can handle more than 20,000 trillion calculation per second. It is targeting to conduct [http://news.cnet.com/8301-11386_3-57541791-76/titan-supercomputer-debuts-for-open-scientific-research/ researches] such as material science code, climate change, and biofuels to bring tremendous real world societal benefits. | ||

*'''Massive Parallel System''' can be used to describe the architecture of the supercomputer. By the end of 20th century, connecting thousands of processors with fast connections started to become the main approach for building supercomputers. Today, there are two main approaches to build this massive parallel system – the grid computing and computer cluster approaches, which will be described in the | *'''Massive Parallel System''' can be used to describe the architecture of the supercomputer. By the end of 20th century, connecting thousands of processors with fast connections started to become the main approach for building supercomputers. Today, there are two main approaches to build this massive parallel system – the grid computing and computer cluster approaches, which will be described in the later sections. | ||

*'''Large Power Consumption''' is one of the major problems for running a supercomputer. Each supercomputer can contain thousands of processors and requires a tremendous amount of power to run. For example, [http://en.wikipedia.org/wiki/K_computer K computer], a Japanese supercomputer on [http://www.TOP500.org TOP500.org] reported the highest total power consumption of 9.89 MW in 2011, which is the equivalent of the total power consumption for 10,000 suburban homes in one year. | *'''Large Power Consumption''' is one of the major problems for running a supercomputer. Each supercomputer can contain thousands of processors and requires a tremendous amount of power to run. For example, [http://en.wikipedia.org/wiki/K_computer K computer], a Japanese supercomputer on [http://www.TOP500.org TOP500.org] reported the highest total power consumption of 9.89 MW in 2011, which is the equivalent of the total power consumption for 10,000 suburban homes in one year. | ||

*'''Heat Management''' is another major problem for supercomputer and affect the computer system in various ways. For instances, it can | *'''Heat Management''' is another major problem for supercomputer and affect the computer system in various ways. For instances, it can cause processors delay, system shutdown, or even equipments burn-down. In order to reduce the heat, various cooling technologies can be applied, such as liquid cooling, air cooling, etc. An interesting example was the [http://www.extremetech.com/extreme/131259-ibm-deploys-hot-water-cooled-supercomputer dubbed Aquasar], a hot-water cooling system developed by IBM that uses boiled water to cool down the system and can consume 40 percent less energy than a comparable air-cooling system. | ||

*'''High Cost''' is | *'''High Cost''' is last major issue of supercomputer. For example, the initial upgrade of Titan supercomputer was $60 million, and the estimated total cost for the system was $97 million. | ||

<p></p> | |||

== Supercomputers Architecture == | |||

<p>Traditional supercomputers of today are composed of three (3) types of parallel processing architectures. These architectures are Massively Parallel Processing or MPP, Cluster, and Constellation <ref name="t500stats" />. A non-traditional, or disruptive approach, to supercomputers is [http://searchdatacenter.techtarget.com/definition/grid-computing Grid Computing]<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>.</p> | |||

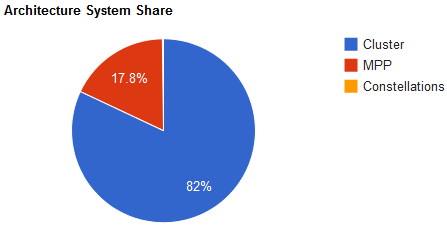

<p>The graphic generated at [http://www.TOP500.org/ TOP500.org] shows the distribution of supercomputers by architecture. The Massively Parallel Processing section of the graph makes up 17.8% of the total number of current supercomputers. The bulk of supercomputers is made up of clustered systems, which Constellation architectures make up a fraction of a percent of supercomputers. Each architecture is further discussed, below.</p> | |||

<p> | |||

[[File:2011nov-top500-architecture.png|thumb|center|upright|500px|<b>Figure 1:</b>Current distribution of architecture types among the top 500 supercomputers. Image from i.TOP500.org/stats]] | |||

[[File:2011nov-top500-architecture.png|thumb| | |||

<p> | === Massively Parallel Processing (MPP) === | ||

[[File:MPP.png|thumb|right|upright|325px|<b>Figure 2:</b>Massively Parallel Processing Architecture. Image from [http://encyclopedia2.thefreedictionary.com/MPP MPP]]] | |||

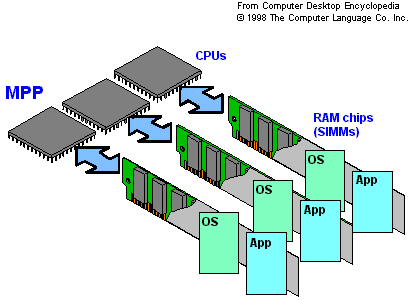

<p>[http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html Massively Parallel Processing] or MPP supercomputers are made up of hundreds of computing nodes and process data in a coordinated fashion <ref name="ttmppdef">http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html</ref>. Each node of the MPP generally has its own memory and operating system and can be made up of nodes that have multiple processors and/or multiple cores <ref name="ttmppdef" />.</p> | |||

<p>The picture on the right shows a simple MPP architecture in which each CPU is a subsystem with its own memory and copy of the operating system and application. Each such subsystem communicates with the other subsystems via a high-speed interconnect. Some of the advantages of MPP architecture are that MPP architecture can be efficiently and fully used in solving problems that can be broken down into parallel pieces which can be processed simultaneously. Example of such problems can be mathematical and simulation problems. Apart from research work MPP architecture can also be used in parallel data query or searches. For example, if we have an MPP system with 26 CPU’s we can use this to perform a sequential search, each processor searching one letter of the alphabet.<ref>http://encyclopedia2.thefreedictionary.com/MPP</ref></p> | |||

<p>Disadvantages of MPP architecture includes absence of general memory which reduces speed of an inter-processor exchange as there is no way to store general data used by different processor. Secondly local memory and storage can result in bottlenecks as each processor can only use their individual memory. Full system resource use might not be possible with MPP architecture as each subsystem works individually. MPP architectures are costly to build as it requires separate memory, storage and CPU for each subsystem. This can be achieved in cluster architecture which is implemented on load balancing. Cluster architecture is explained in detailed in the next section.</p> | |||

<p>The total processing power of the top 500 MPP supercomputers is 23,823.97 TFLOPS. The trend of MPP supercomputers, like cluster based supercomputers, has leveled off<ref name="t500stats" />.</p> | |||

=== Cluster === | |||

<p> In cluster architecture group of computers are connected together through networking and they appear as a single system to the outside world. All the processing in this architecture is carried out using load balancing and resource sharing which is done completely on the back ground. Invented by Digital Equipment Corporation in the 1980's, clusters of computers form the largest number of supercomputers available today <ref>http://books.google.com/books?id=Hd_JlxD7x3oC&pg=PA90&lpg=PA90&dq=what+is+a+constellation+in+parallel+computing?&source=bl&ots=Rf9nxSqOgL&sig=-xleas5wXvNpvkgYYxguvP1tSLA&hl=en&sa=X&ei=aDcnT-XRNqHX0QHymbjrAg&ved=0CGMQ6AEwBw#v=onepage&q=what%20is%20a%20constellation%20in%20parallel%20computing%3F&f=false Applied Parallel Computing</ref>, <ref name="t500stats" />.</p> | |||

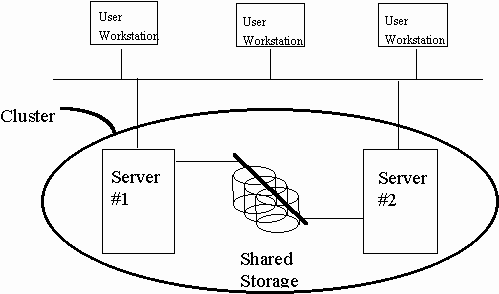

<p>[http://www.TOP500.org TOP500.org] data as of November 2011 shows that Cluster computing makes up the largest subset of supercomputers at 82 percent.</p> | |||

[[File:ClustersSharedStorage.png|thumb|right|upright|325px|<b>Figure 3:</b>Shared storage in cluster architecture. Image from [http://www.unix.org/whitepapers/Image11.gif Shared Storage]]] | |||

<p>In cluster architecture computers are harnessed together and they work independent of the application interacting with it. In fact the application or user running on the architecture sees them as a single resource.</p> | |||

<p> | |||

<p> | <p>There are three main components to cluster architecture namely interconnect technology, storage and memory.</p> | ||

<p>Interconnect technology is responsible for coordinating the work of the nodes and for effecting failover procedures in the case of a subsystem failure. Interconnect technology is responsible for making the cluster appear to be a monolithic system and is also the basis for system management tools <ref>http://www.unix.org/whitepapers/cluster.html</ref>. The main components used for this technology are mainly network or dedicated buses specifically used for achieving this interconnect reliability. </p> | |||

<p>The | <p>Secondly storage in cluster architecture can be shared or distributed. The picture on the right shows an example of shared storage architecture for clusters. As you can see in here all the computers use the same storage. One of the benefit of using shared storage is it has less overhead of syncing different storages. Additionally, shared storage makes sense if the applications running on it have large shared databases. In distributed storage each node in cluster has its own storage. Information sharing between nodes is carried out using message passing on network. Additional work is needed to keep all the storage in sync in case of failover.</p> | ||

<p>Lastly memory in clusters also comes in shared or distributed flavors. Most commonly in clusters distributed memory is used but in certain cases shared memory can also be used depending on the final use of the system.</p> | |||

<p> | <p>Some of the benefits of using cluster architecture are to produce higher performance, higher availability, greater scalability and lower operating costs. Cluster architectures are famous for providing continuous and uninterrupted service. This is achieved using redundancy. </p> | ||

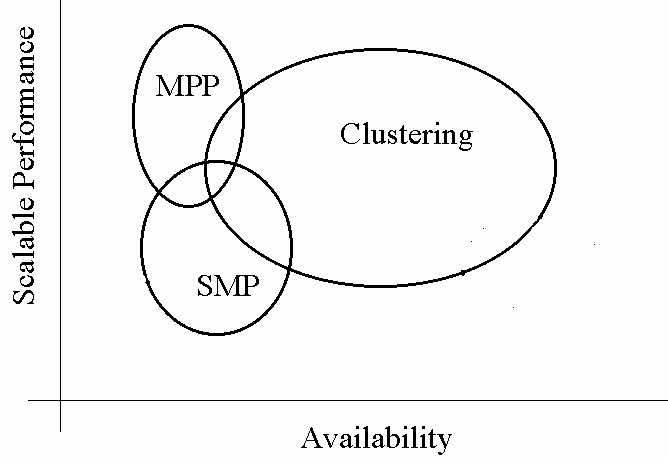

<p> | <p>Now that we know how cluster architecture works let’s see how this architecture differs from MPP. The picture below compares different architectures and as you can see each of the approaches aims to improve system performance and or system reliability. But cluster architecture provides both broad scalable performance and high availability. </p> | ||

[[File:Cluster_MPP_&_SMP.png|thumb|center|upright|500px|<b>Figure 4:</b>Different supercomputer architecture. Image from [http://www.unix.org/whitepapers/Image11.gif Compare]]] | |||

<p>The total processing power of the top 500 | <p>SMP in the above picture stands for symmetric multiprocessing (SMP) found in today's computer systems or laptops. The total processing power of the top 500 cluster supercomputers is reported at 50,192.82 TFLOPS and the trend for growth of cluster based supercomputers has leveled off<ref name="t500stats" />.</p> | ||

== Constellation == | === Constellation === | ||

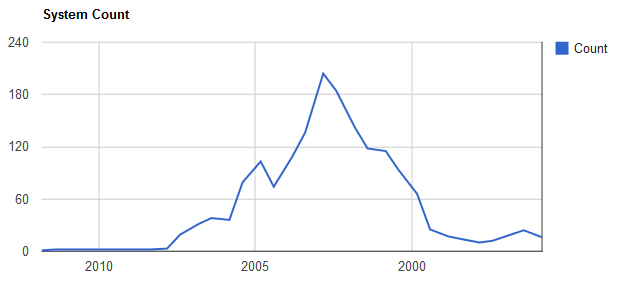

[[File:2011nov-top500-constellation-count.png|thumb|right|upright|350px|Number of constellation systems among the top 500 supercomputers since before 2000. Image from i.TOP500.org/stats]] | [[File:2011nov-top500-constellation-count.png|thumb|right|upright|350px|<b>Figure 5:</b>Number of constellation systems among the top 500 supercomputers since before 2000. Image from i.TOP500.org/stats]] | ||

<p>A [http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf Constellation] is a cluster of supercomputers <ref>http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf</ref>. [http://www.TOP500.org TOP500.org] shows only one constellation supercomputer as of November 2011. The graph shows rapid growth and decline in the first 5 years of the 21st century.</p> | <p>A [http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf Constellation] is a cluster of supercomputers <ref>http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf</ref>. [http://www.TOP500.org TOP500.org] shows only one constellation supercomputer as of November 2011. The graph shows rapid growth and decline in the first 5 years of the 21st century.</p> | ||

| Line 53: | Line 72: | ||

<p>The total processing power of the constellation supercomputer is: 52.84 TFLOPS<ref name="t500stats" />.</p> | <p>The total processing power of the constellation supercomputer is: 52.84 TFLOPS<ref name="t500stats" />.</p> | ||

== Grid Computing == | === Grid Computing === | ||

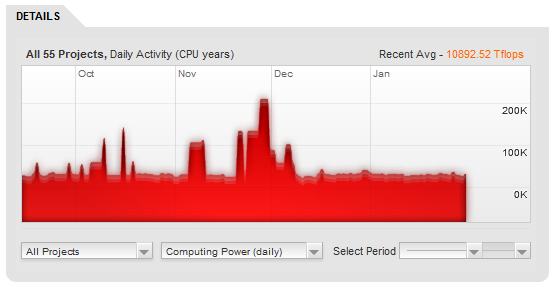

[[File:20120130-grid-computing-graph.png|thumb|right|upright|350px|Daily computing power (TFLOPS) of grid supercomputers over a several month period. Image from http://www.gridrepublic.org/index.php?page=stats]] | [[File:20120130-grid-computing-graph.png|thumb|right|upright|350px|<b>Figure 6:</b>Daily computing power (TFLOPS) of grid supercomputers over a several month period. Image from http://www.gridrepublic.org/index.php?page=stats]] | ||

<p>Grid Computing is defined as applying many networked computers to solving a single problem simultaneously<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>. It is also defined as a network of computers used by a single company or organization to solve a problem<ref>http://boinc.berkeley.edu/trac/wiki/DesktopGrid</ref>. Yet another definition as implemented by GridRepublic.org creates a supercomputing grid by using volunteer computers from across the globe<ref>http://www.gridrepublic.org/index.php?page=about</ref>. All of these definitions have something in common, and that is using parallel processing to attack a problem that can be broken up into many pieces.</p> | <p>Grid Computing is defined as applying many networked computers to solving a single problem simultaneously<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>. It is also defined as a network of computers used by a single company or organization to solve a problem<ref>http://boinc.berkeley.edu/trac/wiki/DesktopGrid</ref>. Yet another definition as implemented by GridRepublic.org creates a supercomputing grid by using volunteer computers from across the globe<ref>http://www.gridrepublic.org/index.php?page=about</ref>. All of these definitions have something in common, and that is using parallel processing to attack a problem that can be broken up into many pieces.</p> | ||

| Line 62: | Line 81: | ||

<p>The GridRepublic.org statistics is for 55 applications running using a total of 10,979,114 GFLOPS or 10,979.114 TFLOPS<ref name="grstats">http://www.gridrepublic.org/index.php?page=stats</ref>.</p> | <p>The GridRepublic.org statistics is for 55 applications running using a total of 10,979,114 GFLOPS or 10,979.114 TFLOPS<ref name="grstats">http://www.gridrepublic.org/index.php?page=stats</ref>.</p> | ||

= Top 10 Supercomputers = | == Advantages & Disadvantages of Supercomputers == | ||

<p>An excellent way to compare the advantages and disadvantages of supercomputers is to use a table. Although this list is not exhaustive, it generally sums up the advantages as being the ability solve large number crunching problems quickly but at a high cost due to the specialty of the hardware, the physical scale of the system and power requirements.</p> | |||

{|class="wikitable" | |||

!Advantage | |||

!Disadvantage | |||

|- | |||

|Ability to process large amounts of data. Examples include atmospheric modeling and oceanic modeling. Processing large matrices and weapons simulation<ref name="paulmurphy">http://www.zdnet.com/blog/murphy/uses-for-supercomputers/746 Murphy, Paul (December 2006) Uses for supercomputers</ref>. | |||

|Limited scope of applications, or in general, they're not general purpose computers. Supercomputers are usually engaged in scientific, military or mathematical applications<ref name="paulmurphy" />. | |||

|- | |||

|The ability to process large amounts of data quickly and in parallel, when compared to the ability of low end commercial systems or user computers<ref>http://nickeger.blogspot.com/2011/11/supercomputers-advantages-and.html Eger, Nick (November 2011) Supercomputer advantages adn disadvantages</ref>. | |||

|Cost, power and cooling. Commercial supercomputers costs hundreds of millions of dollars. They have on-going energy and cooling requirements that are expensive<ref name="robertharris">http://www.zdnet.com/blog/storage/build-an-8-ps3-supercomputer/220?tag=rbxccnbzd1 Harris, Robert (October 2007) Build an 8 PS3 supercomputer</ref>. | |||

|} | |||

<p>Although these are advantages and disadvantages of the traditional supercomputer, there is movement towards the consumerization of supercomputers which could result in supercomputers being affordable to the average person<ref name="robertharris" />. | |||

== Top 10 Supercomputers == | |||

[[File:Top500-november-2012.png|thumb|right|upright|350px|[http://www.datacenterknowledge.com/top-10-supercomputers-illustrated-november-2012/ <b>Figure 7:</b>Top 10 Supercomputers]]] | |||

<p>According to [http://www.top500.org/ Top500.org], the top 10 supercomputers in the world, as of November 2012, are listed below:</p> | <p>According to [http://www.top500.org/ Top500.org], the top 10 supercomputers in the world, as of November 2012, are listed below:</p> | ||

{|class="wikitable" | {|class="wikitable" | ||

| Line 111: | Line 149: | ||

<p>For a more detailed version of this list, see [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1a_ry#Top_10_supercomputers_of_today.5B9.5D a fellow student's wiki on supercomputers].</p> | <p>For a more detailed version of this list, see [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1a_ry#Top_10_supercomputers_of_today.5B9.5D a fellow student's wiki on supercomputers].</p> | ||

= | == Summary== | ||

= | |||

Cluster supercomputers account for about twice as much processing in TFLOPS as MPP based supercomputers. The statistics tracked by [http://www.GridRepublic.org GridRepublic.org] for 55 applications shows that grid computing is using about the same amount of processing power as the fastest individual supercomputer listed on the [http://www.TOP500.org TOP500.org] list of supercomputers. The fastest computer listed is the | Cluster supercomputers account for about twice as much processing in TFLOPS as MPP based supercomputers. The statistics tracked by [http://www.GridRepublic.org GridRepublic.org] for 55 applications shows that grid computing is using about the same amount of processing power as the fastest individual supercomputer listed on the [http://www.TOP500.org TOP500.org] list of supercomputers. The fastest computer listed is the | ||

| Line 135: | Line 156: | ||

<p>Grid Computing as an alternative to individually defined supercomputers seems to be growing and the expense of operating it is fully distributed across the volunteers that are apart of it. However, with any system where you don't have complete control of its parts, you can't rely on all of those parts being there all the time.</p> | <p>Grid Computing as an alternative to individually defined supercomputers seems to be growing and the expense of operating it is fully distributed across the volunteers that are apart of it. However, with any system where you don't have complete control of its parts, you can't rely on all of those parts being there all the time.</p> | ||

= References = | == References == | ||

<references /> | <references /> | ||

Latest revision as of 01:52, 11 February 2013

The Supercomputer Landscape Today

Introduction

Supercomputers are extremely capable computers that can solve complex tasks in a relatively short amount of time. Their ability to massively outperform typical home and office computers usually comes either from a large number of processor cores or from many smaller computers working together. Supercomputers are generally specialized, very expensive machines used for computations that require large amounts of numerical processing. They are used in scientific, military and graphics applications, and for other number- or data-intensive computations <ref>http://dictionary.reference.com/browse/supercomputer Definition of supercomputer</ref>, <ref>http://www.webopedia.com/TERM/S/supercomputer.html Definition of supercomputer</ref>.

As technology has advanced, each generation of supercomputers has been surpassed by the next. This has driven engineers and scientists to keep designing supercomputers that outperform their predecessors<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. This article describes the landscape of today's supercomputers: the benchmarks used to measure them, the characteristics of today's top systems, and a comparison of the different supercomputer architectures.

For the history and development of supercomputers, please refer to these wiki articles.

Benchmarks

Supercomputers have traditionally been compared quantitatively using FLOPS (floating-point operations per second) or kiloFLOPS (thousands of FLOPS). As the computing power of supercomputers has grown, these units have become too small, and megaFLOPS, gigaFLOPS and even teraFLOPS have become the primary measures. For example, Titan, the world’s fastest supercomputer as of November 2012, reaches 17,590.0 teraFLOPS (about 17.6 petaFLOPS). A standard approach to testing or benchmarking supercomputers is a software package called LINPACK, which solves a dense system of linear equations using Gaussian elimination<ref>http://www.top500.org/project/linpack LINPACK defined</ref>. LINPACK is not limited to supercomputers, however: it can also be used to benchmark a typical user computer <ref>http://www.xtremesystems.org/forums/showthread.php?197835-IntelBurnTest-The-new-stress-testing-program Intel Benchmark Software</ref>.
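The idea behind a LINPACK-style measurement can be sketched in a few lines. The example below is a minimal pure-Python illustration, not the real benchmark. It solves a random dense system by Gaussian elimination with partial pivoting, then divides the classic LINPACK operation count (about 2/3·n³ + 2·n² for an n x n system) by the elapsed time. The function names and the default problem size are illustrative assumptions. Production runs use highly optimized, distributed code on far larger systems, so the rate this prints will be tiny by comparison.

```python
import random
import time


def linpack_flop_count(n: int) -> float:
    """Classic LINPACK operation count for solving an n x n dense system."""
    return (2.0 / 3.0) * n**3 + 2.0 * n**2


def gauss_solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (pure Python)."""
    n = len(a)
    # Build an augmented matrix [a | b] so the caller's lists are left untouched.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for k in range(n):
        # Partial pivoting: move the row with the largest |entry| in column k to row k.
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        # Eliminate column k from every row below the pivot.
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def measure_gflops(n: int = 200, seed: int = 0) -> float:
    """Time one solve of a random n x n system and report the rate in GFLOPS."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    gauss_solve(a, b)
    elapsed = time.perf_counter() - start
    return linpack_flop_count(n) / elapsed / 1e9


if __name__ == "__main__":
    print(f"{measure_gflops():.4f} GFLOPS")
```

For scale, Titan's 17,590 TFLOPS corresponds to 17,590,000 GFLOPS in the units this sketch reports.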

In addition, since 1993 TOP500.org has collected performance data on computers and updated its list every six months <ref>http://top500.org/faq/what_top500 What is the TOP500</ref>. It is an excellent online source of benchmark data submitted by the users of these computers, and it readily provides performance statistics by Vendor, Application, Architecture and nine (9) other areas <ref name="t500stats">http://i.top500.org/stats TOP500 Stats</ref>. To remain vendor neutral, this article compares systems by architecture. However, there are many other ways to compare supercomputers, and the user interface at TOP500.org makes these comparisons easy.

Characteristics

Based on the TOP500.org data as of November 2012, today’s supercomputers share the following key characteristics:

- High Processing Speed is the primary feature of a supercomputer. As technology has advanced, more and more calculation-intensive tasks, such as weather forecasting and molecular modeling, must be completed within short time frames. Problems that once took years to complete can now be solved by a supercomputer in minutes. As of November 2012, the world’s #1 supercomputer, Cray Titan, has a theoretical peak of more than 20,000 trillion calculations per second. It is intended for research in areas such as materials science, climate change and biofuels, with the aim of delivering real-world societal benefits.

- Massively Parallel System describes the architecture of today's supercomputers. By the end of the 20th century, connecting thousands of processors with fast interconnects had become the main approach to building supercomputers. Today there are two main approaches to building such massively parallel systems, grid computing and computer clusters, both of which are described in later sections.

- Large Power Consumption is one of the major problems in running a supercomputer. A supercomputer can contain thousands of processors and requires a tremendous amount of power. For example, the K computer, a Japanese supercomputer on TOP500.org, reported the highest total power consumption of 9.89 MW in 2011; over a year, this is roughly equivalent to the electricity used by 10,000 suburban homes.

- Heat Management is another major problem for supercomputers and affects the system in various ways. For instance, excess heat can cause processor slowdowns, system shutdowns, or even damaged equipment. Various cooling technologies, such as liquid cooling and air cooling, can be applied to reduce the heat. An interesting example is Aquasar, an IBM system that is cooled with hot water rather than air and can consume 40 percent less energy than a comparable air-cooled system.

- High Cost is the last major issue. For example, the initial upgrade that created the Titan supercomputer cost $60 million, and the estimated total cost of the system was $97 million.
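The power comparison in the list above can be checked with simple arithmetic. The sketch below assumes, for illustration only, that the system draws its reported 9.89 MW continuously for a full 8,760-hour year. Reported figures are typically measured during the benchmark run, so real annual consumption may differ. The function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored for simplicity


def annual_energy_mwh(power_mw: float) -> float:
    """Energy (MWh) used in one year by a system drawing a constant power_mw megawatts."""
    return power_mw * HOURS_PER_YEAR


def per_home_kwh(power_mw: float, homes: int) -> float:
    """Annual energy per home (kWh) if that yearly total were split across `homes` households."""
    return annual_energy_mwh(power_mw) * 1000 / homes


k_power_mw = 9.89  # K computer figure reported above
print(f"{annual_energy_mwh(k_power_mw):,.0f} MWh per year")             # 86,636 MWh per year
print(f"{per_home_kwh(k_power_mw, 10_000):,.0f} kWh per home per year")  # 8,664 kWh per home per year
```

The 10,000-home comparison therefore amounts to assuming that each home uses roughly 8,700 kWh of electricity per year. Readers can check that figure against local household statistics.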

Supercomputers Architecture

Today's traditional supercomputers use one of three (3) types of parallel processing architecture: Massively Parallel Processing (MPP), Cluster, and Constellation <ref name="t500stats" />. A non-traditional, or disruptive, approach to supercomputing is Grid Computing<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>.

The graphic generated at TOP500.org shows the distribution of supercomputers by architecture. Massively Parallel Processing systems make up 17.8% of all current supercomputers in the list. Clustered systems make up the bulk of supercomputers, while Constellation architectures make up only a fraction of a percent. Each architecture is discussed further below.
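The percentages above are simple shares of the 500 listed systems. The sketch below recomputes them from per-system architecture labels. The counts are an assumption derived from the text rather than taken from TOP500 data: 17.8% of 500 is 89 MPP systems and 82% (from the Cluster section) is 410 clusters, which leaves one system that is assumed here to be a Constellation. `architecture_shares` is a hypothetical helper.

```python
from collections import Counter


def architecture_shares(architectures):
    """Return the percentage of systems that carry each architecture label."""
    counts = Counter(architectures)
    total = sum(counts.values())
    return {arch: 100.0 * n / total for arch, n in counts.items()}


# Counts implied by the percentages in the text for a 500-system list (an assumption).
systems = ["MPP"] * 89 + ["Cluster"] * 410 + ["Constellation"] * 1
for arch, pct in sorted(architecture_shares(systems).items(), key=lambda kv: -kv[1]):
    print(f"{arch:13s} {pct:5.1f}%")
```

The same helper applies to any other category TOP500.org reports, such as vendor or application area, given a list of per-system labels.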

Massively Parallel Processing (MPP)

Massively Parallel Processing or MPP supercomputers are made up of hundreds of computing nodes that process data in a coordinated fashion <ref name="ttmppdef">http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html</ref>. Each MPP node generally has its own memory and operating system, and a node may itself contain multiple processors and/or multiple cores <ref name="ttmppdef" />.

The picture on the right shows a simple MPP architecture in which each CPU is a subsystem with its own memory and its own copy of the operating system and application. Each subsystem communicates with the others through a high-speed interconnect. The main advantage of MPP architecture is that it can be used efficiently on problems that can be broken into parallel pieces and processed simultaneously, such as mathematical and simulation problems. Apart from research work, MPP architecture can also be used for parallel data queries or searches. For example, an MPP system with 26 CPUs could split a search so that each processor sequentially searches the entries beginning with one letter of the alphabet.<ref>http://encyclopedia2.thefreedictionary.com/MPP</ref>
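The 26-processor search example can be sketched as a scatter, local search and gather. This is a hypothetical single-machine illustration. Python threads stand in for MPP nodes, and to mimic separate local memories each worker reads only its own partition. On a real MPP system, each partition would reside on a different node, and the results would be combined over the interconnect. The function names are assumptions, not an existing API.

```python
from concurrent.futures import ThreadPoolExecutor
from string import ascii_lowercase


def partition_by_letter(words):
    """Give each of 26 hypothetical nodes the words that start with its letter.

    Words that are empty or do not start with a-z are ignored in this sketch.
    """
    parts = {letter: [] for letter in ascii_lowercase}
    for w in words:
        if w and w[0].lower() in parts:
            parts[w[0].lower()].append(w)
    return parts


def local_search(partition, predicate):
    """Sequential scan of one node's local partition; no data is shared."""
    return [w for w in partition if predicate(w)]


def parallel_search(words, predicate):
    """Scatter the data by first letter, search every part concurrently, gather the hits."""
    parts = partition_by_letter(words)
    # Threads stand in for the 26 MPP nodes; each worker reads only its own partition.
    with ThreadPoolExecutor(max_workers=len(parts)) as pool:
        per_node_hits = list(pool.map(local_search, parts.values(),
                                      [predicate] * len(parts)))
    # Gather step: combine the per-node results, as the interconnect would.
    return sorted(hit for hits in per_node_hits for hit in hits)


print(parallel_search(["apple", "banana", "avocado", "cherry", "blueberry"],
                      lambda w: w.endswith("y")))
# prints ['blueberry', 'cherry']
```

Because each partition is searched independently, the search time is governed by the largest partition (the most common starting letter) rather than by the whole data set.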

Disadvantages of MPP architecture include the absence of shared (global) memory, which slows inter-processor exchange because there is no common place to store data used by different processors. Second, because each processor can use only its own local memory and storage, these can become bottlenecks. Full use of system resources may not be possible with MPP architecture, because each subsystem works individually. MPP systems are also costly to build, since they require separate memory, storage and CPUs for each subsystem. Better resource utilization can be achieved with a cluster architecture, which relies on load balancing. Cluster architecture is explained in detail in the next section.

The combined processing power of the MPP supercomputers on the TOP500 list is 23,823.97 TFLOPS. As with cluster-based supercomputers, the growth trend of MPP supercomputers has leveled off<ref name="t500stats" />.

Cluster

In a cluster architecture, a group of computers is connected over a network and appears to the outside world as a single system. Work is distributed through load balancing and resource sharing, which happen entirely in the background. Invented by Digital Equipment Corporation in the 1980s, clusters of computers form the largest number of supercomputers available today <ref>http://books.google.com/books?id=Hd_JlxD7x3oC&pg=PA90&lpg=PA90&dq=what+is+a+constellation+in+parallel+computing?&source=bl&ots=Rf9nxSqOgL&sig=-xleas5wXvNpvkgYYxguvP1tSLA&hl=en&sa=X&ei=aDcnT-XRNqHX0QHymbjrAg&ved=0CGMQ6AEwBw#v=onepage&q=what%20is%20a%20constellation%20in%20parallel%20computing%3F&f=false Applied Parallel Computing</ref>, <ref name="t500stats" />.
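How a cluster might spread work "in the background" can be sketched with a simple greedy load balancer. This is an assumption for illustration, not the algorithm any particular cluster uses: each incoming job is assigned to the node with the smallest accumulated load, tracked in a min-heap. The job names and costs are made up.

```python
import heapq


def balance(jobs, node_count):
    """Assign each (job_id, cost) to the node with the smallest accumulated load."""
    heap = [(0, node) for node in range(node_count)]  # (current load, node id)
    heapq.heapify(heap)
    assignment = {node: [] for node in range(node_count)}
    for job_id, cost in jobs:
        load, node = heapq.heappop(heap)  # least-loaded node
        assignment[node].append(job_id)
        heapq.heappush(heap, (load + cost, node))
    return assignment


jobs = [("a", 5), ("b", 3), ("c", 3), ("d", 2)]
print(balance(jobs, node_count=2))
```

With these jobs, node 0 receives `a` and `d` (load 7) and node 1 receives `b` and `c` (load 6). The user submitting the jobs never chooses a node, which is what makes the cluster look like a single resource.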

TOP500.org data as of November 2011 show that cluster computing makes up the largest subset of supercomputers, at 82 percent.

In a cluster, the computers are harnessed together transparently to the applications that use them: an application or user running on the cluster sees them as a single resource.

A cluster architecture has three main components: interconnect technology, storage and memory.

Interconnect technology coordinates the work of the nodes and carries out failover procedures when a subsystem fails. It also makes the cluster appear to be a monolithic system and is the basis for system management tools <ref>http://www.unix.org/whitepapers/cluster.html</ref>. It is typically built from networks or dedicated buses designed specifically to make this interconnection reliable.
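The failover role of the interconnect can be sketched as heartbeat monitoring. In this hypothetical example, a node whose last heartbeat is older than a timeout is presumed failed, and its jobs are moved to the surviving node with the fewest jobs. The `ClusterNode` type, the 5-second default timeout and the reassignment rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by field values
class ClusterNode:
    name: str
    last_heartbeat: float  # time of the most recent heartbeat, in seconds
    jobs: list = field(default_factory=list)


def fail_over(nodes, now, timeout=5.0):
    """Move jobs off nodes whose heartbeat is older than `timeout` seconds."""
    failed = [n for n in nodes if now - n.last_heartbeat > timeout]
    survivors = [n for n in nodes if n not in failed]
    if not survivors:
        raise RuntimeError("no surviving nodes to fail over to")
    for dead in failed:
        for job in dead.jobs:
            target = min(survivors, key=lambda n: len(n.jobs))  # least-busy survivor
            target.jobs.append(job)
        dead.jobs.clear()
    return [n.name for n in failed]


a = ClusterNode("a", last_heartbeat=10.0, jobs=["j1"])
b = ClusterNode("b", last_heartbeat=3.0, jobs=["j2", "j3"])
c = ClusterNode("c", last_heartbeat=9.0)
print(fail_over([a, b, c], now=10.0))  # node "b" missed its heartbeat
print(a.jobs, b.jobs, c.jobs)
```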

Second, storage in a cluster architecture can be shared or distributed. The picture on the right shows an example of a shared storage architecture for clusters, in which all the computers use the same storage. One benefit of shared storage is that it avoids the overhead of synchronizing separate storage devices; it also makes sense when the applications running on the cluster use large shared databases. In distributed storage, each node in the cluster has its own storage, and nodes share information by passing messages over the network. Additional work is needed to keep all the storage in sync so that data remain available after a failover.
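The extra synchronization work that distributed storage needs for failover can be illustrated with simple primary-backup mirroring. This is a hypothetical sketch, assuming each write is copied to one mirror node (in a real cluster that copy would travel as a network message); `StorageNode` and `MirroredStore` are illustrative names, not a real storage API.

```python
class StorageNode:
    """A hypothetical cluster node with its own local storage."""

    def __init__(self, name):
        self.name = name
        self.store = {}
        self.alive = True


class MirroredStore:
    """Primary-backup sketch: each write is copied to every live replica."""

    def __init__(self, primary, mirror):
        self.replicas = [primary, mirror]  # primary first

    def write(self, key, value):
        live = [node for node in self.replicas if node.alive]
        if not live:
            raise RuntimeError("no live replica")
        for node in live:
            # The copy to the mirror is the synchronization overhead that
            # shared storage avoids.
            node.store[key] = value

    def read(self, key):
        for node in self.replicas:  # fail over to the mirror if the primary is down
            if node.alive:
                return node.store[key]
        raise RuntimeError("no live replica")


primary, mirror = StorageNode("n1"), StorageNode("n2")
volume = MirroredStore(primary, mirror)
volume.write("results.dat", b"\x00\x01")
primary.alive = False              # simulate a primary failure
print(volume.read("results.dat"))  # served from the mirror's copy
```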

Finally, memory in clusters also comes in shared or distributed forms. Distributed memory is the most common, but shared memory may be used depending on the intended use of the system.

The benefits of cluster architecture include higher performance, higher availability, greater scalability and lower operating costs. Clusters are known for providing continuous, uninterrupted service, which they achieve through redundancy.
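The claim that redundancy yields high availability can be made concrete with a standard back-of-the-envelope formula. Assuming each of n redundant nodes is independently available with probability a, the service is up if at least one node is up, so availability is 1 - (1 - a)^n. The independence assumption is optimistic, since real failures (power, network) are often correlated.

```python
def cluster_availability(node_availability, redundant_nodes):
    """Probability that at least one of n redundant nodes is up.

    Assumes nodes fail independently, which is a simplification.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if redundant_nodes < 1:
        raise ValueError("need at least one node")
    return 1.0 - (1.0 - node_availability) ** redundant_nodes


for n in (1, 2, 3):
    print(n, round(cluster_availability(0.99, n), 6))
```

With a = 0.99, one, two and three nodes give 0.99, 0.9999 and 0.999999 respectively: each extra redundant node cuts the expected downtime by a factor of 100 under this model.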

With cluster architecture described, we can see how it differs from MPP. The picture below compares several architectures; each approach aims to improve system performance and/or system reliability, but the cluster architecture provides both broadly scalable performance and high availability.

SMP in the picture above stands for symmetric multiprocessing, as found in today's desktop computers and laptops. The combined processing power of the cluster supercomputers on the TOP500 list is reported at 50,192.82 TFLOPS, and the growth trend of cluster-based supercomputers has leveled off<ref name="t500stats" />.

Constellation

A constellation is a cluster of supercomputers <ref>http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf</ref>. TOP500.org lists only one constellation supercomputer as of November 2011. The graph shows constellations growing rapidly and then declining during the first five years of the 21st century.

This author's speculation about the decline of constellations is based on several factors. Computers with multiple processors and/or multiple cores have been getting faster and less expensive, and combining these cheaper computers into very large clusters yields computing power that rivals a constellation. In addition, more and more computers use symmetric multiprocessing (SMP), so the concepts of constellations and clusters are converging.

The processing power of the single constellation supercomputer is 52.84 TFLOPS<ref name="t500stats" />.
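The TOP500.org figures quoted in this section can be combined to compare each architecture's share of systems with its share of aggregate processing power. The system counts are derived rather than quoted: 17.8% and 82% of a 500-system list give 89 MPP and 410 cluster systems, which together with the single constellation total 500.

```python
# Figures quoted in this section (TOP500.org).
# System counts are derived: 17.8% and 82% of 500 systems, plus one constellation.
ARCHITECTURES = {
    "Cluster": {"systems": 410, "tflops": 50_192.82},
    "MPP": {"systems": 89, "tflops": 23_823.97},
    "Constellation": {"systems": 1, "tflops": 52.84},
}


def shares(data):
    """Return {name: (share of systems, share of aggregate TFLOPS)}."""
    total_systems = sum(arch["systems"] for arch in data.values())
    total_tflops = sum(arch["tflops"] for arch in data.values())
    return {
        name: (arch["systems"] / total_systems, arch["tflops"] / total_tflops)
        for name, arch in data.items()
    }


for name, (by_count, by_power) in shares(ARCHITECTURES).items():
    print(f"{name:<13} {by_count:6.1%} of systems  {by_power:6.1%} of TFLOPS")
```

By these figures, clusters are 82% of the systems but roughly 68% of the aggregate TFLOPS, while MPP systems are 17.8% of the systems but roughly 32% of the TFLOPS.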

Grid Computing

Grid Computing is defined as applying many networked computers to solve a single problem simultaneously<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>. It is also defined as a network of computers used by a single company or organization to solve a problem<ref>http://boinc.berkeley.edu/trac/wiki/DesktopGrid</ref>. A third variant, implemented by GridRepublic.org, builds a supercomputing grid from volunteer computers across the globe<ref>http://www.gridrepublic.org/index.php?page=about</ref>. All of these definitions share one idea: using parallel processing to attack a problem that can be broken up into many pieces.
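The common thread of these definitions, breaking one problem into independent pieces, can be sketched as follows. The task (counting primes in a range) and the helper names are hypothetical stand-ins for a real grid application; here the units are processed in a single loop, whereas a grid would send each unit to a different computer and combine the returned results.

```python
def make_work_units(start, stop, unit_size):
    """Split the half-open range [start, stop) into independent work units."""
    return [(lo, min(lo + unit_size, stop)) for lo in range(start, stop, unit_size)]


def is_prime(n):
    if n < 2:
        return False
    divisor = 2
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True


def process_unit(unit):
    """Stand-in for the work one computer would do: count primes in its unit."""
    lo, hi = unit
    return sum(1 for n in range(lo, hi) if is_prime(n))


units = make_work_units(0, 100, 10)
# Each unit needs no data from any other unit, so any computer can process any unit;
# the server only has to add up the returned counts.
total = sum(process_unit(unit) for unit in units)
print(len(units), total)
```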

The graph, generated from data at GridRepublic.org, shows the average processing power of this supercomputer created by volunteers from around the world.

The GridRepublic.org statistics cover 55 applications running on a combined 10,979,114 GFLOPS, or 10,979.114 TFLOPS<ref name="grstats">http://www.gridrepublic.org/index.php?page=stats</ref>.

Advantages & Disadvantages of Supercomputers

A table is a convenient way to compare the advantages and disadvantages of supercomputers. Although the list is not exhaustive, it sums up the main advantage as the ability to solve large number-crunching problems quickly, and the main drawback as high cost, driven by the specialized hardware, the physical scale of the system and its power requirements.

| Advantage | Disadvantage |
|---|---|
| Ability to process large amounts of data. Examples include atmospheric modeling, oceanic modeling, processing large matrices and weapons simulation<ref name="paulmurphy">http://www.zdnet.com/blog/murphy/uses-for-supercomputers/746 Murphy, Paul (December 2006) Uses for supercomputers</ref>. | Limited scope of applications; in general, supercomputers are not general-purpose computers. They are usually engaged in scientific, military or mathematical applications<ref name="paulmurphy" />. |
| Ability to process large amounts of data quickly and in parallel, compared with low-end commercial systems or user computers<ref>http://nickeger.blogspot.com/2011/11/supercomputers-advantages-and.html Eger, Nick (November 2011) Supercomputer advantages and disadvantages</ref>. | Cost, power and cooling. Commercial supercomputers can cost hundreds of millions of dollars, and their ongoing energy and cooling requirements are expensive<ref name="robertharris">http://www.zdnet.com/blog/storage/build-an-8-ps3-supercomputer/220?tag=rbxccnbzd1 Harris, Robert (October 2007) Build an 8 PS3 supercomputer</ref>. |

These are the advantages and disadvantages of the traditional supercomputer; however, there is a movement toward the consumerization of supercomputers, which could make them affordable to the average person<ref name="robertharris" />.

Top 10 Supercomputers

According to TOP500.org, the top 10 supercomputers in the world as of November 2012 are listed below:

| Rank | Name | System |
|---|---|---|
| 1 | Titan | Cray XK7, Opteron 6274 16C 2.200GHz, Cray Gemini interconnect, NVIDIA K20x |
| 2 | Sequoia | BlueGene/Q, Power BQC 16C 1.60 GHz, Custom |
| 3 | K computer | SPARC64 VIIIfx 2.0GHz, Tofu interconnect |
| 4 | Mira | BlueGene/Q, Power BQC 16C 1.60GHz, Custom |
| 5 | JUQUEEN | BlueGene/Q, Power BQC 16C 1.600GHz, Custom Interconnect |
| 6 | SuperMUC | iDataPlex DX360M4, Xeon E5-2680 8C 2.70GHz, Infiniband FDR |
| 7 | Stampede | PowerEdge C8220, Xeon E5-2680 8C 2.700GHz, Infiniband FDR, Intel Xeon Phi |
| 8 | Tianhe-1A | NUDT YH MPP, Xeon X5670 6C 2.93 GHz, NVIDIA 2050 |
| 9 | Fermi-100 | BlueGene/Q, Power BQC 16C 1.60GHz, Custom |
| 10 | DARPA Trial Subset | Power 775, POWER7 8C 3.836GHz, Custom Interconnect |

For a more detailed version of this list, see a fellow student's wiki on supercomputers.

Summary

Cluster supercomputers account for about twice as much aggregate processing power (in TFLOPS) as MPP-based supercomputers. The statistics tracked by GridRepublic.org for 55 applications show that grid computing is using about the same amount of processing power as the fastest individual supercomputer on the November 2011 TOP500.org list. That system is the K computer (SPARC64 VIIIfx 2.0GHz, Tofu interconnect) at the RIKEN Advanced Institute for Computational Science (AICS) in Japan, which operates at 10,510.00 TFLOPS<ref>http://www.top500.org/list/2011/11/100</ref>.
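The comparisons in this summary reduce to simple arithmetic on the figures quoted in this article; this sketch just reproduces it, converting GFLOPS to TFLOPS (1 TFLOPS = 1,000 GFLOPS).

```python
GFLOPS_PER_TFLOPS = 1_000

grid_tflops = 10_979_114 / GFLOPS_PER_TFLOPS  # GridRepublic.org, 55 applications
k_computer_tflops = 10_510.00                  # fastest system on the Nov 2011 list
cluster_tflops = 50_192.82                     # all cluster systems on the TOP500 list
mpp_tflops = 23_823.97                         # all MPP systems on the TOP500 list

print(f"grid total:        {grid_tflops:,.3f} TFLOPS")
print(f"grid / K computer: {grid_tflops / k_computer_tflops:.2f}")
print(f"cluster / MPP:     {cluster_tflops / mpp_tflops:.2f}")
```

The grid total comes out about 4% above the K computer's 10,510 TFLOPS, and clusters deliver about 2.1 times the TFLOPS of MPP systems.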

Grid Computing as an alternative to individually defined supercomputers seems to be growing, and the expense of operating it is fully distributed across the volunteers who are a part of it. However, in any system where you don't have complete control of its parts, you can't rely on all of those parts being available all the time.
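One common way to cope with volunteers who disappear is to give each work unit a deadline and hand overdue units to someone else. The `WorkScheduler` class below is a hypothetical, single-process sketch of that idea; it is not GridRepublic's or any volunteer platform's actual scheduler, and the 60-second deadline in the usage example is arbitrary.

```python
class WorkScheduler:
    """Hypothetical grid server tracking which volunteer holds each work unit."""

    def __init__(self, unit_ids, deadline_seconds):
        self.pending = list(unit_ids)  # units waiting to be handed out
        self.in_flight = {}            # unit_id -> (volunteer, deadline)
        self.results = {}              # unit_id -> returned result
        self.deadline_seconds = deadline_seconds

    def request_work(self, volunteer, now):
        """Hand the next pending unit to a volunteer, or None if nothing is left."""
        if not self.pending:
            return None
        unit = self.pending.pop(0)
        self.in_flight[unit] = (volunteer, now + self.deadline_seconds)
        return unit

    def report(self, unit, result):
        """Record a result; duplicates for already-finished units are ignored."""
        if unit in self.results:
            return
        self.results[unit] = result
        self.in_flight.pop(unit, None)
        if unit in self.pending:  # a late result for a reclaimed unit is still used
            self.pending.remove(unit)

    def reclaim_overdue(self, now):
        """Put units whose deadline has passed back in the pending queue."""
        overdue = [u for u, (_, deadline) in self.in_flight.items() if now > deadline]
        for unit in overdue:
            del self.in_flight[unit]
            self.pending.append(unit)
        return overdue


scheduler = WorkScheduler(unit_ids=[1, 2], deadline_seconds=60)
scheduler.request_work("alice", now=0)  # unit 1; alice later disappears
scheduler.request_work("bob", now=0)    # unit 2
scheduler.report(2, "done")
print(scheduler.reclaim_overdue(now=100))        # unit 1 is overdue
unit = scheduler.request_work("carol", now=100)  # reissued to carol
scheduler.report(unit, "done")
print(sorted(scheduler.results))
```

The design trades some duplicated work (a slow volunteer and a replacement may both finish the same unit) for never depending on any single volunteer.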

References

<references />