CSC/ECE 506 Spring 2012/11a hn: Difference between revisions


==Distributed Shared Memory==

===Evolution of DSM===

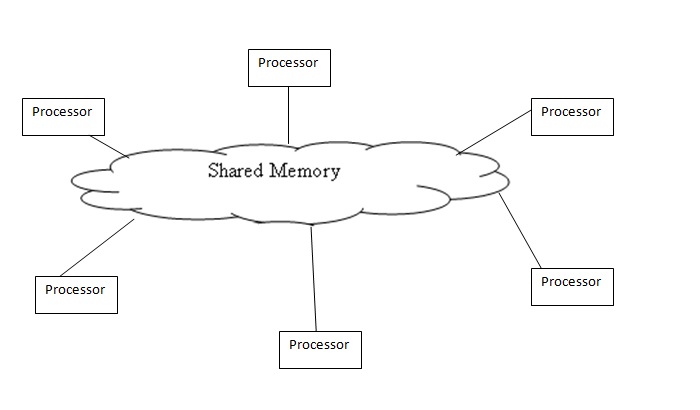

The [http://www.ece.rutgers.edu/~parashar/Classes/99-00/ece566/slides/lecture11.pdf Shared Memory system] does not scale to a large network with many nodes, and [http://en.wikipedia.org/wiki/Distributed_computing Distributed Memory systems] are complex to program and less efficient. [http://en.wikipedia.org/wiki/Distributed_shared_memory Distributed Shared Memory (DSM)] was built to combine the advantages of both systems while removing their disadvantages.

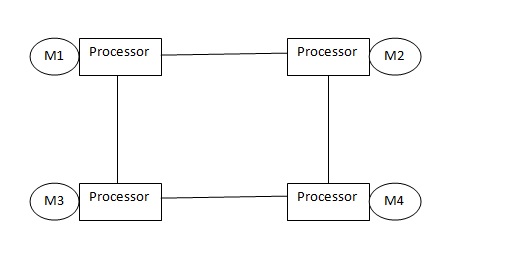

A Shared Memory system has a group of processors sharing a global memory, which allows any processor to access any memory location[[#References|<sup>[1]</sup>]]. In a Distributed Memory system each processor has its own local memory, and the processors use message passing to communicate with each other[[#References|<sup>[2]</sup>]]. A DSM, on the other hand, has several processors sharing the same address space, i.e. a given address refers to the same memory location for all processors. Unlike the Shared Memory system, a DSM does not actually have a single global memory accessible by all processors; instead it provides a logical abstraction of a single address space over physically separate memories, all of which can be accessed by every processor.

[[image:Shared_memory.jpg|thumb|center|400px|alt=Shared Memory System.|Illustration of Shared memory system [[#References|<sup>[3]</sup>]].]]

[[image:Distributed_Memory.jpg|thumb|center|400px|alt=Distributed Memory System.|Illustration of Distributed memory system [[#References|<sup>[3]</sup>]].]]

From the above pictures it can be seen that in Distributed Memory systems each processor has its own memory. In Distributed Shared Memory each processor also has its own memory, but there is an abstraction that gives all processors the same view of memory, i.e. the main memories of a cluster of processors are made to look like a single memory with a single address space. Distributed Memory systems scale to a large number of processors but are difficult to program because of message passing. Shared Memory, on the other hand, is less scalable but easier to program, because all processors can access each other's data, which simplifies parallel programming. DSM therefore offers a more scalable and less expensive model by supporting the shared memory abstraction on top of message passing. DSM allows the programmer to share and use variables without having to worry about their management, so a processor can access an address held in another processor's main memory. End users can use the shared memory without knowing about the message passing; the inter-processor communication is invisible to the user.

===Hardware of a Distributed Shared Memory system===

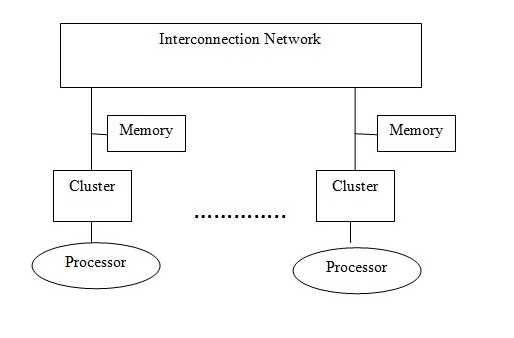

A DSM system in general has clusters connected to an interconnection network. A cluster can be a uniprocessor or multiprocessor system with local memory and a cache to reduce memory latency. The local memory of a cluster is entirely or partially mapped to the DSM global address space. A directory is maintained that records the location and the state of each data block. The directory can be organized as a linked list, a doubly linked list, or a full-map storage; the organization of the directory affects the scalability of the system[[#References|<sup>[5]</sup>]].

A DSM system can provide the shared memory abstraction in software, which is cost-efficient because it requires no changes to the cluster hardware. Some systems provide the abstraction in hardware, which costs more, but hardware-based DSM performs better than software-based DSM [[#References|<sup>[4]</sup>]].

=== Challenges of using a Bus Based protocol for a Distributed Memory ===

The Distributed Shared Memory (DSM) architecture requires a shared memory space. Message passing over an interconnection network is the preferred mode of communication for maintaining coherence in a DSM, for the following reasons:

- Physically, a DSM is designed to allow a large number of nodes to interact with each other. With a bus-based system, the bus simply becomes too long to connect the various nodes of a DSM. In a bus-based multiprocessor with a small set of nodes sharing a common memory, communication latency is not discernible. Because the nodes of a bus-based system use snoopy protocols, clock synchronization is also critical, and with a larger number of nodes and a longer bus, maintaining the clock frequency becomes a bigger challenge.

- Whatever cache coherence protocol is used, a larger number of nodes leads to a larger number of transactions on the bus. Because the bus is a shared channel for transferring cache blocks/lines, an increase in bus traffic causes greater communication latency.

- It is preferable to design a DSM on a non-uniform memory access (NUMA) architecture, because this allows memory to be addressed globally while being placed locally at each node.


For the reasons mentioned above, a message passing model is used for communication between nodes. A message passing model augmented with point-to-point connections between nodes is also preferable. The following section explains why.

===Coherence implementation on the DSM===

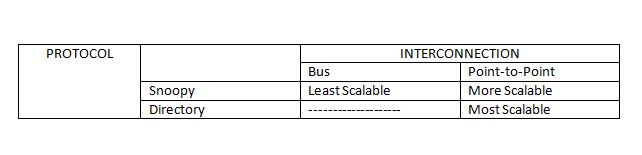

As explored in the previous section, it is not preferable to use a monolithic bus for communication. Instead, short wires are used for point-to-point interconnections. Because the wires are short, they can be clocked at a higher frequency, which in turn allows the bandwidth to increase.

[[image:Protocol_and_interconnect.JPG|thumb|center|600px|alt=Distributed Memory System.|Efficiency of point-to-point interconnect for a DSM [[#References|<sup>[3]</sup>]].]]

As illustrated in the figure, a point-to-point interconnection is coupled with a directory-based protocol for a DSM. Since the memory is distributed, a directory can be maintained that records which caches hold a copy of each memory block. Depending on whether a read or write by a node's cache hits or misses, the node contacts the directory and then sends updates or signals only to the nodes that hold a copy of the block, thus avoiding unnecessary traffic.
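The way a directory limits coherence traffic to the actual sharers can be sketched as follows. This is a minimal illustration, not the protocol of any particular DSM: the class names, the message names (`invalidate`, `downgrade`) and the in-memory message log are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DirectoryEntry:
    """Directory state for one memory block (hypothetical layout)."""
    sharers: set = field(default_factory=set)  # nodes holding a read-only copy
    owner: Optional[int] = None                # node holding a modified copy


class Directory:
    """Toy directory that logs the point-to-point messages it would send."""

    def __init__(self):
        self.entries = {}
        self.messages = []  # (destination node, message type, block)

    def _entry(self, block):
        return self.entries.setdefault(block, DirectoryEntry())

    def read_miss(self, node, block):
        entry = self._entry(block)
        if entry.owner is not None and entry.owner != node:
            # Only the current owner is contacted; it keeps a shared copy.
            self.messages.append((entry.owner, "downgrade", block))
            entry.sharers.add(entry.owner)
            entry.owner = None
        entry.sharers.add(node)

    def write_miss(self, node, block):
        entry = self._entry(block)
        # Invalidate only the nodes recorded as sharers, never all nodes.
        for sharer in sorted(entry.sharers - {node}):
            self.messages.append((sharer, "invalidate", block))
        if entry.owner is not None and entry.owner != node:
            self.messages.append((entry.owner, "invalidate", block))
        entry.sharers = set()
        entry.owner = node
```

A node that never cached the block receives no message at all, which is the traffic saving over a broadcast on a shared bus.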

===Hardware DSM===

<li> Reflective Memory systems</li>

</ul>

CC-NUMA, being the most important, is discussed here. COMA and RM are explained in detail in [[#References|<sup>[5]</sup>]] for further reading.

There is a fixed physical memory location for each data block in CC-NUMA. A directory-based architecture is used in which each cluster holds an equal part of the system's shared memory; this is called the home property. Various coherence protocols and memory consistency models, such as weak consistency and processor consistency, are implemented in CC-NUMA. CC-NUMA is simple and cost-effective, but when multiple processors want to access the same block in succession its performance can degrade severely [[#References|<sup>[6]</sup>]].

Hardware DSM fares better than software DSM in terms of performance, but the cost of the hardware is very high. Changes are therefore being made to software DSM implementations to improve their performance, and the rest of this article deals in detail with software DSM, its performance, and measures to improve that performance.

=== Software DSM ===

The software components of a DSM form a critical part of the DSM application. Robust software design decisions help the DSM system work efficiently. A good software component for a DSM depends on factors such as portability to different operating systems, efficient memory allocation, and coherence protocols that keep the data up to date. As we shall see further, in modern DSMs the software needs to cooperate with the underlying hardware to ensure ideal system performance.[[#References|<sup>[9]</sup>]]

In a distributed shared memory, it is imperative to have a software component that eases the programmer's burden of writing message passing programs. The software distributed shared memory is responsible for providing the abstraction of a shared memory over the hardware.

==== Fine-grained vs. coarse-grained approaches to software shared memory ====

In the early days of DSM development, a so-called "coarse-grained" approach was implemented, which used virtual-memory pages. Page faults caused invalidations and updates to occur. The challenge with this model is that synchronization within a page causes great overhead.[[#References|<sup>[9]</sup>]]

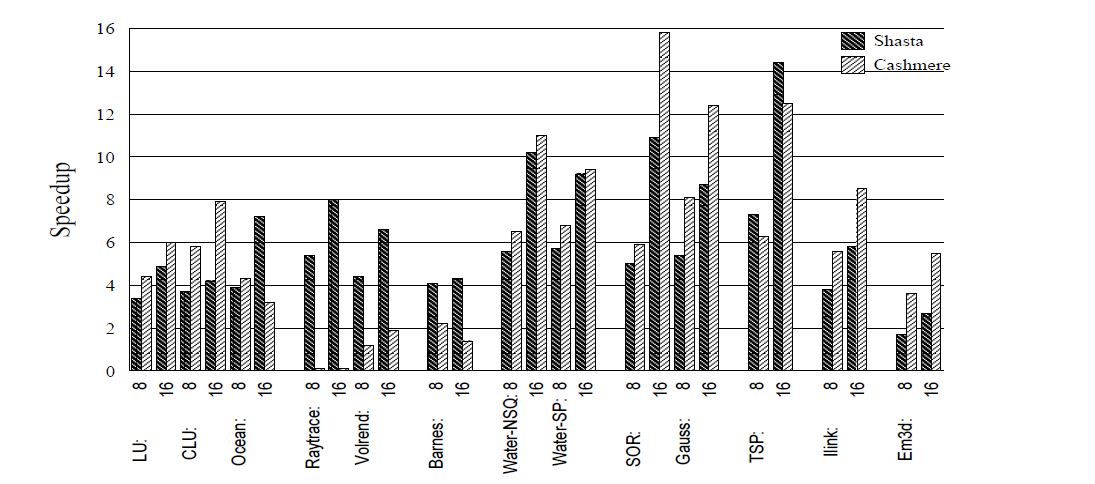

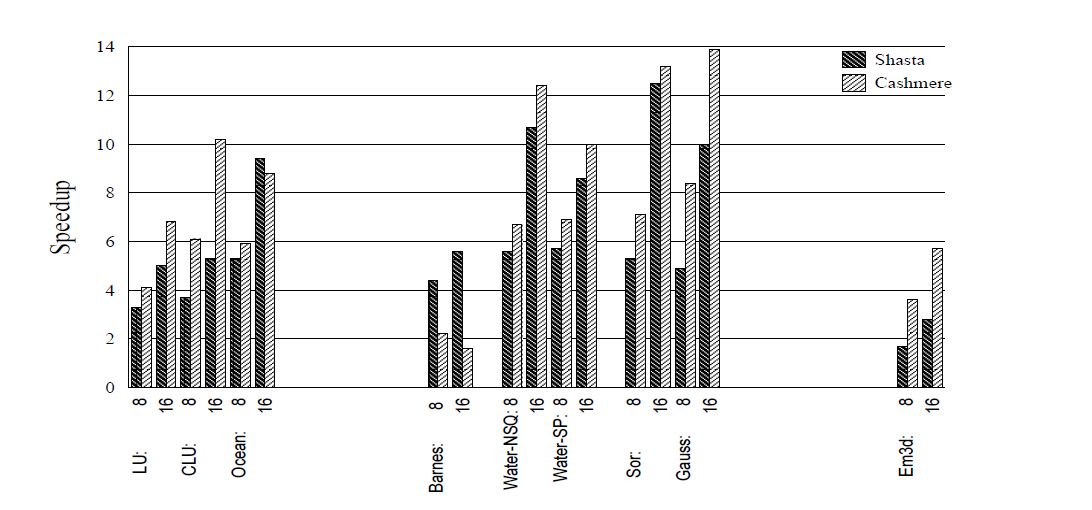

The coarse-grained approach is mostly VM-based, while the fine-grained approach is mostly instrumentation-based. This section looks at how fine-grained and coarse-grained approaches perform on similar underlying hardware. A good example of a fine-grained approach is Shasta, while an example of the coarse-grained approach is Cashmere.

==== Shasta protocol ====

It has been shown that Shasta performs better in applications that synchronize at a fine granularity, while Cashmere, predictably, performs better in applications with a coarse-grained synchronization structure. At granularity levels larger than a cache line, Shasta performs better because, owing to its finer granularity, more of the data it transfers is useful. If large amounts of private data need to be synchronized, the coarser Cashmere is preferred. In the case of false sharing, Cashmere, which allows aggregation of protocol operations, performs better. False sharing refers to misses that occur because multiple threads access different words that reside in the same block. In applications with a high level of false write-write sharing, page-based protocols perform better, because the software overhead required to maintain coherence is removed: coherence within a node is managed by the hardware itself. [[#References|<sup>[9]</sup>]]

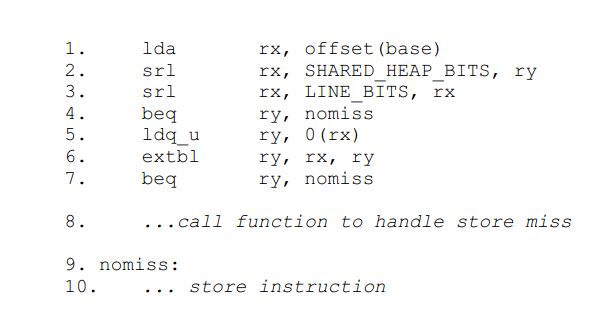

Shasta uses an interesting approach for inter-node and intra-node communication. First, it uses checks inserted in the code to determine whether a miss has occurred, and it handles misses in software. The shared address space is divided into blocks, whose size can differ between systems. The blocks are further divided into lines, and the state of each line is kept in a state table. A miss check takes a mere 7 instructions. The stack and memory local to a thread are not checked because they are not shared among nodes. The checks are optimized by aggregating the checks for nearby blocks.

[[image:Store_miss_code.JPG|thumb|center|400px|alt=CC-NUMA.|The 7 instruction Periodic Miss check done in software[[#References|<sup>[7]</sup>]].]]

The code first checks whether the target address is in shared memory; if it is not, execution continues with the rest of the code. Otherwise, as shown, it checks the state table entry for the block containing that address. The optimizations used to arrive at this code are to avoid saving and restoring registers and to look for unused registers to perform the operation. Accesses through the stack pointer and global pointer, which correspond to data private to a thread, are not checked.[[#References|<sup>[10]</sup>]]
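The logic of such a store miss check can be sketched in a high-level language as follows. This is only an illustration of the two steps described above (range check, then state table lookup); the address range, the 64-byte line size and the state names are assumptions, and the real check is a short sequence of machine instructions rather than a function call.

```python
# Hypothetical layout of the shared region and line size.
SHARED_BASE = 0x1000_0000
SHARED_SIZE = 0x0100_0000
LINE_SHIFT = 6  # 64-byte lines (assumption)

INVALID, SHARED, EXCLUSIVE = 0, 1, 2


def store_needs_miss_handler(address, state_table):
    """Return True if a store to `address` must enter the software protocol."""
    # Step 1: private data (stack, thread-local memory) is never checked further.
    if not (SHARED_BASE <= address < SHARED_BASE + SHARED_SIZE):
        return False
    # Step 2: look up the state of the line that contains the address.
    line = (address - SHARED_BASE) >> LINE_SHIFT
    # A store may proceed without the handler only if the line is held exclusively.
    return state_table.get(line, INVALID) != EXCLUSIVE
```

In this sketch a line missing from the table is treated as invalid, so the first store to any shared line always goes through the miss handler.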

This approach poses challenges. Sometimes the miss checks create a huge overhead, often exceeding the time taken for sequential execution itself.

<b>Optimization for improving performance of Shasta</b>

There is an efficient optimization for communication between and within nodes. Within a node, race conditions are avoided by locking blocks individually during coherence protocol operations. Between nodes, messages are sent instead of using a bus-based system. Processes must also wait until all pending operations on the node are complete. If the program already has provisions for handling race conditions, the wait required at a release can be removed. Messages from other processors are detected through polling. No interrupt-based mechanism is used for message handling because of the high cost associated with it. This way, the processor is free to do other tasks. Polling is done when waiting for messages, and the polling location is common to all processors within a node, which is very efficient. Just three instructions are needed to poll.[[#References|<sup>[7]</sup>]]

==== Cashmere protocol ====

Cashmere is purely a page-based protocol for a distributed shared memory system. It requires the application programmer to handle data races. Shared memory accesses are handled using a lock mechanism of 'Acquire' and 'Release'. As the names suggest, 'Acquire' gains access to shared data, while 'Release' gives up access to data the processor has been holding. Invalidations are sent during the release operation, and their effects are seen only at the next acquire, after which any operations that depend on the update can proceed. Hence, Cashmere forces the programmer to take these factors into account.[[#References|<sup>[7]</sup>]]

Cashmere uses the Memory Channel (MC) network to maintain directories that hold information about the pages. Page faults trigger requests for an up-to-date copy of the block. The directory also keeps track of the modifications made by each node as a result of writes. A per-processor dirty list is maintained that holds all the updates made by that processor to a particular page. If the list of writers does not include the home node, ownership of the home is transferred to the processor currently writing the page. If that processor is the only one holding a copy of the block, the block is moved to an exclusive state.[[#References|<sup>[7]</sup>]]

<b>Optimization for improving performance of Cashmere</b>

Cashmere uses the concept of a twin to keep track of local updates to a copy of a block. The twin page is created when the processor that is the home node does not hold the exclusive copy of the block. When a release is done, the twin is compared with the newly written page. The difference is sent to the home node, which then holds the clean copy of the block. The processor performing the release sends write updates to all dirty sharers of the block. The releaser downgrades the write permission of all copies holding the dirty version of the block. Then the list of all nodes that performed a write on the block is cleared.[[#References|<sup>[7]</sup>]]

Cashmere uses hardware support for maintaining coherence. Coalescing, in which updates from different processors on the same node are aggregated and applied together, greatly reduces overhead compared with processing each write request one by one. Computing diffs against twin pages on the coarse-grained blocks that Cashmere operates on is what allows this protocol to operate efficiently.[[#References|<sup>[7]</sup>]]
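The twin-and-diff idea can be illustrated with a small sketch. The function names and the byte-level diff format below are assumptions chosen for clarity; they are not Cashmere's actual data structures.

```python
def make_twin(page):
    """Snapshot the page before the first local write (the 'twin')."""
    return bytes(page)


def compute_diff(twin, page):
    """List the (offset, new value) pairs that changed since the twin was made."""
    return [(i, page[i]) for i in range(len(page)) if page[i] != twin[i]]


def apply_diff(home_page, diff):
    """Merge a writer's changes into the home copy of the page."""
    for offset, value in diff:
        home_page[offset] = value
```

Because only the modified bytes travel to the home node, two processors that write disjoint parts of the same page can both merge their changes without overwriting each other, for example:

```python
home = bytearray(8)
copy_a, copy_b = bytearray(home), bytearray(home)
twin_a, twin_b = make_twin(copy_a), make_twin(copy_b)
copy_a[2] = 7          # writer A touches byte 2
copy_b[5] = 9          # writer B touches byte 5
apply_diff(home, compute_diff(twin_a, copy_a))
apply_diff(home, compute_diff(twin_b, copy_b))
```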

==== Challenges faced by Cashmere and Shasta ====

Cashmere makes heavy use of the Memory Channel for communication between nodes. It depends on the programmer to maintain causality of messages after a processor releases a lock, so Cashmere assumes a global ordering of messages in order to work correctly. Moving Cashmere away from the Memory Channel is expensive, since protocol changes need to be made at the code level. Shasta, on the other hand, is designed for networks that support fast message passing between nodes. Shasta, though, faces the challenge of implementation: detailed knowledge of the compiler and the underlying processor is required to build a Shasta system. It is a challenge to move the design to another type of architecture as the number of processors that need to access memory increases. Cashmere, by contrast, has close to no dependence on the underlying processor architecture, provided it supports virtual memory, because it uses a trap-based mechanism to handle misses.[[#References|<sup>[7]</sup>]]

[[image:Shasta_cashmere_small_dataset.JPG|thumb|center|600px|alt=CC-NUMA.|Speedup comparison for Cashmere and Shasta for a small data set[[#References|<sup>[7]</sup>]].]]

[[image:Shasta_cashmere_large_dataset.JPG|thumb|center|600px|alt=CC-NUMA.|Speedup comparison for Cashmere and Shasta for a large data set[[#References|<sup>[7]</sup>]].]]

==Factors affecting the Performance of DSM==

===<b>Memory Consistency Model</b>===

A memory consistency model for a shared memory space defines the order in which memory accesses may be observed. The type of memory consistency model chosen affects the performance of the system. In DSM, using a strict consistency model makes programming easier; an example of a strict memory consistency model is sequential consistency. Under sequential consistency the result is the same as if all instructions were executed in some sequential order, and the instructions of each processor are executed in the program order specified for that processor. These properties of sequential consistency decrease the performance of parallel programs and cause a performance overhead for programs with a high degree of parallelism. A coarse sharing granularity also leads to false sharing, which is another performance issue that can cause overhead for software DSM.[[#References|<sup>[9]</sup>]]

<b>Improvement for Memory Consistency</b>

Using a memory consistency model cannot be avoided, and hence many relaxed consistency models were developed with the goal of improving the performance of software DSM systems. Release consistency showed better performance than most other relaxed consistency models. The number of messages sent also affects the performance of software DSM, because sending a message is more costly than in hardware DSM. In a lazy implementation of release consistency, all the messages are buffered until a release occurs and are then sent out as a single message, which decreases the communication overhead. The modifications made by a processor are also transmitted only to the processor that acquires the block, which decreases the amount of data sent as well as the number of messages.[[#References|<sup>[9]</sup>]]
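The message saving from buffering writes until a release can be sketched as follows. This is a simplified model under stated assumptions: the `Node` class, its counters and the dictionary used as a "write notice" are hypothetical, and a real lazy release consistency implementation tracks intervals and vector timestamps that are omitted here.

```python
class Node:
    """Toy node that batches its writes until it releases a lock."""

    def __init__(self):
        self.memory = {}        # local view of shared memory
        self.buffer = {}        # writes made since the last release
        self.messages_sent = 0  # number of network messages this node has sent

    def write(self, addr, value):
        # The write is applied locally and buffered; nothing is sent yet.
        self.memory[addr] = value
        self.buffer[addr] = value

    def release(self):
        # All buffered writes leave the node as one message.
        batch, self.buffer = self.buffer, {}
        if batch:
            self.messages_sent += 1
        return batch

    def acquire(self, batch):
        # Only the acquiring node receives and applies the modifications.
        self.memory.update(batch)
```

With three writes followed by a release, the releaser sends one message instead of three, and a node that never acquires the lock receives nothing.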

===<b>Cache Coherence Protocol</b>===

In a DSM system, when a change is made to a page that is cached in multiple locations, either the change has to be propagated to the other copies or the other copies have to be invalidated. The protocols are called write update or write invalidate, depending on which of the two is used to maintain a coherent view of shared data.

<b>Write Invalidate vs Write update:</b> A write invalidate protocol invalidates all the shared copies of a block when it is being modified. The disadvantage of the write invalidate protocol is that it suffers from false sharing: all copies are invalidated even when a different part of the block is modified, whether or not the modified part is used by the other processors.[[#References|<sup>[9]</sup>]]

In update protocols, all the shared copies of the block are updated immediately after a write. This reduces the cost of false sharing, but it updates the shared blocks even if they are not in use.
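The trade-off can be made concrete with a small simulation of two processors that repeatedly write different words of the same block. The function below is a hypothetical sketch (block size, counters and access format are assumptions), not a model of any specific DSM.

```python
def simulate(protocol, accesses, words_per_block=4):
    """Count misses and coherence messages for 'invalidate' or 'update'.

    accesses is a list of (processor, op, word_address), op being 'r' or 'w'.
    """
    valid = {}  # (processor, block) -> True if the processor has a valid copy
    stats = {"misses": 0, "invalidations": 0, "updates": 0}
    processors = {proc for proc, _, _ in accesses}
    for proc, op, word in accesses:
        block = word // words_per_block
        if not valid.get((proc, block), False):
            stats["misses"] += 1          # fetch a fresh copy of the block
            valid[(proc, block)] = True
        if op == "w":
            for other in processors - {proc}:
                if valid.get((other, block), False):
                    if protocol == "invalidate":
                        valid[(other, block)] = False
                        stats["invalidations"] += 1
                    else:
                        stats["updates"] += 1  # copy stays valid, data is pushed
    return stats


# Processor 0 writes word 0 and processor 1 writes word 1 of the same block.
false_sharing = [(0, "w", 0), (1, "w", 1)] * 3
```

Under `invalidate` every access in `false_sharing` misses, because each write invalidates the other processor's copy; under `update` only the first access of each processor misses, at the price of one update message per later write.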


<b>Snoopy vs Directory based:</b> | <b>Snoopy vs Directory based:</b> | ||

Traditionally for DSM snoopy and directory based are the two protocols used. Snoopy protocol provides scalability to point to point connections and is less scalable in bus based systems but it needs hardware support and is not widely used for software DSM. For data that is frequently read and written there is lot more traffic saving in using directory based protocol that snoopy. | Traditionally for DSM snoopy and directory based are the two protocols used. Snoopy protocol provides scalability to point to point connections and is less scalable in bus based systems but it needs hardware support and is not widely used for software DSM. For data that is frequently read and written there is lot more traffic saving in using directory based protocol that snoopy. [[#References|<sup>[9]</sup>]] | ||

<b>Organization of directory based protocol:</b> | <b>Organization of directory based protocol:</b> | ||

In directory based protocols, the performance of DSM depends mainly on the organization of the directory. There are two kinds of directory based protocols: memory based directory and cache based directory. In a memory based directory, the directory is placed in main memory. In a cache based directory, each cache holds a part of the directory. A cache based directory is scalable but very complicated to implement. A centralized memory based directory causes a bottleneck, because only one node holds the directory and many nodes may need the information at the same time. The centralized directory is also not scalable: the directory is far from most of the processors, and memory latency increases with distance from the processor, which further degrades performance.[[#References|<sup>[8]</sup>]]

<b>Physical location of the directory:</b>

If main memory is used to store the directory, it causes contention for main memory bandwidth, because directory information must be accessed along with the data in main memory. The type of storage used also affects performance: DRAM is dense but has slower access, while SRAM is less dense but faster. The physical location is therefore chosen based on the needs of the application.[[#References|<sup>[8]</sup>]]

Additional memory to maintain the directory is still a performance issue for directory based protocols. As the number of nodes increases, the amount of memory needed for the directory increases. The scalability of the protocol is limited by the complexity of keeping the copies coherent and by these memory requirements.[[#References|<sup>[9]</sup>]]
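The growth of directory memory with node count can be illustrated with a small calculation. The sketch below assumes a full-map directory with one presence bit per node for every block, and a 64-byte block size; both are assumptions chosen for illustration, and the function name is hypothetical.

```python
def full_map_overhead(num_nodes: int, block_bytes: int = 64) -> float:
    """Directory bits per block divided by data bits per block.

    Assumes a full-map format: one presence bit per node for each block.
    """
    directory_bits = num_nodes      # one presence bit per node (assumed format)
    data_bits = block_bytes * 8
    return directory_bits / data_bits


for n in (16, 64, 256, 1024):
    print(f"{n:5d} nodes -> {full_map_overhead(n):.1%} extra memory")
```

Under these assumptions the directory needs as much memory as the data itself once the node count reaches the number of bits in a block.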

===<b> Performance Improvement for Cache Coherence protocols</b>===

We first discuss how the directory based protocol can be improved, and then describe another protocol that is not widely used but performs better than a directory based protocol.

<b>Organization:</b>

A distributed directory can be used to remove the bottleneck and improve the performance of DSM over a centralized directory. The distributed approach spreads the directory information across many nodes so that no single node becomes a bottleneck, and it keeps the directory information of a block in the block's home node. This reduces memory latency, because the home node of a block is easy to find and the directory information for that block is found with it.[[#References|<sup>[8]</sup>]]
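One common way to make the home node easy to find is to derive it from the block address. The following is a minimal sketch of that idea, assuming block-interleaved placement; the constants <code>BLOCK_BYTES</code> and <code>NUM_NODES</code> and both function names are hypothetical and not taken from any particular system.

```python
BLOCK_BYTES = 64   # assumed block size
NUM_NODES = 8      # assumed number of nodes


def home_node(address: int) -> int:
    """Interleave blocks across nodes: block i is homed on node i mod NUM_NODES."""
    return (address // BLOCK_BYTES) % NUM_NODES


# One directory per node; each holds entries only for the blocks homed there.
directories = [dict() for _ in range(NUM_NODES)]


def directory_entry(address: int) -> dict:
    """Look up (or create) the directory entry at the block's home node."""
    block = address // BLOCK_BYTES
    home = directories[home_node(address)]
    return home.setdefault(block, {"state": "uncached", "sharers": set()})


directory_entry(100)["sharers"].add(3)   # node 3 caches the block containing address 100
print(home_node(100), directories[1])
```

Because the home is computed from the address, no node has to consult a central table to locate the directory information for a block.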

<b>Physical location:</b>

There is no complete remedy for this, but the directory information can be kept in a separate memory with separate wires for accessing it, so that directory accesses do not consume the bandwidth needed for data. The disadvantage of this method is the extra cost.[[#References|<sup>[8]</sup>]]

<b>Lock based protocol:</b>

A lock based cache coherence protocol is designed to eliminate the performance concerns caused by directory based systems in DSM. In a directory based system, the owner of a block is responsible for propagating changes to all other processors sharing the block. To avoid broadcasting the changes to all processors, a directory has to be maintained, which causes memory overhead. The lock based protocol avoids this by having the processors associated with a block actively fetch the modified block. This removes the need for a directory as well as the overhead of invalidation and update messages. The processors that share a block must know when to acquire a new value, and this can be determined using the release consistency model, which has shown better performance than most other relaxed consistency models. Hence the lock based protocol is more efficient and scalable than the directory based protocol extensively used in DSMs.[[#References|<sup>[9]</sup>]]
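The idea of sharers fetching modified blocks themselves, rather than being sent invalidations, can be sketched as follows. This is a simplified illustration and not the protocol of the cited work: the classes <code>Lock</code> and <code>Node</code>, the "write notice" set carried by the lock, and the dictionary standing in for home memory are all assumptions made for the example.

```python
class Lock:
    def __init__(self):
        self.write_notices = set()   # blocks modified under this lock


class Node:
    def __init__(self, home):
        self.home = home     # dict block -> value, stands in for home memory
        self.cache = {}      # local copies
        self.dirty = set()   # blocks written since the last release
        self.fetches = 0

    def acquire(self, lock):
        # Drop local copies named by the lock's write notices; no directory
        # and no invalidation messages from the writer are involved.
        for block in lock.write_notices:
            if block not in self.dirty:
                self.cache.pop(block, None)

    def read(self, block):
        if block not in self.cache:          # actively fetch from the home
            self.cache[block] = self.home[block]
            self.fetches += 1
        return self.cache[block]

    def write(self, block, value):
        self.cache[block] = value
        self.dirty.add(block)

    def release(self, lock):
        for block in self.dirty:             # flush to home, leave a notice
            self.home[block] = self.cache[block]
            lock.write_notices.add(block)
        self.dirty.clear()


home = {"x": 0}
a, b, lock = Node(home), Node(home), Lock()
b.read("x")                                  # b caches the old value
a.acquire(lock); a.write("x", 1); a.release(lock)
b.acquire(lock)                              # b learns that "x" changed
print(b.read("x"))                           # b fetches the new value itself
```

In this sketch a processor only sees a new value after it acquires the lock, which is the point at which release consistency requires the update to become visible.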

===<b>System overhead of DSM</b>===

In a software based DSM that manages memory across a distributed system, a lot of overhead is incurred in processing memory misses. The programmer is given the abstraction that a processor can directly access any memory location in the common address space. The DSM layer on each processor is responsible for this abstraction.

When an access miss occurs, a segmentation violation signal (SIGSEGV) is raised, and the DSM layer takes over to handle it. Each operation performed while handling this access miss counts as overhead. The overheads incurred in the violation handling process include the time spent entering and exiting the violation handler, the time spent executing it, and the interrupt time on the remote processor to retrieve the required page.

With optimizations that allow relaxed consistency models, the overhead becomes even larger, because multi-threaded applications that cannot afford a lax memory consistency model still require synchronization operations. Depending on the cache coherence protocol in use, operations may be aggregated so that multiple writes occur together, and all of the locks acquired for a write may have to be released before the next write can proceed.[[#References|<sup>[9]</sup>]]

Operations that use a diff and twin based mechanism for aggregating writes and changing the home node incur extra overhead. These overheads are discernible performance costs that affect the efficiency of DSM systems.

===<b>Load balancing and scheduling in DSM</b>===

A big challenge in a DSM is meta computing and its efficiency across a network of computers. Large computation tasks are performed by a Network of Workstations (NOW). The workstations in a NOW need to be assigned resources and processing duties, and the system must scale with the size of the given task. This requires a meta computing environment with provision for dynamic scheduling to achieve better performance and utilization.[[#References|<sup>[9]</sup>]]

The processing done at the various nodes of a NOW needs to be scheduled according to the distribution of data across those nodes. If the operations performed are heterogeneous across the nodes, a dynamic load balancing mechanism has to be used. One such scheduling methodology is loop scheduling. This dynamic protocol allows the meta computing logic to vary the amount of work done by the nodes of a cluster at run time. If an application involves multiple contexts of execution in a DOALL loop, the number of iterations performed by each processor can be changed so that the overall load is balanced across the processing nodes. The main disadvantage of this rather naive approach is its reliance on fine-grain allocation of work. If the instructions to be performed atomically by a single processor turn out to be heterogeneous, it may be impossible for an allocating mechanism to balance the load equally across all processors. Additional overhead can come from the time required to allocate, balance and enable remote communication for loop scheduling. This does not even account for the inherent heterogeneity of the underlying hardware and its unequal computing power, which can cause further load imbalance. In practical applications, static scheduling, which fixes the allocation at compile time, is also not an option, and can in fact be even worse than a dynamic scheduling decision. Static scheduling is however preferred in a simple embarrassingly parallel environment, where it has negligible loop allocation overhead.[[#References|<sup>[9]</sup>]]

The practical approach to scheduling jobs in a DSM is dynamic scheduling. The self scheduling mechanism allows each processor to fetch an iteration when it becomes idle. Its disadvantage is a lack of scalability, since the overhead involved in loop scheduling is proportional to the number of processors in the NOW.
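The difference between static scheduling and self scheduling can be seen in a small simulation. The sketch below is illustrative only: the iteration costs, the <code>fetch_overhead</code> parameter and both function names are assumptions, and the simulation ignores data placement entirely.

```python
import heapq


def static_schedule(costs, num_procs):
    """Contiguous, equal-sized chunks fixed ahead of time; returns the finish time."""
    chunk = -(-len(costs) // num_procs)          # ceiling division
    return max(sum(costs[p * chunk:(p + 1) * chunk]) for p in range(num_procs))


def self_schedule(costs, num_procs, fetch_overhead=0.0):
    """Each processor fetches one iteration whenever it becomes idle."""
    ready = [(0.0, p) for p in range(num_procs)]  # (time the processor is idle, id)
    heapq.heapify(ready)
    for cost in costs:
        idle_at, p = heapq.heappop(ready)         # earliest idle processor
        heapq.heappush(ready, (idle_at + fetch_overhead + cost, p))
    return max(t for t, _ in ready)


costs = [8, 8, 1, 1, 1, 1, 1, 1]                  # heterogeneous iterations
print(static_schedule(costs, 2))                  # one processor gets both long iterations
print(self_schedule(costs, 2))                    # idle processors pick up the slack
print(self_schedule(costs, 2, fetch_overhead=0.5))
```

With uneven iteration costs, self scheduling finishes sooner than the static split, but every fetch adds overhead, which is the cost that limits its scalability.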

The most efficient allocation and balancing scheme is affinity based scheduling, where a deterministic policy is used to allocate a particular task to a processor based on its affinity. A processor is assigned repeated executions of a loop based on its previous executions, which ensures local memory hits and minimizes remote page retrievals. This protocol uses per-processor local queues, which reduce the synchronization overhead required for communication between processors. Idle processors also grab tasks from loaded processors, which allows for a distributed scheduling structure.[[#References|<sup>[9]</sup>]]
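The combination of per-processor local queues and grabbing work from loaded processors can be sketched as below. This is a deliberately simplified model, not the published affinity scheduling algorithm: it takes one iteration at a time, the <code>speeds</code> list (iterations a processor completes per simulated step) is an assumption used to create imbalance, and all names are hypothetical.

```python
from collections import deque


def affinity_schedule(num_iters, num_procs, speeds):
    """Return {processor: [iterations executed]} for a simulated run."""
    chunk = -(-num_iters // num_procs)            # ceiling division
    # Each processor starts with the same contiguous chunk it would own on
    # every execution of the loop, so its data tends to stay local.
    queues = [deque(range(p * chunk, min((p + 1) * chunk, num_iters)))
              for p in range(num_procs)]
    executed = {p: [] for p in range(num_procs)}
    while any(queues):
        for p in range(num_procs):
            for _ in range(speeds[p]):            # faster processors do more per step
                if queues[p]:
                    executed[p].append(queues[p].popleft())    # local work first
                else:
                    victim = max(range(num_procs), key=lambda q: len(queues[q]))
                    if not queues[victim]:
                        break                                  # nothing left anywhere
                    executed[p].append(queues[victim].pop())   # grab from the tail
    return executed


print(affinity_schedule(8, 2, speeds=[3, 1]))
```

In the example, the faster processor finishes its own chunk first and then takes iterations from the tail of the slower processor's queue, so only the local queues are touched until an imbalance actually appears.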

The load balancing for a DSM varies between implementations, and is extremely application and industry specific. An ideal scheduling protocol can be rendered useless by differences in the computing power of the nodes in the underlying hardware.

===<b>Communication overhead in DSMs</b>===

The communication overhead can be considered the most expensive in terms of DSM performance. While processor speed is high, memory in a DSM is distributed, so inter-node communication incurs latency and consumes network bandwidth. This is a challenge but also leaves great room for improving DSM performance.[[#References|<sup>[9]</sup>]]

Communication in a DSM system can be improved at the application level. High level design decisions about the type of network protocol used play a large part. Relaxed memory consistency models, and other techniques such as efficient distribution of data to minimize communication, have reduced the effect of communication on DSM performance. The main overhead in DSM communication is the need to invoke interrupts and kernel operations, so communication techniques can also be improved at a lower level of abstraction to improve overall performance. Either TCP or UDP can be used, depending on the error tolerance of the application. Early implementations of the DSM had provisions for message passing and remote memory write, but not for interrupt-based remote communication.[[#References|<sup>[9]</sup>]]

A DSM should also have a robust buffer design for sending packets across the network. Bottlenecks due to slow network channels also reduce system performance. Data transfer to remote nodes can be done using DMA. One main problem with DMA is that translation between virtual and physical addresses cannot be done directly unless the kernel is invoked. Thus, data needs to be copied into a buffer in the OS kernel before a DMA transfer is done, which creates overhead.[[#References|<sup>[9]</sup>]]

Network interfaces (NI) also need to make sure that they are secure. NIs ensure protection by establishing communication between the two endpoints. But this poses a scalability problem: because of the limited memory in each NI, only a few processes can access the NI concurrently.

Communication can also be improved through arrival notification, which addresses the overhead of handling acknowledgements from many processors. This is done by simply polling the NI's status flag for an acknowledgement. The overhead of this operation can be reduced by increasing the time between checks. This way, the polling frequency can be set based on the application and its tolerance for overhead.[[#References|<sup>[9]</sup>]]
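The trade-off in choosing the polling frequency can be made concrete with a toy model. The sketch below uses simulated time units rather than a real network interface; the function name and its parameters are hypothetical.

```python
def poll_for_arrival(arrival_time, interval):
    """Return (number of polls, notification delay) for a simulated status flag.

    The flag is modelled as being set at `arrival_time`; it is checked once
    every `interval` time units.
    """
    polls = 0
    now = 0
    while True:
        now += interval
        polls += 1
        if now >= arrival_time:       # the flag is set once the message has arrived
            return polls, now - arrival_time


print(poll_for_arrival(100, 1))       # many checks, no added delay
print(poll_for_arrival(100, 30))      # few checks, arrival noticed late
```

A longer interval reduces the number of checks but delays the moment at which the arrival is noticed, so the right setting depends on how much delay the application tolerates.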

==Conclusions and future direction of DSM==

The DSM model has been improved greatly since its early design days. Changes have been made to improve communication between processors within a single node. Lock-based protocols tend to work better in a NOW based environment. The home based concept of holding data in a DSM allows for flexible placement of data blocks according to their frequency of use. Updates to home nodes are done eagerly, while other nodes fetch information lazily.[[#References|<sup>[9]</sup>]]

Research on improvements to the DSM system is mostly done in the areas of improving network performance and of optimizing and minimizing remote retrieval of blocks. Algorithms have been developed, like JIAJIA's memory organization scheme, where no page copy is required during the home migration phase. Further research has also been done on improving the affinity based scheduling of processor tasks.[[#References|<sup>[9]</sup>]]

The DSM will move away from trying to achieve a homogeneous protocol in performing its tasks. CPUs can also delegate intensive tasks to auxiliary processors like a Graphics Processing Unit (GPU) cluster. These accelerator memory components should not rely on the programmer to manage the data transfers between the CPU and the accelerators. This is done by allowing the CPUs to maintain a shared memory for accessing the objects in the accelerators' physical memory, but not vice versa. Thus, if a process or application requires an accelerator to perform an action, the physical memory can also be assigned and operated upon by the CPU. This allows for an elegant context switch between processors. Heterogeneous systems benefit from a data-centric programming model, where programmers control the data transfer between general purpose CPUs and GPUs. The asymmetry is taken advantage of, with all the configuration, coherence and consistency actions executed on the CPU. Since DSM is applied mostly in the field of supercomputing, data-centric computing methodologies are the future, and combining CPUs and GPUs as part of the DSM is a promising way ahead.[[#References|<sup>[11]</sup>]]

==Glossary==

<b>Distributed Shared Memory</b>- A class of memory architecture in which memories are physically separated but logically form a single entity in a shared address space.

<b>Non-Uniform Memory Access(NUMA)</b> - Memory design used in multiprocessors, where memory access times depend on the proximity of the data being accessed to the processor accessing it.

<b>Snoopy protocol</b>- Protocol in which cache coherence communication takes place via bus transactions, which are picked up by the processors that they are relevant to.

<b>Directory protocol</b>- Protocol of cache coherence where a directory is maintained, which holds information indicating which processor's cache holds data pertaining to a memory location.

<b>Cache coherent NUMA</b>- NUMA based architecture which has special-purpose hardware to maintain cache coherence. Uses inter-processor communication between cache controllers to keep a consistent memory image when more than one cache stores the same memory location.

<b>Shasta protocol</b>- A cache coherence protocol for DSMs that supports a shared address space in software in a cluster/distributed system. Shared data can be kept coherent at a fine granularity. Shasta implements this coherence by inserting inline code that checks the cache state of shared data before each load or store.

<b>Cashmere protocol</b>- Protocol that uses a virtual memory based mechanism at page level granularity to maintain cache coherence across processors. It can be considered a coarse-grained memory coherence protocol.

<b>Load balancing</b>- Methodology to distribute workload across multiple computers or a computer cluster.

<b>Network of Workstations(NOW)</b>- A loosely defined term for computer clusters, i.e. a system of loosely connected computers that work together so that in many respects they can be viewed as a single system.

<b>Graphics processing Unit(GPU)</b>- Specialized processor, intended to rapidly manipulate a data intensive input buffer, with a parallel processing paradigm. Can be used in embedded devices, mobile phones, or just as an auxiliary processor to the computer's CPU.

==References==

<ol>

<li>[http://www.hpl.hp.com/techreports/Compaq-DEC/CRL-98-6.pdf Comparative Evaluation of Fine- and Coarse-Grain Software Distributed Shared Memory]</li>

<li>[http://www.cesr.ncsu.edu/solihin/Main.html Fundamentals of Parallel Computer Architecture]</li>

<li>[http://www.cs.wayne.edu/~weisong/papers/shi00-jiajia.pdf Performance Optimization of Software Distributed Shared Memory Systems]</li>

<li>[http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-97-2.pdf Performance of Shasta Distributed Shared Memory Systems]</li>

<li>[http://delivery.acm.org/10.1145/1740000/1736059/p347-gelado.pdf?ip=152.14.220.57&acc=ACTIVE%20SERVICE&CFID=77654166&CFTOKEN=41817975&__acm__=1334607466_39fb7a7d962905dfcdf4c20dc36b621c An Asymmetric Distributed Shared Memory Model for Heterogeneous Parallel Systems]</li>

</ol>

Latest revision as of 22:12, 26 April 2012

Evolution of DSM

The Shared Memory system is not scalable for a large network with multiple nodes, and Distributed Memory systems are complex to program and less efficient. Distributed Shared Memory (DSM) was built to remove the disadvantages of both systems and combine their advantages.

The Shared Memory system has a group of processors sharing a global memory, which allows any processor to access any memory location[1]. In Distributed Memory systems each cluster (i.e. each processor) has its own memory, and the clusters use message passing to communicate[2]. The DSM, on the other hand, has several processors sharing the same address space, i.e. a location in memory has the same physical address for all processors. Unlike the Shared Memory system, a DSM does not actually use a global memory that is accessible by all processors. Instead, it is a logical abstraction of a single address space over different memory locations, which can be accessed by all processors.

From the above pictures it can be seen that in Distributed Memory systems each processor has its own memory. In Distributed Shared Memory each processor also has its own memory, but there is an abstraction that all processors have the same view of memory, i.e. the main memory of a cluster of processors is made to look like a single memory with a single address space. Distributed Memory systems scale to a large number of processors but are difficult to program because of message passing. Shared Memory, on the other hand, is less scalable but easy to program, as all processors can access each other's data, which makes parallel programming easier. DSM therefore offers a more scalable and less expensive model by supporting the abstraction of shared memory on top of message passing. The DSM allows the programmer to share and use variables without having to worry about their management, so a processor can access an address held in another processor's main memory. End users can use the shared memory without knowing about the message passing. The idea is to make inter-processor communication invisible to the user.

A DSM system in general has clusters connected to an interconnection network. A cluster can be a uni-processor or multi-processor system with local memory and a cache to reduce memory latency. The local memory of a cluster is entirely or partially mapped to the DSM global memory. A directory is maintained that records the location and state of each data block. The directory can be organized as a linked list, a doubly linked list or a full map, and the organization of the directory affects the scalability of the system[5].

A DSM system can provide the shared memory abstraction in software, which is cost efficient because nothing in the cluster hardware has to change. Some systems provide the abstraction in hardware, which costs extra but performs better than software based DSM[4].

Challenges of using a Bus Based protocol for a Distributed Memory

The Distributed Shared Memory (DSM) architecture requires a shared memory space. Message passing over interconnect networks is the preferred mode of communication to maintain coherence in a DSM. This is because:

- Physically, the DSM is designed to allow a large number of nodes to interact with each other. With a bus-based system, the bus simply becomes too long to connect the various nodes of a DSM. In a bus based multiprocessor with a smaller set of nodes sharing a common memory, communication latency is not discernible. Also, because the nodes of a bus-based system use snoopy protocols, clock frequency synchronization is critical. With a larger number of nodes and a longer bus, maintaining clock frequency becomes a bigger challenge.

- Whatever cache coherence protocol is used, a larger number of nodes leads to a larger number of transactions on the bus. Since the bus is a shared channel for transferring cache blocks/lines, an increase in bus traffic causes greater communication latency.

- It is preferable to design a DSM on a non-uniform memory access (NUMA) based architecture, because that allows memory to be addressed globally but placed locally at each node.

For the above mentioned reasons, a message passing model is used for communication between nodes. A message passing model augmented with a point to point connection between nodes is also preferable. The following section explains why.

Coherence implementation on the DSM

As explored in the previous section, it is not preferable to use a monolithic bus for communication. Instead, short wires are used for point-to-point interconnections. As a result, these wires can be clocked at a higher frequency, and the available bandwidth increases as well.

As illustrated in the figure, a point-to-point interconnection is coupled with a directory based protocol for a DSM. Since the memory is distributed, a directory can be maintained that records which caches hold a copy of each memory block. Each time a cache in a node performs a read or write, depending on whether it hits or misses, the node contacts the directory and then sends updates or invalidation signals only to the nodes that hold the block, thus avoiding unnecessary traffic.
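The way a directory limits coherence traffic to the nodes that actually hold a block can be sketched as below. This is a minimal illustration assuming an invalidation-based protocol with a sharer set per block; the class and method names are hypothetical, and block states are omitted.

```python
class Directory:
    def __init__(self):
        self.sharers = {}    # block -> set of node ids holding a copy

    def read_miss(self, block, node):
        """Record that `node` now holds a copy of `block`."""
        self.sharers.setdefault(block, set()).add(node)

    def write_miss(self, block, node):
        """Return the nodes to invalidate; the writer becomes the sole holder."""
        targets = self.sharers.get(block, set()) - {node}
        self.sharers[block] = {node}
        return targets


d = Directory()
d.read_miss(7, node=0)
d.read_miss(7, node=3)
print(d.write_miss(7, node=3))   # only node 0 needs an invalidation message
print(d.write_miss(9, node=1))   # block 9 has no sharers, so no messages are sent
```

With a bus, every node would observe both writes; with the directory, messages go only to the nodes listed as sharers.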

Hardware DSM

To build a hardware DSM we need special kinds of network interfaces and cache coherence circuits. There are three prominent groups of hardware DSM systems:

- Cache Coherent Non Uniform Memory Architecture (CC-NUMA)

- Cache only Memory Architecture(COMA)

- Reflective Memory systems

CC-NUMA, being the most important, is discussed here. COMA and Reflective Memory systems are explained in detail in [5] for further reading.

CC-NUMA

A CC-NUMA architecture has its shared virtual address space distributed among the local memories of the various clusters, so that the local processor as well as other processors in the network can access it. Access times differ, as it is a non-uniform memory architecture. "The address space of the architecture maps onto the local memories of each cluster in a NUMA fashion[5]". The cache in each cluster is used to replicate blocks of memory that are held in remote hosts.

There is a fixed physical memory location for each data block in CC-NUMA. A directory based architecture is used in which each cluster holds an equal part of the system's shared memory, which is called the home property. Various coherence protocols and memory consistency models, such as weak consistency and processor consistency, are implemented in CC-NUMA. CC-NUMA is simple and cost effective, but when multiple processors want to access the same block in succession there can be a large drop in performance[6].

Hardware DSM fares better than software DSM in terms of performance, but the cost of the hardware is very high, so changes are being made in the implementation of software DSM to improve its performance. This article therefore deals in detail with software DSM, its performance, and measures to improve that performance.

Software DSM

The software components of a DSM form a critical part of the DSM application. Robust software design decisions help the DSM system work efficiently. A good software component for a DSM depends on factors like portability to different operating systems, efficient memory allocation, and coherence protocols that make sure the data is kept up to date. As we shall see further, in modern day DSMs the software needs to co-operate with the underlying hardware in order to ensure ideal system performance.[9]

In a distributed shared memory, it is imperative to have a software component that eases the burden on the programmer of writing message passing programs. The software distributed shared memory is responsible for actually providing the abstraction of a shared memory on top of the hardware.

In the early days of DSM development, a so-called "coarse-grained" approach was implemented which used virtual memory based pages. Page faults caused invalidations and updates to occur. The challenge with this model is that synchronization within a page causes great overhead.[9]

The coarse-grained approach is more a VM-based approach while the fine-grained approach is mostly an instrumentation-based approach. This section looks at how fine-grained and coarse grained approaches perform under similar underlying hardware. A good example of a fine-grained approach is the Shasta approach, while an example for the coarse-grained approach is the Cashmere approach.

Shasta protocol

It is shown that Shasta performs better in applications that involve synchronization at a finer level, while Cashmere, predictably, performs better on applications that have a coarse level synchronization structure. At granularity levels larger than a cache line, Shasta performs better because Shasta has more useful data in the form of pages owing to its finer granularity. If large amounts of private data need to be synchronized, then the coarser Cashmere is preferred. In case of false sharing, Cashmere, which allows aggregation of protocol operations, performs better. False sharing is defined as misses on memory/caches that occur because multiple threads access different words residing in the same cache block. But in case of applications that have a high level of false write-write sharing, page based finer protocols perform better, because the software overhead required to maintain coherence is removed. This is because coherence within nodes is managed by the hardware itself.[9]

Shasta uses an interesting approach for inter-node and intra-node communication. Firstly, it uses inline checks to determine whether misses occur, and processes them in software. The shared address space is divided into blocks, whose size can differ between systems. The blocks are further divided into lines, and each line maintains its state information in a state table. These checks for misses take up a mere 7 instructions. The stack and memory local to a thread are not checked because they are not shared among nodes. The checks are optimized by aggregating checks for nearby blocks.

The code first checks whether the target address is in shared memory, and if it is not, goes ahead with executing the rest of the code. Otherwise, as shown, it checks the state table entry for the location of the block. Optimizations made to arrive at this code include not saving or restoring registers, and looking for unused registers to perform the operation. Stack pointers and global pointers, which correspond to data private to a thread, are not checked.[10]
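The logic of such an inline check can be sketched in a high-level language. The example below only mirrors the two steps described above (shared-range test, then state table lookup); it is not Shasta's actual instruction sequence, and <code>SHARED_BASE</code>, <code>LINE_BYTES</code>, the state names and <code>handle_miss</code> are all hypothetical.

```python
SHARED_BASE = 0x1000_0000    # hypothetical start of the shared address range
LINE_BYTES = 64              # hypothetical line size
INVALID, SHARED, EXCLUSIVE = range(3)

state_table = {}             # line index -> state; missing lines count as INVALID


def handle_miss(line, needed_state):
    """Stand-in for the software protocol handler that fetches the line."""
    state_table[line] = needed_state


def checked_load(memory, address):
    """Load with the miss check that would be inserted before the real load."""
    if address >= SHARED_BASE:                     # private data skips the check
        line = (address - SHARED_BASE) // LINE_BYTES
        if state_table.get(line, INVALID) == INVALID:
            handle_miss(line, SHARED)              # processed in software
    return memory.get(address, 0)


memory = {16: 7, SHARED_BASE + 8: 42}
print(checked_load(memory, 16))                    # private address, no table lookup
print(checked_load(memory, SHARED_BASE + 8))       # shared address, miss handled first
```

The private-address path costs a single comparison, which is why excluding stack and thread-local data from the checks matters for overhead.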

There are challenges that are posed with the above approach. Sometimes, these checks for misses create a huge overhead, often exceeding the time taken for sequential execution itself!

Optimization for improving performance of Shasta

There is an efficient optimization technique for communication between and within nodes. Within a node, race conditions are avoided by locking blocks individually during coherence protocol operations. Instead of relying on a bus based system within a node, messages are sent between nodes. Processes must also wait until all pending operations on the node are complete. If the program already has provisions for handling race conditions, this wait time before release can be removed. Messages from other processors are detected through polling. No interrupt based mechanism is used for message handling because of the high cost associated with it. This way, the processor is free to do other tasks. Polling is done when waiting for messages, and the polling location is common to all processors within a node, which is very efficient. Also, just three instructions are needed to do the polling.[7]

Cashmere protocol

Cashmere is purely a page-based protocol for a distributed shared memory system. It requires the application programmer to handle data race conditions. Shared memory accesses are handled using a lock mechanism of 'Acquire' and 'Release'. As the name suggests, 'Acquire' gains access to shared data, while 'Release' grants access to data it has previously been holding. Invalidations are sent during the release operation, and its effects are only seen during the next acquire, during which any other operations that depend on that update can proceed with acquiring the lock. Hence, Cashmere forces the programmer to take into account these factors.[7]

Cashmere uses the Memory Channel (MC) network to maintain directories that hold information about its constituent pages. Page faults are used to trigger requests for an up to date copy of the block. Modifications made by each node as a result of writes are also tracked by the directory. A per-processor dirty list is maintained that holds all the updates made by a processor to a particular page. If the list of writers does not include the home node, ownership of the home is transferred to the processor currently writing it. And if that processor is the only one holding a copy of that block, it is moved to an exclusive state.[7]

Optimization for improving performance of Cashmere

Cashmere uses the concept of a twin to keep track of local updates to a copy of the block. The twin page is created when the processor that is the home node does not hold the exclusive copy of the block. When a release is done, the twin is compared to the newly written page. The differences are applied to the home node, which then holds the clean copy of the block. The processor performing the release sends write updates to all dirty sharers of the block. The releaser downgrades the write permission of all copies holding the dirty version of the block. Then, the list of all nodes that performed a write on the block is cleared.[7]
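The twin and diff mechanism described above can be sketched in a few lines. This is a generic illustration of the technique, assuming a page is modelled as a list of words; it is not Cashmere's implementation, and the function names are hypothetical.

```python
def make_twin(page):
    """Copy the page before the first local write."""
    return list(page)


def make_diff(twin, page):
    """Words that changed since the twin was made: {offset: new value}."""
    return {i: new for i, (old, new) in enumerate(zip(twin, page)) if old != new}


def apply_diff(home_page, diff):
    """Merge a diff into the home copy of the page."""
    for offset, value in diff.items():
        home_page[offset] = value


home = [0] * 8
local_a, local_b = list(home), list(home)        # two nodes hold copies
twin_a, twin_b = make_twin(local_a), make_twin(local_b)

local_a[2], local_a[6] = 5, 9                    # node A writes two words
local_b[0] = 1                                   # node B writes a different word

apply_diff(home, make_diff(twin_a, local_a))     # at A's release
apply_diff(home, make_diff(twin_b, local_b))     # at B's release
print(home)
```

Because only the changed words are sent, two nodes that write different words of the same page can both update the home copy without overwriting each other.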

Cashmere uses hardware support for performing its coherence. The concept of coalescing, where updates from different processors on the same node are aggregated and updated, allows much reduced overhead instead of processing each write request one by one. The concept of diffs on these twin pages on the coarse grained blocks that Cashmere operates on is what allows this protocol to operate efficiently.[7]

Challenges faced by Cashmere and Shasta

Cashmere makes heavy use of the Memory Channel for communication between nodes. It depends on the programmer to maintain causality of messages after a processor releases the lock. Thus Cashmere assumes global ordering of messages in order to work correctly. Moving Cashmere away from the MC network is too expensive, since the protocol changes would need to be made at the code level. Shasta, on the other hand, is designed for networks that support fast message passing between nodes. Shasta, though, faces the challenge of implementation: detailed know-how of the compiler and the underlying processor is required to build a Shasta system. It is also a challenge to move the design to another type of architecture as the number of processors that need to access memory increases. Cashmere, by contrast, has close to no dependence on the underlying processor architecture, because it relies on virtual memory support and a trap-based mechanism to handle misses.[7]

Factors affecting the Performance of DSM

Memory Consistency Model

A memory consistency model in a shared memory space defines the order in which memory accesses may be observed. The type of memory consistency model chosen affects the performance of the system. In DSM, using a strict consistency model makes programming easier; an example of a strict memory consistency model is sequential consistency. Under sequential consistency the result is the same as if all the instructions were executed in some sequential order, with the instructions of each processor appearing in the program order specified for that processor. These properties of sequential consistency decrease the performance of parallel programs and add overhead for programs that have a higher degree of parallelism. The coarse granularity of sharing also leads to false sharing, which is another source of overhead for software DSM.[9]

Improvement for Memory Consistency

Using a memory consistency model cannot be avoided, and hence many relaxed consistency models were developed with the goal of improving the performance of software DSM systems. Release consistency showed better performance than most other relaxed consistency models. The number of messages sent also affects the performance of software DSM, as sending a message is more costly than in hardware DSM. In a lazy implementation of release consistency, all the messages are buffered until a release occurs and are then sent out as a single message, which decreases the communication overhead. The modifications made by a processor are also transmitted only to the processor that acquires the block, which decreases the amount of data sent as well as the number of messages.[9]
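
The buffering idea can be illustrated with a small model that counts messages. It is only a sketch under simplifying assumptions: the `Network`, `eager_writes` and `LazyWriter` names are hypothetical, a message is just a dictionary of address to value, and locks and acquirers are left out.

```python
class Network:
    """Records every message so the two strategies can be compared."""
    def __init__(self):
        self.messages = []

    def send(self, payload):
        self.messages.append(payload)

def eager_writes(net, writes):
    """Send one message per write as soon as it happens."""
    for addr, value in writes:
        net.send({addr: value})

class LazyWriter:
    """Buffer writes locally and flush them as a single message at release."""
    def __init__(self, net):
        self.net = net
        self.pending = {}

    def write(self, addr, value):
        self.pending[addr] = value      # a later write to addr replaces an earlier one

    def release(self):
        if self.pending:
            self.net.send(dict(self.pending))
            self.pending.clear()

writes = [(0, 1), (4, 2), (0, 3)]

eager_net = Network()
eager_writes(eager_net, writes)         # three separate messages

lazy_net = Network()
writer = LazyWriter(lazy_net)
for addr, value in writes:
    writer.write(addr, value)
writer.release()                        # one combined message
```

In this model the eager version sends one message per write, while the lazy version sends a single message that carries only the final value of each address.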

Cache Coherence Protocol

In a DSM system, when a change is made to a page that is cached in multiple locations, either the change has to be propagated to the other copies or the other copies have to be invalidated. The protocols are called write update or write invalidate, depending on which approach is followed to maintain a coherent view of shared data.

Write Invalidate vs Write update: The write invalidate protocol invalidates all the shared copies of a block when it is being modified. Its disadvantage is that it causes a lot of false sharing: all copies are invalidated even if other processors use a different part of the block from the one being modified.[9]

In the case of update protocols, all the shared copies of the block are updated immediately after a write. This reduces false sharing, but it updates the shared copies even if they are not in use.

Therefore the type of protocol should be chosen based on the application: write invalidate can be used for applications with sequential sharing, and write update can be used for applications with tight sharing.
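
The trade-off can be made concrete with a toy message counter for a single block. The model is an assumption made for illustration (one message per miss, one message per invalidated or updated sharer), and `count_messages` is a hypothetical helper, not part of any real DSM system.

```python
def count_messages(trace, protocol):
    """Count coherence messages for one block.

    trace    -- list of (processor, op) pairs, op is "r" or "w"
    protocol -- "invalidate" or "update"
    """
    sharers = set()
    messages = 0
    for proc, op in trace:
        if proc not in sharers:
            messages += 1                    # miss: fetch the block
            sharers.add(proc)
        if op == "w":
            messages += len(sharers - {proc})  # notify every other sharer
            if protocol == "invalidate":
                sharers = {proc}             # other copies are dropped
    return messages

# Sequential sharing: P0 finishes all its writes before P1 starts.
sequential = [(0, "w")] * 3 + [(1, "w")] * 3
# Tight sharing: P1 reads every value that P0 writes.
tight = [(0, "w"), (1, "r")] * 3

seq_inv = count_messages(sequential, "invalidate")
seq_upd = count_messages(sequential, "update")
tight_inv = count_messages(tight, "invalidate")
tight_upd = count_messages(tight, "update")
```

Under these assumptions invalidate needs fewer messages for the sequential trace, because the stale copy is dropped once, while update needs fewer for the tight trace, because the reader never has to refetch the block.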

Snoopy vs Directory based: Traditionally, snoopy and directory based protocols are the two kinds used for DSM. A snoopy protocol can scale over point-to-point connections and is less scalable in bus based systems, but it needs hardware support and is not widely used for software DSM. For data that is frequently read and written, a directory based protocol saves considerably more traffic than a snoopy one.[9]

Organization of directory based protocol: In directory based protocols the performance of DSM depends mainly on the organization of the directory. There are two kinds of directory based protocols: memory based directory and cache based directory. In a memory based directory the directory is placed in the main memory. In a cache based directory each cache holds a part of the directory. A cache based directory is scalable but very complicated to implement. A centralized memory based directory causes a bottleneck, as only one node holds the directory while many nodes may need its information at the same time. The centralized directory is also not scalable: it is far away from most of the processors, and since memory latency increases with distance from the processor, this further affects the performance of the system.[8]

Physical location of the directory: If main memory is used for storing the directories, it causes contention for main memory bandwidth, as directory information has to be accessed along with data from the main memory. The type of storage used is also a performance concern: DRAM is dense but slower to access, while SRAM is less dense but faster. Hence a different physical location is chosen based on the needs of the application.[8]

The additional memory needed to maintain the directory is still a performance issue for directory based protocols. As the number of nodes increases, the amount of memory needed for the directory increases. The scalability of the protocol is limited by the complexity of keeping the copies coherent and by these memory considerations.[9]
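
As a rough illustration of how the directory grows with the number of nodes, the sketch below assumes a full bit-vector directory, that is, one presence bit per node for every memory block. That organization is an assumption chosen for the example; the text above does not state which directory format is used, and `directory_overhead` is a hypothetical helper.

```python
def directory_overhead(num_nodes, block_bytes):
    """Directory bits per data bit for a full bit-vector directory.

    Assumes one presence bit per node for each block and ignores state bits.
    """
    return num_nodes / (block_bytes * 8)

# With 64-byte blocks, the overhead grows linearly with the node count.
small = directory_overhead(64, 64)     # 64 bits of directory per 512 bits of data
large = directory_overhead(256, 64)    # 256 bits of directory per 512 bits of data
```

Under this assumption, quadrupling the number of nodes quadruples the directory storage needed per block.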

Performance Improvement for Cache Coherence protocols

We first discuss how the directory based protocol can be improved, and then describe another protocol that is not widely used but performs better than a directory based protocol.

Directory based protocol:

Organization: A distributed directory approach can be used to remove the bottleneck and improve the performance of DSM over a centralized directory. The distributed approach spreads the directory information over many nodes, so that no single node becomes a bottleneck, and keeps the directory information of a block at its home node. This reduces memory latency, as the home node of a block is easy to find and the directory information for that block is therefore easy to locate.[8]
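
A minimal sketch of a distributed directory is shown below. The interleaved mapping from page number to home node, the 4096-byte page size and the `DistributedDirectory` class are all assumptions made for illustration; real systems may assign home nodes differently.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def home_node(address, num_nodes, page_size=PAGE_SIZE):
    """Map an address to its home node by interleaving pages across nodes."""
    return (address // page_size) % num_nodes

class DistributedDirectory:
    """Each node stores directory entries only for the pages it is home to."""
    def __init__(self, num_nodes):
        self.num_nodes = num_nodes
        self.slices = [dict() for _ in range(num_nodes)]

    def add_sharer(self, address, node):
        """Record that `node` caches the page; return the home that was updated."""
        page = address // PAGE_SIZE
        home = home_node(address, self.num_nodes)
        self.slices[home].setdefault(page, set()).add(node)
        return home

    def sharers(self, address):
        """Look up the sharer set at the page's home node."""
        page = address // PAGE_SIZE
        home = home_node(address, self.num_nodes)
        return self.slices[home].get(page, set())

directory = DistributedDirectory(num_nodes=4)
updated_home = directory.add_sharer(8192, node=3)   # page 2 lives on node 2
```

Because the home is computed directly from the address, any node can find the right directory slice without consulting a central table.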

Physical location: There is no clear-cut improvement for this, but a separate memory can be maintained for directory information and accessed over separate wires, so that directory accesses do not occupy the bandwidth needed for data. The disadvantage of this method is the extra cost.[8]

Lock based protocol: A lock based cache coherence protocol is designed to eliminate the performance concerns caused by directory based systems in DSM. In the directory based system the owner of the block has the responsibility of propagating changes to all other processors sharing the block. To avoid broadcasting the changes to all the processors, a directory has to be maintained, which causes memory overhead. To avoid this problem, the lock based protocol has the processors associated with a block actively fetch the modified block. This removes the need for a directory as well as the overhead of invalidation and update messages. The processors that share a block need to know when they should acquire a new value, and this can be done using the release consistency model, which, as noted above, performs better than most other relaxed consistency models. Hence the lock based protocol is more efficient and scalable than the directory based protocol extensively used in DSMs.[9]

System overhead of DSM

When a software based DSM is used to manage memory in distributed systems, a lot of overhead is incurred in processing memory misses. The programmer is given the abstraction that the processor can access any memory location in the common address space directly. The DSM layer of the processor is responsible for this abstraction.

When an access miss occurs, a segmentation violation signal (SIGSEGV) is raised, and the DSM layer takes over to process it. Each atomic operation performed as part of handling this access miss can be considered an overhead. The overheads incurred in the violation handling process include the time spent entering, executing and exiting the violation handler, and the interrupt time on the remote processor to retrieve the required page.
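
The miss-handling path can be modelled without real signals, as in the sketch below. It is a simulation only: the `DsmNode` class is hypothetical, a dictionary lookup stands in for the SIGSEGV trap, and the two counters simply tally how often each overhead named above is incurred.

```python
class DsmNode:
    """Toy DSM layer: local pages are a dict, a missing key models a page fault."""
    def __init__(self, remote_pages):
        self.local = {}
        self.remote = remote_pages          # stands in for pages held by other nodes
        self.overhead = {"handler": 0, "remote_interrupt": 0}

    def read(self, page_no):
        if page_no not in self.local:       # access miss: the DSM layer takes over
            self._handle_fault(page_no)
        return self.local[page_no]

    def _handle_fault(self, page_no):
        self.overhead["handler"] += 1           # entering and exiting the handler
        self.overhead["remote_interrupt"] += 1  # interrupting the remote processor
        self.local[page_no] = self.remote[page_no]

node = DsmNode({0: b"page zero", 1: b"page one"})
node.read(0)    # miss: fault handled, page fetched
node.read(0)    # hit: no overhead
node.read(1)    # miss
```

Only the first access to each page pays the handler and remote interrupt cost in this model; later accesses are served locally.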

With optimizations that allow relaxed consistency models, the overhead becomes even larger, because synchronization operations are required in multi-threaded applications that cannot afford a lax memory consistency model. Depending on the cache coherence protocol being used, operations may be aggregated where multiple writes occur, and all of the locks acquired to execute a write operation may have to be released before the next write can be done.[9]

In operations that use a diff and twin based mechanism for aggregating writes and changing the home node, extra overhead is consumed. These overheads are discernible performance costs that affect the efficiency of DSM systems.

Load balancing and scheduling in DSM

A big challenge faced in a DSM is meta computing and its efficiency across a network of computers. Important large computation tasks are performed by a Network of Workstations (NOW). These NOWs need to be assigned resources and processing duties, and must be scaled according to the size of the given task. This requires a meta computing environment with provision for dynamic scheduling to achieve better performance and utilization.[9]

The processing done at the various nodes belonging to a NOW needs to be scheduled according to the distribution of data across those nodes. If the operations performed are heterogeneous across the nodes, then a dynamic work and load balancing mechanism is to be used. One such scheduling methodology is loop scheduling. This dynamic protocol allows the meta computing logic to vary the amount of work done by the nodes belonging to a cluster. If a particular application involves multiple contexts of execution in a DOALL loop, then it is possible to change the number of iterations performed by each processor, such that the overall load is balanced across the processing nodes. The main disadvantage of this rather naive approach is its reliance on fine-grained allocation of resources. If the instructions to be performed atomically by a single processor turn out to be heterogeneous, it may be impossible for an allocating mechanism to balance the load equally across all processors. Additional overhead can come from the time required to allocate, balance and enable remote communication for loop scheduling. This does not even account for the inherent, practical heterogeneity of the underlying hardware and its unequal computing power, which can cause further load imbalance. In practical applications, static scheduling, which fixes the allocation at compile time, is also not an option, and can in fact be even worse than a dynamic scheduling decision. Static scheduling is, however, preferred in a simple embarrassingly parallel environment, where it has negligible loop allocation overhead.[9]

The practical approach to scheduling jobs in the DSM is dynamic scheduling. The self scheduling mechanism allows each processor to fetch an iteration when it becomes idle. The disadvantage of this is its lack of scalability, with the overhead involved in loop scheduling being proportional to the number of processors in the NOW.
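
Self scheduling can be sketched with threads standing in for processors. This is an illustrative model rather than code from a DSM system: the `self_schedule` function is hypothetical, and a lock-protected counter plays the role of the shared loop index that every idle processor has to consult.

```python
import threading

def self_schedule(num_iterations, num_workers, body):
    """Run body(i) for every i; each idle worker fetches the next iteration.

    Returns the number of fetches made by each worker, including the final
    fetch that tells the worker there is no work left.
    """
    next_iter = 0
    lock = threading.Lock()
    fetches = [0] * num_workers

    def worker(wid):
        nonlocal next_iter
        while True:
            with lock:                  # the shared counter is the contention point
                i = next_iter
                next_iter += 1
                fetches[wid] += 1
            if i >= num_iterations:
                return
            body(i)

    threads = [threading.Thread(target=worker, args=(w,)) for w in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return fetches

results = []
fetch_counts = self_schedule(20, 4, results.append)
```

Every iteration is executed exactly once, but each one costs a trip through the shared counter, which is where the scheduling overhead of this scheme comes from.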

The most efficient allocation and balancing scheme is affinity based scheduling, where a deterministic policy is used to allocate a particular task to a processor based on its affinity. A processor is assigned repeated executions of a loop based on its previous executions, thus ensuring local memory hits and minimizing remote page retrievals. This protocol uses per-processor local queues, which reduce the synchronization overhead required for communication between processors. Idle processors also grab tasks from loaded processors, thus allowing for a distributed scheduling structure.[9]
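
The per-processor queues and task grabbing can be sketched as follows. The details are assumptions for illustration: `affinity_schedule` and `next_task` are hypothetical names, iterations are split into contiguous chunks, and an idle processor takes one task from the tail of the most loaded queue.

```python
from collections import deque

def affinity_schedule(num_iterations, num_procs):
    """Give each processor a contiguous chunk of iterations in a local queue."""
    queues = [deque() for _ in range(num_procs)]
    chunk = -(-num_iterations // num_procs)      # ceiling division
    for i in range(num_iterations):
        queues[min(i // chunk, num_procs - 1)].append(i)
    return queues

def next_task(queues, proc):
    """Take from the local queue first; if it is empty, grab from the busiest one."""
    if queues[proc]:
        return queues[proc].popleft()
    victim = max(range(len(queues)), key=lambda p: len(queues[p]))
    if queues[victim]:
        return queues[victim].pop()              # take from the tail of the victim
    return None                                  # no work left anywhere

queues = affinity_schedule(8, 2)                 # P0 gets 0..3, P1 gets 4..7
first = next_task(queues, 0)                     # P0 works on its own chunk
own = [next_task(queues, 1) for _ in range(4)]   # P1 drains its local queue
stolen = next_task(queues, 1)                    # P1 is idle, grabs from P0
```

If the same assignment is reused on every execution of the loop, each processor keeps touching the pages it already holds, and the shared structure is only consulted when a processor runs out of local work.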

The load balancing for a DSM varies between implementations, and is extremely application and industry specific. An ideal scheduling protocol can be rendered useless due to the different computing powers of the nodes in the underlying hardware.

Communication overhead in DSMs

The communication overhead can be considered the most expensive factor in DSM performance. While processor speed is high, the distributed nature of memory in the DSM means that inter-node communication consumes network bandwidth and adds latency. This is a challenge, but it also leaves considerable room for improving DSM performance.[9]

Communication in a DSM system can be improved at the application level. High level design decisions about the type of network protocol being used play a huge part. Using relaxed memory consistency models, along with other techniques such as distributing data efficiently to minimize communication, has reduced the effect of communication on DSM performance. The main overhead involved in communication in a DSM system is the need to invoke interrupts and kernel operations. Thus, communication techniques can also be improved at a lower level of abstraction to improve overall performance. Either TCP or UDP can be used, depending on the error tolerance of the application. Early implementations of DSM had provisions for message passing and remote memory writes, but not for interrupt-based remote communication.[9]