CSC/ECE 506 Spring 2012/12b ad: Difference between revisions

Latest revision as of 04:30, 25 April 2012

On-chip Interconnects

Introduction

As the number of processors in a multiprocessor system grows, more consideration must be given to how those processors communicate. With current technology, shared-memory arrangements are quite effective for a small number of processors. As the number of processors increases, however, contention for available resources (memory, bus time, etc.) also increases, which hurts system performance. At the same time, keeping all of the processors on the same physical piece of hardware is convenient, and their physical proximity helps performance. It is therefore desirable to design hardware in which many cores share a die while still obtaining the performance gains possible with interconnection networks. On-chip interconnects have recently attracted more research, and this article explores the state of those efforts.

Topologies

The intricacies of semiconductor design and layout afford many possible layouts when creating networking topologies on SoCs. Designs must, however, be amenable to fabrication on a two-dimensional substrate, which in practice limits the use of higher-dimensional topologies such as hypercubes<ref name="GrotKeckler">Grot and Keckler, Scalable On-Chip Interconnect Topologies</ref>.

Rings

Ring topologies can be effective when the “number of cores is still relatively small but is larger than what can be supported using a bus” [Solihin 409]. Such cases are considered to use “medium-scale” interconnection networks.

Meshes

2D mesh networks are used once the network topology grows to “larger-than-medium” scale. This is especially true when the dimensions are divisible by a factor of two, as the reduction in the number of hops versus a traditional ring network can be tremendous.

For example, in the worst case a sixteen-core multiprocessor connected as a bidirectional ring requires eight hops to reach the farthest node. Arranging the same cores as a 4x4 2D mesh guarantees that the maximum number of hops is six. Assuming traffic is distributed evenly among the cores (a 1/16 chance for each destination, including the source itself), the expected number of hops in the ring is 4.0 (1/16*[0+2*(1+2+...+7)+8] = 64/16), whereas the expected number for the 2D mesh with minimal-path routing, averaged over all source nodes, is 2.5. This benefit, though, comes at the cost of the increased power needed for the extra processing. A potential solution to this "power problem" is to group cores together in what is called a concentrated mesh, but even that requires increased crossbar complexity<ref name="GrotKeckler"/>.
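The ring-versus-mesh comparison can be checked by brute force. The following is a minimal sketch, not taken from the cited sources: it assumes a bidirectional ring, minimal-path (Manhattan) routing in the mesh, and uniformly random destinations that include the source node itself. The function names are illustrative only, and a different counting convention (for example, excluding the source as a destination) would give somewhat different averages.

```python
from itertools import product


def ring_hops(n):
    """Hop counts from node 0 to every node of a bidirectional ring.

    A ring is symmetric, so the distribution seen from node 0 is the
    same as from any other node.
    """
    return [min(d, n - d) for d in range(n)]


def mesh_hops(rows, cols):
    """Minimal-path hop counts for every ordered source/destination pair."""
    nodes = list(product(range(rows), range(cols)))
    return [abs(r1 - r2) + abs(c1 - c2)
            for (r1, c1) in nodes
            for (r2, c2) in nodes]


def summarize(hops):
    """Return (worst-case hops, average hops) for a list of hop counts."""
    return max(hops), sum(hops) / len(hops)


ring_max, ring_avg = summarize(ring_hops(16))
mesh_max, mesh_avg = summarize(mesh_hops(4, 4))

print(f"16-node ring: worst case {ring_max} hops, average {ring_avg} hops")
print(f"4x4 mesh:     worst case {mesh_max} hops, average {mesh_avg} hops")
```

Under these assumptions the sketch reports a worst case of eight hops for the ring and six for the mesh, which matches the worst-case figures in the text.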

Flattened Butterfly

The flattened butterfly offers the benefits of a tree (fewer constraints on root-level bandwidth [Solihin 367]) as well as the ability to actually be mapped to a substrate, but because of node concentration<ref name="GrotKeckler"/> the number of channels required for high scalability is cost- and validation-prohibitive.

Crossbar Switch

A crossbar switch topology uses a bus arrangement in which the bus lines are physically perpendicular to each other and each intersection is connected or disconnected with a switch. In the case of CMPs, this switch is a transistor or, depending on the desired characteristics of the system, a programmable fuse. Due to their ability to be multi-staged<ref name="wikicrossbarsemi">"Crossbar switch." Wikipedia. Last accessed April 24, 2012.</ref>, these topologies lend themselves to being used for memory in large-scale systems. The IBM Cyclops64 architecture is an example implementation of this topology<ref name="cyclops64">Zhang, Ying Ping. "A study of the on-chip interconnection network for the IBM Cyclops64 multi-core architecture." April 2006. IEEE Xplore.</ref>.
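The grid-of-switches idea can be modelled in a few lines. This is a simplified sketch under stated assumptions, not a description of any real device: the `Crossbar` class and its methods are hypothetical names, and the model assumes each input line drives at most one output line at a time (no multicast) and each output accepts at most one input.

```python
class Crossbar:
    """Toy model of a crossbar: one on/off switch per input/output crossing."""

    def __init__(self, n_inputs, n_outputs):
        self.n_inputs = n_inputs
        self.n_outputs = n_outputs
        # closed[i][o] is True when the switch joining input i to output o is on.
        self.closed = [[False] * n_outputs for _ in range(n_inputs)]

    def connect(self, inp, out):
        """Close the switch at (inp, out) if both lines are free.

        Returns True on success and False if either line is already in use.
        """
        input_busy = any(self.closed[inp])
        output_busy = any(row[out] for row in self.closed)
        if input_busy or output_busy:
            return False
        self.closed[inp][out] = True
        return True

    def disconnect(self, inp, out):
        """Open the switch at (inp, out)."""
        self.closed[inp][out] = False

    def crosspoints(self):
        """Number of switches, which grows as inputs times outputs."""
        return self.n_inputs * self.n_outputs


xbar = Crossbar(4, 4)
print(xbar.connect(0, 2))   # input 0 and output 2 are both free
print(xbar.connect(1, 2))   # output 2 is already taken by input 0
print(xbar.connect(1, 3))   # a different output is still available
print(xbar.crosspoints())
```

The `crosspoints` count illustrates why a single large crossbar becomes expensive as ports are added: the number of switches grows with the product of inputs and outputs.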

Design Considerations

Energy Consumption

Multi-processor SoCs require additional energy to operate on-chip interconnection hardware such as routers. The links between components can also introduce losses, which depend on the required voltages and the physical arrangement. Indeed, "on-chip network power has been estimated to consume up to 28% of total chip power" due to "channels, router fifos and router crossbar fabrics"<ref name="GrotKeckler" />. Simple topologies use less power per router because their routers are simpler, but the increased number of hops can lead to higher overall power consumption.

Scalability

Scalability ties back to energy and cost as well as to engineering constraints. Specifically, the architecture needs to be sensitive to the power and heat requirements of the design, as well as to the physical die size. Further, the design must be able to be fabricated predictably and at reasonable cost. To mitigate some of these factors, concentration of network interfaces can be employed, where a network interface is shared by multiple terminals. Efforts to scale more complicated topologies like the butterfly (into a "flattened" butterfly) have yielded promising results, but at the expense of excessive energy consumption<ref name="GrotKeckler" />.
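The effect of concentration on network size can be illustrated with a small calculation. This is a rough sketch under assumptions that are not from the cited sources: the routers form a square 2D mesh, the terminal count divides evenly by the concentration factor, and only router-to-router hops are counted. The function names and the 64-terminal example are hypothetical.

```python
import math


def mesh_side(terminals, concentration):
    """Side length of the square router mesh when `concentration`
    terminals share each network interface."""
    routers, remainder = divmod(terminals, concentration)
    if remainder:
        raise ValueError("terminals must divide evenly by concentration")
    side = math.isqrt(routers)
    if side * side != routers:
        raise ValueError("router count must be a perfect square")
    return side


def max_hops(side):
    """Worst-case router-to-router hops in a side x side mesh."""
    return 2 * (side - 1)


for concentration in (1, 4):
    side = mesh_side(64, concentration)
    print(f"concentration {concentration}: {side * side} routers, "
          f"worst case {max_hops(side)} hops")
```

The sketch only counts routers and hops. It does not model the cost side of the trade-off: each router in a concentrated network must serve more terminals, so its crossbar becomes more complex.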

Modern Implementations

Tilera Tile Processor

Tilera is a fabless semiconductor company that has developed a "tile processor" whereby the fabrication of the multi-processor device is greatly simplified by the placement of processor "tiles" on the die. The technology behind this innovation is iMesh, which is the name of the on-chip interconnection technology used in the Tile Processor's architecture<ref name="Tilera">"On-Chip Interconnection Architecture of the Tile Processor," Wentzlaff, et al. 2007. IEEE Xplore.</ref>. The Tile Processor is innovative due to its highly scalable implementation of an on-chip network that utilizes 2D meshes. These are physically organized (as opposed to logically organized) due to design considerations when scaling and laying out new designs.

Each tile of the Tile Processor is its own self-contained processor and can effectively function by itself; multiple processors can be combined to form an SMP system. The Tile Processor can be further enhanced by using its custom C language-based programming libraries (both POSIX threads and iLib), which fully leverage the processing capabilities of the CMP.

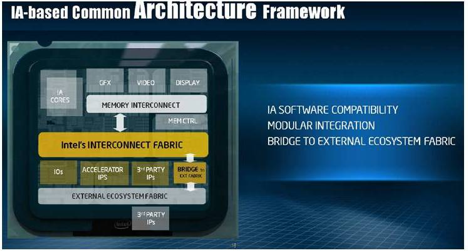

Intel MIC and IOSF

One part of Intel's efforts in the multiprocessor research realm revolves around its Many Integrated Cores project<ref name="intelmic">Blyler, John. "Proprietary On-Chip Connections Yield To NoC Designs" System-Level Design Community Blog. September 22, 2011.</ref>. These cores would communicate using a Message Passing Interface. Further, to connect these cores, there is the Intel On-Chip System Fabric (IOSF), which is a proprietary chassis for its popular Atom computing platform<ref name="inteliosf">Blyler, John. "Intel Challenges ARM with IP and Interconnect Strategy" Semiconductor IP Blog. August 26, 2011.</ref>. The architecture of the IOSF lends itself to communication with traditional PCI buses, making it a viable technology for use in backwards-compatible general-purpose computers. Additionally, through numerous licensing agreements, Intel has acquired the use of a wide variety of devices from graphics to modems and Wi-Fi Ethernet adapters. Coupled with the IOSF, entire computing platforms (SoCs) could conceivably be made at low cost and with a small footprint.

ARM CoreLink Interconnect

The ARM CoreLink Interconnect is a highly flexible and configurable interconnection network specification that implements the AMBA (Advanced Microcontroller Bus Architecture) protocol. The AMBA protocol is "an open standard, on-chip interconnect specification for the connection and management of functional blocks in a System-on-Chip (SoC). It enables development of multi-processor designs with large numbers of controllers and peripherals."<ref name="ambadoc">AMBA on the ARM Info Center.</ref>

Other

The above are examples of modern, real-world SoC interconnect implementations. Further examples<ref name="wikicompetitors">AMBA Competitors on Wikipedia.</ref> include the OpenCores Wishbone, Altera Avalon, and IBM CoreConnect.

Summary

Considering that the AMBA protocol was first published in 1996, it is clear that the movement toward SoCs is technologically mature. The several competing topologies and technologies, coupled with ongoing research and the search for the ultimate in performance and power reduction, have led to an exciting amount of innovation in recent years. Meshes and crossbars seem to be the scalability options of choice, especially as shrinking process sizes allow for more flexible scaling options.

Quiz

Question 1) What is another name for the physical substrate of an integrated circuit?

- Fabric

- Canvas

- Die

- Matrix

Question 2) Which of the companies below is not actively involved in SoC design?

- Altera

- ARM

- IBM

- Cray

Question 3) Why are mesh topologies generally preferred over ring topologies?

- Lower latency

- Cheaper to implement

- Lower power consumption

- Better reliability

Question 4) What is meant by "SoC"?

- Scalability of Connections

- System on a Chip

- Speeds over Capacities

- Shared (memory) on a Cable

Question 5) What is meant by "NoC"?

- No Capacitance Effects

- Network on a Chip

- Network Obligation of Channel

- Notification of Cache Sharing

Question 6) What is the name of a discrete processing unit used in the construction of certain topologies?

- Channel

- Router

- Sentry

- Tile

Question 7) Which choice best characterizes the number of cores in a "medium scale" system?

- Around 2-8 cores

- Around 8-16 cores

- Around 32-64 cores

- Around 64-128 cores

Question 8) What is a major prohibitive factor of using butterfly topologies in commercial SoC products for a large number of cores?

- High number of channels

- High link bandwidth

- High latency on cache misses

- Low return on investment

Question 9) Which of these is the most significant factor in limiting physical size in an SoC design?

- Electrical characteristics (capacitance, noise, clock skew, current, voltage)

- Process limitations (required equipment, equipment calibration, material purity)

- Power density (process size, material thermal characteristics, number of substrate layers)

- All of the above have similar magnitude in limitation considerations

Question 10) Which is the best reason CMPs require special programming libraries for best performance?

- Proprietary code paths for vendor lock-out

- Allow NoC to more effectively coordinate all cores

- Implementation is incompatible with operating system

- Attempt to create new de facto programming standards


Answers: In numerical question order: 3-4-1-2-2-4-2-1-4-2

Further Reading

Fundamentals of Parallel Computer Architecture by Yan Solihin.

References

<references></references>