CSC/ECE 506 Spring 2012/9a mm: Difference between revisions

| (63 intermediate revisions by 2 users not shown) | |||

| Line 25: | Line 25: | ||

==Monitors in Java== | ==Monitors in Java== | ||

Monitors in Java are conceptual spaces that can be occupied by only one thread at a time. Entering the monitor space is termed ''entering the monitor''. The monitor space has a conceptual inner region which when entering is called ''acquiring the monitor''. Occupying this inner region is called ''owning the monitor'' while leaving it is called ''releasing the monitor'' and exiting the monitor space all together is called ''exiting the monitor''. No more than one thread can own the monitor at any given time. This region of the monitor typically contains data and code that potentially support two types of synchronization available with Java, mutual exclusion and cooperation. Monitors can contain many code regions, related or not, which each when executed must do so with a [[File:Java_monitor.gif|500px|thumb|rignt|Java Monitor]]guarantee of atomicity with respect to other executing threads. Each code region has its own ''entry set''. This means that each monitor has multiple points of entry, each of which is associated with a particular region of code that for correctness must be synchronized. When a thread, in its course of execution, comes upon a region controlled by the monitor it is placed in the entry set of that region. If the monitor is not occupied, the thread acquires and then occupies the monitor and continues with its execution of that region. If the monitor is occupied then the thread is forced to wait (along with other waiting threads) for the occupying thread to release the monitor. All waiting threads then compete for the opportunity to acquire the monitor. | Monitors in Java are conceptual spaces that can be occupied by only one thread at a time. Entering the monitor space is termed ''entering the monitor''. The monitor space has a conceptual inner region which when entering is called ''acquiring the monitor''. Occupying this inner region is called ''owning the monitor'' while leaving it is called ''releasing the monitor'' and exiting the monitor space all together is called ''exiting the monitor''. No more than one thread can own the monitor at any given time. This region of the monitor typically contains data and code that potentially support two types of synchronization available with Java, mutual exclusion and cooperation. Monitors can contain many code regions, related or not, which each when executed must do so with a [[File:Java_monitor.gif|500px|thumb|rignt|Java Monitor. Taken from [[#References|[15] ]]]]guarantee of atomicity with respect to other executing threads. Each code region has its own ''entry set''. This means that each monitor has multiple points of entry, each of which is associated with a particular region of code that for correctness must be synchronized. When a thread, in its course of execution, comes upon a region controlled by the monitor it is placed in the entry set of that region. If the monitor is not occupied, the thread acquires and then occupies the monitor and continues with its execution of that region. If the monitor is occupied then the thread is forced to wait (along with other waiting threads) for the occupying thread to release the monitor. All waiting threads then compete for the opportunity to acquire the monitor. | ||

Mutual exclusion is guaranteed by the monitor because no more than one thread is allowed in any region of a particular monitor. Cooperation between threads can be orchestrated with a "Wait and Notify" (or "Signal and Continue") monitor. In this type of monitor a thread, while occupying the monitor, can execute a ''wait command''. Executing a wait causes a thread to release the monitor and enter a ''wait set''. The thread, now in the wait set, will stay in a suspended state until after it receives a ''notify command'' '''and''' it is able to occupy the monitor according to protocols already described. A thread which executes a ''notify command'' (the ''Signal'') continues to occupy the monitor in its normal course of execution until it releases the monitor or suspends as well via a wait command. | Mutual exclusion is guaranteed by the monitor because no more than one thread is allowed in any region of a particular monitor. Cooperation between threads can be orchestrated with a "Wait and Notify" (or "Signal and Continue") monitor. In this type of monitor a thread, while occupying the monitor, can execute a ''wait command''. Executing a wait causes a thread to release the monitor and enter a ''wait set''. The thread, now in the wait set, will stay in a suspended state until after it receives a ''notify command'' '''and''' it is able to occupy the monitor according to protocols already described. A thread which executes a ''notify command'' (the ''Signal'') continues to occupy the monitor in its normal course of execution until it releases the monitor or suspends as well via a wait command. | ||

| Line 42: | Line 42: | ||

=Thin Locks= | =Thin Locks= | ||

Thin Locks were first described by Bacon, et al. in 1998. Alternatively referred to as "Featherweight Synchronization", thin locks efficiently handle common locking scenarios and exist on top of native, heavyweight locking implementation such as [http://en.wikipedia.org/wiki/Pthreads pthreads]. The ambition for thin locks was a top level locking capability that had speed and compactness and was scalable, simple, and maintainable. For situations where thin locks are able to be applied this results in not only a speed up due to inefficiencies inherit in using a monitor, but potentially space as well, because it can reduce the number of synchronized objects created. | Thin Locks were first described by [http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf Bacon, et al.] in 1998. Alternatively referred to as "Featherweight Synchronization", thin locks efficiently handle common locking scenarios and exist on top of native, heavyweight locking implementation such as [http://en.wikipedia.org/wiki/Pthreads pthreads]. The ambition for thin locks was a top level locking capability that had speed and compactness and was scalable, simple, and maintainable. For situations where thin locks are able to be applied this results in not only a speed up due to inefficiencies inherit in using a monitor, but potentially space as well, because it can reduce the number of synchronized objects created. | ||

Monitors are multi-word structures and include space for a thread pointer, a nested lock count and queues. Thin locks work by incorporating a special synchronization word into each object header and they require the availability of instructions that can be used to build atomic primitives such as a compare-and-swap or store-conditional. In the [http://en.wikipedia.org/wiki/Java_virtual_machine JVM] system that was used to develop thin locks each object was made of a three word header followed by data. The original implementation reserved 24 bits of the three word header for the synchronization word but was able to maintain the three word size of the header through compression techniques. The structure of the 24-bit lock field is key to facilitating the facile locking ability of the thin lock in common locking situations. The lock field represents either a thin lock or a reference to a ''fat lock'', or native heavyweight lock. The first bit, referred to as the ''monitor shape bit'' is 0 if the lock field refers to a thin lock and 1 if it is a reference to a fat lock. If the monitor shape bit is 1 the remaining 23 bits are an index of a fat lock. If the monitor shape bit is 0 the next 15 bits constitute a thread identifier and the last 8 bits serve as a measure of nesting depth- the number of times an already locked object is locked. When the thread identifier is 0 the object is unlocked, but if it is non-zero it is an index into a table which maps thread indices to thread pointers. | Monitors are multi-word structures and include space for a thread pointer, a nested lock count and queues. Thin locks work by incorporating a special synchronization word into each object header and they require the availability of instructions that can be used to build atomic primitives such as a compare-and-swap or store-conditional. In the [http://en.wikipedia.org/wiki/Java_virtual_machine JVM] system that was used to develop thin locks each object was made of a three word header followed by data. The original implementation reserved 24 bits of the three word header for the synchronization word but was able to maintain the three word size of the header through compression techniques. The structure of the 24-bit lock field is key to facilitating the facile locking ability of the thin lock in common locking situations. The lock field represents either a thin lock or a reference to a ''fat lock'', or native heavyweight lock. The first bit, referred to as the ''monitor shape bit'' is 0 if the lock field refers to a thin lock and 1 if it is a reference to a fat lock. If the monitor shape bit is 1 the remaining 23 bits are an index of a fat lock. If the monitor shape bit is 0 the next 15 bits constitute a thread identifier and the last 8 bits serve as a measure of nesting depth- the number of times an already locked object is locked. When the thread identifier is 0 the object is unlocked, but if it is non-zero it is an index into a table which maps thread indices to thread pointers. | ||

Situations that are applicable to thin lock usage include objects that do not have the cooperation commands of ''wait, notify, or notifyAll'' performed on them, are not subject to contention and are not locked to a nesting depth of greater than 257. These restrictions sound significant but in most applications the majority of objects meet these criteria. When an object transitions from thin state to fat it remains there for its duration. Key to the performance of a thin lock is the design element that while an object is owned by a particular thread it is never modified by another thread. This means that [[File:Locking_word.jpg|300px|thumb|left|Locking a thin lock]]once an object is locked all subsequent operations can be performed with loads and stores as opposed to using atomic primitives. | Situations that are applicable to thin lock usage include objects that do not have the cooperation commands of ''wait, notify, or notifyAll'' performed on them, are not subject to contention and are not locked to a nesting depth of greater than 257. These restrictions sound significant but in most applications the majority of objects meet these criteria. When an object transitions from thin state to fat it remains there for its duration. Key to the performance of a thin lock is the design element that while an object is owned by a particular thread it is never modified by another thread. This means that [[File:Locking_word.jpg|300px|thumb|left|Locking a thin lock. Taken from [[#References|[1] ]]]]once an object is locked all subsequent operations can be performed with loads and stores as opposed to using atomic primitives. | ||

Assume initially that an object is unlocked and the entire lock field is 0 (figure left | Assume initially that an object is unlocked and the entire lock field is 0 (figure left ''(a)''). If a thread A wants to lock the object, it first performs a compare-and-swap operation on the word containing the lock field. The old value given to the compare-and-swap is shown in the figure as the upper state, built by masking the high 24 bits. The new value corresponds to ''(b)'' in the figure and contains 0 for the monitor bit shape, a thread index which corresponds to thread A and a count of zero. The new value is generated by a bitwise '''or''' operation of the old value and the index and then shifting 16 bits left. The thread index can be accessed in a single load instruction from a separate data structure. If thread A owns the lock and attempts to lock again (nested locking) it first begins a CAS operation, which fails because the object is already locked. The routine then will check the monitor bit shape, thread index and count. If the bit shape is 0, the thread index matches that of thread A and if the count is under 255 then count is incremented with a simple store. This situation corresponds to ''(c)'' of the left figure. | ||

If thread A has the object locked and thread B tries to lock the object, it will try a CAS which fails. It will check to see if it owns the lock and that will fail because the object is locked by another thread. Thread B then initiates a transition on the object lock from thin to fat. Because only the owner thread can act on a thin object lock, thread B spin locks on the object until thread A releases and then thread B obtains the lock. Thread B creates a fat lock by assigning a monitor index to the newly created monitor object and changes the lock field monitor shape bit (to 1) and rest of the field to monitor index. When the object is unlocked by thread B it remains in the inflated lock state for the rest of its lifetime. | If thread A has the object locked and thread B tries to lock the object, it will try a CAS which fails. It will check to see if it owns the lock and that will fail because the object is locked by another thread. Thread B then initiates a transition on the object lock from thin to fat. Because only the owner thread can act on a thin object lock, thread B spin locks on the object until thread A releases and then thread B obtains the lock. Thread B creates a fat lock by assigning a monitor index to the newly created monitor object and changes the lock field monitor shape bit (to 1) and rest of the field to monitor index. When the object is unlocked by thread B it remains in the inflated lock state for the rest of its lifetime. | ||

An example implementation of the lock follows: | |||

''atomic_t unlocked = obj->monword & ~LOCK_MASK'' | |||

''atomic_t locked = unlocked | thread;'' | |||

''if (CMP&SWP(obj->monword, unlocked, locked))'' | |||

''return;'' | |||

''unsigned xored = obj->monword ^ thread;'' | |||

''if (xored < COUNT_MASK) {'' | |||

''obj->monword += COUNT_INCREMENT;'' | |||

''return;'' | |||

''}'' | |||

First, the values for the CAS operation are generated via logical operations on the objects lock field word ("''obj->monword''"). A CAS is executed and if that operation succeeds the atomic function returns true and the locking method completes. If the calling process finds that it owns the lock (''if (xored < COUNT_MASK)'') then it increments the lock count. If a different object owns the lock then locking fails and steps to transition the lock from thin to fat are initiated by the calling process. | |||

=Biased Locks= | =Biased Locks= | ||

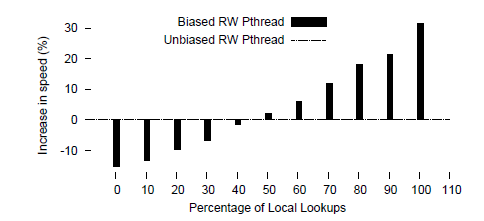

One of the techniques, previously introduced, that are used to test and capture the locks is compare&swap, CAS. These atomic instructions are indeed very intensive, and consume many bus cycles (in a [http://en.wikipedia.org/wiki/Intel_Core Core Duo], a CAS operation can consume up to seven times longer than a increment [10] | One of the techniques, previously introduced, that are used to test and capture the locks is compare&swap, CAS. These atomic instructions are indeed very intensive, and consume many bus cycles (in a [http://en.wikipedia.org/wiki/Intel_Core Core Duo], a CAS operation can consume up to seven times longer than a increment [[#References|[10]]]). They solely could be blamed on using a significant percentage of the computing time and resources of a processor. (It is interesting to state here that this effect depends on the processor architecture, as well as the VM implementation and, hence, [http://publib.boulder.ibm.com/infocenter/javasdk/v1r4m2/index.jsp?topic=%2Fcom.ibm.java.doc.diagnostics.142%2Fhtml%2Funderthejvm.html IBM Java VM] is less disposed to this effect than [http://www.oracle.com/technetwork/java/javase/tech/vmoptions-jsp-140102.html Oracle's]. Also, recent processors, like the Intel Core family, have been optimized to perform this task) Due to its architecture and way of use, Java is prone to use a considerable amount of locking, and that amount has a tremendous effect on the computing time of every program. Due to the idiosyncrasy of the spins, it is not uncommon [[#References|[1]]] that, when we compare the times used in the execution of a program serially or using threads, the latter shows higher inefficiency and latencies grow exponentially with relation to the number of threads used. [[File:Comparison_biased.PNG|400px|thumb|right|Comparison biased-not biased for a Readers-Writers process (taken from [[#References|[1]]] )]] | ||

One of the solutions proposed to mitigate this problem is based on taking advantage of some observations in the distribution of locks by the programs: in most cases, due to the way the programs are structured, there is one thread that consumes the locks more often than the others, that is the lock is more commonly used by a single owner and, in occasions, that thread could be entering the locks a number of times several order the magnitude higher. So why not to make it easier for it? | One of the solutions proposed to mitigate this problem is based on taking advantage of some observations in the distribution of locks by the programs: in most cases, due to the way the programs are structured, there is one thread that consumes the locks more often than the others, that is the lock is more commonly used by a single owner and, in occasions, that thread could be entering the locks a number of times several order the magnitude higher. So why not to make it easier for it? | ||

| Line 65: | Line 77: | ||

# Release, where the thread release the lock for others to acquire. | # Release, where the thread release the lock for others to acquire. | ||

As we can imagine that we stay more or less in the mutual exclusion area will depend on how many threads or processes we have in that area, the frequency with we use it; if we collide or not with other processes and what kind of lock we are using. We need to find ways to make these characteristics to work in our advantage. | As we can imagine that we stay more or less in the mutual exclusion area will depend on how many threads or processes we have in that area, the frequency with we use it; if we collide or not with other processes and what kind of lock we are using. We need to find ways to make these characteristics to work in our advantage. | ||

Biased locking exploits just one of those characteristics, the frequency. As we have mentioned before, the case where a thread uses a lock more frequently than any others is very common. In that case, we could grant ownership of a lock to that thread and just purposely neglect doing the unlock operation (to be able to do this, the object need to allow it, i.e, in Java, the object header has to have the biased bit set). In this way, the next time that the thread wants to enter the lock, it will have it already, hence gaining the time to compete for it (even if there is no other competition, there will still be the CAS), lock it and release it. Effectively it is like they never leave the critical section. Now, could this generate contention problems? Not with the same process, that could not be in that region (it has just abandoned it); nor with others, as the former process still hold the lock. These will make the lock task nimbler to the more frequent thread and longer for the less frequent. But that is exactly what we are looking for. In fact, for cases where the contention between processes is very low, there are proposals to eliminate the atomicity all together [11]. | Biased locking exploits just one of those characteristics, the frequency. As we have mentioned before, the case where a thread uses a lock more frequently than any others is very common. In that case, we could grant ownership of a lock to that thread and just purposely neglect doing the unlock operation (to be able to do this, the object need to allow it, i.e, in Java, the object header has to have the biased bit set). In this way, the next time that the thread wants to enter the lock, it will have it already, hence gaining the time to compete for it (even if there is no other competition, there will still be the CAS), lock it and release it. Effectively it is like they never leave the critical section. Now, could this generate contention problems? Not with the same process, that could not be in that region (it has just abandoned it); nor with others, as the former process still hold the lock. These will make the lock task nimbler to the more frequent thread and longer for the less frequent. But that is exactly what we are looking for. In fact, for cases where the contention between processes is very low, there are proposals to eliminate the atomicity all together [[#References|[11]]]. | ||

There are then two issues we need to resolve: how to decide which thread is "the most frequent", that we will call '''the owner'''; and how to let any other thread not-the-owner use the lock. For the first question, we could use some gathered data or some heuristics. For instance: | There are then two issues we need to resolve: how to decide which thread is "the most frequent", that we will call '''the owner'''; and how to let any other thread not-the-owner use the lock. For the first question, we could use some gathered data or some heuristics. For instance: | ||

| Line 75: | Line 87: | ||

* Define a flag for biasing in an object type. | * Define a flag for biasing in an object type. | ||

* When entering a lock, check the flag: if asserted, then bias; if not, use a thin lock until a mechanism, for instance age, flags the biased flag. | * When entering a lock, check the flag: if asserted, then bias; if not, use a thin lock until a mechanism, for instance age, flags the biased flag. | ||

Regarding the question of how the main thread decides when to leave the lock. The important factor here is to do it efficiently and many proposals have been made. In Kawachiya's work [3], one of the pioneers in these techniques, the author proposes that when a new thread needs the lock, it would just stop the owner thread and enter it. This task is very expensive because implies the revocation of the owner thread. Onodera et al [12] and Vasudevan et al [10] propose hybrid methods that mix a 2 process algorithm with n-process mutual algorithms (for instance, CAS). Vasudevan's algorithm, for instance, is capable of passing the lock to the younger thread without having to suspend the owner and only uses an extra two assignments and two comparisons. Russell and Detlefs [9] also offers lock transfer, but their transfer algorithm is more expensive and it has been questioned because the difficulty to ascertain if the biased lock is actually in possession of a particular thread. | Regarding the question of how the main thread decides when to leave the lock. The important factor here is to do it efficiently and many proposals have been made. In Kawachiya's work [[#References|[3]]], one of the pioneers in these techniques, the author proposes that when a new thread needs the lock, it would just stop the owner thread and enter it. This task is very expensive because implies the revocation of the owner thread. Onodera et al [[#References|[12]]] and Vasudevan et al [[#References|[10]]] propose hybrid methods that mix a 2 process algorithm with n-process mutual algorithms (for instance, CAS). Vasudevan's algorithm, for instance, is capable of passing the lock to the younger thread without having to suspend the owner and only uses an extra two assignments and two comparisons. Russell and Detlefs [[#References|[9]]] also offers lock transfer, but their transfer algorithm is more expensive and it has been questioned because the difficulty to ascertain if the biased lock is actually in possession of a particular thread. | ||

Following, a biased lock snippet proposed by Vasudevan et al [10]. | Following, a biased lock snippet proposed by Vasudevan et al [[#References|[10]]]. It first defines a structure with the id of the owner of thread and the two locks, the light weight and the heavier weight. In the biased locks we see that while the thread id is that of the owner, only the light weight lock is executed. When the thread is other than the owner, both locks are executed.The heavy weight, or not dominant, lock could be a | ||

variant of the [http://en.wikipedia.org/wiki/Peterson%27s_algorithm Peterson's Algorithm] before and after the critical section. | |||

'' typedef struct { | '' typedef struct { | ||

| Line 101: | Line 114: | ||

'' }'' | '' }'' | ||

== | Often, the problem of using the Peterson's Algorithm is that it uses barriers and that can make the dominant and other processes to step back and spin. To solve this issue '''asymmetric locks''' [[#References|[10]]] are used, where the non-dominant threads actually ask for permission to the dominant one to step in, making used of a flag and a grant variable. The flag indicates that there is a request from a process from a lesser thread. When the dominant is about to end with its critical section, checks if the flag is set. If so, sets the grant variable and retires with a barrier until the lesser thread ends, moment at which the latter would reset the grant variable, so the dominant can come back in. | ||

==An example of biased locks: Rogers' lock== | |||

[http://www.cs.man.ac.uk/~irogers/index.php Dr. Ian Rogers] (Manchester University, Azul Systems, Google) has an extensive curriculum in computer science, going from network security to binary translators and was a program committee member for [http://pppj2011.imm.dtu.dk PPPJ2011]. He is also part of the Android mobile group at Google. | [http://www.cs.man.ac.uk/~irogers/index.php Dr. Ian Rogers] (Manchester University, Azul Systems, Google) has an extensive curriculum in computer science, going from network security to binary translators and was a program committee member for [http://pppj2011.imm.dtu.dk PPPJ2011]. He is also part of the Android mobile group at Google. | ||

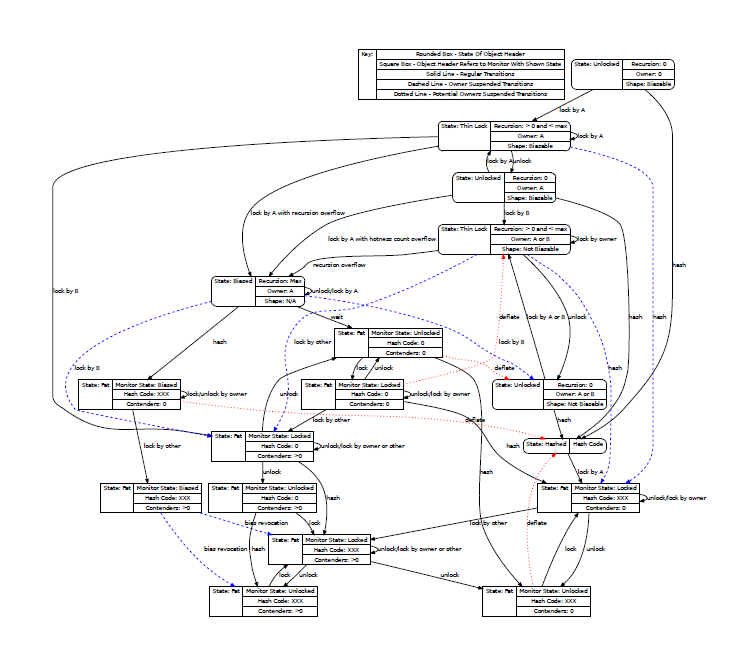

In 2011 he presented some work on thin and biased locks | In 2011 he presented some work on thin and biased locks [[#References|[4]]] [[#References|[5]]] where he proposed a biased lock with the following characteristics: [[File:RogersLock.PNG|400px|thumb|right|Rogers Lock State Machine (taken from [[#References|[5]]] )]] | ||

* Use and declaration of biased bit in the object header, using existing states in that header. | * Use and declaration of biased bit in the object header, using existing states in that header. | ||

| Line 120: | Line 134: | ||

The object header for this model would then be like: | The object header for this model would then be like: | ||

[[File:ObjectHeader.PNG|300px|thumb|left|Rogers Model Object Header (taken from [5])]] | [[File:ObjectHeader.PNG|300px|thumb|left|Rogers Model Object Header (taken from [[#References|[5]]] )]] | ||

Where every field is defined as, according to Rogers: | Where every field is defined as, according to Rogers: | ||

| Line 129: | Line 143: | ||

* Age: Times that the object has been promotable | * Age: Times that the object has been promotable | ||

* Class Information: type identifier | * Class Information: type identifier | ||

* Hashed: indication of whether the locking information is from a hash code instead. | * Hashed: indication of whether the locking information is from a [http://en.wikipedia.org/wiki/Hash_function hash code] instead. | ||

* Recursion count: Number of lock entries. 0 means unlocked. Thread id holds the previous owner. Maximum number means the lock is biased to the thread id. | * Recursion count: Number of lock entries. 0 means unlocked. Thread id holds the previous owner. Maximum number means the lock is biased to the thread id. | ||

* Monitor: Id pointing to the monitor associated to the object header when it is inflated. | * Monitor: Id pointing to the monitor associated to the object header when it is inflated. | ||

| Line 140: | Line 154: | ||

* ''Dr. Rogers'website at Manchester Uni.'': http://www.cs.man.ac.uk/~irogers/index.php | * ''Dr. Rogers'website at Manchester Uni.'': http://www.cs.man.ac.uk/~irogers/index.php | ||

* ''Java HotSpot'': http://www.oracle.com/technetwork/java/javase/tech/index-jsp-136373.html | * ''Java HotSpot'': http://www.oracle.com/technetwork/java/javase/tech/index-jsp-136373.html | ||

* ''Java theory and practice: Synchronization optimizations in Mustang'': http://www.ibm.com/developerworks/java/library/j-jtp10185/index.html | |||

=List of Acronyms= | |||

{| class="wikitable sortable" | {| class="wikitable sortable" | ||

! Acronym !! Expansion | ! Acronym !! Expansion | ||

|- | |- | ||

| | | [http://en.wikipedia.org/wiki/Compare-and-swap CAS] || Compare and Swap | ||

|- | |||

| [http://en.wikipedia.org/wiki/Test-and-set TAS] || Test and Set | |||

|- | |||

| [http://en.wikipedia.org/wiki/Load-link/store-conditional LL/SC] || Load Link / Store Conditional | |||

|- | |||

| [http://en.wikipedia.org/wiki/Java_virtual_machine JVM] || Java Virtual Machine | |||

|- | |||

| [http://en.wikipedia.org/wiki/Virtual_machine VM] || Virtual Machine | |||

|- | |- | ||

| | | [http://en.wikipedia.org/wiki/Central_processing_unit CPU] || Central Processing Unit | ||

|- | |- | ||

| | | [http://pppj2011.imm.dtu.dk PPPJ2011] || Principles and Practice of Programming in Java, 2011 | ||

|- | |- | ||

| | | QRL || Quickly Recoverable Locks | ||

|- | |- | ||

|} | |} | ||

| Line 160: | Line 183: | ||

# ''David's Dice Weblog: Biased Locking in HotSpot''. Dice, 2010: https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot | # ''David's Dice Weblog: Biased Locking in HotSpot''. Dice, 2010: https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot | ||

# ''Java Locks: Analysis and Acceleration (Thesis)'', 2004. Kiyokuni Kawachiya: http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf | # ''Java Locks: Analysis and Acceleration (Thesis)'', 2004. Kiyokuni Kawachiya: http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf | ||

# ''Reducing Biased Lock Revocation By Learning - presentation'',2011. Rogers, Iyengar: http://www.cs.man.ac.uk/%7Eirogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf | # ''Reducing Biased Lock Revocation By Learning - presentation'', 2011. Rogers, Iyengar: http://www.cs.man.ac.uk/%7Eirogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf | ||

# ''Reducing Biased Lock Revocation By Learning'', 2011. Rogers, Ivengar: http://delivery.acm.org.prox.lib.ncsu.edu/10.1145/2070000/2069179/a7-rogers.pdf?ip=152.1.24.251&acc=ACTIVE%20SERVICE&CFID=95559947&CFTOKEN=76517280&__acm__=1333547803_ec09d783f7ad5ce9a828bfad3f619f2a | # ''Reducing Biased Lock Revocation By Learning'', 2011. Rogers, Ivengar: http://delivery.acm.org.prox.lib.ncsu.edu/10.1145/2070000/2069179/a7-rogers.pdf?ip=152.1.24.251&acc=ACTIVE%20SERVICE&CFID=95559947&CFTOKEN=76517280&__acm__=1333547803_ec09d783f7ad5ce9a828bfad3f619f2a | ||

# ''Quickly Reacquirable Locks''. Dice, Moir and Scherer, 2002: http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf | # ''Quickly Reacquirable Locks''. Dice, Moir and Scherer, 2002: http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf | ||

| Line 170: | Line 193: | ||

# ''Lock Reservation for Java Reconsidered'', Onodera, Kawachiya, Koseki, 2004: http://www.springerlink.com.prox.lib.ncsu.edu/content/7ufdkv0gc0fddr39/fulltext.pdf | # ''Lock Reservation for Java Reconsidered'', Onodera, Kawachiya, Koseki, 2004: http://www.springerlink.com.prox.lib.ncsu.edu/content/7ufdkv0gc0fddr39/fulltext.pdf | ||

# [http://www.artima.com/insidejvm/blurb.html ''Inside the Java Virtual Machine 2''], Bill Venners, McGraw-Hill Companies; 2nd edition 2000 | # [http://www.artima.com/insidejvm/blurb.html ''Inside the Java Virtual Machine 2''], Bill Venners, McGraw-Hill Companies; 2nd edition 2000 | ||

# [http://www.research.ibm.com/people/d/dfb/talks/Bacon98ThinTalk.pdf ''Thin Locks: Featherweight Synchronization for Java'']. Paper presented at the ACM Conference on Programming Language Design and Implementation, Montreal, Canada, June 1998. | |||

# ''Thread Synchronization'', Artima, Inc, 2009: http://www.artima.com/insidejvm/ed2/threadsynch.html | |||

=Quiz= | =Quiz= | ||

1. Which one of this actions is not part of the locking process: | |||

a. Acquire | |||

b. Spinning | |||

c. Release | |||

d. Critical Section | |||

2. What is the main characteristic of a monitor in Java. | |||

a. It's an object that includes synchronization properties. | |||

b. The maximum resolution that is capable in number of pixels. | |||

c. The frequency of the locks. | |||

d. The size of the code it uses. | |||

3. Which of the following is not an atomic operation | |||

a. Compare and Swap | |||

b. Fetch and Increment | |||

c. LL/SC | |||

d. Read and Fetch | |||

4. What would be beneficial for a process that passes through the critical section very frequently | |||

a. To short the time it takes to pass that critical section | |||

b. To make longer the time it takes to pass that critical section | |||

c. There is nothing that can be done. | |||

d. To make the time to pass that critical section equal for all the processes. | |||

5. What happens when the owner has a biased lock, it is not in the critical section and another process tries to acquire the lock. | |||

a. The owner gracefully concedes. | |||

b. The new process can enter the lock, as it is already open. | |||

c. The new task has to wait until the lock is released. | |||

d. An arbiter decides who has the right to the lock. | |||

6. What in this list is not a cause of trouble with locks? | |||

a. Race Conditions. | |||

b. Contention. | |||

c. Lack of Storage. | |||

d. Deadlocks. | |||

7. In the monitor space, when we are in the inner region of the monitor, it is referred as: | |||

a. Entering the monitor. | |||

b. Owning the monitor. | |||

c. Turning the monitor on. | |||

d. Exiting the monitor. | |||

8. What of the following do thin locks need to work? | |||

a. A special synchronization word into each object header. | |||

b. An infinite loop governed by a while clause. | |||

c. A longer pointer to memory. | |||

d. A bigger L2 cache. | |||

9. In the Rogers' Lock, the header has how many bits? | |||

a. 27 | |||

b. 32 | |||

c. 16 | |||

d. 64 | |||

10. Why is it convenient to wait some time before declaring an object "biaseble"? | |||

a. Because the locking mechanism is rather slow. | |||

b. Because routines often have a different behavior during initialization and in the core of the program. | |||

c. Because it is recommended by the Java Manual of Style. | |||

d. Because it has been so determined by the IEEE. | |||

''Answers: 1- | ''Answers: 1-b,2-a,3-d,4-a,5-c,6-c,7-b,8-a,9-a,10-b'' | ||

Latest revision as of 19:35, 11 April 2012

Reducing Locking Overhead

Thread synchronization in a multiprocessor environment seeks to ensure memory consistency during concurrent execution. Several mechanisms exist to address synchronization and central to the solution is typically the provision of atomicity. When a thread performs a set of operations that are seen by other threads and the rest of the system as instantaneous, atomicity has been provided. The operations themselves of course are not physically instantaneous and rely upon locking other processes out of protected memory locations while critical accesses by a single thread occur. The overhead associated with providing thread synchronization can be significant, and the aim of this article is to discuss atomicity via locks and optimizations that improve locking performance.

Locks

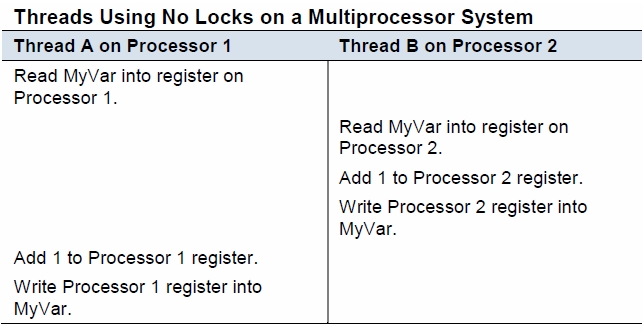

Locks are a mechanism for providing concurrency control among concurrently executing processes. Locks are important when multiple processes share data, unless in a read only manner, and also when a set of operations executed by a process must be accomplished atomically. A multiprocessor environment that fails to provide concurrency control can suffer correctness problems as demonstrated in the figure below.

Consider two processes that access and write a shared variable MyVar with three processor instructions: Read MyVar into a register, increment MyVar, and write the new value back to the memory location. The diagram shows two processes attempting to concurrently increment MyVar and the incorrectness can follow when the three operations are not executed atomically. Only the Thread A update is preserved in the execution sequence shown as it overwrites the results of Thread B.

Locks are ususally constructed with hardware support although methods such as Peterson's or Dekker's algorithms have been developed for environments where hardware primitives are not available.

Atomic Primitives

These instructions are used directly by compilers and operating systems but are also used in library functions in higher-level languages to implement locking. These include operations such as atomic read-write, atomic swap, test-and-set, fetch-and-add, compare-and-swap (CAS), and Load-Link/Store-Conditional (LL/SC). For example, a C function that implements compare-and-swap might look like this:

int compare_and_swap (int* reg, int oldval, int newval)

{

int old_reg_val = *reg;

if (old_reg_val == oldval)

*reg = newval;

return old_reg_val;

}

This function shows the logic for the swap but does not yet address correctness because it does not guarantee atomicity. Compare-and-swap takes as parameters a memory value that is read and remembered (oldval) and a calculated value based on the old value (newval) as well as the address of the memory location. In the function, the memory location is tested and if it still contains the value oldval then the new value is swapped in. Although this function provides the logic it still does not provide a guarantee of atomicity.

Monitors

Some languages have mechanisms for synchronizing entire methods or collections of methods. Monitors are objects or modules that are designed to be use safely in a multiprogramming environment due to their guarantee of mutual exclusion. At any given time only a single thread may be executing any of a monitor object's methods. Many languages have supported monitors including Java, C#, Python and Ruby.

Monitors in Java

Monitors in Java are conceptual spaces that can be occupied by only one thread at a time. Entering the monitor space is termed entering the monitor. The monitor space has a conceptual inner region which when entering is called acquiring the monitor. Occupying this inner region is called owning the monitor while leaving it is called releasing the monitor and exiting the monitor space all together is called exiting the monitor. No more than one thread can own the monitor at any given time. This region of the monitor typically contains data and code that potentially support two types of synchronization available with Java, mutual exclusion and cooperation. Monitors can contain many code regions, related or not, which each when executed must do so with a

guarantee of atomicity with respect to other executing threads. Each code region has its own entry set. This means that each monitor has multiple points of entry, each of which is associated with a particular region of code that for correctness must be synchronized. When a thread, in its course of execution, comes upon a region controlled by the monitor it is placed in the entry set of that region. If the monitor is not occupied, the thread acquires and then occupies the monitor and continues with its execution of that region. If the monitor is occupied then the thread is forced to wait (along with other waiting threads) for the occupying thread to release the monitor. All waiting threads then compete for the opportunity to acquire the monitor.

Mutual exclusion is guaranteed by the monitor because no more than one thread is allowed in any region of a particular monitor. Cooperation between threads can be orchestrated with a "Wait and Notify" (or "Signal and Continue") monitor. In this type of monitor a thread, while occupying the monitor, can execute a wait command. Executing a wait causes a thread to release the monitor and enter a wait set. The thread, now in the wait set, will stay in a suspended state until after it receives a notify command and it is able to occupy the monitor according to protocols already described. A thread which executes a notify command (the Signal) continues to occupy the monitor in its normal course of execution until it releases the monitor or suspends as well via a wait command.

The figure on the right shows the monitor as the space inclusive of the inner region, which includes protected data and code, as well as the entry and wait sets. The diagram also numbers the different entry and exit opportunities and the names associated with each status change.

Problems with Locks

Although locks provide a mechanism to ensure correctness during execution they have downsides. Some well documented negatives of locks include:

- overhead due to the blocking nature of locks and lock acquisition.

- their potential to cause race conditions or deadlocks.

- overhead due to contention.

- the possibility of priority inversion and convoying.

- their demand on resources and the complexity they add for the programmer.

Much effort has been spent on addressing problems associated with locks. Two adaptations of locks will be described here, the first being thin locks and the second, a further optimization of thin locks, are biased locks.

Thin Locks

Thin Locks were first described by Bacon, et al. in 1998. Alternatively referred to as "Featherweight Synchronization", thin locks efficiently handle common locking scenarios and exist on top of native, heavyweight locking implementation such as pthreads. The ambition for thin locks was a top level locking capability that had speed and compactness and was scalable, simple, and maintainable. For situations where thin locks are able to be applied this results in not only a speed up due to inefficiencies inherit in using a monitor, but potentially space as well, because it can reduce the number of synchronized objects created.

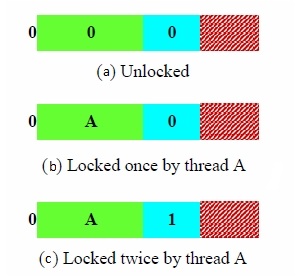

Monitors are multi-word structures and include space for a thread pointer, a nested lock count and queues. Thin locks work by incorporating a special synchronization word into each object header and they require the availability of instructions that can be used to build atomic primitives such as a compare-and-swap or store-conditional. In the JVM system that was used to develop thin locks each object was made of a three word header followed by data. The original implementation reserved 24 bits of the three word header for the synchronization word but was able to maintain the three word size of the header through compression techniques. The structure of the 24-bit lock field is key to facilitating the facile locking ability of the thin lock in common locking situations. The lock field represents either a thin lock or a reference to a fat lock, or native heavyweight lock. The first bit, referred to as the monitor shape bit is 0 if the lock field refers to a thin lock and 1 if it is a reference to a fat lock. If the monitor shape bit is 1 the remaining 23 bits are an index of a fat lock. If the monitor shape bit is 0 the next 15 bits constitute a thread identifier and the last 8 bits serve as a measure of nesting depth- the number of times an already locked object is locked. When the thread identifier is 0 the object is unlocked, but if it is non-zero it is an index into a table which maps thread indices to thread pointers.

Situations that are applicable to thin lock usage include objects that do not have the cooperation commands of wait, notify, or notifyAll performed on them, are not subject to contention and are not locked to a nesting depth of greater than 257. These restrictions sound significant but in most applications the majority of objects meet these criteria. When an object transitions from thin state to fat it remains there for its duration. Key to the performance of a thin lock is the design element that while an object is owned by a particular thread it is never modified by another thread. This means that

once an object is locked all subsequent operations can be performed with loads and stores as opposed to using atomic primitives.

Assume initially that an object is unlocked and the entire lock field is 0 (figure left (a)). If a thread A wants to lock the object, it first performs a compare-and-swap operation on the word containing the lock field. The old value given to the compare-and-swap is shown in the figure as the upper state, built by masking the high 24 bits. The new value corresponds to (b) in the figure and contains 0 for the monitor bit shape, a thread index which corresponds to thread A and a count of zero. The new value is generated by a bitwise or operation of the old value and the index and then shifting 16 bits left. The thread index can be accessed in a single load instruction from a separate data structure. If thread A owns the lock and attempts to lock again (nested locking) it first begins a CAS operation, which fails because the object is already locked. The routine then will check the monitor bit shape, thread index and count. If the bit shape is 0, the thread index matches that of thread A and if the count is under 255 then count is incremented with a simple store. This situation corresponds to (c) of the left figure.

If thread A has the object locked and thread B tries to lock the object, it will try a CAS which fails. It will check to see if it owns the lock and that will fail because the object is locked by another thread. Thread B then initiates a transition on the object lock from thin to fat. Because only the owner thread can act on a thin object lock, thread B spin locks on the object until thread A releases and then thread B obtains the lock. Thread B creates a fat lock by assigning a monitor index to the newly created monitor object and changes the lock field monitor shape bit (to 1) and rest of the field to monitor index. When the object is unlocked by thread B it remains in the inflated lock state for the rest of its lifetime.

An example implementation of the lock follows:

atomic_t unlocked = obj->monword & ~LOCK_MASK

atomic_t locked = unlocked | thread;

if (CMP&SWP(obj->monword, unlocked, locked))

return;

unsigned xored = obj->monword ^ thread;

if (xored < COUNT_MASK) {

obj->monword += COUNT_INCREMENT;

return;

}

First, the values for the CAS operation are generated via logical operations on the objects lock field word ("obj->monword"). A CAS is executed and if that operation succeeds the atomic function returns true and the locking method completes. If the calling process finds that it owns the lock (if (xored < COUNT_MASK)) then it increments the lock count. If a different object owns the lock then locking fails and steps to transition the lock from thin to fat are initiated by the calling process.

Biased Locks

One of the techniques, previously introduced, that are used to test and capture the locks is compare&swap, CAS. These atomic instructions are indeed very intensive, and consume many bus cycles (in a Core Duo, a CAS operation can consume up to seven times longer than a increment [10]). They solely could be blamed on using a significant percentage of the computing time and resources of a processor. (It is interesting to state here that this effect depends on the processor architecture, as well as the VM implementation and, hence, IBM Java VM is less disposed to this effect than Oracle's. Also, recent processors, like the Intel Core family, have been optimized to perform this task) Due to its architecture and way of use, Java is prone to use a considerable amount of locking, and that amount has a tremendous effect on the computing time of every program. Due to the idiosyncrasy of the spins, it is not uncommon [1] that, when we compare the times used in the execution of a program serially or using threads, the latter shows higher inefficiency and latencies grow exponentially with relation to the number of threads used.

One of the solutions proposed to mitigate this problem is based on taking advantage of some observations in the distribution of locks by the programs: in most cases, due to the way the programs are structured, there is one thread that consumes the locks more often than the others, that is the lock is more commonly used by a single owner and, in occasions, that thread could be entering the locks a number of times several order the magnitude higher. So why not to make it easier for it?

The idea behind the biased locks, also known as Quickly Recoverable Locks (QRL), Lock Reservations or Asymmetric Locks, is not to released the lock for that thread once its task is finished. For this matter, an extra state is added to the thin lock and, when in that state, the object would not abandon the lock. In that way if, as foreseen, the same task comes back to get the same lock, it finds it ready for it, and can save the expensive CAS operation.

There are four steps in the process of synchronization, that are normally cyclical:

- Remainder, where a thread realizes that is entering a critical section and gets ready for it.

- Acquire, where a thread fights for and obtain a lock using some atomic instruction, like CAS; and/or barriers.

- Critical Section, where a thread executes some functions with mutual exclusion.

- Release, where the thread release the lock for others to acquire.

As we can imagine that we stay more or less in the mutual exclusion area will depend on how many threads or processes we have in that area, the frequency with we use it; if we collide or not with other processes and what kind of lock we are using. We need to find ways to make these characteristics to work in our advantage. Biased locking exploits just one of those characteristics, the frequency. As we have mentioned before, the case where a thread uses a lock more frequently than any others is very common. In that case, we could grant ownership of a lock to that thread and just purposely neglect doing the unlock operation (to be able to do this, the object need to allow it, i.e, in Java, the object header has to have the biased bit set). In this way, the next time that the thread wants to enter the lock, it will have it already, hence gaining the time to compete for it (even if there is no other competition, there will still be the CAS), lock it and release it. Effectively it is like they never leave the critical section. Now, could this generate contention problems? Not with the same process, that could not be in that region (it has just abandoned it); nor with others, as the former process still hold the lock. These will make the lock task nimbler to the more frequent thread and longer for the less frequent. But that is exactly what we are looking for. In fact, for cases where the contention between processes is very low, there are proposals to eliminate the atomicity all together [11].

There are then two issues we need to resolve: how to decide which thread is "the most frequent", that we will call the owner; and how to let any other thread not-the-owner use the lock. For the first question, we could use some gathered data or some heuristics. For instance:

- A thread could keep track of how many times it holds a particular lock and decide that needs to own it and flip the biased bit.

- The compiler/interpreter could monitor the frequency and decide which tasks have a better chance to take advantage of the biasing.

- Declare a particular type of object as "biaseble"

- Declare a particular object "biaseble" according to its age in the system.

The process behavior is likely to change during initialization, so it would be wise to wait some time, until the system is stabilized, to decide on the ownership. A possible algorithm proposed by Russell and Detlefs, bulk rebiasing [9], is described next:

- Define a flag for biasing in an object type.

- When entering a lock, check the flag: if asserted, then bias; if not, use a thin lock until a mechanism, for instance age, flags the biased flag.

Regarding the question of how the main thread decides when to leave the lock. The important factor here is to do it efficiently and many proposals have been made. In Kawachiya's work [3], one of the pioneers in these techniques, the author proposes that when a new thread needs the lock, it would just stop the owner thread and enter it. This task is very expensive because implies the revocation of the owner thread. Onodera et al [12] and Vasudevan et al [10] propose hybrid methods that mix a 2 process algorithm with n-process mutual algorithms (for instance, CAS). Vasudevan's algorithm, for instance, is capable of passing the lock to the younger thread without having to suspend the owner and only uses an extra two assignments and two comparisons. Russell and Detlefs [9] also offers lock transfer, but their transfer algorithm is more expensive and it has been questioned because the difficulty to ascertain if the biased lock is actually in possession of a particular thread.

Following, a biased lock snippet proposed by Vasudevan et al [10]. It first defines a structure with the id of the owner of thread and the two locks, the light weight and the heavier weight. In the biased locks we see that while the thread id is that of the owner, only the light weight lock is executed. When the thread is other than the owner, both locks are executed.The heavy weight, or not dominant, lock could be a variant of the Peterson's Algorithm before and after the critical section.

typedef struct {

ThreadId owner;

Lock2 t; /* lightweight, 2->process lock */

LockN n; /* N->process lock */

} Lock;

biased_lock(Lock *l) {

if (this_thread_id == l->owner)

lock2(l->t);

else {

lockN(l->n);

lock2(l->t);

}

}

biased_unlock(Lock *l) {

if (this_thread_id == l->owner)

unlock2(l->t);

else {

unlock2(l->t);

unlockN(l->n);

}

}

Often, the problem of using the Peterson's Algorithm is that it uses barriers and that can make the dominant and other processes to step back and spin. To solve this issue asymmetric locks [10] are used, where the non-dominant threads actually ask for permission to the dominant one to step in, making used of a flag and a grant variable. The flag indicates that there is a request from a process from a lesser thread. When the dominant is about to end with its critical section, checks if the flag is set. If so, sets the grant variable and retires with a barrier until the lesser thread ends, moment at which the latter would reset the grant variable, so the dominant can come back in.

An example of biased locks: Rogers' lock

Dr. Ian Rogers (Manchester University, Azul Systems, Google) has an extensive curriculum in computer science, going from network security to binary translators and was a program committee member for PPPJ2011. He is also part of the Android mobile group at Google.

In 2011 he presented some work on thin and biased locks [4] [5] where he proposed a biased lock with the following characteristics:

- Use and declaration of biased bit in the object header, using existing states in that header.

- Use of an unlocked state, containing information about the previous owner.

- When the first locks are tried, thin locking would be used.

- Ownership changes are detected at lock times. The biased bit would be set so the object is not biased.

- After a number of thin locks tries, the lock will become biased.

The object then would go through five different states of locking: unlocked, hashed, thin locked, bias locked and heavyweight locked.

Rogers used these basis to adapt a number of heuristics, the sites of the object allocation or the lock; the age of the object, how loaded the CPU is or how many spin locks attempts have been; and tries to come up with a solution to the question of how to decide when to bias an object.

Main concern with the model is the very assumption that the lock is unlikely to be revoked. If the locks are not hold for long times the advantages disappear, as cost of revoking and granting the locks again would overpass the benefits of the technique.

The object header for this model would then be like:

Where every field is defined as, according to Rogers:

- Mark: HotSpot's reachable indication for garbage collection

- Sample Count: number of transitions form unlocked state to locked.

- Shape: When set, it forbids biased locking.

- Age: Times that the object has been promotable

- Class Information: type identifier

- Hashed: indication of whether the locking information is from a hash code instead.

- Recursion count: Number of lock entries. 0 means unlocked. Thread id holds the previous owner. Maximum number means the lock is biased to the thread id.

- Monitor: Id pointing to the monitor associated to the object header when it is inflated.

The state machine that Rogers design is depicted in the companion figure. In his own words, "no short change"

See Also

- Mostly Lock-Free Malloc. Dice, Garthwaite: http://home.comcast.net/~pjbishop/Dave/lfmalloc-ismm02-submit.pdf

- Quickly Reacquirable Locks, US Patent. Dice et al. http://patft.uspto.gov/netacgi/nph-Parser?Sect1=PTO1&Sect2=HITOFF&d=PALL&p=1&u=%2Fnetahtml%2FPTO%2Fsrchnum.htm&r=1&f=G&l=50&s1=7814488.PN.&OS=PN/7814488&RS=PN/7814488

- Lock Prediction. Lucia et al: http://www.cs.washington.edu/homes/tbergan/papers/hotpar10-lockprediction.pdf

- Dr. Rogers'website at Manchester Uni.: http://www.cs.man.ac.uk/~irogers/index.php

- Java HotSpot: http://www.oracle.com/technetwork/java/javase/tech/index-jsp-136373.html

- Java theory and practice: Synchronization optimizations in Mustang: http://www.ibm.com/developerworks/java/library/j-jtp10185/index.html

List of Acronyms

| Acronym | Expansion |

|---|---|

| CAS | Compare and Swap |

| TAS | Test and Set |

| LL/SC | Load Link / Store Conditional |

| JVM | Java Virtual Machine |

| VM | Virtual Machine |

| CPU | Central Processing Unit |

| PPPJ2011 | Principles and Practice of Programming in Java, 2011 |

| QRL | Quickly Recoverable Locks |

References

- Thin Locks: Featherweight Synchronization for Java. Bacon et al, 1998: http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf

- David's Dice Weblog: Biased Locking in HotSpot. Dice, 2010: https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot

- Java Locks: Analysis and Acceleration (Thesis), 2004. Kiyokuni Kawachiya: http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf

- Reducing Biased Lock Revocation By Learning - presentation, 2011. Rogers, Iyengar: http://www.cs.man.ac.uk/%7Eirogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf

- Reducing Biased Lock Revocation By Learning, 2011. Rogers, Ivengar: http://delivery.acm.org.prox.lib.ncsu.edu/10.1145/2070000/2069179/a7-rogers.pdf?ip=152.1.24.251&acc=ACTIVE%20SERVICE&CFID=95559947&CFTOKEN=76517280&__acm__=1333547803_ec09d783f7ad5ce9a828bfad3f619f2a

- Quickly Reacquirable Locks. Dice, Moir and Scherer, 2002: http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf

- Implementing fast java[tm] monitors with relaxed-locks. Dice, 2001: http://static.usenix.org/event/jvm01/full_papers/dice/dice.pdf

- One-sided Mutual Exclusion: A new Approach to Mutual Exclusion Primitives. Siebert, 2004: http://www.tu-chemnitz.de/informatik/service/if-berichte/ps/CSR-04-05.ps

- Eliminating synchronization-related atomic operations with biased locking and bulk rebiasing. Russel, Deflets, 2006: http://www.oracleimg.com/technetwork/java/biasedlocking-oopsla2006-wp-149958.pdf

- Simple and fast biased locks.Vasudevan, Nanjosi, Edwards, 2010: http://www.cs.columbia.edu/~sedwards/papers/vasudevan2010simple.pdf

- A fast Mutual Exclusion Algorithm. Lamport, 1986: http://research.microsoft.com/en-us/um/people/lamport/pubs/fast-mutex.pdf

- Lock Reservation for Java Reconsidered, Onodera, Kawachiya, Koseki, 2004: http://www.springerlink.com.prox.lib.ncsu.edu/content/7ufdkv0gc0fddr39/fulltext.pdf

- Inside the Java Virtual Machine 2, Bill Venners, McGraw-Hill Companies; 2nd edition 2000

- Thin Locks: Featherweight Synchronization for Java. Paper presented at the ACM Conference on Programming Language Design and Implementation, Montreal, Canada, June 1998.

- Thread Synchronization, Artima, Inc, 2009: http://www.artima.com/insidejvm/ed2/threadsynch.html

Quiz

1. Which one of this actions is not part of the locking process:

a. Acquire b. Spinning c. Release d. Critical Section

2. What is the main characteristic of a monitor in Java.

a. It's an object that includes synchronization properties. b. The maximum resolution that is capable in number of pixels. c. The frequency of the locks. d. The size of the code it uses.

3. Which of the following is not an atomic operation

a. Compare and Swap b. Fetch and Increment c. LL/SC d. Read and Fetch

4. What would be beneficial for a process that passes through the critical section very frequently

a. To short the time it takes to pass that critical section b. To make longer the time it takes to pass that critical section c. There is nothing that can be done. d. To make the time to pass that critical section equal for all the processes.

5. What happens when the owner has a biased lock, it is not in the critical section and another process tries to acquire the lock.

a. The owner gracefully concedes. b. The new process can enter the lock, as it is already open. c. The new task has to wait until the lock is released. d. An arbiter decides who has the right to the lock.

6. What in this list is not a cause of trouble with locks?

a. Race Conditions. b. Contention. c. Lack of Storage. d. Deadlocks.

7. In the monitor space, when we are in the inner region of the monitor, it is referred as:

a. Entering the monitor. b. Owning the monitor. c. Turning the monitor on. d. Exiting the monitor.

8. What of the following do thin locks need to work?

a. A special synchronization word into each object header. b. An infinite loop governed by a while clause. c. A longer pointer to memory. d. A bigger L2 cache.

9. In the Rogers' Lock, the header has how many bits?

a. 27 b. 32 c. 16 d. 64

10. Why is it convenient to wait some time before declaring an object "biaseble"?

a. Because the locking mechanism is rather slow. b. Because routines often have a different behavior during initialization and in the core of the program. c. Because it is recommended by the Java Manual of Style. d. Because it has been so determined by the IEEE.

Answers: 1-b,2-a,3-d,4-a,5-c,6-c,7-b,8-a,9-a,10-b