CSC/ECE 506 Spring 2012/9a ms: Difference between revisions


*[http://en.wikipedia.org/wiki/Multithreading_(computer_architecture) '''multi-threading''']: Multithreading computers have hardware support to efficiently execute multiple threads.

*[http://en.wikipedia.org/wiki/Mutual_exclusion '''mutual exclusion''']: It refers to the problem of ensuring that no two processes or threads (henceforth referred to only as processes) can be in their critical section at the same time.

*[http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf '''QRL''']: Quickly Reacquirable Lock

*[http://en.wikipedia.org/wiki/Symmetric_multiprocessing '''SMP''']: Symmetric multiprocessing (SMP) involves a multiprocessor computer hardware architecture where two or more identical processors are connected to a single shared main memory and are controlled by a single OS instance.

*[http://en.wikipedia.org/wiki/Test-and-set '''test-and-set''']: It is an instruction used to write to a memory location and return its old value as a single atomic (i.e., non-interruptible) operation.

==Quiz==

1. Which of the following lock artifacts hurt performance?

a) Repeated reads of a cached lock variable

b) Fairness

c) Repeated test-and-sets

d) Using a single lock variable (rather than multiple lock variables)

2. Which of the following functions are used to implement Java locks?

a) lock_acquire()

b) lock_release()

c) wait()

d) notify()

e) notifyAll()

3. Transitions from and to the spin-lock mode are called __________ and ____________, respectively.

4. For a given lock, if its lock sequence contains a very long repetition of a specific thread, the lock is said to exhibit __________ locality, while the specific thread is said to be the ____________ locker.

===Solutions:===

1 - a, b, c, d.

2 - a, b, c, d, e.

3 - inflation, deflation

4 - thread, dominant

Latest revision as of 02:18, 10 April 2012

Reducing locking overhead

Introduction

The cost of locking is not only the cost of executing the hardware instructions (such as test-and-set or LL/SC), but also the associated software overhead of creating a monitor and the system call for acquiring the actual lock. The mutual exclusion problem arises in an activity wherein each participating process executes, in strict cyclic order, program regions labeled remainder, acquire, critical section, and release. This problem has a long history. A solution consists of code for the acquire() and release() operations that ensures that only one process executes the critical section at any given time: once one process has completed acquire(), no other process completes an acquire() operation until the first process invokes release(). Solutions to the mutual exclusion problem are often referred to as locks.
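As a minimal sketch of such an acquire()/release() pair, the following builds a spin lock on an atomic test-and-set. The class name `TasLock` is hypothetical, and `AtomicBoolean.getAndSet` stands in for the hardware test-and-set instruction; this is an illustration of the idea, not how the JVM implements its own locks.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Minimal test-and-set spin lock; class and method names are illustrative.
class TasLock {
    private final AtomicBoolean held = new AtomicBoolean(false);

    // acquire(): atomically set the flag; keep spinning while the old value was true.
    void acquire() {
        while (held.getAndSet(true)) {
            Thread.onSpinWait(); // busy-wait hint to the CPU (Java 9+)
        }
    }

    // release(): clear the flag so that another thread's getAndSet can succeed.
    void release() {
        held.set(false);
    }

    public static void main(String[] args) throws InterruptedException {
        TasLock lock = new TasLock();
        int[] counter = {0};
        Runnable work = () -> {
            for (int i = 0; i < 100_000; i++) {
                lock.acquire();
                try {
                    counter[0]++; // critical section
                } finally {
                    lock.release();
                }
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        a.join();
        b.join();
        System.out.println(counter[0]);
    }
}
```

Every call to acquire() performs at least one atomic operation, which is exactly the per-acquisition cost that the techniques in the rest of this article try to reduce.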

Synchronization in Java

Language-level support for multi-threading is one of the strengths of the Java programming language, and much of the language is therefore concerned with coordinating the sharing of data among multiple threads. To limit memory overhead, the Java runtime system kept information about locked objects in a (software) table called a monitor cache. Access to this cache had to be serialized as well, which meant that performance got worse as a program used more locks.

Memory Model for Data

The JVM organizes the data of a running Java application into several runtime data areas: one or more Java stacks, a heap, and a method area.

Each thread has its own Java stack. The stack contains data that cannot be accessed by other threads (including the local variables, parameters, and return values of each method the thread has invoked). The data on the stack is limited to primitive types and object references. The JVM has only one heap which is shared by all threads. The heap contains objects. The Method Area is another place where data can reside. It contains all the class (or static) variables used by the program. The method area is similar to the stack in that it contains only primitive types and object references. Unlike the stack, however, the class variables in the method area are shared by all threads.

Sharing and Locks

The sharing of data on a multiprocessor differs from that on a uniprocessor. On a uniprocessor system, multiple threads do not execute simultaneously; they time-share the processor. On a multiprocessor, multiple threads execute concurrently on different processors, so contention for locks is tighter and the rules for sharing data must be stricter.

As mentioned above, the heap and the method area contain all the data that is shared by multiple threads. To coordinate shared data access among multiple threads, the Java virtual machine associates a lock with each object and class. A lock is like a privilege that only one thread can "possess" at any one time. If a thread wants to lock a particular object or class, it asks the JVM. At some point after the thread asks the JVM for a lock (maybe very soon, maybe later, possibly never) the JVM gives the lock to the thread. When the thread no longer needs the lock, it returns it to the JVM. If another thread has requested the same lock, the JVM passes the lock to that thread. Class locks are actually implemented as object locks. When the JVM loads a class file, it creates an instance of class java.lang.Class. When you lock a class, you are actually locking that class's Class object. Threads need not obtain a lock to access instance or class variables. If a thread does obtain a lock, however, no other thread can obtain the same lock, and so no other thread can enter code guarded by it, until the thread that owns the lock releases it.

Monitors

The JVM uses locks in conjunction with monitors. A monitor is basically a guardian that watches over a sequence of code, making sure only one thread at a time executes it. Each monitor is associated with an object reference. Monitors combine the following three features:

- Shared data.

- Operations on the data.

- Synchronization, scheduling.

Monitors are especially convenient for synchronization involving a lot of state. In this respect they resemble modules and abstract data types, and they are built into some concurrent programming languages. When a thread arrives at the first instruction in a block of code that is under the watchful eye of a monitor, the thread must obtain a lock on the referenced object. The thread is not allowed to execute the code until it obtains the lock. Once it has obtained the lock, the thread enters the block of protected code. When the thread leaves the block, no matter how it leaves the block, it releases the lock on the associated object. In the style of C, a queue manipulation monitor might look like:<ref>http://courses.mpi-sws.org/os-ss11/lectures/proc5.pdf</ref>

monitor QueueHandler;

struct {

int add, remove, buffer[200];

} queue;

void AddToQueue(int val)

{ – add val to end of queue – }

int RemoveFromQueue()

{ – remove value from queue, return it – }

end monitor
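In Java the same idea can be expressed with synchronized methods, since every object carries its own monitor. The sketch below is one possible rendering of the queue monitor above, not the version from the cited lecture notes: the buffer size of 200 comes from the pseudocode, while the `count` field and the wait()/notifyAll() handling of the full and empty cases are assumptions added to make it complete.

```java
// Illustrative Java counterpart of the QueueHandler monitor; names are hypothetical.
class QueueHandler {
    private final int[] buffer = new int[200];
    private int add = 0;    // index of the next free slot
    private int remove = 0; // index of the oldest element
    private int count = 0;  // number of stored elements (assumption, not in the pseudocode)

    // Add val to the end of the queue, waiting while the buffer is full.
    synchronized void addToQueue(int val) throws InterruptedException {
        while (count == buffer.length) {
            wait(); // releases the monitor until another thread calls notifyAll()
        }
        buffer[add] = val;
        add = (add + 1) % buffer.length;
        count++;
        notifyAll();
    }

    // Remove the value at the front of the queue and return it, waiting while it is empty.
    synchronized int removeFromQueue() throws InterruptedException {
        while (count == 0) {
            wait();
        }
        int val = buffer[remove];
        remove = (remove + 1) % buffer.length;
        count--;
        notifyAll();
        return val;
    }
}
```

The shared data (the buffer and indices), the operations on it, and the synchronization all live in one class, which matches the three monitor features listed earlier.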

Synchronization<ref>http://www.javaworld.com/javaworld/jw-07-1997/jw-07-hood.html?page=1</ref>

A single thread is allowed to lock the same object multiple times. For each object, the JVM maintains a count of the number of times the object has been locked. An unlocked object has a count of zero. When a thread acquires the lock for the first time, the count is incremented to one. Each time the same thread acquires the lock on the same object again, the count is incremented. Each time the thread releases the lock, the count is decremented. When the count reaches zero, the lock is released and made available to other threads.
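The count kept for an intrinsic object lock is not directly observable from Java code, but `java.util.concurrent.locks.ReentrantLock` follows the same counting rule and exposes it through `getHoldCount()`. The sketch below uses that library class as a stand-in to trace the count; the class name `HoldCountDemo` is hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Traces the per-thread hold count of a reentrant lock through nested acquire/release.
class HoldCountDemo {
    // Returns the hold count observed after each step.
    static int[] trace() {
        ReentrantLock lock = new ReentrantLock();
        int[] counts = new int[5];
        counts[0] = lock.getHoldCount(); // unlocked
        lock.lock();
        counts[1] = lock.getHoldCount(); // first acquisition
        lock.lock();
        counts[2] = lock.getHoldCount(); // nested acquisition by the same thread
        lock.unlock();
        counts[3] = lock.getHoldCount(); // one release: still held
        lock.unlock();
        counts[4] = lock.getHoldCount(); // count back to zero: lock is free
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(trace())); // prints [0, 1, 2, 1, 0]
    }
}
```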

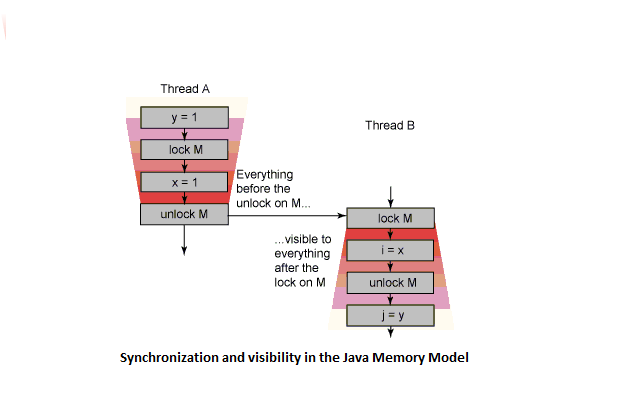

The Java Memory Model says that one thread exiting a synchronized block happens-before another thread enters a synchronized block protected by that same lock; this means that whatever memory operations are visible to thread A when it exits a synchronized block protected by lock M are visible to thread B when it enters a synchronized block protected by M, as shown in the adjacent figure <ref>http://www.ibm.com/developerworks/java/library/j-jtp10185/index.html</ref>

For a Java developer, the keyword synchronized is provided to enforce mutually exclusive execution of a statement or a method. On entering a synchronized block, a lock is acquired; the block is not executed until the lock has been acquired. The opcodes monitorenter and monitorexit are used on entering and exiting the synchronized block. When the JVM encounters monitorenter, it acquires the lock for the referenced object. If the thread already owns the lock for the object, the lock count is incremented. Similarly, when the JVM executes monitorexit, the count is decremented. The monitor lock is released when the count reaches zero.
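The two source-level forms can be sketched as follows. The class `SyncCounter` is hypothetical; both methods lock the same object (`this`), so they exclude each other. The comment about how the compiler treats each form reflects typical javac behavior and is worth confirming with `javap -c` on your own toolchain.

```java
// Two equivalent ways to guard the same field with the monitor of 'this'.
class SyncCounter {
    private int value = 0;

    // Synchronized method: the lock on 'this' is held for the whole call.
    // (The compiler flags the method as synchronized instead of emitting explicit opcodes.)
    synchronized void incrementWithMethod() {
        value++;
    }

    // Synchronized statement: compiled to a monitorenter/monitorexit pair around the block.
    void incrementWithStatement() {
        synchronized (this) {
            value++;
        }
    }

    synchronized int get() {
        return value;
    }

    public static void main(String[] args) throws InterruptedException {
        SyncCounter c = new SyncCounter();
        Thread a = new Thread(() -> { for (int i = 0; i < 50_000; i++) c.incrementWithMethod(); });
        Thread b = new Thread(() -> { for (int i = 0; i < 50_000; i++) c.incrementWithStatement(); });
        a.start();
        b.start();
        a.join();
        b.join();
        System.out.println(c.get());
    }
}
```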

Sun's Java virtual machine specification states that synchronization is based on monitors. This point is reinforced at the Java VM level by the presence of monitorenter and monitorexit instructions.

First suggested by E. W. Dijkstra in 1971, conceptualized by P. Brinch Hansen in 1972-1973, and refined by C. A. R. Hoare in 1974, a monitor is a concurrency construct that encapsulates data and functionality for allocating and releasing shared resources (such as network connections, memory buffers, printers, and so on). To accomplish resource allocation or release, a thread calls a monitor entry (a special function or procedure that serves as an entry point into a monitor). If there is no other thread executing code within the monitor, the calling thread is allowed to enter the monitor and execute the monitor entry's code. But if a thread is already inside of the monitor, the monitor makes the calling thread wait outside of the monitor until the other thread leaves the monitor. The monitor then allows the waiting thread to enter. Because synchronization is guaranteed, problems such as data being lost or scrambled are avoided. To learn more about monitors, study Hoare's landmark paper, "Monitors: An Operating System Structuring Concept," <ref> http://john.cs.olemiss.edu/~dwilkins/Seminar/S05/Monitors.pdf </ref> first published by the Communications of the Association for Computing Machinery Inc. in 1974.

The Java virtual machine specification goes on to state that monitor behavior can be explained in terms of locks. Think of a lock as a token that a thread must acquire before a monitor allows that thread to execute inside of a monitor entry. That token is automatically released when the thread exits the monitor, to give another thread an opportunity to get the token and enter the monitor.

Java associates locks with objects: each object is assigned its own lock, and each lock is assigned to one object. A thread acquires an object's lock prior to entering the lock-controlled monitor entry, which Java represents at the source code level as either a synchronized method <ref> http://docs.oracle.com/javase/tutorial/essential/concurrency/syncmeth.html </ref> or a synchronized statement <ref> http://www.javamex.com/tutorials/synchronization_concurrency_synchronized1.shtml </ref>.

Problems with Monitors

Thin Lock <ref>http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf</ref>

In Java, methods of an object can be declared synchronized, which implies that the object must be locked for the duration of the method's execution. This causes substantial performance degradation even in the absence of any true concurrency. One way to speed up synchronization is to dedicate a portion of each object as a lock. Hence all objects in Java are potential locks (monitors). This potential is realized as an actual lock as soon as any thread enters a synchronized block on that object. A lock created in this way is known as a "thin lock."

Thin locks were invented by David Bacon and colleagues at IBM, and have been much experimented with and improved on since then.

Characteristics

A thin lock has the following characteristics:

- Speed: These locks are fast for uncontended acquisitions, which are the most common case in many situations. There is little overhead compared to no locking, which is good since a lot of Java code (especially in the class library) uses a lot of synchronization. In the absence of contention, initial locking and nested locking are very fast because they take only a few machine instructions, and in the presence of contention thin locks still perform better.

- Compactness: No extra memory is required, because all information about the lock is stored in the object itself. Only 24 bits of the object are used for locking, and other compression techniques ensure that this has no impact on the size of the object.

- Scalability: Global locks, and synchronization instructions that broadcast changes on the global bus, are kept to an absolute minimum, which results in efficient execution on large multiprocessors.

- Maintainability: Thin lock code is portable, assuming only that the platform provides a CAS instruction.

Algorithm

As noted above, thin locks are efficient for locks that are mostly uncontended: there is little overhead compared to no locking, which matters because a lot of Java code (especially in the class library) uses a lot of synchronization.

As soon as a lock becomes contended, however, it is no longer obvious what is most efficient. If a lock is held for just a very short time, and JRockit is running on a multi-CPU (SMP) machine, then the best strategy is to "spin-lock." This means that the thread that wants to acquire the lock continuously checks whether the lock is still taken, "spinning" in a tight loop. This costs some performance: no user code runs on that CPU while it spins, so the CPU wastes time that could have been spent on other threads. Still, this method is preferable if the other thread releases the lock after just a few cycles in the spin loop. This is what is meant by a contended thin lock.

To optimize Java's locking performance, consider the cases below, each less common than the one preceding it:

- Locking an object that is unlocked.

- Locking an object that is already locked by the current thread a small number of times (shallowly nested locking).

- Locking an object that is already locked by the current thread many times (deeply nested locking).

- Attempting to lock an object that is already locked by another thread, and for which no other threads are waiting.

- Attempting to lock an object that is already locked by another thread, and for which other threads are waiting.

Let us assume that thin locks rely only on the "compare-and-swap" atomic instruction. A compare-and-swap instruction takes three inputs: an address, an old value, and a new value. If the content of the address matches the old value, the new value is stored at the address and true is returned. Otherwise the content of the address remains unchanged and false is returned.
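In Java these semantics are available through `AtomicInteger.compareAndSet(expected, update)`, where the `AtomicInteger` object plays the role of the address. The following sketch shows one successful and one failed CAS, plus the usual retry loop built on top of it; `CasDemo` and `casIncrement` are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasDemo {
    // Retry loop: read the old value, compute the new one, and publish it only if
    // no other thread changed the cell in between; otherwise try again.
    static int casIncrement(AtomicInteger cell) {
        while (true) {
            int old = cell.get();
            if (cell.compareAndSet(old, old + 1)) {
                return old + 1;
            }
        }
    }

    public static void main(String[] args) {
        AtomicInteger cell = new AtomicInteger(5);
        System.out.println(cell.compareAndSet(5, 7)); // true: content matched, cell is now 7
        System.out.println(cell.compareAndSet(5, 9)); // false: content is 7, cell unchanged
        System.out.println(casIncrement(cell));       // 8
    }
}
```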

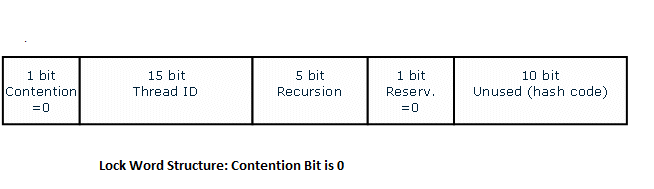

Using encoding techniques, 24 bits of the object header are freed and reserved for implementing thin locks, as shown in the figures. The adjacent figure shows the basic structure of a thin lock word on the first acquisition of a lock. The lock type bit distinguishes a thin lock from a fat lock: '0' corresponds to a thin lock, whereas '1' represents a fat lock <ref>http://harmony.apache.org/subcomponents/drlvm/TM.html</ref>

In the absence of contention, the lock type is zero, and the lock word has the following structure:

In the above figure,

- Contention bit: 0, indicating the absence of contention

- Thread ID (15 bits): the ID of the owning thread, or 0 if the lock is free

- Recursion count: the number of times that the lock has been acquired by the same thread, minus 1

- Reservation bit: the flag indicating whether the lock is reserved by a thread

- Rightmost 10 bits: unused by the thread manager (TM) and reserved for storing the hash codes of Java* objects

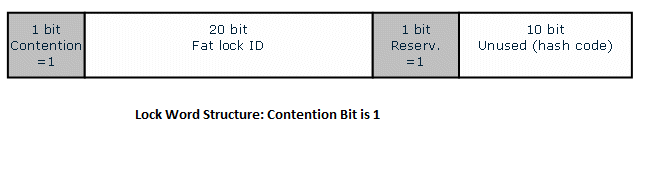

In the presence of contention, the contention bit is set to 1, and the thin compressed lock becomes a fat inflated lock with the following structure:<ref>http://dl.acm.org/citation.cfm?id=582433</ref>

In the above figure,

- Contention bit: 1, indicating the presence of contention

- Fat Lock ID (20 bits): the ID of the corresponding fat lock

- Reservation bit: the flag indicating whether the lock is reserved by a thread

- Rightmost 10 bits: unused by the thread manager (TM) and reserved for storing the hash codes of Java* objects
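The two layouts can be sketched as bit fields of one 32-bit word. The field order follows the two lists above, from the contention bit at the top down to the hash bits at the bottom. The recursion-count width of 5 bits is an assumption (it is what remains of 32 bits after 1 + 15 + 1 + 10), as is the convention that a reservation bit of 1 means "reserved", which follows the worked example in this article. `LockWord` and its methods are hypothetical names.

```java
// Packs and unpacks the thin and fat lock word layouts described above.
class LockWord {
    static final int CONTENTION_BIT = 1 << 31;  // 0 = thin lock, 1 = fat lock
    static final int THREAD_SHIFT = 16;         // 15-bit thread ID (thin only)
    static final int RECURSION_SHIFT = 11;      // 5-bit recursion count (assumed width)
    static final int FAT_ID_SHIFT = 11;         // 20-bit fat lock ID (fat only)
    static final int RESERVATION_BIT = 1 << 10; // 1 = reserved (assumed polarity)
    static final int HASH_MASK = 0x3FF;         // rightmost 10 bits

    static int thin(int threadId, int recursion, boolean reserved, int hash) {
        return ((threadId & 0x7FFF) << THREAD_SHIFT)
                | ((recursion & 0x1F) << RECURSION_SHIFT)
                | (reserved ? RESERVATION_BIT : 0)
                | (hash & HASH_MASK);
    }

    static int fat(int fatLockId, boolean reserved, int hash) {
        return CONTENTION_BIT
                | ((fatLockId & 0xFFFFF) << FAT_ID_SHIFT)
                | (reserved ? RESERVATION_BIT : 0)
                | (hash & HASH_MASK);
    }

    static boolean isFat(int word)      { return (word & CONTENTION_BIT) != 0; }
    static boolean isReserved(int word) { return (word & RESERVATION_BIT) != 0; }
    static int threadId(int word)       { return (word >>> THREAD_SHIFT) & 0x7FFF; }
    static int recursion(int word)      { return (word >>> RECURSION_SHIFT) & 0x1F; }
    static int fatLockId(int word)      { return (word >>> FAT_ID_SHIFT) & 0xFFFFF; }
    static int hash(int word)           { return word & HASH_MASK; }
}
```

For example, `LockWord.thin(42, 3, true, 0x155)` describes a thin lock owned by thread 42 that has been acquired four times, since the stored count is the number of acquisitions minus 1.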

Spinning on contention leads to bad performance if the lock is not going to be released quickly. In this case, the lock is "inflated" to a "fat lock." A fat lock has the following characteristics:

- It requires a little extra memory, in the form of a separate list of threads wanting to acquire the lock.

- It is relatively slow to take.

- One (or more) threads can register as queuing for (blocking on) that lock.

A thread that encounters contention on a fat lock registers itself as blocking on that lock and goes to sleep, giving up the rest of the time quantum given to it by the OS. While this means that the CPU will be used for running real user code on another thread, the extra context switch is still expensive compared to spin locking. When a thread does this, we have a "contended fat lock."

When the last contending thread releases a fat lock, the lock normally remains fat. Taking a fat lock, even without contention, is more expensive than taking a thin lock (but less expensive than converting a thin lock to a fat lock). If JRockit believes that the lock would benefit from being thin (basically, if the contention was pure "bad luck" and the lock is normally uncontended), it might "deflate" it to a thin lock again. A special note regarding locks: if wait, notify, or notifyAll is called on a lock, it is automatically inflated to a fat lock. A good practice (not only for this reason) is therefore not to mix actual locking with this kind of notification on a single object.

The monitor acquisition process implemented by the "hythread_thin_monitor_try_enter()" function is shown in the following diagram:

First, the thread uses the reservation bit to check whether the required lock is owned by this thread. If it is, the thread increases the recursion count by 1 and exits the function. This is the fast path of the monitor enter operation for a single-threaded application. The fast path involves only a few assembly instructions and performs no expensive atomic compare-and-swap (CAS) operations.

If the lock has not yet been reserved, it is checked for being occupied. A free lock is set to be reserved and acquired simultaneously with a single CAS operation. If the lock is busy, the system checks whether the lock is fat.

The lock table holds a mapping between the fat lock ID and the actual monitor. Fat monitors are extracted from the lock table and acquired. If the lock is not fat and reserved by another thread, then this thread suspends the execution of the lock owner thread, removes the reservation, and resumes the owner thread. After that, the lock acquisition is tried again.

Example

As shown in the figure, consider a thin lock entry for a block that is initially not acquired by any thread.

Thread A requests access to the block. As the lock is not held by any other thread, Thread A is granted access and the Reserved bit is set to one. The recursion count is still zero, as there is no recursion.

Thread A re-enters the same code block and requests access again. The lock is already held by Thread A, so access is granted and the recursion count is incremented to one, denoting that Thread A has acquired the lock twice.

This is followed by a request from Thread B to access the same block. As Thread A holds the lock, Thread B is not granted access and is placed in the entry code to wait until Thread A releases the lock.

Thread A releases the lock twice: the first release decrements the recursion count, and the second finally releases the lock, resetting the Reserved bit to zero. On this release, Thread B is allowed to acquire the lock. As there was contention on the lock, the contention bit is set to 1 and the lock is inflated to a fat lock. The Fat Lock ID now points to the fat lock in the fat lock table, which is acquired by Thread B. The lock count in the fat lock is incremented to one, denoting that Thread B has acquired it once.

When Thread B releases the lock, the lock count in the fat lock is decremented to zero, and the lock is free for use by any other thread. Note that the lock stays inflated from now on.
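The thin part of this walk-through can be replayed with a much simplified model. In the sketch below the lock word holds only an owner ID and a recursion count, thread IDs are passed in explicitly (and must be nonzero) so the run is deterministic, and contention is merely reported to the caller, whereas the scheme described above would go on to inflate the lock. Reservation and the fat lock table are left out entirely, and all names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Simplified thin lock: owner ID in the upper bits, recursion count in the low 8 bits.
class ThinLockSketch {
    private static final int COUNT_BITS = 8;
    private static final int COUNT_MASK = (1 << COUNT_BITS) - 1;
    private final AtomicInteger word = new AtomicInteger(0); // 0 means unlocked

    // Returns true if threadId now holds the lock. False signals contention (or a
    // full recursion counter), where a real implementation would spin or inflate.
    boolean tryEnter(int threadId) {
        int w = word.get();
        if (w == 0) {
            // Unlocked: claim with a single CAS; the recursion count starts at 0.
            return word.compareAndSet(0, threadId << COUNT_BITS);
        }
        if ((w >>> COUNT_BITS) == threadId && (w & COUNT_MASK) < COUNT_MASK) {
            // Nested acquisition: no CAS needed, only the owner writes a held word.
            word.set(w + 1);
            return true;
        }
        return false;
    }

    void exit(int threadId) {
        int w = word.get();
        if (w == 0 || (w >>> COUNT_BITS) != threadId) {
            throw new IllegalMonitorStateException("not the owner");
        }
        // Count 0 means the outermost release: clear the word; otherwise decrement.
        word.set((w & COUNT_MASK) == 0 ? 0 : w - 1);
    }

    int recursionCount() {
        return word.get() & COUNT_MASK;
    }

    public static void main(String[] args) {
        ThinLockSketch lock = new ThinLockSketch();
        final int A = 1, B = 2;
        System.out.println(lock.tryEnter(A));      // true: the lock was free
        System.out.println(lock.tryEnter(A));      // true: nested acquisition
        System.out.println(lock.recursionCount()); // 1: acquired twice
        System.out.println(lock.tryEnter(B));      // false: held by A
        lock.exit(A);
        lock.exit(A);
        System.out.println(lock.tryEnter(B));      // true: A has fully released
    }
}
```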

Biased Lock

Biased locks are an optimization over thin locks. Biased locking takes advantage of the empirical observation that most locks are acquired by only a single thread during their lifetime. This allows a thread to never actually give up the lock on "lock release": the next time the same thread tries to acquire the lock, it finds that it already owns it. This saves the owner thread the additional synchronization instruction (e.g., LL/SC) on every acquisition after the first. Thus, the lock is "biased" towards the owner thread. If a non-owner thread attempts to acquire a biased lock, the bias is "revoked" and the lock is inflated into a fat lock, since another thread is now interested in acquiring it.

All the algorithms discussed above rely on atomic instructions such as compare-and-swap. Because atomic operations are especially expensive on modern architectures (they act as memory fences, i.e. the memory queues must be flushed), they are becoming the major overhead factor in Java locks. Atomic operations are very effective when multiple threads acquire a lock symmetrically, but they are not the best solution when lock acquisitions are asymmetric, a case that is very common in an important class of applications that includes Java Virtual Machines. If an object’s lock is frequently acquired by a specific thread, the lock’s cost may be further reduced by giving precedence to that thread while shifting costs to other threads. This optimization is known as quickly reacquirable mutual exclusion locks (QRLs), biased locking, or reservation locks.

Algorithm<ref>https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot</ref>

To make this optimized technique effective, there must exist a locality such that each object’s lock is frequently acquired by a specific thread, for which the lock is to be reserved. This locality is known as thread locality and it is defined in terms of the lock sequence, the sequence of threads (in temporal order) that acquire the lock. The key idea is to allow a lock to be reserved for a thread. The reservation-owner thread can perform the lock processing without atomic operations, so the lock overhead is minimized. If another thread attempts to acquire the reserved lock, the reservation must first be canceled, and the lock processing falls back to an existing algorithm. For a given lock, if its lock sequence contains a very long repetition of a specific thread, the lock is said to exhibit thread locality, while the specific thread is said to be the dominant locker.
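One simple way to make the notion of a dominant locker concrete is to scan a recorded lock sequence for its longest consecutive run of a single thread. The text does not say how long a repetition must be to count as "very long", so the sketch below just reports the thread with the longest run and leaves any threshold to the caller; `LockSequence` and `dominantLocker` are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;

class LockSequence {
    // Returns the thread whose consecutive run in the lock sequence is longest,
    // or null for an empty sequence. Ties go to the earliest such run.
    static String dominantLocker(List<String> sequence) {
        String best = null;
        int bestRun = 0;
        String current = null;
        int run = 0;
        for (String thread : sequence) {
            run = thread.equals(current) ? run + 1 : 1;
            current = thread;
            if (run > bestRun) {
                bestRun = run;
                best = thread;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Thread A acquires the lock four times in a row before any other thread does.
        List<String> sequence = Arrays.asList("A", "A", "A", "A", "B", "A", "C", "C");
        System.out.println(dominantLocker(sequence)); // prints A
    }
}
```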

The Reservation lock mechanism can be explained in detail as below. The key idea of this algorithm is to reserve locks for threads. When a thread attempts to acquire an object’s lock, one of the following actions is taken in accordance with the lock’s reservation status:

- If the object’s lock is reserved for the thread, the runtime system allows the thread to acquire the lock with a few instructions involving no atomic operations.

- If the object’s lock is reserved for another thread, the runtime system cancels the reservation, and falls back to a conventional algorithm for further processing.

- If the object’s lock is not reserved, or the reservation was already canceled, the runtime system uses a conventional algorithm.
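The three cases amount to a small decision function over the lock's reservation status. The sketch below models only that decision; it is not a working lock, and the sentinel values `NONE` and `CANCELED`, the enum, and the method name are illustrative assumptions. Real thread IDs are assumed to be positive.

```java
// Models which path an acquiring thread takes, given the lock's reservation status.
class ReservationLock {
    enum Action { FAST_PATH, CANCEL_AND_FALL_BACK, CONVENTIONAL }

    static final long NONE = 0;      // the lock has never been reserved
    static final long CANCELED = -1; // the reservation was already canceled

    static Action decide(long reservedFor, long currentThread) {
        if (reservedFor == NONE || reservedFor == CANCELED) {
            return Action.CONVENTIONAL;     // use a conventional algorithm
        }
        if (reservedFor == currentThread) {
            return Action.FAST_PATH;        // a few instructions, no atomic operations
        }
        return Action.CANCEL_AND_FALL_BACK; // reserved for another thread
    }

    public static void main(String[] args) {
        System.out.println(decide(7, 7));        // FAST_PATH
        System.out.println(decide(7, 9));        // CANCEL_AND_FALL_BACK
        System.out.println(decide(CANCELED, 9)); // CONVENTIONAL
    }
}
```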

If another thread tries to acquire a biased object, however, we need to revoke the bias from the original thread. (At this juncture we can either rebias the object or simply revert to normal locking for the remainder of the object's lifetime.) Revocation must suspend a thread to scan its stack, or ask the thread to do it itself. The key challenge in revocation is to coordinate the revoker and the revokee (the bias-holding thread): we must ensure that the revokee doesn't lock or unlock the object during revocation.

The QRL is strictly a response to the latency of compare-and-swap (CAS). It is important to note that CAS incurs local latency, but does not impact scalability on modern processors. A common assumption is that each CAS operation "goes on the bus" and that, given that the interconnect is a fixed and contended resource, use of CAS can impair scalability. This assumption is false. The CAS can be accomplished locally, with no bus transactions, if the line is already in M-state. CAS is usually implemented on top of the existing MESI snoop-based cache coherence protocol, but in terms of the bus, CAS is no different than a store.

Example

Let us assume that we have a true 16-way system. We launch a thread that executes the compare-and-swap (CAS) instruction 1 billion times to a thread-private location, and measure the elapsed time.

If we then launch 16 threads, all CASing to thread-private locations, the elapsed time will be the same. The threads don't interfere with or impede each other in any way. If, however, we launch 16 threads all CASing to the same location, we will typically see a massive slow-down because of interconnect traffic. (The sole exception to that claim is Sun's Niagara, which can gracefully tolerate sharing on a massive scale as the L2$ serves as the interconnect.) If we then change that CAS to a normal store we will also see a similar slow-down; as noted before, in terms of coherency bus traffic, CAS isn't appreciably different from a normal store. Some of the misinformation regarding CAS probably arises from the original implementation of lock:cmpxchg (CAS) on Intel processors.
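The experiment described above can be approximated in Java with `java.util.concurrent.atomic.AtomicLong`, whose `compareAndSet` method performs a CAS. The following is a rough sketch rather than a rigorous benchmark: the class name `CasSharingDemo`, the thread count, and the iteration count are arbitrary assumptions, there is no JIT warm-up, and separate `AtomicLong` objects are not padded, so the JVM may still place "private" counters on the same cache line.

```java
import java.util.concurrent.atomic.AtomicLong;

// Rough sketch only; timings vary by machine and JVM and are not a benchmark.
final class CasSharingDemo {
    /** Creates n independent counters. */
    static AtomicLong[] counters(int n) {
        AtomicLong[] result = new AtomicLong[n];
        for (int i = 0; i < n; i++) {
            result[i] = new AtomicLong();
        }
        return result;
    }

    /**
     * Starts the given number of threads; thread i performs `iterations`
     * successful CAS increments on targets[i % targets.length].
     * Returns the elapsed time in nanoseconds.
     */
    static long run(AtomicLong[] targets, int threads, int iterations) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            final AtomicLong target = targets[i % targets.length];
            workers[i] = new Thread(() -> {
                for (int n = 0; n < iterations; n++) {
                    long seen;
                    do {
                        seen = target.get();
                    } while (!target.compareAndSet(seen, seen + 1)); // retry on contention
                }
            });
        }
        long start = System.nanoTime();
        for (Thread worker : workers) {
            worker.start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        int threads = 4;            // assumption: adjust to the machine's core count
        int iterations = 1_000_000; // assumption: far fewer than the 1 billion in the text
        long privateNs = run(counters(threads), threads, iterations);
        long sharedNs = run(counters(1), threads, iterations);
        System.out.println("private counters: " + privateNs / 1_000_000 + " ms");
        System.out.println("shared counter:   " + sharedNs / 1_000_000 + " ms");
    }
}
```

On a typical multicore machine the shared-counter run would be expected to take noticeably longer, but the exact ratio depends on the hardware and on how the JVM lays out the counters in memory.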

The lock: prefix caused the LOCK# signal to be asserted, acquiring exclusive access to the bus. This didn't scale, of course. Subsequent implementations of lock:cmpxchg leverage the cache coherency protocol (typically snoop-based MESI) and don't assert LOCK#. Note that lock:cmpxchg will still drive LOCK# in one extremely exotic case: when the memory address is misaligned and spans 2 cache lines. Finally, we can safely use cmpxchg on uniprocessors but must use lock:cmpxchg on multiprocessor systems. Lock:cmpxchg incurs more latency, but then again it's a fundamentally different instruction than cmpxchg. Lock:cmpxchg is serializing, providing bidirectional mfence-equivalent semantics. (Fence or barrier instructions are never needed for uniprocessors.) This fact might also have contributed to the myth that CAS is more expensive on MP systems. But of course lock:cmpxchg incurs no more latency on a 2x system than on an 8x system.

Regarding bus operations, assume that a load is followed closely in program order by a store or CAS to the same cache line. If the cache line is not present in the issuing processor's cache, the load will generate a request-to-share transaction to get the line in S-state, and the store or CAS will result in a subsequent request-to-own transaction to force the line into M-state. This second transaction can be avoided on some platforms by using a prefetch-for-write instruction before the load, which will force the line directly into M-state.

It's also worth mentioning that on typical classic SMP systems, pure read-sharing is very efficient. All the requesting processors can have the cache line(s) replicated in their caches. But if even one processor is writing to a shared cache line, those writes will generate considerable cache coherence traffic: assuming a write-invalidate cache coherence policy (as opposed to write-update), the readers will continually reload the cache line just to have it subsequently invalidated by the writer(s). Put differently, loads to a cache line are cheap if other processors are loading from but not storing to that same line. Stores are cheap only if no other processors are concurrently storing to or loading from that same line. (We can draw an imprecise analogy between cache coherency protocols and read-write locks in that, for a given cache line, there can only be one writer at any given time: the processor with the line in M-state. Multiple readers of the line are allowed, and of course the lifetime of a reader can't overlap a write. Unlike traditional read-write locks, however, the cache coherency protocol allows writers to invalidate readers, so we can't push the analogy too far. In a twisted sense, the coherency protocol is obstruction-free.)

Coherency bandwidth is a fixed and contended global resource, so in addition to local latency, excessive sharing traffic will impact overall scalability and impede the progress of threads running on other processors. A so-called coherency miss (for example, a load on processor P1 where processor P2 has the cache line in M-state) is typically much slower than a normal miss (except on Niagara). Recall, too, that acquiring a lock involves a store (CAS, really) to the lock metadata, so if threads on processors P1 and P2 are iterating, acquiring the same lock, the lock acquisition itself will generate coherency traffic and result in cache "sloshing" of the line(s) holding the metadata. Generally, excessive coherency traffic is to be avoided on classic SMP systems. But as usual, there's an exception to any rule, and in this case that exception is Sun's Niagara, which can tolerate sharing gracefully.

Conclusion

QRL locks are a novel class of mutual exclusion algorithms that are heavily optimized for a very common data access pattern in which a single process repeatedly and solely acquires a lock. QRL locks represent the first true atomic-free locks for this ultra-fast path. Because they can be generalized to use any mutual exclusion algorithm with a standard interface, as well as many algorithms that do not use a standard interface, QRL locks can obtain the benefits of any properties of such locks for the uncontended case at the expense of a mere handful of non-atomic instructions in their critical path. QRL locks are optimized for a single-process repeated-acquisition data access pattern; however, rebiasable QRLs that can be used with migratory data access patterns have also been demonstrated.

Another approach to improving the performance of Java locks is to eliminate the locks entirely rather than to reduce their cost. The most common elimination technique is to use escape analysis to identify objects that are accessible only by their creator threads, and to eliminate all lock operations for such non-escaping objects. There are also several techniques for eliminating recursive locks. For example, when one synchronized method is inlined into another, the JIT compiler can eliminate the inner locks if it detects that the receiver objects of these methods are always identical.
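The two situations described above look roughly like this in source code. The class `LockElisionCandidates` and its methods are hypothetical examples; whether a particular JIT compiler actually removes these locks depends on the JVM and its settings, so the comments mark them only as candidates.

```java
// Illustrative candidates for lock elimination; actual elision is up to the JIT.
final class LockElisionCandidates {
    /**
     * The StringBuffer never escapes this method, so only the creating thread
     * can reach it. Escape analysis may let the JIT drop the locking done by
     * StringBuffer's synchronized methods.
     */
    static String join(String a, String b) {
        StringBuffer buffer = new StringBuffer();
        buffer.append(a);
        buffer.append(b);
        return buffer.toString();
    }

    private int count;

    synchronized void increment() {
        count++;
    }

    /**
     * Calls increment() on the same receiver while already holding its lock.
     * If increment() is inlined here, the inner acquisitions are recursive
     * locks on `this` and are candidates for removal.
     */
    synchronized void incrementTwice() {
        increment();
        increment();
    }

    synchronized int get() {
        return count;
    }

    public static void main(String[] args) {
        LockElisionCandidates counter = new LockElisionCandidates();
        counter.incrementTwice();
        System.out.println(join("count=", String.valueOf(counter.get()))); // prints "count=2"
    }
}
```

The program behaves the same whether or not the locks are elided; the optimization changes only the cost of the synchronization, not its semantics.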

References

<references/>

Glossary

- CAS: Compare-and-swap (CAS) is an atomic CPU instruction used in multithreading to achieve synchronization.

- JIT: Just-in-time compilation, also known as dynamic translation, is a method to improve the runtime performance of computer programs.

- JVM: A Java virtual machine (JVM) is a virtual machine capable of executing Java bytecode.

- LL/SC: Load-linked/Store-Conditional

- MESI: The MESI protocol (known also as Illinois protocol) is a widely used cache coherency and memory coherence protocol. It is the most common protocol which supports write-back cache.

- monitor: A monitor is basically a guardian in that it watches over a sequence of code, making sure only one thread at a time executes the code.

- MP: Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system.

- multi-threading: Multithreading computers have hardware support to efficiently execute multiple threads.

- mutual exclusion: It refers to the problem of ensuring that no two processes or threads (henceforth referred to only as processes) can be in their critical section at the same time.

- QRL: Quickly Reacquirable Lock

- SMP: Symmetric multiprocessing (SMP) involves a multiprocessor computer hardware architecture where two or more identical processors are connected to a single shared main memory and are controlled by a single OS instance.

- test-and-set: It is an instruction used to write to a memory location and return its old value as a single atomic (i.e., non-interruptible) operation.

See Also

1. Locking and Synchronization in Java - http://www.artima.com/insidejvm/ed2/threadsynch.html

2. C.A.R. Hoare, "Monitors: An Operating System Structuring Concept," http://john.cs.olemiss.edu/~dwilkins/Seminar/S05/Monitors.pdf

3. Java Tech: The ABCs of Synchronization - http://today.java.net/pub/a/today/2004/08/02/sync1.html

4. Synchronization in Java - http://www.javaworld.com/javaworld/jw-07-1997/jw-07-hood.html?page=1

5. Kiyokuni Kawachiya, "Java Locks: Analysis and Acceleration" - http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf

6. Thin Locks - http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf

7. Biased Locks - http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf

8. http://www.cs.man.ac.uk/~irogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf

9. Concurrency in Java - http://jeremymanson.blogspot.com/2007/08/atomicity-visibility-and-ordering.html

Quiz

1. Which of the following lock artifacts hurt performance?

a) Repeated reads of a cached lock variable

b) Fairness

c) Repeated test and sets

d) Using a single lock variable (rather than multiple lock variables)

2. Which of the following functions are used to implement Java locks?

a) lock_acquire()

b) lock_release()

c) wait()

d) notify()

e) notifyAll()

3. Transitions from and to the spin-lock mode are called __________ and ____________ , respectively.

4. For a given lock, if its lock sequence contains a very long repetition of a specific thread, the lock is said to exhibit __________ locality, while the specific thread is said to be the ____________ locker.

Solutions:

1 - a,b,c,d.

2 - a,b,c,d,e.

3 - inflation, deflation

4 - thread, dominant