CSC/ECE 506 Spring 2012/6b am: Difference between revisions

No edit summary |

|||

| (69 intermediate revisions by 2 users not shown) | |||

| Line 6: | Line 6: | ||

===Write Buffers in Uni-processors=== | ===Write Buffers in Uni-processors=== | ||

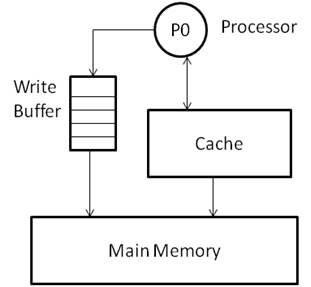

A write to be performed is | [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Uniprocessor_With_WB.png Figure 1] shows the cache-based single processor system with a write buffer. A write to be performed is pushed into the buffer implemented as a [http://en.wikipedia.org/wiki/FIFO FIFO] (First In - First Out) [http://en.wikipedia.org/wiki/Queue_(abstract_data_type) queue], which essentially ensures that the writes are performed in the order that they were called. In a uni-processor model, with the requirement and possibility of extracting [http://en.wikipedia.org/wiki/Instruction_level_parallelism Instruction Level Parallelism (ILP)], the writes may also be called out-of-order, provided there are some hardware/software protocols implemented to check the writes for any dependences that may exist in the instruction stream. | ||

A generic approach towards read-write accesses can be described as follows [http://people.cs.umass.edu/~weems/CmpSci635A/Lecture10/L10.24.html][http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0246a/Cjafdjgj.html]: | |||

* If a write is followed by a read request to the same memory location while the write is still in the buffer, the buffered value is returned | |||

* If a write is followed by a write request to the same memory location while the write is still in the buffer, the earlier write is overridden and is updated with the new write value | |||

This follows that the write buffers not only save memory access overhead on the first write to a location, but also on the closely-spaced successive read-after-write and write-after-write sequences. | |||

[[Image:Uniprocessor With WB.png|thumb|center|400px|Figure 1.Uni-processor cache based system with write buffer ]] | |||

[[Image: | |||

''Interested reader may read about the store buffer and its implementation in ARM Cortex-R series processors.<ref>http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0363e/Chdcahcf.html</ref><ref>http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0246a/Cjafdjgj.html</ref>'' | |||

== | ===Write Buffer Issues in Multiprocessors=== | ||

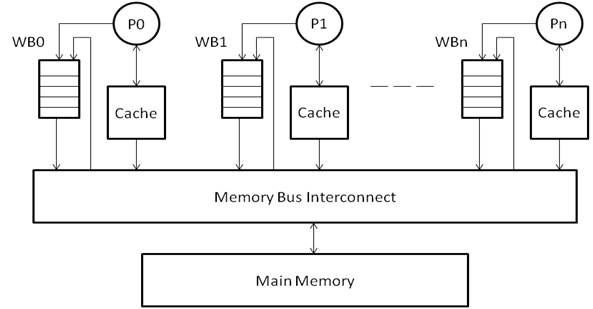

In a multiprocessor system, the same design can be extended, as shown in the [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Multiprocessor_with_WB.png Figure 2]. In a generic design, each processor will have its own private cache and a write buffer corresponding to the cache. The caches are connected by the means of an interconnect with each other as well as with the main memory. As can be seen in the [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Multiprocessor_with_WB.png Figure 2], each processor makes a write by pushing it into its write buffer, and the write buffer completes the task of performing the write to the main memory or the cache. Consistency is maintained between ordering of memory accesses at individual processors in the same way as explained in the section [[#Write Buffers in Uni-processors|above]]. | |||

[[Image:Multiprocessor with WB.png|thumb|center|400px|Figure 2. Cache- based multiprocessor system with write buffer <ref name="dubois">[http://mprc.pku.cn/mentors/training/ISCAreading/1986/p434-dubois/p434-dubois.pdf</ref>]] | |||

===The Coherence Problem=== | |||

=== | |||

However, with multiple processors working on the same task, it is essential that the memory ordering be consistent not only at individual processor level, but also with respect to all other processors in the system as well. Consider the case where a write (STORE A) has been issued by processor P1 into the WB1, and the write is waiting to be executed. Since the write has not yet been performed to the cache or the main memory, the other processors do not have any knowledge about the changes made to address A. As a result, a read operation by another processor from address A will take the value from either its own cache, write buffer, or the main memory (depending on whether it hits or misses in the cache). | |||

This is a classic problem in all multi-processor systems, termed as [http://en.wikipedia.org/wiki/Cache_coherence Cache Coherence] problem. It is the job of the designer to employ protocols that will take care of the sequential ordering of the instructions, and ensure that the writes made to any one of the caches are propagated and updated in all of the processor caches. With the addition of a write-buffer, we add another level to this problem, as we now have to ensure that pending write requests in a processor's buffer do not go unnoticed by other processors. | |||

The sequential ordering model used in the system holds a strong bearing on the write buffer management policy, as is explained in a [[#Sequential Consistency|later]] section. We will now take a look at the definitions of different cache coherence policies and then move on to study various hardware implementations of the write buffer and the policies employed. | |||

==Coherence Models== | |||

<blockquote>"A memory scheme is coherent if the value returned on a LOAD instruction is always the value given by the latest STORE instruction with the same address."<ref name="dubois" /></blockquote> | |||

= | The two conventional models followed to attain this objective are the snoopy-bus protocol and the directory-based coherence protocol<ref name="chapter-two">http://www.csl.cornell.edu/~heinrich/dissertation/ChapterTwo.pdf</ref>. The directory-based protocol is used for distributed memory systems, in which every processor keeps track of what data is being stored using a directory entry. In the snoopy-bus protocol, every processor monitors the reads and writes that are being serviced by the memory bus. The read and write requests can be broadcast on the bus for all the processors to respond based on whether or not they are in possession of that data in their cache. | ||

With the addition of write buffers in the system, it is now essential that a processor be aware of the memory transactions occurring not only at the caches of other processors, but also in their write buffers. The snoopy bus protocol can maintain coherence between multiple write buffers by communicating using one of the two protocols explained below. | |||

=== Write-Update === | === Write-Update === | ||

In this approach, a write request by a processor is first pushed onto its local write buffer, and then is broadcast on the bus for all other processors to see. Each processor updates its local cache and buffer with the new value of the data. This saves bus bandwidth in terms of writes, as there is only one broadcast that needs to be made in order for all the caches to be up to date with the newest value of the data. This is disadvantageous, however, when a snooped write transaction is present in a processor's cache - it needs to be updated right away, stalling other read operations that may be happening on that cache. | |||

===Write-Invalidate=== | |||

This approach is common when dealing with systems where a data block is being written by one processor, but is being read by multiple processors. Whenever a write is made to shared data, it is pushed onto the local write buffer, and an invalidate signal is sent to all the caches and buffers, asking for that data block to be made invalid, as the value is not updated to the latest one. This approach alleviates us of the problem presented by the update protocol, as a snooped cache block is invalidated right away and does not keep any read waiting on the cache. However, this may cause additional traffic on the bus as a result, and a separate bus for invalidation requests may be included in the design. | |||

Write–update strategy is liable to keep and update unnecessary data in the cache - the least recently used [http://en.wikipedia.org/wiki/Cache_algorithms#Least_Recently_Used (LRU)] algorithm may evict the useful data from the cache and keep the redundant updated data. Most present day processors employ write-invalidate policy because of its ease of implementation<ref name="chapter-two" />. In this article, for write-propagation of all hardware and software based designs we follow the write-invalidate strategy. | |||

== Coherence in Write Buffers == | == Coherence in Write Buffers == | ||

| Line 97: | Line 60: | ||

In hardware-based protocols accesses to the shared memory are communicated using hardware invalidate signals that are broadcasted to all the processor memories. Hardware support may also be required for a LOAD to be broadcasted to all the caches, so that a read can be performed directly from a remote memory. There are two approaches to maintaining coherence in write buffers and caches for multiprocessor system- | In hardware-based protocols accesses to the shared memory are communicated using hardware invalidate signals that are broadcasted to all the processor memories. Hardware support may also be required for a LOAD to be broadcasted to all the caches, so that a read can be performed directly from a remote memory. There are two approaches to maintaining coherence in write buffers and caches for multiprocessor system- | ||

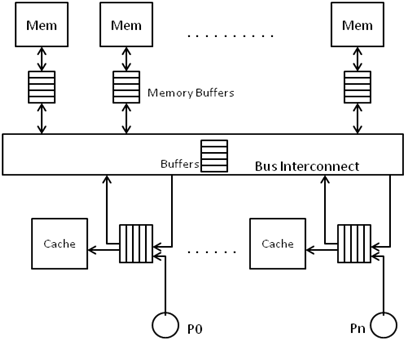

====Unique Buffer per Processor==== | ====Unique Buffer per Processor<ref name="dubois" />==== | ||

[[Image:Unique Buffer Per Processor.png|thumb|right|250px|Figure 3: Cache based system with unique buffer per processor | [[Image:Unique Buffer Per Processor.png|thumb|right|250px|Figure 3: Cache based system with unique buffer per processor | ||

[[http://mprc.pku.cn/mentors/training/ISCAreading/1986/p434-dubois/p434-dubois.pdf]]]] | [[http://mprc.pku.cn/mentors/training/ISCAreading/1986/p434-dubois/p434-dubois.pdf]]]] | ||

In this configuration, every processor has its own unique buffer that holds the loads and stores of the local processor as well as invalidates and loads of remote processors wanting access to the shared data. A store request in local cache will ensue invalidating of that data block in all other caches and main memory. A STORE is said to have been performed when | In this configuration, every processor has its own unique buffer as seen in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Unique_Buffer_Per_Processor.png Figure 3] that holds the loads and stores of the local processor as well as invalidates and loads of remote processors wanting access to the shared data. A store request in local cache will ensue invalidating of that data block in all other caches and main memory. A STORE is said to have been performed when | ||

# the request is serviced in the buffer and the cache is updated on a local hit and | # the request is serviced in the buffer and the cache is updated on a local hit and | ||

| Line 111: | Line 74: | ||

# For non-bus MP systems, the caches are connected in point-to-point mesh-based or interconnect ring-based topology. When a store is encountered in one of the processors, the shared data is first locked in the shared memory to ensure atomic access, and then the invalidate signal is propagated along the point-to-point interconnect in a decided order. The STORE to shared memory is performed only when the invalidation has been propagated to all the processor caches and buffers. If any processor issues a LOAD on the same address as that is being STOREd, then the LOAD request has to be rejected or buffered to be serviced at a later time, to maintain atomic access to the shared data block. | # For non-bus MP systems, the caches are connected in point-to-point mesh-based or interconnect ring-based topology. When a store is encountered in one of the processors, the shared data is first locked in the shared memory to ensure atomic access, and then the invalidate signal is propagated along the point-to-point interconnect in a decided order. The STORE to shared memory is performed only when the invalidation has been propagated to all the processor caches and buffers. If any processor issues a LOAD on the same address as that is being STOREd, then the LOAD request has to be rejected or buffered to be serviced at a later time, to maintain atomic access to the shared data block. | ||

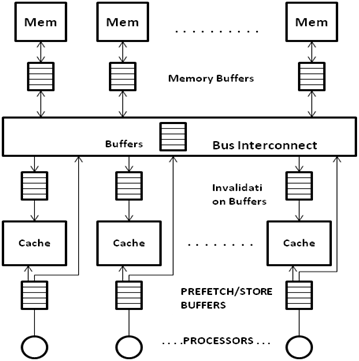

====Separate Buffers for Local and Remote Accesses==== | ====Separate Buffers for Local and Remote Accesses<ref name="dubois" />==== | ||

[[Image:Separate Invalidate Buffers.png|thumb|right|250px|Figure 4: Cache based system with separate write-invalidate buffers | [[Image:Separate Invalidate Buffers.png|thumb|right|250px|Figure 4: Cache based system with separate write-invalidate buffers | ||

[[http://mprc.pku.cn/mentors/training/ISCAreading/1986/p434-dubois/p434-dubois.pdf]]]] | [[http://mprc.pku.cn/mentors/training/ISCAreading/1986/p434-dubois/p434-dubois.pdf]]]] | ||

Another approach to maintaining coherence is for every processor to have separate buffers for local data requests and remote data requests. As seen in the | Another approach to maintaining coherence is for every processor to have separate buffers for local data requests and remote data requests. As seen in the [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Separate_Invalidate_Buffers.png Figure 4], every processor has a Local-buffer which queues the local LOADs and STOREs, and a Remote-buffer (also termed Invalidate buffer) that stores the invalidation requests and LOADs coming in from different processors. | ||

In bus-based processor system, this approach makes it difficult to maintain write atomicity, as different invalidate buffers may hold different number of invalidation requests, putting uncertainty on the time to invalidate the concerned data block in the cache. In non-bus based systems, however, this approach is successful in maintaining strong coherence, provided the invalidate signal makes sure that the data is invalidated at a cache before moving on to the next processor (rather than just pushing the invalidate request in the buffers and moving on). | In bus-based processor system, this approach makes it difficult to maintain write atomicity, as different invalidate buffers may hold different number of invalidation requests, putting uncertainty on the time to invalidate the concerned data block in the cache. In non-bus based systems, however, this approach is successful in maintaining strong coherence, provided the invalidate signal makes sure that the data is invalidated at a cache before moving on to the next processor (rather than just pushing the invalidate request in the buffers and moving on)<ref name="dubois" />. | ||

====Universal read/write Buffer==== | ====Universal read/write Buffer<ref>http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=342629&tag=1</ref>==== | ||

[[Image:Universal Read Write Buffer.png|thumb|right|300px|Figure 5: Universal Read/Write Buffer [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/6b_dm#cite_note-4 5]]] | [[Image:Universal Read Write Buffer.png|thumb|right|300px|Figure 5: Universal Read/Write Buffer [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/6b_dm#cite_note-4 5]]] | ||

In this approach, a shared bus controller resides in between the local bus and memory bus, where local bus is connected to all private caches of the processors (illustrated in | In this approach, a shared bus controller resides in between the local bus and memory bus, where local bus is connected to all private caches of the processors (illustrated in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Universal_Read_Write_Buffer.png Figure 5]). This technique supports multiple processors with same or different coherence protocols like; MSI, MESI and MOESI write invalidate strategy. Shared bus controller consists of the local bus controller, the data buffer and the address buffer. Each private cache provides two bit tri-state signals to the local bus – '''INTERVENE''' and '''SHARE'''. | ||

# INTERVENE is asserted when any cache wants to provide a valid data to other caches. | # INTERVENE is asserted when any cache wants to provide a valid data to other caches. | ||

# Cache controller | # Cache controller asserts the SHARE when there is an address match with any of its own tag address. | ||

===== | =====Structural Components===== | ||

The | The '''Data Buffer''' consists of three FIFOs | ||

# The ''non_block write-FIFO'' receives non cacheable memory access data from CPU. These requests do not require any cache eviction or line-fill cycles. | # The ''non_block write-FIFO'' receives non cacheable memory access data from CPU. These requests do not require any cache eviction or line-fill cycles. | ||

| Line 135: | Line 98: | ||

# The ''read-FIFO'' is used for storing data from non_block write FIFO, Write-back FIFO or memory. Data is temporarily stored in this FIFO until local bus is cleared and ready for new data. | # The ''read-FIFO'' is used for storing data from non_block write FIFO, Write-back FIFO or memory. Data is temporarily stored in this FIFO until local bus is cleared and ready for new data. | ||

The '''Address Buffer''' also consists of three FIFOs | |||

# ''Non_block write FIFO'' - stores address of non-blocking accesses. The depth of this FIFO should be the same as the corresponding Data Buffer FIFO. | # ''Non_block write FIFO'' - stores address of non-blocking accesses. The depth of this FIFO should be the same as the corresponding Data Buffer FIFO. | ||

| Line 143: | Line 104: | ||

# ''Line-fill FIFO''- stores the starting address of line-fill cycle. | # ''Line-fill FIFO''- stores the starting address of line-fill cycle. | ||

Snoop logic is started whenever there is read | Snoop logic is started whenever there is read access to main memory. Snoop logic compares the read request address with both write-back FIFO and line-fill FIFO. If there is a match, the HIT flag is set and CPU gets the data immediately through the internal bypass path. By doing so, stale data will not be read and the CPU doesn’t have to waste memory latency time. | ||

For | For non_block write cycle, snoop logic block compares the new requested address and Byte Enable bits with the previously stored non_block write FIFO. If there is an address match but no Byte-Enable overlapped, BGO signal will be asserted and pointer of the non_block write FIFO will not move forward to prevent the FIFO from filling up quickly. | ||

In this approach, | In this approach, the read access has priority over the write access, so whenever the memory bus is available, read address will get direct possession of the memory bus. | ||

Controller monitors the local bus for status bits (M, O, E, S, I), INTERVENE# and SHARE# bits and | The '''Local Bus Controller ''' monitors the local bus for status bits (M, O, E, S, I), INTERVENE# and SHARE# bits and determines that a transaction needs to access main memory or private cache. For main memory access, controller informs the buffer with the signal of WB (Write Back), LF (Line Fill) or O3 (owned) status bit. | ||

'''Controller Algorithm''' | |||

''For the MSI and MOSI protocols:'' | |||

: | |||

:If there is a read miss on the local bus -'''INTERVENE''' is asserted. | |||

:If there is a write miss with '''M''' status on the local bus, write back cycle will be performed -'''WB''' is asserted. | |||

:If there is a read miss or write miss from any of the protocol '''LF''' cycle will be performed -'''LF''' is asserted. | |||

If there is a read miss on the local bus | ''For the MOESI protocol:'' | ||

:If there is a read miss on the local bus -'''INTERVENE''' is asserted. | |||

The above outputs can be written in Boolean equations as shown in the example<ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=00342629</ref> below: | The above outputs can be written in Boolean equations as shown in the example<ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=00342629</ref> below: | ||

| Line 182: | Line 137: | ||

=====''' Algorithms '''===== | =====''' Algorithms '''===== | ||

The algorithm followed by each of the processors in a multiprocessor system is given below. The Processors may be following different protocols i.e. MSI/MESI/MOESI, but the algorithms below will ensure that the memory accesses are carried out coherently. | The algorithm followed by each of the processors in a multiprocessor system is given below. The Processors may be following different protocols i.e. MSI/MESI/MOESI as shown in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Universal_Read_Write_Buffer.png Figure 5], but the algorithms below will ensure that the memory accesses are carried out coherently. | ||

'''S''' state is common for all three protocols but this system takes the advantage of MESI and MOESI protocol by reducing memory bus | The ''''S'''' state is common for all three protocols but this system takes the advantage of MESI and MOESI protocol by reducing memory bus transactions. In shared state(S), multiple caches have the same updated data but memory may or may not have a copy. This change requires the following protocol translation. | ||

The algorithms for the '''MOESI''' protocol are as follows: | |||

''The algorithms for the '''MOESI''' protocol are as follows:'' | |||

::If read hit initiated by other cache | ::If read hit initiated by other cache | ||

:::• '''M''' state provides data to other cache | :::• '''M''' state provides data to other cache | ||

:::• Changes its state from '''M''' to''' O''' | :::• Changes its state from '''M''' to''' O''' | ||

::If same data is hit again | ::If same data is hit again | ||

:::• Cache with '''O''' state is responsible of providing data to the requesting cache | :::• Cache with '''O''' state is responsible of providing data to the requesting cache | ||

::If there is a write cache misses and INTERVENE is not activated | ::If there is a write cache misses and INTERVENE is not activated | ||

:::• Generate a line-fill cycle | :::• Generate a line-fill cycle | ||

The algorithms for the '''MSI''' and '''MESI''' protocols are as follows | |||

Algorithm for MSI protocol, '''M''' state provides data to the other caches & main memory and also changes the state from '''M''' to '''S'''. This transaction requires main memory access, but local bus translation logic plays a big role | ''The algorithms for the '''MSI''' and '''MESI''' protocols are as follows:'' | ||

''(These protocols have no '''O''' state)'' | |||

Algorithm for MSI protocol, '''M''' state provides data to the other caches & main memory and also changes the state from '''M''' to '''S'''. This transaction requires main memory access, but local bus translation logic plays a big role in reducing the bus access and improve the performance. | |||

::• Local Bus controller changes the status of MOESI to '''O''' instead of '''S''' | ::• Local Bus controller changes the status of MOESI to '''O''' instead of '''S''' | ||

::•''' M''' of MSI or MESI changes to '''S''' and no update will occur on main memory. | ::•''' M''' of MSI or MESI changes to '''S''' and no update will occur on main memory. | ||

| Line 209: | Line 167: | ||

:::• No cache will involve of data transfer. | :::• No cache will involve of data transfer. | ||

:::• Main memory will provide the data. | :::• Main memory will provide the data. | ||

==Maintaining Sequential Consistency== | |||

When operating as a part of a multiprocessor, it is not enough to check dependencies between the writes only at the local level. As data is shared and the course of events in different processors may affect the outcomes of each other, it has to be ensured that the sequence of writes is preserved between the multiple processors. | |||

<blockquote>“A system is said to be sequentially consistent if the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operation of each individual processor appear in this sequence in the order specified by its program.”<ref name="node6">http://www.cs.jhu.edu/~gyn/publications/memorymodels/node6.html</ref></blockquote> | |||

As mentioned earlier, the sequential ordering policy applied has a strong bearing on the write-buffer management policy. Buffer management refers to the order in which multiple buffer requests are treated. In most cases, the requests are treated in a strict FIFO order, while in some cases requests may be allowed to pass each other in the buffer. This is referred to as jockeying<ref name="dubois" />. Jockeying is often permitted between memory requests for different memory words, but is not permitted between requests with the same memory word. This approach is called restricted jockeying<ref name="dubois" />. | |||

We will discuss some ordering approaches, and explore how they are significant to write buffer coherence management. | |||

===Strong Ordering=== | |||

In strong ordering, the requirements for a system are as follows<ref>http://www.ece.cmu.edu/~ece548/handouts/19coher.pdf</ref>: | |||

#All memory operations appear to execute one at a time | |||

#All memory operations from a single CPU appear to execute in-order | |||

#All memory operations from different processors are “cleanly” interleaved with each other (serialization) | |||

Serialization is ensured on a single processor by keeping all the accesses to the memory consistent and in order. This implies that there will be no jockeying allowed within the write buffer, and any write access to shared data will result in invalidation of all corresponding copies in the caches and buffers. Strong ordering thus enforces strong sequential consistency by strictly serializing local accesses and communicating shared accesses via invalidations. The serialization, however, imposes a penalty on the efficiency of the processor, as the instructions have to be performed in serial fashion for most of the time. | |||

===Weak Ordering=== | |||

In weakly ordered systems, processors can issue shared memory accesses without waiting for previous accesses to be performed. Jockeying is allowed in the buffers, and accesses can pass each other out of serial program order. However, there is necessity to maintain consistency while accessing the synchronization variables. Synchronization variables are the variables that are responsible in the multi-program to maintain concurrency between processes running on separate processors<ref name="node6" />. Needless to say, as accesses can pass each other, the efficiency of the weakly ordered systems is much higher than the strongly ordered systems, but the implementation is much more complex, for the necessity to maintain the correctness of the program. | |||

===Example=== | |||

Examples for Sequential Consistency: | |||

In the following program, consider variables “a”, “b”, “flag1” and “flag2” are initialized with 0 and both processors (CPU1 and CPU 2) are sharing all the variables | |||

a = b = flag1 = flag2 = 0; // initial value | |||

CPU1 CPU 2 | |||

Flag 1 = 1; flag2 = 1; | |||

a = 1; a = 2; | |||

r1 = a; r3 = a; | |||

r2 = flag2; r4 = flag1; | |||

SPARC V8 architecture follows the Total Store Ordering model which is a relaxed (weak) ordering model where the reads may bypass writes, but writes have to execute in program order. Possible result we can get: r1 = 1, r3 = 2, r2 = r4 = 0 | |||

But strong ordering enforces strict atomicity thus we will get different results based on the execution order between two processors’ instructions. | |||

==Overcoming Buffer Stalls<ref name="store-wait-free">http://www.eecg.toronto.edu/~moshovos/research/store-wait-free.pdf</ref>== | |||

Although the write buffer does the job of off-loading the write responsibility and hence the write access latency overhead from processor time, there are certain conditions where the processor performance cannot be improved a beyond a certain point. The processor system needs to be stalled if the write buffer overflows, for example. There are two types of stalls that mainly affect the processor performance : | |||

#Buffer capacity related stalls : The buffer capacity related stalls occur when a write buffer overflows, and the processor cannot buffer any more stores. This occurs during store bursts, which are a number of stores that are requested in quick succession. The ''Scalable Store Buffer''<ref name="store-wait-free" /> is a technique that aims at overcoming capacity-related stalls. | |||

#Ordering related stalls : Modern processors have the ability to execute speculatively<ref>http://www.pcguide.com/ref/cpu/arch/int/featSpeculative-c.html</ref>, which means that they can execute down a predicted path to save processor time. But there are time when the speculation could go wrong, and the state of the system has to be brought to a true state by draining out all requests down the bad path. This incurs a stall penalty on the processor, as no execution can proceed till the system is rolled back to a non-speculative, true state. ''Atomic Sequence Ordering''<ref name="store-wait-free" /> is a technique that takes care of the ordering-related stalls. | |||

The two are explained here in brief. | |||

===Scalable Store Buffer (SSB)=== | |||

The SSB as mentioned above is employed to overcome the buffer capacity-related stalls in a write-buffer based system. Conventional buffers follow Total Store Ordering<ref name="store-wait-free" /> in which store values are forwarded to matching loads, and stores maintain total order of execution. The key architecture changes employed by the SSB are : | |||

#The store forwarding is performed through L1 cache, so that the CAM look-up within the write buffer to match loads with stores can be done away with. | |||

##Thus the L1 cache contains data values that are private to the processor | |||

##Also, the stores are now buffered as a FIFO structure called the Total Store Order Buffer (TSOB) | |||

#When the stores commit, they drain into the L2 cache, where the values are globally visible. All coherence requests are serviced by the L2 cache. | |||

By using this approach, the effective size of the write buffer becomes equivalent to the size of the L1 cache, making the chances of the store buffer overflowing, infinitesimally small. In event that such an overflow does happen, the stores are then stalled until outstanding stores drain out, making room for newer ones. | |||

===Atomic Sequence Ordering (ASO)=== | |||

ASO aims at reducing the ordering-related stalls that occur in a multiprocessor. Ordering-related stalls frequently occur because of memory accesses being serviced out of order, so that the sequential consistency is not maintained - to retain the sequential consistency, the processors are forced to go into the stall state. Using the ASO approach, accesses are grouped into atomic sequences such that these sequences will always be accesses sequentially and all accesses will be atomic. Multiple such sequences may execute out of order, but the order of execution within a sequence is always obeyed. This provides us with a coarse-grain ordering of sequences, such that ordering stalls may now be avoided. | |||

ASO employs the technique of check-pointing the memory accesses, so that in case of a race condition, the state of the system can be restored. A race condition is defined as a specific sequence of memory accesses that violate the sequential ordering of a program. Using this technique, we can define the system to be in three distinct states as mentioned below: | |||

#''Accumulate'': In this state, a check point has been created and an atomic store sequence is being put together for execution. Once the size of the atomic sequence reaches a predetermined size, the sequence moves from the Accumulate state to the Await Permission state. | |||

#''Await Permission'': In this state, the atomic sequence waits for all of the accesses to be granted permission for the store. Once the permissions for all of the instructions have arrived, the sequence then moves into the Commit state. | |||

#''Commit'': In the commit state, all the writes in the sequence commit and are drained into the memory. The sequence is considered to be committed once all its writes are globally visible. | |||

A sequence can transition directly from the ''Accumulate'' state to the ''Commit'' state, if the write permissions for all the accesses have already arrived while the sequence was in the ''Accumulate'' state. | |||

Thus, we can see that ASO removes ordering constraints in the store accesses such that the stalls due to ordering constraints can be reduced to a minimum. | |||

==Conclusions and Observations== | |||

Although SSB and ASO are not essentially means by which write buffers communicate in the multiprocessor systems, these are the techniques that are necessary for complete utilization of write buffer hardware when using weak ordering approaches, like TSO. Interested reader may read more about these two schemes in <ref name="store-wait-free" />. | |||

We can see from the above architectural techniques that using a write buffer in a cache-based multiprocessor system is much helpful in maximizing processor performance by off-loading the store latency to the write-buffer. With hardware and software techniques that take care of issues like the coherence between multiple buffers, capacity limitations of the write buffers and the ordering policy that is followed while implementation, write buffering can be a very strong technique to improve the performance of a multiprocessor system. | |||

=='''References'''== | =='''References'''== | ||

<references/> | <references/> | ||

Latest revision as of 22:40, 6 March 2012

With the present day processor speeds increasing at a much faster rate than memory speeds<ref>http://www.cesr.ncsu.edu/solihin/Main.html</ref>, there arises a need that the data transactions between the processor and the memory system be managed such that the slow memory speeds do not affect the performance of the processor system. While the read operations require that the processor wait for the read to complete before resuming execution, the write operations do not have this requirement. This is where a write buffer (WB) comes into the picture, assisting the processor in writes, so that the processor can continue its operation while the write buffer takes complete responsibility of executing the write. Use of a write buffer in this manner also frees up the cache to service read requests while the write is taking place. For a processor that operates at a speed much higher than the memory speed, this hardware scheme prevents performance bottlenecks caused by long-latency writes.<ref>http://en.wikipedia.org/wiki/Write_buffer</ref>

Introduction

Write Buffers in Uni-processors

Figure 1 shows the cache-based single processor system with a write buffer. A write to be performed is pushed into the buffer implemented as a FIFO (First In - First Out) queue, which essentially ensures that the writes are performed in the order that they were called. In a uni-processor model, with the requirement and possibility of extracting Instruction Level Parallelism (ILP), the writes may also be called out-of-order, provided there are some hardware/software protocols implemented to check the writes for any dependences that may exist in the instruction stream.

A generic approach towards read-write accesses can be described as follows [3][4]:

- If a write is followed by a read request to the same memory location while the write is still in the buffer, the buffered value is returned

- If a write is followed by a write request to the same memory location while the write is still in the buffer, the earlier write is overridden and is updated with the new write value

This follows that the write buffers not only save memory access overhead on the first write to a location, but also on the closely-spaced successive read-after-write and write-after-write sequences.

Interested reader may read about the store buffer and its implementation in ARM Cortex-R series processors.<ref>http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0363e/Chdcahcf.html</ref><ref>http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0246a/Cjafdjgj.html</ref>

Write Buffer Issues in Multiprocessors

In a multiprocessor system, the same design can be extended, as shown in the Figure 2. In a generic design, each processor will have its own private cache and a write buffer corresponding to the cache. The caches are connected by the means of an interconnect with each other as well as with the main memory. As can be seen in the Figure 2, each processor makes a write by pushing it into its write buffer, and the write buffer completes the task of performing the write to the main memory or the cache. Consistency is maintained between ordering of memory accesses at individual processors in the same way as explained in the section above.

The Coherence Problem

However, with multiple processors working on the same task, it is essential that the memory ordering be consistent not only at individual processor level, but also with respect to all other processors in the system as well. Consider the case where a write (STORE A) has been issued by processor P1 into the WB1, and the write is waiting to be executed. Since the write has not yet been performed to the cache or the main memory, the other processors do not have any knowledge about the changes made to address A. As a result, a read operation by another processor from address A will take the value from either its own cache, write buffer, or the main memory (depending on whether it hits or misses in the cache).

This is a classic problem in all multi-processor systems, termed as Cache Coherence problem. It is the job of the designer to employ protocols that will take care of the sequential ordering of the instructions, and ensure that the writes made to any one of the caches are propagated and updated in all of the processor caches. With the addition of a write-buffer, we add another level to this problem, as we now have to ensure that pending write requests in a processor's buffer do not go unnoticed by other processors.

The sequential ordering model used in the system holds a strong bearing on the write buffer management policy, as is explained in a later section. We will now take a look at the definitions of different cache coherence policies and then move on to study various hardware implementations of the write buffer and the policies employed.

Coherence Models

"A memory scheme is coherent if the value returned on a LOAD instruction is always the value given by the latest STORE instruction with the same address."<ref name="dubois" />

The two conventional models followed to attain this objective are the snoopy-bus protocol and the directory-based coherence protocol<ref name="chapter-two">http://www.csl.cornell.edu/~heinrich/dissertation/ChapterTwo.pdf</ref>. The directory-based protocol is used for distributed memory systems, in which every processor keeps track of what data is being stored using a directory entry. In the snoopy-bus protocol, every processor monitors the reads and writes that are being serviced by the memory bus. The read and write requests can be broadcast on the bus for all the processors to respond based on whether or not they are in possession of that data in their cache.

With the addition of write buffers in the system, it is now essential that a processor be aware of the memory transactions occurring not only at the caches of other processors, but also in their write buffers. The snoopy bus protocol can maintain coherence between multiple write buffers by communicating using one of the two protocols explained below.

Write-Update

In this approach, a write request by a processor is first pushed onto its local write buffer, and then is broadcast on the bus for all other processors to see. Each processor updates its local cache and buffer with the new value of the data. This saves bus bandwidth in terms of writes, as there is only one broadcast that needs to be made in order for all the caches to be up to date with the newest value of the data. This is disadvantageous, however, when a snooped write transaction is present in a processor's cache - it needs to be updated right away, stalling other read operations that may be happening on that cache.

Write-Invalidate

This approach is common when dealing with systems where a data block is being written by one processor, but is being read by multiple processors. Whenever a write is made to shared data, it is pushed onto the local write buffer, and an invalidate signal is sent to all the caches and buffers, asking for that data block to be made invalid, as the value is not updated to the latest one. This approach alleviates us of the problem presented by the update protocol, as a snooped cache block is invalidated right away and does not keep any read waiting on the cache. However, this may cause additional traffic on the bus as a result, and a separate bus for invalidation requests may be included in the design.

Write–update strategy is liable to keep and update unnecessary data in the cache - the least recently used (LRU) algorithm may evict the useful data from the cache and keep the redundant updated data. Most present day processors employ write-invalidate policy because of its ease of implementation<ref name="chapter-two" />. In this article, for write-propagation of all hardware and software based designs we follow the write-invalidate strategy.

Coherence in Write Buffers

Software-Based Coherence

The software technique relies on the compiler to ensure that no dependencies exist between the STORE/LOAD accesses carried out at different processors on the shared memory. The compiler, based on indications from the programmer will make sure that there are no incoherent accesses to shared memory. There is a possibility of having a shared READ/WRITE buffer between all processors to access the main memory.

Hardware-Based Coherence

In hardware-based protocols accesses to the shared memory are communicated using hardware invalidate signals that are broadcasted to all the processor memories. Hardware support may also be required for a LOAD to be broadcasted to all the caches, so that a read can be performed directly from a remote memory. There are two approaches to maintaining coherence in write buffers and caches for multiprocessor system-

Unique Buffer per Processor<ref name="dubois" />

In this configuration, every processor has its own unique buffer as seen in Figure 3 that holds the loads and stores of the local processor as well as invalidates and loads of remote processors wanting access to the shared data. A store request in local cache will ensue invalidating of that data block in all other caches and main memory. A STORE is said to have been performed when

- the request is serviced in the buffer and the cache is updated on a local hit and

- the request is issued to the buffer and an invalidate request has been sent to all other processor on the shared data.

This can be achieved in two ways depending on the topology of the connection between the caches -

- For a bus-based MP system, the LOADs that miss in the local cache and the STOREs that need invalidation in other caches are broadcast over the bus to all processor caches and memory. The STORE request is then considered performed with respect to all processors when the invalidation signals have been sent out to the private buffers of all the caches.

- For non-bus MP systems, the caches are connected in point-to-point mesh-based or interconnect ring-based topology. When a store is encountered in one of the processors, the shared data is first locked in the shared memory to ensure atomic access, and then the invalidate signal is propagated along the point-to-point interconnect in a decided order. The STORE to shared memory is performed only when the invalidation has been propagated to all the processor caches and buffers. If any processor issues a LOAD on the same address as that is being STOREd, then the LOAD request has to be rejected or buffered to be serviced at a later time, to maintain atomic access to the shared data block.

Separate Buffers for Local and Remote Accesses<ref name="dubois" />

Another approach to maintaining coherence is for every processor to have separate buffers for local data requests and remote data requests. As seen in the Figure 4, every processor has a Local-buffer which queues the local LOADs and STOREs, and a Remote-buffer (also termed Invalidate buffer) that stores the invalidation requests and LOADs coming in from different processors. In bus-based processor system, this approach makes it difficult to maintain write atomicity, as different invalidate buffers may hold different number of invalidation requests, putting uncertainty on the time to invalidate the concerned data block in the cache. In non-bus based systems, however, this approach is successful in maintaining strong coherence, provided the invalidate signal makes sure that the data is invalidated at a cache before moving on to the next processor (rather than just pushing the invalidate request in the buffers and moving on)<ref name="dubois" />.

Universal read/write Buffer<ref>http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=342629&tag=1</ref>

In this approach, a shared bus controller resides in between the local bus and memory bus, where local bus is connected to all private caches of the processors (illustrated in Figure 5). This technique supports multiple processors with same or different coherence protocols like; MSI, MESI and MOESI write invalidate strategy. Shared bus controller consists of the local bus controller, the data buffer and the address buffer. Each private cache provides two bit tri-state signals to the local bus – INTERVENE and SHARE.

- INTERVENE is asserted when any cache wants to provide a valid data to other caches.

- Cache controller asserts the SHARE when there is an address match with any of its own tag address.

Structural Components

The Data Buffer consists of three FIFOs

- The non_block write-FIFO receives non cacheable memory access data from CPU. These requests do not require any cache eviction or line-fill cycles.

- The write-back FIFO is used for eviction cycles. If there is a cache miss and eviction is required, cache miss data will be read (line-fill cycle) from memory to the cache just after the evicted data moved to the FIFO. This FIFO will be written back to memory later on. So, CPU will get the new data but eviction process time will be hidden from the CPU.

- The read-FIFO is used for storing data from non_block write FIFO, Write-back FIFO or memory. Data is temporarily stored in this FIFO until local bus is cleared and ready for new data.

The Address Buffer also consists of three FIFOs

- Non_block write FIFO - stores address of non-blocking accesses. The depth of this FIFO should be the same as the corresponding Data Buffer FIFO.

- Write-back FIFO - stores starting address of eviction cycle and holds the address until memory bus is free.

- Line-fill FIFO- stores the starting address of line-fill cycle.

Snoop logic is started whenever there is read access to main memory. Snoop logic compares the read request address with both write-back FIFO and line-fill FIFO. If there is a match, the HIT flag is set and CPU gets the data immediately through the internal bypass path. By doing so, stale data will not be read and the CPU doesn’t have to waste memory latency time.

For non_block write cycle, snoop logic block compares the new requested address and Byte Enable bits with the previously stored non_block write FIFO. If there is an address match but no Byte-Enable overlapped, BGO signal will be asserted and pointer of the non_block write FIFO will not move forward to prevent the FIFO from filling up quickly.

In this approach, the read access has priority over the write access, so whenever the memory bus is available, read address will get direct possession of the memory bus.

The Local Bus Controller monitors the local bus for status bits (M, O, E, S, I), INTERVENE# and SHARE# bits and determines that a transaction needs to access main memory or private cache. For main memory access, controller informs the buffer with the signal of WB (Write Back), LF (Line Fill) or O3 (owned) status bit.

Controller Algorithm

For the MSI and MOSI protocols:

- If there is a read miss on the local bus -INTERVENE is asserted.

- If there is a write miss with M status on the local bus, write back cycle will be performed -WB is asserted.

- If there is a read miss or write miss from any of the protocol LF cycle will be performed -LF is asserted.

For the MOESI protocol:

- If there is a read miss on the local bus -INTERVENE is asserted.

The above outputs can be written in Boolean equations as shown in the example<ref>http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=00342629</ref> below:

WB = RD * MOESI * INTV + WR * [STATUS=M]

LF = INTV

03 = RD * MOESI * INTV

MOESI means the cycle is initiated by MOESI protocol.

INTV means INTERVENE signal which is asserted when any of cache provides a valid data to other cache.

Algorithms

The algorithm followed by each of the processors in a multiprocessor system is given below. The Processors may be following different protocols i.e. MSI/MESI/MOESI as shown in Figure 5, but the algorithms below will ensure that the memory accesses are carried out coherently.

The 'S' state is common for all three protocols but this system takes the advantage of MESI and MOESI protocol by reducing memory bus transactions. In shared state(S), multiple caches have the same updated data but memory may or may not have a copy. This change requires the following protocol translation.

The algorithms for the MOESI protocol are as follows:

- If read hit initiated by other cache

- • M state provides data to other cache

- • Changes its state from M to O

- If same data is hit again

- • Cache with O state is responsible of providing data to the requesting cache

- If there is a write cache misses and INTERVENE is not activated

- • Generate a line-fill cycle

- If read hit initiated by other cache

The algorithms for the MSI and MESI protocols are as follows:

(These protocols have no O state)

Algorithm for MSI protocol, M state provides data to the other caches & main memory and also changes the state from M to S. This transaction requires main memory access, but local bus translation logic plays a big role in reducing the bus access and improve the performance.

- • Local Bus controller changes the status of MOESI to O instead of S

- • M of MSI or MESI changes to S and no update will occur on main memory.

- So, if same data is requested by other cache

- • O of MOESI will provide the data instead of main memory.

- If write cycle initiated

- If MOESI cache has a valid line with M or O

- MOESI cache will send out the data line on to local bus and change the state to I.

- (Because the line will be written and outdated by other cache.)

- If MOESI cache has a valid line with M or O

- If the status is E or S

- • No cache will involve of data transfer.

- • Main memory will provide the data.

Maintaining Sequential Consistency

When operating as a part of a multiprocessor, it is not enough to check dependencies between the writes only at the local level. As data is shared and the course of events in different processors may affect the outcomes of each other, it has to be ensured that the sequence of writes is preserved between the multiple processors.

“A system is said to be sequentially consistent if the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operation of each individual processor appear in this sequence in the order specified by its program.”<ref name="node6">http://www.cs.jhu.edu/~gyn/publications/memorymodels/node6.html</ref>

As mentioned earlier, the sequential ordering policy applied has a strong bearing on the write-buffer management policy. Buffer management refers to the order in which multiple buffer requests are treated. In most cases, the requests are treated in a strict FIFO order, while in some cases requests may be allowed to pass each other in the buffer. This is referred to as jockeying<ref name="dubois" />. Jockeying is often permitted between memory requests for different memory words, but is not permitted between requests with the same memory word. This approach is called restricted jockeying<ref name="dubois" />.

We will discuss some ordering approaches, and explore how they are significant to write buffer coherence management.

Strong Ordering

In strong ordering, the requirements for a system are as follows<ref>http://www.ece.cmu.edu/~ece548/handouts/19coher.pdf</ref>:

- All memory operations appear to execute one at a time

- All memory operations from a single CPU appear to execute in-order

- All memory operations from different processors are “cleanly” interleaved with each other (serialization)

Serialization is ensured on a single processor by keeping all the accesses to the memory consistent and in order. This implies that there will be no jockeying allowed within the write buffer, and any write access to shared data will result in invalidation of all corresponding copies in the caches and buffers. Strong ordering thus enforces strong sequential consistency by strictly serializing local accesses and communicating shared accesses via invalidations. The serialization, however, imposes a penalty on the efficiency of the processor, as the instructions have to be performed in serial fashion for most of the time.

Weak Ordering

In weakly ordered systems, processors can issue shared memory accesses without waiting for previous accesses to be performed. Jockeying is allowed in the buffers, and accesses can pass each other out of serial program order. However, there is necessity to maintain consistency while accessing the synchronization variables. Synchronization variables are the variables that are responsible in the multi-program to maintain concurrency between processes running on separate processors<ref name="node6" />. Needless to say, as accesses can pass each other, the efficiency of the weakly ordered systems is much higher than the strongly ordered systems, but the implementation is much more complex, for the necessity to maintain the correctness of the program.

Example

Examples for Sequential Consistency: In the following program, consider variables “a”, “b”, “flag1” and “flag2” are initialized with 0 and both processors (CPU1 and CPU 2) are sharing all the variables

a = b = flag1 = flag2 = 0; // initial value CPU1 CPU 2 Flag 1 = 1; flag2 = 1; a = 1; a = 2; r1 = a; r3 = a; r2 = flag2; r4 = flag1;

SPARC V8 architecture follows the Total Store Ordering model which is a relaxed (weak) ordering model where the reads may bypass writes, but writes have to execute in program order. Possible result we can get: r1 = 1, r3 = 2, r2 = r4 = 0 But strong ordering enforces strict atomicity thus we will get different results based on the execution order between two processors’ instructions.

Overcoming Buffer Stalls<ref name="store-wait-free">http://www.eecg.toronto.edu/~moshovos/research/store-wait-free.pdf</ref>

Although the write buffer does the job of off-loading the write responsibility and hence the write access latency overhead from processor time, there are certain conditions where the processor performance cannot be improved a beyond a certain point. The processor system needs to be stalled if the write buffer overflows, for example. There are two types of stalls that mainly affect the processor performance :

- Buffer capacity related stalls : The buffer capacity related stalls occur when a write buffer overflows, and the processor cannot buffer any more stores. This occurs during store bursts, which are a number of stores that are requested in quick succession. The Scalable Store Buffer<ref name="store-wait-free" /> is a technique that aims at overcoming capacity-related stalls.

- Ordering related stalls : Modern processors have the ability to execute speculatively<ref>http://www.pcguide.com/ref/cpu/arch/int/featSpeculative-c.html</ref>, which means that they can execute down a predicted path to save processor time. But there are time when the speculation could go wrong, and the state of the system has to be brought to a true state by draining out all requests down the bad path. This incurs a stall penalty on the processor, as no execution can proceed till the system is rolled back to a non-speculative, true state. Atomic Sequence Ordering<ref name="store-wait-free" /> is a technique that takes care of the ordering-related stalls.

The two are explained here in brief.

Scalable Store Buffer (SSB)

The SSB as mentioned above is employed to overcome the buffer capacity-related stalls in a write-buffer based system. Conventional buffers follow Total Store Ordering<ref name="store-wait-free" /> in which store values are forwarded to matching loads, and stores maintain total order of execution. The key architecture changes employed by the SSB are :

- The store forwarding is performed through L1 cache, so that the CAM look-up within the write buffer to match loads with stores can be done away with.

- Thus the L1 cache contains data values that are private to the processor

- Also, the stores are now buffered as a FIFO structure called the Total Store Order Buffer (TSOB)

- When the stores commit, they drain into the L2 cache, where the values are globally visible. All coherence requests are serviced by the L2 cache.

By using this approach, the effective size of the write buffer becomes equivalent to the size of the L1 cache, making the chances of the store buffer overflowing, infinitesimally small. In event that such an overflow does happen, the stores are then stalled until outstanding stores drain out, making room for newer ones.

Atomic Sequence Ordering (ASO)

ASO aims at reducing the ordering-related stalls that occur in a multiprocessor. Ordering-related stalls frequently occur because of memory accesses being serviced out of order, so that the sequential consistency is not maintained - to retain the sequential consistency, the processors are forced to go into the stall state. Using the ASO approach, accesses are grouped into atomic sequences such that these sequences will always be accesses sequentially and all accesses will be atomic. Multiple such sequences may execute out of order, but the order of execution within a sequence is always obeyed. This provides us with a coarse-grain ordering of sequences, such that ordering stalls may now be avoided.

ASO employs the technique of check-pointing the memory accesses, so that in case of a race condition, the state of the system can be restored. A race condition is defined as a specific sequence of memory accesses that violate the sequential ordering of a program. Using this technique, we can define the system to be in three distinct states as mentioned below:

- Accumulate: In this state, a check point has been created and an atomic store sequence is being put together for execution. Once the size of the atomic sequence reaches a predetermined size, the sequence moves from the Accumulate state to the Await Permission state.

- Await Permission: In this state, the atomic sequence waits for all of the accesses to be granted permission for the store. Once the permissions for all of the instructions have arrived, the sequence then moves into the Commit state.

- Commit: In the commit state, all the writes in the sequence commit and are drained into the memory. The sequence is considered to be committed once all its writes are globally visible.

A sequence can transition directly from the Accumulate state to the Commit state, if the write permissions for all the accesses have already arrived while the sequence was in the Accumulate state.

Thus, we can see that ASO removes ordering constraints in the store accesses such that the stalls due to ordering constraints can be reduced to a minimum.

Conclusions and Observations

Although SSB and ASO are not essentially means by which write buffers communicate in the multiprocessor systems, these are the techniques that are necessary for complete utilization of write buffer hardware when using weak ordering approaches, like TSO. Interested reader may read more about these two schemes in <ref name="store-wait-free" />. We can see from the above architectural techniques that using a write buffer in a cache-based multiprocessor system is much helpful in maximizing processor performance by off-loading the store latency to the write-buffer. With hardware and software techniques that take care of issues like the coherence between multiple buffers, capacity limitations of the write buffers and the ordering policy that is followed while implementation, write buffering can be a very strong technique to improve the performance of a multiprocessor system.

References

<references/>