Scalable Coherent Interface

(→Race Conditions: adding more sections) |

|||

| (19 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

SCI is an IEEE standard for shared-memory multiprocessing. The standard was approved in 1992. View the [http://en.wikipedia.org/wiki/Scalable_Coherent_Interface#History SCI Wikipedia article] for more information on the interface's history. The article covers the cache-coherence portions of the IEEE standard. | SCI is an IEEE standard for shared-memory multiprocessing. The standard was approved in 1992. View the [http://en.wikipedia.org/wiki/Scalable_Coherent_Interface#History SCI Wikipedia article] for more information on the interface's history. The article covers the cache-coherence portions of the IEEE standard. | ||

SCI design goals include | |||

*Scalability, so that the same mechanisms can be used in high-volume, single-processor (or few-processor) systems such as one might find in desktop machines, as well as in large, highly parallel multiprocessors (next-generation supercomputers); | |||

*Coherence, to support the efficient use of cache memories in the most general and easiest-to-use multiprocessor model, distributed shared memory; and | |||

*An interface, a standardized open communication architecture that allows products from multiple vendors to be incorporated into one system and interoperate smoothly. | |||

=General Operation= | =General Operation= | ||

Cache coherence in SCI uses several main components to ensure reliability. | |||

==Memory Directory== | |||

SCI operates by using directories in every processor's memory. When a memory block has not been cached, the directory holds this block in the '''Home state''', signifying that the block is at home. After a read request, the memory holds this block in the '''Fresh state''' and will supply the data immediately to any requesting cache. After a write request, the memory holds the block in the '''Gone state'''. The memory does not supply the data to further requesting caches, but instead supplies the cache with a link to a cache that holds this data. | |||

The directory can be expected to take up to 4% of the total memory space. | |||

==Linked List== | |||

Each cache that holds a given block is a member of that block's linked list. When a new cache requests a block, the memory supplies the id of the '''Head cache'''. Each cache in the list contains a link to the previous cache and the next cache in the list. The last cache in the list is the '''Tail cache'''. In this way, the list is distributed. | |||

Because SCI is an invalidation protocol, the linked list is cleared upon every write. First, the writing cache adds itself to the head of the list, then indicates to the next cache that it is invalidating the block. | |||

==Cache Lines== | |||

Besides the data for the linked list, each cache line needs to store the standard cache information (i.e. tag). This information is expected to be about 7% of the total cache memory. | |||

=Cache State Machine= | =Cache State Machine= | ||

| Line 11: | Line 26: | ||

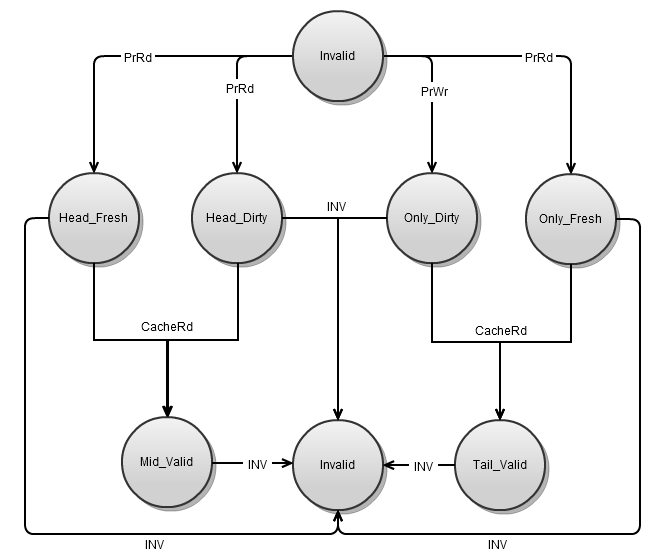

The caches states cover two different dimensions: the cache's position in a linked list, and the modified state of a block. | The caches states cover two different dimensions: the cache's position in a linked list, and the modified state of a block. | ||

*Head | *Head - The first, but not only member of the list. | ||

*Mid | *Mid - Neither the first nor last member of the list. | ||

*Tail | *Tail - The last member, but not only member of the list. | ||

*Only | *Only - The only member of the list. | ||

*Clean - The data is unmodified, and only I have it. | |||

*Modified - The data is modified. | |||

*Fresh - The data is unmodified, and I and and others have it. | |||

*Valid - My copy of the data is valid, but others have it as well. | |||

*Invalid - I do not have a valid copy of the data. | |||

The possible combinations of these two attributes are shown in the state diagram below. | |||

[[Image:MattIsAwesome.png]] | [[Image:MattIsAwesome.png]] | ||

| Line 29: | Line 49: | ||

*Home - The block is not cached. It is at "home". | *Home - The block is not cached. It is at "home". | ||

*Gone | *Gone | ||

*Fresh | **The block is cached by at least one cache, and the cached value is probably dirty. | ||

**When a cache requests the block, the memory will supply an identifier for the first cache in the list. | |||

*Fresh | |||

**The block is cached by at least one cache, but the cached value is not dirty. | |||

**The memory will supply the data upon cache requests. | |||

=Race Conditions= | =Race Conditions= | ||

In addition to the above stabe states, the existance of race conditions require numerous pending cache and memory states. One of the additional states is the busy state. | |||

==Handling Races due to Non-Instantaneous Processing of a Request== | |||

The simplest kind of race conditions results from simultaneous write requests to the same block. If both requests proceed in parallel, the system will fail. This problem can avoided by serializing the requests. This is solved in three ways: | |||

*The directory only accepts one request and then enters the busy state. Future requests are denied until the busy state is left. | |||

*The home node stores the requests in the order received and services them in order, utilizing the busy state to know when a write has finished. | |||

*The directory always services requests, and the requests are stored and serialized at the cache head. | |||

See the Solihin text, section 11.4.2 for more information. | |||

==Handling Races due to Out-of-Sync Directory State== | |||

See the Solihin text, section 11.4.1 for more information. | |||

Why so many states needed? | |||

Why pending states needed? | |||

=References= | =References= | ||

Latest revision as of 19:55, 15 April 2011

SCI (Scalable Coherent Interface) is an IEEE standard (IEEE Std 1596-1992) for shared-memory multiprocessing, approved in 1992. See the SCI Wikipedia article (http://en.wikipedia.org/wiki/Scalable_Coherent_Interface#History) for more on the interface's history. This article covers the cache-coherence portions of the standard.

The SCI design goals include:

- Scalability, so that the same mechanisms can be used in high-volume, single-processor (or few-processor) systems such as one might find in desktop machines, as well as in large, highly parallel multiprocessors (next-generation supercomputers);

- Coherence, to support the efficient use of cache memories in the most general and easiest-to-use multiprocessor model, distributed shared memory; and

- An interface, a standardized open communication architecture that allows products from multiple vendors to be incorporated into one system and interoperate smoothly.

General Operation

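SCI maintains cache coherence with three main components: a directory in memory, a distributed linked list of the caches sharing each block, and per-line state in each cache.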

Memory Directory

SCI keeps a directory in the memory of every processor node. While a memory block is not cached anywhere, its directory entry is in the Home state, signifying that the block is at home. After a read request, the entry moves to the Fresh state, and the memory supplies the data immediately to any requesting cache. After a write request, the entry moves to the Gone state: the memory no longer supplies the data to requesting caches, but instead gives the requester a link to a cache that holds the data.

The directory can be expected to take up to 4% of the total memory space.

Linked List

Each cache that holds a given block is a member of that block's linked list. When a new cache requests a block, the memory supplies the id of the Head cache. Each cache in the list contains a link to the previous cache and the next cache in the list. The last cache in the list is the Tail cache. In this way, the list is distributed.

Because SCI is an invalidation protocol, the linked list is cleared on every write. The writing cache first adds itself at the head of the list, then notifies the next cache in the list that it is invalidating the block.
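The list behaviour described above can be illustrated with a small model. This is only a sketch, not the SCI specification: the names `CacheEntry`, `SharingList`, `attach`, and `write` are hypothetical, a single dictionary stands in for state that is really spread across separate caches, and it assumes the writer walks the list from head to tail, invalidating each successor in turn.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CacheEntry:
    """One cache's links into a block's sharing list (hypothetical model)."""
    cache_id: int
    prev: Optional[int] = None  # neighbour towards the head
    next: Optional[int] = None  # neighbour towards the tail


class SharingList:
    """Models the sharing list of a single memory block."""

    def __init__(self) -> None:
        self.head: Optional[int] = None            # id the memory hands out
        self.entries: Dict[int, CacheEntry] = {}   # stands in for per-cache state

    def attach(self, cache_id: int) -> None:
        """A requesting cache joins at the head; the old head becomes its successor."""
        self.entries[cache_id] = CacheEntry(cache_id, next=self.head)
        if self.head is not None:
            self.entries[self.head].prev = cache_id
        self.head = cache_id

    def _unlink(self, cache_id: int) -> None:
        """Remove a cache from the list, patching its neighbours' links."""
        entry = self.entries.pop(cache_id)
        if entry.prev is not None:
            self.entries[entry.prev].next = entry.next
        else:
            self.head = entry.next
        if entry.next is not None:
            self.entries[entry.next].prev = entry.prev

    def members(self) -> List[int]:
        """Walk the list from head to tail."""
        out, cur = [], self.head
        while cur is not None:
            out.append(cur)
            cur = self.entries[cur].next
        return out

    def write(self, cache_id: int) -> List[int]:
        """Writer moves to the head, then invalidates every other member.

        Returns the invalidated cache ids in the order they were visited.
        """
        if cache_id in self.entries:
            self._unlink(cache_id)
        self.attach(cache_id)
        invalidated = []
        cur = self.entries[cache_id].next
        while cur is not None:
            nxt = self.entries[cur].next
            del self.entries[cur]
            invalidated.append(cur)
            cur = nxt
        self.entries[cache_id].next = None
        return invalidated
```

For example, after caches 1, 2, and 3 read the block in that order, `members()` yields the list from head to tail with the most recent reader first; a subsequent `write(2)` leaves cache 2 as the only member.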

Cache Lines

Besides the link data for the linked list, each cache line stores the standard cache information (e.g., the tag). This information is expected to take up about 7% of the total cache memory.

Cache State Machine

The cache states cover two different dimensions: the cache's position in the linked list, and the modified state of the block.

Position in the list:

- Head - The first, but not the only, member of the list.

- Mid - Neither the first nor the last member of the list.

- Tail - The last, but not the only, member of the list.

- Only - The only member of the list.

State of the data:

- Clean - The data is unmodified, and only I have it.

- Modified - The data is modified.

- Fresh - The data is unmodified, and I and others have it.

- Valid - My copy of the data is valid, but others have it as well.

- Invalid - I do not have a valid copy of the data.

The possible combinations of these two attributes are shown in the state diagram below.

[Image: cache state diagram]
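The position dimension can be derived from a cache line's two links alone. The sketch below assumes a missing link is represented as `None`; the names `Position` and `position` are hypothetical and chosen only for illustration.

```python
from enum import Enum
from typing import Optional


class Position(Enum):
    ONLY = "Only"
    HEAD = "Head"
    MID = "Mid"
    TAIL = "Tail"


def position(prev: Optional[int], next_: Optional[int]) -> Position:
    """Classify a list member by which of its neighbour links are present."""
    if prev is None and next_ is None:
        return Position.ONLY   # no neighbours at all
    if prev is None:
        return Position.HEAD   # nothing before it, something after it
    if next_ is None:
        return Position.TAIL   # something before it, nothing after it
    return Position.MID        # neighbours on both sides
```

A full cache state then pairs one of these positions with one of the data states listed above.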

Directory State Machine

Each memory block's directory entry can be in one of three states. These states indicate whether the block is cached, and whether the cached copy is dirty.

- Home - The block is not cached. It is at "home".

- Gone

  - The block is cached by at least one cache, and the cached value is probably dirty.

  - When a cache requests the block, the memory will supply an identifier for the first cache in the list.

- Fresh

  - The block is cached by at least one cache, but the cached value is not dirty.

  - The memory will supply the data upon cache requests.
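The three directory states can be sketched as a small state machine. This is an illustrative model under stated assumptions, not the SCI specification: `DirState`, `DirectoryEntry`, and `request` are hypothetical names, the block's data is reduced to one integer, and a read that arrives while the entry is Gone is assumed to leave it Gone.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class DirState(Enum):
    HOME = "Home"
    FRESH = "Fresh"
    GONE = "Gone"


@dataclass
class DirectoryEntry:
    data: int = 0
    state: DirState = DirState.HOME
    head: Optional[int] = None  # id of the first cache in the sharing list

    def request(self, cache_id: int, is_write: bool) -> Tuple[Optional[int], Optional[int]]:
        """Handle a read or write request from cache_id.

        Returns (data, old_head). data is None when the entry is Gone,
        because the memory copy may be stale; the requester must then
        fetch the block from old_head instead.
        """
        old_head = self.head
        supplied = None if self.state is DirState.GONE else self.data
        if is_write:
            self.state = DirState.GONE
        elif self.state is DirState.HOME:
            self.state = DirState.FRESH
        # Assumption: a read in the Gone state leaves the entry Gone.
        self.head = cache_id  # the requester becomes the new head
        return supplied, old_head
```

In this model a sequence of reads keeps the entry Fresh and returns the data each time, while the first write moves it to Gone, after which requesters receive only the identifier of the previous head.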

Race Conditions

In addition to the stable states above, the existence of race conditions requires numerous pending cache and memory states. One of these additional states is the busy state.

Handling Races due to Non-Instantaneous Processing of a Request

The simplest kind of race condition results from simultaneous write requests to the same block. If both requests proceed in parallel, the system will fail. This problem can be avoided by serializing the requests, which can be done in one of three ways:

- The directory accepts only one request and then enters the busy state. Further requests are denied until the directory leaves the busy state.

- The home node stores the requests in the order received and services them in order, utilizing the busy state to know when a write has finished.

- The directory always services requests, and the requests are stored and serialized at the cache head.

See the Solihin text, section 11.4.2 for more information.
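The first of the three approaches above (deny requests while busy) can be sketched as follows. The class and method names (`BusyDirectory`, `request`, `complete`) are hypothetical, and the model assumes that a denied requester simply tries again later.

```python
from typing import Optional


class BusyDirectory:
    """Accepts one request at a time for a block; denies the rest while busy."""

    def __init__(self) -> None:
        self.busy_for: Optional[int] = None  # cache currently being serviced

    def request(self, cache_id: int) -> bool:
        """Return True if the request is accepted, False if it is denied."""
        if self.busy_for is not None:
            return False           # busy state: deny, requester must retry
        self.busy_for = cache_id   # enter the busy state
        return True

    def complete(self, cache_id: int) -> None:
        """Called when the accepted request has finished; leaves the busy state."""
        if self.busy_for != cache_id:
            raise ValueError("completion from a cache that is not being serviced")
        self.busy_for = None
```

With two simultaneous writers, whichever request arrives first is accepted and the other is denied until `complete` is called, so the two writes are processed one after the other.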

Handling Races due to Out-of-Sync Directory State

See the Solihin text, section 11.4.1 for more information.

Why are so many states needed? Why are pending states needed?

References

- Wikipedia, Scalable Coherent Interface

- SCI