CSC/ECE 517 Fall 2010/ch4 4h sk

===Roodi===

:[http://github.com/martinjandrews/roodi/tree Roodi] (Ruby Object Oriented Design Inferometer), like other static analysis tools for Ruby, parses the source code to examine it for flaws, bugs, and vulnerabilities. One key area where Roodi differs, however, is its dependence on JRuby. Roodi's author, Marty Andrews, felt that he could make the software more useful by making it depend on JRuby's Abstract Syntax Tree instead of Ruby's built-in or third-party solutions. While JRuby's version is not perfect either, he felt its functionality was better suited to static code analysis than the others. The checks that Roodi performs are:

:'''AssignmentInConditionalCheck''' - Warns if there is an assignment inside a conditional

===Saikuro===

:[http://github.com/devver/saikuro Saikuro] is written by Zev Blut. It is a code complexity analyzer much in the same vein as Flog. It rates the complexity of methods and modules and returns token values for each instruction. The cyclomatic complexity is calculated by applying a [http://hissa.nist.gov/HHRFdata/Artifacts/ITLdoc/235/chapter4.htm Simplified Complexity Calculation] to each branch point of each method. A method's score begins at 1, since there is at least one path through the code, and the score is increased by one for each conditional found within the method. Saikuro is a single-file command line tool that is run on its own instead of as a Gem.
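The counting rule described above (start at 1, add one per branch point) can be sketched in a few lines of Ruby. This is only an illustration built on Ripper from the standard library. It is not Saikuro's own code, and the names <code>BRANCH_NODES</code>, <code>count_branches</code>, and <code>cyclomatic_complexity</code>, as well as the choice of which node types count as branch points, are assumptions made for the example.

```ruby
require 'ripper'

# Node types treated as branch points in this sketch (an assumption;
# a real tool may count more or fewer constructs).
BRANCH_NODES = [
  :if, :elsif, :unless, :while, :until, :when,
  :if_mod, :unless_mod, :while_mod, :until_mod, :ifop
].freeze

# Recursively counts branch-point nodes in a Ripper s-expression.
def count_branches(node)
  return 0 unless node.is_a?(Array)

  own = BRANCH_NODES.include?(node[0]) ? 1 : 0
  own + node.sum { |child| count_branches(child) }
end

# Every piece of code has at least one path, so the score starts at 1.
def cyclomatic_complexity(source)
  1 + count_branches(Ripper.sexp(source))
end

source = <<~RUBY
  def sign(x)
    if x > 0
      1
    elsif x < 0
      -1
    else
      0
    end
  end
RUBY

puts cyclomatic_complexity(source)
```

The example method has one <code>if</code> and one <code>elsif</code>, so two branch points are added to the starting score of 1.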

===Metric_fu===

:[http://metric-fu.rubyforge.org/ Metric_fu] is a static code analysis tool that pulls together many of the other related Gems into one aggregated package. Written by Jake Scruggs, Metric_fu aims to give the developer the most accurate picture possible of the quality of their code by combining the most popular analysis tools. The other tools that Metric_fu requires, and which are automatically installed along with the Metric_fu Gem, are:

:*'''Flog''' - Checks the complexity of the code
:*'''Flay''' - Checks to see if code segments are duplicated
:*'''Reek''' - Checks to see if your code contains known bad practices
:*'''Roodi''' - Checks to see if your code contains known bad practices
:*'''Saikuro''' - Rates the complexity level of the code
:*'''Churn''' - Checks to see what files change the most
:*'''RailsBestPractices''' - Rails metrics aggregator
:*'''Subversion''' - Version control system
:*'''GIT''' - Version control system
:*'''Rcov''' - Code-coverage checker that invokes automated tasks and generates reports

:After installing Metric_fu the developer can run tests on their code by running:

<pre>
rake metrics:all
</pre>

:Once the tests are complete, Metric_fu generates visual reports to show the developer how vulnerable their code base is.

<div style="height:750px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_flog.gif]]
<br />
<small>Flog report from Metric_Fu</small>
</div>

<div style="height:750px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_flay.gif]]
<br />
<small>Flay report from Metric_Fu</small>
</div>

<div style="height:750px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_reek.gif]]
<br />
<small>Reek report from Metric_Fu</small>
</div>

<div style="height:750px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_roodi.gif]]
<br />
<small>Roodi report from Metric_Fu</small>
</div>

<div style="height:500px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_saikuro.gif]]
<br />
<small>Saikuro report from Metric_Fu</small>
</div>

<div style="height:500px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_churn.gif]]
<br />
<small>Churn report from Metric_Fu</small>
</div>

<div style="height:600px;margin-left:auto;margin-right:auto;text-align:center;width:1025px;">
[[Image:metric_fu_rcov.gif]]
<br />
<small>Rcov report from Metric_Fu</small>
</div>

==Conclusions==

:Static code analysis has proven itself to be a valuable tool for improving the quality and security of software. It is important to remember that it is only one of many tools required to ensure improvements are made. Meticulousness and care must be taken in every phase of development, from requirements through design, construction, and testing. It is up to the developers to use these tools to their fullest potential. A practice that is becoming popular among development teams is to adopt a standardized rule set for source code. This is usually done by establishing two documents: a coding standard and a style guideline. Even though static code analysis currently has an install base mostly in large development studios, the surge of dynamic development frameworks, and how well they integrate with code analysis tools, will help grow that install base to a much wider audience. As logs are kept of the performance of these tools on a large scale, they will continue to grow and improve. For young aspiring developers today, static code analysis will be another tool they will have to learn and put to good use. Their code, and the industry, will be better as a result.


</div> | </div> | ||

Latest revision as of 00:40, 21 October 2010

What is Static Code Analysis?

- If one thing has proven true over time, it is that humans are fallible. We, by nature, make mistakes. As a result, our fallibility must be factored into the software we engineer and develop. Development teams discovered early on that code review was one of the best ways to find mistakes and bugs in their software. This proved to be a daunting task, however. Assembling and training teams to identify problems in the code base took large amounts of time and valuable resources. Code review and bug discovery were clearly critical areas of software development, but development teams needed a more efficient way to go about them.

- In the 1970s, Stephen Johnson at Bell Laboratories wrote a program called Lint. Lint examined the source code of C programs that had compiled without errors in order to locate bugs the compiler had not detected. With this, static code analysis was born.

- Static code analysis refers to tools that check the quality and reliability of software quickly and efficiently by examining its source code. There are many static code analysis tools for many different languages. Some are open source and some are commercial. Their scope and accuracy can vary quite a bit from tool to tool. Some traits, however, are nearly universal. We will go over some of these now.

How do Static Code Analysis Tools Identify Problems?

- While humans are fallible, at least we tend to be consistent about it. No matter the company or region of the world, software developers tend to fall into the same traps over and over. This creates patterns and known high-risk situations that can be identified programmatically. Key aspects of a program that pretty much all static code analysis tools focus on are:

- Input validation and representation - Given different key combinations and character encodings, which user flows put the code at risk of attack?

- API abuse - Does the caller violate the terms of the API?

- Security features - Are the security steps taken legal? Is the encryption used valid?

- Time and state - Are race conditions introduced as components seek to share state?

- Errors - Are there holes left in the code base that can be exploited?

- Code Quality - Poor code quality introduces high-risk situations and unpredictable behavior. The system may be stressed in unexpected ways.

- Encapsulation - Are the boundaries between encapsulated code structures sound?

- Environment - Anything outside the code base that might still be a security risk.

How do Static Code Analysis Tools Identify Problems Quickly?

- First and foremost, static analysis tools are efficient to use because they don't actually execute the code. Instead, they use what are known as static checkers, which construct an abstract model of the code base and then traverse it looking for patterns and common traps that developers typically fall into. Some of the most common and most useful checks these static checkers run against the abstract model are:

- Null Returns - Check to see if functions can return unexpected NULLs and create a segmentation fault

- Forward Null - Check to see what paths generate a NULL pointer de-reference and create denial of service risks

- Reverse Null - Check to see if pointers are checked against NULL before being de-referenced

- Reverse Negative - Check to see if negative values are used in inappropriate places that open the program to security risk

- Sizecheck - Check to see if correct memory allocation is used to prevent memory out of bounds errors

- Resource Leak - Check to see if memory leaks exist that can introduce performance and crash problems

- Use After Free - Check to see that resources are not used again after they have been de-allocated from the heap, since doing so may cause nondeterministic results

- Uninit - Check to see if variables are initialized before use

- Overrun Static - Check to see if there are invalid accesses to a static array which may lead to buffer overrun security risks

- Overrun Dynamic - Check to see if there are invalid accesses to a dynamic array which may lead to buffer overrun security risks

- Negative Returns - Check to see if a function can return a negative value that is then used inappropriately, which may lead to memory corruption, crashes, infinite loops, etc.

- This process is much more efficient than having to build and run the entire code base every time the development team wants to analyze their software. These tools are also quite flexible. Many allow their rules and constraints to be customized for the specific needs of a particular development studio.
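To make the idea of a static checker concrete, here is a minimal sketch in Ruby. It parses source text into a tree with Ripper (part of Ruby's standard library since 1.9) and flags any conditional whose condition is a bare assignment, a classic trap. Nothing is executed. The method name `assignment_in_conditional?` and the list of node types are assumptions made for this illustration and do not reflect how any particular tool is implemented.

```ruby
require 'ripper'

# Node types whose second element is the condition expression.
CONDITIONAL_NODES = [:if, :elsif, :unless, :while, :until].freeze

# Returns true if any conditional in the tree uses a bare assignment
# (for example `if x = 1`) as its condition. The code is only parsed,
# never run.
def assignment_in_conditional?(node)
  return false unless node.is_a?(Array)

  if CONDITIONAL_NODES.include?(node[0])
    condition = node[1]
    return true if condition.is_a?(Array) && condition[0] == :assign
  end

  node.any? { |child| assignment_in_conditional?(child) }
end

suspicious = Ripper.sexp("if x = 1\n  puts x\nend")
harmless   = Ripper.sexp("if x == 1\n  puts x\nend")

puts assignment_in_conditional?(suspicious)
puts assignment_in_conditional?(harmless)
```

This sketch deliberately stays small: it misses parenthesized conditions such as `if (x = 1)` and modifier forms such as `y if x = 1`. Gaps of this kind are one reason real tools let teams extend and tune their rule sets.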

Is Static Code Analysis Important?

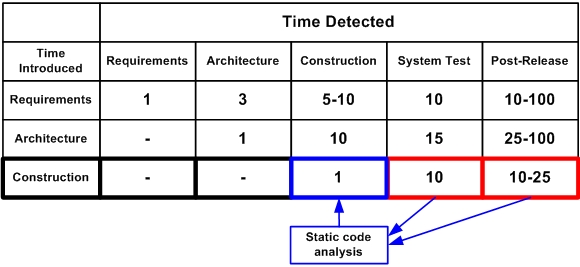

- In a word, yes! As the software industry has progressed, systems have grown more complex and product functionality more extensive. It has been shown that correcting a bug at the construction stage is much less expensive than correcting it at the testing phase. It has also been shown that static analysis tools reduce software defects by a factor of six and detect 60% of possible post-release failures. Some tests have even shown that up to 91% of errors can be removed from source code using static analysis. The accompanying graph from the book "Code Complete" by Steve McConnell shows his finding that correcting an error at the construction phase can be as much as ten times less expensive than correcting it at the testing phase.

A graph from Steve McConnell's book "Code Complete" illustrating the benefits of correcting code errors at the construction phase instead of the testing phase

- One very important area where static analysis excels is data flow analysis. Here the static checkers trace how data, including input supplied by users, can flow through the program. The purpose of this is vulnerability checking. Hackers and abusive software are a fact of life in today's world. Common exploits such as buffer overflow attacks and SQL injection should be a concern for every developer. Static code analysis is a way to discover whether these vulnerabilities exist in the code base. These tools are important not only for discovering bugs and pointing out vulnerabilities. They can also analyze paths in which memory is allocated but never freed, which lets the development team know if there are memory leaks in their software. Other key uses for static analysis tools include the detection of program crashes and concurrency problems.

- To give a concrete example of the importance of these tools, we will use medical software. Medical software is rapidly growing in its sophistication. It has grown to the point where the FDA has identified static code analysis as a means of improving the quality and reliability of the software across the medical profession.

Are There Drawbacks to Using Static Code Analysis?

- Unfortunately, that is a yes too. Like anything else, static analysis tools are not the one perfect solution for writing great programs. At its best, software analysis is making educated guesses. None of these tools will ever be able to truthfully claim that it has discovered all possible runtime errors in a piece of software. It has been proven that finding all possible runtime errors of a program is undecidable. Therefore, static analysis is not a magical tool that flawlessly transforms a code base. Quality control still begins and ends at the developer level. The intricacies of these tools can be rather complex and difficult for developers to use to their fullest potential. Combine this with their often lofty expense, and static analysis tools are a large investment to make. This is one of the reasons why these tools are mostly limited to corporations with a vast development umbrella.

- There is also the issue of false positives in static analysis. The first few static analysis tools generated a great many false positives, and depending on the quality of the tool a development studio is using, there still might be a great number generated. One thing is for sure, though: false positives will happen. The results these tools provide should not all be treated as issues that must be addressed. Remember, these are educated guesses only. It is still up to the developer to investigate these results and take appropriate action. False positives are common enough that pretty much all static analysis tools have a way of filtering out results the user has deemed inaccurate.

- The last key hurdle I want to mention is that of legacy code. Legacy code and static analysis do not get along. Many development studios have given software analysis a try only to abandon it when over 100,000 errors were reported. Depending on the age of the code base, the programming paradigms used, and how disciplined the programmers were, the error reports can quickly get out of hand. It is also the case that as the developer starts trying to correct these problems, more serious problems may arise. False positives can also be much more common. For a developer, changing code in response to a report, only to find that the report was a false positive and the change introduced serious faults, will certainly lead to frustration, to say the least.

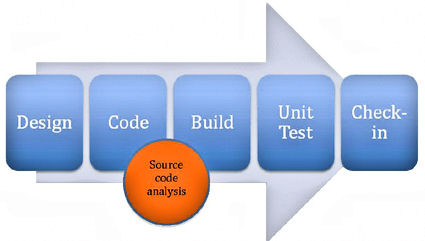

Static Code Analysis in Dynamically Typed Languages

- As the world of software development has matured, so have the demands imposed upon developers. With a rapidly growing industry full of clients that want their products now, development studios have been trying to discover ways to decrease development time and get the product to market as quickly as possible. Because of this, agile development and static code analysis are a great combination. One of the key points of agile development is to get software up and running as quickly as possible. By incorporating static analysis tools, agile developers can begin checking the quality and security of their software from the very beginning of the development process. Another bonus is that agile developers are accustomed to writing unit tests for their projects, so checking and verifying the software as they go is already a part of the development process. The accompanying illustration shows the typical development cycle with static analysis tools included.

Static Code Analysis in Ruby

- While many static code analysis tools have matured and become more complete through years of usage, that is not the case with Ruby. With Ruby only now beginning to see an influx of attention thanks to the Rails framework, code analysis tools are quickly emerging. One of the major challenges in developing these analysis tools for Ruby was that there was no standardized way to access the Abstract Syntax Tree. Versions of Ruby prior to 1.9 do not support this. Gems have been created to address this issue, but currently the support is inconsistent. Ruby 1.9 addresses this by bundling a library called Ripper that allows the parsing of source files.
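As a quick illustration of what access to the syntax tree looks like, the sketch below uses `Ripper.sexp` to parse a one-line snippet and print the resulting tree as nested arrays. The snippet being parsed is made up for the example.

```ruby
require 'ripper'
require 'pp'

# Parse a line of Ruby source into a nested-array syntax tree.
# The source is only read, never executed.
tree = Ripper.sexp("total = price + 1")

# Pretty-print the tree; the outermost node is tagged :program.
pp tree
```

Each inner array starts with a symbol naming the kind of node, and token nodes also carry the source text and its line and column. Analysis tools walk a structure like this one to look for patterns.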

- Even though static analysis tools for Ruby are young, there are several options. We will take a look at some of these here.

Flog

- Flog is a Gem written by Ryan Davis and Eric Hodel. Where it shines is in analyzing code complexity. Overly complex code is often bug prone and unclear, which makes it a great candidate to identify and refactor. Flog does this using a scoring system based on the ABC metric. The scores are reported back to the developer to highlight areas that are candidates for reevaluation. A report from Flog looks like this:

Total score = 211.720690020501

WatchR#analyze_entry: (34.2)

9.8: assignment

7.0: branch

4.5: mark_host_last_seen

3.2: pattern

2.8: []

2.8: is_event?

2.0: alert_type

2.0: alert_target

1.8: alert_msg

1.8: notify

1.6: event_notify?

1.3: notify_log

1.3: join

1.3: split

1.3: each

1.3: now

1.3: each_value

1.3: record_host_if_unknown

0.4: lit_fixnum

WatchR#event_threshold_reached?: (31.6)

21.3: []

2.6: branch

1.8: tv_sec

1.6: -

1.5: length

1.4: >

1.4: assignment

1.3: >=

1.3: mark_alert_last_seen

1.3: delete_if

- The higher the score, the higher the risk that the code contains bugs, errors, and vulnerabilities. The way this score is calculated using the ABC metric is pattern based. As the software development industry has matured, several coding patterns have been identified as at-risk coding structures. Patterns that have a high level of dependencies are such candidates. When these patterns are identified by Flog, they receive a high score.
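For orientation, the ABC metric as it is commonly described counts assignments, branches, and conditions, treats the three counts as a vector, and reports its magnitude. The sketch below shows only that textbook calculation. The helper name `abc_magnitude` is hypothetical, and Flog applies its own weights to individual operations (as the fractional values in the report above suggest), so this should not be read as Flog's exact formula.

```ruby
# Magnitude of the (assignments, branches, conditions) vector, following
# the common description of the ABC metric. Hypothetical helper for
# illustration only; it is not part of Flog.
def abc_magnitude(assignments, branches, conditions)
  Math.sqrt(assignments**2 + branches**2 + conditions**2)
end

puts abc_magnitude(3, 4, 0)
```

Because the counts are squared before being summed, a method that is heavy in one dimension scores noticeably higher than one with the same total spread evenly across all three.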

Flay

- After Flog, Ryan Davis wrote another Gem called Flay. Flay's main focus is on identifying where the code base violates the DRY (Don't Repeat Yourself) principle. It uses the Abstract Syntax Tree to identify duplicate sections of the code base. It can identify not only exact matches but also areas that match closely except for superficial differences. This is useful when, for example, code segments are identical except for variable names. Flay is able to ignore differences in literal values, names, whitespace, and programming style when comparing subtrees to identify duplicate sections of code. As with Flog, a report generated by Flay is a list of repeated code nodes ranked and weighted by severity.
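The idea of comparing subtrees while ignoring names, literals, and whitespace can be sketched with the standard library's Ripper. This is not Flay's implementation; the helpers `normalize` and `same_structure?` are hypothetical, and the approach simply drops the token text and source position from every leaf before comparing two trees.

```ruby
require 'ripper'

# Hypothetical helper: Ripper marks leaf tokens (identifiers, literals, ...)
# with a type starting with "@". Keep only that type, discarding the token
# text and its line/column, so names, values, and layout no longer matter.
def normalize(node)
  return node unless node.is_a?(Array)
  if node.first.is_a?(Symbol) && node.first.to_s.start_with?('@')
    [node.first]
  else
    node.map { |child| normalize(child) }
  end
end

# Two snippets are structural duplicates if their normalized trees are equal.
def same_structure?(source_a, source_b)
  normalize(Ripper.sexp(source_a)) == normalize(Ripper.sexp(source_b))
end

area   = "def area(w, h)\n  w * h + 1\nend"
volume = "def volume(x,   y)\n    x * y + 2\nend"
diff   = "def diff(w, h)\n  w - h + 1\nend"

puts same_structure?(area, volume) # true: only names, literals, spacing differ
puts same_structure?(area, diff)   # false: the operator differs
```

A real duplication detector would compare every subtree against every other one rather than two whole snippets, but the normalization step is the part that makes near-matches visible.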

Reek

- Remember how I said programmers from all over the world routinely fall into the same trap? Well, common traps and bad coding practices are actually lumped into a category called Code Smells. Kevin Rutherford is a software developer who wrote a book discussing these code smells and also developed a tool, called Reek, to locate them in Ruby source code. Reek scans either source files or in-memory Class objects looking for some of these code smells. Specifically, Reek focuses on:

- Attribute - Warns if a class publishes a getter or setter for an instance variable, causing the client to become too intimate with the inner workings of the class

- Class Variables - Warns that class variables are a part of the runtime state. Different parts of the system can inadvertently depend on other parts of the system causing unintended consequences

- Control Coupling - Warns when a parameter is used to determine the execution path. This is duplication, since the caller already knows which path should be taken

- Data Clump - Warns when several items appear frequently together in classes or parameter lists

- Duplication - Warns when two fragments of code look nearly identical

- Irresponsible Module - Warns if classes or modules are not properly annotated

- Long Method - Warns if a method has more than 5 statements. Every statement within a control structure (if, case, for, etc.) is counted as 1

- Large Class - Warns if a class has more than a configurable number of methods or instance variables. These max values default to 25 for methods, and 9 for instance variables

- Feature Envy - Warns if any method refers to self less often than it refers to another object

- Uncommunicative Name - Warns if a name does not represent its intent well enough

- Long Parameter List - Warns if a method has more than two parameters or if a method yields more than two objects to a block

- Utility Function - Warns if a function has no dependency on the state of the instance

- Nested Iterators - Warns if a block contains another block

- Simulated Polymorphism - Warns if multiple conditionals test the same value throughout a class

- An example of how Reek works is below:

:class Dirty

# This method smells of :reek:NestedIterators but ignores them

def awful(x, y, offset = 0, log = false)

puts @screen.title

@screen = widgets.map {|w| w.each {|key| key += 3}}

puts @screen.contents

end

end

- Reek will report the following code smells in this file:

:$ reek demo.rb

:spec/samples/demo/demo.rb -- 6 warnings:

:Dirty has no descriptive comment (IrresponsibleModule)

:Dirty#awful has 4 parameters (LongParameterList)

:Dirty#awful has boolean parameter 'log' (ControlCouple)

:Dirty#awful has the parameter name 'x' (UncommunicativeName)

:Dirty#awful has the parameter name 'y' (UncommunicativeName)

:Dirty#awful has the variable name 'w' (UncommunicativeName)
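Since Reek can also inspect in-memory Class objects, two of the checks listed earlier (Long Parameter List and Uncommunicative Name) can be approximated with plain Ruby reflection. The sketch below is not Reek's code: `parameter_smells` and `MAX_PARAMS` are hypothetical names, the limit of two parameters comes from the list above, and treating any one-letter name as uncommunicative is an assumption made for the example.

```ruby
# A trimmed copy of the Dirty class, kept only for its method signature.
class Dirty
  def awful(x, y, offset = 0, log = false)
  end
end

MAX_PARAMS = 2 # limit cited in the Long Parameter List description

# Hypothetical helper: inspect each method defined directly on the class
# and report long parameter lists and one-letter parameter names.
def parameter_smells(klass)
  klass.instance_methods(false).sort.flat_map do |name|
    params = klass.instance_method(name).parameters.map { |_kind, pname| pname }.compact
    warnings = []
    if params.size > MAX_PARAMS
      warnings << "#{klass}##{name} has #{params.size} parameters (LongParameterList)"
    end
    params.select { |pname| pname.to_s.length == 1 }.each do |pname|
      warnings << "#{klass}##{name} has the parameter name '#{pname}' (UncommunicativeName)"
    end
    warnings
  end
end

puts parameter_smells(Dirty)
```

Reflection only sees method signatures, so smells that depend on a method's body, such as Nested Iterators, still require parsing the source.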

Roodi

- Roodi (Ruby Object Oriented Design Inferometer), like other static analysis tools for Ruby, parses the source code to examine it for flaws, bugs, and vulnerabilities. One key area where Roodi differs, however, is its dependence on JRuby. Roodi's author Marty Andrews felt that he could make the software more useful by making it depend on JRuby's Abstract Syntax Tree instead of Ruby's built-in or third-party solutions. While JRuby's version is not perfect either, he felt its functionality was better suited for static code analysis than the others. The checks that Roodi performs are:

- AssignmentInConditionalCheck - Warns if there is an assignment inside a conditional

- CaseMissingElseCheck - Warns if a case statement does not have an else statement, thus not covering all possibilities

- ClassLineCountCheck - Warns if the number of lines in a class is above threshold

- ClassNameCheck - Warns if class names do not match convention

- CyclomaticComplexityBlockCheck - Warns if the cyclomatic complexity of any block is above threshold

- CyclomaticComplexityMethodCheck - Warns if the cyclomatic complexity of any method is above threshold

- EmptyRescueBodyCheck - Warns if there are empty rescue blocks.

- ForLoopCheck - Warns if for loops are used instead of Enumerable.each

- MethodLineCountCheck - Warns if the number of lines in a method is above threshold

- MethodNameCheck - Warns if method names do not match convention.

- ModuleLineCountCheck - Warns if the number of lines in a module is above threshold

- ModuleNameCheck - Warns if module names do not match convention

- ParameterNumberCheck - Warns if the number of parameters for a method is above threshold

- An example of Roodi's output looks like this:

:rspec/lib/spec/rake/spectask.rb:152 - Block cyclomatic complexity is 11. It should be 4 or less.

:rspec/lib/spec/rake/verify_rcov.rb:37 - Block cyclomatic complexity is 6. It should be 4 or less.

:rspec/lib/spec/matchers/be.rb:57 - Method name "match_or_compare" has a cyclomatic complexity is 12. It should be 8 or less.

:rspec/lib/spec/matchers/change.rb:12 - Method name "matches?" has a cyclomatic complexity is 9. It should be 8 or less.

:rspec/lib/spec/matchers/have.rb:28 - Method name "matches?" has a cyclomatic complexity is 11. It should be 8 or less.

:rspec/lib/spec/expectations/errors.rb:6 - Rescue block should not be empty.

:rspec/lib/spec/rake/spectask.rb:186 - Rescue block should not be empty.

:rspec/lib/spec/expectations/differs/default.rb:20 - Method name "diff_as_string" has 21 lines. It should have 20 or less.

:rspec/lib/spec/matchers/change.rb:35 - Method name "failure_message" has 24 lines. It should have 20 or less.

:rspec/lib/spec/matchers/include.rb:31 - Method name "_message" should match pattern (?-mix:^[a-z]+[a-z0-9_]*[!\?]?$).

:rspec/lib/spec/matchers/include.rb:35 - Method name "_pretty_print" should match pattern (?-mix:^[a-z]+[a-z0-9_]*[!\?]?$).
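Checks such as ForLoopCheck and AssignmentInConditionalCheck boil down to walking a syntax tree and flagging certain node types. The sketch below shows that idea with the standard library's Ripper rather than the JRuby tree Roodi relies on; the helper names and warning messages are hypothetical and do not reproduce Roodi's own wording.

```ruby
require 'ripper'

# Visit every parser node (an Array whose first element is a Symbol).
def each_node(sexp, &block)
  return unless sexp.is_a?(Array)
  block.call(sexp) if sexp.first.is_a?(Symbol)
  sexp.each { |child| each_node(child, &block) }
end

# True if the subtree contains at least one node of the given type.
def contains_node?(sexp, type)
  found = false
  each_node(sexp) { |node| found ||= node.first == type }
  found
end

CONDITIONALS = [:if, :elsif, :unless, :while, :until].freeze

# Hypothetical checker covering two of the checks described above.
def simple_checks(source)
  warnings = []
  each_node(Ripper.sexp(source)) do |node|
    if node.first == :for
      warnings << "for loop used instead of Enumerable#each"
    elsif CONDITIONALS.include?(node.first) && contains_node?(node[1], :assign)
      # node[1] is the condition expression of the conditional.
      warnings << "assignment inside a conditional"
    end
  end
  warnings
end

sample = <<~RUBY
  for item in list
    puts item
  end
  if total = compute
    puts total
  end
RUBY

puts simple_checks(sample)
```

Threshold-based checks such as MethodLineCountCheck follow the same walk, but compare a measured value against a configurable limit instead of matching a node type.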

Rufus

- Rufus was written by John Mettraux and uses the standard Ruby parse tree. The parse tree produced from the code is handed to SexpProcessor classes, which can then evaluate it. By using the Rufus treechecker, the developer can dictate which patterns should generate errors:

:require 'rubygems'

:require 'rufus-treechecker'

:tc = Rufus::TreeChecker.new do

: exclude_fvcall :abort

: exclude_fvcall :exit, :exit!

:end

:tc.check("1 + 1; abort") # will raise a SecurityError

:tc.check("puts (1..10).to_a.inspect") # OK

- Rufus is actually a collection of several Gems that support the ruote open source workflow engine. These Gems include:

- rufus-decision – CSV decision tables, in Ruby

- rufus-dollar – substituting ${stuff} in text strings

- rufus-lru – small LRU implementation (max size based)

- rufus-lua – Lua embedded in Ruby, via ruby FFI

- rufus-mnemo – turning integers into easier to remember ‘words’ and vice-et-versa

- rufus-rtm – A Remember The Milk gem

- rufus-scheduler – the gem formerly known as openwferu-scheduler, cron, at and every job scheduler

- rufus-sixjo – a Rack application, RESTfully serving

- rufus-sqs – a gem for interacting with Amazon SQS

- rufus-tokyo – a ruby-ffi based lib for handling Tokyo Cabinet hashes (or trees)

- rufus-treechecker – for checking untrusted code before an eval

- rufus-verbs – the verbs of HTTP, get, post, put, delete wrapped in a Ruby gem

Saikuro

- Saikuro is written by Zev Blut. It is a code complexity analyzer much in the same vein as Flog. It rates the complexity of methods and modules and returns token values for each instruction. The cyclomatic complexity is calculated by applying a Simplified Complexity Calculation to each branch point of each method. A method's score begins at 1, since there is at least one path through the code, and is increased by one for each conditional found within the method. Saikuro is a single-file command line tool that is run on its own instead of as a Gem.
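The counting rule described above (start at 1, add one per branch point) can be sketched in a few lines. This is not Saikuro's code: it uses the standard library's Ripper, the helper names are hypothetical, and the list of node types treated as branch points is an assumption chosen for the example.

```ruby
require 'ripper'

# Node types counted as branch points in this sketch (an assumption).
BRANCH_NODES = [:if, :elsif, :unless, :while, :until, :for, :when,
                :if_mod, :unless_mod, :while_mod, :until_mod, :ifop].freeze

# Visit every parser node (an Array whose first element is a Symbol).
def each_node(sexp, &block)
  return unless sexp.is_a?(Array)
  block.call(sexp) if sexp.first.is_a?(Symbol)
  sexp.each { |child| each_node(child, &block) }
end

# Hypothetical helper: map each method name to 1 + its number of branch points.
def complexity_by_method(source)
  scores = {}
  each_node(Ripper.sexp(source)) do |node|
    next unless node.first == :def
    name = node[1][1] # the method name token
    score = 1         # one path always exists through the method
    each_node(node) { |inner| score += 1 if BRANCH_NODES.include?(inner.first) }
    scores[name] = score
  end
  scores
end

sample = <<~RUBY
  def simple
    1
  end

  def classify(n)
    if n < 0
      :negative
    elsif n == 0
      :zero
    else
      :positive
    end
  end
RUBY

p complexity_by_method(sample)
```

Here `simple` scores 1 and `classify` scores 3, because the `if` and the `elsif` each add a path while the `else` does not.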

Metric_fu

- Metric_fu is a static code analysis tool that pulls together many of the other related Gems into one aggregated package. Written by Jake Scruggs, Metric_fu aims to give the developer the most accurate picture possible of the quality of their code by combining the most popular analysis tools. The other tools that Metric_fu requires, and that are installed automatically along with the Metric_fu Gem, are:

- Flog - Checks the complexity of the code

- Flay - Checks to see if code segments are duplicated

- Reek - Checks to see if your code contains known bad practices

- Roodi - Checks to see if your code contains known bad practices

- Saikuro - Rates the complexity level of the code

- Churn - Checks to see what files change the most

- RailsBestPractices - Rails metrics aggregator

- Subversion - Version control system

- GIT - Version control system

- Rcov - Code-coverage checker that invokes automated tasks and generates reports

- After installing Metric_fu the developer can run tests on their code by running:

:rake metrics:all

- Once the tests are complete, Metric_fu generates visual reports to show the developer how vulnerable their code base is.

Conclusions

- Static code analysis has proven itself to be a valuable tool for improving the quality and security of software. It is important to remember that it is only one of many tools required to ensure improvements are made. Meticulousness and care must be taken in every phase of development, from requirements through design, construction, and testing. It is up to the developers to use these tools to their fullest potential. A practice that is becoming popular among development teams is to integrate a standardized rule set for source code. This is usually done by establishing two documents, a coding standard and a style guideline. Even though static code analysis currently has an install base mostly in large development studios, the surge of dynamic development frameworks and how well they integrate with code analysis tools will help grow that install base to a much wider audience. As logs are kept of the performance of these tools on a large scale, they will continue to grow and improve. For young aspiring developers today, static code analysis will be another tool they will have to learn and put to good use. Their code, and the industry, will be better as a result.

References

- Evaluating the Cost Reduction of Static Code Analysis for Software Security

- Static Analysis to Work for Verification: A Case Study

- Static Code Analysis

- At the Forge

- http://en.wikipedia.org/wiki/Static_code_analysis

- http://www.viva64.com/terminology/Static_code_analysis.html

- http://www.eetimes.com/design/automotive-design/4006735/Integrate-static-analysis-into-a-software-development-process

- http://www.eetimes.com/design/industrial-control/4008909/Using-static-code-analysis-for-Agile-software-development?pageNumber=0

- http://www.infoq.com/news/2008/11/static-analysis-tool-roundup

- http://www.infoq.com/news/2009/09/code-quality-metric-fu

- http://www.rubyinside.com/3-tools-for-drying-your-ruby-code-1305.html

- http://on-ruby.blogspot.com/2007/12/flog-profiling-complexity.html

- http://ruby.sadi.st/Flog.html

- http://pivotallabs.com/users/miked/blog

- http://silkandspinach.net/2008/09/23/reek-a-code-smells-detector-for-ruby/

- http://github.com/kevinrutherford/reek/wiki

- http://github.com/kevinrutherford/reek/wiki/Simulated-Polymorphism

- http://www.ruby-forum.com/topic/166651

- http://on-ruby.blogspot.com/2008/11/reek-for-ruby-rocks.html

- http://blog.martyandrews.net/2008/09/roodi-checkstyle-for-ruby.html

- http://github.com/martinjandrews/roodi/tree

- http://roodi.rubyforge.org/

- http://rufus.rubyforge.org/rufus-treechecker/files/README_txt.html

- http://rufus.rubyforge.org/

- http://github.com/jmettraux/rufus-treechecker/blob/master/lib/rufus/treechecker.rb

- http://metric-fu.rubyforge.org/

- http://github.com/devver/saikuro