CSC/ECE 506 Fall 2007/wiki1 10 mt: Difference between revisions

| (2 intermediate revisions by the same user not shown) | |||

| Line 11: | Line 11: | ||

The dataflow model of architecture, in contrast, is a distributive model where there is no single point of control and the execution of an instructions takes place only when the required data is available. Dataflow models are typically represented as a graph of nodes where each node in the graph is an operation to be executed when its operands become available along with the address of the subsequent nodes in the graph that need the results of the operation. | The dataflow model of architecture, in contrast, is a distributive model where there is no single point of control and the execution of an instructions takes place only when the required data is available. Dataflow models are typically represented as a graph of nodes where each node in the graph is an operation to be executed when its operands become available along with the address of the subsequent nodes in the graph that need the results of the operation. | ||

Included in the dataflow model of architecture there is also static and dynamic dataflow. The static dataflow model is characterized by the use of the memory address to specify the destination nodes that are data dependent. The dynamic model uses content-addressable memory which searches the computer memory for specific tags. Each subprogram or subgraph should be able to execute in parallel as separate instances. In the dynamic dataflow model, programs are executed by dealing with tokens which contain both data and a tag. A node is executed when incoming tokens with identical tags are present. | Included in the dataflow model of architecture there is also ''static'' and ''dynamic'' dataflow. The static dataflow model is characterized by the use of the memory address to specify the destination nodes that are data dependent. The dynamic model uses content-addressable memory which searches the computer memory for specific tags. Each subprogram or subgraph should be able to execute in parallel as separate instances. In the dynamic dataflow model, programs are executed by dealing with tokens which contain both data and a tag. A node is executed when incoming tokens with identical tags are present. | ||

[[Image:Dataflow.jpg|thumbnail|center|300px|Dataflow Graph]] | [[Image:Dataflow.jpg|thumbnail|center|300px|Dataflow Graph]] | ||

| Line 41: | Line 41: | ||

In the regular dataflow model, loops or subprograms that do not display parallelism are relegated to a single thread that executes sequentially, typically with poor performance. In the ''threaded dataflow'' model, threads of instructions are processed consecutively by the token matching unit without matching any tokens except for the first instructions of the thread. Instruction on the same thread of execution pass data back and forth via local registers and can be referred to by any of the following instructions in the thread. This improves upon the poor single threaded performance of the dataflow model. | In the regular dataflow model, loops or subprograms that do not display parallelism are relegated to a single thread that executes sequentially, typically with poor performance. In the ''threaded dataflow'' model, threads of instructions are processed consecutively by the token matching unit without matching any tokens except for the first instructions of the thread. Instruction on the same thread of execution pass data back and forth via local registers and can be referred to by any of the following instructions in the thread. This improves upon the poor single threaded performance of the dataflow model. | ||

''RISC dataflow'' takes advantage of hydrid data model my using a RISC instruction set and implementing support for multithreaded computation. In the ''Large-grain dataflow'' hydrid model a dataflow graph is altered so that the nodes of graph contain both fine grained nodes (or pure dataflow) and macro nodes (or coarse-grain) nodes which are really nodes that represent a sequential block of instructions. Lastly, ''complex dataflow'' makes use of complex machine instructions such as storing instructions in vectors so that they can be pipelined and referenced in blocks of data instead of element wise. | |||

=== Systolic Architecture Currently === | === Systolic Architecture Currently === | ||

Latest revision as of 22:48, 10 September 2007

Dataflow & Systolic Architectures

The dataflow and systolic models are two of the many possible parallel computer architectures. Unlike shared address, message passing and data parallel processing, the dataflow and systolic architectures were not as commonly used for parallel programming systems although they recieved a considerable amount of analysis from both private industry and academia.

Dataflow

Dataflow architecture is in oppostion to the von Neumann or control flow architecture which has memory, and I/O subsystem, an arithmetic unit and a control unit. The one shared memory is used for both program instructions and data with a data bus and address bus between the memory and processing unit. Because instructions and data must be fetched in sequential order, a bottleneck may occur limiting the throughput between the CPU and the memory.

The dataflow model of architecture, in contrast, is a distributive model where there is no single point of control and the execution of an instructions takes place only when the required data is available. Dataflow models are typically represented as a graph of nodes where each node in the graph is an operation to be executed when its operands become available along with the address of the subsequent nodes in the graph that need the results of the operation.

Included in the dataflow model of architecture there is also static and dynamic dataflow. The static dataflow model is characterized by the use of the memory address to specify the destination nodes that are data dependent. The dynamic model uses content-addressable memory which searches the computer memory for specific tags. Each subprogram or subgraph should be able to execute in parallel as separate instances. In the dynamic dataflow model, programs are executed by dealing with tokens which contain both data and a tag. A node is executed when incoming tokens with identical tags are present.

Systolic

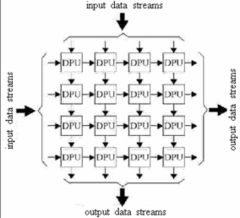

Systolic architecture sought to replace the uniprocessor architecture by stringing together a system of processing elements in arrays, known as systolic arrays. Their initial birth came from the bottleneck that can occur between a Central Processing Unit (CPU) and a memory request to the main-memory. A uniprocessor must sit and wait for the result from main memory to return or request another data item. In a systolic architecture, the data moves through a system via regular, timed "heartbeats" (the term systolic actually refers to the systolic contraction of heartbeats [2]) and work is done in-between each heartbeat. Each processor produces a new data item after each heartbeat. Those items are then either continued on the journey toward completion, or returned to the main memory. The systolic architecture's ability to put highly specialized computation under simple, regular and highly localized communication patterns are the key to the systolic architecture. [3]

New Developments in Dataflow and Systolic Architectures

Dataflow Architecture Currently

Since the 1990's, little advancement has been made in the field of dataflow architecture. Dataflow was primarily abandoned due to several problems.

- The dynamic dataflow model requires some sort of associative memory to store the tokens waiting to be matched. Unfortunately, even in moderate size programs the required memory needed for storage tends to be large and therefore not very cost efficient.

- Dataflow programs typically made use of multiple threads since parallel functions and loops were frequently used in the programming. Therefore, if there wasn't enough of a workload for multiple threads, single threaded execution of a program provided poor performance.

- The dataflow model failed to take advantage of locality such as the usage of local registers and cache. Since all information for the tokens (data and tags) moves through the network, it is difficult to transfer all that information in a timely efficient manner over a large parallel system.

Regardless of the problems that the dataflow model of machine design encountered, today out of order execution, which is a form of restricted dataflow is one of the most successful models of microprocessor design. AMD and Intel both implemented an architecture where after decoding into RISC instructions, the instructions are placed in a central pool where they are allowed to execute in the order which is best matched to the current resources available.

One particular attempt at improving the performance of the dynamic dataflow model was the explicit token store approach dubbed Monsoon and developed by Gregory M. Papadopoulos and David Culler. There approach was a more efficient method for token matching by developing a form of local storage for the tokens. Monsoon makes use of an activation frame where each active loop instance or subprogram is assigned it's own activation frame in the frame memory in which to provide storage for all the tokens generated by the loop or subprogram. Each frame is comprised of slots that hold operands for the current invocation and since access to the slots is a direct, no associative memory search is needed.

Another use of the dataflow model is utilizing control flow to enhance the dataflow paradigm. By using dataflow mechanisms with control flow mechanisms we can improve on the overhead associated with token matching, improve sequential code performance (in the case of the single threaded execution) and utilize registers for local storage. Several hybrid variations of the dataflow/control flow model have emerged including:

- threaded dataflow

- RISC dataflow

- large-grain dataflow

- complex dataflow

In the regular dataflow model, loops or subprograms that do not display parallelism are relegated to a single thread that executes sequentially, typically with poor performance. In the threaded dataflow model, threads of instructions are processed consecutively by the token matching unit without matching any tokens except for the first instructions of the thread. Instruction on the same thread of execution pass data back and forth via local registers and can be referred to by any of the following instructions in the thread. This improves upon the poor single threaded performance of the dataflow model.

RISC dataflow takes advantage of hydrid data model my using a RISC instruction set and implementing support for multithreaded computation. In the Large-grain dataflow hydrid model a dataflow graph is altered so that the nodes of graph contain both fine grained nodes (or pure dataflow) and macro nodes (or coarse-grain) nodes which are really nodes that represent a sequential block of instructions. Lastly, complex dataflow makes use of complex machine instructions such as storing instructions in vectors so that they can be pipelined and referenced in blocks of data instead of element wise.

Systolic Architecture Currently

There are several limitations to systolic architecture that have held back it's progress:

- They are restricted to applications with strictly regular data dependencies.

- They have a general lack of flexibility.

- They are only suitable, once designed, to support only one application problem, not several.[4]

The majority of advancements in the realm of systolic architecture focus more on the application of the architecture as opposed to advancement of the architecture itself. Several papers propose different applications for systolic architecture:

- One such paper, A Unified Systolic Architecture for Combined Inter and Intra Predictions in H.264/AVC Decoder, focuses on the efficiency of video coding. Presented by three professors, Chih-Hung Li, Chih-Chieh Chen, Wei-Chi Su, of the Cheng-Kung University in Taiwain, this 2000 paper presents an increase in hardware utilization and minimization of cost using a combination of inter and intra predictions. These predictions are produced via a re-programmable FIR filter. A systolic array is used in the further implementation of this process. The three conclude that the use of systolic architecture greatly reduce the cost of processing and improved performance. They argue that their design, in comparison to other designs, produces a lower cost and power (but a higher throughput). [5]

- Another paper, High-Speed Systolic Architectures for Finite Field Inversion and Division, written in combination by Dilip V. Sarwate of University of Illinois at Urbana-Champaign and Zhiyuan Yan of Lehigh University. The two propose the use of systolic architectures for finite field inversion and division. They claim that the systolic architecture shows a marked performance when compared to other, previously used, architectures, achieving an O(m2) area-time complexity, O(m) latency and a critical path delay on two logic gates. [6]

References and Links

- Papadopoulos, G. M. and Culler, D. E. 1990. Monsoon: an explicit token-store architecture. SIGARCH Comput. Archit. News 18, 3a (Jun. 1990), 82-91. DOI= http://doi.acm.org/10.1145/325096.325117

- http://comjnl.oxfordjournals.org/cgi/content/abstract/33/3/230

- http://www.informatik.uni-augsburg.de/~ungerer/dataflow/JPDCPdataflow.ps

- 2001. Asynchrony in parallel computing: from dataflow to multithreading. In Progress in Computer Research, F. Columbus, Ed. Nova Science Publishers, Commack, NY, 1-33. http://portal.acm.org/citation.cfm?id=506175&dl=ACM&coll=ACM