CSC/ECE 506 Spring 2010/ch 12 PP: Difference between revisions

| (36 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=Interconnection Networks= | =Interconnection Networks= | ||

==Development of Interconnection Structures== | |||

Advances in multiprocessors, parallel computing & networking and parallel computer architectures demand very high performance from interconnection networks. Due to this, interconnection network structure has changed over time, trying to meet higher bandwidths and performance. | |||

Number of applications requiring interconnection networks are continuously growing. These include backplane buses and system area networks; telephone switches; internal networks for asynchronous transfer mode and Internet Protocol switches; processor/memory interconnects for vector supercomputers; interconnection networks for multicomputers and distributed shared-memory multiprocessors; clusters of workstations and personal computers; local area networks; wide area computer networks and networks for industrial applications. Hence, the demand for higher network bandwidth, scalability and reliability have led to new developments in network structures. | |||

==Choosing the “Best” Network== | ==Choosing the “Best” Network== | ||

While there is no best network that would work well for all applications, it greatly depends on the given application and the parallel system at hand. The following are some of the factors affect the choice of interconnection network. | While there is no best network that would work well for all applications, it greatly depends on the given application and the parallel system at hand. The following are some of the factors that affect the choice of interconnection network. | ||

1. '''Performance Requirements:''' Minimize message latency, avoid network from saturating (unable to deliver messages injected by nodes) and increase throughput of the network. | 1. '''Performance Requirements:''' Minimize message latency, avoid network from saturating (unable to deliver messages injected by nodes) and increase throughput of the network. | ||

| Line 26: | Line 30: | ||

10. '''Cost Constraints:''' The “best” network might be too expensive. Alternative design considerations are important to meet cost constraints. | 10. '''Cost Constraints:''' The “best” network might be too expensive. Alternative design considerations are important to meet cost constraints. | ||

==Classification of Interconnection networks== | ==Classification of Interconnection networks== | ||

| Line 36: | Line 39: | ||

''Figure 1: Classification of Interconnection Networks'' | |||

===Shared-Medium Networks=== | ===Shared-Medium Networks=== | ||

| Line 46: | Line 49: | ||

[[Image:Ring.gif]] | [[Image:Ring.gif]] | ||

''Figure 2: Token Ring'' | |||

'''Implementation examples:''' | '''Implementation examples:''' | ||

*IBM token ring: Supports bandwidth of 4 or 16 Mbps based on coaxial tube | *'''IBM token ring:''' Supports bandwidth of 4 or 16 Mbps based on coaxial tube | ||

*FDDI: Bandwidth of 100Mbps using fiber optics | *'''FDDI:''' Bandwidth of 100Mbps using fiber optics | ||

| Line 59: | Line 64: | ||

[[Image:Token_bus.gif]] | [[Image:Token_bus.gif]] | ||

''Figure3: Token Bus'' | |||

Nodes are physically connected as a bus, but logically form a ring with tokens passed around to determine the turns for sending. A token is passed around the network nodes and only the node possessing the token may transmit. If a node doesn't have anything to send, the token is passed on to the next node on the virtual ring. | Nodes are physically connected as a bus, but logically form a ring with tokens passed around to determine the turns for sending. A token is passed around the network nodes and only the node possessing the token may transmit. If a node doesn't have anything to send, the token is passed on to the next node on the virtual ring. | ||

| Line 64: | Line 70: | ||

'''Implementation examples:''' | '''Implementation examples:''' | ||

*ARCNET: Attached Resource Computer NETwork | *'''ARCNET:''' Attached Resource Computer NETwork | ||

| Line 71: | Line 77: | ||

[[Image:Backplane.jpg]] | [[Image:Backplane.jpg]] | ||

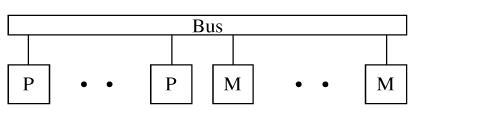

''Figure3: Backplane Bus'' | |||

Simplest interconnection structure for bus-based parallel computers. Commonly used to connect processors and memory modules to form UMA architecture. Many techniques are used for information transfers like synchronous, asynchronous, multiplexed and non-multiplexed. | Simplest interconnection structure for bus-based parallel computers. Commonly used to connect processors and memory modules to form UMA architecture. Many techniques are used for information transfers like synchronous, asynchronous, multiplexed and non-multiplexed. | ||

| Line 76: | Line 84: | ||

'''Implementation examples:''' | '''Implementation examples:''' | ||

*Gigaplane used in Sun Ultra Enterprise X000 Server (ca. 1996): 2.68 Gbyte/s peak, 256 bits data, 42 bits address, split transaction protocol, 83.8 MHz clock | *Gigaplane used in '''Sun Ultra Enterprise X000 Server''' (ca. 1996): 2.68 Gbyte/s peak, 256 bits data, 42 bits address, split transaction protocol, 83.8 MHz clock | ||

*DEC AlphaServer8X00, i.e. 8200 & 8400 (ca. 1995): 2.1 Gbyte/s, 256 bits data, 40 bits address, split transaction protocol, 100 MHz clock (1 foot length) | *'''DEC AlphaServer8X00''', i.e. 8200 & 8400 (ca. 1995): 2.1 Gbyte/s, 256 bits data, 40 bits address, split transaction protocol, 100 MHz clock (1 foot length) | ||

*SGI PowerPath -2 (ca. 1993): 1.2 Gbyte/s, 256 bits data, 40 bits address, 6 bits control, split transaction protocol, 47.5 MHz clock | *'''SGI PowerPath-2 (ca. 1993):''' 1.2 Gbyte/s, 256 bits data, 40 bits address, 6 bits control, split transaction protocol, 47.5 MHz clock | ||

*HP9000 Multiprocessor Memory Bus (ca. 1993): 1Gbyte/s, 128 buts data, 64 bit address, 13 inches, pipelined bus, 60 MHz clock | *'''HP9000 Multiprocessor Memory Bus (ca. 1993):''' 1Gbyte/s, 128 buts data, 64 bit address, 13 inches, pipelined bus, 60 MHz clock | ||

| Line 90: | Line 98: | ||

---- | ---- | ||

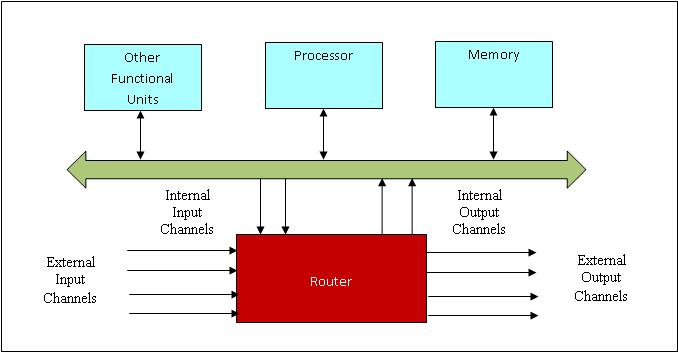

Bus based systems are not '''scalable''' since bus becomes a bottleneck when more processors are added. '''Direct network or point-to-point network''' scales well with large number of processors. A direct network consists of a set of nodes, each directly connected to a subset of other nodes. | Bus based systems are not '''scalable''' since bus becomes a bottleneck when more processors are added. '''Direct network or point-to-point network''' scales well with large number of processors. A direct network consists of a set of nodes, each directly connected to a subset of other nodes. ''Figure 4'' shows a generic node. | ||

[[Image:Node.jpg]] | [[Image:Node.jpg]] | ||

Each Router is connected to its neighbor’s routers through a unidirectional or bidirectional channel and handles the message communication among nodes. | |||

''Figure4: A generic node'' | |||

Each '''Router''' is connected to its neighbor’s routers through a '''unidirectional''' or '''bidirectional''' channel and handles the message communication among nodes. '''Internal channels''' connect local memory/processor to the router. '''External channels''' are used for communication between the routers. A direct network is formed by connecting input channels of one node to output channels of other nodes. Every input channel is paired with a corresponding output channel and there are many ways to interconnect these nodes such that every node in the network is able to reach every other node. | |||

Network topology is '''Orthogonal''' if and only if nodes can be arranged in a orthogonal n-dimensional space, and every link can be arranged in such a way that it produces a displacement in a single dimension. | |||

====Strictly Orthogonal Topologies==== | |||

In a strictly orthogonal topology, every node has at least one link crossing each dimension. | |||

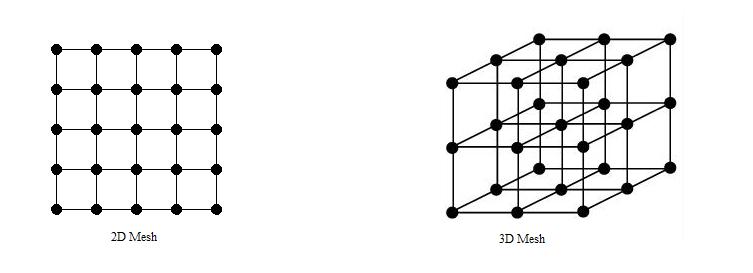

=====Mesh===== | |||

[[Image:2d3d.jpg]] | |||

''Figure5: 2D & 3D Mesh topologies'' | |||

'''Implementation Examples:''' | |||

* '''Intel Paragon:''' 16 rows and 16 columns of nodes, with a 2D mesh connecting them, 130 MB/sec for 1 MB messages, with a latency of around 100 usecs. | |||

* '''MIT Reliable Router:''' 2-D Mesh, 23-bit links (16- bit data), 200 MHz, 400 M bytes/s per link per direction, bidirectional signaling, reliable transmission. | |||

* '''MIT M-Machine:''' 3-D Mesh, 800 M bytes/s for each network channel. | |||

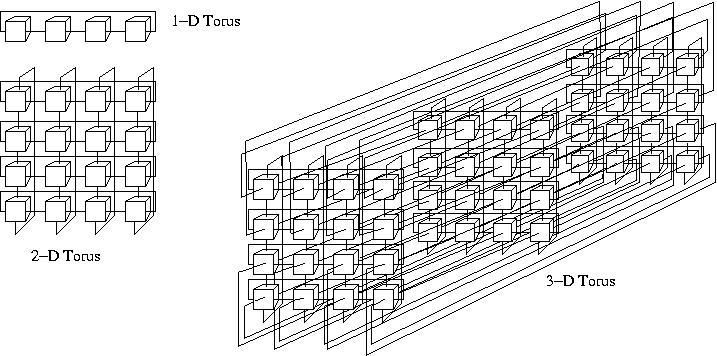

=====Torus===== | |||

[[Image:Torus_123D.jpg]] | |||

''Figure6: 1D, 2D & 3D Torus topologies'' | |||

'''Implementation Examples:''' | |||

*'''Chaos Router:''' 2-D torus topology, bidirectional 8-bit links, 180 MHz, 260 Mbytes/s in each direction. | |||

*'''Cray T3E:''' Bidirectional 3-D torus, 14-bit data in each direction, 375 MHz link speed, 600 Mbytes/s in each direction. | |||

*'''Cray T3D:''' Bidirectional 3-D torus, up to 1024 nodes (8 x 16 x 8), 24-bit links (16-bit data, 8-bit control), 150 MHz, 300 Mbytes/s per link. | |||

=====Hypercube===== | |||

[[Image:hyper.jpg]] | |||

''Figure7: Hypercube'' | |||

'''Implementation Examples:''' | |||

* '''Intel iPSC-2 Hypercube:''' Binary hypercube topology, bit-serial channels at 2.8 Mbytes/s. | |||

====Other Topologies==== | |||

In a '''Weakly Orthogonal''' topology, some nodes may not have any link in some dimensions. | |||

=====Tree===== | |||

Tree has a '''root node''' connected to a certain number of descendant nodes. A node with no descendants is a '''leaf node'''. If the distance from every node to the root is same, tree is '''balanced'''. | |||

[[Image:tree1.jpg]] | |||

''Figure8: Tree'' | |||

Disadvantage of this structure is that network is entirely dependent on the trunk which is the main backbone of the network. If that has to fail then the entire network would fail. Also, it gets difficult to configure after a certain point. | |||

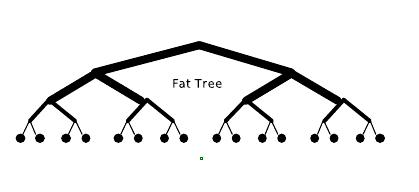

'''Fat Tree''', unlike the normal tree, which has "skinny" links all over, the links become "fatter" as one moves up the tree towards the root. By judiciously choosing the fatness of links, the network can be tailored to efficiently use any bandwidth. | |||

[[Image:Fat_tree.jpg]] | |||

''Figure9: Fat Tree'' | |||

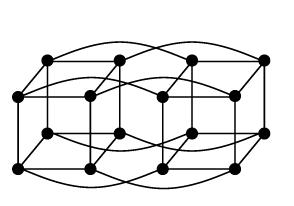

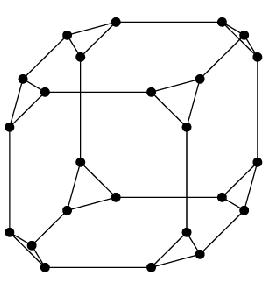

=====Cube-Connected Cycles===== | |||

Cube-Connected cycles can be considered as an n-dimensional hypercube of virtual nodes, where each node is a ring with n nodes, for a total of n2^n nodes. | |||

[[Image:Cubeconnectedcycles.jpg]] | |||

''Figure10: Cube-Connected Cycles'' | |||

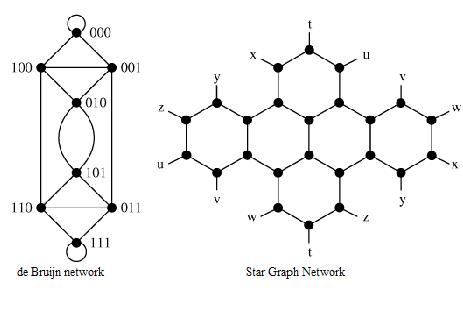

=====de Bruijn and Star Graph Networks===== | |||

[[Image:De_star.jpg]] | |||

''Figure11: de Bruijn and Star Graph Networks'' | |||

De Bruijn and Star Graphs are two well known topologies proposed to minimize the network diameter for a given number of nodes and node degree. | |||

===Indirect Networks=== | |||

---- | |||

Indirect networks do not provide a direct connection among nodes; instead communication has to be carried out through switches. Each node has a network adapter that connects to a network switch. Regular topologies have a regular connection pattern between switches whereas irregular topologies do not follow ant predefined pattern. | |||

====Regular Topologies==== | |||

=====Crossbar Network===== | |||

'''Crossbar networks''' allow many processors to communicate simultaneously without contention. It can be defined as a switching network with N inputs and M outputs, which allows up to min{N,M} one-to-one interconnections without contention. | |||

[[Image:Cross_bar.jpg]] | |||

''Figure12: Crossbar Networks'' | |||

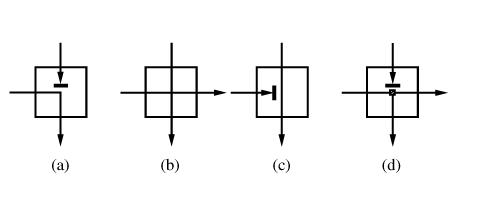

Each switch point for a crossbar with distributed control can have '''four states''' as shown in ''Figure 13''. (a) shows that input from the row containing the switch has been granted access to the output while input from the upper row requesting the same output has been blocked. (b) shows that both inputs have been granted access since they requested different outputs. (c) shows that the upper input has been grated access and the same row input is blocked. (d) is required if multicasting (one-to-many communication) is supported. | |||

[[Image:Switch_states.jpg]] | |||

''Figure13: Four Switch States'' | |||

=====Multistage Interconnection Network===== | |||

'''Multistage interconnection networks (MINs)''' connect devices through a number of switch '''stages''', where each switch is a crossbar network. They number of stages and connection patterns determine the routing capacity. | |||

Figure below shows a generic MIN with N inputs, M outputs and g stages. | |||

[[Image:MIN.jpg]] | |||

''Figure14: A generic MIN'' | |||

MINs can be divided into 3 categories depending on the availability of paths: | |||

* '''Blocking:''' A connection between a free input/output is not always possible because of conlicts with the existing connections. But, multiple paths are provided to reduce conflicts and increase fault tolerance. | |||

*'''Nonblocking:''' Any input port can be connected to any free output port without affecting the existing connections. | |||

*'''Rearrangeable:''' Any input port can be connected to any free output port. However, existing connections mat require rearrangement of paths. | |||

Depending on the kind of channels and switches, MINs can be divided into two classes: '''Unidirectional MINs''' and '''Bidirectional MINs.''' | |||

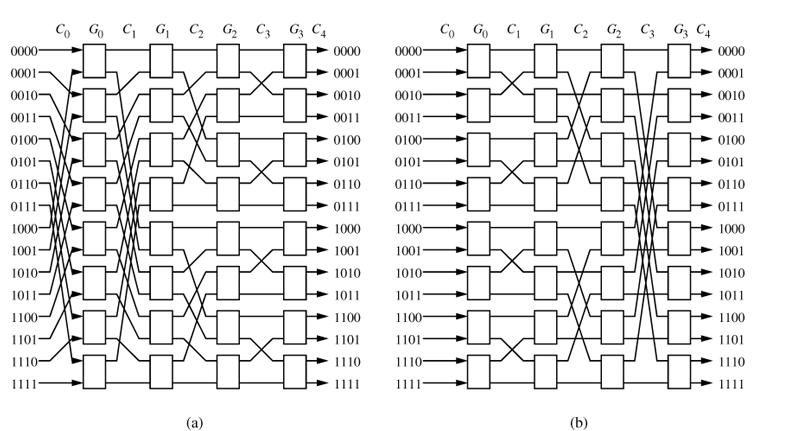

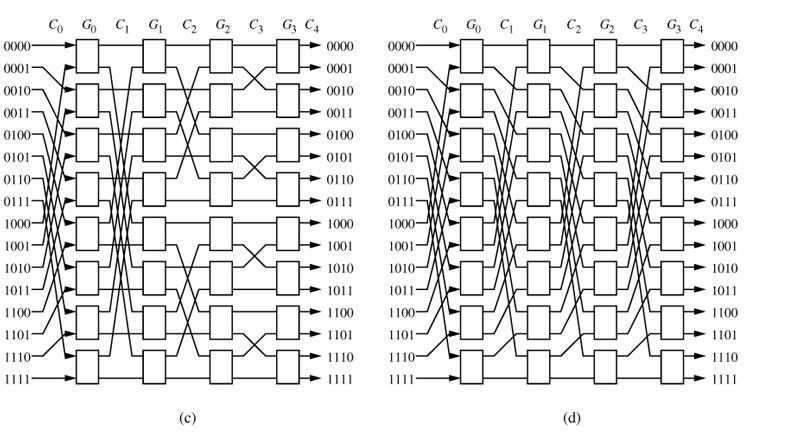

Figures below shows 4 unidirectional MINs. They differ in connection patterns. | |||

[[Image:Min1.jpg]] | |||

Figure15: Two 16 x 16 unidirectional multistage interconnection networks: (a) '''Baseline Network''' (b) '''Butterfly Network''' | |||

[[Image:Min2.jpg]] | |||

Figure16: Two 16 x 16 unidirectional multistage interconnection networks: (a) '''Cube Network''' (b) '''Omega Network''' | |||

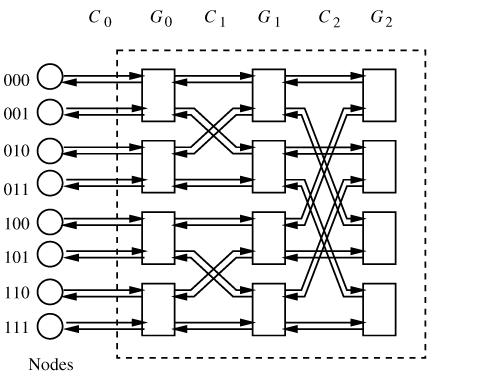

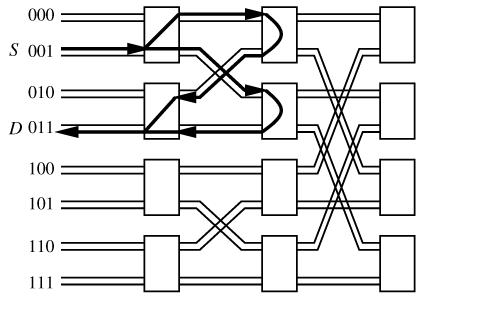

Following figures show an 8-node '''bidirectional butterfly''' MIN and alternate paths in the same. | |||

[[Image:Bi_butterfly.jpg]] | |||

''Figure17: Bidirectional Butterfly Multistage Interconnection Network '' | |||

[[Image:Bi_butterfly2.jpg]] | |||

''Figure18: An Alternate path in a Bidirectional Butterfly Multistage Interconnection Network '' | |||

'''Implementation examples of Indirect Networks:''' | |||

* '''Myricom Myrinet:''' Supports regular and irregular topologies, 8 x 8 crossbar switch, 9-bit channels, full-duplex, 160 Mbytes/s per link. | |||

*'''Thinking Machines CM-5:''' Fat tree topology, 4-bit bidirectional channels at 40 MHz, aggregate bandwidth in each direction of 20 Mbytes/s. | |||

*'''Inmos C104:''' Supports regular and irregular topologies, 32 x 32 crossbar switch, serial links, 100 Mbits/s per link. | |||

*'''IBM SP2:''' Crossbar switches supporting Omega network topologies with bidirectional, 16-bit channels at 150 MHz, 300 Mbytes/s in each direction. | |||

*'''SGI SPIDER:''' Router with 20-bit bidirectional channels operating on both edges, 200 MHz clock aggregate row data rate of 1Gbyte/s. Offers support for configurations as nonblocking multistage network topologies as well as irregular topologies. | |||

*'''Tandem ServerNet:''' Irregular topologies, 8-bit bidirectional channels at 50 MHz, 50 Mbytes/s link | |||

===Hybrid Networks=== | |||

---- | |||

'''Hybrid networks''' combine mechanisms from shared-medium and direct or indirect networks. They increase bandwidth with respect to shared-medium networks and reduce distance between nodes with respect to direct networks. | |||

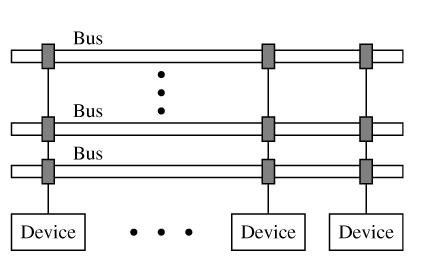

====Multiple Backplane Buses==== | |||

By having multiple buses as shown in Figure __, network bandwidth can be increased as compared to shared-medium networks. Shared-Medium networks pose a bottleneck since they can support only a small number of devices, have limited distance and are not scalable. | |||

[[Image:Hybrid1.jpg]] | |||

''Figure19: Multiple Backplane Buses '' | |||

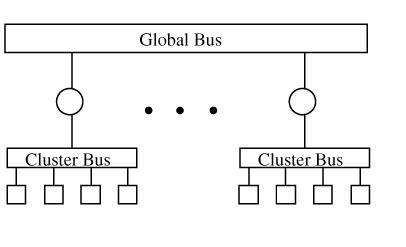

====Hierarchical Networks==== | |||

Different buses are interconnected by routers and bridges to transfer information. Routers/bridges filter the network traffic by checking the destination address of the message. This approach is used in bridged LAN. | |||

[[Image:Hybrid2.jpg]] | |||

''Figure20: Hierarchical Networks '' | |||

====Cluster-Based Networks==== | |||

Cluster based network combine advantages of buses and point-to-point links by using buses at the lower level in the hierarchy to form clusters and a direct network topology connecting the clusters at the higher level. | |||

[[Image:Hybrid3.jpg]] | |||

''Figure21: Cluster-Based Networks '' | |||

====Other Hypergraph Topologies==== | |||

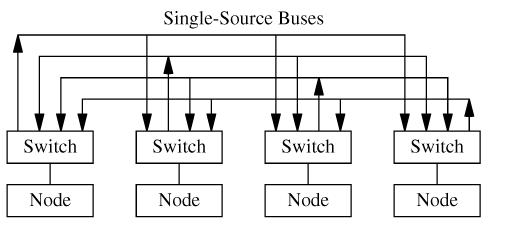

''Figure 22'' shows a distributed crossbar switch hypermesh. Each bus is driven by a single node. Bandwidth scales with the number of nodes. | |||

[[Image:Hybrid4.jpg]] | |||

''Figure22: Distributed Crossbar Switch Hypermesh Networks '' | |||

=Routing Algorithms= | |||

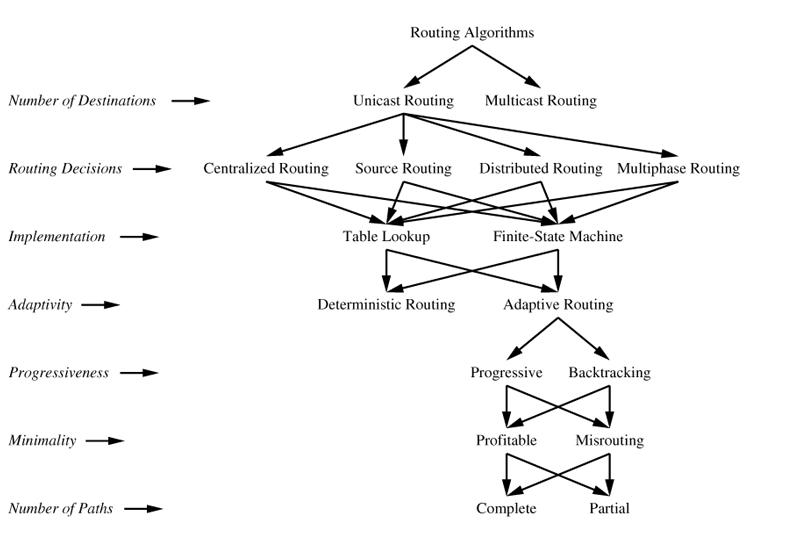

Figure below classifies routing algorithms according to several criteria, criteria being listed on the left. | |||

[[Image:Routing_algos.jpg]] | |||

'''Number of Destinations:''' In '''Unicast''' Routing packets have a single destination and multiple destinations in '''Multicast''' Routing. | |||

'''Routing Decisions:''' The path can either be established by a centralized controller ('''Centralized Routing''') at the source node prior to packet injection ('''Source Routing''') or determined in a distributed manner while the packet travels across the network ('''Distributed Routing'''). Hybrid Schemes are called '''Multiphase Routing''' in which the source node computes some destination nodes. | |||

'''Implementation:''' Routing algorithms can be implemented either by looking at a routing table ('''Table Lookup''') or executing a routing algorithm in software/hardware according to a '''Finite-State Machine'''. | |||

'''Adaptivity:''' '''Deterministic routing''' algorithms always supply the same path between a given source/destination pair. '''Adaptive routing''' algorithms use information abput network traffic and/or channel status to avoid congested or faulty regions of the network. | |||

''' Progressiveness:''' Adaptive algorithms can be progressive or backtracking. '''Progressive''' algorithms move the header forward, reserving a new channel at each routing operation. '''Backtracking''' allow the header to backtrack, releasing previously reserved channels. Backtracking algorithms are used for fault-tolerant routing. | |||

'''Minimality:''' '''Profitable''' routing algorithms only supply channels that bring the packet closer to its destination. '''Misrouting''' may also supply channels that send packets away from destinations. | |||

'''Number of paths:''' Algorithms can be classified according to the number of alternative paths as '''Completely Adaptive''' (or fully adaptive) or '''Partially Adaptive'''. | |||

==Deadlock== | |||

This is a situation where each packet waits for the other to finish, and since a waiting activity cannot finish, deadlock lasts forever. For example if each packet whose header has not yet arrived requests for buffers, and they are full, they will never get access since packet cannot be moved till the header arrives. This causes a deadlock between header requesting space but not getting it due to packet not being able to process. | |||

==Starvation== | |||

This situation arises when resources requested by the packet are always granted to other packet. This happened when incorrect resource assignment scheme is used to arbitrate in the case of conflict. | |||

==Livelock== | |||

Even if the packets are not blocked, they may not reach their destination node because the channels required to do so are occupied by other packets. | |||

=References= | |||

* http://www.boardguess.com/universitiesdeemed-universities/ignou/ignou-bca/assignments-bca/sem-i/100-100.htm | |||

* Interconnection Networks: An Engineering Approach by Duato, Jośe. Yalamanchili, Sudhakar. Ni, Lionel M. (Referencing many pictures and real implementations) | |||

* http://www.boardguess.com/universitiesdeemed-universities/ignou/ignou-bca/assignments-bca/sem-i/100-100.htm | |||

* http://www.cse.iitk.ac.in/~dheeraj/cs425/lec08.html | |||

* http://www.cs.nmsu.edu/~pfeiffer/classes/573/notes/topology.html | |||

* http://kbs.cs.tu-berlin.de/projects/tusci_engl.htm | |||

* http://www.top500.org/2007_overview_recent_supercomputers/sci | |||

* http://www.wikipedia.org/ | |||

Latest revision as of 21:22, 2 May 2010

Interconnection Networks

Development of Interconnection Structures

Advances in multiprocessors, parallel computing & networking and parallel computer architectures demand very high performance from interconnection networks. Due to this, interconnection network structure has changed over time, trying to meet higher bandwidths and performance.

Number of applications requiring interconnection networks are continuously growing. These include backplane buses and system area networks; telephone switches; internal networks for asynchronous transfer mode and Internet Protocol switches; processor/memory interconnects for vector supercomputers; interconnection networks for multicomputers and distributed shared-memory multiprocessors; clusters of workstations and personal computers; local area networks; wide area computer networks and networks for industrial applications. Hence, the demand for higher network bandwidth, scalability and reliability have led to new developments in network structures.

Choosing the “Best” Network

While there is no best network that would work well for all applications, it greatly depends on the given application and the parallel system at hand. The following are some of the factors that affect the choice of interconnection network.

1. Performance Requirements: Minimize message latency, avoid network from saturating (unable to deliver messages injected by nodes) and increase throughput of the network.

2. Scalability: Adding more processors should increase I/O bandwidth, Memory Bandwidth and Network Bandwidth should increase proportionally.

3. Incremental expandability: Should provide incremental expandability, allowing addition of a small number of nodes while minimizing resource wastage. For example, a network designed for number of processors to be a power of 4 makes it difficult to expand.

4. Partitionability: May be required for security reasons. If network can be partitioned into smaller systems, traffic produced by one user will not affect the others.

5. Simplicity: Simple Design lead to higher clock frequencies and better performance.

6. Distance Span: Maximum distance between nodes should be small.

7. Physical Constraints: Complexity of the connection is limited by the maximum wire density possible and by the maximum pin count. Factors like packaging, wiring, operating temperature should be taken into account since they pose many limitations on designs.

8. Reliability and Reparability: Should allow easy upgrades and repairs. Should minimize faults or detect them and correct them.

9. Expected Workloads: If kind of application is known in advance, network can be optimized for it. If not, network should be robust, design paramenters should be selected to perform well over a wide range of traffic conditions.

10. Cost Constraints: The “best” network might be too expensive. Alternative design considerations are important to meet cost constraints.

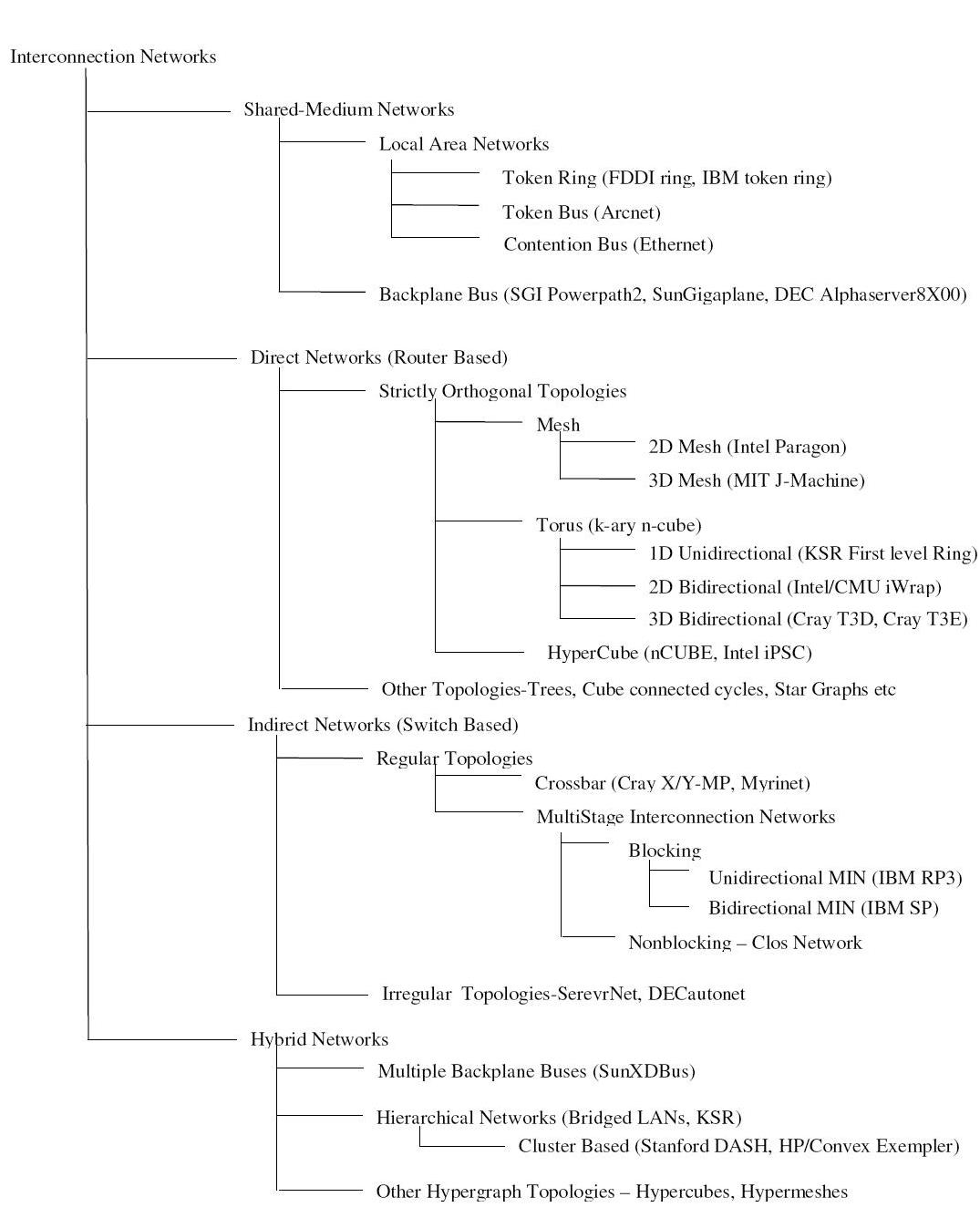

Classification of Interconnection networks

Figure 1 classifies Interconnection Networks based on network topologies. For each class, figure shows hierarchy of subclasses with some real implementations in parenthesis. In Shared-Medium Networks, transmission medium is shared by all devices. Direct Networks have point to point links directly connecting each communicating device to a subset of other devices in the network. Indirect networks connect devices using one or more switches. Hybrid networks are a combination of the above approaches.

Figure 1: Classification of Interconnection Networks

Token Ring

Figure 2: Token Ring

Implementation examples:

- IBM token ring: Supports bandwidth of 4 or 16 Mbps based on coaxial tube

- FDDI: Bandwidth of 100Mbps using fiber optics

Token Bus

Figure3: Token Bus

Nodes are physically connected as a bus, but logically form a ring with tokens passed around to determine the turns for sending. A token is passed around the network nodes and only the node possessing the token may transmit. If a node doesn't have anything to send, the token is passed on to the next node on the virtual ring.

Implementation examples:

- ARCNET: Attached Resource Computer NETwork

Backplane Bus

Figure3: Backplane Bus

Simplest interconnection structure for bus-based parallel computers. Commonly used to connect processors and memory modules to form UMA architecture. Many techniques are used for information transfers like synchronous, asynchronous, multiplexed and non-multiplexed.

Implementation examples:

- Gigaplane used in Sun Ultra Enterprise X000 Server (ca. 1996): 2.68 Gbyte/s peak, 256 bits data, 42 bits address, split transaction protocol, 83.8 MHz clock

- DEC AlphaServer8X00, i.e. 8200 & 8400 (ca. 1995): 2.1 Gbyte/s, 256 bits data, 40 bits address, split transaction protocol, 100 MHz clock (1 foot length)

- SGI PowerPath-2 (ca. 1993): 1.2 Gbyte/s, 256 bits data, 40 bits address, 6 bits control, split transaction protocol, 47.5 MHz clock

- HP9000 Multiprocessor Memory Bus (ca. 1993): 1Gbyte/s, 128 buts data, 64 bit address, 13 inches, pipelined bus, 60 MHz clock

Direct Networks

Bus based systems are not scalable since bus becomes a bottleneck when more processors are added. Direct network or point-to-point network scales well with large number of processors. A direct network consists of a set of nodes, each directly connected to a subset of other nodes. Figure 4 shows a generic node.

Figure4: A generic node

Each Router is connected to its neighbor’s routers through a unidirectional or bidirectional channel and handles the message communication among nodes. Internal channels connect local memory/processor to the router. External channels are used for communication between the routers. A direct network is formed by connecting input channels of one node to output channels of other nodes. Every input channel is paired with a corresponding output channel and there are many ways to interconnect these nodes such that every node in the network is able to reach every other node.

Network topology is Orthogonal if and only if nodes can be arranged in a orthogonal n-dimensional space, and every link can be arranged in such a way that it produces a displacement in a single dimension.

Strictly Orthogonal Topologies

In a strictly orthogonal topology, every node has at least one link crossing each dimension.

Mesh

Figure5: 2D & 3D Mesh topologies

Implementation Examples:

- Intel Paragon: 16 rows and 16 columns of nodes, with a 2D mesh connecting them, 130 MB/sec for 1 MB messages, with a latency of around 100 usecs.

- MIT Reliable Router: 2-D Mesh, 23-bit links (16- bit data), 200 MHz, 400 M bytes/s per link per direction, bidirectional signaling, reliable transmission.

- MIT M-Machine: 3-D Mesh, 800 M bytes/s for each network channel.

Torus

Figure6: 1D, 2D & 3D Torus topologies

Implementation Examples:

- Chaos Router: 2-D torus topology, bidirectional 8-bit links, 180 MHz, 260 Mbytes/s in each direction.

- Cray T3E: Bidirectional 3-D torus, 14-bit data in each direction, 375 MHz link speed, 600 Mbytes/s in each direction.

- Cray T3D: Bidirectional 3-D torus, up to 1024 nodes (8 x 16 x 8), 24-bit links (16-bit data, 8-bit control), 150 MHz, 300 Mbytes/s per link.

Hypercube

Figure7: Hypercube

Implementation Examples:

- Intel iPSC-2 Hypercube: Binary hypercube topology, bit-serial channels at 2.8 Mbytes/s.

Other Topologies

In a Weakly Orthogonal topology, some nodes may not have any link in some dimensions.

Tree

Tree has a root node connected to a certain number of descendant nodes. A node with no descendants is a leaf node. If the distance from every node to the root is same, tree is balanced.

Figure8: Tree

Disadvantage of this structure is that network is entirely dependent on the trunk which is the main backbone of the network. If that has to fail then the entire network would fail. Also, it gets difficult to configure after a certain point.

Fat Tree, unlike the normal tree, which has "skinny" links all over, the links become "fatter" as one moves up the tree towards the root. By judiciously choosing the fatness of links, the network can be tailored to efficiently use any bandwidth.

Figure9: Fat Tree

Cube-Connected Cycles

Cube-Connected cycles can be considered as an n-dimensional hypercube of virtual nodes, where each node is a ring with n nodes, for a total of n2^n nodes.

Figure10: Cube-Connected Cycles

de Bruijn and Star Graph Networks

Figure11: de Bruijn and Star Graph Networks

De Bruijn and Star Graphs are two well known topologies proposed to minimize the network diameter for a given number of nodes and node degree.

Indirect Networks

Indirect networks do not provide a direct connection among nodes; instead communication has to be carried out through switches. Each node has a network adapter that connects to a network switch. Regular topologies have a regular connection pattern between switches whereas irregular topologies do not follow ant predefined pattern.

Regular Topologies

Crossbar Network

Crossbar networks allow many processors to communicate simultaneously without contention. It can be defined as a switching network with N inputs and M outputs, which allows up to min{N,M} one-to-one interconnections without contention.

Figure12: Crossbar Networks

Each switch point for a crossbar with distributed control can have four states as shown in Figure 13. (a) shows that input from the row containing the switch has been granted access to the output while input from the upper row requesting the same output has been blocked. (b) shows that both inputs have been granted access since they requested different outputs. (c) shows that the upper input has been grated access and the same row input is blocked. (d) is required if multicasting (one-to-many communication) is supported.

Figure13: Four Switch States

Multistage Interconnection Network

Multistage interconnection networks (MINs) connect devices through a number of switch stages, where each switch is a crossbar network. They number of stages and connection patterns determine the routing capacity.

Figure below shows a generic MIN with N inputs, M outputs and g stages.

Figure14: A generic MIN

MINs can be divided into 3 categories depending on the availability of paths:

- Blocking: A connection between a free input/output is not always possible because of conlicts with the existing connections. But, multiple paths are provided to reduce conflicts and increase fault tolerance.

- Nonblocking: Any input port can be connected to any free output port without affecting the existing connections.

- Rearrangeable: Any input port can be connected to any free output port. However, existing connections mat require rearrangement of paths.

Depending on the kind of channels and switches, MINs can be divided into two classes: Unidirectional MINs and Bidirectional MINs.

Figures below shows 4 unidirectional MINs. They differ in connection patterns.

Figure15: Two 16 x 16 unidirectional multistage interconnection networks: (a) Baseline Network (b) Butterfly Network

Figure16: Two 16 x 16 unidirectional multistage interconnection networks: (a) Cube Network (b) Omega Network

Figure16: Two 16 x 16 unidirectional multistage interconnection networks: (a) Cube Network (b) Omega Network

Following figures show an 8-node bidirectional butterfly MIN and alternate paths in the same.

Figure17: Bidirectional Butterfly Multistage Interconnection Network

Figure18: An Alternate path in a Bidirectional Butterfly Multistage Interconnection Network

Implementation examples of Indirect Networks:

- Myricom Myrinet: Supports regular and irregular topologies, 8 x 8 crossbar switch, 9-bit channels, full-duplex, 160 Mbytes/s per link.

- Thinking Machines CM-5: Fat tree topology, 4-bit bidirectional channels at 40 MHz, aggregate bandwidth in each direction of 20 Mbytes/s.

- Inmos C104: Supports regular and irregular topologies, 32 x 32 crossbar switch, serial links, 100 Mbits/s per link.

- IBM SP2: Crossbar switches supporting Omega network topologies with bidirectional, 16-bit channels at 150 MHz, 300 Mbytes/s in each direction.

- SGI SPIDER: Router with 20-bit bidirectional channels operating on both edges, 200 MHz clock aggregate row data rate of 1Gbyte/s. Offers support for configurations as nonblocking multistage network topologies as well as irregular topologies.

- Tandem ServerNet: Irregular topologies, 8-bit bidirectional channels at 50 MHz, 50 Mbytes/s link

Hybrid Networks

Hybrid networks combine mechanisms from shared-medium and direct or indirect networks. They increase bandwidth with respect to shared-medium networks and reduce distance between nodes with respect to direct networks.

Multiple Backplane Buses

By having multiple buses as shown in Figure __, network bandwidth can be increased as compared to shared-medium networks. Shared-Medium networks pose a bottleneck since they can support only a small number of devices, have limited distance and are not scalable.

Figure19: Multiple Backplane Buses

Hierarchical Networks

Different buses are interconnected by routers and bridges to transfer information. Routers/bridges filter the network traffic by checking the destination address of the message. This approach is used in bridged LAN.

Figure20: Hierarchical Networks

Cluster-Based Networks

Cluster based network combine advantages of buses and point-to-point links by using buses at the lower level in the hierarchy to form clusters and a direct network topology connecting the clusters at the higher level.

Figure21: Cluster-Based Networks

Other Hypergraph Topologies

Figure 22 shows a distributed crossbar switch hypermesh. Each bus is driven by a single node. Bandwidth scales with the number of nodes.

Figure22: Distributed Crossbar Switch Hypermesh Networks

Routing Algorithms

Figure below classifies routing algorithms according to several criteria, criteria being listed on the left.

Number of Destinations: In Unicast Routing packets have a single destination and multiple destinations in Multicast Routing.

Routing Decisions: The path can either be established by a centralized controller (Centralized Routing) at the source node prior to packet injection (Source Routing) or determined in a distributed manner while the packet travels across the network (Distributed Routing). Hybrid Schemes are called Multiphase Routing in which the source node computes some destination nodes.

Implementation: Routing algorithms can be implemented either by looking at a routing table (Table Lookup) or executing a routing algorithm in software/hardware according to a Finite-State Machine.

Adaptivity: Deterministic routing algorithms always supply the same path between a given source/destination pair. Adaptive routing algorithms use information abput network traffic and/or channel status to avoid congested or faulty regions of the network.

Progressiveness: Adaptive algorithms can be progressive or backtracking. Progressive algorithms move the header forward, reserving a new channel at each routing operation. Backtracking allow the header to backtrack, releasing previously reserved channels. Backtracking algorithms are used for fault-tolerant routing.

Minimality: Profitable routing algorithms only supply channels that bring the packet closer to its destination. Misrouting may also supply channels that send packets away from destinations.

Number of paths: Algorithms can be classified according to the number of alternative paths as Completely Adaptive (or fully adaptive) or Partially Adaptive.

Deadlock

This is a situation where each packet waits for the other to finish, and since a waiting activity cannot finish, deadlock lasts forever. For example if each packet whose header has not yet arrived requests for buffers, and they are full, they will never get access since packet cannot be moved till the header arrives. This causes a deadlock between header requesting space but not getting it due to packet not being able to process.

Starvation

This situation arises when resources requested by the packet are always granted to other packet. This happened when incorrect resource assignment scheme is used to arbitrate in the case of conflict.

Livelock

Even if the packets are not blocked, they may not reach their destination node because the channels required to do so are occupied by other packets.

References

- Interconnection Networks: An Engineering Approach by Duato, Jośe. Yalamanchili, Sudhakar. Ni, Lionel M. (Referencing many pictures and real implementations)