CSC/ECE 506 Spring 2010/Ch 9/Synchronization: Difference between revisions

No edit summary |

|||

| (69 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Hardware Support For Synchronization== | ==Hardware Support For Synchronization== | ||

===Hardware Implementations=== | ===Hardware Implementations=== | ||

Fetch-and-Increment | Hardware implementations for synchronization traditionally include '''locks''', '''barriers''', and '''mutual exclusion'''. Locks are a block code in a parallel program that is meant to be executed by a single processor or thread. Barriers are different types of synchronization that wait for all threads to reach a specific point in the program. And mutual exclusion is the prevention from the concurrent access of shared data. These types of hardware synchronizations use a method called '''busy-waiting''' or '''spinning''' which prevents threads from continuing to execute. Spinning simply means that a thread continuously checks if it is okay to continue (no other useful work is accomplished by the thread). In the case for a lock, multiple threads are competing for access to the lock. Only one thread is allowed to access the critical code protected by the lock at a time. For barriers, multiple threads reach the barrier at different times and no thread can continue until all threads have reached the barrier. Spinning is implemented in hardware through mutexes or semaphores, which prevent multiple processes from being accessed at the same time. Atomic instructions, which are supported by the processor Instruction Set Architecture (ISA), are typically used for the hardware synchronization mechanism. Examples of these hardware synchronization mechanisms include: Test-and-Set, Fetch-and-Increment, Exchange, Compare-and-Swap. These types of synchronizations are used to provide mutual exclusion. They are also used in lock implementations, such as '''Test-and-Set''' or '''Test-and-Test-and-Set'''. See examples below. | ||

===Software Implementations=== | |||

There are also software synchronizations as well. For example '''ticket locks''' and '''queue-based MCS locks'''. The concept behind ticket locks is that each thread that requests the lock is given a ''ticket number'' and that thread waits until its ticket is being serviced. This is analogous to any kind of line (i.e. waiting in line at a grocery store). Say the checkout line is empty; the first person to get in the line (#1), they get serviced by the cashier. Another person (#2) gets in line behind them before the cashier finished checking the first person out. Then another (#3), and eventually the cashier will service the second person (#2) and (#3). So, think of the people in line as processor threads trying to access a critical section of code, and the cashier decides which thread gets access to the critical section. Queue-based MCS locks use a distributed queue of spinning processes opposed to the ticket locks method of contending for a single counter. So instead of having a single counter variable, the MCS lock assigns a different flag to each thread. It is important to note that both of these software implementations require the underlying hardware support for certain atomic instructions in order to work correctly under contention. Example code for these implementations can be seen below. | |||

==Mutual Exclusion== | |||

Mutual exclusion is implemented through atomic instructions, like the ones mentioned earlier. Below are atomic instructions that can help provide mutual exclusion in a multiprocessor system: | |||

'''Test-and-Set Rx, M''': Read the value stored in memory location M, test the value against a constant, and if they match, write the value in register Rx to memory location M. | |||

'''Fetch-and-Increment M''': Read the value stored in memory location M, increment it, and then store the new value to the memory location. | |||

'''Exchange Rx, M''': Atomically exchange the value at memory location M with the value in register Rx. | |||

'''Compare-and-Swap Rx, Ry, M''': Compare the value at memory location M with the value in register Rx. If they are equal, then store the value in register Ry to M, and copy the value Rx to Ry. | |||

These instructions are typically used for synchronization of threads in multiprocessor systems. These instructions are typically used for locks and other methods for providing mutual exclusion. | |||

==Overhead== | ==Overhead== | ||

===Test and Set=== | |||

The performance of test-and-set degrades significantly as the number of processors increases. There are two reasons for this performance decrease. First, a thread must contend with other threads in order to release the lock. The second reason is that the requests by the spinning threads trying to access the lock slow the lock holder. The overhead of this approach is very minimal. There is not much overhead associated with test-and-set. This approach is very trivial. It is the easiest to implement, but has the worst performance. (The code below come from the solihin text). | |||

lock: test-and-set R1, &lockvar //R1 = lockvar | |||

//if (R1 == 0) lockvar = 1; | |||

bnz R1, lock //jump to lock if R1 != 0 | |||

ret //return to caller | |||

unlock: st &lockvar, #0 // lockvar = 0 | |||

ret // return to caller | |||

===Test and Test and Set=== | |||

The performance of test-and-test-and-set is slightly better than test-and-set. The downside to test-and-test-and-set performance is that there is a separation between detecting that the lock has been released and trying to acquire the lock. The separation allows multiple threads to see that the lock is free, and then the threads that pass the test attempt a test-and-set instruction to try to acquire the lock. The overhead is about the same as test-and-set. There is still not a significant amount of overhead associated with test-and-test-and-set. Performance is sacrificed when overhead is smaller. (The code below comes from the Solihin text). | |||

lock: ld R1, &lockvar // R1 = lockvar | |||

bnz R1, lock // jump to lock if R1 != 0 | |||

t&s R1, &lockvar // R1 = lockvar | |||

// if (R1 == 0) lockvar = 1 | |||

bnz R1, lock // jump to lock if R1 != 0 | |||

ret // return to caller | |||

unlock: st &lockvar #0 // lockvar = 0 | |||

ret // return to caller | |||

===Ticket Lock=== | |||

The overhead for ticket locks is low, however, with contention traffic generated from ticket locks is about the same as test-and-set or test-and-test-and-set. This traffic is due to invalidations from the releasing of the lock. When there is very little contention the ticket lock performs much better than the MCS lock, but when there is high contention MCS locks are better (see MCS lock below). | |||

typedef struct TIXLOCK { | |||

unsigned long next_tix; | |||

unsinged long whose_turn; } | |||

tix_lock_type; | |||

void acquire (long lock_addr) { | |||

unsigned long my_tix; | |||

tix_lock_type* L = (tix_lock_type*)lock_addr; | |||

my_tix = fetch_and_increment((long)&(L->next_tix)); | |||

while(L->whose_turn != my_tix) | |||

pause(proportional_backoff * (my_tix - L->whose_turn)); | |||

} | |||

void release(long lock_addr) { | |||

tix_lock_type* L = (tix_lock_type*)lock_addr; | |||

L->whose_turn+1; | |||

} | |||

===MCS Lock=== | |||

As mentioned above, under high contention MCS locks have less traffic. This is because MCS locks use multiple flags (oppose to a single counter variable) which eliminates many invalidations and reloads. | |||

typedef struct qnode { | |||

struct qnode * next; | |||

boolean locked; } | |||

qnode_typed; | |||

typedef struct MCSLOCK { | |||

qnode_type *q_ptr; } | |||

mcs_lock_type; | |||

void acquire(long lock_addr) { | |||

/* allocated to each lock acquire instance */ | |||

/* and keep permanent until release */ | |||

qnode_type* qnode = allocate_qnode(lock_addr); | |||

mcs_lock_type* ml = (mcs_lock_type*)lock_addr; | |||

qnode_type *pred; | |||

qnode->next = NULL; | |||

pred = (qnode_type *) fetch_and_store((long)&ml->q_ptr, (long)qnode); | |||

if(pred!=NULL) { | |||

qnode->locked = TRUE; | |||

pred->next = qnode; | |||

while(qnode->locked); | |||

} | |||

} | |||

void release(long lock_addr) { | |||

qnode_type* qnode = get_qnode(lock_addr); | |||

mcs_lock_type* ml = (mcs_lock_type*)lock_addr; | |||

if (qnode->next == NULL) { | |||

if (compare_and_swap((long_&ml->q_ptr, (long)qnode, NULL)) return; | |||

while (qnode->next == NULL); | |||

} | |||

qnode->next->locked = FALSE; | |||

} | |||

===API Synchronization=== | |||

There are API's that exist for parallel architectures that provide specific types of synchronization. If the API are used they way they were design, performance can be maximized while minimizing overhead. In general, there is a trade-off between performance and overhead. See [http://software.intel.com/en-us/articles/choosing-appropriate-synchronization-primitives-to-minimize-overhead/ Choosing Appropriate Synchronization Primitives article] | |||

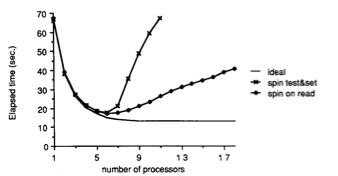

The figure below shows graphically these two methods and there actual performance (time vs number of threads) compared to the ideal desired performance of multiprocessing: | |||

[[Image:Graph2.png]] | |||

==Improved Hardware Primitives== | ==Improved Hardware Primitives== | ||

===Load Locked, Store Conditonal=== | |||

Load Locked(LL) and Store Conditional(SC) are a pair of instructions that are used for lock-free read-modify-write operation. Performance-wise the LL/SC implementation is similar to the test-and-test-and-set, however, it is much simpler with the extra linked registers and it can be used to implement many atomic instructions. The advantage of this method is that there is less wait traffic compared to test-and-test-and-set. | |||

==Barriers== | ==Barriers== | ||

== | |||

===Centralized Barrier=== | |||

http://www-inst.eecs.berkeley.edu/~n252/su06/CS252Midterm_Hermoso.pdf | |||

In a centralized barrier algorithm, each processor updates a counter to indicate that it has arrived at the barrier and then repeatedly polls a flag that is set when all threads have reached the barrier. Once all threads have arrived, each of them is allowed to continue past the barrier. The flag can be a sense reversal flag, to prevent intervention of adjacent barrier operations. A disadvantage to this type of barrier is that it does not scale well with a large number of processors, however, its advantage is that it uses a small amount of memory. See code below: | |||

// Shared data. | |||

barrier_count // not yet arrived | |||

barrier_flag // indicate all have arrived | |||

team_size // number of threads | |||

procedure central_barrier | |||

barrier_flag=team->barrier-flag | |||

new_count = atomic_inc(barrier_count) | |||

if (new_count == team_size) | |||

team->barrier_count = 0 | |||

team->barrier_flag = barrier_flag ^ 1 | |||

else | |||

while (team->barrier_flag == barrier_flag) | |||

===Tree Barrier=== | |||

In tree barriers, each thread is assigned to a node in the tree. The tree structure is such that a parent node notifies each of its child nodes by setting a flag, and similarly the child node responds to its parent node in order to let the parent node know that it has arrived at the barrier. The parent node clears the flag only after ALL of its child nodes have reached the barrier. Eventually all levels of tree will have all its child node reach the barrier, at which point the barrier can be crossed. The code below shows an implementation of a tree barrier: | |||

// Shared data: | |||

typedef struct { volatile boolean *parentflag; | |||

boolean *child_notify[2]; | |||

volatile long havechild; | |||

volatile long childnotready; | |||

boolean wakeup_sense; | |||

} treenode; | |||

treenode shared_array[P]; | |||

Private data for each thread: | |||

volatile int parity; | |||

volatile boolean sense; | |||

treenode *mynode; | |||

procedure tree_barrier | |||

vpid=thread_id; | |||

treenode *mynode_reg; | |||

mynode_reg = cur_thread->mynode; | |||

while (mynode_reg->childnotready); | |||

mynode_reg->childnotready = mynode_reg->havechild; | |||

*mynode_reg->parentflag = False; | |||

if (vpid) | |||

while(mynode_reg->wakeup_sense != cur_thread->sense); | |||

*mynode_reg->child_notify[0] = cur_thread->sense; | |||

*mynode_reg->child_notify[1] = cur_thread->sense; | |||

cur_thread->sense ^= True; | |||

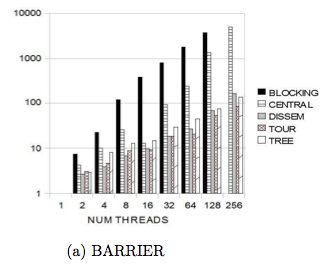

The Figure below shows how each barrier implementation performs with the certain number of threads. The graph shows how long it takes each barrier type to implement the barrier with respect to the number of threads. | |||

[[Image:Graph.png]] | |||

==References== | |||

1. http://www-inst.eecs.berkeley.edu/~n252/su06/CS252Midterm_Hermoso.pdf | |||

2. ftp://ftp.cs.wisc.edu/wwt/ics96_synch.pdf | |||

3. http://www2.cs.uh.edu/~hpctools/pub/iwomp-barrier.pdf | |||

4. http://software.intel.com/en-us/articles/choosing-appropriate-synchronization-primitives-to-minimize-overhead/ | |||

5. http://software.intel.com/en-us/articles/use-synchronization-routines-provided-by-the-threading-api-rather-than-hand-coded-synchronization/ | |||

6. http://software.intel.com/en-us/articles/use-non-blocking-locks-when-possible/ | |||

7. http://www.cs.washington.edu/homes/tom/pubs/spinlock.pdf | |||

8. http://www.cs.utah.edu/avalanche/sync.ps | |||

9. http://en.wikipedia.org/wiki/Mutual_exclusion | |||

10. ''Fundamentals of Parallel Computer Architecture'' - Yan Solihin | |||

Latest revision as of 04:16, 20 April 2010

Hardware Support For Synchronization

Hardware Implementations

Hardware implementations for synchronization traditionally include locks, barriers, and mutual exclusion. Locks are a block code in a parallel program that is meant to be executed by a single processor or thread. Barriers are different types of synchronization that wait for all threads to reach a specific point in the program. And mutual exclusion is the prevention from the concurrent access of shared data. These types of hardware synchronizations use a method called busy-waiting or spinning which prevents threads from continuing to execute. Spinning simply means that a thread continuously checks if it is okay to continue (no other useful work is accomplished by the thread). In the case for a lock, multiple threads are competing for access to the lock. Only one thread is allowed to access the critical code protected by the lock at a time. For barriers, multiple threads reach the barrier at different times and no thread can continue until all threads have reached the barrier. Spinning is implemented in hardware through mutexes or semaphores, which prevent multiple processes from being accessed at the same time. Atomic instructions, which are supported by the processor Instruction Set Architecture (ISA), are typically used for the hardware synchronization mechanism. Examples of these hardware synchronization mechanisms include: Test-and-Set, Fetch-and-Increment, Exchange, Compare-and-Swap. These types of synchronizations are used to provide mutual exclusion. They are also used in lock implementations, such as Test-and-Set or Test-and-Test-and-Set. See examples below.

Software Implementations

There are also software synchronizations as well. For example ticket locks and queue-based MCS locks. The concept behind ticket locks is that each thread that requests the lock is given a ticket number and that thread waits until its ticket is being serviced. This is analogous to any kind of line (i.e. waiting in line at a grocery store). Say the checkout line is empty; the first person to get in the line (#1), they get serviced by the cashier. Another person (#2) gets in line behind them before the cashier finished checking the first person out. Then another (#3), and eventually the cashier will service the second person (#2) and (#3). So, think of the people in line as processor threads trying to access a critical section of code, and the cashier decides which thread gets access to the critical section. Queue-based MCS locks use a distributed queue of spinning processes opposed to the ticket locks method of contending for a single counter. So instead of having a single counter variable, the MCS lock assigns a different flag to each thread. It is important to note that both of these software implementations require the underlying hardware support for certain atomic instructions in order to work correctly under contention. Example code for these implementations can be seen below.

Mutual Exclusion

Mutual exclusion is implemented through atomic instructions, like the ones mentioned earlier. Below are atomic instructions that can help provide mutual exclusion in a multiprocessor system:

Test-and-Set Rx, M: Read the value stored in memory location M, test the value against a constant, and if they match, write the value in register Rx to memory location M.

Fetch-and-Increment M: Read the value stored in memory location M, increment it, and then store the new value to the memory location.

Exchange Rx, M: Atomically exchange the value at memory location M with the value in register Rx.

Compare-and-Swap Rx, Ry, M: Compare the value at memory location M with the value in register Rx. If they are equal, then store the value in register Ry to M, and copy the value Rx to Ry.

These instructions are typically used for synchronization of threads in multiprocessor systems. These instructions are typically used for locks and other methods for providing mutual exclusion.

Overhead

Test and Set

The performance of test-and-set degrades significantly as the number of processors increases. There are two reasons for this performance decrease. First, a thread must contend with other threads in order to release the lock. The second reason is that the requests by the spinning threads trying to access the lock slow the lock holder. The overhead of this approach is very minimal. There is not much overhead associated with test-and-set. This approach is very trivial. It is the easiest to implement, but has the worst performance. (The code below come from the solihin text).

lock: test-and-set R1, &lockvar //R1 = lockvar

//if (R1 == 0) lockvar = 1;

bnz R1, lock //jump to lock if R1 != 0

ret //return to caller

unlock: st &lockvar, #0 // lockvar = 0

ret // return to caller

Test and Test and Set

The performance of test-and-test-and-set is slightly better than test-and-set. The downside to test-and-test-and-set performance is that there is a separation between detecting that the lock has been released and trying to acquire the lock. The separation allows multiple threads to see that the lock is free, and then the threads that pass the test attempt a test-and-set instruction to try to acquire the lock. The overhead is about the same as test-and-set. There is still not a significant amount of overhead associated with test-and-test-and-set. Performance is sacrificed when overhead is smaller. (The code below comes from the Solihin text).

lock: ld R1, &lockvar // R1 = lockvar

bnz R1, lock // jump to lock if R1 != 0

t&s R1, &lockvar // R1 = lockvar

// if (R1 == 0) lockvar = 1

bnz R1, lock // jump to lock if R1 != 0

ret // return to caller

unlock: st &lockvar #0 // lockvar = 0

ret // return to caller

Ticket Lock

The overhead for ticket locks is low, however, with contention traffic generated from ticket locks is about the same as test-and-set or test-and-test-and-set. This traffic is due to invalidations from the releasing of the lock. When there is very little contention the ticket lock performs much better than the MCS lock, but when there is high contention MCS locks are better (see MCS lock below).

typedef struct TIXLOCK {

unsigned long next_tix;

unsinged long whose_turn; }

tix_lock_type;

void acquire (long lock_addr) {

unsigned long my_tix;

tix_lock_type* L = (tix_lock_type*)lock_addr;

my_tix = fetch_and_increment((long)&(L->next_tix));

while(L->whose_turn != my_tix)

pause(proportional_backoff * (my_tix - L->whose_turn));

}

void release(long lock_addr) {

tix_lock_type* L = (tix_lock_type*)lock_addr;

L->whose_turn+1;

}

MCS Lock

As mentioned above, under high contention MCS locks have less traffic. This is because MCS locks use multiple flags (oppose to a single counter variable) which eliminates many invalidations and reloads.

typedef struct qnode {

struct qnode * next;

boolean locked; }

qnode_typed;

typedef struct MCSLOCK {

qnode_type *q_ptr; }

mcs_lock_type;

void acquire(long lock_addr) {

/* allocated to each lock acquire instance */

/* and keep permanent until release */

qnode_type* qnode = allocate_qnode(lock_addr);

mcs_lock_type* ml = (mcs_lock_type*)lock_addr;

qnode_type *pred;

qnode->next = NULL;

pred = (qnode_type *) fetch_and_store((long)&ml->q_ptr, (long)qnode);

if(pred!=NULL) {

qnode->locked = TRUE;

pred->next = qnode;

while(qnode->locked);

}

}

void release(long lock_addr) {

qnode_type* qnode = get_qnode(lock_addr);

mcs_lock_type* ml = (mcs_lock_type*)lock_addr;

if (qnode->next == NULL) {

if (compare_and_swap((long_&ml->q_ptr, (long)qnode, NULL)) return;

while (qnode->next == NULL);

}

qnode->next->locked = FALSE;

}

API Synchronization

There are API's that exist for parallel architectures that provide specific types of synchronization. If the API are used they way they were design, performance can be maximized while minimizing overhead. In general, there is a trade-off between performance and overhead. See Choosing Appropriate Synchronization Primitives article

The figure below shows graphically these two methods and there actual performance (time vs number of threads) compared to the ideal desired performance of multiprocessing:

Improved Hardware Primitives

Load Locked, Store Conditonal

Load Locked(LL) and Store Conditional(SC) are a pair of instructions that are used for lock-free read-modify-write operation. Performance-wise the LL/SC implementation is similar to the test-and-test-and-set, however, it is much simpler with the extra linked registers and it can be used to implement many atomic instructions. The advantage of this method is that there is less wait traffic compared to test-and-test-and-set.

Barriers

Centralized Barrier

In a centralized barrier algorithm, each processor updates a counter to indicate that it has arrived at the barrier and then repeatedly polls a flag that is set when all threads have reached the barrier. Once all threads have arrived, each of them is allowed to continue past the barrier. The flag can be a sense reversal flag, to prevent intervention of adjacent barrier operations. A disadvantage to this type of barrier is that it does not scale well with a large number of processors, however, its advantage is that it uses a small amount of memory. See code below:

// Shared data.

barrier_count // not yet arrived

barrier_flag // indicate all have arrived

team_size // number of threads

procedure central_barrier

barrier_flag=team->barrier-flag

new_count = atomic_inc(barrier_count)

if (new_count == team_size)

team->barrier_count = 0

team->barrier_flag = barrier_flag ^ 1

else

while (team->barrier_flag == barrier_flag)

Tree Barrier

In tree barriers, each thread is assigned to a node in the tree. The tree structure is such that a parent node notifies each of its child nodes by setting a flag, and similarly the child node responds to its parent node in order to let the parent node know that it has arrived at the barrier. The parent node clears the flag only after ALL of its child nodes have reached the barrier. Eventually all levels of tree will have all its child node reach the barrier, at which point the barrier can be crossed. The code below shows an implementation of a tree barrier:

// Shared data:

typedef struct { volatile boolean *parentflag;

boolean *child_notify[2];

volatile long havechild;

volatile long childnotready;

boolean wakeup_sense;

} treenode;

treenode shared_array[P];

Private data for each thread:

volatile int parity;

volatile boolean sense;

treenode *mynode;

procedure tree_barrier

vpid=thread_id;

treenode *mynode_reg;

mynode_reg = cur_thread->mynode;

while (mynode_reg->childnotready);

mynode_reg->childnotready = mynode_reg->havechild;

*mynode_reg->parentflag = False;

if (vpid)

while(mynode_reg->wakeup_sense != cur_thread->sense);

*mynode_reg->child_notify[0] = cur_thread->sense;

*mynode_reg->child_notify[1] = cur_thread->sense;

cur_thread->sense ^= True;

The Figure below shows how each barrier implementation performs with the certain number of threads. The graph shows how long it takes each barrier type to implement the barrier with respect to the number of threads.

References

1. http://www-inst.eecs.berkeley.edu/~n252/su06/CS252Midterm_Hermoso.pdf

2. ftp://ftp.cs.wisc.edu/wwt/ics96_synch.pdf

3. http://www2.cs.uh.edu/~hpctools/pub/iwomp-barrier.pdf

6. http://software.intel.com/en-us/articles/use-non-blocking-locks-when-possible/

7. http://www.cs.washington.edu/homes/tom/pubs/spinlock.pdf

8. http://www.cs.utah.edu/avalanche/sync.ps

9. http://en.wikipedia.org/wiki/Mutual_exclusion

10. Fundamentals of Parallel Computer Architecture - Yan Solihin