CSC/ECE 517 Fall 2009/wiki3 12 obj to relational: Difference between revisions

Revision as of 03:27, 19 November 2009

Patterns for mapping objects to relational databases

The Object-Relational Mismatch

Object orientation and the relational model are different paradigms.[1] The object paradigm is based on building applications out of objects that have both data and behavior, whereas the relational paradigm is based on storing data. With the object paradigm, one traverses objects via their relationships, whereas with the relational paradigm one duplicates data to join the rows in tables.[2] The difference between how object models and relational databases work is known as the “Object-Relational Impedance Mismatch”. This is just nerd-speak for “they are different”. By analogy, consider a shape-sorting toy: you can’t fit a square block in a triangular hole.[10]

One way to avoid the impedance mismatch between objects and relations is to use an object-oriented database. However, systems often need to store objects in a relational database. Sometimes a system needs relational calculus or the maturity of a relational database. Other times the corporate policy is to use a relational database rather than an object-oriented database. Whatever the reason, a system that stores objects in a relational database needs to provide a design that reduces the impedance mismatch.[5] One of the secrets of success for mapping objects to relational databases is to understand both paradigms and their differences, and then make intelligent trade-offs based on that knowledge.[2] An application that maps between the two paradigms needs to be designed with respect to performance, maintainability, and cost, to name just a few requirements.[4]

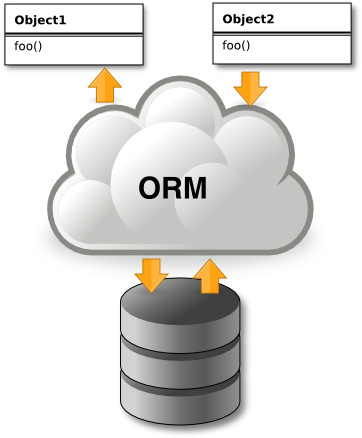

Persistence frameworks were designed to reduce the amount of work needed to develop an object-oriented system that stores data in a relational database. For this reason, this framework area is often also referred to as object-relational mapping or ORM (see the figure). The goal of a persistence framework is to map objects to and from a database automatically and seamlessly, so that information can be quickly and easily retrieved in object format and subsequently stored in its relational structure. This greatly aids system development and maintenance. The mundane details of writing one's own create, read, update, and delete functions are taken care of, so one can concentrate on designing and building the application.[11]

Patterns

This page describes patterns for mapping objects to relations.

Persistence Layer

Motivation:

If one builds a large object-oriented business system that stores objects in a relational database, one can spend a lot of time dealing with the problems of making one's objects persistent. Also, every programmer working on the system has to know SQL, and the code can become tied to the database. It can be a lot of work to convert the system from using Microsoft Access to using DB2, or even to add a few variables to an object. One needs to separate one's domain knowledge from knowledge of how objects are stored in a database to protect developers from these types of changes. The problem is how to save objects in a non-object-oriented storage mechanism such as a relational database without the developers having to know the exact implementation.[5]

Solution:

The solution is to provide a Persistence Layer that can populate objects from a data storage source and save their data back to that source. This layer should hide the details of storing objects from the developer. All persistent objects use the standard interface of the Persistence Layer. If the data storage mechanism changes, only the Persistence Layer needs to be changed. For example, the corporate direction may change in midstream to using Oracle rather than DB2. The system needs to know how to store each object and how to load it. Sometimes an object is stored in multiple databases over multiple media. An object that is part of a more complex object needs to keep track of the object of which it is a part; this is called the owning object. The owning object idea makes it easier to write complex queries. Therefore it is important for the Persistence Layer to provide a means to uniquely identify each object and its parent.[5]

Example Implementation:

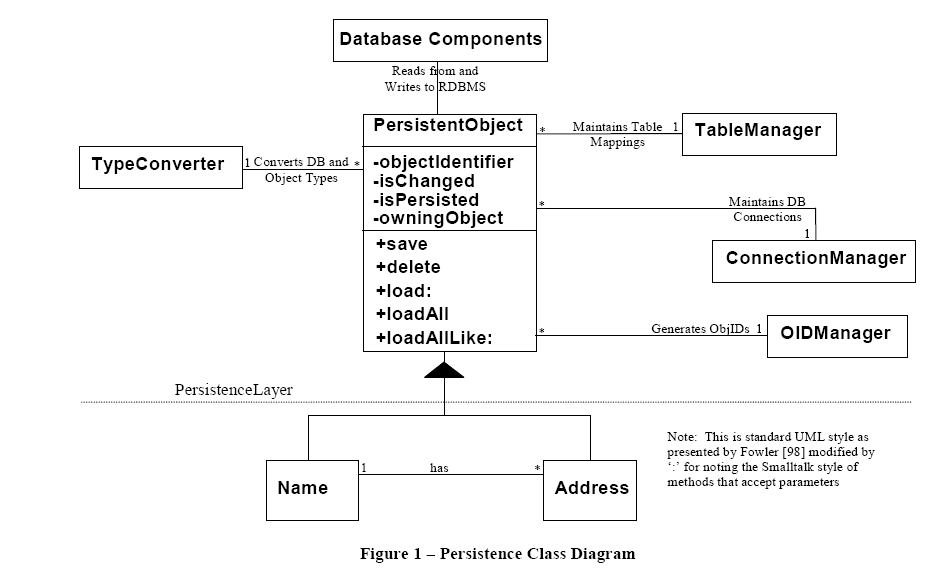

The figure is a UML class diagram for an implementation of a Persistence Layer that maps domain objects to a relational database. Note that in this example, domain objects that need to be persisted are subclasses of PersistentObject. The PersistentObject provides the interface to the Persistence Layer. It interacts with the Table Manager, the Connection Manager, and the OID Manager. Thus, the PersistentObject is the central hub for any information the domain object requires but does not contain in the instance.

Records can be read in three ways:

- a single row (PersistentObject>>load:),

- all records (PersistentObject>>loadAll),

- or all records that match specific criteria (PersistentObject>>loadAllLike:).

The criteria are provided by creating a new instance of the object to load, with the attributes needed to find a match set to the desired values. This works with the PersistentObject>>load: and PersistentObject>>loadAllLike: methods. The PersistentObject>>loadAll method is useful for retrieving reference data from tables when you want to populate a selection list or drop-down list.

The following is example code from the PersistentObject described above.

Protocol for Public Interface PersistentObject (instance)

load

"Answer a single instance of a subclass of PersistentObject that matches self."

| oc |

oc := self loadAllLike.

^oc isEmpty ifTrue: [nil] ifFalse: [oc first]

loadAllLike

"Answer a collection of instances of subclasses of PersistentObject that match self. The selectionClause method in the domain object is called to prepare the WHERE clause for the read: method in the PersistentObject."

^self class read: (self selectionClause)

save

"Saves self to the database wrapped in a transaction."

self class beginTransaction.

self saveAsTransaction.

self class endTransaction.

delete

"Deletes self from the database wrapped in a transaction."

self class beginTransaction.

self deleteAsTransaction.

self class endTransaction.

Protocol for Public Interface PersistentObject (class)

loadAll

"Answer a collection of ALL my instances from the database."

^self read: nil.

[5]
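As a rough illustration of the public interface above, here is a minimal sketch in Python rather than the article's Smalltalk, using the standard-library sqlite3 module as a stand-in database. The names read, load_all, load_all_like, load, and selection_clause mirror the Smalltalk selectors; the Name table, its columns, and the shared class-level connection are assumptions made only for this example.

```python
import sqlite3


class PersistentObject:
    """Hypothetical base class; each subclass names its table and columns."""
    table = None      # set by each subclass
    columns = ()      # persistent attribute names, in column order
    connection = sqlite3.connect(":memory:")  # shared connection (assumption)

    def __init__(self, **values):
        for column in self.columns:
            setattr(self, column, values.get(column))

    @classmethod
    def read(cls, where=None, params=()):
        """Return instances for every row matching an optional WHERE clause."""
        sql = f"SELECT {', '.join(cls.columns)} FROM {cls.table}"
        if where:
            sql += f" WHERE {where}"
        rows = cls.connection.execute(sql, params).fetchall()
        return [cls(**dict(zip(cls.columns, row))) for row in rows]

    @classmethod
    def load_all(cls):
        """Return all instances stored in the table."""
        return cls.read()

    def selection_clause(self):
        """Build a WHERE clause from the attributes that are set on self."""
        used = [c for c in self.columns if getattr(self, c) is not None]
        where = " AND ".join(f"{c} = ?" for c in used)
        return where, tuple(getattr(self, c) for c in used)

    def load_all_like(self):
        """Return all stored instances that match the attributes set on self."""
        where, params = self.selection_clause()
        return type(self).read(where, params)

    def load(self):
        """Return the first stored instance matching self, or None."""
        matches = self.load_all_like()
        return matches[0] if matches else None


class Name(PersistentObject):
    table = "name"
    columns = ("first", "last")


# Sample data for the illustration only.
PersistentObject.connection.execute("CREATE TABLE name (first TEXT, last TEXT)")
PersistentObject.connection.executemany(
    "INSERT INTO name VALUES (?, ?)",
    [("Ada", "Lovelace"), ("Alan", "Turing")],
)
```

With this sketch, Name(first="Alan").load() plays the role of a query by example: the partially filled instance supplies the criteria, and the domain code never writes SQL itself.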

Advantages & Disadvantages:

An important benefit of isolating the application developer from the details of how an object saves itself is that it makes it easier to implement domain objects. Thus, it is less work to evolve the domain model. Because the object persistence mechanism is encapsulated, the details of saving an object are hidden from the developer. However, a Persistence Layer can make it complicated, and sometimes difficult, to perform operations that would be easy to write directly in SQL. Optimizations can also be difficult in a Persistence Layer.

CRUD

Motivation:

Consider the example of a Patient class with components of class Name and Address. When one reads a Patient, one must also read its Name and Address. Writing out a Patient will probably cause Name and Address objects to be written to the database as well. The question that arises is whether they should all have the same interface for reading and writing. Maybe some objects require a different interface to the database. Can we give them all the same interface? If so, what should it be?

Any persisted object needs operations to read from and write to the database. Values being persisted may also belong to newly created objects. Sometimes, objects may need to be deleted from persistent storage. Therefore, any time an object needs to be persistent, it is important to provide, at a minimum, create, read, update, and delete operations. The question (and the problem) is what minimal set of operations a persistent object needs.[5]

Solution:

The solution is to provide the basic CRUD (create, read, update, and delete) operations for persistent objects. Other operations that may be needed are loadAllLike: or loadAll. The important point is to provide at least enough to instantiate objects from a database and to store newly created or changed objects. If all domain objects have a common PersistentObject superclass, then this class can define the CRUD operations and all domain objects can inherit them. Subclasses can override them if necessary to increase performance. Each domain object must therefore make available a description of the SQL code that the CRUD operations need to access the database. Thus CRUD works closely with a SQL Code Description to ensure that the operations are sufficient for persisting domain objects.[5]

Example Implementation:

The PersistentObject described above provides the standard interface to the basic set of operations for mapping objects to the database: save, load, etc. Domain objects inherit these methods from the PersistentObject, and the methods in turn call the CRUD operations. Some of these CRUD methods may need to be overridden by the domain object. The updateRowSql and insertRowSql methods are described in the SQL Code Description pattern below.

Protocol for CRUD PersistentObject (class)

This method takes a WHERE clause as an argument and returns a collection of objects that correspond to the rows that match the WHERE clause.

read: aSearchString

"Returns a collection of instances populated from the database."

| aCollection |

aCollection := OrderedCollection new.

(self resultSet: aSearchString)

do: [:aRow | aCollection add: (self new initialize: aRow)].

^aCollection

Protocol for Persistence Layer PersistentObject (instance)

These methods save objects to or delete objects from the database. They decide which kind of SQL statement (insert, update, or delete) to issue based on the object’s values. Once the decision has been made, the SQL statement is fired to the database.

saveAsTransaction

"Save self to the database."

self isPersisted ifTrue: [self update] ifFalse: [self create].

self makeClean

update

"Updates aggregate classes then updates self to the database."

self saveComponentIfDirty.

self basicUpdate

create

"Inserts aggregate classes then inserts self to the database."

self saveComponentIfDirty.

self basicCreate

basicCreate

"Fires the insert SQL statement to the database."

self class executeSql: self insertRowSql.

isPersisted := true

basicUpdate

"Fires the update SQL statement to the database."

(self isKindOf: AbstractProxy) ifTrue: [^nil].

isChanged ifTrue: [self class executeSql: self updateRowSql]

deleteAsTransaction

"Delete self from the database."

self isPersisted ifTrue: [self basicDelete].

^nil

basicDelete

"Fires the delete SQL statement to the database."

self class

executeSql:('DELETE FROM ',self class table,' WHERE ID_OBJ=',

(self objectIdentifier printString)).

[5]
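The insert-or-update decision made by saveAsTransaction can be sketched as follows. This is an illustrative Python version, not the article's Smalltalk code: the patient table, the id_obj column name (borrowed from the ID_OBJ column in basicDelete), and the is_persisted flag are assumptions, and the sqlite3 in-memory database only stands in for a real RDBMS.

```python
import sqlite3

# In-memory database standing in for the real data store (assumption).
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE patient (id_obj INTEGER PRIMARY KEY, name TEXT)"
)


class Patient:
    def __init__(self, object_identifier, name):
        self.object_identifier = object_identifier
        self.name = name
        self.is_persisted = False  # no row exists for this object yet

    def save(self):
        """Insert on first save, update afterwards; the caller never chooses."""
        if self.is_persisted:
            self.basic_update()
        else:
            self.basic_create()

    def basic_create(self):
        connection.execute(
            "INSERT INTO patient (id_obj, name) VALUES (?, ?)",
            (self.object_identifier, self.name),
        )
        self.is_persisted = True

    def basic_update(self):
        connection.execute(
            "UPDATE patient SET name = ? WHERE id_obj = ?",
            (self.name, self.object_identifier),
        )

    def delete(self):
        """Delete the row only if the object was ever written."""
        if self.is_persisted:
            connection.execute(
                "DELETE FROM patient WHERE id_obj = ?",
                (self.object_identifier,),
            )
            self.is_persisted = False


patient = Patient(1, "Pat Smith")
patient.save()               # first save issues an INSERT
patient.name = "Pat Jones"
patient.save()               # second save issues an UPDATE
```

The sketch uses parameterized statements instead of the string concatenation shown in basicDelete; that is a choice made for this example, not something the source prescribes.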

Advantages & Disadvantages:

Once the object model and data model have been analyzed, the results can be implemented in CRUD to provide a performance-optimized solution, which isolates the developer from having to worry about performance details. CRUD gives the flexibility to retrieve data based on how many rows or what kind of data (dynamic, static, or somewhere in between) is needed. It offers a simple way to save data to, or back to, the database: the application developer does not have to determine whether to insert or update the object. However, CRUD can cause sub-optimal performance if the object model and data model have not been properly analyzed, which makes the developer's job more difficult if they have to compensate in another way.[5]

Type Conversion

Motivation:

The values in a database do not always map directly into object types. For example, a boolean value may be saved as a “T” or “F” in a database. Sex could be stored as an attribute in which there is a class called Sex, which has certain behavior associated with male instances and different behavior with female instances. The database might store the values as “M” and “F”. When the values are read in from the database, the “M” would need to be converted to a male instance of the Sex class and the “F” would need to be converted to a female instance of the Sex class. Type Conversion allows for object values to be converted to and from database values. The problem here is how to take objects that may not have a database type and allow for them to map to a database type and vice-versa.[5]

Solution:

The solution is to have all values convert their respective types through a Type Conversion object. This object knows how to handle nils and other mappings of objects to and from database values. When objects are persisted from a large multi-application database, the data formats can vary. This pattern ensures the data retrieved from the database is appropriate for the object. Each object attribute is passed through the appropriate Type Converter to apply the data rules necessary for proper application or DBM use.

Implementations can vary depending on your needs. The example that follows places all the conversion methods in a single object. This keeps the methods localized to one area and allows the conversion object to be dynamically switched, if necessary. Thus, you could have a Strategy that applies different conversion algorithms for different databases. If the converters are not needed by the rest of the application, this is a “cleaner” approach. Another option is to extend each base class affected or used and place the methods there. Each object would then know how to convert itself into the format necessary for the database. If you were using multiple databases, each of these methods would have to accommodate the differences in the formats. Yet another approach is to put all of your conversion routines in PersistentObject, thus isolating the place where you would have to change the code if you need to map to a new database or the conversion evolves. These methods would then be available to any object that inherits from PersistentObject. As with the previous option, each method would have to accommodate format differences when you have multiple databases. All of the above-mentioned solutions will work regardless of the persistence mechanism chosen.[5]

Example Implementation:

Type Conversion methods are accessed through the PersistentObject as the values are either being prepared for being saved or when the returned database rows are being mapped to an object’s attributes. The initialize: and insertRowSql: methods (in each domain object) show examples of Type Conversion.

Protocol for Type Conversion PersistentObject (instance)

This method determines which class is responsible for converting (translating) type between table types and object types. This method could be expanded to determine, at run time, which database is in use or which class to use for the conversions.

typeConverter

"Returns the class responsible for Type Conversion."

^TypeConverter

Protocol for Type Conversion TypeConverter (class)

These methods provide the PersistentObject with consistently formatted data values. When converting from the object to the database (TypeConverter>>prepForSql:), the type can be determined and formatted based on what the database requires. When converting values from the database to an object, the type in the database may not be the type the object/application wants. In the Mapping Attributes Methods, each attribute is Type Converted to ensure the data values are correct. In some cases a default value is provided for when the database does not contain data. When several applications use the same database for diverse implementations, the data rules may not be able to be enforced at the database level.

convertToBooleanFalse: aString

"Returns a boolean from a string character. False is the default value."

^'t' = aString asString trimBlanks asLowercase

convertToString: aString

"Returns a string from a db string or character. Default returns a new string."

^aString asString trimBlanks

convertToNumber: aNumber

"Returns a number from a db number. Default returns zero."

^aNumber isNumber ifTrue: [aNumber asInteger] ifFalse: [0]

[5]
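For readers less familiar with Smalltalk, the converters above might look roughly like this in Python. The method names loosely follow the Smalltalk selectors; the body of prep_for_sql is an assumption, since the source names TypeConverter>>prepForSql: but does not show it, and the 'T'/'F' encoding for booleans follows the example given in the Motivation.

```python
class TypeConverter:
    """Single place for database-to-object and object-to-database conversions."""

    @staticmethod
    def to_boolean_false(value):
        """Database 'T'/'t' becomes True; anything else, including None, False."""
        if value is None:
            return False
        return str(value).strip().lower() == "t"

    @staticmethod
    def to_string(value):
        """Trimmed string; None (database NULL) becomes an empty string."""
        return "" if value is None else str(value).strip()

    @staticmethod
    def to_number(value):
        """Integer from a database number; non-numeric values default to 0."""
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return 0
        return int(value)

    @staticmethod
    def prep_for_sql(value):
        """Format a Python value as an SQL literal (assumed behavior)."""
        if value is None:
            return "NULL"
        if isinstance(value, bool):
            return "'T'" if value else "'F'"
        if isinstance(value, (int, float)):
            return str(value)
        # Double embedded single quotes so the literal stays well formed.
        return "'" + str(value).replace("'", "''") + "'"
```

Because every conversion lives in one class, swapping in a different converter per database (the Strategy idea mentioned above) only requires changing which class the persistent object asks for.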

Advantages & Disadvantages:

Type Conversion can help ensure data consistency: object types can vary independently of the database types, and default values can be assigned for empty values from the database. It prevents “Undefined object does not understand” errors and provides increased RMA (Reliability, Maintainability, and Accessibility) for the applications. However, it can be time consuming to convert types, especially when reading in many values from a database.[5]

Change Manager

Motivation:

Consider the case in a patient management system when the patient's address has changed. The system should only write back the patient's address if it has actually changed. One way to implement this is to provide a separate screen for changing the address with a separate update button that causes the address to be written back. This is awkward and requires a lot of code maintenance. It would be better to let the user of the patient management system edit the address when necessary and for the system to write back changes to the address object and other values of a patient only when the user decides. In general, a Persistence Layer should keep track of all PersistentObjects that have changed state, and should make sure that they are all written back to the database. The problem is how to tell that an object has changed and needs to be saved to the database. It is important to prevent unnecessary access to the database and to ensure that the user knows when some values have changed and have not been saved before they exit the program.[5]

Solution:

The solution is to set up a Change Manager that keeps track of any PersistentObject that changes one of its persistent attributes. This Change Manager is consulted whenever a request to save objects is made. One way to do this is by inheriting from a PersistentObject, which has a dirty bit that gets set whenever one of the attributes that maps to the database changes. This dirty bit is usually an instance variable with a boolean value that indicates when an object's values have changed. When the boolean value is set, the PersistentObject will save the new values to the database when a request to save it is invoked. If the boolean value is not set, the PersistentObject will bypass the write to the database.

Data access is generally very expensive and should be used sparingly. Being able to identify whether an object needs to be written to the database can increase performance drastically. In addition to performance considerations, the user interface can benefit by being able to prompt the user to save before exiting. This helps users accept your application, because they know that if they forget to save changed information, the system will prompt them to save it. Users can very quickly conclude that an application is useless if they have to reenter the same data more than once.

Another feature that the Change Manager can provide is that of remembering original state or changed state of an object. If the user of your system needs to be able to revert back to original state, the Change Manager could keep around the original values so that an undo call could be invoked. Also, you might want to provide your system with the ability to have multiple undos.[5]

Example Implementation:

The example shows how an accessor method for the first name attribute in the Name class sets the dirty bit whenever the attribute changes its value. The accessor is the default generated by VisualAge with the addition of the makeDirty call, which sets the inherited attribute isChanged to true.

first: aString

"Save the value of first."

self makeDirty.

first := aString.

self signalEvent: #first

with: aString.

Protocol for Change Manager PersistentObject (instance)

These methods provide the Persistence Layer with the ability to change the dirty flag. This saves the Persistence Layer from having to write data to the database that has not changed. It also gives the GUI programmer a way to test the object in order to decide whether to prompt the user to save their data.

makeDirty

"Indicates an object needs to be saved to the db. This method can be called from the 'setter' method as in the example above."

isChanged := true.

makeClean

"Indicates that the object does not need to be saved to the db or that no changes have affected the object."

isChanged := false.

[5]
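The dirty-bit mechanism can be sketched in Python as below. This is a simplified illustration, not the article's Smalltalk: the writes counter merely simulates database writes so that the effect of the flag is visible, and the property-based setter stands in for the VisualAge-generated accessor.

```python
class PersistentObject:
    """Hypothetical base class that tracks whether a save is needed."""

    def __init__(self):
        self.is_changed = False
        self.writes = 0  # counts simulated database writes (illustration only)

    def make_dirty(self):
        self.is_changed = True

    def make_clean(self):
        self.is_changed = False

    def save(self):
        """Write only when something changed; return whether a write happened."""
        if not self.is_changed:
            return False
        self.writes += 1  # a real layer would issue the UPDATE here
        self.make_clean()
        return True


class Name(PersistentObject):
    def __init__(self, first):
        super().__init__()
        self._first = first

    @property
    def first(self):
        return self._first

    @first.setter
    def first(self, value):
        # The setter marks the object dirty before storing the new value.
        self.make_dirty()
        self._first = value
```

A GUI could test is_changed before closing a window to decide whether to prompt the user to save.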

Advantages:

The user will more readily accept the application. Performance improves because objects that have not changed are not written back to the database. When merging data between databases, the flag can be set so that the record will be inserted into the new database as necessary.[5]

Transaction Manager

Motivation:

When saving objects, it is important to store them in such a way that if some values are not stored properly, previously stored objects can be rolled back. For instance, in the patient management system, you not only want to store the attributes of the Patient object, but you also want to store any changes to the address objects that it contains. If saving any one of the Patient’s Address objects fails, you want to roll back any other writes to the database that were made for saving the patient information. A Transaction Manager provides support for beginning transactions, committing transactions, and rolling back transactions. The problem is how to write a group of objects to the database in such a way that if any of the writes fails, none of the objects is written.[5]

Solution:

The solution to this problem is to build a Transaction Manager that works similarly to other transaction managers. This manager allows for the beginning of transactions, the ending of transactions, the committing of transactions, and the rollback of transactions. The Transaction Manager usually maps to the RDBMS’s transaction manager if it provides one. It might be preferable to cache values for performance reasons. When this is the case, it is important for the Transaction Manager to include a way to commit and roll back cached values. If the data store that you are mapping to doesn’t include a transaction manager, then the original state of objects must be saved at the beginning of each transaction so that if a rollback is requested, the original values can be written out to the data store.[5]

Example Implementation:

The following code consists of excerpts from the PersistentObject. They show the wrapper effect used to implement transaction management. The code that tells the database to start and stop a transaction (for example, self class beginTransaction) depends on which database you are using and what language you are developing in. You may need to extend transactions to handle your specific implementation. Also, if the data store you are saving to (maybe a flat file) doesn’t support transactions, you will have to develop a complete Transaction Manager to support the needs of your users. This example wraps all saves and deletes of an object with a beginTransaction and an endTransaction. The class methods make the call to the database connection class.

Protocol for Public Interface PersistentObject (instance)

save

self class beginTransaction.

self saveAsTransaction.

self class endTransaction.

delete

self class beginTransaction.

self deleteAsTransaction.

self class endTransaction.

Protocol for Public Interface PersistentObject (class)

beginTransaction

self databaseConnection beginUnitOfWorkIfError:

[ self databaseConnection rollbackUnitOfWork ]

endTransaction

self databaseConnection commitUnitOfWork

rollBackTransaction

self databaseConnection rollbackUnitOfWork

[5]
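The all-or-nothing behavior described in this pattern can be illustrated with a short Python sketch that delegates to the database's own transaction support, here the standard-library sqlite3 module. The patient and address tables and the save_patient function are hypothetical and exist only for this example.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE patient (id_obj INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
connection.execute(
    "CREATE TABLE address (id_obj INTEGER PRIMARY KEY, city TEXT NOT NULL)"
)
connection.commit()


def save_patient(name, city):
    """Write the patient row and the address row together, or neither."""
    try:
        connection.execute("INSERT INTO patient (name) VALUES (?)", (name,))
        connection.execute("INSERT INTO address (city) VALUES (?)", (city,))
        connection.commit()      # plays the role of endTransaction
        return True
    except sqlite3.Error:
        connection.rollback()    # plays the role of rollBackTransaction
        return False
```

If the address insert fails (for instance because city is None and violates the NOT NULL constraint), the patient insert made in the same transaction is rolled back as well, so the database never holds a patient without its address.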

Advantages & Disadvantages:

The advantages of the Transaction Manager are that the complex objects are saved completely or not at all and referential integrity is maintained. However, all other applications writing to the database have to use the Transaction Manager. You may have to add checks to see if anyone else has changed the database values that you are saving. Unless there is support built in from the database, writing a complete transaction manager is hard.[5]

Table Manager

Motivation:

The persistent storage that objects map to may evolve over time, or there may be multiple stores for objects. A Table Manager describes the mappings of databases to tables and columns, thus keeping the details away from the developer. This allows changes to the database naming scheme to be made without affecting the application developer. The problem is how an object knows which table and column name(s) to use, especially when multiple tables are needed to save the object. The magnitude of the problem increases when multiple databases are used.[5]

Solution:

The solution is to provide a place to retrieve the table and column name(s) that objects need in order to persist themselves. When an object is being stored, have it look up its table names in a Table Manager. Because the string that defines each name appears in only one place, making and testing modifications becomes quick and efficient.

When retrieving data from multiple sources, this pattern provides the ability to dynamically change which database or table is accessed. This flexibility alleviates the necessity to write the same SQL Code Description over and over for the same tables in multiple databases and still accesses it sequentially.[5]

Example Implementation:

The Table Manager stores the table names in dictionaries to provide to the SQL Code when requested. When the Table Manager is initialized the dictionaries are initialized with the table names. Then according to the Connection Manager the appropriate dictionary is accessed and the table name is returned.

When a domain object says:

self class table,

the TableManager will return the name of the table for the class. [5]
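A dictionary-based Table Manager along the lines described above could be sketched in Python as follows. The database names, table names, and the table_for method are hypothetical; in the article's design the Connection Manager, rather than a plain attribute, determines which dictionary is consulted.

```python
class TableManager:
    """Maps class names to table names, with one dictionary per database."""

    def __init__(self, mappings):
        # mappings has the shape {database name: {class name: table name}}
        self._mappings = mappings
        # Default to the first database listed (assumption for this sketch).
        self.current_database = next(iter(mappings))

    def table_for(self, cls):
        """Return the table name for a class in the currently selected database."""
        return self._mappings[self.current_database][cls.__name__]


class Patient:
    """Stand-in domain class."""


manager = TableManager({
    "production": {"Patient": "PATIENT"},
    "archive": {"Patient": "PATIENT_HIST"},
})
```

Switching manager.current_database from "production" to "archive" changes the table name returned for Patient without touching any SQL in the domain classes.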

Advantages & Disadvantages:

One advantage of using a Table Manager is that when a table name changes, there is only one place to make the change in the code. Another advantage is that multiple databases can be accessed with the same table or view having a different name. However, writing a table manager involves creating a way to interpret the table and column mappings. It is not always easy to develop or maintain.[5]

Other Patterns

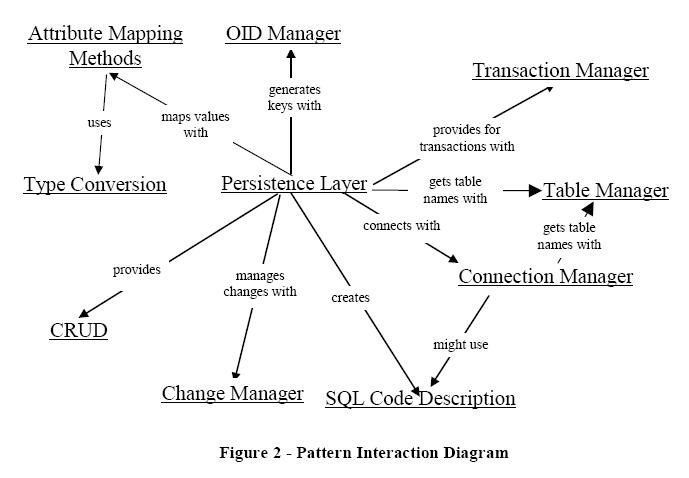

The other patterns include SQL Code Description, Attribute Mapping Methods, OID Manager and Connection Manager. We provide a brief description of these patterns here in order to understand the whole picture of how all the patterns collaborate with each other. (See "Putting It All Together").

The SQL Code Description defines the actual SQL code that takes the values from the RDBMS or other database and retrieves them for the object’s use and vice versa. It is used to generate the SQL for performing the CRUD operations. The Attribute Mapping Methods pattern maps between the values from columns in the database and the values stored in an object's attributes. This pattern also handles complex object mappings. It populates the object(s) with the row values. Since every object is unique in any object-oriented system, it is important to create unique identifiers for new objects with an OID Manager. Any system accessing an RDBMS will need to provide connections to the desired databases through some sort of Connection Manager.

Putting It All Together (Comparison of all patterns)

All of these patterns collaborate to provide a mechanism for mapping persistent objects to a database. The accompanying figure shows how the patterns interact with one another. The Persistence Layer provides the standard interface for the CRUD (create, read, update, and delete) operations needed to persist domain objects. The Persistence Layer builds the calls to the database by using the SQL Code Description provided by the domain objects. During the generation of the SQL code, the Persistence Layer interacts with the Table Manager to get the correct database table and column names. When values are being returned from the database or written back to the database, attribute values must be mapped to database column names and vice versa. This is done with Attribute Mapping Methods. Attribute Mapping Methods do some Type Conversions while the SQL code is being generated. Attribute Mappings and Type Conversions can also happen while the Persistence Layer instantiates a new object. The Persistence Layer saves objects to the database through the Connection Manager only when an object’s value has changed. This change is managed by the Change Manager. The Connection Manager could interact with the Table Manager to decide which database to use. The Persistence Layer provides access to a Transaction Manager whenever transaction processing is needed.[5]

Mapping Terminology

Mapping (v). The act of determining how objects and their relationships are persisted in permanent data storage, in this case relational databases.[3]

Mapping (n). The definition of how an object’s property or a relationship is persisted in permanent storage.[3]

Property. A data attribute, either implemented as a physical attribute such as the string firstName or as a virtual attribute implemented via an operation such as getTotal() which returns the total of an order.[3]

Property mapping. A mapping that describes how to persist an object’s property.[3]

Relationship mapping. A mapping that describes how to persist a relationship (association, aggregation, or composition) between two or more objects.[3]

See Also

References

[1] Wolfgang Keller, Mapping Objects to Tables - A Pattern Language http://www.objectarchitects.de/ObjectArchitects/papers/Published/ZippedPapers/mappings04.pdf

[2] Scott W. Ambler, President, Ronin International, Mapping Objects To Relational Databases http://www.crionics.com/products/opensource/faq/docs/mappingObjects.pdf

[3] Scott W. Ambler, Mapping Objects to Relational Databases: O/R Mapping In Detail http://www.agiledata.org/essays/mappingObjects.html

[4] Wolfgang Keller, Object/Relational Access Layers - A Roadmap, Missing Links and More Patterns http://citeseer.ist.psu.edu/4328.html

[5] Joseph W. Yoder, Ralph E. Johnson, Quince D. Wilson, Connecting Business Objects to Relational Databases http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.34.7703&rep=rep1&type=pdf

[6] Michael R. Blaha, William J. Premerlani and James E. Rumbaugh, Relational Database Design using an Object-Oriented Methodology http://www.sims.monash.edu.au/subjects/ims2501/seminars/oomodelling.pdf

[7] http://www.cetus-links.org/oo_db_systems_3.html

[8] http://www.ibm.com/developerworks/library/ws-mapping-to-rdb/

[10] http://cantgrokwontgrok.blogspot.com/2009/03/tech-day-1-nhibernate.html

[11] http://www.adobe.com/newsletters/edge/october2008/articles/article2/index.html?trackingid=DWZST

[12] Joseph W. Yoder, Patterns for Making your Business Objects Persistent in a Relational Database http://www.joeyoder.com/Research/objectmappings/mappingobjectstordbms/ppframe.htm