CSC/ECE 517 Spring 2022 - E2241: Heatgrid fixes and improvements

No edit summary |

No edit summary |

||

| (9 intermediate revisions by 2 users not shown) | |||

| Line 26: | Line 26: | ||

[[File:Screen Shot 2022-04-06 at 10.34.47 PM.png|center|550px]] | [[File:Screen Shot 2022-04-06 at 10.34.47 PM.png|center|550px]] | ||

| Line 164: | Line 127: | ||

<h4>Changes reflected in the UI</h4> | |||

<p> | <p> | ||

| Line 177: | Line 140: | ||

<h4> | <h4> Manual Testing </h4> | ||

<p> </p> | |||

<pre> | |||

Scenario: Visibility of all the unchecked metric checkboxes in View Scores view | |||

Given user has logged in | |||

When user visits View Scores page | |||

Then all the unchecked metric checkboxes are present above the heat grid | |||

</pre> | |||

<pre> | |||

Scenario: Default invisibility of metric columns in View Scores view | |||

Given user has logged in | |||

When user visits View Scores page | |||

Then metric columns are not visible in the heat grid | |||

</pre> | |||

<pre> | |||

Scenario: Checking a metric checkbox displays the corresponding metric column | |||

Given user has logged in | |||

When user visits View Scores page and | |||

When user clicks on unchecked comments#10 metric | |||

Then column with column name " comments#10" gets displayed with corresponding values | |||

</pre> | |||

<pre> | |||

Scenario: Unchecking a metric checkbox hides the corresponding metric column | |||

Given user has logged in | |||

When user visits View Scores page and | |||

When user clicks on checked comments#10 metric | |||

Then column with column name " comments#10" and corresponding values get hidden | |||

</pre> | |||

<h4> Unit Testing </h4> | |||

<p>As our project does not deal with actually adding new metrics, but just facilitating a low-coupling addition of new metrics, we need not add any unit test cases for metric implementations. There is only one metric in the system as of now for which test cases are already written.</p> | |||

<h2>Implementation of Issue 2019</h2> | <h2>Implementation of Issue 2019</h2> | ||

<p> This issue encompasses many problems such as review reports not displaying correctly and a user being able to review his/her work. Each problem has been dealt with individually. Main problems identified as part of this issue and relevant observations and corrections are jotted down in this section. </p> | |||

<h3> Problem 1 : A user being able to review his/her own work.</h3> | |||

<h4> Problem comment </h4> | |||

<p> On Madeup Problem 3, assignment 1055, there are several anomalies in the review report. User 9277 reviewed Team_2323 and gave this comment: "This question seems a little suspicious. It is nearly identical to Question 4a on page 327 in the 2016 edition book. The only thing change was variable name A to B and value 2 to 3." However, according to the View Submissions page, User 9277 is the only member of Team_2323. </p> | |||

< | <h4> Issue Reproduction and Observations </h4> | ||

<p> We tried reproducing the issue as specified in the issue comment, but we could not find Madeup Problem 3, assignment 1055. So, we have created two assignments with different parameters to check if the instructor is able to assign a reviewer his/her own work. </p> | |||

< | <p> We have created an assignment "Noself review" with allow self review option unchecked. Then, from the instructor page, we have created a team with student13 and student 14 as teammates. Then we logged into student14 account and submitted a hyperlink for that assignment. After that, from the instructor page we clicked on the assign review button and tried assigning student13 as the reviewer for that work and then tried the same with student14. In both the cases, the assignment failed by rendering a flash error message, "You cannot assign this student to review his/her own artifact." </p> | ||

<ul> | |||

<li> Unchecked allow self-review option in "Noself review" assignment </li> | |||

[[File:self-review-option-unchecked.png|600px]]<br/> | |||

<li> Participants page containing add reviewer button </li> | |||

[[File:Duo-team-submission.png|600px]]<br/> | |||

< | <li> Adding student13 as reviewer for his/her own work </li> | ||

[[File:Self-assign-review.png|600px]]<br/> | |||

<li> Flash error message displayed</li> | |||

[[File:self-review-unchecked.png|600px]]<br/> | |||

</ul> | |||

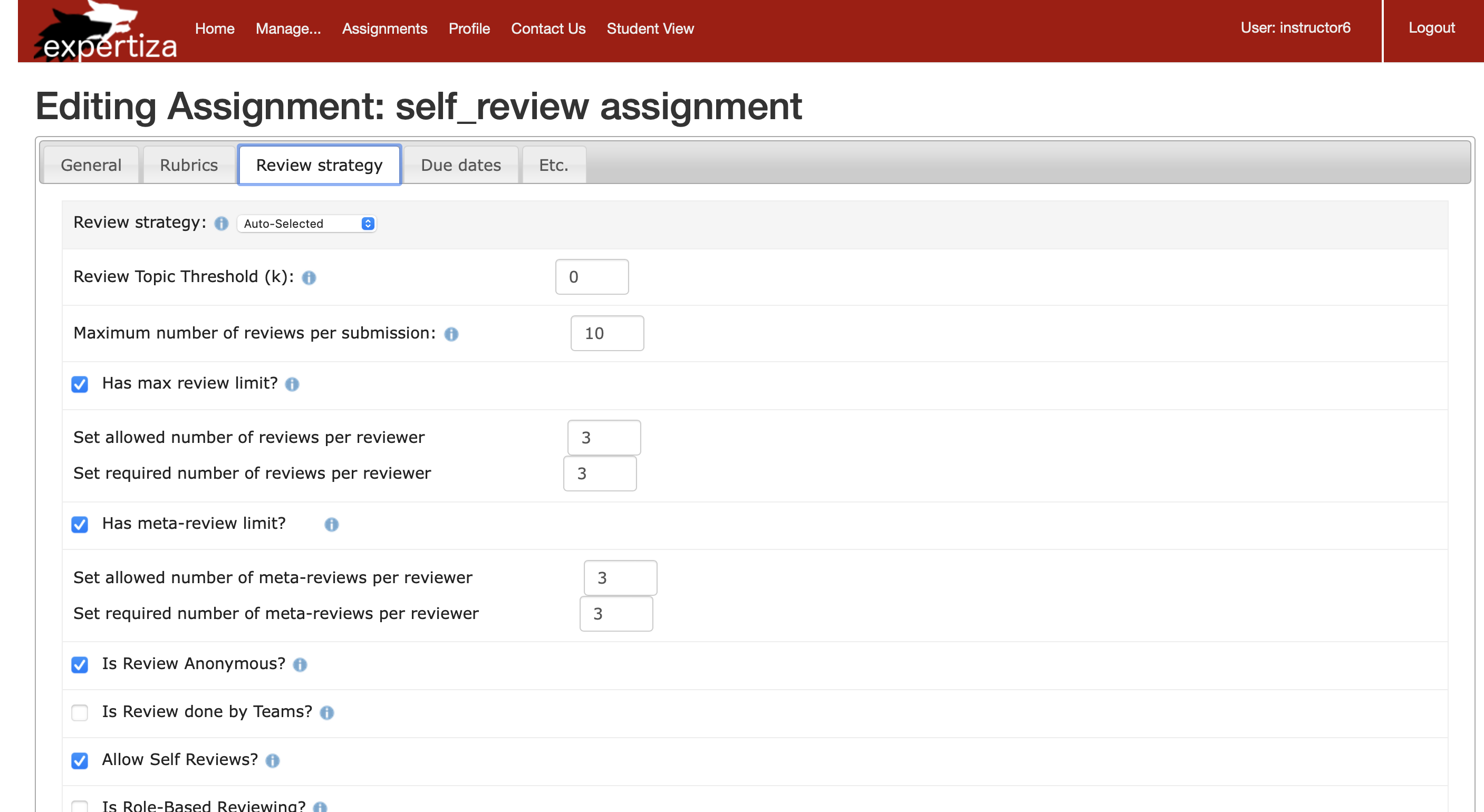

<p> We followed the same process for "self_review assignment", which gave student an option to self review his/her own work immediately after submitting their work. However, when the instructor tried assigning the user with his/her own work, same flash error message was displayed, not allowing the user to review their work.</p> | |||

< | <li> Checked allow self-review option in "self_review assignment" </li> | ||

[[File:Self-review-checked.png|600px]]<br/> | |||

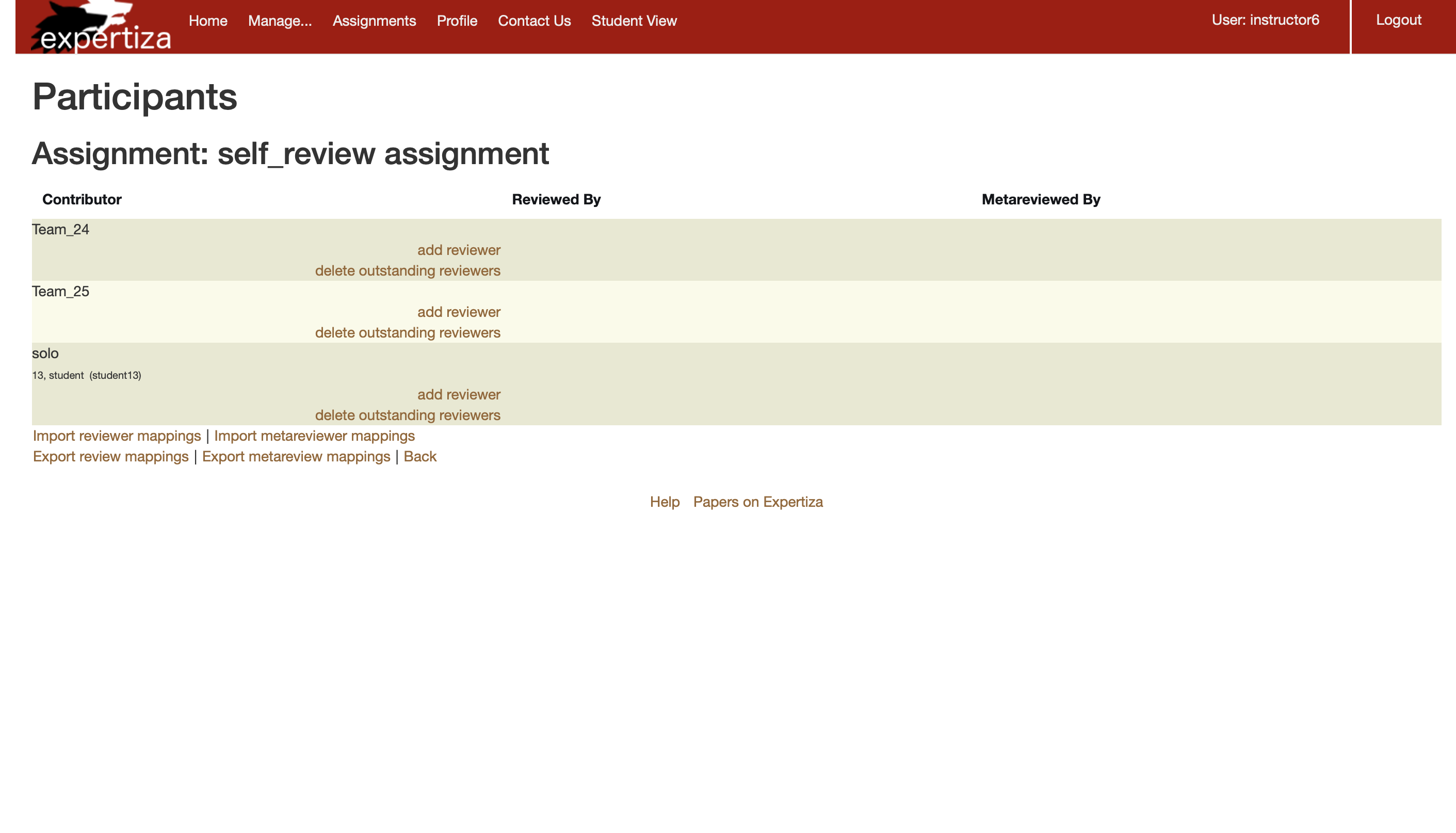

< | <li> Participants page of self review assignment</li> | ||

[[File:Participants_page_for_self-review_assignment.png|600px]]<br/> | |||

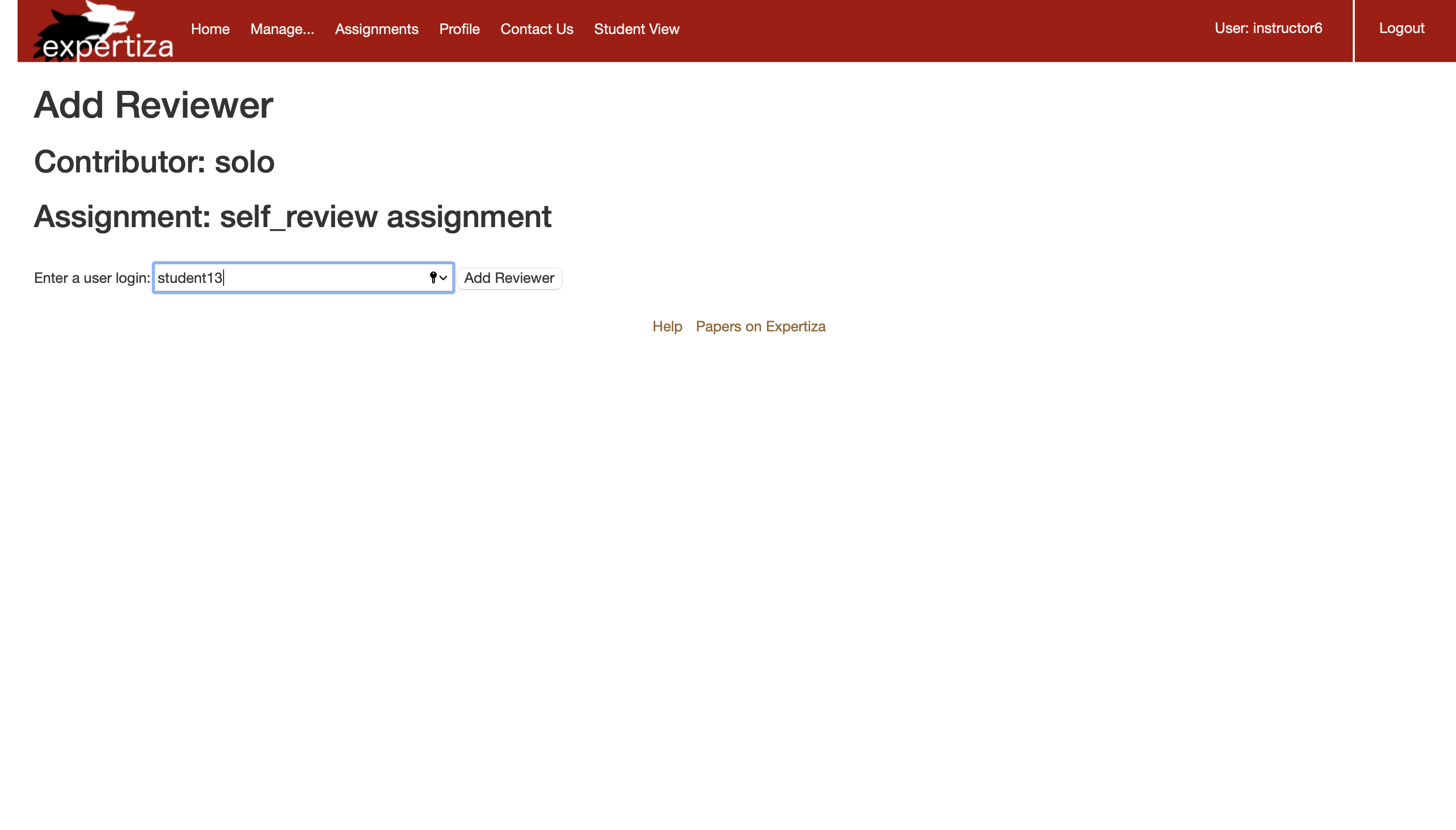

< | <li> Assigning student13 as reviewer for own work</li> | ||

[[File:Student-13-self-review.png|600px]]<br/> | |||

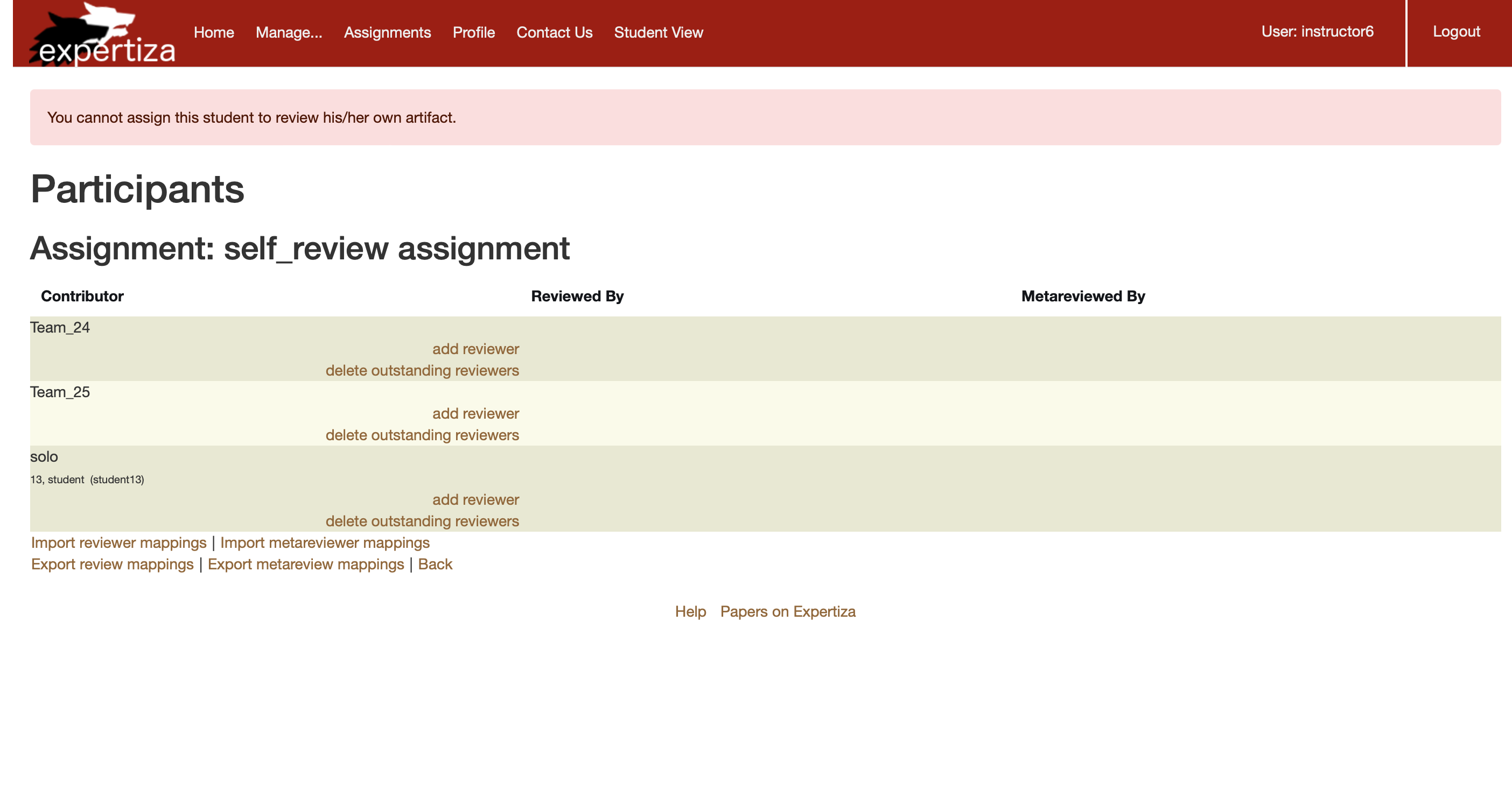

<li> Display of error message after assigning own artifact</li> | |||

[[File: Error-message-checked.png|600px]]<br/> | |||

</ | |||

<h4> Test case added</h4> | |||

<p> Previously implemented test cases of review_map controller have covered most of the tests pertaining to a user being assigned review work for his own artifact. These test cases primarily focused on the add_reviewer function of the review_mapping controller. However a test case checking for the display of flash error message on trying to assign reviewer to his/her own work was not covered, which we have added in the respective spec file. </p> | |||

<pre> | <pre> | ||

Scenario: | Scenario: Display of flash error message when instructor tries student their own artifact for reviewing | ||

Given | Given instructor has logged in | ||

When | When the instructor visits the assignment page and | ||

Then | When the instructor clicks on assign reviewer for an assignment and | ||

when the instructor tries to assign the owner of the artifact as the reviewer | |||

Then a flash error message is displayed saying "You cannot assign this student to review his/her own artifact." | |||

</pre> | </pre> | ||

<pre> | <pre> | ||

context 'when instructor tries to assign a student their own artifact for reviewing' do | |||

it 'flashes an error message' do | |||

allow(TeamsUser).to receive(:exists?).with(team_id: '1', user_id: 1).and_return(true) | |||

post :add_reviewer, @params | |||

expect(flash[:error]).to eq('You cannot assign this student to review his/her own artifact.') | |||

expect(response).to redirect_to '/review_mapping/list_mappings?id=1' | |||

end | |||

end | |||

</pre> | </pre> | ||

<h3> Problem 2 : Review comments from the first round not being displayed</h3> | |||

< | <h4> Problem comment </h4> | ||

<p> The Round 1 review (and the Round 1 heat grid) are missing when the instructor (instructor6) goes to Assign Grade for Student 9277. The Round 1 review should be in the first heat grid on the Assign Grades page. </p> | |||

</ | |||

<h4> | <h4> Issue Reproduction and Observations </h4> | ||

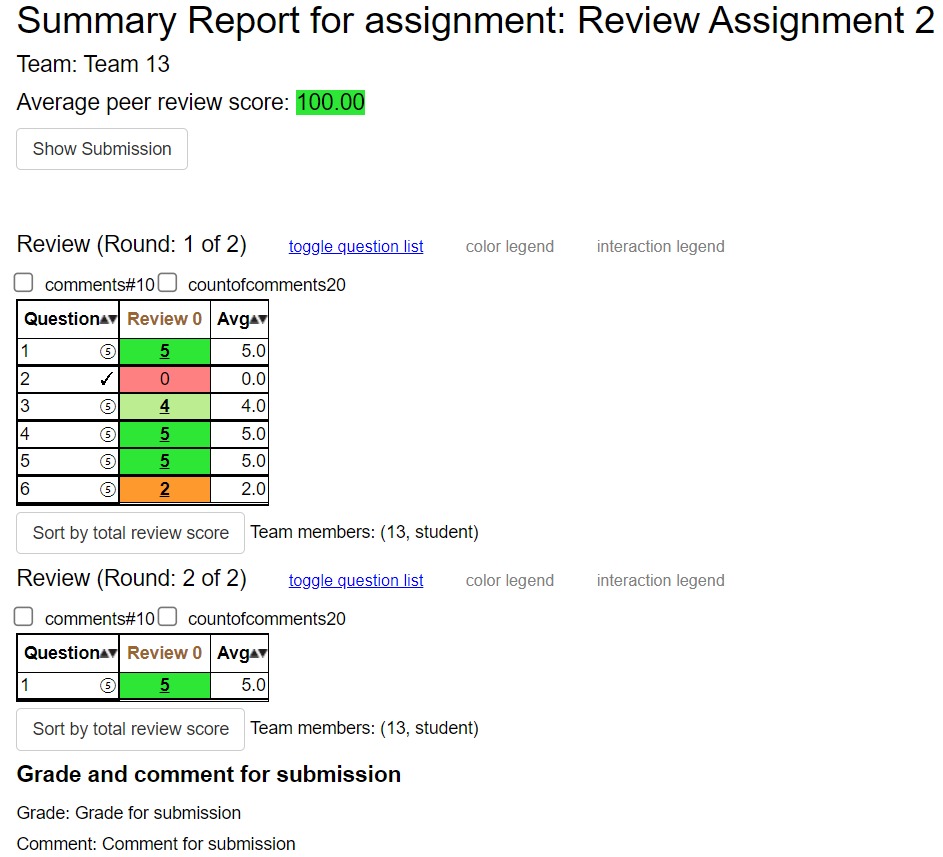

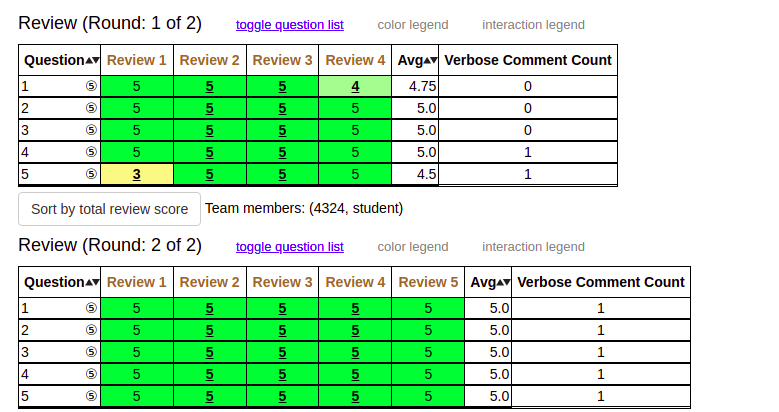

<p> For this problem, we have tried the similar thing as the first problem. We have observed that if the instructor checks the option "review rubric varies by round" when the instructor creates an assignment, reviews from all the rounds are displayed in the heat grid. When the instructor unchecks that option, only the most recent review set is being displayed. As this is dependent on the user preference, we identified this not to be a problem. </p> | |||

< | <ul> | ||

<li> Review rubric varies by round option, which is unchecked by default </li> | |||

[[File: Unchecked-rubric-varies.jpeg|600px]]<br/> | |||

</ | |||

< | <li> Display of only the reviews of latest round when "Review rubric varies by round" is unchecked</li> | ||

[[File:Latest_review_on_uncheck.jpeg|600px]]<br/> | |||

</ | |||

< | <li> Display of reviews of all the rounds when "Review rubric varies by round" is checked</li> | ||

[[File:All-round-display.jpeg|600px]]<br/> | |||

</ul> | |||

Important Links

- [Demo Video](https://youtu.be/K8rCxSZZOho/)

- [GitHub repository link](https://github.com/EshwarCVS/expertiza/)

- [Project Description Document](https://docs.google.com/document/d/1H0BjzMBz5it7Wckhegq4LXhMi8RVctTwVzP2x9gnFCo/edit#heading=h.tqdrrd12xs4x/)

- [GitHub Issue #1869](https://github.com/expertiza/expertiza/issues/1869/)

- [GitHub Issue #2019](https://github.com/expertiza/expertiza/issues/2019/)

Latest revision as of 04:46, 2 May 2022

This page documents E2241: Heatgrid fixes and improvements, a final project for CSC/ECE 517 Spring 2022.

Team

Mentor

- Nicholas Himes (nnhimes)

Team Members (PinkPatterns)

- Eshwar Chandra Vidhyasagar Thedla (ethedla)

- Gokul Krishna Koganti (gkogant)

- Jyothi Sumer Goud Maduru (jmaduru)

- Suneha Bose (sbose2)

Heat Grid

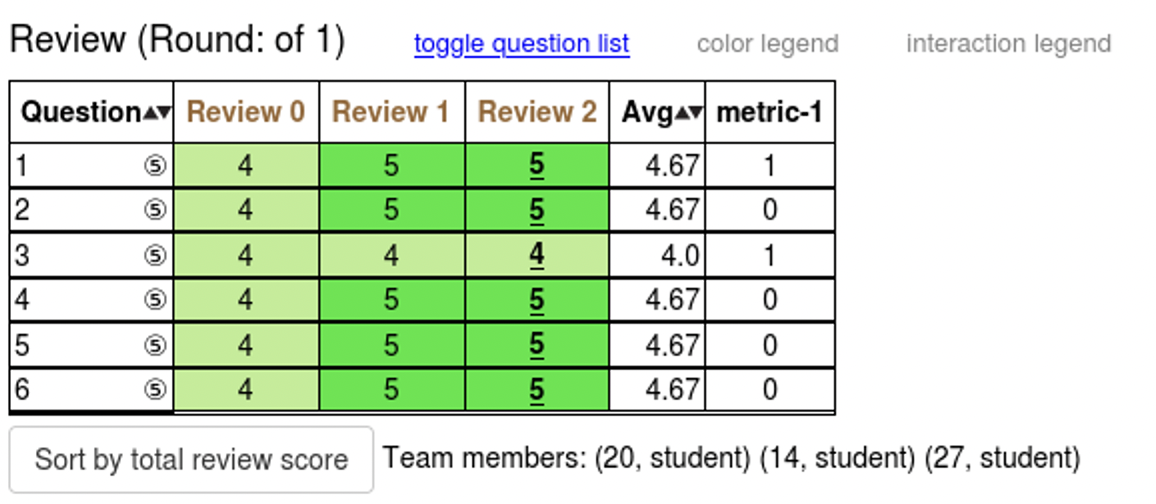

The heat grid is the part of an Expertiza view that shows the scores from all reviews done for a particular assignment, including the score each peer reviewer gave for each rubric item. Instructors use it to assign grades to individual assignments, and students use it to view the review scores of their own work. Our project scope covers fixing issues related to the heat grid and any bugs that arise as a result.

Issues identified

Issue #2019: Restrict teammates from reviewing their own work

In some instances, a student was shown as reviewing his/her own team even though the instructor had not checked the "Allow Self Review" checkbox. This situation should never arise. Comprehensive test cases must be added so that any recurrence of this bug is detected.

Issue #1869: Deal with the metrics of the heat grid

The "metric-1" column name in the heat grid does not follow a good naming convention: it does not explain what the column refers to. It must be renamed to something more descriptive.

Currently, the heat grid uses only one metric (metric-1). The system must make it easy to add new metrics, and instructors should be able to toggle between metrics according to their preferences, for example through a drop-down menu.

Design Principle

For Issue #1869, we plan to use the Factory design pattern, since we are dealing with the creation of multiple kinds of metrics. We plan to define a metric interface that different concrete metric classes implement. A factory class acts as a middleman between these implementations and the main class that needs metric objects. Based on the instructor's selection, the main class requests a metric object from the factory instead of handling the creation logic itself. This pattern enforces encapsulation and results in low coupling, which is the primary design goal of this project.
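The factory arrangement described above can be sketched in plain Ruby as follows. This is a minimal illustration, not project code. `Metric`, `VerboseCommentCount` and `MetricFactory` are hypothetical names. The 10-word threshold is an assumption loosely based on the "comments#10" column mentioned later on this page.

```ruby
# Hypothetical sketch of the Factory pattern described above.
# Metric, VerboseCommentCount and MetricFactory are illustrative names,
# not classes that exist in Expertiza.

# Base class playing the role of the metric "interface".
class Metric
  def name
    raise NotImplementedError, "#{self.class} must implement #name"
  end

  def compute(_comments)
    raise NotImplementedError, "#{self.class} must implement #compute"
  end
end

# Concrete metric: counts comments with more than word_threshold words.
# The default threshold of 10 words is an assumption.
class VerboseCommentCount < Metric
  def initialize(word_threshold = 10)
    @word_threshold = word_threshold
  end

  def name
    'verbose comment count'
  end

  def compute(comments)
    comments.count { |comment| comment.split.size > @word_threshold }
  end
end

# The factory maps a metric key to a concrete class. Callers ask for a metric
# by key and never instantiate concrete metric classes themselves.
class MetricFactory
  REGISTRY = {
    verbose_comment_count: VerboseCommentCount
  }.freeze

  def self.create(key)
    klass = REGISTRY.fetch(key) { raise ArgumentError, "unknown metric: #{key}" }
    klass.new
  end
end
```

With this shape, adding a metric means writing one subclass and one `REGISTRY` entry. The code that calls `MetricFactory.create` does not change.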

A previous team addressed issue #1869, but their implementation produces highly coupled code. Whenever a new metric is added, two files (assignments/edit/_general.html.erb and grades/view_team.html.erb) must be changed. As a consequence, the tests for this tightly coupled code must also be extended every time a new metric is added, which is undesirable. Low coupling is therefore the main focus of our implementation. It enables a common set of tests that remains valid for any number of metrics added in the future.
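The "common set of tests" idea can be illustrated with a single metric-agnostic check that iterates over whatever metrics are registered. This is a hypothetical plain-Ruby sketch. The `METRICS` hash, its two entries, and the non-negative-integer rule are assumptions for illustration only.

```ruby
# Hypothetical sketch: one metric-agnostic check that covers every registered
# metric, so adding a metric does not require writing new tests.
# METRICS and its entries are illustrative assumptions.
METRICS = {
  'verbose comment count' => ->(comments) { comments.count { |c| c.split.size > 10 } },
  'comment count' => ->(comments) { comments.size }
}.freeze

# Sample inputs every metric must handle, including an empty list.
SAMPLES = [[], ['ok'], ['one two three four five six seven eight nine ten eleven']].freeze

# Assumed contract: every metric returns a non-negative Integer.
def check_all_metrics(metrics, samples)
  metrics.each do |name, metric|
    samples.each do |comments|
      value = metric.call(comments)
      unless value.is_a?(Integer) && value >= 0
        raise "#{name} returned #{value.inspect} for #{comments.inspect}"
      end
    end
  end
  true
end
```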

Files to be targeted

Issue #2019

Test file in which test cases for the self-review checks are added

- spec/controllers/grades_controller_spec.rb

Issue #1869

For Heat grid view

- app/views/grades/_view_heatgrid.html.erb

- app/views/grades/view_team.html.erb

- app/helpers/grades_helper.rb

- app/controllers/grades_controller.rb

- app/models/vm_question_response.rb

- app/models/vm_question_row.rb

- assets/view_team_in_grades.js

For Assignment view

- app/views/assignment/edit/_general.html.erb

- app/controllers/assignments_controller.rb

Implementation of Issue 1869

Previous team’s implementation

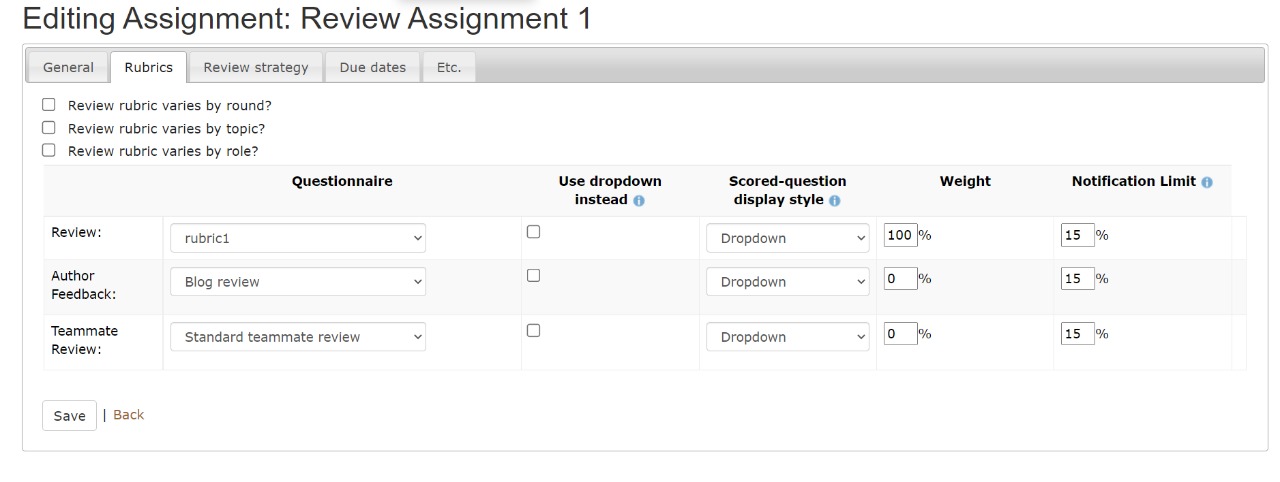

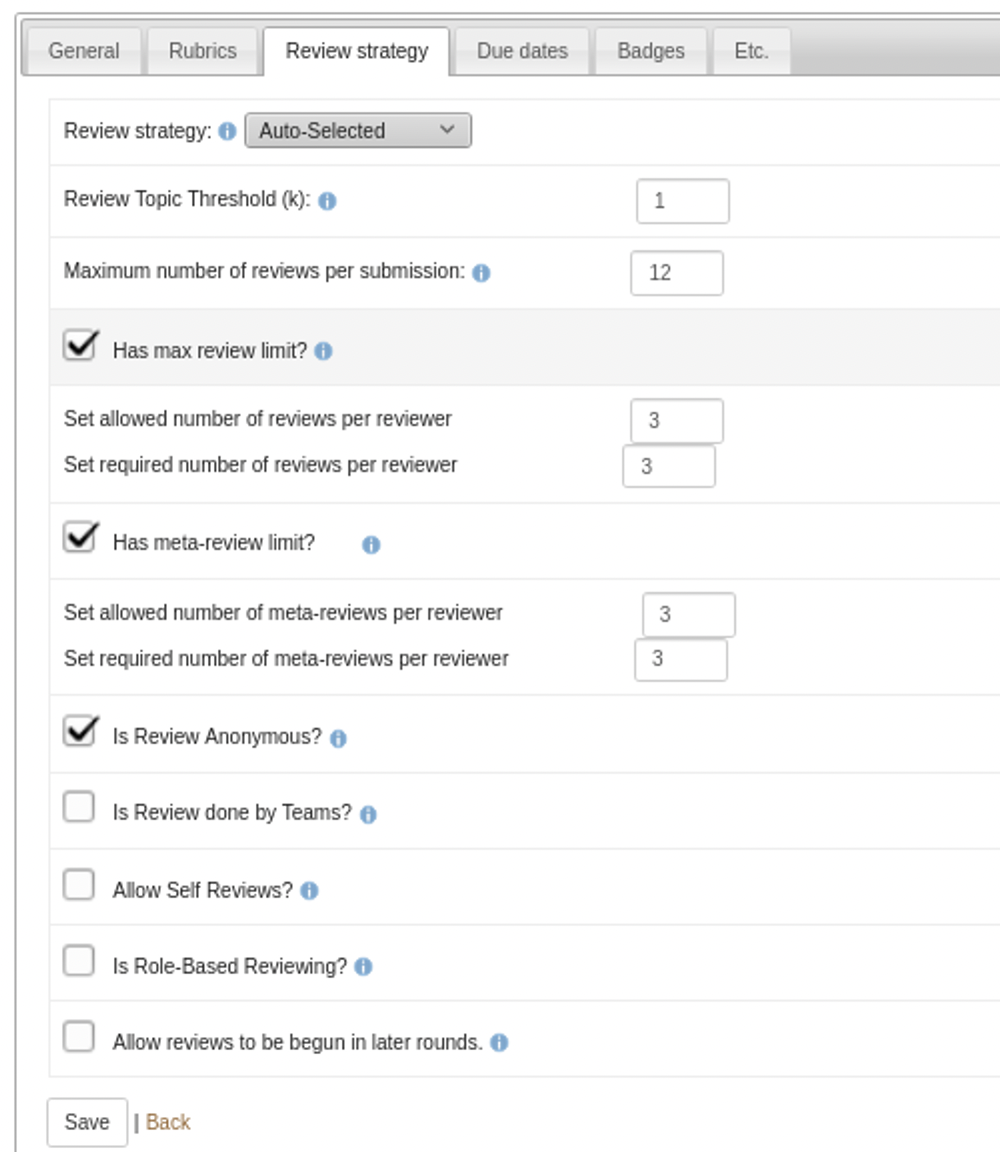

The previous team addressed the first part of the issue by renaming “metric-1” to “verbose comment count”, which describes what metric-1 measures. For the second part, they added a metric drop-down from which the instructor selects a metric while creating the assignment. As a result, only one metric is displayed on the heat grid, and they added a toggle to show or hide it.

Changes reflected in the UI

Issue with previous team's implementation

The critical problem with their changes is that adding a new metric forces changes in two view files: one change in assignments/edit/_general.html.erb and two changes in grades/view_team.html.erb. Requiring edits to view files that have little to do with the heat grid causes high coupling.

Current implementation

Issue Goal

Our mentor asked us to display the metrics in the heat grid with a toggle, so that users can select only the metrics they want to see. This must be done with low coupling as the primary design goal. It should be accompanied by minor changes such as adding comments and renaming “metric-1” to a self-explanatory name.

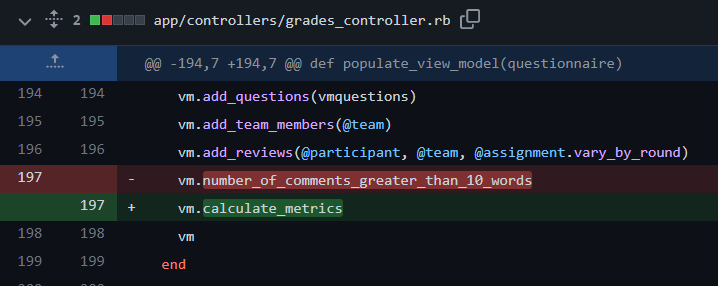

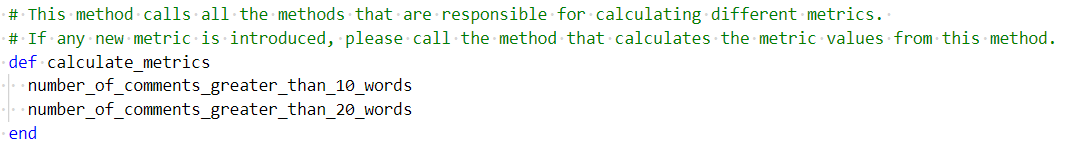

Implementation details

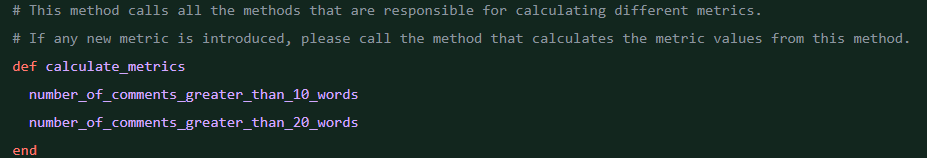

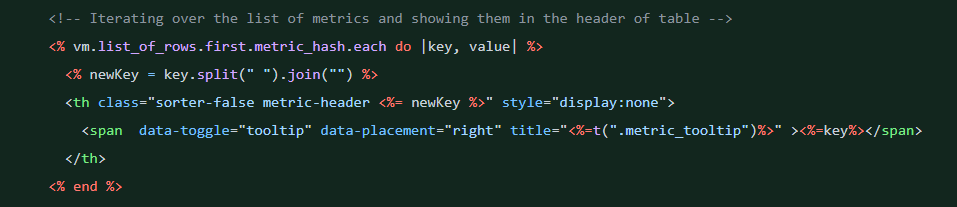

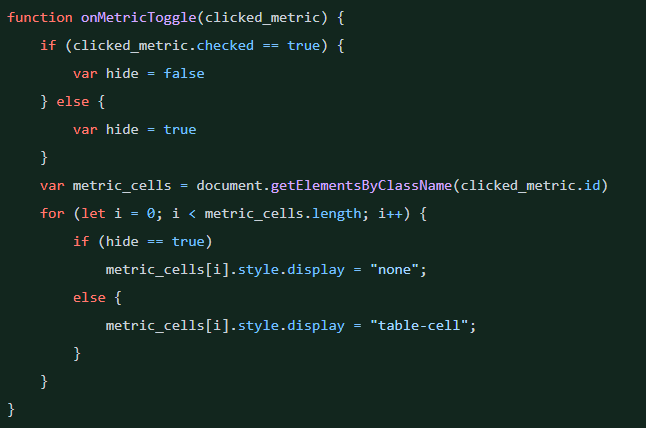

In the original beta implementation, loading the relevant view calls the method "populate_view_model" in "grades_controller.rb". This method explicitly calls another model method to calculate the metric, so every new metric requires an additional call in populate_view_model. This is not ideal for two reasons: each new metric adds a line of code to the controller body, and the set of available metrics cannot be determined dynamically. To solve this, we introduced a new parent method, "calculate_metrics", in the model file "vm_question_response.rb". This method calls the individual methods that calculate each metric, and a single call to it replaces the explicit metric calculations in the controller. This breaks the dependency between the controller and the introduction of new metrics. When a new metric is added, only the relevant model file needs to change, not the controller.
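The refactoring above can be sketched in plain Ruby. Here `VmQuestionResponseSketch` is a hypothetical stand-in for the real model, and `populate_view_model` is heavily simplified. The metric method name and its "more than 10 words" rule are assumptions modeled on the "comments#10" column, not the actual Expertiza code.

```ruby
# Hypothetical stand-in for VmQuestionResponse; the real model's attributes
# and method bodies differ.
class VmQuestionResponseSketch
  attr_reader :metrics

  def initialize(comments)
    @comments = comments
    @metrics = {} # metric name => value, read by the view
  end

  # Parent method: the only metric-related call the controller makes.
  def calculate_metrics
    number_of_comments_greater_than_10_words
  end

  private

  # Individual metric (assumed rule: comments with more than 10 words).
  def number_of_comments_greater_than_10_words
    @metrics['comments#10'] = @comments.count { |c| c.split.size > 10 }
  end
end

# Simplified controller side: it no longer names individual metrics.
def populate_view_model(comments)
  vm = VmQuestionResponseSketch.new(comments)
  vm.calculate_metrics
  vm
end
```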

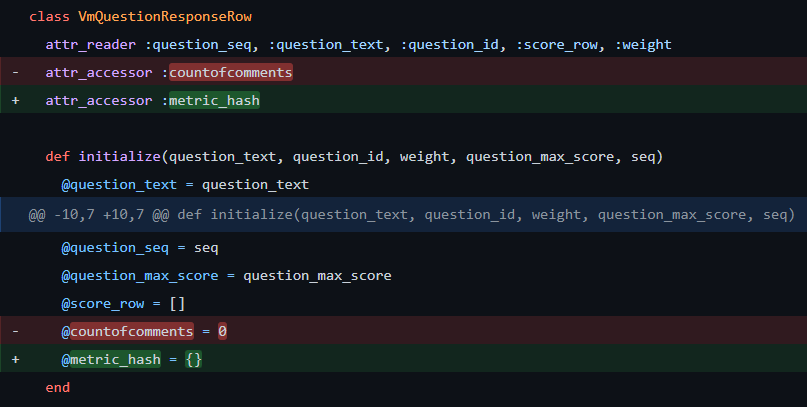

A new hash stores the metric values as key-value pairs (metric name to value). Instead of naming each metric explicitly in the view file, the view iterates over this hash to display the metrics.
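Iterating over the hash instead of naming metrics can be illustrated with Ruby's standard ERB library. This is a minimal sketch. The markup, the `metric` CSS class, and the `data-metric` attribute (a possible hook for the JavaScript toggle) are assumptions, not the contents of the real heat grid view.

```ruby
require 'erb'

# Hypothetical templates: neither one names a specific metric, so adding a
# metric to the hash needs no template change.
HEADER_TEMPLATE = ERB.new(
  '<% metrics.each_key do |name| %>' \
  '<th class="metric" data-metric="<%= ERB::Util.html_escape(name) %>"><%= ERB::Util.html_escape(name) %></th>' \
  '<% end %>'
)

CELL_TEMPLATE = ERB.new(
  '<% metrics.each do |name, value| %>' \
  '<td class="metric" data-metric="<%= ERB::Util.html_escape(name) %>"><%= value %></td>' \
  '<% end %>'
)

def render_metric_header(metrics)
  HEADER_TEMPLATE.result_with_hash(metrics: metrics)
end

def render_metric_cells(metrics)
  CELL_TEMPLATE.result_with_hash(metrics: metrics)
end
```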

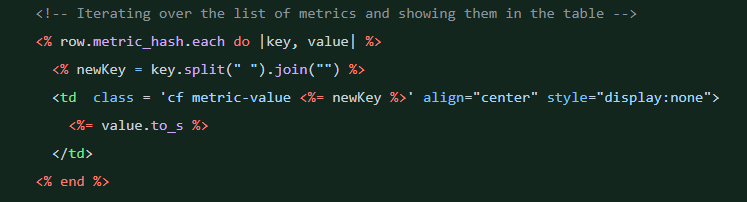

The toggle feature is implemented in "view_team_in_grades.js". When a metric checkbox is clicked, a function in this JavaScript file is called and shows or hides the corresponding elements.

Adding a new metric in future

To introduce a new metric, add a new method to "vm_question_response.rb" and call it from the "calculate_metrics" method.
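The two steps above can be sketched with a hypothetical second metric. The "average comment length" metric and the `VmQuestionResponseSketch` class are invented purely for illustration. The point is that only the model changes: one new method, plus one call in `calculate_metrics`.

```ruby
# Hypothetical stand-in for the model after adding a second metric.
class VmQuestionResponseSketch
  attr_reader :metrics

  def initialize(comments)
    @comments = comments
    @metrics = {}
  end

  def calculate_metrics
    number_of_comments_greater_than_10_words
    average_comment_length # step 2: call the new metric method here
  end

  private

  def number_of_comments_greater_than_10_words
    @metrics['comments#10'] = @comments.count { |c| c.split.size > 10 }
  end

  # Step 1: the new metric method (hypothetical), average words per comment.
  def average_comment_length
    words = @comments.sum { |c| c.split.size }
    @metrics['avg words'] = @comments.empty? ? 0 : (words.to_f / @comments.size).round(1)
  end
end
```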

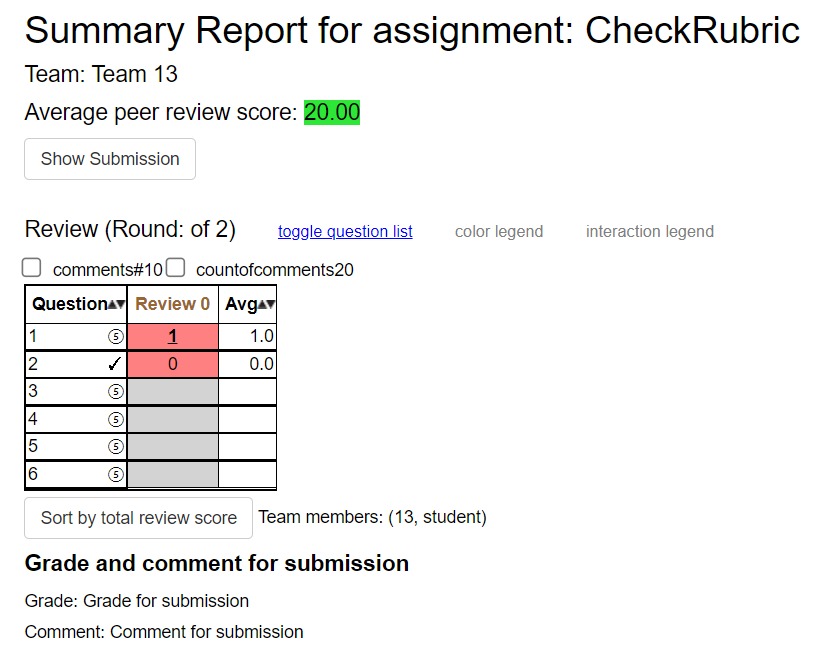

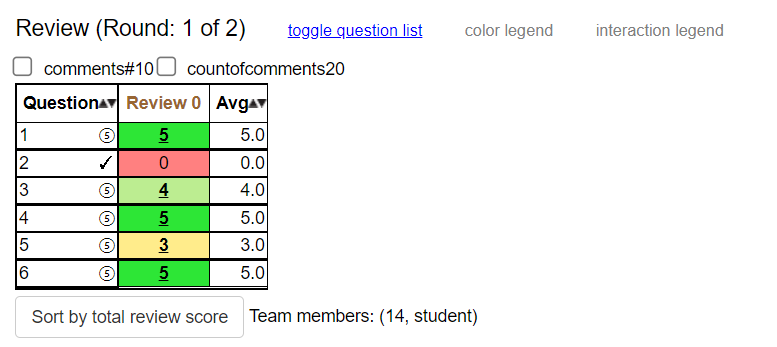

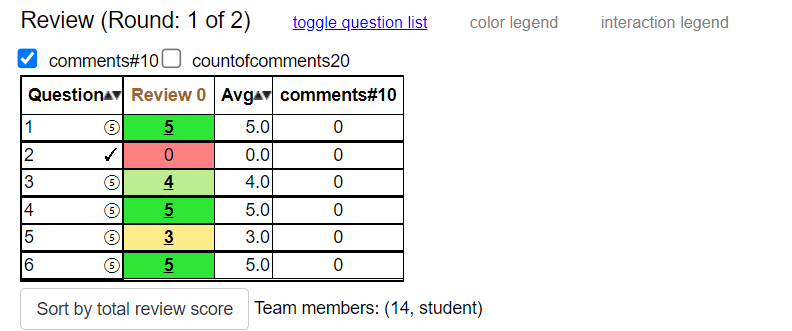

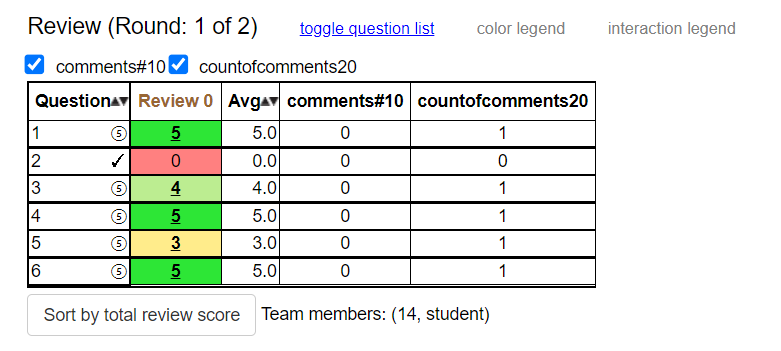

Changes reflected in the UI

Manual Testing

```gherkin
Scenario: Visibility of all the unchecked metric checkboxes in View Scores view
  Given user has logged in
  When user visits View Scores page
  Then all the unchecked metric checkboxes are present above the heat grid

Scenario: Default invisibility of metric columns in View Scores view
  Given user has logged in
  When user visits View Scores page
  Then metric columns are not visible in the heat grid

Scenario: Checking a metric checkbox displays the corresponding metric column
  Given user has logged in
  When user visits View Scores page
  And user clicks on the unchecked comments#10 metric checkbox
  Then the column named "comments#10" is displayed with its values

Scenario: Unchecking a metric checkbox hides the corresponding metric column
  Given user has logged in
  When user visits View Scores page
  And user clicks on the checked comments#10 metric checkbox
  Then the column named "comments#10" and its values are hidden
```

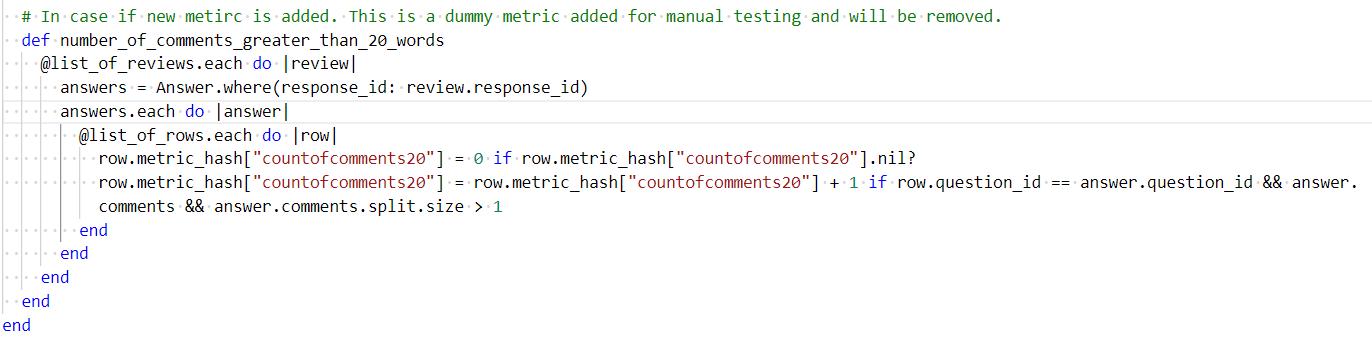

Unit Testing

Our project does not add new metrics; it only makes it possible to add them with low coupling. Therefore, no new unit tests for metric implementations are needed. The system currently has only one metric, and test cases for it already exist.

Implementation of Issue 2019

This issue covers several problems, such as review reports not displaying correctly and a user being able to review his/her own work. Each problem was handled individually. The main problems, together with our observations and corrections, are described in this section.

Problem 1: A user being able to review his/her own work

Problem comment

On Madeup Problem 3, assignment 1055, there are several anomalies in the review report. User 9277 reviewed Team_2323 and gave this comment: "This question seems a little suspicious. It is nearly identical to Question 4a on page 327 in the 2016 edition book. The only thing change was variable name A to B and value 2 to 3." However, according to the View Submissions page, User 9277 is the only member of Team_2323.

Issue Reproduction and Observations

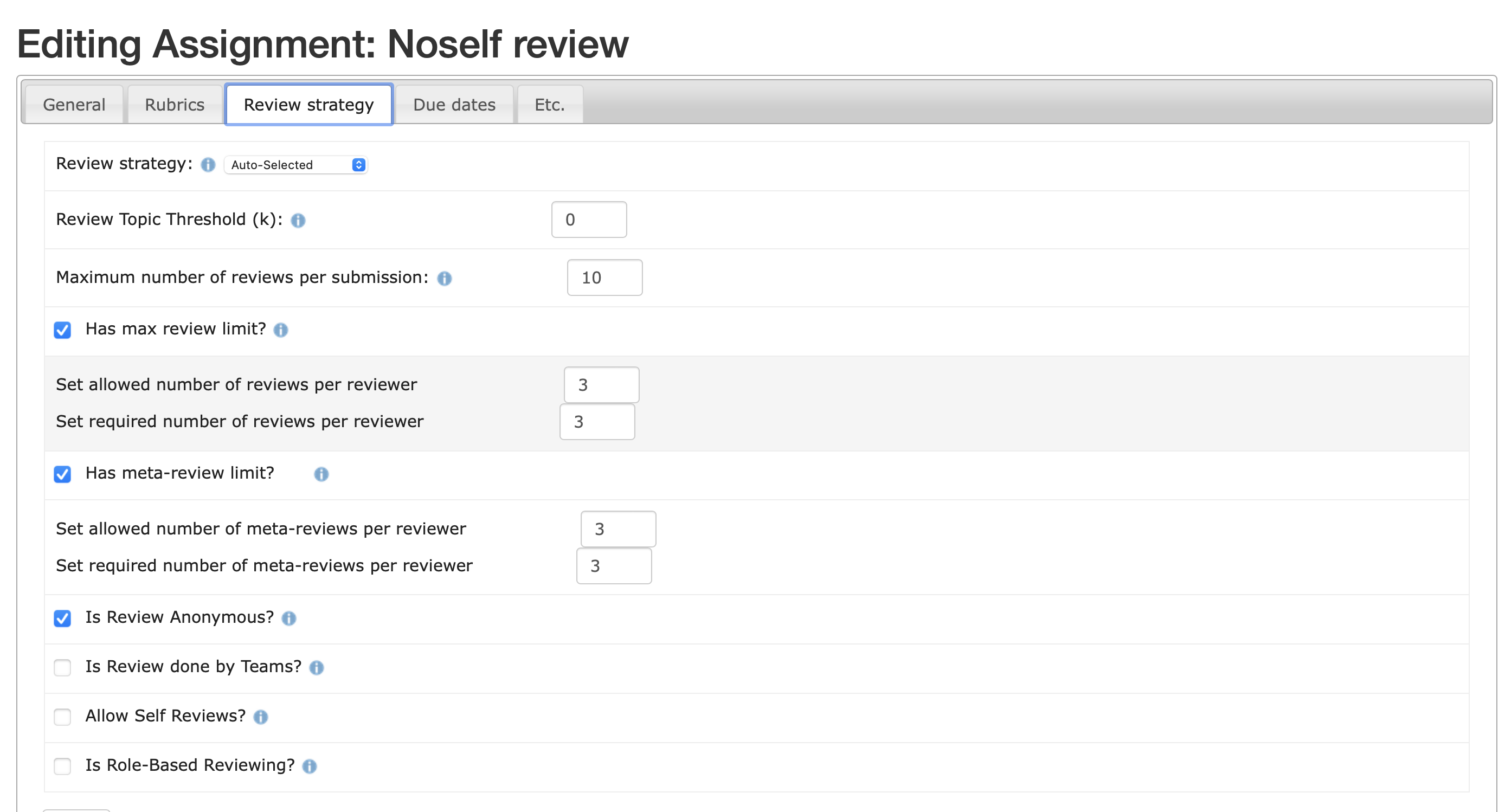

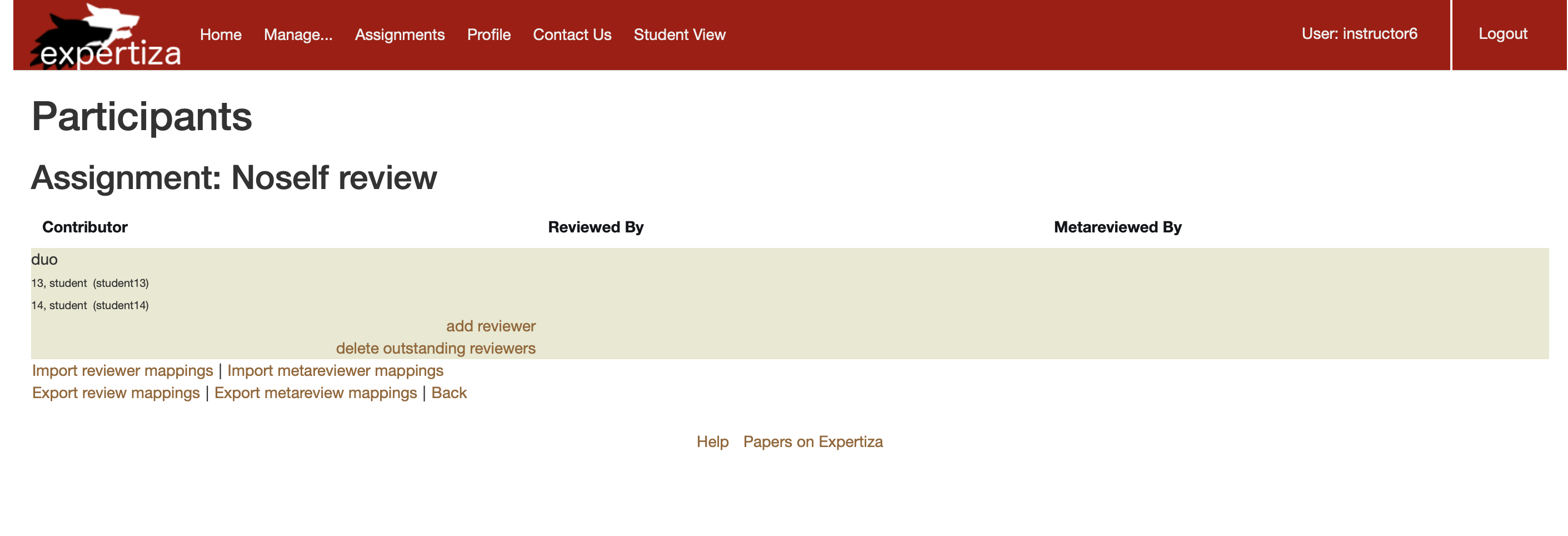

We tried to reproduce the issue as described in the issue comment, but could not find Madeup Problem 3, assignment 1055. We therefore created two assignments with different settings to check whether an instructor can assign a reviewer his/her own work.

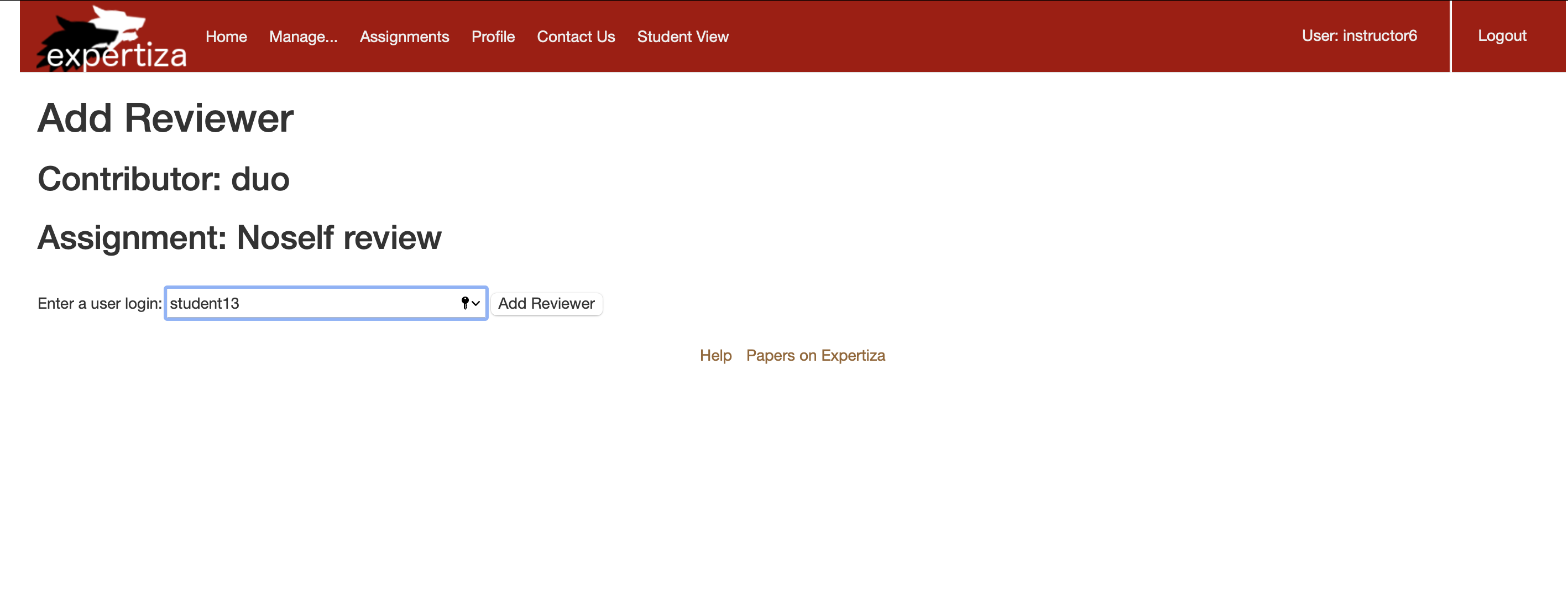

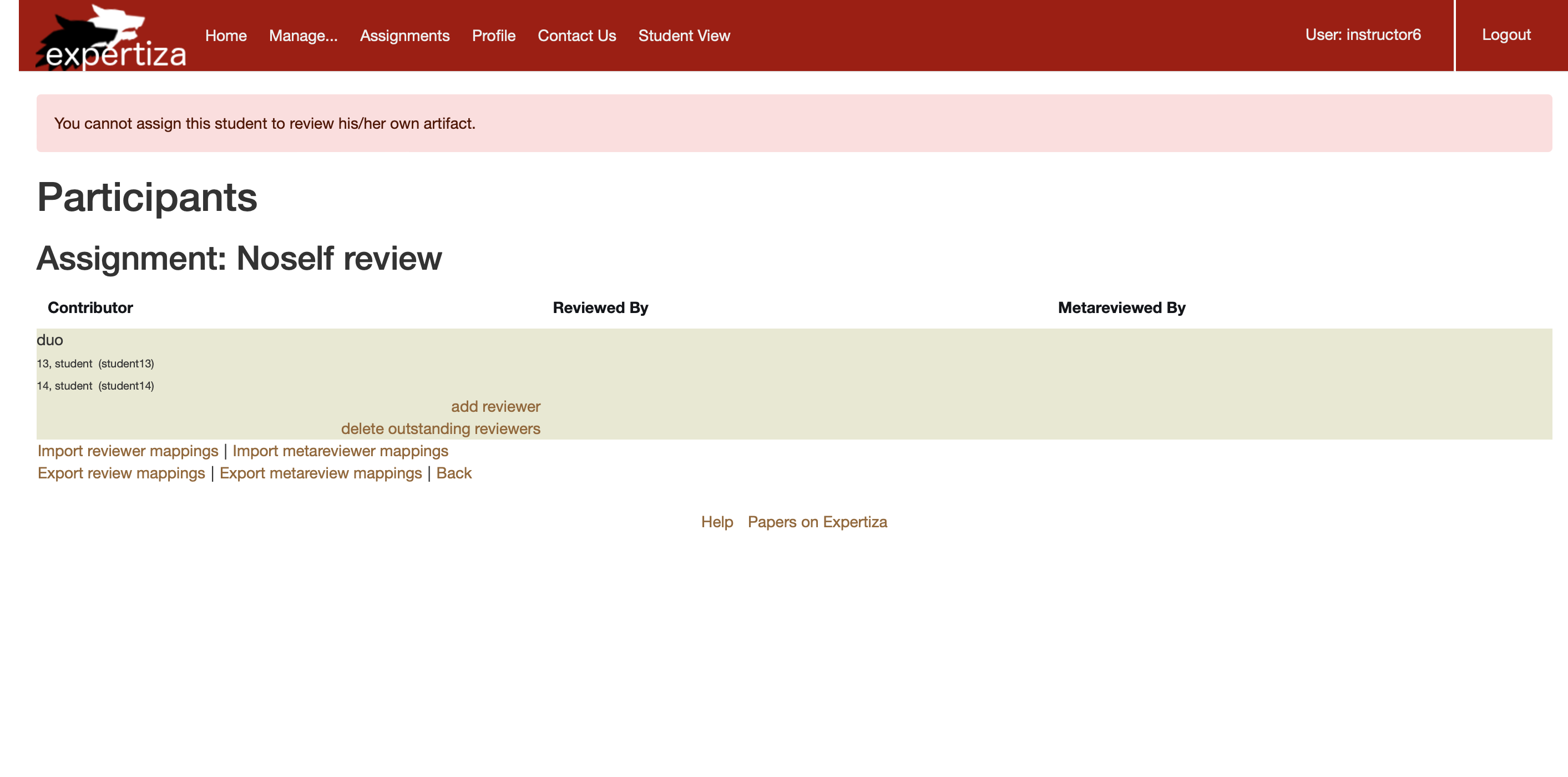

We created an assignment, "Noself review", with the "Allow self-review" option unchecked. From the instructor page, we created a team with student13 and student14 as teammates. We then logged in as student14 and submitted a hyperlink for the assignment. Next, from the instructor page, we clicked the assign reviewer button and tried to assign student13 as the reviewer of that work, and then tried the same with student14. In both cases, the assignment failed with the flash error message "You cannot assign this student to review his/her own artifact."

- Unchecked allow self-review option in "Noself review" assignment

- Participants page containing add reviewer button

- Adding student13 as reviewer for his/her own work

- Flash error message displayed

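We followed the same process for "self_review assignment", which lets students review their own work immediately after submitting it. However, when the instructor tried to assign a student his/her own work, the same flash error message was displayed and the assignment was rejected.

- Checked allow self-review option in "self_review assignment"

- Participants page of self review assignment

- Assigning student13 as reviewer for own work

- Display of error message after assigning own artifact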
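The behavior observed above is consistent with a membership check like the one the spec below stubs (`TeamsUser.exists?(team_id:, user_id:)`). The following is a hypothetical, framework-free model of that check, not Expertiza's controller code. `TeamMembership` stands in for the teams_users table, and `add_reviewer` here is an illustrative function only.

```ruby
# Hypothetical stand-in for the teams_users join table.
TeamMembership = Struct.new(:team_id, :user_id)

SELF_REVIEW_ERROR = 'You cannot assign this student to review his/her own artifact.'.freeze

# Returns the error message if the reviewer belongs to the reviewee team;
# otherwise records the review mapping and returns nil.
def add_reviewer(memberships, mappings, team_id:, reviewer_id:)
  own_team = memberships.any? { |m| m.team_id == team_id && m.user_id == reviewer_id }
  return SELF_REVIEW_ERROR if own_team

  mappings << { reviewee_team_id: team_id, reviewer_id: reviewer_id }
  nil
end
```

Using the team from our reproduction (student13 and student14 on one team), both assignment attempts return the error, and only a reviewer from outside the team is mapped.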

Test case added

Existing test cases for the review_mapping controller already cover most scenarios in which a user is assigned to review his/her own artifact. These tests primarily focus on the add_reviewer method of the review_mapping controller. However, no test checked that a flash error message is displayed when an instructor tries to assign a reviewer his/her own work, so we added one to the corresponding spec file.

```gherkin
Scenario: Display of flash error message when the instructor tries to assign a student their own artifact for review
  Given instructor has logged in
  When the instructor visits the assignment page
  And the instructor clicks on assign reviewer for an assignment
  And the instructor tries to assign the owner of the artifact as the reviewer
  Then a flash error message is displayed saying "You cannot assign this student to review his/her own artifact."
```

```ruby
# Runs inside the review_mapping controller spec; @params is built in an enclosing before block.
context 'when instructor tries to assign a student their own artifact for reviewing' do
  it 'flashes an error message' do
    # Make the reviewer appear to be a member of the reviewee team (team 1)
    allow(TeamsUser).to receive(:exists?).with(team_id: '1', user_id: 1).and_return(true)
    post :add_reviewer, @params
    expect(flash[:error]).to eq('You cannot assign this student to review his/her own artifact.')
    expect(response).to redirect_to '/review_mapping/list_mappings?id=1'
  end
end
```

Problem 2: Review comments from the first round not being displayed

Problem comment

The Round 1 review (and the Round 1 heat grid) are missing when the instructor (instructor6) goes to Assign Grade for Student 9277. The Round 1 review should be in the first heat grid on the Assign Grades page.

Issue Reproduction and Observations

For this problem, we followed a similar approach to the first problem. We observed that if the instructor checks the "Review rubric varies by round" option when creating an assignment, reviews from all rounds are displayed in the heat grid. If the option is unchecked, only the most recent round of reviews is displayed. Because this behavior depends on a setting the instructor chooses, we concluded that it is not a bug.
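The observed rule can be summarized with a toy model. This is a hypothetical sketch that reproduces what we saw in the UI, not Expertiza's implementation. `Review` and `rounds_shown` are illustrative names.

```ruby
# Toy model of the observed behavior: which review rounds appear in the
# heat grid, depending on the "Review rubric varies by round" setting.
Review = Struct.new(:round, :reviewer)

def rounds_shown(reviews, rubric_varies_by_round:)
  rounds = reviews.map(&:round)
  # Checked: every round that has reviews is shown.
  return rounds.uniq.sort if rubric_varies_by_round

  # Unchecked: only the most recent round is shown.
  latest = rounds.max
  latest ? [latest] : []
end
```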

- Review rubric varies by round option, which is unchecked by default

- Display of only the reviews of latest round when "Review rubric varies by round" is unchecked

- Display of reviews of all the rounds when "Review rubric varies by round" is checked