E1868 remove reports from review mapping controller


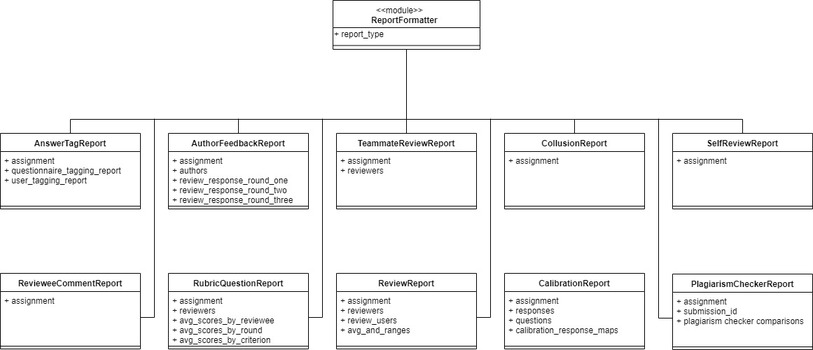


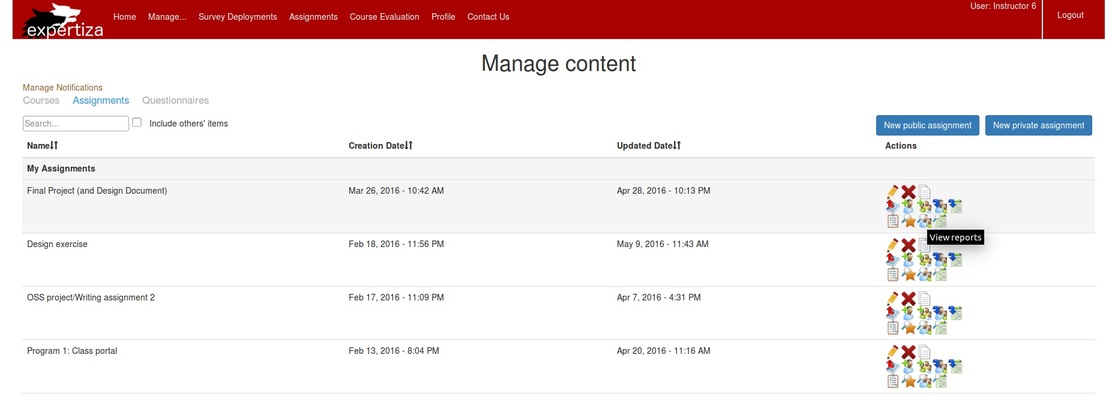


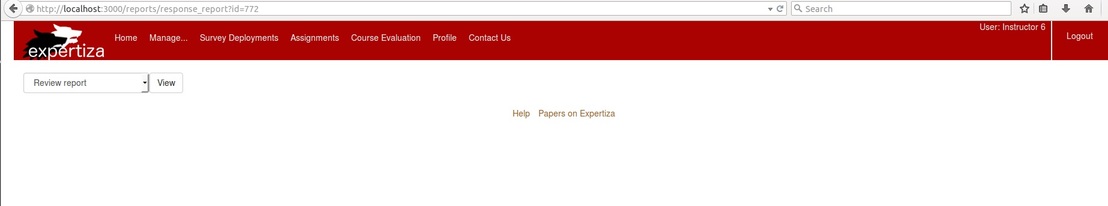


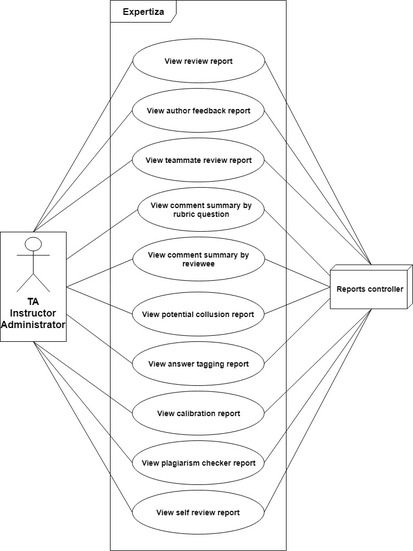


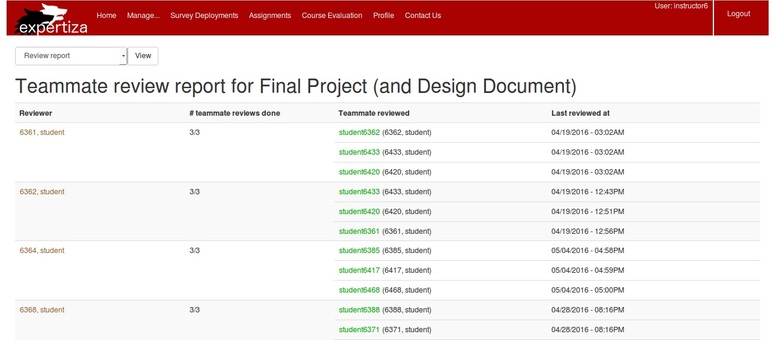


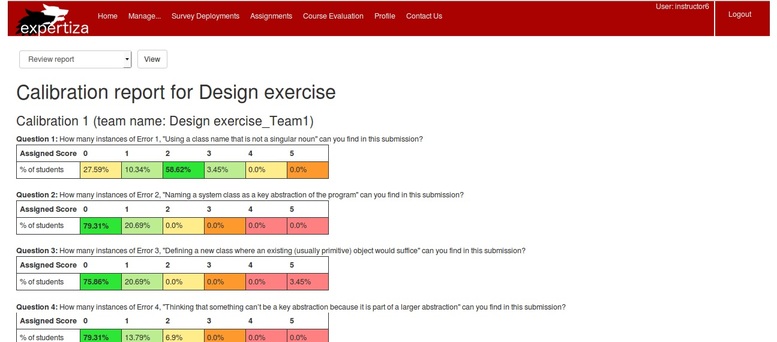


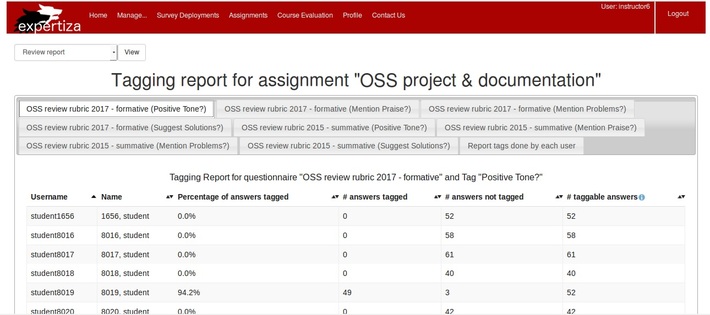


Latest revision as of 03:11, 3 January 2019

Background

review_mapping_controller is the largest controller in Expertiza, with 614 lines of code. Its basic purpose is to assign reviewers to review artefacts or submissions by other participants or teams. However, the controller also contains a large amount of code for generating and rendering several reports for instructors. As part of project E1837[1], the review_mapping_controller.rb file was significantly modified to improve maintainability, code quality and readability. In summary, the report generation functionality was separated from review_mapping_controller into a new helper named report_formatter_helper. This helper contains the logic for rendering the different reports an instructor requests, thus separating the controller-specific code from the report rendering code.

The refactoring in E1837 was one of the primary design improvements toward making reports scalable. However, because the reports are still served through review_mapping_controller, the design conflicts with the single responsibility principle of object-oriented design. The aim of this project is to extract the report_formatter_helper code into a new reports_controller.

Goals

- Separation of concerns

- Generalize code to render reports

- Modify the UI to list reports

- Test the newly introduced reports_controller

Separation of Concerns

Reports and reviews are two distinct functionalities that should not be combined. However, the existing design of review_mapping_controller handles both of the following:

1. Managing the various reviewers

2. Displaying and managing the different reports

Handling two different functionalities in one controller violates the single responsibility principle[2]. This project aims to separate these functionalities into the following two controllers:

1. review_mapping_controller, to manage reviewers

2. reports_controller, to handle reports
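The two-controller split above can be illustrated with a minimal, framework-free Ruby sketch. It assumes hypothetical stand-ins: `ReviewMapStore` plays the role of a review mapping model, and the method names `assign_reviewer` and `response_report` are illustrative rather than Expertiza's actual actions. Both controllers share the model, but neither controller calls the other.

```ruby
# Hypothetical in-memory stand-in for a review mapping model.
class ReviewMapStore
  def initialize
    @rows = []
  end

  # Records that `reviewer` reviews `reviewee` for an assignment.
  def add(assignment_id, reviewer, reviewee)
    @rows << { assignment_id: assignment_id, reviewer: reviewer, reviewee: reviewee }
  end

  # Returns all mappings for one assignment, in insertion order.
  def for_assignment(assignment_id)
    @rows.select { |row| row[:assignment_id] == assignment_id }
  end
end

# Responsibility 1: managing reviewers (writes review mappings).
class ReviewMappingController
  def initialize(store)
    @store = store
  end

  def assign_reviewer(assignment_id, reviewer, reviewee)
    @store.add(assignment_id, reviewer, reviewee)
  end
end

# Responsibility 2: displaying reports (only reads review mappings).
class ReportsController
  def initialize(store)
    @store = store
  end

  def response_report(assignment_id)
    @store.for_assignment(assignment_id).map { |row| "#{row[:reviewer]} -> #{row[:reviewee]}" }
  end
end

store = ReviewMapStore.new
mapping = ReviewMappingController.new(store)
mapping.assign_reviewer(1, "alice", "team_a")
mapping.assign_reviewer(1, "bob", "team_b")
mapping.assign_reviewer(2, "carol", "team_c")

reports = ReportsController.new(store)
puts reports.response_report(1)
# prints:
# alice -> team_a
# bob -> team_b
```

Because ReportsController only reads from the shared model, a new report can be added without touching the reviewer-management code.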

Generalize code to display reports

The reports provide functionality such as presenting reviews for grading, showing author feedback (rejoinders), showing a table of all teammate reviews, showing a calibration report for an assignment in which students rate work that an instructor has already rated, and showing how many answers students have tagged.

Every report boils down to one idea: looping through either participants or teams. This loop can be generalized so that the layout (headers, footers, etc.) stays consistent across reports while each report still delivers its own content.
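A minimal sketch of the generalized loop, assuming a hypothetical `render_report` method that owns the shared layout (header, column row, footer) while a block supplies each report's row content. `Participant` and `Team` here are simple Structs, not the Expertiza models, and plain text stands in for the real view templates.

```ruby
# Hypothetical data holders; the real reports use Expertiza models.
Participant = Struct.new(:name, :reviews_done)
Team = Struct.new(:name, :average_score)

# Shared layout for every report: header, column row, one row per item, footer.
# `items` may be participants or teams; the block returns the cells of one row.
def render_report(title, columns, items)
  lines = ["== #{title} ==", columns.join(" | ")]
  items.each { |item| lines << yield(item).join(" | ") }
  lines << "#{items.size} row(s)"
  lines.join("\n")
end

participants = [Participant.new("alice", 3), Participant.new("bob", 1)]
teams = [Team.new("team_a", 87.5)]

# Two different reports reuse the same layout and differ only in their rows.
puts render_report("Review report", %w[Reviewer Reviews], participants) { |part| [part.name, part.reviews_done] }
puts render_report("Summary by reviewee", %w[Team Average], teams) { |team| [team.name, team.average_score] }
```

The design choice is that only the block varies between reports, so a change to the header or footer applies to every report at once.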

Modify the UI to list reports

In the current implementation, reports are accessed by clicking the “view review report” button in an assignment's button tray, which leads to the review report page. This page contains a drop-down menu listing the various reports to navigate to.

This forces the user to view the review report first and only then choose the required report. We intend to modify this implementation so that the user is not forced to view the review report and can select the required report directly.

Testing the new controller

Since the logic for report generation has been moved into a new controller, the existing report generation tests must be rerun to check for regressions, and the code coverage statistics should be reviewed for possible improvement.

Implementation specifics

Refactored Design

To follow good design practices, the logic that differentiates the reports will be implemented using the Strategy pattern[3].

Currently, there are ten different reports:

1. Review report

2. Author feedback report

3. Teammate review report

4. Aggregated comments by rubric question

5. Comment summary by reviewee (team)

6. Potential collusion report

7. Answer tagging report

8. Calibration report

9. Plagiarism checker report

10. Self review report

The new implementation will have a ReportFormatter module with the following methods:

- ReviewReport

- AuthorFeedbackReport

- TeammateReviewReport

- RubricQuestionReport

- RevieweeCommentReport

- CollusionReport

- AnswerTagReport

- CalibrationReport

- PlagiarismCheckerReport

- SelfReviewReport
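This is a minimal sketch of how the Strategy pattern could dispatch reports. It assumes each ReportFormatter entry is modelled as a small class with a common `generate` method. The list above calls them methods, so using classes is an assumption. Only three of the ten strategies are shown, and the assignment is a plain Hash. `STRATEGIES` and `ReportFormatter.generate` are hypothetical names.

```ruby
# Hypothetical Strategy-pattern sketch; not Expertiza's actual ReportFormatter.
module ReportFormatter
  # Every strategy exposes the same interface: #generate(assignment) -> Hash.
  class ReviewReport
    def generate(assignment)
      { type: "ReviewResponseMap", reviewers: assignment.fetch(:reviewers, []) }
    end
  end

  class CalibrationReport
    def generate(assignment)
      { type: "Calibration", calibrated: assignment.fetch(:calibrated, []) }
    end
  end

  class AnswerTagReport
    def generate(assignment)
      tagged = assignment.fetch(:tagged, 0)
      taggable = assignment.fetch(:taggable, 0)
      # Guard against division by zero when nothing is taggable.
      percent = taggable.zero? ? 0.0 : (100.0 * tagged / taggable).round(1)
      { type: "AnswerTaggingReport", tagged: tagged, not_tagged: taggable - tagged, percent_tagged: percent }
    end
  end

  # Registry mapping the requested report type to its strategy class.
  STRATEGIES = {
    "ReviewResponseMap" => ReviewReport,
    "Calibration" => CalibrationReport,
    "AnswerTaggingReport" => AnswerTagReport
  }.freeze

  # Context: picks the strategy, so callers never branch on the report type.
  def self.generate(report_type, assignment)
    strategy = STRATEGIES.fetch(report_type) { raise ArgumentError, "unknown report type: #{report_type}" }
    strategy.new.generate(assignment)
  end
end

result = ReportFormatter.generate("AnswerTaggingReport", { tagged: 3, taggable: 4 })
puts result[:percent_tagged]
```

With this layout, adding a new report means adding one strategy class and one registry entry. The caller of `ReportFormatter.generate` does not change. In the example above, `result[:percent_tagged]` is `75.0`.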

A UML representation of the new module is provided below.

Modified UI

To avoid redirecting the user to the review report page every time they want to view a specific report, the following changes have been made to the implementation:

1. The icon on the view assignments page has been renamed from "view review report" to the more general "view reports".

2. Clicking the "view reports" icon takes the user to a new page with a drop-down menu for selecting the required report.
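This is a minimal sketch of the new drop-down on the "view reports" page. It assumes a hypothetical `report_select` helper and a `report[type]` parameter name. The label/value pairs are an assumed subset that matches report names from the list above with the report types used in the Test Plan. No report option is marked as selected by default, so the page does not open on the review report.

```ruby
# Hypothetical label/value pairs (a subset); values match Test Plan report types.
REPORT_OPTIONS = [
  ["Review report", "ReviewResponseMap"],
  ["Author feedback report", "FeedbackResponseMap"],
  ["Teammate review report", "TeammateReviewResponseMap"],
  ["Calibration report", "Calibration"],
  ["Answer tagging report", "AnswerTaggingReport"]
].freeze

# Builds the <select> for the "view reports" page. Labels are static strings,
# so no HTML escaping is done here.
def report_select(selected = nil)
  options = REPORT_OPTIONS.map do |label, value|
    selected_attr = value == selected ? " selected" : ""
    %(  <option value="#{value}"#{selected_attr}>#{label}</option>)
  end
  placeholder = %(  <option value="">-- Select a report --</option>)
  [%(<select name="report[type]">), placeholder, *options, "</select>"].join("\n")
end

puts report_select
```

In a Rails view the same list would more likely be passed to a form helper. The plain-string version only shows the structure of the menu.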

Test Plan

The reports use case gives a broad overview of the functionality and identifies the preliminary tests needed to ensure that the feature works properly. The use case diagram below represents the intent of the reports functionality.

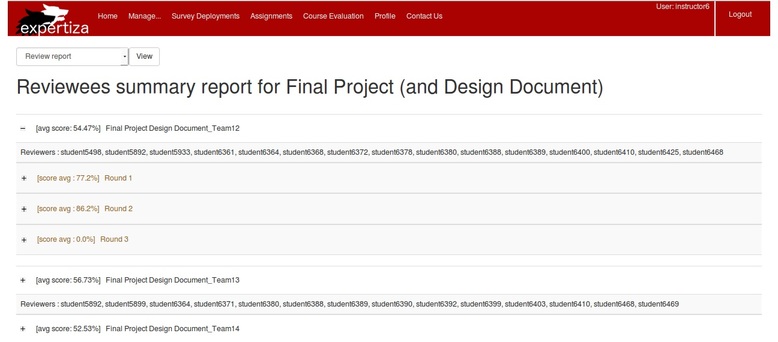

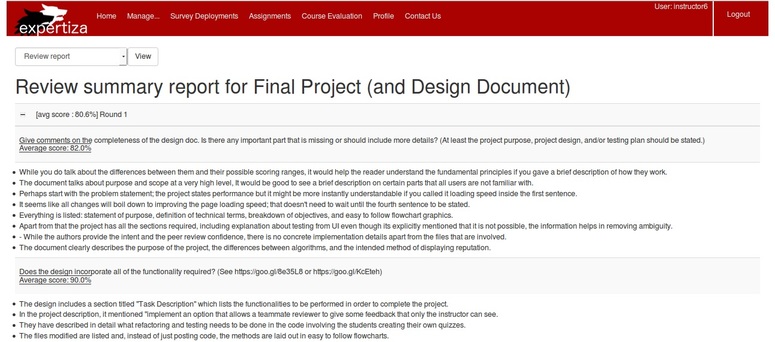

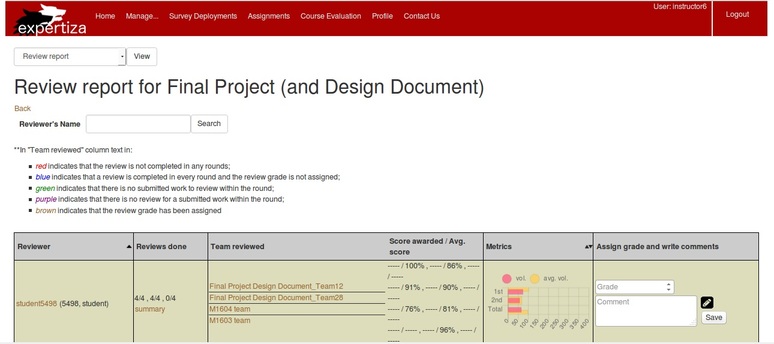

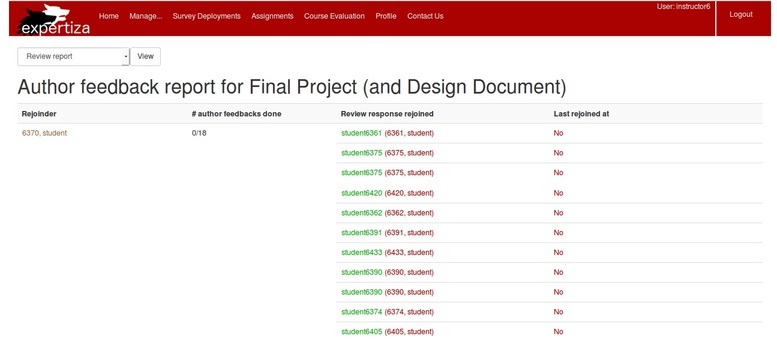

RSpec is the preferred testing framework in the project. The first set of test cases deals with retrieving the different reports for an assignment and validating the fields they return. The details are as follows (sample report screenshots included):

1. When the requested report type is "SummaryByRevieweeAndCriteria", we check that the corresponding data is rendered. This report should contain a summary, reviewers, average scores by reviewee, average score by round and average score by criterion.

2. When the requested report type is "SummaryByCriteria", we check that the corresponding data is rendered. This report should include a summary, reviewers, average scores by reviewee, average score by round and average score by criterion.

3. When the requested report type is "ReviewResponseMap", we check that the corresponding report data is rendered. This report contains participants, average and range.

4A. When the requested report type is "FeedbackResponseMap" and the assignment has the varying rubrics by round feature, we check the participants' feedback data, i.e. the number of feedbacks completed and the last feedback status.

4B. When the requested report type is "FeedbackResponseMap" and the assignment does not have the varying rubrics by round feature, we check the participants' feedback data, i.e. the number of feedbacks completed and the last feedback status.

5. When the requested report type is "TeammateReviewResponseMap", we check that each participant is correctly mapped to their responses. The report must return participant information, the number of reviews completed, the teammates reviewed and the last review status.

6. When the requested report type is "Calibration" and the participant is not empty, we check whether the correct report is rendered. The report must return the calibrated values of the participants' responses to the given assignment.

7. When the requested report type is "AnswerTaggingReport", we check whether the correct report page is rendered. The report must render the participants' details, the number of taggable answers, the number of answers tagged, the number of answers not tagged and the percentage of answers tagged.

8. When the requested report type is "PlagiarismCheckerReport", we check whether the correct report page is rendered.

9. When the requested report type is "CollusionReport", we check whether the correct report page is rendered.

10. When the requested report type is "SelfReviewReport", we check whether the correct report page is rendered.
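The field checks in test cases 1, 3, 5 and 7 could be expressed as a table. The sketch below uses plain Ruby instead of RSpec. The field symbols are hypothetical names for the fields those test cases list. In the project, an RSpec example could loop over a table like this and check the rendered report.

```ruby
# Hypothetical expected-field table derived from test cases 1, 3, 5 and 7.
EXPECTED_FIELDS = {
  "SummaryByRevieweeAndCriteria" => %i[summary reviewers avg_scores_by_reviewee avg_score_by_round avg_score_by_criterion],
  "ReviewResponseMap" => %i[participants average range],
  "TeammateReviewResponseMap" => %i[participants reviews_completed teammates_reviewed last_review_status],
  "AnswerTaggingReport" => %i[participants taggable_answers tagged_answers untagged_answers percent_tagged]
}.freeze

# Returns the expected fields that a rendered report (a Hash) is missing;
# an empty array means the report passes the check.
def missing_fields(report_type, report)
  EXPECTED_FIELDS.fetch(report_type) - report.keys
end

complete = { participants: ["alice"], average: 85.0, range: "80-90" }
p missing_fields("ReviewResponseMap", complete)             # => []
p missing_fields("ReviewResponseMap", { participants: [] }) # => [:average, :range]
```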

Since this refactoring project introduces a new controller, the testing goal is to cover the basic scenarios of the feature. Complete feature coverage, including corner cases, will be delivered according to the project schedule.

Team

Ramya Vijayakumar (rvijaya4@ncsu.edu)

Suhas Keerthiraju (skeerth@ncsu.edu)

Rajat Mahesh Dange (rdange@ncsu.edu)

Sahej Saluja (ssaluja@ncsu.edu)