Text metrics

Latest revision as of 09:48, 8 December 2016

This is the design review for our final project in CSC 517, Fall 2016. The project is titled Text Metrics.

Description

This project adds a few text metrics for evaluating students' peer reviews. The metrics describe the textual content and nature of each review, flag any offensive or improper language, and give a general measure of how sincere the review is.

Tasks to be completed

The tasks that have to be completed as part of this project can be identified as follows:

- Create a DB table, review_metrics, to record the metrics for each review: volume, presence of suggestions, errors/problems pointed out by the reviewer, and any offensive words used in the review text.

- Add a pop-up dialog or label that shows the metrics to students (student_review/list) and to instructors (in the metrics column of the review_mapping/response_report page).

- Make sure the code works for assignments both with and without "vary rubric by rounds" selected.

- Make sure the code updates the review text metrics table when peer reviews are updated.

- Sort the reviews by their text metrics on the “Your scores” page of the students’ view.

- Create tests that increase test coverage.

Current Implementation

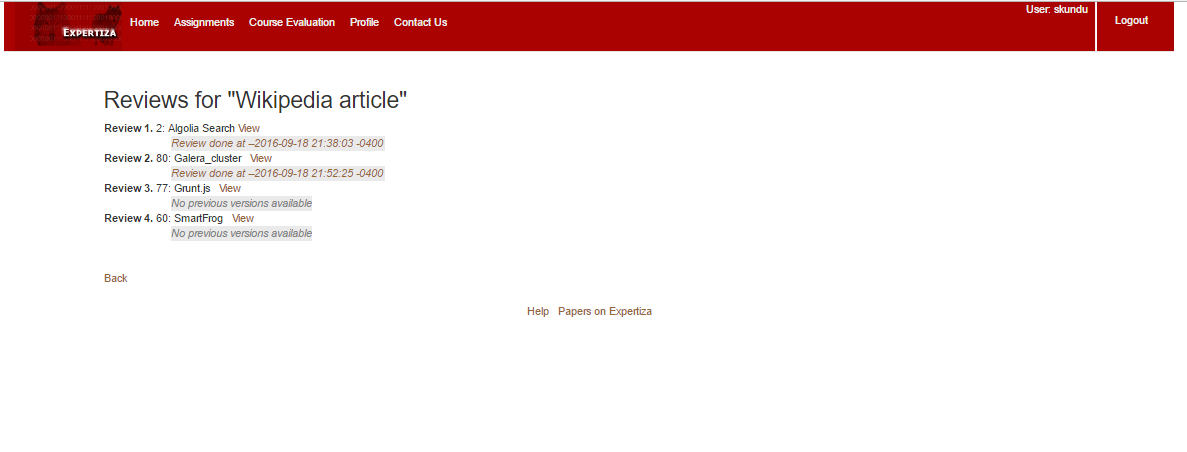

Currently, after completion of reviews, the reviewers can view their responses as shown in the screenshot below:

Instructors can see the reviewers' responses. However, to judge the quality of the reviews, instructors must manually read every review by every student, one at a time. This is time-consuming, and reviewers may not be assessed properly.

To ease the evaluation of reviewers, we can add metrics that analyze the text the reviewers write. These metrics give the instructors who evaluate the reviewers more information about each review.

The picture below shows how reviews are displayed in the current Expertiza implementation. The reviews are not sorted, and they show no metrics that could give a quick summary of how each review is written or perceived.

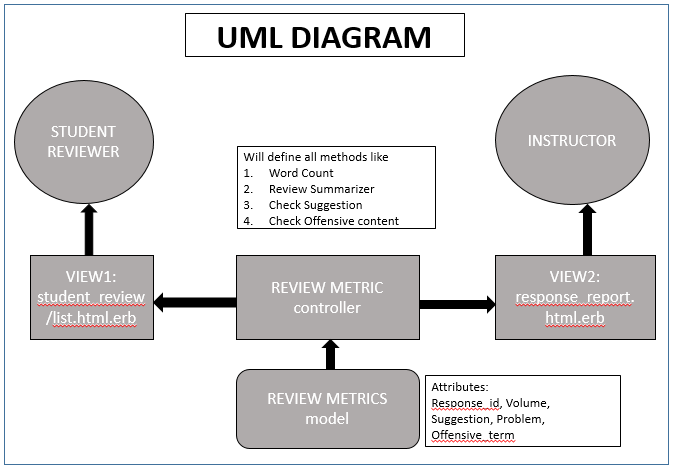

UML Diagram

The UML diagram below shows the proposed MVC design and the relationships between its elements. Although the users are both students and instructors, only a single model, review metric, needs to be defined. It has its own controller, review_metrics_controller, which will contain all the metric-based methods. The controller will update the attribute values in the review metrics table whenever a review is created or updated, and the new values will then be visible in the views.

Proposed Design

TASK 1 - We will add a new model, Review_metrics, with the following columns:

- response_id → int(11) → foreign key

- volume → int(11) → # of [different] words

- suggestion → tinyint(1) → if suggestion is given in the peer-review

- problem → tinyint(1) → if problems or errors in the artifact are pointed out in the peer-review

- offensive_term → tinyint(1) → if the peer-review contains any offensive terms
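The column list above can be mirrored in plain Ruby to show what one row holds and how volume ("# of different words") might be computed. This is a minimal sketch under our own assumptions. The Struct, the method name review_volume and the tokenization rule (lowercase runs of letters and apostrophes) are hypothetical; the real model would be an ActiveRecord class backed by the review_metrics table.

```ruby
# Hypothetical plain-Ruby stand-in for one row of the proposed review_metrics table.
# In Rails this would be an ActiveRecord model; on MySQL, tinyint(1) columns
# would typically be read back as booleans.
ReviewMetric = Struct.new(:response_id, :volume, :suggestion, :problem, :offensive_term)

# volume: number of distinct words in the review text, compared case-insensitively.
# The tokenization rule (runs of letters/apostrophes) is an assumption of this sketch.
def review_volume(text)
  text.downcase.scan(/[a-z']+/).uniq.size
end

text = "Good work. Good tests, but the README is missing."
metric = ReviewMetric.new(42, review_volume(text), false, true, false)
puts metric.volume # prints 8 ("good" is counted once)
```

Counting distinct words rather than all words keeps a review from inflating its volume by repeating the same phrase.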

A corresponding review_metric_controller will provide the CRUD operations for review metrics, such as adding, removing and updating them.

We will be dealing with the following View files:

- student_review/list.html.erb - for the student

- review_mapping/response_report.html.erb - for the instructor
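The key behaviour of the controller's add/update operations is that saving a review, whether new or edited, recomputes its metrics and overwrites the stored row. The in-memory sketch below illustrates only that idea. The class ReviewMetricStore and its methods are hypothetical stand-ins for the controller and the review_metrics table, and only the volume metric is computed.

```ruby
# Hypothetical in-memory store mirroring the add/update/remove operations the
# review_metric_controller would perform on the review_metrics table.
class ReviewMetricStore
  def initialize
    @rows = {} # response_id => metrics hash
  end

  # Called when a review is created *or* updated: recompute from the current
  # text and overwrite any existing row, so stale metrics never survive an edit.
  def save_for(response_id, text)
    @rows[response_id] = { volume: text.downcase.scan(/[a-z']+/).uniq.size }
  end

  def find(response_id)
    @rows[response_id]
  end

  def remove(response_id)
    @rows.delete(response_id)
  end
end

store = ReviewMetricStore.new
store.save_for(1, "Too short.")                                 # volume 2
store.save_for(1, "Revised: the tests cover edge cases well.")  # overwrites: volume 7
puts store.find(1)[:volume]
```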

TASK 2 - For each peer review, we will define the following metrics. The reviewer/instructor will be able to view them by hovering the mouse over the respective icons (either as a pop-up or as label text).

- Average number of words - Integer

- Number of words for all the reviews in this assignment in this round if a suggestion is given - Integer

- The percentage of the peer-reviews in this assignment in this round that offer suggestions - Integer

- If problems or errors are pointed out - Boolean

- The percentage of the peer-reviews in this assignment in this round that point out problems - Integer

- If the peer-review contains offensive language - Boolean

- The percentage of the peer-reviews that contain offensive language - Integer
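The per-round metrics above are simple aggregates over the individual reviews in a round. The sketch below shows one way to compute them under these assumptions: the Review struct and the method names are hypothetical, the average uses integer division, and percentages are rounded to the nearest integer to match the "Integer" type listed above.

```ruby
# Per-review metrics for one assignment round (hypothetical shape).
Review = Struct.new(:words, :suggestion, :problem, :offensive)

# Percentage (0-100, rounded to an integer) of reviews for which the block is truthy.
def percentage(reviews, &flag)
  return 0 if reviews.empty?
  (100.0 * reviews.count(&flag) / reviews.size).round
end

def round_summary(reviews)
  {
    average_words: reviews.empty? ? 0 : reviews.sum(&:words) / reviews.size, # integer division
    percent_suggestions: percentage(reviews, &:suggestion),
    percent_problems: percentage(reviews, &:problem),
    percent_offensive: percentage(reviews, &:offensive)
  }
end

reviews = [
  Review.new(120, true, true, false),
  Review.new(40, false, true, false),
  Review.new(200, true, false, true)
]
summary = round_summary(reviews)
puts summary[:average_words]       # 120
puts summary[:percent_suggestions] # 67 (2 of 3 reviews)
```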

The picture below shows how the proposed design will look to the user. From left to right, the icons indicate the following metrics (NOTE: the icon images are not subject to any copyright restrictions):

- Icon 1 -> Number of reviews

- Icon 2 -> Indicative of Vulgar content

- Icon 3 -> Indicative of Similarity of reviews

- Icon 4 -> Indicative of Problems or Errors in the peer review

- Icon 5 -> Indicative of Suggestions made

The snippet below, from review_mapping/list.html.erb, shows where the view will be updated to add the icons:

```erb
<%= create_report_table_header("Reviewer" => "16%",
                               "# reviews done per round" => "14%",
                               "Team reviewed" => "20%",
                               "Score awarded / Avg. score" => "14%",
                               "Metrics" => "10%",
                               "Assign grade and write comments" => "36%") %>
<% @l = -1 %>
<% sort_reviewer_by_review_volume_desc.each do |r| %>
  <% @response_maps, @bgcolor, @rspan, @l = get_data_for_review_report(@id, r.id, @type, @l) %>
  <tr>
    <% if r.id != -1 %>
      <% username = Participant.find(r.id).user.fullname %>
      <!-- reviewer -->
      <td bgcolor="<%= @bgcolor %>" rowspan="<%= @rspan %>">
        <%= link_to username, :controller => 'popup', :action => 'reviewer_details_popup', :id => r.id, :assignment_id => @id %>
      </td>
      <!-- # reviews done -->
      <td bgcolor="<%= @bgcolor %>" rowspan="<%= @rspan %>" align="left">
        <% (1..@assignment.num_review_rounds).each do |round| %>
          <%= instance_variable_get("@review_in_round_" + round.to_s) %>/<%= @rspan %>
          <%= ', ' unless round == @assignment.num_review_rounds %>
        <% end %>
        <%= link_to "reviewer summary", :controller => 'popup', :action => 'view_review_scores_popup', :reviewer_id => r.id, :assignment_id => @id %>
      </td>
      <%# Excerpt ends here: the remaining cells (team reviewed, score, metrics, grade) and the closing tags for the if, the row and the loop are omitted. %>
```

TASK 3 - The new code must work whether or not the instructor checks 'Vary rubric by rounds'. We will check the boolean method is_varying_rubric_by_rounds and then update the review_metrics table accordingly.

TASK 4 - We will define an RSpec file for review_metrics_controller that checks that the metrics table is updated when a review is updated.

TASK 5 - We will sort and display the reviews in list.html.erb (part of views/review_mapping) by the number-of-words metric from the review_metrics table. Because updating a review updates the review_metrics table, the order changes dynamically whenever the metric changes.

NOTE: Code snippets are not shown for Tasks 3, 4 and 5 because the code does not exist yet. We need to create a new view and new RSpec test cases for them.
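The view above iterates over sort_reviewer_by_review_volume_desc, and TASK 5 orders reviews by the number-of-words metric. We do not reproduce that Expertiza helper here. The sketch below is an illustrative ordering that assumes a reviewer's volume is the sum of the volumes of their reviews. The ReviewerVolumes struct and sort_by_volume_desc are hypothetical names.

```ruby
# Hypothetical data: each reviewer with the volumes (distinct-word counts) of
# their reviews, as they might be read from the review_metrics table.
ReviewerVolumes = Struct.new(:name, :volumes) do
  def total_volume
    volumes.sum
  end
end

def sort_by_volume_desc(reviewers)
  # Negating the total makes the ascending sort_by yield descending volume;
  # ties are broken alphabetically by name so the order is stable across page loads.
  reviewers.sort_by { |r| [-r.total_volume, r.name] }
end

reviewers = [
  ReviewerVolumes.new("alice", [120, 80]),
  ReviewerVolumes.new("bob", [300]),
  ReviewerVolumes.new("carol", [50, 50, 100])
]
puts sort_by_volume_desc(reviewers).map(&:name).join(", ") # bob, alice, carol
```

Because the sort key is read from the stored metrics, editing a review (which rewrites its row) is enough to change the displayed order on the next page load.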

Design Pattern Used

We use the Iterator pattern, which lets us traverse the elements of a collection without depending on how the collection is implemented. Here, the collection is the set of responses a reviewer gives in a single piece of feedback, and we iterate over these responses to collect the text metrics. The pattern provides a standard interface for starting an iteration and moving to the next element, regardless of how the collection is implemented.
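In Ruby, the idiomatic form of the Iterator pattern is a class that defines #each and includes Enumerable. Callers get internal iteration (map, count, ...) and, through Enumerator#next, external iteration with explicit "start" and "next" steps. In the sketch below, the Feedback class is hypothetical. It stores responses in an Array, but callers never depend on that.

```ruby
# A feedback (one reviewer's review) viewed as a collection of responses.
class Feedback
  include Enumerable

  def initialize(responses)
    @responses = responses # an Array here, but hidden behind #each
  end

  # Yields each response; without a block, returns an Enumerator (external iterator).
  def each(&block)
    return enum_for(:each) unless block_given?
    @responses.each(&block)
    self
  end
end

feedback = Feedback.new(["Nice layout.", "Tests are missing for the parser.", ""])

# Internal iteration: collect a per-response metric through the iterator interface only.
p feedback.map { |response| response.split.size } # => [2, 6, 0]

# External iteration: start an iteration, then step to the next element explicitly.
cursor = feedback.each
puts cursor.next # Nice layout.
```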

Features to be added

We will add a label or pop-up that displays the metrics as icons, as shown below. These icons summarize each review in terms of the predefined metrics. The reviews will be sorted by their text metrics and displayed to the student on the 'Your Scores' page.

Gems to be used

This project gives us the opportunity to use the Ruby-NLP gem, which has specific methods for analyzing language against predefined patterns. We will add a filter for a tentative list of foul words; any match will be recorded as a metric.

Gem - ruby-nlp

Gem - Automated Metareview

Parts of the Automated Metareview are present in the current Expertiza codebase, but it has not been fully implemented. We have taken inspiration from its sets of phrases, such as NEGATIVE_DESCRIPTORS and SUGGESTIVE_WORDS, which are among the constants in the gem documentation.

Features such as word count, text summarization, language detection, text similarity and parsers are all part of this gem, which we used as a baseline while implementing a few of our features.
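The suggestion, problem and offensive-term flags can be derived by matching review words against phrase lists, in the spirit of the constants mentioned above. The sketch below reuses the names SUGGESTIVE_WORDS and NEGATIVE_DESCRIPTORS, but its entries are illustrative placeholders, not the lists shipped with Automated Metareview. OFFENSIVE_WORDS, mentions_any? and flags_for are hypothetical as well.

```ruby
require "set"

# Placeholder word lists; the real lists would come from Automated Metareview
# (for suggestions/problems) and from our tentative foul-word filter.
SUGGESTIVE_WORDS = Set["should", "could", "consider", "suggest", "recommend"].freeze
NEGATIVE_DESCRIPTORS = Set["error", "bug", "missing", "wrong", "incorrect"].freeze
OFFENSIVE_WORDS = Set["stupid", "idiotic"].freeze

def tokens(text)
  text.downcase.scan(/[a-z']+/)
end

# True if any whole word of the text appears in the list.
def mentions_any?(text, list)
  tokens(text).any? { |word| list.include?(word) }
end

# The three boolean columns of the review_metrics table for one review.
def flags_for(text)
  {
    suggestion: mentions_any?(text, SUGGESTIVE_WORDS),
    problem: mentions_any?(text, NEGATIVE_DESCRIPTORS),
    offensive_term: mentions_any?(text, OFFENSIVE_WORDS)
  }
end

p flags_for("You should consider adding tests; the login page has a bug.")
```

Matching whole words avoids false hits such as "bug" inside "debugging". The trade-off is that plurals and other inflections ("bugs") are missed unless they are added to the lists or the words are stemmed first.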

Metrics View

Instructor View

1. When an instructor views all assignments and clicks on the Review Report, the following view appears:

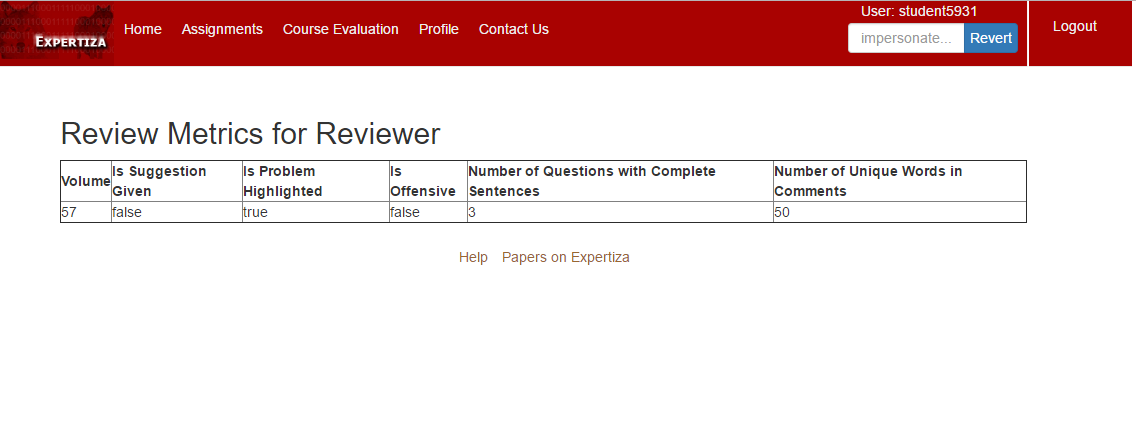

2. When an instructor clicks on View Review Metrics, a popup displays, in tabular format, the metrics for all the responses by that reviewer.

Student View

1. When a student clicks on Other's Work after selecting a specific assignment, the following view appears, containing a View Text Metrics link for each review the student has done.

2. When the student clicks on View Review Metrics, a popup opens that displays the metrics for that review.

Testing Plan

As agreed with the instructor, testing in this project will be limited mainly to UI testing. For a user/reviewer, the steps are as follows:

- Log in to Expertiza with your credentials.

- Open Assignments and click on Other Work.

- For every review entered, hover the mouse over the icons beside the review.

- The review will be summarised by caption text on the icons, such as "Good Content", "Obscene Word usage" or "200 words review".

- View the metrics for each review.

A few of the test cases added to the test file for Review Metric:

For the instructor, the steps are as follows:

- Log in to Expertiza with instructor credentials.

- Open Manage Assignments.

- Select the assignment and click on the 'View Reviews' icon.

- Depending on the stage of the assignment, the reviews will be listed in the order they were filled in.

- Metrics will be displayed when hovering the mouse over each icon.

Files Changed and Added

Models

- ReviewMetric.rb

- Response.rb

Controllers

- popup_controller.rb

- review_metric_controller.rb

Views

- student_review/list.html

- review_mapping/review_report.html.erb

- popup/view_review_metrics

- popup/view_student_review_metrics

Important Links

Link to GitHub repository: http://github.com/sequae92/expertiza.git

Link to pull request: https://github.com/expertiza/expertiza/pull/892