Chapter 6: Allison Hamann, Chris Barile

m (→Sector Cache: Added sub headers that will have the content expanded on.) |

m (→Organization: Added image of cache to section) |

||

| (7 intermediate revisions by 2 users not shown) | |||

| Line 5: | Line 5: | ||

===Handling Misses=== | ===Handling Misses=== | ||

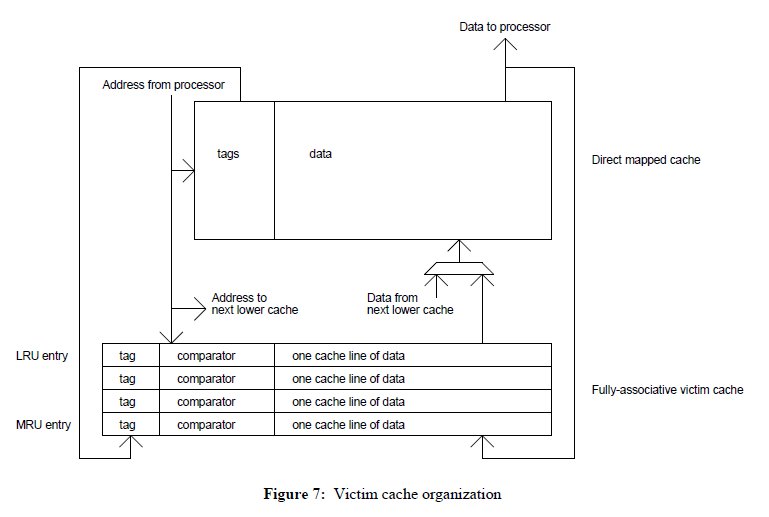

[[File:Jouppi_victimcaching_fig7.jpg|thumb|Jouppi's illustration of victim caching.]] | |||

The proposed victim cache is fully-associative and lies between the L1 memory and the next level of memory. While Jouppi proposed a victim cache with 1 to 5 entries, Naz et al. proposed that the victim caches should be 4 to 16 cache lines.<ref>http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547</ref><ref>http://dl.acm.org/citation.cfm?id=1101876</ref> Regardless of the size, when a miss occurs in the L1 cache, the victim cache is then scanned for the wanted line. If a miss occurs in both the L1 and victim cache, the needed line is then pulled from the next level, and the line evicted from the L1 cache is then placed in the victim cache. If a miss occurs in the L1 cache but hits in the victim cache, the two lines are swapped between the two caches. Thus, this eliminates the majority of conflict misses that occur due to temporal locality. | The proposed victim cache is fully-associative and lies between the L1 memory and the next level of memory. While Jouppi proposed a victim cache with 1 to 5 entries, Naz et al. proposed that the victim caches should be 4 to 16 cache lines.<ref>http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547</ref><ref>http://dl.acm.org/citation.cfm?id=1101876</ref> Regardless of the size, when a miss occurs in the L1 cache, the victim cache is then scanned for the wanted line. If a miss occurs in both the L1 and victim cache, the needed line is then pulled from the next level, and the line evicted from the L1 cache is then placed in the victim cache. If a miss occurs in the L1 cache but hits in the victim cache, the two lines are swapped between the two caches. Thus, this eliminates the majority of conflict misses that occur due to temporal locality. | ||

===Implementation=== | |||

Victim caching can be found in AMD's Opteron processor series produced specifically for servers and workstations. <ref>http://en.wikipedia.org/wiki/Opteron</ref> Opteron processors use a victim cache that is capable of holding eight victim blocks. <ref> Hennessy, John L., and David A. Patterson. ''Computer Architecture: A Quantitative Approach''. Elsevier, Inc., 2012, p. B-14.</ref> | |||

==Sector Cache== | ==Sector Cache== | ||

Sector cache | One early cache organization technique was sector cache. It was used on the IBM 360/85, which was one of the earliest commercial CPUs<ref name="rot99">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance</ref>. Sector cache allowed for smaller tag sizes, which made searching in cache easier and quicker, without requiring cache lines to be excessively long.<ref name="rot99"/> It was also easier to build with the circuit technology of the time.<ref name="rot99"/> In testing during the design of the IBM 360/85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers.<ref name="lip68">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache.</ref> Sector caches were later replaced by set associative caches, which were found to be more efficient.<ref name="rot99"/> | ||

=== | ===Organization=== | ||

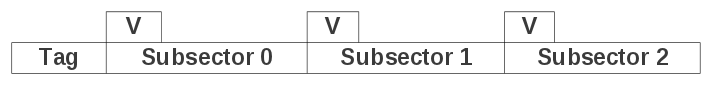

[[Image:Sectorcache.png|200px|thumb|right|Sectors in cache are made of subsectors. Each subsector has a validity bit indicating whether or not it has been loaded with data.]]The cache is divided into sectors, which correspond to logical sectors on the main storage device.<ref name="lip68"/> When sectors are needed, they are not loaded into cache all at once, but in smaller pieces known as subsectors.<ref name="lip68"/> Subsectors are similar to the lines in a direct mapped cache, and are only loaded into cache when needed. This prevents large amounts of data from having to be transferred for every memory reference. Subsectors have a validity bit that indicates whether they are loaded with data or not.<ref name="lip68"/> | |||

===Miss Handling=== | ===Miss Handling=== | ||

When a process requests data from a disk sector that is not in the cache, a cache sector is assigned to the sector on the main storage device where the requested data is stored. Then the subsector where the data is located is loaded into the cache. The subsector's validity bit is then set to reflect that it has been loaded from the main storage. When data from other subsectors within a loaded sector are requested, the system loads those subsectors into the cache sector and sets their validity bits. Sectors are not removed from the cache until the system needs to reclaim the space to process another request. | When a process requests data from a disk sector that is not in the cache, a cache sector is assigned to the sector on the main storage device where the requested data is stored.<ref name="lip68"/> Then the subsector where the data is located is loaded into the cache. The subsector's validity bit is then set to reflect that it has been loaded from the main storage.<ref name="lip68"/> When data from other subsectors within a loaded sector are requested, the system loads those subsectors into the cache sector and sets their validity bits.<ref name="lip68"/> Sectors are not removed from the cache until the system needs to reclaim the space to process another request.<ref name="lip68"/> | ||

==References== | ==References== | ||

<references /> | <references /> | ||

Latest revision as of 05:51, 22 February 2012

Victim Cache

Victim caches were first proposed by Norman P. Jouppi in 1990. A victim cache is a small, fully associative cache placed between a direct-mapped L1 cache and the next level of memory. Lines evicted from the L1 cache are given a “second chance” by being loaded into the victim cache instead of being discarded. Victim caches decrease the overall conflict miss rate (Jouppi).

Direct-mapped caches benefit especially from victim caching because of their high conflict miss rates. With a victim cache, a design can keep the speed of a direct-mapped cache while lowering its miss rate, potentially below the miss rate found in set-associative caches (Jouppi).

Handling Misses

[[File:Jouppi_victimcaching_fig7.jpg|thumb|Jouppi's illustration of victim caching.]]
The proposed victim cache is fully associative and lies between the L1 cache and the next level of memory. While Jouppi proposed a victim cache with 1 to 5 entries, Naz et al. proposed victim caches of 4 to 16 cache lines.<ref>http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=134547</ref><ref>http://dl.acm.org/citation.cfm?id=1101876</ref> Regardless of size, when a miss occurs in the L1 cache, the victim cache is searched for the requested line. If the access misses in both the L1 cache and the victim cache, the line is fetched from the next level, and the line evicted from the L1 cache is placed in the victim cache. If the access misses in the L1 cache but hits in the victim cache, the two lines are swapped between the two caches. Because a recently evicted line can be recovered without going to the next level, this eliminates the majority of conflict misses to lines that exhibit temporal locality.
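The miss-handling steps above can be sketched as a small simulation. This is only an illustrative model, not Jouppi's hardware design: the class name `VictimCachedL1`, the modulo indexing of line addresses, and the FIFO replacement inside the victim cache are all assumptions made for the example.

```python
from collections import OrderedDict


class VictimCachedL1:
    """Direct-mapped L1 backed by a small fully associative victim cache.

    Hypothetical model: only line addresses are tracked, no data is stored.
    """

    def __init__(self, num_sets, victim_entries):
        self.num_sets = num_sets
        self.victim_entries = victim_entries
        self.l1 = [None] * num_sets      # one resident line address per set
        self.victim = OrderedDict()      # line address -> None, oldest first

    def access(self, line_addr):
        """Return 'l1_hit', 'victim_hit', or 'miss' for one line address."""
        index = line_addr % self.num_sets    # assumed direct-mapped indexing
        resident = self.l1[index]
        if resident == line_addr:
            return "l1_hit"
        if line_addr in self.victim:
            # L1 miss, victim hit: swap the two lines between the caches.
            del self.victim[line_addr]
            self.l1[index] = line_addr
            if resident is not None:
                self._insert_victim(resident)
            return "victim_hit"
        # Miss in both: fetch from the next level; the evicted L1 line
        # is placed in the victim cache.
        self.l1[index] = line_addr
        if resident is not None:
            self._insert_victim(resident)
        return "miss"

    def _insert_victim(self, line_addr):
        if self.victim_entries == 0:
            return
        if len(self.victim) >= self.victim_entries:
            self.victim.popitem(last=False)  # assumed FIFO replacement
        self.victim[line_addr] = None


cache = VictimCachedL1(num_sets=4, victim_entries=2)
results = [cache.access(addr) for addr in (0, 4, 0, 4)]
```

Lines 0 and 4 map to the same set here, so a plain direct-mapped cache would miss on every access in this sequence. With the victim cache, only the first two accesses go to the next level; the later ones are served by swaps.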

Implementation

Victim caching is used in AMD's Opteron processor series, which is produced specifically for servers and workstations.<ref>http://en.wikipedia.org/wiki/Opteron</ref> Opteron processors use a victim cache capable of holding eight victim blocks.<ref>Hennessy, John L., and David A. Patterson. Computer Architecture: A Quantitative Approach. Elsevier, Inc., 2012, p. B-14.</ref>

Sector Cache

One early cache organization technique was the sector cache. It was used on the IBM System/360 Model 85, one of the earliest commercial computers to include a cache.<ref name="rot99">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance</ref> The sector cache allowed for smaller tag sizes, which made searching the cache easier and quicker, without requiring cache lines to be excessively long.<ref name="rot99"/> It was also easier to build with the circuit technology of the time.<ref name="rot99"/> In testing during the design of the IBM 360/85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers.<ref name="lip68">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache.</ref> Sector caches were later replaced by set-associative caches, which were found to be more efficient.<ref name="rot99"/>

Organization

[[Image:Sectorcache.png|200px|thumb|right|Sectors in cache are made of subsectors. Each subsector has a validity bit indicating whether or not it has been loaded with data.]]
The cache is divided into sectors, which correspond to logical sectors on the main storage device.<ref name="lip68"/> When a sector is needed, it is not loaded into the cache all at once, but in smaller pieces known as subsectors.<ref name="lip68"/> Subsectors are similar to the lines in a direct-mapped cache, and each is loaded into the cache only when it is needed. This prevents large amounts of data from having to be transferred for every memory reference. Each subsector has a validity bit that indicates whether or not it has been loaded with data.<ref name="lip68"/>
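To see why one tag per sector keeps tag storage small, the rough calculation below compares a sector cache with a cache that keeps one tag per line. The 24-bit address, 16 KB cache, 1024-byte sectors, and 64-byte subsectors are illustrative assumptions chosen for the arithmetic, not figures taken from the cited sources, and the helper `tag_storage_bits` is hypothetical. Fully associative placement is assumed in both cases.

```python
def tag_storage_bits(address_bits, cache_bytes, tagged_unit_bytes, valid_unit_bytes):
    """Total tag plus validity bits for a fully associative cache.

    One tag is kept per tagged unit and one validity bit per valid unit.
    Unit sizes are assumed to be powers of two.
    """
    units = cache_bytes // tagged_unit_bytes
    offset_bits = (tagged_unit_bytes - 1).bit_length()  # bits addressing within a unit
    tag_bits = address_bits - offset_bits
    valid_bits = cache_bytes // valid_unit_bytes
    return units * tag_bits + valid_bits


# Sector cache: 16 sectors with 14-bit tags, plus 256 subsector validity bits.
sector_bits = tag_storage_bits(24, 16 * 1024, 1024, 64)

# Same capacity with one tag per 64-byte line: 256 lines with 18-bit tags.
per_line_bits = tag_storage_bits(24, 16 * 1024, 64, 64)
```

Under these assumptions the sector cache needs 480 bits of tag and validity state and compares 16 tags per lookup, while the per-line organization needs 4864 bits and compares 256 tags.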

Miss Handling

When a process requests data from a main storage sector that is not in the cache, a cache sector is assigned to the main storage sector where the requested data is stored.<ref name="lip68"/> The subsector that holds the data is then loaded into the cache, and its validity bit is set to reflect that it has been loaded from main storage.<ref name="lip68"/> When data from other subsectors within a loaded sector is requested, the system loads those subsectors into the cache sector and sets their validity bits.<ref name="lip68"/> Sectors are not removed from the cache until the system needs to reclaim the space to process another request.<ref name="lip68"/>
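The sequence above can be modeled in a few lines. This is a minimal sketch under stated assumptions rather than a description of the Model 85 hardware: the class name `SectorCache`, the result labels, and the choice to reclaim the oldest-assigned sector (FIFO) are hypothetical, since the text does not say which sector is reclaimed.

```python
class SectorCache:
    """Toy sector cache that tracks only sector tags and validity bits."""

    def __init__(self, num_sectors, subsectors_per_sector):
        self.num_sectors = num_sectors
        self.subsectors_per_sector = subsectors_per_sector
        # storage sector number -> list of subsector validity bits;
        # dict insertion order doubles as assignment order.
        self.sectors = {}

    def access(self, sector_no, subsector_no):
        """Return 'hit', 'subsector_miss', or 'sector_miss'."""
        valid = self.sectors.get(sector_no)
        if valid is None:
            # Sector not in cache: reclaim space only if the cache is full,
            # then assign a cache sector with all validity bits cleared.
            if len(self.sectors) >= self.num_sectors:
                oldest = next(iter(self.sectors))  # assumed FIFO reclaim
                del self.sectors[oldest]
            valid = [False] * self.subsectors_per_sector
            self.sectors[sector_no] = valid
            valid[subsector_no] = True             # load only the needed subsector
            return "sector_miss"
        if not valid[subsector_no]:
            # Sector is assigned, but this subsector has not been loaded yet.
            valid[subsector_no] = True
            return "subsector_miss"
        return "hit"


cache = SectorCache(num_sectors=2, subsectors_per_sector=4)
trace = [cache.access(0, 1), cache.access(0, 1), cache.access(0, 2),
         cache.access(1, 0), cache.access(2, 0), cache.access(0, 1)]
```

In this trace the second access to subsector 1 of sector 0 hits, the access to subsector 2 loads only that subsector, and sector 0 is reclaimed once a third sector is requested, so the final access misses again.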

References

<references />