CSC ECE 506 Spring 2015 6a vs: Difference between revisions

| (19 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

=Cache Hierarchy= | =Cache Hierarchy= | ||

[[Image: memchart.jpg|thumbnail|300px|right|Memory Hierarchy<sup><span id="9body">[[#9foot|[9]]]</span></sup>]] | [[Image: memchart.jpg|thumbnail|300px|right|Memory Hierarchy<sup><span id="9body">[[#9foot|[9]]]</span></sup>]] | ||

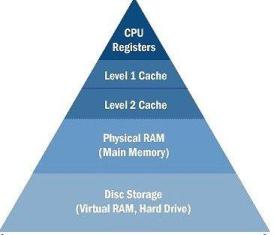

In a simple computer model, processor reads data and instructions from the memory and operates on the data. Operating frequency of CPU increased faster than the speed of memory and memory interconnects. Over the years the increase in the Intel microprocessor's speed has been roughly 60% per year, while memory access times have improved by less than 10% per year. Also, multi-core architecture started putting more demand on memory bandwidth. This increases the latency in memory access and CPU will have to | In a simple computer model, the processor reads data and instructions from memory and operates on the data. The operating frequency of the CPU has increased faster than the speed of memory and memory interconnects. Over the years the increase in the Intel microprocessor's speed has been roughly 60% per year, while memory access times have improved by less than 10% per year. Also, multi-core architectures have started putting more demand on memory bandwidth. This increases memory access latency, and the CPU has to remain idle most of the time. As a result, memory has become a bottleneck in performance. | ||

To solve this problem, “cache” was invented. Cache is simply a temporary volatile storage space like primary memory but runs at the speed similar to core frequency. CPU can access data and instructions from cache in few clock cycles while accessing data from main memory can take more than 50 cycles. In early days of computing, cache was implemented as a stand alone chip outside the processor. In today’s processors, cache is implemented on same die as core. | To solve this problem, the “cache” was invented. A cache is simply temporary volatile storage, like primary memory, but it runs at a speed close to the core frequency. The CPU can access data and instructions from cache in a few clock cycles, while accessing data from main memory can take more than 50 cycles. In the early days of computing, cache was implemented as a standalone chip outside the processor. In today’s processors, cache is implemented on the same die as the core. | ||

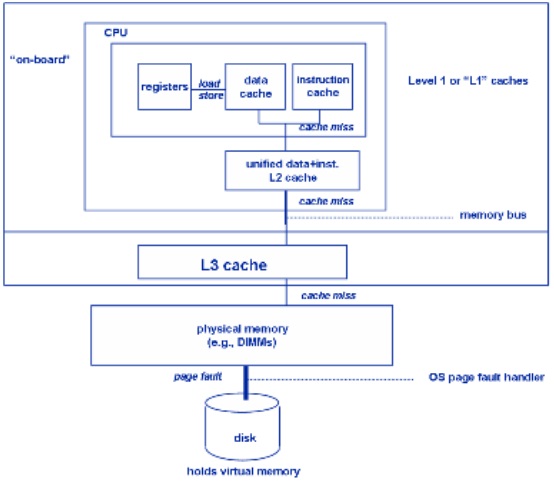

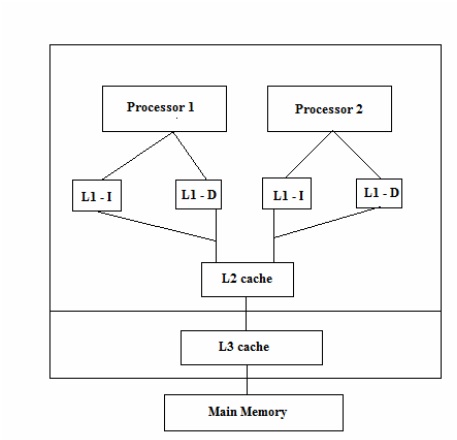

There can be multiple levels of caches, each cache subsequently away from the core and larger in size. L1 is closest to the CPU and as a result, fastest to | There can be multiple levels of caches, each cache level subsequently away from the core and larger in size. L1 is closest to the CPU and as a result, fastest to access. Next to L1 is L2 cache and then L3. L1 cache is divided into instruction cache and data cache. This is better than having a combined larger cache as instruction cache being read-only is easy to implement while data cache is read-write.<br> | ||

Cache management is impacted by three characteristics of modern processor architectures: multilevel cache hierarchies, multi-core and multiprocessor chip and systems designs, and shared vs. private caches. | Cache management is impacted by three characteristics of modern processor architectures: multilevel cache hierarchies, multi-core and multiprocessor chip and systems designs, and shared vs. private caches. | ||

| Line 141: | Line 141: | ||

=Cache Write Policies= | =Cache Write Policies= | ||

In section 6.2.3,<sup><span id="10body">[[#10foot|[10]]]</span></sup> cache write hit policies and write miss policies were explored. The write hit policies, write-through and write-back, determine when the data written in the local cache is propagated to L1 memory. | In section 6.2.3,<sup><span id="10body">[[#10foot|[10]]]</span></sup> cache write hit policies and write miss policies were explored. The write hit policies, write-through and write-back, determine when the data written in the local cache is propagated to L1 memory. Write-through writes data to the cache and memory on a write request. Write-back writes to cache first and to memory only when a flush is required. | ||
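The difference between the two write-hit policies can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: the hypothetical <code>TinyCache</code> holds a single line, a Python dict stands in for memory, and a write miss simply allocates the line without fetching it. It counts how many writes reach memory under each policy.

```python
class TinyCache:
    """Single-line toy cache in front of a dict that stands in for memory."""

    def __init__(self, memory, write_back):
        self.memory = memory
        self.write_back = write_back  # True: write-back, False: write-through
        self.addr = None              # address currently held in the line
        self.value = None
        self.dirty = False
        self.memory_writes = 0

    def flush(self):
        """Write the line to memory if it holds data not yet in memory."""
        if self.dirty:
            self.memory[self.addr] = self.value
            self.memory_writes += 1
            self.dirty = False

    def write(self, addr, value):
        if self.addr != addr:
            self.flush()              # evicting a dirty line forces a flush
            self.addr = addr
        self.value = value
        if self.write_back:
            self.dirty = True         # memory is updated later, on flush
        else:
            self.memory[addr] = value # write-through: update memory now
            self.memory_writes += 1


def count_memory_writes(write_back, writes):
    """Replay (addr, value) writes; return (memory write count, memory)."""
    memory = {}
    cache = TinyCache(memory, write_back)
    for addr, value in writes:
        cache.write(addr, value)
    cache.flush()
    return cache.memory_writes, memory
```

Repeated writes to the same address are where the two policies differ: write-through sends every one of them to memory, while write-back sends only the final value when the line is flushed.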

The write miss policies covered in the text,<sup><span id="10body">[[#10foot|[10]]]</span></sup> write-allocate and write no-allocate, determine if a memory block is stored in a cache line after the write occurs. Note that since a miss occurred on write, the block is not already in a cache line. | The write miss policies covered in the text,<sup><span id="10body">[[#10foot|[10]]]</span></sup> write-allocate and write no-allocate, determine if a memory block is stored in a cache line after the write occurs. Note that since a miss occurred on write, the block is not already in a cache line. | ||

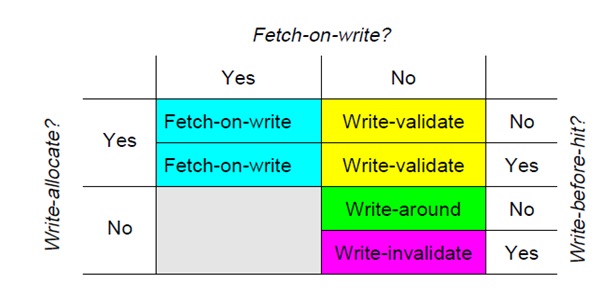

Write-miss policies can also be expressed in terms of write-allocate, fetch-on-write, and write-before-hit. These can be used in combination with the same write-through and write-back hit policies to define the complete cache write policy. Investigating alternative policies such as these is important since write-misses produce on average one-third of all cache misses. | Write-miss policies can also be expressed in terms of write-allocate, fetch-on-write, and write-before-hit. These can be used in combination with the same write-through and write-back hit policies to define the complete cache write policy. Investigating alternative policies such as these is important since write-misses produce on average one-third of all cache misses. | ||

| Line 169: | Line 169: | ||

Write miss policies can help eliminate write misses and therefore reduce bus traffic. This reduces the wait-time of all processors sharing the bus. | Write miss policies can help eliminate write misses and therefore reduce bus traffic. This reduces the wait-time of all processors sharing the bus. | ||

*Write-allocate vs Write no-allocate: When a write | *Write-allocate vs Write no-allocate: When a write miss occurs in the cache, there may or may not be a line in the cache allocated to the block. For write-allocate, there will be a line in the cache for the written data. This policy is typically associated with write-back caches. For no-write-allocate, there will not be a line in the cache. | ||

*Fetch-on-write vs no-fetch-on-write: The fetch-on-write will cause the block of data to be fetched from a lower memory hierarchy if the write | *Fetch-on-write vs no-fetch-on-write: The fetch-on-write will cause the block of data to be fetched from a lower memory hierarchy if the write miss occurs. The policy fetches a block on every write miss. Fetch-on-write determines if the block is fetched from memory, and write-allocate determines if the memory block is stored in a cache line. Certain write policies may allocate a line in cache for data that is written by the CPU without retrieving it from memory first. | ||

*Write-before-hit vs no-write-before-hit: The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache. | *Write-before-hit vs no-write-before-hit: The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache. | ||

== | ==Combination Policies== | ||

The two combinations of fetch-on-write and no-write-allocate are blocked out in the above diagram. They are considered unproductive since the data is fetched from L1 memory, but is not stored in the cache. Temporal and spatial locality suggest that this data will be used again, and will therefore need to be retrieved a second time. | |||

Three combinations named write-validate, write-around, and write-invalidate all use no-fetch-on-write. They result in 'eliminated misses' when compared to a fetch-on-write policy. In general, this will yield better cache performance if the overhead to manage the policy remains low. | |||

* Write-validate: It is a combination of no-fetch-on-write and write-allocate.<sup><span id="4body">[[#4foot|[4]]]</span></sup> The policy allows partial lines to be written to the cache on a miss. It provides for better performance as well as works with machines that have various line sizes and does not add instruction execution overhead to the program being run. Write-validate requires that the L1 system memory can support writes of partial lines. The no-fetch-on-write has the advantage of avoiding an immediate bus transaction to retrieve the block from memory. For a multiprocessor with a cache coherency model a bus transaction will be required to get exclusive ownership of the block. While this appears to negate the advantages of no-fetch-on-write, the process is able to continue other tasks while this transaction is occurring, including rewriting to the same cache location. Tests by the author demonstrated more than a 90% reduction in write misses when compared to fetch-on-write policies. | |||

* Write-invalidate: This policy is a combination of write-before-hit, no-fetch-on-write, and no-write-allocate.<sup><span id="4body">[[#4foot|[4]]]</span></sup> This policy invalidates lines when there is a miss. With this policy, the copy that exists in L1 memory after the write miss differs from the one in the cache. For write hits the data is simply written into the cache using the cache hit policy. Thus, for hits, the cache is not written around. Tests by the author demonstrated a 35-50% reduction in write misses when compared to fetch-on-write policies. | |||

* Write-around: This policy is a combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit.<sup><span id="4body">[[#4foot|[4]]]</span></sup> This policy uses a non-blocking write scheme to write to cache. It writes data to the next lower cache without modifying the data of the cache line. It bypasses the L2 cache, writes directly to the L1 memory, and leaves the existing block line in the cache unaltered on a hit or a miss. This strategy shows performance improvements when the data that is written will not be reread in the near future. Since we are writing before a hit is detected, the cache is written around for both hits and misses. The author notes that in only a few cases does write-around perform better than write-validate, because most applications tend to reread what they have recently written. Using a write-around policy, such a reread would result in a cache miss and a read from L1 memory. With write-validate, the data would be in cache. Tests by the author demonstrated a 40-70% reduction in write misses when compared to fetch-on-write policies. | |||

Of these three combinations, write-invalidate performed the worst, consistent with the 35-50% reduction reported for it above. The author notes that it still performs better than fetch-on-write and is easy to implement. | |||

* | * Fetch-on-write: When used with write-allocate, fetch-on-write stores the block and processes the write to cache as expected. This policy was used as the base-line for determining the performance of the other three policy combinations. In general, all three previous combinations exhibited fewer misses than this policy. | ||
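The naming of the combinations described in this section can be summarised as a small lookup. The function below is an illustrative sketch only: the name <code>classify_write_miss_policy</code> and the label <code>"unproductive"</code> are assumptions made for this example, and the mapping follows the definitions given in the text.

```python
def classify_write_miss_policy(fetch_on_write, write_allocate, write_before_hit):
    """Name the write-miss policy combination for three boolean choices."""
    if fetch_on_write:
        # Fetching a block without allocating a line for it is the
        # blocked-out (unproductive) case described in the text.
        return "fetch-on-write" if write_allocate else "unproductive"
    if write_allocate:
        return "write-validate"
    # Remaining cases: no-fetch-on-write combined with no-write-allocate.
    return "write-invalidate" if write_before_hit else "write-around"
```

For example, <code>classify_write_miss_policy(False, False, True)</code> names the write-invalidate combination.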

Replacement | =Cache Replacement Policies= | ||

Whenever a cache is full, or a set has no free line for an incoming block, a block must be evicted in order to make room for the new block. Replacement policies determine which block to choose for eviction. | |||

* | *[https://en.wikipedia.org/wiki/CPU_cache#Direct-mapped_cache Direct mapped Caches]: Here an incoming block can be placed in only one location, so there is no choice of which block to evict. | ||

*Set Associative and Fully Associative caches: Here blocks from multiple cache lines can be evicted for an incoming block.<sup><span id="28body">[[#28foot|[28]]]</span></sup> | |||

Replacement policies in general should evict the cache block so that it will minimise the future cache misses. | |||

* | *[https://en.wikipedia.org/wiki/Cache_algorithms#LRU Least Recently Used (LRU)] policy: Under LRU, the cache ranks the cache lines in a set by how recently they have been accessed and evicts the block from the least recently accessed line. Whenever a cache line is referenced, the recency information must be updated, which complicates the hardware. | ||

*[https://en.wikipedia.org/wiki/Least_frequently_used Least Frequently Used (LFU)] policy: Under LFU, a counter records how often each cache line is referenced, and the cache evicts the block from the least frequently used line. | |||

Other replacement policies include Most Recently Used (MRU), Random Replacement (RR), [https://en.wikipedia.org/wiki/Pseudo-LRU Pseudo-LRU (PLRU)], and Segmented LRU (SLRU). Each replacement policy works best for a specific type of access pattern or workload.<sup><span id="27body">[[#27foot|[27]]]</span></sup> Most recent Intel i7 processors use a Pseudo-LRU policy for replacement owing to its lower latency and low power consumption. | |||
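The LRU policy for one set of a set-associative cache can be sketched in a few lines. This is a software illustration only, not how hardware tracks recency. The class name <code>LRUSet</code> and its interface are assumptions made for this example.

```python
from collections import OrderedDict


class LRUSet:
    """One cache set with `ways` lines, replaced in LRU order."""

    def __init__(self, ways):
        self.ways = ways
        self.lines = OrderedDict()  # tag -> None, least recent first

    def access(self, tag):
        """Access a block by tag; return (hit, evicted_tag or None)."""
        if tag in self.lines:
            self.lines.move_to_end(tag)  # mark as most recently used
            return True, None
        evicted = None
        if len(self.lines) >= self.ways:
            # Set is full: evict the least recently used tag.
            evicted, _ = self.lines.popitem(last=False)
        self.lines[tag] = None
        return False, evicted
```

Every access, hit or miss, updates the ordering. This per-access bookkeeping is the hardware cost that approximations such as Pseudo-LRU try to reduce.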

=Prefetching= | =Prefetching= | ||

[http://en.wikipedia.org/wiki/Instruction_prefetch Prefetching] is a technique that can be used in addition to write-hit and write-miss policies to | [http://en.wikipedia.org/wiki/Instruction_prefetch Prefetching] is a technique that can be used in addition to write-hit and write-miss policies to improve the utilization of the cache. Microprocessors, such as those listed in Table 1, support prefetching logic directly in the chip. A cache is very efficient in terms of access time once the data or instructions are in the cache. But when a process tries to access something that is not already in the cache, a cache miss occurs and the missing blocks need to be brought into the cache from memory. Generally, cache misses are expensive because the processor has to wait for the data (in parallel processing, a process can execute other tasks while it is waiting on data, but there will be some overhead for this). Prefetching is a technique in which data is brought into the cache before the program needs it. In other words, it is a way to reduce cache misses. Prefetching uses some type of prediction mechanism to anticipate the next data that will be needed and brings it into the cache. It is not guaranteed that the prefetched data will be used, though. The goal is to reduce cache misses and so improve overall performance.<br><br> | ||

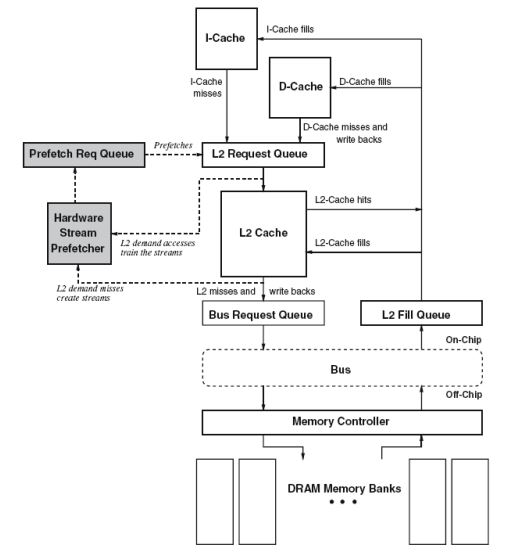

Some architectures have instructions to prefetch data into cache. Programmers and compliers can insert this prefect instruction in the code. This is known as [http://www.ece.lsu.edu/tca/papers/vanderwiel-00.pdf software prefetching]. Software prefetching can be of further two types: binding and non-binding. Binding pre-fetches into a register using a regular load while non-binding prefetches into cache using a special instruction. In [http://www.ece.lsu.edu/tca/papers/vanderwiel-00.pdf hardware prefetching], processor observers the system behavior and issues requests for prefetching. It can be tuned to system implementation and has no code portability issues. Next Line prefetchers, [http://www.bergs.com/stefan/general/general_slides/sld011.htm Stride Prefetchers] and [http://www.owlnet.rice.edu/~elec525/projects/SBpresentation.pdf Stream Prefetchers] are some of the modern techniques to implement hardware based prefetching. Intel 8086 and Motorola 68000 were the first microprocessors to implement instruction prefetch. Graphics Processing Units benefit from prefetching due to spatial locality property of the data.<sup><span id="8body">[[#8foot|[8]]]</span></sup>. The following image shows how a prefetch is organised in a memory system.<sup><span id="26body">[[#26foot|[26]]]</span></sup> | Some architectures have instructions to prefetch data into cache. Programmers and compilers can insert these prefetch instructions in the code. This is known as [http://www.ece.lsu.edu/tca/papers/vanderwiel-00.pdf software prefetching]. Software prefetching comes in two types: binding and non-binding. Binding prefetching loads into a register using a regular load, while non-binding prefetching loads into cache using a special instruction. In [http://www.ece.lsu.edu/tca/papers/vanderwiel-00.pdf hardware prefetching], the processor observes the system behavior and issues prefetch requests. It can be tuned to the system implementation and has no code portability issues. 
Next-line prefetchers, [http://www.bergs.com/stefan/general/general_slides/sld011.htm Stride Prefetchers] and [http://www.owlnet.rice.edu/~elec525/projects/SBpresentation.pdf Stream Prefetchers] are some of the modern techniques to implement hardware-based prefetching. Intel 8086 and Motorola 68000 were the first microprocessors to implement instruction prefetch. Graphics Processing Units benefit from prefetching due to the spatial locality of their data.<sup><span id="8body">[[#8foot|[8]]]</span></sup> The following image shows how a prefetcher is organised in a memory system.<sup><span id="26body">[[#26foot|[26]]]</span></sup> | ||

[[Image:Fetch_1.JPG|center|alt=How a Prefetch fits in memory system]] | [[Image:Fetch_1.JPG|center|alt=How a Prefetch fits in memory system]] | ||

| Line 222: | Line 222: | ||

# Accuracy is defined as fraction of prefetches that are useful. | # Accuracy is defined as fraction of prefetches that are useful. | ||

# Timeliness measures how early the prefetches arrive. | # Timeliness measures how early the prefetches arrive. | ||

Ideally, a system should have high coverage, high accuracy and optimum timeliness. Realistically, aggressive prefetches can increase coverage but decrease accuracy and vice versa. Also, if prefetching is done too early, the fetched data may have to be evicted without being used and if done too late, it can cause cache miss. | Ideally, a system should have high coverage, high accuracy and optimum timeliness. Realistically, aggressive prefetches can increase coverage but decrease accuracy and vice versa. Also, if prefetching is done too early, the fetched data may have to be evicted without being used and if done too late, it can cause a cache miss. | ||
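As a small worked example, accuracy and coverage can be computed from three counts. Accuracy follows the definition given above. Coverage is taken here in its usual sense, the fraction of the original (no-prefetch) misses that prefetching eliminates, which is an assumption about the definition intended in this article. The function and parameter names are hypothetical.

```python
def prefetch_metrics(useful_prefetches, total_prefetches, baseline_misses):
    """Return (accuracy, coverage) for a prefetcher.

    accuracy = useful prefetches / all prefetches issued
    coverage = useful prefetches / misses that occur without prefetching
    """
    accuracy = useful_prefetches / total_prefetches if total_prefetches else 0.0
    coverage = useful_prefetches / baseline_misses if baseline_misses else 0.0
    return accuracy, coverage
```

With 40 prefetches issued, 30 of them useful, and 60 misses in the run without prefetching, the accuracy is 30/40 and the coverage is 30/60. Issuing more prefetches may raise the useful count, and with it coverage, but each wasted prefetch lowers accuracy.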

==Stream Buffer Prefetching== | ==Stream Buffer Prefetching== | ||

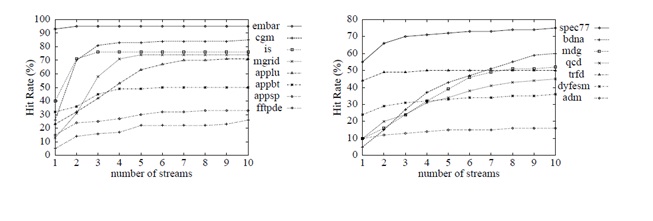

This is a technique for prefetching which uses a FIFO buffer in which each entry is a cacheline and has address (or tag) and a available bit. System prefetches a stream of sequential data into a stream buffer and multiple stream buffers can be used to prefetch multiple streams in parallel. On a cache access, head entries of stream buffers are checked for match along with cache check. If the block is not found in cache but found at the head of stream buffer, it is moved to cache and next entry in the buffer becomes the head. If the block is not found in cache or as head of buffer, data is brought from memory into cache and the subsequent address | This is a prefetching technique that uses a FIFO buffer in which each entry is a cache line and has an address (or tag) and an available bit. The system prefetches a stream of sequential data into a stream buffer, and multiple stream buffers can be used to prefetch multiple streams in parallel. On a cache access, the head entries of the stream buffers are checked for a match along with the cache. If the block is not found in the cache but is found at the head of a stream buffer, it is moved to the cache and the next entry in the buffer becomes the head. If the block is found neither in the cache nor at the head of a buffer, the data is brought from memory into the cache and the subsequent address is assigned to a new stream buffer. Only the heads of the stream buffers are checked during a cache access, not the whole buffer, because checking all the entries in all the buffers would increase hardware complexity. | ||
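The lookup sequence described above can be sketched as a small simulation. Several simplifications are assumed here and are not taken from the source: the cache is an unbounded set of block addresses, a buffer is refilled with the next sequential block as soon as its head is consumed, and the oldest stream buffer is dropped when a new one is allocated. The class name <code>StreamBufferCache</code> is hypothetical.

```python
from collections import deque


class StreamBufferCache:
    """Toy cache with FIFO stream buffers that prefetch sequential blocks."""

    def __init__(self, depth=4, num_buffers=2):
        self.cache = set()                        # blocks resident in the cache
        self.depth = depth                        # entries per stream buffer
        self.buffers = deque(maxlen=num_buffers)  # oldest stream dropped if full
        self.memory_fetches = 0                   # demand fetches only

    def access(self, block):
        if block in self.cache:
            return "cache hit"
        for buf in self.buffers:
            if buf and buf[0] == block:           # only the head is compared
                buf.popleft()                     # next entry becomes the head
                last = buf[-1] if buf else block
                buf.append(last + 1)              # keep the stream running ahead
                self.cache.add(block)             # move the block into the cache
                return "stream buffer hit"
        # Miss in the cache and in every buffer head: fetch on demand and
        # start a new stream at the following block address.
        self.memory_fetches += 1
        self.cache.add(block)
        self.buffers.append(deque(range(block + 1, block + 1 + self.depth)))
        return "miss"
```

In this sketch a sequential walk over blocks 100, 101, 102 misses once and is then served from the head of the stream buffer. An access to a block that sits deeper in a buffer still counts as a miss, since only the head is compared.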

<br> | <br> | ||

<br> | <br> | ||

| Line 235: | Line 235: | ||

On a uniprocessor system, prefetching is definitely helpful to improve performance. On a multiprocessor system prefetching is useful, but there are tighter constrains in implementing because of the fact that data will be shared between different processors or cores. In message passing parallel model, each parallel thread has its own memory space and prefetching can be implemented in same way as for uniprocessor system.<br><br> | On a uniprocessor system, prefetching is definitely helpful to improve performance. On a multiprocessor system prefetching is useful, but the implementation faces tighter constraints because data is shared between different processors or cores. In the message-passing parallel model, each parallel thread has its own memory space, and prefetching can be implemented in the same way as for a uniprocessor system.<br><br> | ||

In shared memory parallel programming, multiple threads that run on different processors share common memory space. If multiple processors use common cache, then prefetching implementation is similar to uniprocessor system. Difficulties arise when each core has its own cache. Some of the case-scenarios that can occur are: | In shared memory parallel programming, multiple threads that run on different processors share common memory space. If multiple processors use common cache, then prefetching implementation is similar to uniprocessor system. Difficulties arise when each core has its own cache. Some of the case-scenarios that can occur are: | ||

# Processor P1 has prefetched some data D1 into its stream buffer but is not used it. At the same time processor P2 reads data D1 into its cache and modifies it and one of the cache coherency protocols would be used to inform processor P1 about this change. D1 is not in P1’s cache so it | # Processor P1 has prefetched some data D1 into its stream buffer but has not used it yet. At the same time, processor P2 reads data D1 into its cache and modifies it, and one of the cache coherency protocols is used to inform processor P1 about this change. D1 is not in P1’s cache, so P1 may simply ignore this. Now, when P1 tries to read D1, it will get the stale data from its stream buffer. One way to prevent this is to extend stream buffers so that they can update their data just like a cache. This adds complexity to the architecture and increases cost.<sup><span id="6body">[[#6foot|[6]]]</span></sup> | ||

# Prefetching mechanism can fetch data D1, D2, D3,….D10 into P1’s buffer. Now due to parallel processing, P1 only needs to operate on D1 to D5 while P2 will operate on remaining data. Some bandwidth was wasted in fetching D6 to D10 into P1 even though it did not use it. There is a trade off to be made here, if prefetching is very conservative then it will lead to miss and if not then it will waste bandwidth. | # Prefetching mechanism can fetch data D1, D2, D3,….D10 into P1’s buffer. Now due to parallel processing, P1 only needs to operate on D1 to D5 while P2 will operate on remaining data. Some bandwidth was wasted in fetching D6 to D10 into P1 even though it did not use it. There is a trade off to be made here, if prefetching is very conservative then it will lead to a miss and if not then it will waste bandwidth. | ||

# In a multiprocessor system, if threads are not bound to a core, | # In a multiprocessor system, if threads are not bound to a core, the operating system can rebalance the threads across different cores. This will require the prefetched buffers to be trashed. | ||

Following are two examples of processors that support prefetching directly in the chip design: | Following are two examples of processors that support prefetching directly in the chip design: | ||

| Line 250: | Line 250: | ||

===Prefetching in AMD<sup><span id="12body">[[#12foot|[12]]]</span></sup>=== | ===Prefetching in AMD<sup><span id="12body">[[#12foot|[12]]]</span></sup>=== | ||

The AMD Family 10h processors | The AMD Family 10h processors use a stream-detection strategy similar to the Intel process described above to trigger prefetching of the next sequential memory location into the L1 cache. (Previous AMD processors fetched into the L2 cache, which introduced the access latency of the L2 cache and hindered performance.) | ||

The AMD Family 10h can prefetch more than just the next sequential block when a stream is detected, though. When this 'unit-stride' prefetcher detects misses to sequential blocks, it can trigger a preset number of prefetch requests from memory. For example, if the preset value is two, the next two blocks of memory will be prefetched when a sequential access is detected. Subsequent detection of misses of sequential blocks may only prefetch a single block. This maintains the 'unit-stride' of two blocks in the cache ahead of the next anticipated read. | The AMD Family 10h can prefetch more than just the next sequential block when a stream is detected, though. When this 'unit-stride' prefetcher detects misses to sequential blocks, it can trigger a preset number of prefetch requests from the memory. For example, if the preset value is two, the next two blocks of memory will be prefetched when a sequential access is detected. Subsequent detection of misses of sequential blocks may only prefetch a single block. This maintains the 'unit-stride' of two blocks in the cache ahead of the next anticipated read. | ||

AMD contends that this is beneficial when processing large data sets which often process sequential data and | AMD contends that this is beneficial when processing large data sets, which are often accessed sequentially and processed in full. In these cases, a larger unit-stride populates the cache with blocks that will ultimately be processed by the CPU. AMD suggests that the optimal number of blocks to prefetch is between 4 and 8, and that fetching too many or fetching too soon has impaired performance in empirical testing. | ||

The AMD Family 10h also includes 'Adaptive Prefetching', a hardware optimization that triggers prefetching when the demand stream catches up to the prefetch stream. In this case, the unit-stride is increased to assure that the prefetcher is fetching at a rate sufficient enough to continuously provide data to the processor. | The AMD Family 10h also includes 'Adaptive Prefetching', a hardware optimization that triggers prefetching when the demand stream catches up to the prefetch stream. In this case, the unit-stride is increased to assure that the prefetcher is fetching at a rate sufficient enough to continuously provide data to the processor. | ||

| Line 268: | Line 268: | ||

==Software vs. Hardware solutions== | ==Software vs. Hardware solutions== | ||

Both software and hardware solutions exists for the cache coherence. Hardware solutions are most widely used than software solutions. Software scheme require support from cache or runtime | Both software and hardware solutions exist for cache coherence. Hardware solutions are more widely used than software solutions. Software schemes require support from the cache or runtime systems and in some cases also require hardware assistance. | ||

<ref>[ftp://ftp.cs.wisc.edu/markhill/Papers/isca91_coherence.pdf Comparison of Hardware and Software Cache Coherence Schemes] Sarita V. Adve, Vikram S. Adve, Mark D. Hill, Mary K. Vernon</ref>Recent studies have shown that software schemes are comparable to the hardware solutions. The only cases for which software schemes perform significantly worse than hardware schemes are when there is | <ref>[ftp://ftp.cs.wisc.edu/markhill/Papers/isca91_coherence.pdf Comparison of Hardware and Software Cache Coherence Schemes] Sarita V. Adve, Vikram S. Adve, Mark D. Hill, Mary K. Vernon</ref>Recent studies have shown that software schemes are comparable to the hardware solutions. The only cases for which software schemes perform significantly worse than hardware schemes are when there is greater than 15% reduction in hit rate due to inaccurate prediction of memory access conflicts or when there are many writes in the program that are not executed at run-time. For relatively well structured and deterministic programs, on the other hand, software schemes perform significantly in the same range as the hardware schemes. | ||

==Cache Coherence Schemes – Fetch and Replacements== | ==Cache Coherence Schemes – Fetch and Replacements== | ||

| Line 294: | Line 294: | ||

===Directory Based Cache Coherence Schemes=== | ===Directory Based Cache Coherence Schemes=== | ||

#In a directory-based system, the data being shared is placed in a common directory that maintains the coherence between caches. The directory acts as a filter through which the | #In a directory-based system, the data being shared is placed in a common directory that maintains the coherence between caches. The directory acts as a filter through which the processors must ask permission to load an entry from the primary memory to its cache. When an entry is changed the directory either updates or invalidates the other caches with that entry. | ||

#Fetch and Replacement Scenarios:<ref>[http://www.di.unipi.it/~vannesch/SPA%202010-11/Silvia.pdf Cache Coherence Techniques] Silvia Lametti</ref> | #Fetch and Replacement Scenarios:<ref>[http://www.di.unipi.it/~vannesch/SPA%202010-11/Silvia.pdf Cache Coherence Techniques] Silvia Lametti</ref> | ||

##Block in Uncached State: The block required by a processor P1 is not in the cache. When a processor P1 tries to read an uncached block X, read miss occurs. | ##Block in Uncached State: The block required by a processor P1 is not in the cache. When a processor P1 tries to read an uncached block X, read miss occurs. | ||

| Line 301: | Line 301: | ||

##Block is Shared: The block requested by a Processor P1 is in shared state. | ##Block is Shared: The block requested by a Processor P1 is in shared state. | ||

##*Read Miss: Requesting Processor P1 is sent the data and P1 is added to the set of processors sharing the data. | ##*Read Miss: Requesting Processor P1 is sent the data and P1 is added to the set of processors sharing the data. | ||

##*Write Miss: Requesting Processor P1 is sent the value. All processors sharing the data are sent | ##*Write Miss: Requesting Processor P1 is sent the value. All processors sharing the data are sent an invalidate message. The block is made exclusive for the Processor P1. | ||

##Block is Exclusive: The current value of the block (say X) is held in the cache of the processor (the owner) P1 which currently owns the block exclusively. | ##Block is Exclusive: The current value of the block (say X) is held in the cache of the processor (the owner) P1 which currently owns the block exclusively. | ||

##*Read Miss: When a processor P2 raises fetch request for the block X, P1 is sent | ##*Read Miss: When a processor P2 raises a fetch request for the block X, P1 is sent a notification that a fetch request has been posted for a block currently held by P1. P1 sends the data back to the directory, where it is written to memory and sent back to the requesting processor P2. P2 will now be added to the set of processor sharing the block X along with P1. | ||

##*Data Write-back: The owner processor P1 is replacing the block and hence must write it back. This cause the copy of block X in memory to be up to date. The block will now be uncached and the set maintaining the list of shared processors will be emptied | ##*Data Write-back: The owner processor P1 is replacing the block and hence must write it back. This brings the copy of block X in memory up to date. The block is now uncached, and the set of processors sharing it is emptied. | ||

##*Write Miss: A processor P2 makes write request to the block X which is currently owned by P1. P1 will be notified of this, which causes P1 to update the memory with its value of block X. The updated block X is now sent to P2 and it is made exclusive for P2. | ##*Write Miss: A processor P2 makes write request to the block X which is currently owned by P1. P1 will be notified of this, which causes P1 to update the memory with its value of block X. The updated block X is now sent to P2 and it is made exclusive for P2. | ||
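The directory transitions listed above can be sketched as a small state machine for a single block. This is an illustration under assumptions: the class <code>DirectoryEntry</code> and its method names are hypothetical, data movement is not modelled, and the behaviour for misses on an uncached block (the requester becomes the sole sharer on a read, or the exclusive owner on a write) is assumed rather than taken from the list.

```python
class DirectoryEntry:
    """Directory state for one block: uncached, shared, or exclusive."""

    def __init__(self):
        self.state = "uncached"
        self.sharers = set()

    def read_miss(self, proc):
        # Uncached or shared: the requester joins the sharer set.
        # Exclusive: the owner writes the block back (not modelled) and
        # then shares it with the requester.
        self.sharers.add(proc)
        self.state = "shared"

    def write_miss(self, proc):
        """Make proc the exclusive owner; return the processors notified.

        For a shared block these are the sharers that get invalidated.
        For an exclusive block it is the old owner, which must first
        update memory.
        """
        notified = self.sharers - {proc}
        self.sharers = {proc}
        self.state = "exclusive"
        return notified

    def write_back(self, proc):
        # Only the exclusive owner can write the block back on replacement.
        if self.state == "exclusive" and self.sharers == {proc}:
            self.sharers = set()
            self.state = "uncached"
```

Tracing a short sequence (P1 reads, P2 reads, P1 writes, P2 reads, P2 writes, P2 replaces the block) walks the entry through shared, exclusive, shared, exclusive, and finally back to uncached.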

<span id="25foot">[[#25body|25.]]</span> https://www.ece.cmu.edu/~ece548/handouts/07assoc.pdf <br />

<span id="26foot">[[#26body|26.]]</span> http://www.ece.cmu.edu/~ece740/f11/lib/exe/fetch.php?media=wiki:lectures:onur-740-fall11-lecture24-prefetching-afterlecture.pdf <br />

<span id="27foot">[[#27body|27.]]</span> http://en.wikipedia.org/wiki/Cache_algorithms <br />

<span id="28foot">[[#28body|28.]]</span> http://www.dauniv.ac.in/downloads/CArch_PPTs/CompArchCh09L04RelplacementPolicies.pdf <br />

<br />

=Other References=

<references/>

Latest revision as of 01:18, 3 March 2015

Link to write-up for the topic: https://docs.google.com/document/d/1ML-r6viXBcbKUvHfUnHtsi4EgRzkPw30S8t1Qn3WnUk/

Link to page started with: http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/6a_cs

Cache Hierarchy

In a simple computer model, the processor reads data and instructions from memory and operates on the data. The operating frequency of CPUs has increased faster than the speed of memory and memory interconnects. Over the years, Intel microprocessor speed has increased by roughly 60% per year, while memory access times have improved by less than 10% per year. Multi-core architectures have also put more demand on memory bandwidth. This increases the latency of memory accesses, and the CPU would have to remain idle for much of the time. As a result, memory has become a performance bottleneck.

To solve this problem, the “cache” was invented. A cache is temporary, volatile storage like primary memory, but it runs at a speed close to the core frequency. The CPU can access data and instructions in the cache in a few clock cycles, while accessing data in main memory can take more than 50 cycles. In the early days of computing, the cache was implemented as a standalone chip outside the processor. In today’s processors, the cache is implemented on the same die as the core.

There can be multiple levels of cache, each level farther from the core and larger than the one before it. L1 is closest to the CPU and, as a result, fastest to access. Next to L1 is the L2 cache, and then L3. The L1 cache is divided into an instruction cache and a data cache. This is better than a larger combined cache because the instruction cache, being read-only, is easy to implement, while the data cache must support both reads and writes.

Cache management is impacted by three characteristics of modern processor architectures: multilevel cache hierarchies, multi-core and multiprocessor chip and systems designs, and shared vs. private caches.

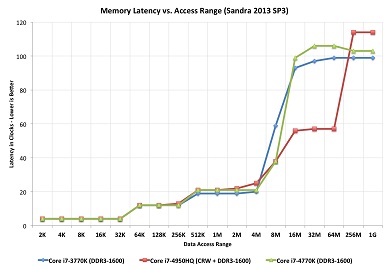

Multi-level cache hierarchies are used by most contemporary chip designs so that memory access can keep pace with CPU speeds, which are advancing more rapidly. Two-level and three-level cache hierarchies are common. L1 typically ranges from 16KB to 64KB and provides access in 2-4 cycles. L2 typically ranges from 512KB to as much as 8MB and provides access in 6-15 cycles. L3 typically ranges from 4MB to 32MB and provides access to the data it contains within 30-50 cycles.[10]
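One rough way to see why several levels help is to compute an average memory access time (AMAT). The sketch below uses hit latencies inside the ranges quoted above; the miss rates and the 200-cycle main memory latency are illustrative assumptions, not measurements, and `amat` is a hypothetical helper name.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: list of (hit_latency_cycles, local_miss_rate), ordered L1 first.
    memory_latency: cycles to reach main memory once the last level misses.
    """
    total = memory_latency
    # Fold from the last cache level back toward the core:
    # time(level) = hit latency + miss rate * time(next level).
    for hit_latency, miss_rate in reversed(levels):
        total = hit_latency + miss_rate * total
    return total


# Assumed example values: (latency, miss rate) for L1, L2 and L3.
three_level = [(3, 0.10), (10, 0.40), (40, 0.50)]
l1_only = [(3, 0.10)]

print(amat(three_level, 200))   # three levels in front of memory
print(amat(l1_only, 200))       # the same L1 with no L2 or L3 behind it
```

Under these assumed numbers the three-level hierarchy averages under 10 cycles per access, while the L1-only configuration averages 23, because every L1 miss pays the full memory latency.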

Multi-core and multiprocessor designs place additional constraints on cache management by requiring it to consider all processes running concurrently in cache coherency, replenishment, fetching, and other management functions.

In multiprocessors with multiple levels of cache, the way the caches are managed depends on the architecture of the processors, but there are three main methods: inclusive, non-inclusive, and exclusive. In inclusive caches, all data in the L1 cache must also be somewhere in the L2 cache. In exclusive caches, data is guaranteed to be in at most one of the L1 and L2 caches, never in both.[24] Non-inclusive caches lie between the two: a block may be present in both L1 and L2, or in only one of them. Exclusive caches can store more data, but inclusive caches help with cache coherence. Another advantage of inclusive caches is that the larger cache can use larger cache lines, which reduces the size of the secondary cache tags. Most current processors, such as the Intel i7, use inclusive caches. However, as cache sizes increase, non-inclusive and exclusive policies are likely to become more common.[23]

The three-level cache came back into use with the introduction of multiple processor cores, where the L3 was added to the CPU die. It became common for each level to be larger than the one before it, and today it is not uncommon to find L3 cache sizes of eight megabytes. This trend appears likely to continue for the foreseeable future.[19]

Below is a table that illustrates the variety with which these characteristics have been combined in processors from four manufacturers over the past 10 years.

| Company & Processor | # cores | L1 cache Per core | L2 cache Per core | L3 cache | Year |

|---|---|---|---|---|---|

| Intel Xeon Clovertown | 2 | I:4*32KB D:4*32KB | 2*4MB | - | Jan 2007 |

| Intel Xeon 3200 series | 4 | - | 2*4MB | - | Jan 2007 |

| AMD Athlon 64FX | 2 | I:64KB D:4KB Both 2-way set assoc. | 1MB 16way set assoc. | - | Jan 2007 |

| AMD Athlon 64X2 | 2 | I:64KB D:4KB Both 2-way set assoc. | 512KB/1MB 16way set assoc. | 2MB | Jan 2007 |

| AMD Barcelona | 4 | I:64KB D:64KB | 512KB | 2MB Shared | Aug 2007 |

| Sun Microsystems Ultra Sparc T2 | 8 | I:16KB D:8KB | 4MB (8 banks) 16way set assoc. | - | Oct 2007 |

| Intel Xeon Wolfdale DP | 2 | D:96KB | 6MB | - | Nov 2007 |

| Intel Xeon Hapertown | 4 | D:96KB | 2*6MB | - | Nov 2007 |

| AMD Phenom | 4 | I:64KB D:64KB | 512KB | 2MB Shared | Nov 2007 Mar 2008 |

| Intel Core 2 Duo | 2 | I:32KB D:32KB | 2/4MB 8 way set assoc. | - | 2008 |

| Intel Penryn Wolfdale DP | 4 | - | 6-12MB | 6MB | Mar 2008 Aug 2008 |

| Intel Core 2 Quad Yorkfield | 4 | D:96KB | 12MB | - | Mar 2008 |

| AMD Toliman | 3K10 | I:64KB D:64KB | 512KB | 2MB Shared | Apr 2008 |

| Azul Systems Vega3 7300 Series | 864 | 768GB | - | - | May 2008 |

| AMD Athlon II X2 | 2 | 128 KB x 2 | 512 KB x 2 1MB x 2 | - | 2009 |

| AMD Athlon II X3 | 3 | 128 KB x 3 | 512 KB x 3 | - | 2009 |

| AMD Athlon II X4 | 4 | 128 KB x 4 | 512 KB x 4 | - | 2009 |

| AMD Phenom II X2 | 2 | 128 KB x 2 | 512 KB x 2 | 6MB | 2009 |

| AMD Phenom II X3 | 3 | 128 KB x 3 | 512 KB x 3 | 6MB | 2009 |

| AMD Phenom II X4 | 4 | 128 KB x 4 | 512 KB x 4 | 4-6MB | 2009 |

| AMD Phenom II X6 | 6 | 128 KB x 6 | 512 KB x 4 | 6MB | 2010 |

| Intel Core i3 | 2 | 32+32 KB x 2 | 256 KB x 2 | 4MB | 2010 |

| Intel Core i5 - 6 Series | 2 | 32+32 KB x 2 | 256 KB x 2 | 4MB | 2010 |

| Intel Core i5 - 7 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB | 2010 |

| Intel Core i5 - 2400 Series Core i5 - 2500 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 6 MB | 2010 |

| Intel Core i7 - 8 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB | 2010 |

| Intel Core i7 - 9 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB | 2010 |

| Intel Core i7 - 970 | 6 | 32+32 KB x 6 | 256 KB x 6 | 12 MB | 2010 |

| Sun SPARC T4 | 8 | 8 x 16 + 16 | 8 x 128 KB | 4 MB | 2011 |

| Sun SPARC64 IXfx | 16 | 32 KB x 16 | 12 MB | - | 2011 |

| Intel i7-2600 | 4 | 32 x 16 KB x 4 | 256 KB x 4 | 8MB | 2011 |

| Intel i7-3970X | 6 | 32 x 16 KB x 6 | 256 KB x 6 | 15MB | November 2012 |

| Intel i5-3330 | 4 | 32 x 32 KB x 4 | 256 KB x 4 | 6MB | 2012 |

| Intel i5-3570K | 4 | 32 x 32 KB x 4 | 256 KB x 4 | 6MB | 2012 |

| Intel i7-3820 | 4 | 32 x 32 KB x 4 | 256 KB x 4 | 10MB | February 2012 |

| Intel i7-3540M | 2 | 32 x 32 KB x 2 | 256 KB x 2 | 4MB | January 2013 |

| Intel i5-3339Y | 2 | 32 x 32 KB x 2 | 256 KB x 2 | 3MB | January 2013 |

| Intel i5-4670 | 4 | 4 x 32 x 2 8-way set associative | 256 KB x 4 | 6MB | June 2013 |

| Intel i7-4960HQ | 4 | 4 x 32 x 2 8-way set associative | 256 KB x 4 | 6MB | September 2013 |

| Intel i5-4210M | 2 | 2 x 32 KB x 2 8-way set associative | 256 KB x 2 | 3MB | April 2014 |

| Intel i7-4790K | 4 | 4 x 32 KB x 2 8-way set associative | 256 KB x 4 | 8MB | June 2014 |

| Intel i7-4980HQ | 4 | 4 x 32 KB x 2 8-way set associative | 256 KB x 4 | 6MB | July 2014 |

| Intel i5-5350U | 2 | 2 x 32 KB x 2 8-way set associative | 256 KB x 2 | 3MB | Jan 2015 |

| Intel i7-4722HQ | 4 | 4 x 32 KB x 2 8-way set associative | 256 KB x 4 | 6MB | Jan 2015 |

Note: The 'x #' in the L1 and L2 columns indicates that this cache is for each core.

The next two sections will discuss cache write policies and cache prefetching as techniques to improve the performance of these complex caching architectures by reducing write-miss and read-miss rates.

Cache Sizes[22]

From the table above we can see that cache sizes have increased steadily over time, in line with Moore's law. Each additional memory pool pushes back the need to access main memory and, in specific cases, considerably reduces cache misses. It might seem logical, then, to devote huge amounts of on-die resources to cache, but it turns out there’s a diminishing marginal return to doing so. Larger caches are slower, and at six transistors per bit of SRAM (6T) they are also expensive. Past a certain point, it makes more sense to spend the chip’s power budget and transistor count on more execution units, better branch prediction, or additional cores.

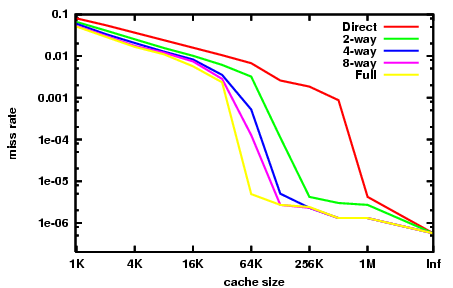

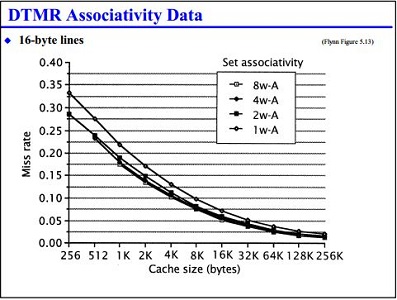

Cache Associativity[25]

Increasing cache associativity generally increases the hit rate, and hence the performance of the cache, because it reduces conflict misses. This comes with the penalty of increased cost and latency. Furthermore, since cache sizes have been increasing steadily, there has been an implicit decrease in conflict misses. Beyond eight-way associativity, the cache's latency increases so much that the decrease in miss rate isn't worth it. The latest Intel i7 processors have 8-way set associative L1 and L2 caches.
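The effect of associativity on conflict misses can be seen in a toy simulation. The sketch below is an illustration under simplifying assumptions (LRU replacement within each set, 64-byte lines, a hypothetical `count_misses` helper); it is not a model of any particular processor.

```python
from collections import OrderedDict


def count_misses(addresses, num_lines, ways, line_size=64):
    """Count misses in a set-associative cache with LRU replacement.

    ways=1 models a direct-mapped cache; ways=num_lines is fully associative.
    """
    num_sets = num_lines // ways
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for addr in addresses:
        block = addr // line_size
        lines = sets[block % num_sets]
        if block in lines:
            lines.move_to_end(block)        # mark as most recently used
        else:
            misses += 1
            if len(lines) == ways:
                lines.popitem(last=False)   # evict the least recently used
            lines[block] = True
    return misses


# Two blocks that collide in the same set of an 8-line cache.
trace = [0, 8 * 64] * 4

print(count_misses(trace, num_lines=8, ways=1))   # 8: every access conflicts
print(count_misses(trace, num_lines=8, ways=2))   # 2: only the cold misses
```

With the same total capacity, the 2-way cache keeps both blocks in one set, so only the two compulsory misses remain.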

Cache Write Policies

In section 6.2.3,[10] cache write hit policies and write miss policies were explored. The write hit policies, write-through and write-back, determine when data written in the local cache is propagated to the next lower level of memory. Write-through writes data to both the cache and memory on a write request. Write-back writes to the cache first and to memory only when a flush is required. The write miss policies covered in the text,[10] write-allocate and write no-allocate, determine whether a memory block is stored in a cache line after the write occurs. Note that since a miss occurred on the write, the block is not already in a cache line. Write miss policies can also be expressed in terms of write-allocate, fetch-on-write, and write-before-hit. These can be used in combination with the same write-through and write-back hit policies to define the complete cache write policy. Investigating alternative policies such as these is important since write misses produce on average one-third of all cache misses.

Write hit policies

- Write-through: Also known as store-through, this policy writes to main memory whenever a write is performed to the cache.

  - Advantages:

    - Easy to implement

    - Main memory has the most recent copy of the data

    - Read misses never result in writes to main memory

  - Disadvantages:

    - Every write needs to access main memory

    - Bandwidth intensive

    - Writes are slower

- Write-back: Also known as store-in or copy-back, this policy writes to main memory only when a block of data is purged from the cache.

  - Advantages:

    - Writes are as fast as the cache memory

    - Multiple writes to a block require only one write to main memory

    - Less bandwidth intensive

  - Disadvantages:

    - Harder to implement

    - Main memory may not be consistent with the cache

    - Reads that result in data replacement may cause dirty blocks to be written to main memory
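The bandwidth difference between the two write hit policies can be illustrated by counting how many writes reach main memory for a single cached block. This is a minimal sketch; the event names and the `memory_writes` helper are assumptions made for the example.

```python
def memory_writes(trace, policy):
    """Count writes that reach main memory for one cached block.

    trace: sequence of "w" (write hit) and "evict" events.
    policy: "write-through" or "write-back".
    """
    writes = 0
    dirty = False
    for event in trace:
        if event == "w":
            if policy == "write-through":
                writes += 1      # every store also goes to memory
            else:
                dirty = True     # only the cached copy changes
        elif event == "evict":
            if policy == "write-back" and dirty:
                writes += 1      # the dirty block is flushed once
            dirty = False
    return writes


trace = ["w"] * 5 + ["evict"]
print(memory_writes(trace, "write-through"))   # 5
print(memory_writes(trace, "write-back"))      # 1
```

Five writes to the same block cost five memory writes under write-through but a single one under write-back, at the price of memory being stale until the eviction.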

Write miss policies

Write miss policies can help eliminate write misses and therefore reduce bus traffic. This reduces the wait-time of all processors sharing the bus.

- Write-allocate vs Write no-allocate: When a write miss occurs in the cache, there may or may not be a line in the cache allocated to the block. For write-allocate, there will be a line in the cache for the written data. This policy is typically associated with write-back caches. For no-write-allocate, there will not be a line in the cache.

- Fetch-on-write vs no-fetch-on-write: Fetch-on-write causes the block of data to be fetched from a lower level of the memory hierarchy when a write miss occurs, so a block is fetched on every write miss. Fetch-on-write determines whether the block is fetched from memory, and write-allocate determines whether the memory block is stored in a cache line. Certain write policies may allocate a line in the cache for data that is written by the CPU without retrieving it from memory first.

- Write-before-hit vs no-write-before-hit: The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache.

Combination Policies

The two combinations of fetch-on-write and no-write-allocate are blocked out in the above diagram. They are considered unproductive since the data is fetched from lower-level memory but is not stored in the cache. Temporal and spatial locality suggest that this data will be used again, and will therefore need to be retrieved a second time. Three combinations named write-validate, write-around, and write-invalidate all use no-fetch-on-write. They result in 'eliminated misses' when compared to a fetch-on-write policy. In general, this will yield better cache performance if the overhead to manage the policy remains low.

- Write-validate: This policy is a combination of no-fetch-on-write and write-allocate.[4] It allows partial lines to be written to the cache on a miss. It provides better performance, works with machines that have various line sizes, and does not add instruction execution overhead to the program being run. Write-validate requires that the lower-level memory system can support writes of partial lines. No-fetch-on-write has the advantage of avoiding an immediate bus transaction to retrieve the block from memory. For a multiprocessor with a cache coherency model, a bus transaction will still be required to get exclusive ownership of the block. While this appears to negate the advantages of no-fetch-on-write, the process is able to continue other tasks while this transaction is occurring, including rewriting to the same cache location. Tests by the author demonstrated more than a 90% reduction in write misses when compared to fetch-on-write policies.

- Write-invalidate: This policy is a combination of write-before-hit, no-fetch-on-write, and no-write-allocate.[4] It invalidates lines when there is a miss. With this policy, the copy that exists in lower-level memory after the write miss differs from the one in the cache. For write hits, the data is simply written into the cache using the cache hit policy. Thus, for hits, the cache is not written around. Tests by the author demonstrated a 35-50% reduction in write misses when compared to fetch-on-write policies.

- Write-around: This policy is a combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit.[4] It uses a non-blocking write scheme: the write bypasses the cache, goes directly to the next lower level of memory, and leaves the existing line in the cache unaltered on a hit or a miss. The cache is therefore written around for both hits and misses. This strategy shows performance improvements when the data that is written will not be reread in the near future. The author notes that in all but a few cases write-around performs worse than write-validate. Most applications tend to reread what they have recently written. With a write-around policy, this results in a cache miss and a read from lower-level memory; with write-validate, the data would already be in the cache. Tests by the author demonstrated a 40-70% reduction in write misses when compared to fetch-on-write policies.

Of these three combinations, write-invalidate performed the worst. The author notes that it does perform better than fetch-on-write and is easy to implement.

- Fetch-on-write: When used with write-allocate, fetch-on-write stores the block and processes the write to cache as expected. This policy was used as the base-line for determining the performance of the other three policy combinations. In general, all three previous combinations exhibited fewer misses than this policy.
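The way the three write miss choices combine into the named policies above can be summarised as a lookup. The function below is only a restatement of the combinations described in this section; the name `combined_policy` and the label "unproductive" for the blocked-out combinations are choices made for this sketch.

```python
def combined_policy(fetch_on_write, write_allocate, write_before_hit=False):
    """Name the write miss policy formed by three yes/no choices."""
    if fetch_on_write:
        # Fetching a block without allocating a line for it wastes the fetch.
        return "fetch-on-write" if write_allocate else "unproductive"
    if write_allocate:
        return "write-validate"
    # No fetch and no allocation: the write skips the cache on a miss.
    return "write-invalidate" if write_before_hit else "write-around"


print(combined_policy(fetch_on_write=False, write_allocate=True))
print(combined_policy(False, False, write_before_hit=True))
print(combined_policy(False, False, write_before_hit=False))
```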

Cache Replacement Policies

Whenever a cache is full, or a new block conflicts with existing blocks in a set, a block must be evicted from a cache line in order to make room for the new block. Replacement policies determine which block to evict.

- Direct-mapped caches: An incoming block can be placed in only one location, so there is no choice of which block to evict.

- Set-associative and fully associative caches: Any of several cache lines is a candidate for eviction when a block comes in.[28]

In general, a replacement policy should evict the block whose removal will minimise future cache misses.

- Least Recently Used (LRU) policy: The cache ranks the cache lines in a set by how recently they have been accessed and evicts the block in the least recently accessed line. Whenever a cache line is referenced, the recency information must be updated, which leads to complicated hardware.

- Least Frequently Used (LFU) policy: A counter tracks how often each cache line is referenced, and the block in the least frequently used line is evicted.

There are other replacement policies such as Most Recently Used (MRU), Random Replacement (RR), Pseudo-LRU (PLRU), Segmented LRU (SLRU) and so on. Each type of replacement policy works best for a specific type of access pattern or workload.[27] Most recent Intel i7 processors use a Pseudo-LRU replacement policy owing to its lower latency and low power consumption.
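A short simulation shows how LRU and LFU can pick different victims on the same access pattern. This is a sketch of a single fully associative set; the `simulate` helper and the tie-breaking rule for LFU (least recent among equally frequent blocks) are assumptions made for the example.

```python
from collections import Counter, OrderedDict


def simulate(trace, capacity, policy):
    """Return the number of misses for one fully associative set."""
    cache = OrderedDict()   # key order tracks recency, oldest first
    freq = Counter()        # reference count per resident block
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)
            freq[block] += 1
            continue
        misses += 1
        if len(cache) == capacity:
            if policy == "LRU":
                victim = next(iter(cache))
            else:
                # LFU: lowest count wins; min() scans oldest first,
                # so ties go to the least recently used block.
                victim = min(cache, key=lambda b: freq[b])
            del cache[victim]
            del freq[victim]
        cache[block] = True
        freq[block] = 1
    return misses


trace = ["A", "A", "A", "B", "C", "A"]
print(simulate(trace, capacity=2, policy="LRU"))   # 4
print(simulate(trace, capacity=2, policy="LFU"))   # 3
```

Here LRU evicts the heavily used block A because B was touched more recently, while LFU keeps A and evicts B, saving one miss on this particular trace.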

Prefetching

Prefetching is a technique that can be used in addition to write-hit and write-miss policies to improve the utilization of the cache. Microprocessors, such as those listed in Table 1, support prefetching logic directly in the chip. A cache is very efficient in terms of access time once the data or instructions are in it. But when a process tries to access something that is not already in the cache, a cache miss occurs and the data must be brought into the cache from memory. Cache misses are generally expensive because the processor has to wait for the data. (In parallel processing, a process can execute other tasks while it waits, but this carries some overhead.) Prefetching brings data into the cache before the program needs it; in other words, it is a way to reduce cache misses and so improve overall performance. It uses some type of prediction mechanism to anticipate the data that will be needed next. There is no guarantee, however, that the prefetched data will be used.

Some architectures have instructions to prefetch data into the cache. Programmers and compilers can insert these prefetch instructions into the code. This is known as software prefetching. Software prefetching is of two types: binding and non-binding. A binding prefetch loads into a register using a regular load, while a non-binding prefetch loads into the cache using a special instruction. In hardware prefetching, the processor observes the system's behavior and issues prefetch requests itself. It can be tuned to the system implementation and has no code portability issues. Next-line prefetchers, stride prefetchers, and stream prefetchers are some of the modern techniques for hardware-based prefetching. The Intel 8086 and Motorola 68000 were the first microprocessors to implement instruction prefetch. Graphics Processing Units benefit from prefetching because of the spatial locality of their data.[8] The following image shows how a prefetcher is organised in a memory system.[26]

Advantages

- Masks data access latency caused by cache misses.[20]

- Improves the performance between the processor and the memory.[20]

Disadvantages

- Wastes bandwidth when prefetched data is not used.

- Hardware prefetching requires complex architecture. Second order effect is cost of implementation on silicon and validation costs.

- Software prefetching adds additional instructions to the program, making the program larger.

- If same cache is used for prefetching, then prefetching could cause other cache blocks to be evicted. If the evicted blocks are needed, then that will generate a cache miss. This can be prevented by having a separate cache for prefetching but it comes with hardware costs.

- When scheduler changes the task running on a processor, prefetched data may become useless.

Effectiveness

Prefetching effectiveness can be tracked by the following metrics:

- Coverage is defined as fraction of original cache misses that were prefetched resulting in cache hit.

- Accuracy is defined as fraction of prefetches that are useful.

- Timeliness measures how early the prefetches arrive.

Ideally, a system should have high coverage, high accuracy and optimum timeliness. Realistically, aggressive prefetches can increase coverage but decrease accuracy and vice versa. Also, if prefetching is done too early, the fetched data may have to be evicted without being used and if done too late, it can cause a cache miss.
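Coverage and accuracy as defined above can be computed directly from three counts. The counter names below are hypothetical, and the sample numbers are made up purely to show the arithmetic.

```python
def prefetch_metrics(baseline_misses, prefetches_issued, useful_prefetches):
    """Return (coverage, accuracy) for a prefetcher.

    baseline_misses: cache misses observed without prefetching.
    prefetches_issued: total prefetch requests sent.
    useful_prefetches: prefetched blocks that turned a miss into a hit.
    """
    coverage = useful_prefetches / baseline_misses
    accuracy = useful_prefetches / prefetches_issued
    return coverage, accuracy


# Made-up counts: 1000 original misses, 800 prefetches, 600 of them useful.
coverage, accuracy = prefetch_metrics(1000, 800, 600)
print(f"coverage={coverage:.2f} accuracy={accuracy:.2f}")
```

A more aggressive prefetcher raises `prefetches_issued`; coverage can only improve if `useful_prefetches` grows with it, and accuracy drops whenever it does not.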

Stream Buffer Prefetching

This prefetching technique uses a FIFO buffer in which each entry is a cache line with an address (or tag) and an available bit. The system prefetches a stream of sequential data into a stream buffer, and multiple stream buffers can be used to prefetch multiple streams in parallel. On a cache access, the head entries of the stream buffers are checked for a match along with the cache itself. If the block is not found in the cache but is found at the head of a stream buffer, it is moved to the cache and the next entry in the buffer becomes the head. If the block is found neither in the cache nor at the head of a buffer, the data is brought from memory into the cache and the subsequent address is assigned to a new stream buffer. Only the heads of the stream buffers are checked during a cache access, not the whole buffer, because checking every entry in every buffer would increase hardware complexity.
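The lookup sequence described above can be sketched in a few lines. The model below is deliberately simplified and several details are assumptions: the cache is unbounded, prefetched blocks are treated as available immediately, buffers are reallocated round-robin, and all names are hypothetical.

```python
from collections import deque


class StreamBufferCache:
    """Toy cache with sequential stream buffers; only heads are compared."""

    def __init__(self, num_buffers=2, depth=4):
        self.cache = set()                 # unbounded, to keep the sketch short
        self.depth = depth
        self.buffers = [deque() for _ in range(num_buffers)]
        self.next_victim = 0               # round-robin reallocation (assumed)
        self.stats = {"cache_hit": 0, "stream_hit": 0, "miss": 0}

    def access(self, block):
        if block in self.cache:
            self.stats["cache_hit"] += 1
            return "cache_hit"
        for buf in self.buffers:
            if buf and buf[0] == block:    # match at the head of a buffer
                buf.popleft()              # the next entry becomes the head
                tail = buf[-1] if buf else block
                buf.append(tail + 1)       # keep prefetching sequentially
                self.cache.add(block)      # move the block into the cache
                self.stats["stream_hit"] += 1
                return "stream_hit"
        # Miss everywhere: fetch the block and start a new stream after it.
        self.cache.add(block)
        self.buffers[self.next_victim] = deque(
            range(block + 1, block + 1 + self.depth))
        self.next_victim = (self.next_victim + 1) % len(self.buffers)
        self.stats["miss"] += 1
        return "miss"


sb = StreamBufferCache()
for block in range(10):                    # one sequential stream of blocks
    sb.access(block)
print(sb.stats)   # {'cache_hit': 0, 'stream_hit': 9, 'miss': 1}
```

After the first miss allocates a buffer, every later sequential access is served from the head of that buffer, which is the behaviour the head-only comparison is designed to capture.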

The plot above shows how the hit rate varies with the number of stream buffers for different benchmarks. Here the hit rate is the fraction of on-chip misses that hit in the streams. The graph on the left is the result of running benchmark programs from the NAS suite, whereas the graph on the right is from running programs from the PERFECT suite. As the graphs show, the majority of the benchmarks have a hit rate in the range of 50-80%. It is also evident that the hit rate saturates as the number of streams is increased. This saturation is related to the number of unique array references in the benchmark programs. Lower and higher hit rates are due to large numbers of non-unit-stride and unit-stride references, respectively.[7]

Prefetching in Parallel Computing

On a uniprocessor system, prefetching clearly helps performance. On a multiprocessor system prefetching is still useful, but there are tighter constraints on its implementation because data is shared between different processors or cores. In the message passing parallel model, each parallel thread has its own memory space and prefetching can be implemented in the same way as on a uniprocessor system.

In shared memory parallel programming, multiple threads that run on different processors share a common memory space. If multiple processors use a common cache, then the prefetching implementation is similar to that of a uniprocessor system. Difficulties arise when each core has its own cache. Some of the scenarios that can occur are:

- Processor P1 has prefetched some data D1 into its stream buffer but has not yet used it. At the same time, processor P2 reads D1 into its cache and modifies it, and one of the cache coherency protocols is used to inform P1 about this change. D1 is not in P1’s cache, so P1 may simply ignore the notification. Now, when P1 tries to read D1, it will get the stale data from its stream buffer. One way to prevent this is to improve stream buffers so that they can modify their data just like a cache. This adds complexity to the architecture and increases cost.[6]

- The prefetching mechanism can fetch data D1, D2, D3, … D10 into P1’s buffer. Because of parallel processing, P1 only needs to operate on D1 to D5 while P2 operates on the remaining data. Bandwidth was wasted fetching D6 to D10 into P1 even though P1 did not use them. There is a trade-off to be made here: if prefetching is very conservative it will lead to misses, and if it is not it will waste bandwidth.

- In a multiprocessor system, if threads are not bound to a core, the operating system can rebalance the threads across cores. This requires the prefetched buffers to be discarded.

Following are two examples of processors that support prefetching directly in the chip design:

Prefetching in Intel Core i7[11]

The Intel 64 Architecture, including the Intel Core i7, includes both instruction and data prefetching directly on the chip.

The Data Cache Unit prefetcher is a streaming prefetcher for L1 caches that detects ascending access to data that has been loaded very recently. The processor assumes that this ascending access will continue, and prefetches the next line.

The data prefetch logic (DPL) maintains two arrays to track recent accesses to memory: one for upstreams, which has 12 entries, and one for downstreams, which has 4 entries. As pages are accessed, their addresses are tracked in these arrays. When the DPL detects an access to a page that is sequential to an existing entry, it assumes this sequential access will continue and prefetches the next cache line from memory.

Prefetching in AMD[12]

The AMD Family 10h processors use a stream-detection strategy similar to the Intel approach described above to trigger prefetching of the next sequential memory location into the L1 cache. (Previous AMD processors fetched into the L2 cache, which introduced the access latency of the L2 cache and hindered performance.)

The AMD Family 10h can prefetch more than just the next sequential block when a stream is detected, though. When this 'unit-stride' prefetcher detects misses to sequential blocks, it can trigger a preset number of prefetch requests from the memory. For example, if the preset value is two, the next two blocks of memory will be prefetched when a sequential access is detected. Subsequent detection of misses of sequential blocks may only prefetch a single block. This maintains the 'unit-stride' of two blocks in the cache ahead of the next anticipated read.

AMD contends that this is beneficial for applications that work through large data sets sequentially and process all the data in the stream. In these cases, a larger unit-stride populates the cache with blocks that will ultimately be processed by the CPU. AMD suggests that the optimal number of blocks to prefetch is between 4 and 8, and that fetching too many or fetching too soon has impaired performance in empirical testing.

The AMD Family 10h also includes 'Adaptive Prefetching', a hardware optimization that triggers prefetching when the demand stream catches up to the prefetch stream. In this case, the unit-stride is increased to ensure that the prefetcher is fetching at a rate sufficient to continuously provide data to the processor.

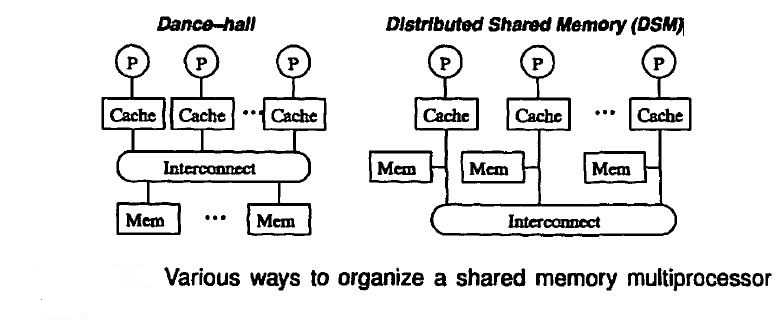

Cache Coherence Support

<ref>Cache coherence Wiki</ref>Cache coherence (also cache coherency) refers to the consistency of data stored in local caches of a shared resource. In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of any one instruction operand: one copy in main memory and one in each cache memory. When one copy of an operand is changed, the other copies of the operand must be changed as well. Cache coherence is the discipline that ensures that changes in the values of shared operands are propagated throughout the system in a timely fashion.

Cache coherence is achieved if the following conditions are met.

- If a processor P1 writes a value A to a memory location X and reads from the same memory location X, then P1 should be returned value A provided no other write happened in-between.

- If a processor P1 writes a value A to a memory location X and another processor P2 reads from the same memory location X, then P2 should be returned the value A written by P1 provided no other write happened in-between.

- Consider that a processor P1 writes a value A to a memory location X followed by another processor P2 which writes a value B to the same memory location X. In this case the writes should appear in the same order i.e. A and then B.

Software vs. Hardware solutions

Both software and hardware solutions exist for cache coherence. Hardware solutions are more widely used than software solutions. Software schemes require support from cache or runtime systems and in some cases also require hardware assistance.

<ref>Comparison of Hardware and Software Cache Coherence Schemes Sarita V. Adve, Vikram S. Adve, Mark D. Hill, Mary K. Vernon</ref>Recent studies have shown that software schemes are comparable to hardware solutions. The only cases in which software schemes perform significantly worse than hardware schemes are when there is a greater than 15% reduction in hit rate due to inaccurate prediction of memory access conflicts, or when there are many writes in the program that are not executed at run-time. For relatively well structured and deterministic programs, on the other hand, software schemes perform in the same range as hardware schemes.

Cache Coherence Schemes – Fetch and Replacements

Invalidation Schemes vs. Update Strategies<ref>Cache Coherence Josep Torrellas</ref>

- Invalidation: On a write, all other caches with a copy are invalidated. Invalidation is least suitable when there is a single producer and many consumers of the data, since every consumer must re-fetch the block after each write. A shared bus is used as the medium to enforce write serialization.

- Update: On a write, all other caches with a copy are updated. The update strategy is least suitable when one processing element, say PE1, performs multiple writes before the data is read by another processing element PE2, since every intermediate write is propagated even though only the last value is read.
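The two access patterns named above can be compared by counting bus transactions for a single block. This is a rough sketch under stated assumptions: each miss costs one transaction, each write that finds other valid copies costs one broadcast, and the function name and trace format are hypothetical.

```python
def bus_transactions(trace, protocol):
    """Count bus transactions for one block.

    trace: list of ("r" or "w", processor_id) events.
    protocol: "invalidate" or "update".
    """
    holders = set()            # caches that currently hold a valid copy
    transactions = 0
    for op, p in trace:
        if p not in holders:
            transactions += 1  # miss: fetch the block over the bus
            holders.add(p)
        if op == "w" and holders - {p}:
            transactions += 1  # one broadcast to the other copies
            if protocol == "invalidate":
                holders = {p}  # other copies become invalid
            # under "update" the other copies stay valid
    return transactions


# Single producer P0 with three consumers, repeated for three rounds.
producer_consumer = [("w", 0), ("r", 1), ("r", 2), ("r", 3)] * 3
# P0 writes five times before P1 reads the block again.
write_run = [("r", 1)] + [("w", 0)] * 5 + [("r", 1)]

for name, trace in [("producer/consumer", producer_consumer),
                    ("write run", write_run)]:
    print(name,
          bus_transactions(trace, "invalidate"),
          bus_transactions(trace, "update"))
```

In this model the producer/consumer trace costs 12 transactions with invalidation and 6 with update, while the write run costs 4 with invalidation and 7 with update, matching the guidance in the two bullets above.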

The strategies followed for fetch and replacement in some of the schemes are discussed below.

Snoopy Cache Coherence Schemes

- Snooping<ref>Cache coherence Wiki</ref> is the process in which the individual caches monitor address lines for accesses to memory locations that they have cached. When a write is observed to a location that a cache has a copy of, the cache controller invalidates its own copy of the snooped memory location.

- Snoopy Scheme is a distributed cache coherence scheme based on the notion of a snoop that watches all activity on a global bus, or is informed about such activity by some global broadcast mechanism. Snoopy scheme is the most popular scheme used in commercial multiprocessors.

- All caches must report on the bus before a transaction can proceed. The snoop result tells main memory whether it should respond or whether a cache holds a modified copy of the block.

- A decoder is used to extract the tag and the index bits from the address. In most modern processors the address combines both the tag and the index, with the index being a few bits near the end of the address.

- When replacement of one of the entries is required, the snoop filter selects for replacement the entry representing the cache line or lines owned by the fewest nodes, as determined from a presence vector in each of the entries.

- Depending on the protocol, the snooping results can be reported within a fixed number of clock cycles from the address issue on the bus, after a variable delay, or immediately.

- Three wired-OR signals are used to report snoop results. The first two wires carry the snooping results. The third one indicates that the snoop is valid: an inhibit signal is asserted until all processors have completed their snoop.
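The wired-OR reporting described in the last bullet can be sketched as follows. The names `SnoopResponse`, `resolve_snoop`, `shared` and `dirty` are assumptions chosen for illustration; the text above only says that two lines carry results and a third signals when they are valid.

```python
from collections import namedtuple

# One response per cache. "shared" and "dirty" are assumed meanings for
# the two result lines; "done" says whether this cache finished snooping.
SnoopResponse = namedtuple("SnoopResponse", ["done", "shared", "dirty"])


def resolve_snoop(responses):
    """Combine per-cache responses the way wired-OR bus lines would.

    Returns (shared, dirty, inhibit). While inhibit is True, at least
    one cache is still snooping and the other two lines are not final.
    """
    shared = any(r.done and r.shared for r in responses)
    dirty = any(r.done and r.dirty for r in responses)
    inhibit = any(not r.done for r in responses)
    return shared, dirty, inhibit


# Cache 0 has finished and holds a copy; cache 1 is still snooping.
in_progress = [SnoopResponse(True, True, False),
               SnoopResponse(False, False, False)]

# Both caches have finished; cache 1 holds a modified copy.
completed = [SnoopResponse(True, True, False),
             SnoopResponse(True, False, True)]
```

A wired-OR line is asserted if any attached device drives it, which is why each line maps naturally onto `any(...)` in this sketch.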

To support the snoopy scheme in bus-based multiprocessors, a new component called a coherence controller is added to each processor and also to memory. Each coherence controller has a bus snooper, which is used to snoop bus transactions.
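A minimal sketch of this arrangement, assuming a write-invalidate policy and a write-through bus for simplicity, is given below. The class and method names (`Bus`, `CoherenceController`, `snoop_write`) are hypothetical and do not correspond to any particular hardware interface.

```python
class Bus:
    """Shared bus that broadcasts every write to all attached snoopers."""

    def __init__(self):
        self.controllers = []
        self.memory = {}

    def attach(self, controller):
        self.controllers.append(controller)

    def broadcast_write(self, source, address, value):
        self.memory[address] = value          # assumed write-through
        for controller in self.controllers:
            if controller is not source:
                controller.snoop_write(address)


class CoherenceController:
    """Per-processor cache together with its bus snooper."""

    def __init__(self, bus):
        self.bus = bus
        self.lines = {}                       # address -> cached value
        bus.attach(self)

    def read(self, address):
        if address not in self.lines:         # miss: fetch from memory
            self.lines[address] = self.bus.memory.get(address, 0)
        return self.lines[address]

    def write(self, address, value):
        self.lines[address] = value
        self.bus.broadcast_write(self, address, value)

    def snoop_write(self, address):
        # Another processor wrote this address: drop the stale copy.
        self.lines.pop(address, None)
```

For example, if two controllers share one `Bus` and the first writes to an address that the second has cached, the second controller's copy is dropped and its next read fetches the new value.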

Directory Based Cache Coherence Schemes

- In a directory-based system, the data being shared is placed in a common directory that maintains the coherence between caches. The directory acts as a filter through which each processor must ask permission to load an entry from primary memory into its cache. When an entry is changed, the directory either updates or invalidates the other caches holding that entry.

- Fetch and Replacement Scenarios:<ref>Cache Coherence Techniques Silvia Lametti</ref>

  - Block in Uncached State: The block required by processor P1 is not in any cache. When P1 tries to read the uncached block X, a read miss occurs.

    - Read Miss: P1 is sent the data from memory and is made the only sharing node. The cached block X is marked as shared.

    - Write Miss: P1 is sent the data and is made the sharing node. The block is now marked exclusive to indicate that the only valid copy is cached.

  - Block is Shared: The block requested by processor P1 is in the shared state.

    - Read Miss: The requesting processor P1 is sent the data and is added to the set of processors sharing the data.

    - Write Miss: The requesting processor P1 is sent the value. All processors sharing the data are sent an invalidate message. The block is made exclusive to P1.

  - Block is Exclusive: The current value of the block (say X) is held in the cache of processor P1 (the owner), which currently owns the block exclusively.

    - Read Miss: When a processor P2 raises a fetch request for block X, P1 is notified that a fetch request has been posted for a block it currently holds. P1 sends the data back to the directory, where it is written to memory and sent on to the requesting processor P2. P2 is then added to the set of processors sharing block X, along with P1.

    - Data Write-back: The owner processor P1 is replacing the block and hence must write it back. This causes the copy of block X in memory to be up to date. The block is now uncached and the set of sharing processors is emptied.

    - Write Miss: A processor P2 makes a write request to block X, which is currently owned by P1. P1 is notified of this, which causes P1 to update memory with its value of block X. The updated block X is then sent to P2 and is made exclusive to P2.
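The scenarios above can be summarised as a small state machine kept by the directory for each block. The following is a sketch under stated assumptions: the class name, the message names recorded in `log`, and the transition to the shared state after a read miss on an exclusive block are illustrative choices, not details taken from the cited source.

```python
class DirectoryEntry:
    """Directory state for one memory block (names are illustrative)."""

    def __init__(self):
        self.state = "UNCACHED"
        self.sharers = set()
        self.log = []                 # messages the directory would send

    def read_miss(self, requester):
        if self.state == "EXCLUSIVE":
            (owner,) = self.sharers
            # Owner sends the data back; memory is brought up to date.
            self.log.append(("fetch", owner))
        self.sharers.add(requester)
        self.state = "SHARED"         # assumed state once there are readers
        self.log.append(("data", requester))

    def write_miss(self, requester):
        if self.state == "SHARED":
            for node in sorted(self.sharers - {requester}):
                self.log.append(("invalidate", node))
        elif self.state == "EXCLUSIVE":
            (owner,) = self.sharers
            # Owner updates memory and gives up its copy.
            self.log.append(("fetch_invalidate", owner))
        self.sharers = {requester}
        self.state = "EXCLUSIVE"
        self.log.append(("data", requester))

    def write_back(self, owner):
        # Only the exclusive owner can write the block back.
        assert self.state == "EXCLUSIVE" and self.sharers == {owner}
        self.sharers.clear()
        self.state = "UNCACHED"
```

Processor identifiers such as `"P1"` are passed in as plain strings; a real directory would use node numbers and would also carry the data payload.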


Write Through Schemes

In write-through schemes, every processor write results in an update of the local cache and a global bus write that updates main memory and also invalidates or updates all other caches holding the same item.

Write-Back/Ownership Schemes

In write-back/ownership schemes, a single cache has ownership of a block. When the owning processor writes to the block, the write does not result in a bus write, thus conserving bandwidth.
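The bandwidth difference between the two schemes can be illustrated with a single-cache sketch that counts bus writes. The function name, the trace format and the policy strings are assumptions for this example, and the model deliberately ignores the bus transaction needed to acquire ownership in the first place.

```python
def count_bus_writes(trace, policy):
    """Count bus writes for one cache under the given write policy.

    trace is a list of (op, block) pairs, where op is "W" (processor
    write) or "EVICT" (block replaced). policy is "write-through" or
    "write-back".
    """
    if policy not in ("write-through", "write-back"):
        raise ValueError("unknown policy: " + policy)
    dirty = set()                     # blocks modified but not written back
    bus_writes = 0
    for op, block in trace:
        if op == "W":
            if policy == "write-through":
                bus_writes += 1       # every write goes out on the bus
            else:
                dirty.add(block)      # owner keeps the change locally
        elif op == "EVICT" and block in dirty:
            dirty.discard(block)
            bus_writes += 1           # written back once, on replacement
    return bus_writes


# Five writes to block A and three to block B, then both are evicted.
trace = [("W", "A")] * 5 + [("W", "B")] * 3 + [("EVICT", "A"), ("EVICT", "B")]
```

With this trace the write-through policy issues one bus write per processor write, while the write-back policy issues one per dirty block evicted.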

Pointer-Based Coherence Schemes<ref>Reducing Memory and Traffic Requirements for Scalable Directory-Based Cache Coherence Schemes Anoop Gupta, Wolf-Dietrich Weber, and Todd Mowry</ref>

- The Full Bit Vector Schemes

- This scheme associates a complete bit vector, one bit per processor, with each block of main memory. Each bit indicates whether that memory block is being cached by the corresponding processor, and thus the directory has full knowledge of the processors caching a given block. The directory also contains a dirty bit for each memory block to indicate whether some processor has been given exclusive access to modify that block in its cache.

- When a block has to be invalidated, messages are sent to all processors whose caches have a copy. In terms of message traffic needed to keep the caches coherent, this is the best that an invalidation-based directory scheme can do.
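A full bit vector entry can be sketched as one integer used as a bit mask plus a dirty flag. The class and method names below are hypothetical, chosen only to illustrate the bookkeeping described above.

```python
class FullBitVectorEntry:
    """Directory entry with one presence bit per processor."""

    def __init__(self, num_processors):
        self.num_processors = num_processors
        self.presence = 0       # bit i set => processor i caches the block
        self.dirty = False      # True when one processor has exclusive access

    def _check(self, pid):
        if not 0 <= pid < self.num_processors:
            raise ValueError("processor id out of range")

    def add_sharer(self, pid):
        self._check(pid)
        self.presence |= 1 << pid

    def sharers(self):
        return [p for p in range(self.num_processors)
                if (self.presence >> p) & 1]

    def grant_exclusive(self, pid):
        """Give pid exclusive access; return who must be invalidated."""
        self._check(pid)
        targets = [p for p in self.sharers() if p != pid]
        self.presence = 1 << pid
        self.dirty = True
        return targets
```

Because the vector records every sharer exactly, `grant_exclusive` returns only the processors that actually hold a copy, which is the minimal invalidation traffic mentioned above. The cost is one bit per processor plus the dirty bit for every memory block.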

- Limited Pointer Schemes

- Recent studies have shown that for most kinds of data objects the corresponding memory locations are cached by only a small number of processors at any given time. This knowledge can be exploited to reduce directory memory overhead by restricting each directory entry to a small fixed number of pointers, each pointing to a processor caching that memory block.

- An important implication of limited pointer schemes is that there must exist some mechanism to handle blocks that are cached by more processors than the number of pointers in the directory entry.
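One possible overflow mechanism is to fall back to broadcasting invalidations once the pointers run out. The sketch below assumes that policy; the class name, the `overflow` flag and the method names are illustrative, and other policies (for example, evicting an existing sharer to free a pointer) are equally valid.

```python
class LimitedPointerEntry:
    """Directory entry with a fixed number of sharer pointers."""

    def __init__(self, num_pointers, num_processors):
        self.num_pointers = num_pointers
        self.num_processors = num_processors
        self.pointers = []          # processor ids known to cache the block
        self.overflow = False       # True once sharers exceeded the pointers

    def add_sharer(self, pid):
        if self.overflow or pid in self.pointers:
            return
        if len(self.pointers) < self.num_pointers:
            self.pointers.append(pid)
        else:
            # No free pointer: precise sharer information is lost.
            self.overflow = True
            self.pointers.clear()

    def invalidation_targets(self, writer):
        """Return the processors to invalidate when writer gets the block."""
        if self.overflow:
            # Assumed fallback: broadcast to everyone except the writer.
            targets = [p for p in range(self.num_processors) if p != writer]
        else:
            targets = [p for p in self.pointers if p != writer]
        self.pointers = [writer]
        self.overflow = False
        return targets
```

While the number of sharers stays within the pointer count, invalidations are as precise as with a full bit vector; only the overflow case pays the cost of a broadcast.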

Cache Coherency protocol

A coherency protocol is a protocol which maintains the consistency between all the caches in a system of distributed shared memory. The protocol maintains memory coherence according to a specific consistency model. Older multiprocessors support the sequential consistency model, while modern shared memory systems typically support the release consistency or weak consistency models.

Transitions between states in any specific implementation of these protocols may vary. For example, an implementation may choose different update and invalidation transitions such as update-on-read, update-on-write, invalidate-on-read, or invalidate-on-write. The choice of transition may affect the amount of inter-cache traffic, which in turn may affect the amount of cache bandwidth available for actual work. This should be taken into consideration in the design of distributed software that could cause strong contention between the caches of multiple processors. Different processors may have different cache organization depending on the cache coherence protocol being implemented and the design of shared memory multi-processor system. Cache to cache transfer techniques would be determined by the design of the multi-processor system and the cache coherence protocol being used.

Various models and protocols have been devised for maintaining coherence, such as MSI, MESI (also known as Illinois), MOSI, MOESI, MERSI, MESIF, write-once, Synapse, Berkeley, Firefly, and Dragon.

Intel and AMD processors use variations of the MSI protocol to coordinate memory access. The latest Intel Core i7 processors use the MESIF protocol, which is similar to MESI but includes an additional Forward (F) state. AMD processors use the MOESI protocol, which achieves the benefits of both MESI and MOSI.
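As a point of reference for the protocol family named above, the basic MSI protocol can be written as a transition table. This is a minimal sketch of the textbook form, assuming the usual event names `PrRd`/`PrWr` for processor requests and `BusRd`/`BusRdX` for snooped bus requests; as noted earlier, real implementations may choose different transitions.

```python
# (current state, event) -> (next state, bus action issued by this cache)
# States: M = Modified, S = Shared, I = Invalid. None means no bus action.
MSI = {
    ("I", "PrRd"):   ("S", "BusRd"),
    ("I", "PrWr"):   ("M", "BusRdX"),
    ("I", "BusRd"):  ("I", None),
    ("I", "BusRdX"): ("I", None),
    ("S", "PrRd"):   ("S", None),
    ("S", "PrWr"):   ("M", "BusRdX"),
    ("S", "BusRd"):  ("S", None),
    ("S", "BusRdX"): ("I", None),
    ("M", "PrRd"):   ("M", None),
    ("M", "PrWr"):   ("M", None),
    ("M", "BusRd"):  ("S", "Flush"),   # supply the dirty block
    ("M", "BusRdX"): ("I", "Flush"),
}


def step(state, event):
    """Return (next_state, bus_action) for one cache line."""
    return MSI[(state, event)]


def run(events, state="I"):
    """Apply a sequence of events and return the final state."""
    for event in events:
        state, _ = step(state, event)
    return state
```

MESI, MOESI and MESIF extend this table with additional states (Exclusive, Owned, Forward) to avoid some of the bus transactions that MSI requires.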

References

1. http://www.real-knowledge.com/memory.htm

2. Computer Design & Technology- Lectures slides by Prof.Eric Rotenberg

3. Fundamentals of Parallel Computer Architecture by Prof.Yan Solihin

4. “Cache write policies and performance,” Norman Jouppi, Proc. 20th International Symposium on Computer Architecture (ACM Computer Architecture News 21:2), May 1993, pp. 191–201.

5. Architecture of Parallel Computers, Lecture slides by Prof. Edward Gehringer

6. “Parallel computer architecture: a hardware/software approach” by David. E. Culler, Jaswinder Pal Singh, Anoop Gupta (pg 887)

7. “Evaluating Stream Buffers as a Secondary Cache Replacement” by Subbarao Palacharla and R. E. Kessler. (ref#2)

8. http://en.wikipedia.org/wiki/Instruction_prefetch

9. http://www.real-knowledge.com/memory.htm

10. Yan Solihin, Fundamentals of Parallel Computer Architecture (Solihin Publishing and Consulting, LLC, 2008-2009), p. 151

11. "Intel® 64 and IA-32 Architectures Optimization Reference Manual", Intel Corporation, 1997-2011. http://www.intel.com/Assets/PDF/manual/248966.pdf

12. "Software Optimization Guide for AMD Family 10h and 12h Processors", Advanced Micro Devices, Inc., 2006–2010. http://support.amd.com/us/Processor_TechDocs/40546.pdf

13. http://en.wikipedia.org/wiki/SPARC

14. http://en.wikipedia.org/wiki/Athlon_II

15. http://en.wikipedia.org/wiki/Phenom_II

16. http://en.wikipedia.org/wiki/List_of_Intel_Core_i5_microprocessors

17. http://en.wikipedia.org/wiki/List_of_Intel_microprocessors

18. http://www.cpu-world.com/

19. http://en.wikipedia.org/wiki/CPU_cache#History

20. http://www.multicoreinfo.com/prefetching-multicore-processors/

21. http://en.wikipedia.org/wiki/List_of_Intel_Core_i7_microprocessors

22. http://www.extremetech.com/extreme/188776-how-l1-and-l2-cpu-caches-work-and-why-theyre-an-essential-part-of-modern-chips

23. http://pages.cs.wisc.edu/~david/courses/cs838/projects/danav.pdf

24. https://en.wikipedia.org/wiki/CPU_cache#Exclusive_versus_inclusive

25. https://www.ece.cmu.edu/~ece548/handouts/07assoc.pdf

26. http://www.ece.cmu.edu/~ece740/f11/lib/exe/fetch.php?media=wiki:lectures:onur-740-fall11-lecture24-prefetching-afterlecture.pdf

27. http://en.wikipedia.org/wiki/Cache_algorithms

28. http://www.dauniv.ac.in/downloads/CArch_PPTs/CompArchCh09L04RelplacementPolicies.pdf

Other References

<references/>