CSC 456 Spring 2012/ch7 AA

Cache Coherence

Problem

In a system with a single processor (single core), maintaining cache coherency is simple and easy, but in a multiprocessor system, it is not as simple. Data can be present in any processor's cache, and protocol needs to ensure that the data is same in every cache. If it cannot ensure that all the caches are the same, then it needs to flag a cache line to indicate that it is not updated.

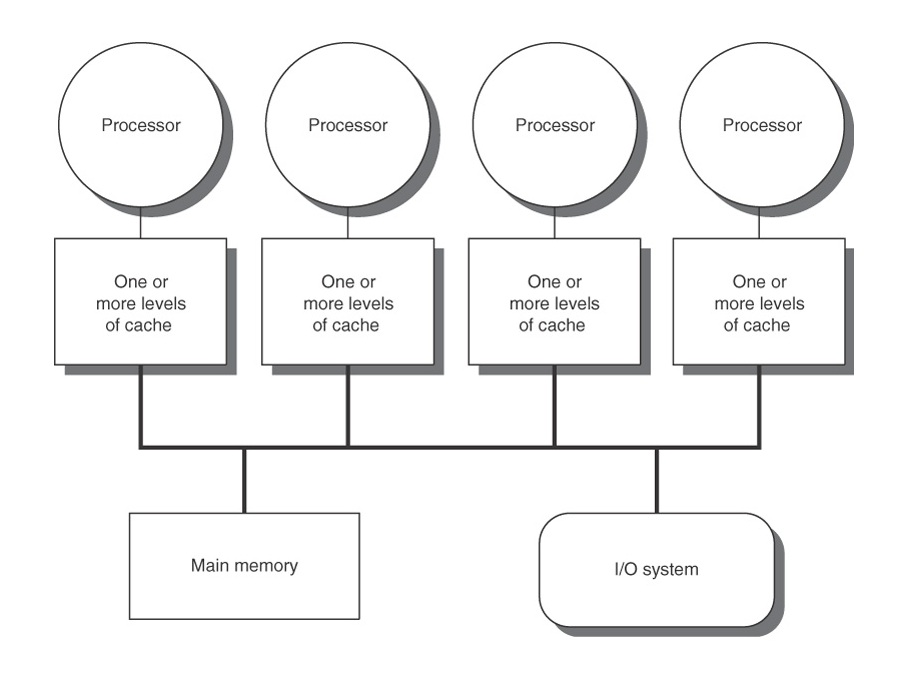

In the figure shown here, there is a 4 processor shared memory system where each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. Then, processor P2 also reads from M1 and stores its own local cache. Now, if P1 changes value of M1, there will be two copies of same data residing in different caches, but the one in P1's cache will be different. The problem arises when P2 operates on M1, and uses the stale value of M1 that was stored in its cache. There exist solutions to this problem, and are described in the next section.

Solutions

Cache coherence solutions are mainly classified as software-based or hardware-based solutions.

Software-based solutions include:

- Compiler-based or with run-time system support

- With or without hardware assist

Hardware-based solutions include:

- Shared caches or Snoopy schemes or Directory-based schemes

- Write-through vs write-back protocols

- Update vs invalidation protocols

- Dirty-sharing vs. no-dirty-sharing protocols

The main concern in the case of software-based solutions is that perfect information is needed at all times when memory aliasing and explicit parallelism are required. So, the focus is more on improving hardware-based solutions, making them more common. Studies have shown that different snoop-based cache coherency schemes are more strongly sensitive toward write-policy than the specific coherency protocol. Write-back schemes are more efficient despite the increased hardware complexity involved in cache-coherency support.

Hardware-based cache-coherence protocols, though more competitive in terms of performance with respect to basic architectures with no hardware support, incur significant power cost as coherence traffic grows. Thus, as power constraints become tighter and the degree of multiprocessing increases, viability of hardware-based solutions becomes doubtful.

One may think that cache write policy can provide cache coherence, but it is not true. Cache write policy only controls how a change in value of a cache is propagated to a lower level cache or main memory. It is not responsible for propagating changes to other caches.

Protocols

The two basic methods to utilize the inter-core bus to notify other cores when a core changes something in its cache are update and invalidate. In the update method, if variable 'x' is modified by core 1, core 1 has to send the updated value of 'x' onto the inter-core bus. Each cache listens to the inter-core bus and if a cache sees a variable on the bus which it has a copy of, it will read the updated value. This ensures that all caches have the most up-to-date value of the variable.

In case of invalidation, an invalidation message is sent onto the inter-core bus when a variable is changed. The other caches will read this invalidation signal; if its core attempts to access that variable, it will result in a cache miss and the variable will be read from main memory.

The update method results in significant amount of traffic on the inter-core bus as the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method.

In order to improve cache coherency performance over the years, the following protocols were proposed:

- MSI

MSI stands for Modified, Shared, and Invalid, which is based on the three states that a line of cache can be in. The Modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The Shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The Invalid state means that the value of the variable has been modified by another cache and is invalid; the cache must read a new value from main memory (or another cache).

- MESI

MESI stands for Modified, Exclusive, Shared, and Invalid. The Modified and Invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state; the Exclusive state. The Exclusive state means that the variable is only in this cache and its value matches the value within the main memory. This now means that the Shared state indicates that the variable is contained in more than one cache.

- MOSI

The MOSI protocol is identical to the MSI protocol except that it adds an Owned state. The Owned state means that the processor "Owns" the variable and will provide the current value to other caches when requested (or at least it will decide if it will provide it when asked). This is useful because another cache will not have to read the value from main memory and will receive it from the Owning cache much, much, faster.

- MOESI

The MOESI protocol is a combination of the MESI and MOSI protocols.

Peterson's Algorithm

This algorithm utilizes the simple idea of combining per-thread flags to indicate the intent to enter the lock and the turn variable to determine which thread should enter in the rare case that both wish to enter at the same time. The algorithm is as shown below:

void init() {

flag[0] = flag[1] = 0; // 1 -> thread wants to acquire lock (intent)

turn = 0; // whose turn is it? (thread 0 or thread 1?)

}

void lock() {

flag[self] = 1;

turn = 1 - self; // be generous: make it the other thread’s turn

while ((flag[1-self] == 1) && (turn == 1 - self))

; // spin-wait while other thread has intent

// AND it is other thread’s turn

}

void unlock() {

flag[self] = 0; // simply undo your intent

}

The advantages of this approach are:

- It does not assume much about the underlying hardware. It relies on the facts that load and store instructions are atomic (even across processors) and they execute in an order.

- It does not require special instructions.

Though Peterson's algorithm has the above advantages, there are a few problems that render it impractical for use:

- Spin-waiting is possible which causes a thread to spend lot of its CPU time waiting for another thread to release the lock.

- Algorithm might not work well with out-of-order execution of instructions supported by most of the modern processors.

- The algorithm also lacks scalability.

Thus, only the software solutions cannot work well. Sufficient amount of hardware and OS support is needed to get the locking work properly.