CSC 456 Spring 2012/10a AJ: Difference between revisions

| (12 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

=Prefetching and Consistency Models= | =Prefetching and Consistency Models= | ||

==Intro== | ==Intro== | ||

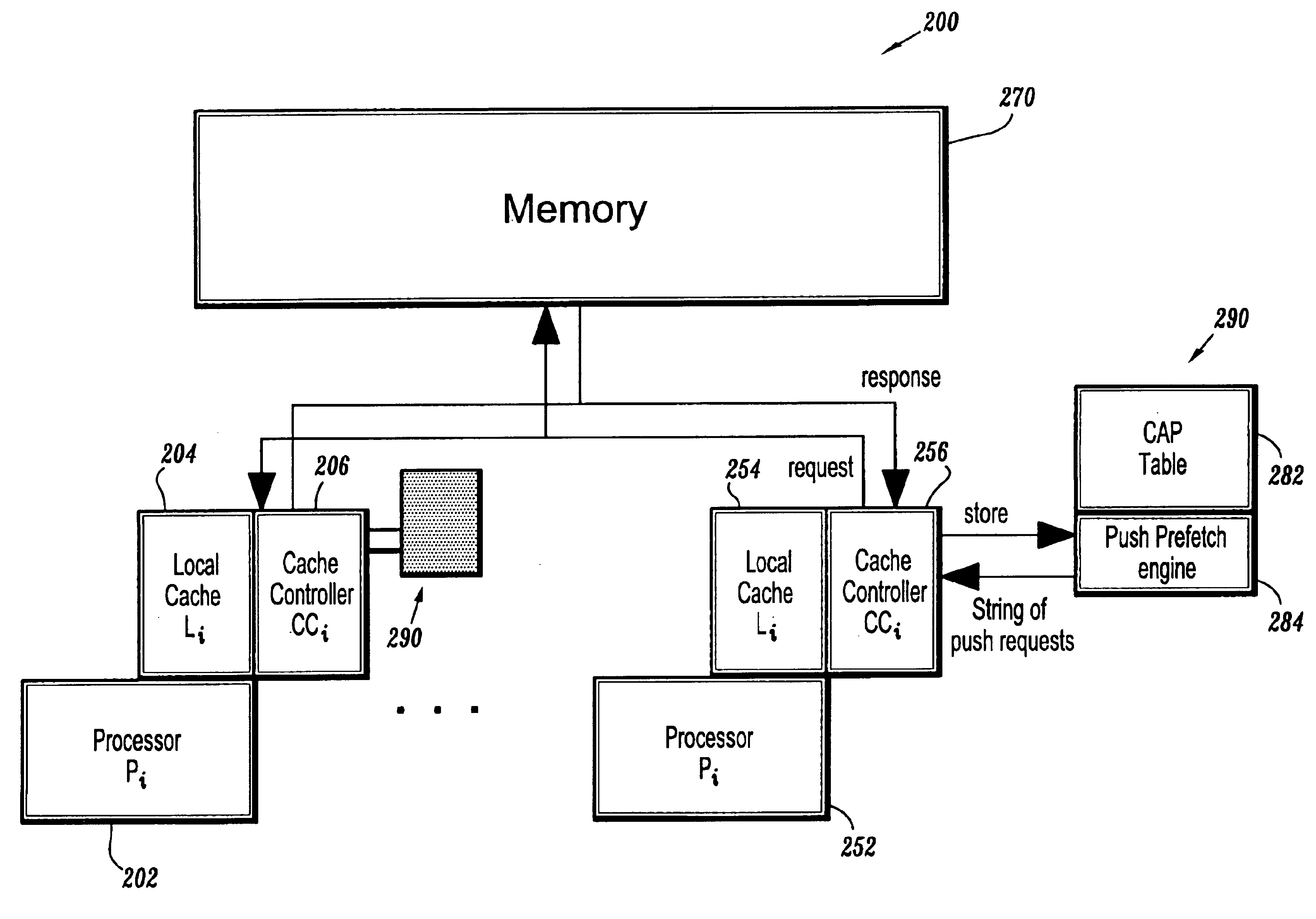

While memory consistency models | While memory consistency models ensure instructions are executed in correct order, these models can hinder efficiency. Since consistency models dictate order of execution, prefetching allows operations to complete quicker once their turn comes by bringing the necessary data closer into the cache before it is needed. Prefetching is a hardware optimization technique in which the processor automatically prefetches ownership for any write operations that are delayed. These delays are due to the program order requirement. Using prefetching as well as sequential consistency creates delayed writes that become partially overlapped with the operations preceding them in program order. This technique is only applicable to cache-based systems that use an invalidation-based protocol. This technique is suitable for statically scheduled processors. | ||

==Methods== | ==Methods== | ||

===Fixed vs. Adaptive Sequential Prefetching=== | ===Fixed vs. Adaptive Sequential Prefetching=== | ||

Fixed sequential prefetching refers to prefetching that occurs at a constant rate over time. Adaptive sequential prefetching, on the other hand, changes the rate of prefetching allowed over time. The prefetching rate is increased | Fixed sequential prefetching refers to prefetching that occurs at a constant rate over time. Because the amount of prefetching remains constant throughout execution, fixed sequential is the simplest method of prefetching to implement. When block n is read, a fixed number of consecutive blocks (K), are prefetched if they are not already in the cache regardless of a read miss or read hit. Finding the best value for K remains tricky, however. The K value must be large enough to lower cold miss rates while at the same time small enough to ensure that useless data is not loaded into the cache causing unnecessary traffic. | ||

Adaptive sequential prefetching, on the other hand, changes the rate of prefetching allowed over time. The prefetching rate is increased or decreased based on the number of past successful prefetches (prefetched blocks that were actually used). The rate of prefetching is increased when the ratio between useful prefetches and total prefetches becomes greater than a particular threshold. The rate is also consequently decreased the the same ratio fall below a second threshold. While both methods improve efficiency, adaptive sequential prefetching is the most efficient but also the most costly. (Dahlgren) | |||

===Exclusive Mode-Prefetching=== | ===Exclusive Mode-Prefetching=== | ||

Exclusive-mode prefetching helps to reduce both the miss latencies and the message traffic associated with writes. Unlike read misses, which directly stall the processor for their entire duration | Exclusive-mode prefetching helps to reduce both the miss latencies and the message traffic associated with writes. Unlike read misses, which directly stall the processor for their entire duration. Write misses affect performance[[File:exclusive_prefetch.jpg|200px|thumb|right| exclusive prefetch]] more indirectly, since writes can be buffered. A processor stalls while waiting for writes to complete in two situations: (i) when executing a write instruction if the write buffer is full, and (ii) during a read miss if previous writes must complete before the read miss can proceed. The impact of the former effect can be reduced through larger write buffers. In summary, exclusive-mode prefetching can provide significant performance benefits in architectures that have not already eliminated write stall times through aggressive implementations of weaker consistency models with lockup-free caches. Even if write stall times cannot be further reduced, exclusive-mode prefetching can improve performance somewhat by reducing the traffic associated with cache coherency. The main effect of exclusive mode prefetching is that the item that is prefetched, is done so as though its exclusive to that cache. | ||

==Where they stand now== | ==Where they stand now== | ||

| Line 18: | Line 20: | ||

*http://impact.asu.edu/cse520fa08/Srivatsan.pdf | *http://impact.asu.edu/cse520fa08/Srivatsan.pdf | ||

*http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/ch10_sj | *http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/ch10_sj | ||

*http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf | |||

*http://www.cesr.ncsu.edu/solihin/Main.html | |||

*Kourosh Gharachorloo, Anoop Gupta, and John Hennessy of Stanford University, "Two techniques to enhance the performance of memory consistency models," International Conference on Parallel Processing, 1991 | |||

*Frederick Dahlgren, Michel Dubois, and Per Stenstrom, "Sequential hardware prefetching in shared-memory multiprocessors," IEEE Trans. Parallel and Distributed Sytems, July 1995 | *Frederick Dahlgren, Michel Dubois, and Per Stenstrom, "Sequential hardware prefetching in shared-memory multiprocessors," IEEE Trans. Parallel and Distributed Sytems, July 1995 | ||

*Mark Hill, "Multiprocessors should support simple memory consistency models," IEEE Computer, August 1998 | |||

*Amir Kamil Jimmy Su, and Katherine Yelick, "Making sequential consistency practical in Titanium," Supercomputing, SC-2005 | |||

*http://download.intel.com/education/highered/multicore/Lecture27.pdf | |||

Latest revision as of 05:56, 26 April 2012

Prefetching and Consistency Models

Intro

While memory consistency models ensure that memory operations are executed in the correct order, they can also hinder efficiency. Because a consistency model dictates the order of execution, prefetching helps by bringing the necessary data into the cache before it is needed, so that each operation completes more quickly once its turn comes. In the hardware technique described here, the processor automatically prefetches ownership for any write operation that is delayed by the program order requirement. Combining this prefetching with sequential consistency lets delayed writes partially overlap with the operations that precede them in program order. The technique applies only to cache-based systems that use an invalidation-based protocol, and it is suitable for statically scheduled processors.
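To make the overlap concrete, here is a minimal sketch under a deliberately simple cost model: a hit costs 1 cycle, a miss costs 100 cycles, and every operation is visible to the processor from cycle 0, so an ownership prefetch for each delayed write can be issued at once. The function name `sc_completion_time` and the latency values are hypothetical, chosen only for illustration. Operations still perform one at a time in program order, as sequential consistency requires.

```python
# Toy timing model (hypothetical numbers, not from the source): a memory
# operation costs HIT cycles on a cache hit and MISS cycles on a miss.
# Sequential consistency forces operations to perform one at a time.
HIT = 1
MISS = 100


def sc_completion_time(ops, prefetch_ownership=False):
    """ops is a list of (kind, misses) pairs, kind being "R" or "W".

    Assumes every operation is visible at cycle 0, so an ownership prefetch
    for each delayed write is issued immediately. Returns the cycle at which
    the last operation completes."""
    t = 0
    for kind, misses in ops:
        if kind == "W" and misses and prefetch_ownership:
            # Ownership was requested at cycle 0 and arrives at cycle MISS.
            # The write still waits for its predecessors (program order),
            # but its miss latency has overlapped with them.
            t = max(t + HIT, MISS)
        else:
            t += MISS if misses else HIT
    return t


ops = [("R", True), ("W", True), ("W", True)]
print(sc_completion_time(ops))                           # 300
print(sc_completion_time(ops, prefetch_ownership=True))  # 102
```

With prefetching, both writes still complete after the read, as program order requires, but once their turn comes each needs only a hit's worth of time, because ownership was obtained while the read miss was outstanding.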

Methods

Fixed vs. Adaptive Sequential Prefetching

Fixed sequential prefetching refers to prefetching that occurs at a constant rate over time. Because the amount of prefetching stays the same throughout execution, fixed sequential prefetching is the simplest method to implement. Whenever block n is read, the next K consecutive blocks (n+1 through n+K) are prefetched if they are not already in the cache, regardless of whether the read of n was a hit or a miss. Choosing the best value of K is tricky, however. K must be large enough to lower the cold miss rate, yet small enough that useless data is not loaded into the cache, where it causes unnecessary traffic.
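The following sketch shows fixed sequential prefetching of degree K. It assumes a fully associative LRU cache holding `capacity` blocks and models no timing at all; the class name and its counters are hypothetical. On every read of block n, hit or miss, it brings in those of blocks n+1 through n+K that are not already cached.

```python
from collections import OrderedDict


class FixedSequentialPrefetcher:
    """Fixed sequential prefetching of degree k over a hypothetical fully
    associative LRU cache holding `capacity` blocks (no timing is modeled)."""

    def __init__(self, k, capacity):
        self.k = k
        self.capacity = capacity
        self.cache = OrderedDict()  # cached block numbers, least recent first
        self.misses = 0
        self.prefetches = 0

    def _insert(self, block):
        self.cache[block] = None
        self.cache.move_to_end(block)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block

    def read(self, n):
        if n in self.cache:
            self.cache.move_to_end(n)  # hit: refresh recency
        else:
            self.misses += 1
            self._insert(n)
        # Hit or miss, prefetch blocks n+1 .. n+k that are not already cached.
        for block in range(n + 1, n + 1 + self.k):
            if block not in self.cache:
                self.prefetches += 1
                self._insert(block)


for k in (0, 1, 4):
    p = FixedSequentialPrefetcher(k, capacity=64)
    for n in range(16):  # a purely sequential stream of blocks 0..15
        p.read(n)
    print(k, p.misses, p.prefetches)
# 0 16 0
# 1 1 16
# 4 1 19
```

For this sequential stream, any K of at least 1 leaves only the first cold miss, while K = 1 wastes one prefetch (block 16) and K = 4 wastes four (blocks 16 to 19). Because the toy model ignores latency, it cannot show the benefit of a larger K; on a real machine, prefetching only one block ahead may not hide the full miss latency.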

Adaptive sequential prefetching, on the other hand, changes the rate of prefetching over time. The rate is increased or decreased based on the number of past successful prefetches (prefetched blocks that were actually used). The rate of prefetching is increased when the ratio of useful prefetches to total prefetches rises above a particular threshold, and it is decreased when the same ratio falls below a second, lower threshold. Both methods improve efficiency, but adaptive sequential prefetching is the more efficient of the two and also the more costly. (Dahlgren)
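A minimal sketch of the adaptive variant follows, again over a hypothetical fully associative LRU cache. Each cached block carries a flag recording whether it was prefetched and not yet read; after every `epoch` prefetches, the ratio of useful to issued prefetches is compared with a high and a low threshold, and K is stepped up or down. The epoch length (16), the thresholds (0.75 and 0.25), and the bounds on K are assumptions for illustration, not values from the source. K is kept at 1 or above here so that the ratio can always be measured.

```python
from collections import OrderedDict


class AdaptiveSequentialPrefetcher:
    """Adaptive sequential prefetching over a hypothetical fully associative
    LRU cache. epoch, high, low, k_min and k_max are illustrative values."""

    def __init__(self, capacity, k=1, k_min=1, k_max=8,
                 epoch=16, high=0.75, low=0.25):
        self.capacity, self.k = capacity, k
        self.k_min, self.k_max = k_min, k_max
        self.epoch, self.high, self.low = epoch, high, low
        # block -> True if it was prefetched and has not been read yet
        self.cache = OrderedDict()
        self.issued = 0  # prefetches issued in the current epoch
        self.useful = 0  # prefetched blocks first read during the current epoch

    def _insert(self, block, prefetched):
        self.cache[block] = prefetched
        self.cache.move_to_end(block)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block

    def _adjust(self):
        ratio = self.useful / self.issued
        if ratio > self.high:
            self.k = min(self.k + 1, self.k_max)  # prefetches pay off: go deeper
        elif ratio < self.low:
            self.k = max(self.k - 1, self.k_min)  # mostly wasted: back off
        self.issued = self.useful = 0

    def read(self, n):
        if n in self.cache:
            if self.cache[n]:  # first read of a prefetched block
                self.useful += 1
                self.cache[n] = False
            self.cache.move_to_end(n)
        else:
            self._insert(n, prefetched=False)
        for block in range(n + 1, n + 1 + self.k):
            if block not in self.cache:
                self._insert(block, prefetched=True)
                self.issued += 1
                if self.issued == self.epoch:
                    self._adjust()


seq = AdaptiveSequentialPrefetcher(capacity=1024)
for n in range(200):  # sequential stream: prefetched blocks are nearly always used
    seq.read(n)
print(seq.k)  # 8

scattered = AdaptiveSequentialPrefetcher(capacity=1024, k=4)
for n in range(0, 10000, 100):  # neighboring blocks are never read
    scattered.read(n)
print(scattered.k)  # 1
```

On the sequential stream the useful ratio stays near 1, so K climbs to its upper bound; on the scattered stream no prefetched block is ever used, so K falls to its lower bound and little bandwidth is wasted.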

Exclusive-Mode Prefetching

Exclusive-mode prefetching helps to reduce both the miss latencies and the message traffic associated with writes. Unlike read misses, which directly stall the processor for their entire duration, write misses affect performance more indirectly, since writes can be buffered. A processor stalls while waiting for writes to complete in two situations: (i) when executing a write instruction if the write buffer is full, and (ii) during a read miss if previous writes must complete before the read miss can proceed. The first effect can be reduced through larger write buffers. Overall, exclusive-mode prefetching can provide significant performance benefits in architectures that have not already eliminated write stall time through aggressive implementations of weaker consistency models with lockup-free caches. Even where write stall time cannot be reduced further, exclusive-mode prefetching can still improve performance somewhat by reducing the traffic associated with cache coherence. The main effect of exclusive-mode prefetching is that the prefetched block is brought into the cache in an exclusive state, as though it already belonged to that cache alone, so a later write to the block needs no further coherence transaction.
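The traffic saving can be sketched for a single cache line under an MSI-style protocol. The bus transaction names `BusRd`, `BusRdX`, and `BusUpgr` follow a common textbook convention for bus-based MSI and are an assumption here, as is the access pattern (one read followed by one write to a block that is not yet cached). The sketch lists the coherence transactions this cache issues with no prefetch, an ordinary (shared) prefetch, and an exclusive-mode prefetch.

```python
def coherence_transactions(prefetch):
    """Bus transactions one cache issues for a read followed by a write to a
    block it does not hold. prefetch is None, "shared" or "exclusive".
    The MSI states and transaction names are assumed, textbook-style."""
    state = "I"  # MSI state of the block in this cache
    txns = []
    if prefetch == "shared":
        txns.append("BusRd")   # ordinary prefetch: read-only copy
        state = "S"
    elif prefetch == "exclusive":
        txns.append("BusRdX")  # exclusive-mode prefetch: fetch with ownership
        state = "M"            # MSI has no E state, so an owned copy sits in M
    # The read misses only if the block was not prefetched.
    if state == "I":
        txns.append("BusRd")
        state = "S"
    # The write needs ownership: a shared copy must be upgraded, which
    # invalidates any other cached copies.
    if state == "S":
        txns.append("BusUpgr")
        state = "M"
    return txns


for mode in (None, "shared", "exclusive"):
    print(mode, coherence_transactions(mode))
# None ['BusRd', 'BusUpgr']
# shared ['BusRd', 'BusUpgr']
# exclusive ['BusRdX']
```

An ordinary prefetch hides the read miss but still leaves an upgrade on the write, whereas the exclusive-mode prefetch obtains ownership up front, so both accesses hit and one transaction replaces two.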

Where they stand now

Prefetching began to fade because of its many disadvantages and because memory speeds started to catch up with processor transfer rates. One issue with prefetching is the increased complexity and overhead of handling the prefetching algorithms. If the algorithm is inaccurate and fetches too early or too late to be effective, there is a great risk that this overhead outweighs any benefit. Performance must improve significantly to justify the added overhead and complexity; otherwise the effort is wasted.

Another problem comes with the introduction of multicore architectures. In a single-core architecture, prefetch requests can come from only one core; with multiple cores, they can originate from any of the cores. This puts additional stress on memory, which must deal not only with regular prefetch requests but also with prefetches from different sources, and it greatly increases the overhead and complexity of the logic. Coherence algorithms must account not only for sequential consistency issues but also for data changing in another location, namely the location of the prefetched data. Flushing data becomes significantly more complicated.

If prefetched data is stored in the data cache, cache conflicts can become a significant problem, because the current and predicted sets of data must reside in the cache at the same time. Without prefetching, that space could simply be used to increase the effective cache size. One solution is to add extra hardware that acts as a separate prefetch buffer, so that prefetched data does not take up cache space.

References

- http://impact.asu.edu/cse520fa08/Srivatsan.pdf

- http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/ch10_sj

- http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf

- http://www.cesr.ncsu.edu/solihin/Main.html

- Kourosh Gharachorloo, Anoop Gupta, and John Hennessy of Stanford University, "Two techniques to enhance the performance of memory consistency models," International Conference on Parallel Processing, 1991

- Frederick Dahlgren, Michel Dubois, and Per Stenstrom, "Sequential hardware prefetching in shared-memory multiprocessors," IEEE Trans. Parallel and Distributed Systems, July 1995

- Mark Hill, "Multiprocessors should support simple memory consistency models," IEEE Computer, August 1998

- Amir Kamil, Jimmy Su, and Katherine Yelick, "Making sequential consistency practical in Titanium," Supercomputing, SC-2005

- http://download.intel.com/education/highered/multicore/Lecture27.pdf