CSC/ECE 517 Summer 2020 - Active Learning for Review Tagging

Revision as of 04:50, 2 November 2020

This page describes an Expertiza-based independent development project.

Introduction

Background

The web application Expertiza, used by students in CSC 517 and other courses, allows students to peer-review each other's work and give each other suggestions. Students are later asked to participate voluntarily in an extra-credit review-tagging assignment, in which they tag the comments they received for helpfulness, positive tone, and other characteristics of interest to researchers. Currently, students have to tag hundreds of comments to get full participation credit. Researchers are concerned that this workload causes inattentive participants to submit inaccurate tags, which would corrupt the model trained on them. If a machine-learning algorithm first estimates how confident it is that a given characteristic is present in a comment, students can be asked to assign only the tags the algorithm is unsure of, so they can focus on fewer tags with more attention and accuracy.

Problem Statement

The goal of this project is to build a working infrastructure for active learning, using machine-learning algorithms to determine which tags, if assigned manually, would help the model learn most effectively. In particular, the project fulfills the following requirements:

- Incorporate metrics analysis into the review-giving process

- Reduce the number of tags students have to assign

- Display the gathered information on report pages

- Update the web service to include paths to the confidence level of each prediction

- Decide on a proper tag certainty threshold, which determines how certain the ML algorithm must be of a tag value before it stops asking the author to tag it manually

Notes

This project is being carried out simultaneously with another project, 'Integrate Suggestion Detection Algorithm'. Whereas that project focuses on building a central interface to external web services, this project focuses on interpreting the results returned by those services.

Design

Control Flow Diagram

Peer Logic is an NSF-funded research project that provides services for educational peer-review systems. It has a set of mature machine-learning algorithms and models that compute metrics on reviews. Integrating these algorithms into Expertiza's peer-review process would be helpful. Specifically, we want to

- Let students see the quality of their review before submission, and

- Selectively request manual tags, which are used to further train the models (active learning)

To integrate these algorithms into the Expertiza system, we need a translator-like model, which we named ReviewMetricsQuery, that converts outputs from external sources into a form our system can understand and use.

Below we show the control flow diagram to help illustrate the usage of the ReviewMetricsQuery model.

The ReviewMetricsQuery class is closely tied to the peer-review process. It is first called when students have finished their reviews of other students' work and are about to submit them. Before the system marks the reviews as submitted, ReviewMetricsQuery intercepts their content and sends it to the Peer Logic web service for predictions. After it receives the results, it caches them in the local Expertiza database and then releases the intercept. Instead of being redirected to the list of reviews, students are presented with an analysis report on the quality of their reviews. Depending on whether they are satisfied with the results, they may go back and edit their comments or confirm the submission.
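A minimal plain-Ruby sketch of the intercept-and-cache step, under stated assumptions: `ReviewSubmissionSketch`, `InferredTag`, and a web service object whose `predict(comment)` returns `[metric, value, confidence]` triples are hypothetical stand-ins for ReviewMetricsQuery, the answer_tags table, and the Peer Logic web service. The later confirmation step (`confirm_submit`) is left out.

```ruby
# Hypothetical stand-in for an inferred row in the answer_tags table.
InferredTag = Struct.new(:answer_id, :metric, :value, :confidence_level)

class ReviewSubmissionSketch
  attr_reader :cache

  # web_service: any object responding to #predict(comment) and returning an
  # array of [metric, value, confidence] triples (assumed interface).
  def initialize(web_service)
    @web_service = web_service
    @cache = [] # stands in for the local Expertiza database
  end

  # Called when the student clicks "Submit". Instead of marking the review as
  # submitted, fetch predictions, cache them, and go to the analysis page.
  def submit(answers)
    answers.each do |answer_id, comment|
      # Drop stale predictions for this answer before caching new ones
      @cache.reject! { |tag| tag.answer_id == answer_id }
      @web_service.predict(comment).each do |metric, value, confidence|
        @cache << InferredTag.new(answer_id, metric, value, confidence)
      end
    end
    :analysis_page # where the student is redirected next
  end
end
```

Replacing an answer's cached predictions on every submission is one way to keep them in sync with the stored review; the actual model may handle resubmission differently.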

Every prediction from the web service comes with a confidence level indicating how confident the algorithm is in that prediction. Whenever a student opens the review tagging page, before rendering any tags, the system consults the ReviewMetricsQuery class to check whether the value of each tag has already been determined by the web service and whether its confidence level exceeds the preset threshold. If so, meaning the algorithm is confident in its prediction, the tag is rendered with a lightening effect that makes it less noticeable. Students doing the tagging can easily distinguish normal tags from grayed-out ones and focus their attention on the normal tags. This is the essence of active learning: request manual input only when it adds to the algorithm's knowledge.
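The lookup described above can be sketched in plain Ruby. This is a hypothetical illustration, not the project's code: `CachedTag` stands in for a row of the answer_tags table, `lighten_tag?` is an invented name, and the 0.8 threshold is a placeholder, since choosing the threshold is one of the project's open requirements. Using `>=` rather than a strict comparison is also an assumption.

```ruby
GRAY_OUT_THRESHOLD = 0.8 # hypothetical placeholder value

# Hypothetical stand-in for a row of the answer_tags table.
CachedTag = Struct.new(:answer_id, :tag_prompt_deployment_id, :value,
                       :user_id, :confidence_level)

# Finds the web service's cached prediction for one (answer, tag prompt)
# pair and reports whether its slider should be lightened.
def lighten_tag?(cached_tags, answer_id, deployment_id, threshold = GRAY_OUT_THRESHOLD)
  inferred = cached_tags.find do |t|
    t.answer_id == answer_id &&
      t.tag_prompt_deployment_id == deployment_id &&
      t.user_id.nil? # inferred tags carry no user_id
  end
  !inferred.nil? && inferred.confidence_level >= threshold
end
```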

The cached data are also used in the instructor's report views, which is why they must be stored locally. One review consists of about 10 to 20 comments and takes a noticeable amount of time to process, and a report covers thousands of such reviews, so querying the web service in real time is impractical. We keep the number of requests to the web service to a minimum by sending requests only when students decide to submit their reviews. This way, the predicted value of each tag stays up to date with the stored reviews.

Database Design

The only change to the database is a new "confidence_level" column in the existing answer_tags table, which originally stored only tags assigned by students. Results from the web service can be thought of as a set of tags assigned by an outside tool, with the confidence level indicating how confident the tool is in each tag. The answer_tags table therefore holds two types of tags: those assigned by students, which have a user_id but no confidence_level, and those inferred by the web service, which have a confidence_level but no user_id. The system determines the type of a tag by checking which of these two fields has a value.
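The rule for telling the two kinds of tags apart can be sketched as follows. `AnswerTagRow` and `tag_source` are hypothetical names, and treating a row with both fields set (or neither) as invalid is an assumption beyond what the design states.

```ruby
# Hypothetical plain-Ruby stand-in for a row of the answer_tags table.
AnswerTagRow = Struct.new(:user_id, :confidence_level, :value)

# Student tags carry a user_id; tags inferred by the web service carry a
# confidence_level instead. Any other combination is reported as invalid.
def tag_source(row)
  if !row.user_id.nil? && row.confidence_level.nil?
    :student
  elsif row.user_id.nil? && !row.confidence_level.nil?
    :web_service
  else
    :invalid
  end
end
```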

UI Design

Four pages need to be modified to reflect the new functionality.

Metrics Analysis Page

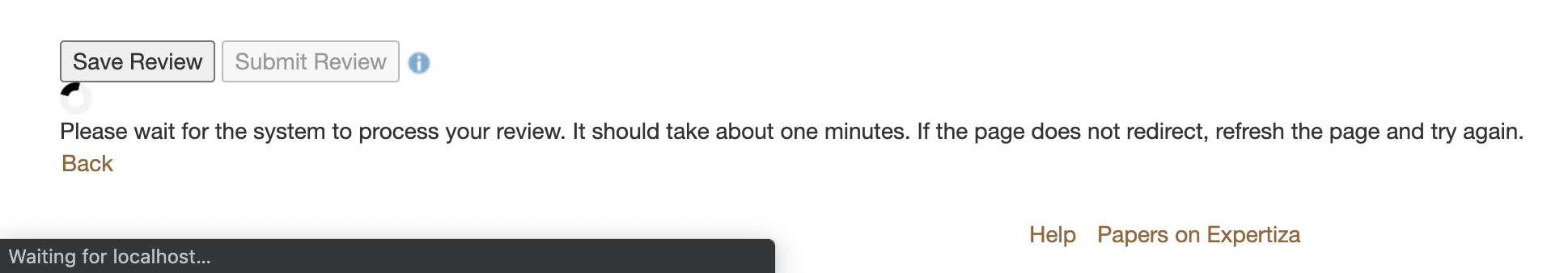

When students click the 'Submit' button on the review-giving page, the button is disabled to prevent them from submitting the request multiple times. Submitting multiple times would send the same set of comments to the external web service repeatedly, wasting resources on both sides. To reassure students that the request has been made, we add a loader and a message below the 'Submit' button asking them to wait patiently rather than overload the system.

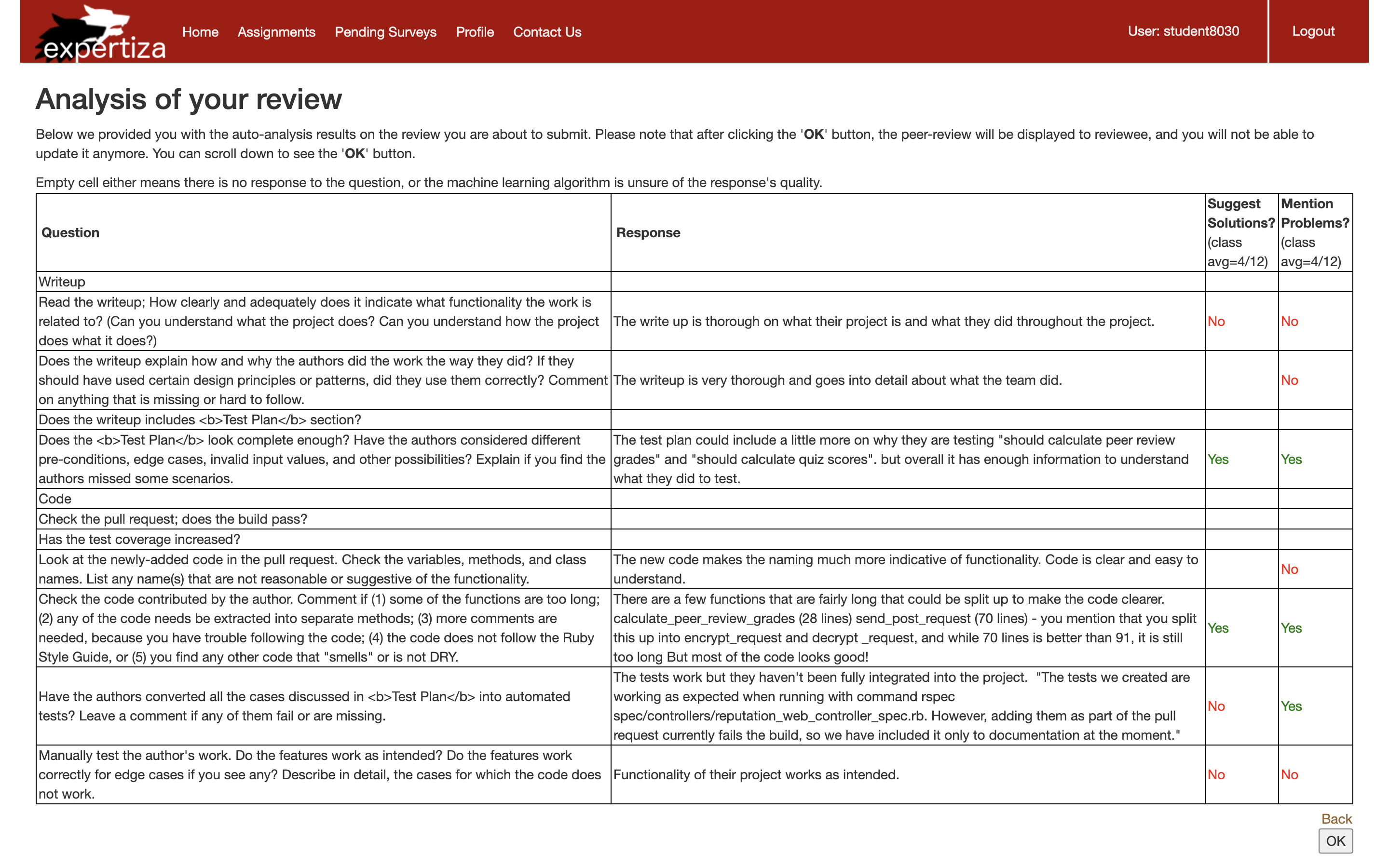

About half a minute after students click the 'Submit' button, they are redirected to a page that shows the analysis of their submitted reviews. On that page, students can see each of their submitted comments along with the analysis result for each metric. These metrics come from the tag prompt deployments that the instructor sets up for each questionnaire. Predictions with confidence levels below the predefined threshold are not shown, so students do not see uncertain or inaccurate predictions. When students confirm the submission, they are returned to the list of reviews to perform other actions.
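A hedged sketch of how the analysis page's rows could be assembled: every submitted comment gets a row, but only predictions for metrics the instructor enabled and at or above the threshold are shown. `Prediction`, `analysis_rows`, the 0.8 cutoff, and matching predictions to comments by text (rather than by answer id) are all simplifying assumptions.

```ruby
# Hypothetical stand-in for one cached prediction.
Prediction = Struct.new(:comment, :metric, :value, :confidence_level)

ANALYSIS_THRESHOLD = 0.8 # hypothetical cutoff

# comments: the submitted comments, in display order
# enabled_metrics: metric names from the questionnaire's tag prompt deployments
# Returns { comment => { metric => value } } with uncertain predictions hidden.
def analysis_rows(comments, predictions, enabled_metrics, threshold = ANALYSIS_THRESHOLD)
  comments.each_with_object({}) do |comment, rows|
    shown = predictions.select do |p|
      p.comment == comment &&
        enabled_metrics.include?(p.metric) &&
        p.confidence_level >= threshold
    end
    # Every comment gets a row, even if no prediction is confident enough
    rows[comment] = shown.map { |p| [p.metric, p.value] }.to_h
  end
end
```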

Review Tagging Page

On the review tagging page, the slider now takes one of three forms:

- The original form, meaning the tag needs input from the user.

- The grayed-out form, which presents a tag inferred by the web service. A tag in this form is still editable, so students can override inferred tags if they wish.

- The overridden form, which represents a tag that originally had a value assigned by the web service but has since been overridden by the user.
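Choosing among the three forms can be sketched as a small decision function. `SliderTag`, `slider_form`, and the 0.8 threshold are hypothetical; the sketch assumes a student's tag and the web service's tag for the same prompt are stored as separate rows, as the database design describes.

```ruby
SLIDER_THRESHOLD = 0.8 # hypothetical placeholder value

# Hypothetical stand-in for an answer_tags row.
SliderTag = Struct.new(:value, :user_id, :confidence_level)

# student_tag: the author's own tag for this prompt, or nil
# inferred_tag: the web service's tag for this prompt, or nil
def slider_form(student_tag, inferred_tag, threshold = SLIDER_THRESHOLD)
  confident = !inferred_tag.nil? && inferred_tag.confidence_level >= threshold
  return :normal unless confident   # no trustworthy prediction: ask the student
  return :overridden if student_tag # student replaced the inferred value
  :gray_out                         # inferred value stands; slider is lightened
end
```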

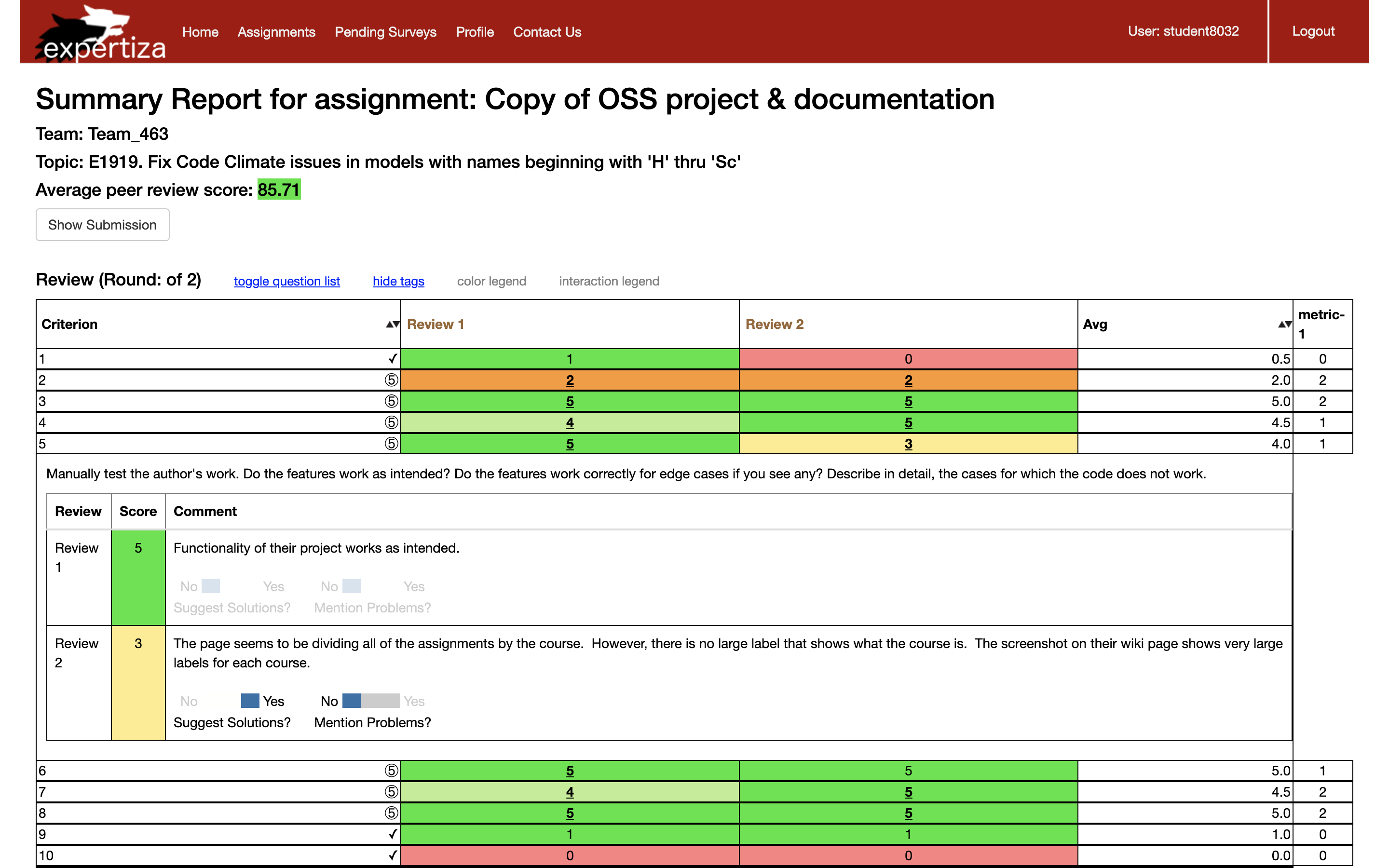

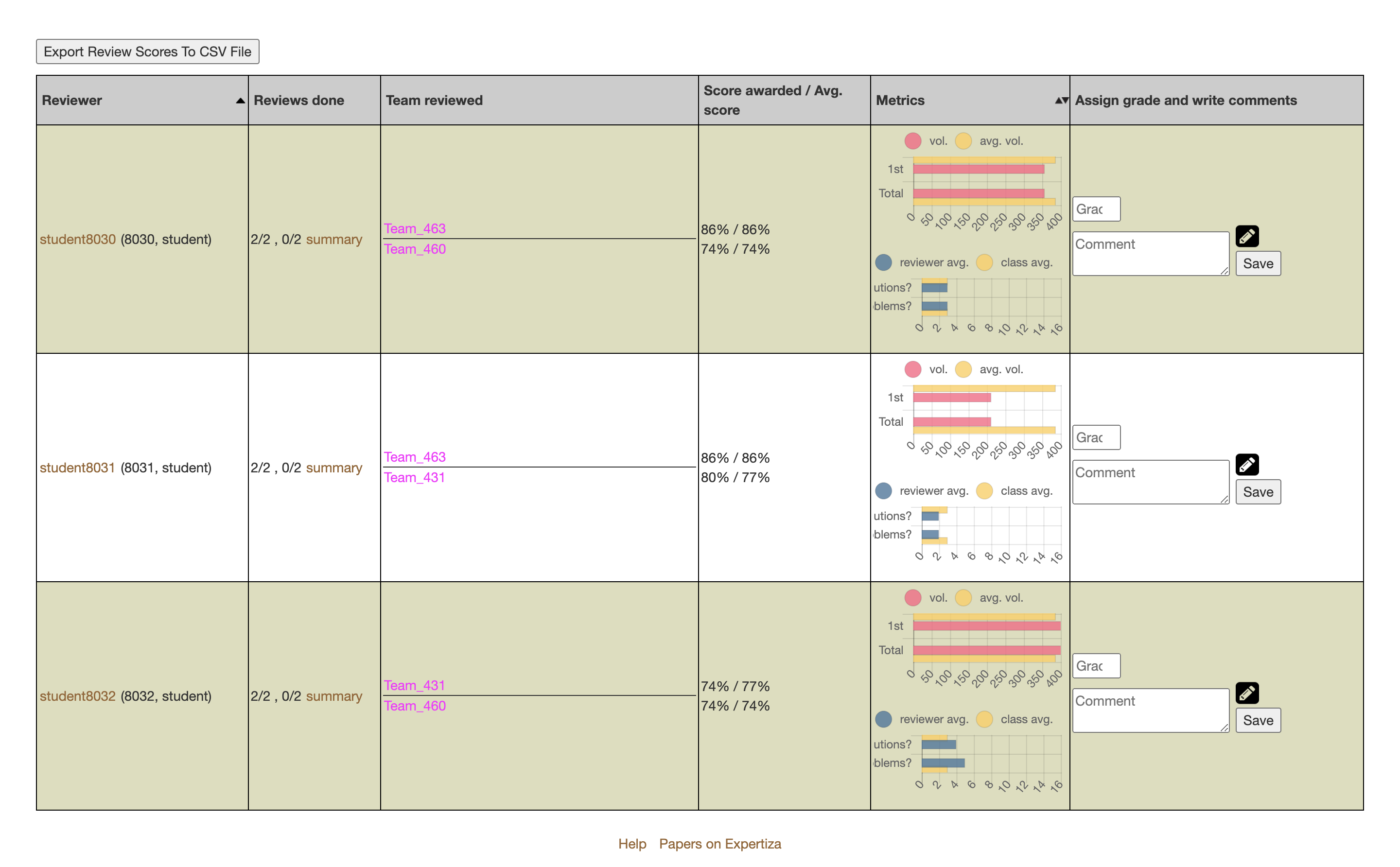

Review Report Page

In each row, which represents one student, a metrics chart is added below the existing volume chart. By looking at these two charts together, graders get useful information and can assign more accurate review grades. Because of space limitations, metric names cannot be shown in full; graders can hover the cursor over each bar to see the corresponding metric name.

Answer-Tagging Report Page

Changes to this page include renaming columns and adding a column for the number of inferred tags. Below we explain how each column is calculated.

- % tags applied by author = # tags applied by author / # applicable tags

- # tags applied by author = the number of applicable tags that the author has tagged

- # tags not applied by author = # applicable tags - # tags applied by author

- # applicable tags = # tags whose comment is longer than the length threshold - # tags inferred by ML

- # tags inferred by ML = # tags whose comment is predicted by the machine-learning algorithm with high confidence
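The column formulas above can be checked with a small hypothetical helper, `answer_tagging_columns`. It assumes the three input counts have already been gathered from the database, and returning 0.0 when there are no applicable tags is an assumption made to avoid dividing by zero.

```ruby
# Computes the answer-tagging report columns for one student from raw counts.
def answer_tagging_columns(tags_on_long_comments:, tags_inferred_by_ml:, tags_applied_by_author:)
  # Tags the ML algorithm is confident about no longer need the author's input
  applicable = tags_on_long_comments - tags_inferred_by_ml
  percent = applicable.zero? ? 0.0 : (100.0 * tags_applied_by_author / applicable).round(2)
  {
    percent_applied_by_author: percent,
    applied_by_author: tags_applied_by_author,
    not_applied_by_author: applicable - tags_applied_by_author,
    applicable: applicable,
    inferred_by_ml: tags_inferred_by_ml
  }
end
```

For example, 50 tags on long-enough comments with 30 inferred by ML leave 20 applicable tags; if the author applied 15 of them, 5 are not applied and the percentage is 75.0.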

Implementation

Models

app/models/answer.rb

- Added the `de_tag_comments` method, which removes HTML tags from submitted review comments
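A minimal sketch of what stripping HTML from a comment involves. `de_tag_comment` is an illustrative name, not the project's `de_tag_comments` method. A regular expression is enough for a sketch, but it leaves HTML entities such as `&nbsp;` untouched; inside Rails, ActionView's `strip_tags` helper is the sturdier choice.

```ruby
# Removes HTML tags such as <p> or <strong> left by the rich-text editor,
# then trims surrounding whitespace. Entities like &nbsp; are not decoded.
def de_tag_comment(comment)
  comment.to_s.gsub(/<[^>]*>/, "").strip
end
```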

app/models/answer_tag.rb

- Corrected a typo (`tag_prompt_deployment` instead of `tag_prompts_deployment`)

- Added a validation clause that checks for the presence of either `user_id` or `confidence_level`

app/models/review_metrics_query.rb

- The only model class responsible for communication between `MetricsController` and the parts of the Expertiza system that use tags

- Added the `average_number_of_qualifying_comments` method, which returns either the average for one reviewer or the average for the whole class, depending on whether a reviewer is supplied
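The optional-reviewer behavior can be sketched as follows. This is a hypothetical illustration: `average_qualifying_comments` is an invented name, the input is assumed to be a hash from reviewer to reviews (each review an array of comment strings), the average is assumed to be per review, and what makes a comment "qualifying" is passed in as a block because the criterion is not spelled out here.

```ruby
# reviews_by_reviewer: { reviewer_id => [review, ...] }, each review an array
# of comment strings. Returns the average number of qualifying comments per
# review: for one reviewer if given, otherwise for the whole class.
# Without a block, every comment counts as qualifying.
def average_qualifying_comments(reviews_by_reviewer, reviewer = nil, &qualifies)
  reviews = if reviewer
              reviews_by_reviewer.fetch(reviewer, [])
            else
              reviews_by_reviewer.values.flatten(1) # all reviews in the class
            end
  return 0.0 if reviews.empty?

  total = reviews.sum { |comments| comments.count(&qualifies) }
  total.to_f / reviews.size
end
```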

app/models/tag_prompt.rb

- Added code that sets the style of the slider (none, gray-out, or overridden) before it is rendered

app/models/tag_prompt_deployment.rb

- Slightly changed how each column in the answer_tagging report is calculated

app/models/vm_user_answer_tagging.rb & app/helpers/report_formatter_helper.rb

- Added one variable that stores the number of tags inferred by ML

Controllers

app/controllers/metrics_controller.rb

- Created an empty `MetricsController` class so that the tests pass

app/controllers/response_controller.rb

- Changed the redirection so that students are sent to the analysis page after they click to submit their reviews

- Added the `confirm_submit` method, which marks the review given in the parameters as 'submitted'

Views

app/views/popup/view_review_scores_popup.html.erb

- Fixed syntax error

app/views/reports/_answer_tagging_report.html.erb

- Renamed columns in the answer_tagging report

- Added a new column to the table named "# tags inferred by ML"

app/views/reports/_review_report.html.erb & app/helpers/review_mapping_helper.rb

- Added a metrics bar chart to each row of the review report

- Fixed redirection bug

app/views/response/analysis.html.erb

- Drafted the analysis page, which shows the web service's prediction for each comment on each metric

app/views/response/response.html.erb

- Added code that disables the "Submit" button after it is clicked

app/views/versions/search.html.erb

- Fixed syntax error

Peripheral Changes

app/assets/javascripts/answer_tags.js

- Handles the dynamic effect shown when an inferred tag is overridden

app/assets/stylesheets/response.scss

- Styles for disabled button and spinning loader

app/assets/stylesheets/three_state_toogle.scss

- Styles for different forms of tags

config/routes.rb

- Added a `confirm_submit` route

db/migrate/20200825210644_add_confidence_level_to_answer_tags_table.rb

- Added a `confidence_level` column to the `answer_tags` table

db/schema.rb

- Updated schema

Test Plan

References

Yulin Zhang (yzhan114@ncsu.edu)