CSC/ECE 517 Spring 2024 - NTNX-1 : Extend NDB Operator to Support Postgres HA

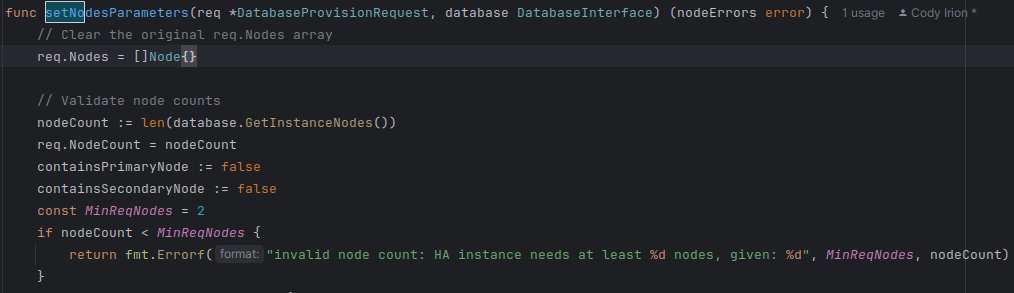

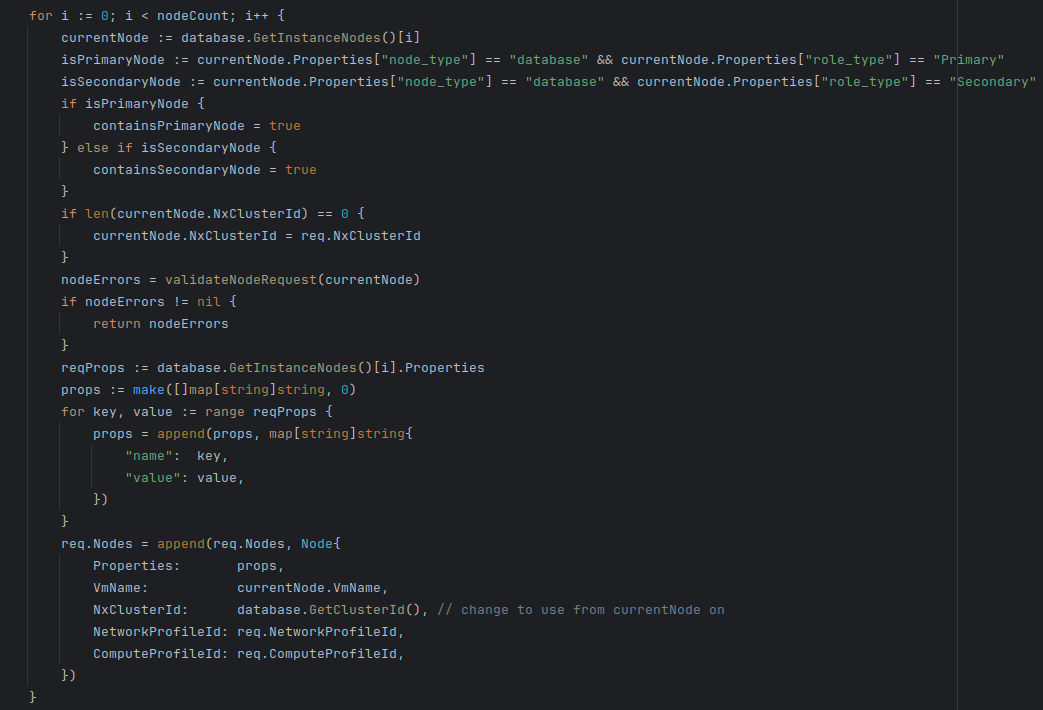

Revision as of 00:16, 9 April 2024

Problem Background

Existing Architecture

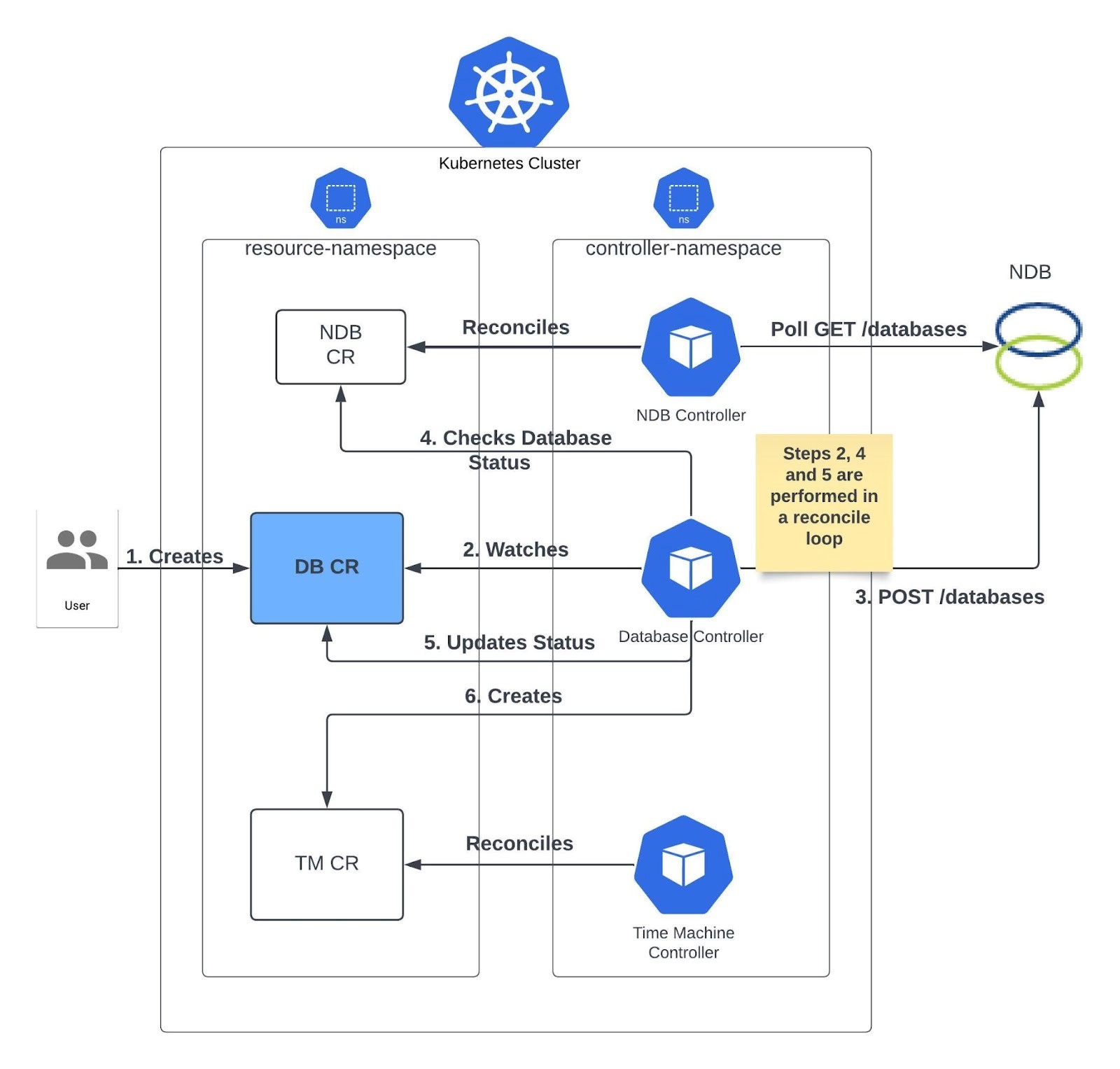

When provisioning a new database, the NDB Operator monitors the cluster for newly created custom resources. Once one is created, the operator syncs the change with the NDB Server, which records all databases to be provisioned.

The operator then reconciles the database/NDB CR, and watches its status thereafter. If a user modifies that DB CR, the reconcile loop begins again.

Postgres HA Instance

Postgres HA instances use redundancy to keep a PostgreSQL database active and accessible during hardware failures, software problems, and other disruptions. These redundancies (database replication, failover, and load balancing) are managed through HAProxy. The proxy determines which database a query goes to based on load and status.

The NDB API already supports Postgres High Availability databases, but provisioning them through the Kubernetes operator is not supported.

Problem Statement

We need to expand the NDB Operator to accommodate Postgres HA. As shown below, this will add fields to the existing architecture. With the new HA options in place, we will create end-to-end and unit tests for provisioning and removing databases.

Design and Workflow

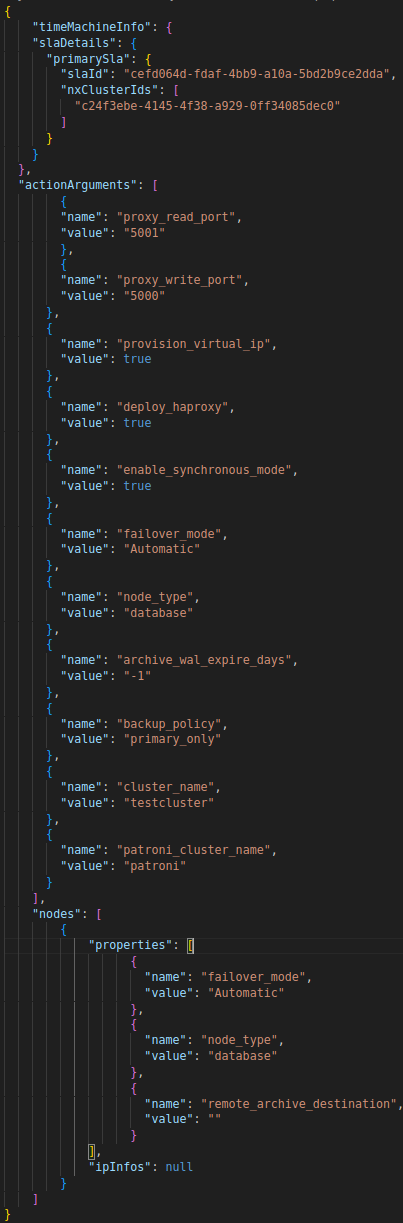

- To start we compared the API payloads for creating a Postgres and Postgres HA database to see which parameters are unique to Postgres HA. The values unique to the Postgres HA payload can be seen here:

- Next, we compared these unique parameters with last semester's pull request, which began implementing Postgres HA. This told us which parts have been partially or fully implemented and which have not been added at all.

- Comparing the Postgres HA payload with the changes made in the existing PR, we can see that most of the parameters have been implemented in a hard-coded fashion. With the past implementation, a user can create a Postgres HA instance by setting the new isHighAvailability parameter in the NDB Custom Resource (referred to later as CR) to true. This allowed the provisioning of a Postgres HA instance with preset values for the various HA options.

- Our implementation will instead move the isHighAvailability field into the database parameters' AdditionalArgument map. This has the benefit of not requiring another field in the custom resource when an HA instance is not wanted.

- Most of the work in our implementation will be allowing the default values below to be set using optional parameters. These optional parameters will be provided by the user when creating the CR by specifying the parameters by their key/value pairs inside the additionalArguments section.

- If a parameter is provided (and is valid), it overrides the default value.

- If a parameter is absent, the default value is left unchanged.

- In addition to the additionalArguments, we need the ability to specify individual nodes and their properties. Since the properties can vary between nodes and the number of nodes is variable, the additionalArguments section, which only supports key/value pairs of the String type, is not a good location for this information. We are adding a new struct titled Node, and the manifest that is used to provision the request will accept an array of these new Node structs.

Of the actionArguments above, the unimplemented ones are:

    "actionArguments": [
      { "name": "provision_virtual_ip", "value": true },
      { "name": "deploy_haproxy", "value": true },
      { "name": "failover_mode", "value": "Automatic" },
      { "name": "archive_wal_expire_days", "value": "-1" },
      { "name": "patroni_cluster_name", "value": "patroni" }
    ]
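The override behavior described in the list above can be sketched in Go as follows. This is a minimal illustration, not the operator's code: the function names defaultHAArguments and mergeHAArguments are hypothetical, values are kept as strings because additionalArguments only carries strings, and the only validity rule assumed here is that a supplied value must be non-empty.

```go
package main

import "fmt"

// defaultHAArguments returns the preset values listed above as strings.
// (Hypothetical helper; the real operator may store these differently.)
func defaultHAArguments() map[string]string {
	return map[string]string{
		"provision_virtual_ip":    "true",
		"deploy_haproxy":          "true",
		"failover_mode":           "Automatic",
		"archive_wal_expire_days": "-1",
		"patroni_cluster_name":    "patroni",
	}
}

// mergeHAArguments starts from the defaults and overrides only the keys
// the user supplied. Keys that are not HA arguments are ignored here and
// assumed to be handled elsewhere.
func mergeHAArguments(additional map[string]string) (map[string]string, error) {
	merged := defaultHAArguments()
	for key := range merged {
		value, provided := additional[key]
		if !provided {
			continue // absent parameter: keep the default
		}
		if value == "" {
			// Assumed validity rule for this sketch only.
			return nil, fmt.Errorf("argument %q must not be empty", key)
		}
		merged[key] = value
	}
	return merged, nil
}

func main() {
	args, err := mergeHAArguments(map[string]string{"failover_mode": "Manual"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(args["failover_mode"], args["patroni_cluster_name"])
}
```

Starting from a fresh copy of the defaults on each call keeps the presets immutable, so one CR's overrides cannot leak into another's.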

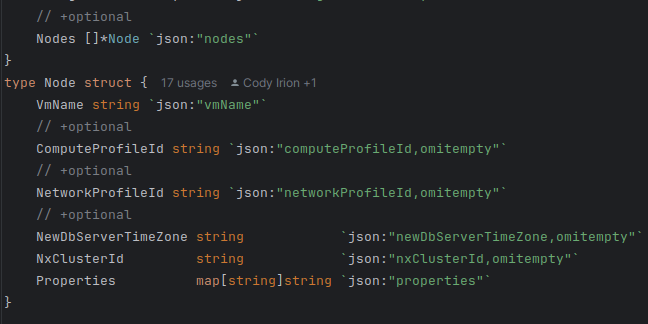

Implementing Dynamic Node Declaration

To add the new struct to the database request, a few changes need to be made to the code. The first is to add a new Node struct to the database_type file. This new struct will hold all the fields and properties that can be specified in the database custom resource (CR). An optional array of these structs will be added to the Instance and Clone structs to allow users to specify which nodes to create. If no node specifications are given, a default setup will be used. The // +optional line looks like just a comment, but it is what marks a field as optional in the CR; without it, an error will be thrown if the field is not provided.

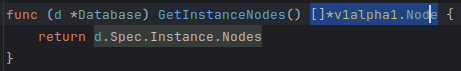
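As a rough illustration of the idea, the sketch below shows an optional node array on an Instance struct. The field names (VMName, NodeType, Role, FailoverMode) and the parseInstance helper are assumptions made for this example and may not match the actual struct. The // +optional markers are read by the CRD generator, not by the Go compiler; in this standalone program the omitempty JSON tags play the equivalent role.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Node describes one node of an HA instance (hypothetical field set).
type Node struct {
	// +optional
	VMName string `json:"vmName,omitempty"`
	// NodeType is assumed to be "database" or "haproxy".
	NodeType string `json:"nodeType"`
	// +optional
	Role string `json:"role,omitempty"`
	// +optional
	FailoverMode string `json:"failoverMode,omitempty"`
}

// Instance carries an optional list of nodes alongside its other fields.
type Instance struct {
	Name string `json:"name"`
	// +optional
	Nodes []*Node `json:"nodes,omitempty"`
}

// parseInstance decodes a JSON manifest fragment into an Instance.
func parseInstance(data []byte) (Instance, error) {
	var inst Instance
	err := json.Unmarshal(data, &inst)
	return inst, err
}

func main() {
	withNodes, err := parseInstance([]byte(
		`{"name":"pg-ha","nodes":[{"vmName":"db-1","nodeType":"database","role":"Primary"}]}`))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	// Omitting "nodes" is valid; the caller would fall back to a default setup.
	withoutNodes, _ := parseInstance([]byte(`{"name":"pg-ha"}`))
	fmt.Println(len(withNodes.Nodes), len(withoutNodes.Nodes))
}
```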

Next, to make these new structs available when creating the provisioning request, we need to add a method to the interface.go file and implement that method. The interface is implemented in the database.go file, which makes the new Node structs available when we build the provisioning request in the next steps.
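The pattern of adding an accessor to the interface and implementing it on the database type can be sketched as below. The method name GetInstanceNodes and the shapes of DatabaseInterface, Database, and Instance are assumptions for illustration only; the point is that request-building code depends on the interface rather than on the CR type directly.

```go
package main

import "fmt"

// Node is a simplified stand-in for the CR's node struct.
type Node struct {
	VMName   string
	NodeType string
	Role     string
}

// DatabaseInterface is what the request builders depend on
// (hypothetical, reduced to two methods for this sketch).
type DatabaseInterface interface {
	GetName() string
	GetInstanceNodes() []*Node
}

// Instance and Database stand in for the CR-backed types.
type Instance struct {
	Name  string
	Nodes []*Node
}

type Database struct {
	Instance Instance
}

// GetName returns the instance name from the CR.
func (d *Database) GetName() string { return d.Instance.Name }

// GetInstanceNodes exposes the user-specified nodes to request builders.
func (d *Database) GetInstanceNodes() []*Node { return d.Instance.Nodes }

// describe only sees the interface, as a request builder would.
func describe(db DatabaseInterface) string {
	return fmt.Sprintf("%s has %d node(s)", db.GetName(), len(db.GetInstanceNodes()))
}

func main() {
	db := &Database{Instance: Instance{
		Name:  "pg-ha",
		Nodes: []*Node{{VMName: "db-1", NodeType: "database", Role: "Primary"}},
	}}
	fmt.Println(describe(db))
}
```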

Now that the node information is available, we need to use it to build the request. This is accomplished by modifying the existing setNodesParameters function inside the db_helpers.go file. This function originally had all of its values hard-coded and could only create a Postgres HA instance with one configuration: 2 HAProxy nodes and 3 database nodes. The new implementation will allow the user to specify 2 or more nodes. The setNodesParameters function will be modified to return Go's built-in error type if an error occurs while setting the node parameters. First, the length of the node array is checked to make sure there are at least 2 nodes, because there must be at least one primary and one secondary database node. The presence of both roles is tracked with the containsPrimaryNode and containsSecondaryNode variables; if either is still false after all the nodes have been created, an error is returned.
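The count and role checks described above might look roughly like the following. This is a sketch under assumptions: the function name validateNodes, the simplified Node type, and the string values "database", "Primary", and "Secondary" are chosen for illustration, and only the two flag names come from the text.

```go
package main

import (
	"errors"
	"fmt"
)

// Node is a simplified stand-in for the CR's node struct.
type Node struct {
	NodeType string // assumed values: "database", "haproxy"
	Role     string // assumed values: "Primary", "Secondary"
}

// validateNodes checks the minimum node count and that at least one
// primary and one secondary database node are present.
func validateNodes(nodes []Node) error {
	if len(nodes) < 2 {
		return errors.New("at least 2 nodes are required")
	}
	containsPrimaryNode, containsSecondaryNode := false, false
	for _, n := range nodes {
		if n.NodeType != "database" {
			continue // proxy nodes do not carry a database role
		}
		switch n.Role {
		case "Primary":
			containsPrimaryNode = true
		case "Secondary":
			containsSecondaryNode = true
		}
	}
	if !containsPrimaryNode || !containsSecondaryNode {
		return errors.New("nodes must include a primary and a secondary database node")
	}
	return nil
}

func main() {
	ok := []Node{{"database", "Primary"}, {"database", "Secondary"}}
	missingSecondary := []Node{{"database", "Primary"}, {"haproxy", ""}}
	fmt.Println(validateNodes(ok))
	fmt.Println(validateNodes(missingSecondary))
}
```

Returning an error value instead of failing silently lets the caller surface the problem while reconciling the CR.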

If the number of nodes is valid, the node structs are iterated through. For each node, the node properties are validated using the validateNodeRequest function. If no errors are found, the properties are added to an array of key/value pairs. After all the properties have been added, the rest of the node fields are set and the node is added to the request.
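The iteration step can be sketched as below. Only the name validateNodeRequest comes from the text; its signature and rules here, the Property and RequestNode types, the buildRequestNodes function, and the property key names are all assumptions made to keep the example self-contained.

```go
package main

import "fmt"

// Node is a simplified stand-in for the CR's node struct.
type Node struct {
	VMName       string
	NodeType     string
	Role         string
	FailoverMode string
}

// Property is one key/value pair attached to a node in the request.
type Property struct {
	Name  string
	Value string
}

// RequestNode is the per-node entry placed in the provisioning request.
type RequestNode struct {
	VMName     string
	Properties []Property
}

// validateNodeRequest applies assumed rules: database nodes need a valid
// role, and only "database" and "haproxy" node types are accepted.
func validateNodeRequest(n Node) error {
	switch n.NodeType {
	case "database":
		if n.Role != "Primary" && n.Role != "Secondary" {
			return fmt.Errorf("node %q: invalid role %q", n.VMName, n.Role)
		}
	case "haproxy":
		// No role is required for proxy nodes in this sketch.
	default:
		return fmt.Errorf("node %q: invalid node type %q", n.VMName, n.NodeType)
	}
	return nil
}

// buildRequestNodes validates each node, collects its properties as
// key/value pairs, sets the remaining fields, and appends it to the result.
func buildRequestNodes(nodes []Node) ([]RequestNode, error) {
	requestNodes := make([]RequestNode, 0, len(nodes))
	for _, n := range nodes {
		if err := validateNodeRequest(n); err != nil {
			return nil, err
		}
		props := []Property{{Name: "node_type", Value: n.NodeType}}
		if n.Role != "" {
			props = append(props, Property{Name: "role", Value: n.Role})
		}
		if n.FailoverMode != "" {
			props = append(props, Property{Name: "failover_mode", Value: n.FailoverMode})
		}
		requestNodes = append(requestNodes, RequestNode{VMName: n.VMName, Properties: props})
	}
	return requestNodes, nil
}

func main() {
	nodes := []Node{
		{VMName: "db-1", NodeType: "database", Role: "Primary", FailoverMode: "Automatic"},
		{VMName: "db-2", NodeType: "database", Role: "Secondary"},
	}
	built, err := buildRequestNodes(nodes)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for _, rn := range built {
		fmt.Println(rn.VMName, len(rn.Properties))
	}
}
```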

References:

https://github.com/nutanix-cloud-native/ndb-operator

https://sdk.operatorframework.io/docs/building-operators/golang/tutorial/

https://docs.google.com/document/d/11Z-AAB7O-Fy0tRo1X7qI5_JfRcwcZuuhpGY0tKoFp0U/edit?usp=sharing

https://www.nutanix.com/what-we-do

https://github.com/dvrohan/ndb-operator

Provisioning Postgres HA Instances Team:

Mentor

Kartiki Bhandakkar <kbhanda3@ncsu.edu>

Student

Cody Irion

Zhi Zhang

Justin Orringer

Kandarpkumar Patel