CSC/ECE 517 Spring 2024 - E2440 Testing for questionnaire helper, review bids helper

This page describes the changes made for the Spring 2024 project E2440, Testing for questionnaire_helper, review_bids_helper.

Project Overview

Problem Statement

Objectives

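- Develop code testing scenarios

- Reimplement the algorithm if discrepancies arise in the reputation web server's Hamer values

- Validate the accuracy of the newly implemented Hamer algorithm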

Files Involved

- reimplemented algorithm: /app/controllers/reputation_web_service_controller.rb

- test file: /spec/controllers/reputation_mock_web_server_hamer.rb

Mentor

- Muhammet Mustafa Olmez (molmez@ncsu.edu)

Team Members

- Neha Vijay Patil (npatil2@ncsu.edu)

- Prachit Mhalgi (psmhalgi@ncsu.edu)

- Sahil Santosh Sawant (ssawant2@ncsu.edu)

Hamer Algorithm

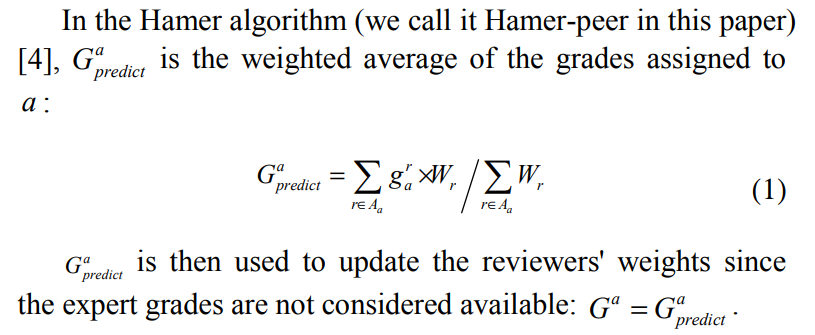

The grading algorithm described in the paper is designed to provide a reward to reviewers who participate effectively by allocating a portion of the assignment mark to the review, with the review mark reflecting the quality of the grading. Here's an explanation of the algorithm:

1. Review Allocation: Each reviewer is assigned a number of essays to grade. The paper suggests assigning at least five essays, with ten being ideal. Assuming each review takes 20 minutes, ten reviews take 200 minutes, or just under three and a half hours.

2. Grading Process:

- Once the reviewing is complete, grades are generated for each essay and weights are assigned to each reviewer.

- The essay grades are computed by averaging the individual grades from all the reviewers assigned to that essay.

- Initially, all reviewers are given equal weight in the averaging process.

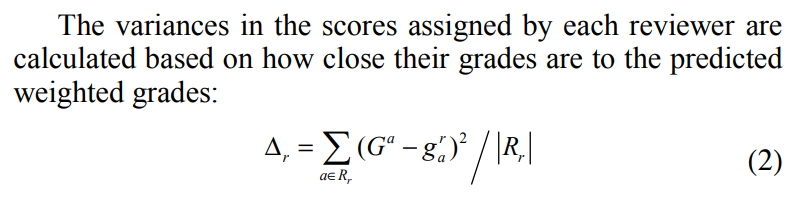

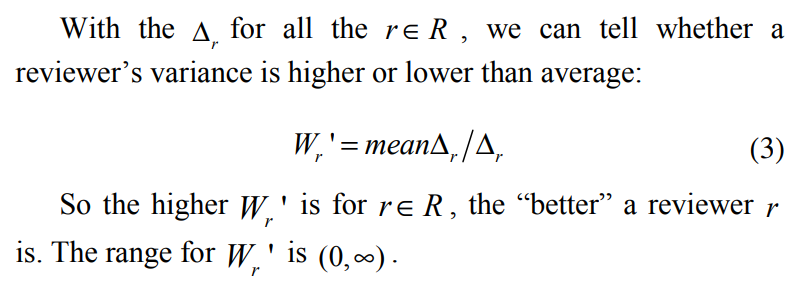

- The algorithm assumes that some reviewers will perform better than others. It measures this by comparing the grades assigned by each reviewer to the averaged grades. The larger the difference between the assigned and averaged grades, the more out of step the reviewer is considered with the consensus view of the class.

- The algorithm adjusts the weighting of the reviewers based on this difference. Reviewers who are closer to the consensus view are given higher weights, while those who deviate significantly are given lower weights.
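The averaging step above can be sketched in Ruby as follows. This is a minimal illustration, not the project's code: the helper name `weighted_submission_grades` and the data layout (one row per reviewer, one column per submission, `nil` for a submission the reviewer did not grade) are assumptions made for this example.

```ruby
# Hypothetical helper: weighted average grade for each submission.
# marks:   one row per reviewer, one column per submission, nil = not reviewed
# weights: one weight per reviewer
def weighted_submission_grades(marks, weights)
  marks.transpose.map do |submission_marks|
    weighted_sum = 0.0
    weight_total = 0.0
    submission_marks.each_with_index do |mark, reviewer_index|
      next if mark.nil? # this reviewer did not grade this submission
      weighted_sum += weights[reviewer_index] * mark
      weight_total += weights[reviewer_index]
    end
    weighted_sum / weight_total
  end
end

marks = [[8, 6], [6, 4], [10, nil]]
equal_weights = [1.0, 1.0, 1.0] # initially every reviewer counts equally
grades = weighted_submission_grades(marks, equal_weights)
# grades => [8.0, 5.0]
```

With equal weights this reduces to a plain average; once the weights differ, reviewers with higher weights pull the grade toward their own marks.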

3. Iterative Process:

- The calculation of grades and weights is an iterative process. Each time the grades are calculated, the weights need to be updated, and each change in the weights affects the grades.

- Convergence occurs quickly, typically requiring four to six iterations before a solution (a "fix-point") is reached.
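The loop below is a rough sketch of that iteration, under several assumptions: the method name `hamer_fix_point`, the `max_iterations`, `tolerance`, and `epsilon` parameters, and the row-per-reviewer layout are all chosen for illustration, and every submission is assumed to have at least one mark. The stopping rule (weights changing by less than a tolerance) is one reasonable choice rather than something the paper prescribes.

```ruby
# Hypothetical sketch: alternate between grades and weights until the weights settle.
# marks: one row per reviewer, one column per submission, nil = not reviewed.
# epsilon is an assumed guard so a reviewer who matches the consensus exactly
# does not cause a division by zero.
def hamer_fix_point(marks, max_iterations: 20, tolerance: 1e-6, epsilon: 1e-9)
  weights = Array.new(marks.length, 1.0) # every reviewer starts with equal weight

  max_iterations.times do
    # Step 1: weighted average grade per submission
    grades = marks.transpose.map do |column|
      rated = column.each_with_index.reject { |mark, _| mark.nil? }
      rated.sum { |mark, r| weights[r] * mark } / rated.sum { |_, r| weights[r] }
    end

    # Step 2: each reviewer's mean squared distance from those grades
    deltas = marks.map do |row|
      rated = row.each_with_index.reject { |mark, _| mark.nil? }
      diffs = rated.map { |mark, s| (mark - grades[s])**2 }
      [diffs.sum / diffs.length, epsilon].max
    end

    # Step 3: new weights, dampened logarithmically above 2
    average_delta = deltas.sum / deltas.length
    new_weights = deltas.map do |delta|
      weight_prime = average_delta / delta
      weight_prime <= 2 ? weight_prime : 2 + Math.log(weight_prime - 1)
    end

    converged = weights.zip(new_weights).all? { |old, fresh| (old - fresh).abs < tolerance }
    weights = new_weights
    break if converged
  end

  weights
end

# Three reviewers who roughly agree, plus one who grades against the consensus
marks = [[8, 5, 7], [7, 6, 7], [8, 6, 6], [2, 10, 1]]
weights = hamer_fix_point(marks)
```

In this made-up data set the last reviewer ends up with the smallest weight, which is the behaviour the algorithm is designed to produce.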

4. Weight Adjustment:

- The weights assigned to reviewers are adjusted based on the difference between the assigned and averaged grades. Each reviewer's weight is inversely proportional to this difference, so larger discrepancies yield smaller weights.

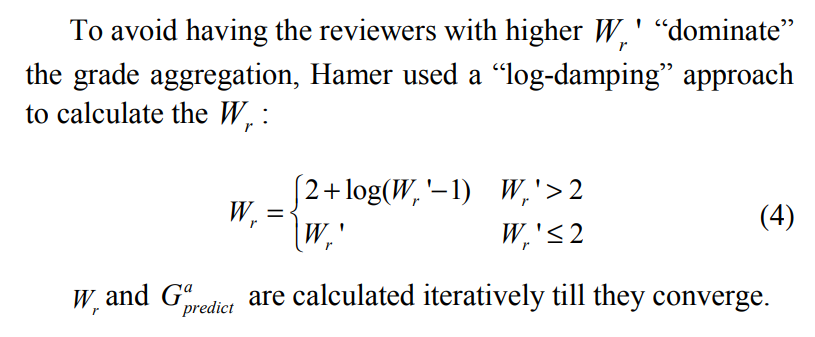

- To prevent excessively large weights, a logarithmic dampening function is applied, allowing weights to rise to twice the class average before further increases are awarded sparingly.
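Read together with the Ruby implementation later on this page, the weight adjustment can be written out as below. The symbols are notation chosen here for illustration: $g_{r,e}$ is the grade reviewer $r$ gave essay $e$, $\bar{g}_e$ is the averaged grade of essay $e$, $E_r$ is the set of essays reviewed by $r$, and $R$ is the number of reviewers. The logarithm is the natural logarithm, matching Ruby's `Math.log`.

```latex
\Delta_r = \frac{1}{|E_r|} \sum_{e \in E_r} \left( g_{r,e} - \bar{g}_e \right)^2
\qquad
\bar{\Delta} = \frac{1}{R} \sum_{r=1}^{R} \Delta_r
\qquad
w'_r = \frac{\bar{\Delta}}{\Delta_r}

w_r =
\begin{cases}
  w'_r & \text{if } w'_r \le 2 \\
  2 + \ln\left( w'_r - 1 \right) & \text{if } w'_r > 2
\end{cases}
```

The two branches agree at $w'_r = 2$ because $\ln(1) = 0$, so the dampening takes over without a jump.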

5. Properties:

- The algorithm aims to identify and diminish the impact of "rogue" reviewers who may inject random or arbitrary grades into the peer assessment process.

- By adjusting reviewer weights based on their grading accuracy, the algorithm aims to improve the reliability of the grading process in the presence of such rogue reviewers.

Overall, the algorithm seeks to balance the contributions of different reviewers based on the accuracy of their grading, ultimately aiming to produce reliable grades for each essay in a peer assessment scenario.

Hamer value calculation

Objective 1: Develop code testing scenarios

We assumed 10 reviewers, each reviewing up to 4 submissions, to cover the following test scenarios:

- 3 cases where reviewers give credible scores (passing1, passing2, passing3)

- case where the reviewer gives the max score (10) to all submissions (should be flagged)

- case where the reviewer gives the min score (1) to all submissions (should be flagged)

- case where the reviewer gives the median score (5) to all submissions (should be flagged)

- case where the reviewer gives the same score (3) to all submissions (should be flagged)

- 3 cases where the reviewer skips some submissions (incomplete_review, max_incomplete, min_incomplete)

Object Creation

Below is the Input object for tests that cover all the above scenarios:

    INPUTS_new = {
      "submission1": {
        "maxtoall": 10,
        "mintoall": 1,
        "mediantoall": 5,
        "incomplete_review": 4,
        "max_incomplete": 10,
        "sametoall": 3,
        "passing1": 10,
        "passing2": 10,
        "passing3": 9
      },
      "submission2": {
        "maxtoall": 10,
        "mintoall": 1,
        "mediantoall": 5,
        "incomplete_review": 2,
        "max_incomplete": 10,
        "min_incomplete": 1,
        "sametoall": 3,
        "passing1": 3,
        "passing2": 2,
        "passing3": 4
      },
      "submission3": {
        "maxtoall": 10,
        "mintoall": 1,
        "mediantoall": 5,
        "sametoall": 3,
        "passing1": 7,
        "passing2": 4,
        "passing3": 5
      },
      "submission4": {
        "maxtoall": 10,
        "mintoall": 1,
        "mediantoall": 5,
        "max_incomplete": 10,
        "min_incomplete": 1,
        "sametoall": 3,
        "passing1": 6,
        "passing2": 4,
        "passing3": 5
      }
    }.to_json

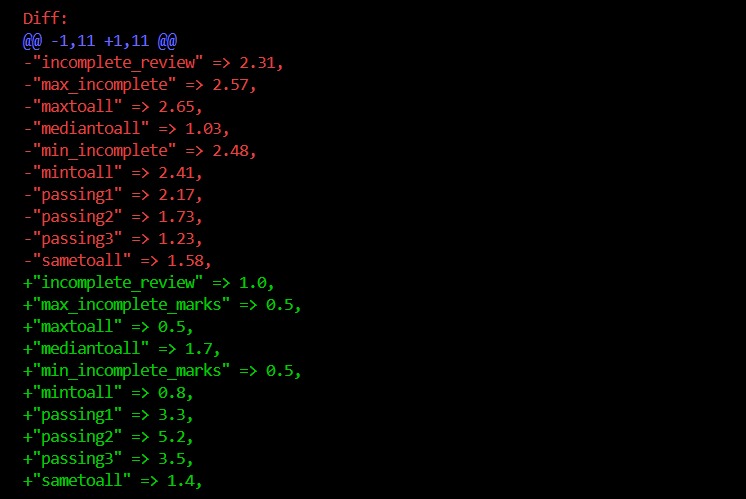
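The input object is keyed by submission, while a per-reviewer calculation is easier to follow with one row of marks per reviewer. The sketch below shows one way to reshape the JSON. The helper name `reviews_matrix` and the small `sample` object are hypothetical and only mirror the structure of the input above.

```ruby
require 'json'

# Hypothetical helper: turn submission-keyed JSON into one row per reviewer.
# A reviewer who did not grade a submission gets nil in that column.
def reviews_matrix(inputs_json, reviewers)
  submissions = JSON.parse(inputs_json)
  reviewers.map do |reviewer|
    submissions.values.map { |marks| marks[reviewer] }
  end
end

# Reduced sample with the same shape as the input object
sample = {
  "submission1": { "passing1": 10, "incomplete_review": 4 },
  "submission2": { "passing1": 3 }
}.to_json

matrix = reviews_matrix(sample, %w[passing1 incomplete_review])
# matrix => [[10, 3], [4, nil]]
```

Keeping `nil` in place, rather than dropping the missing entry, preserves the column position of every remaining mark.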

Expected Hamer Values

    EXPECTED = {
      "Hamer": {
        "maxtoall": 2.65,
        "mintoall": 2.41,
        "mediantoall": 1.03,
        "incomplete_review": 2.31,
        "max_incomplete": 2.57,
        "min_incomplete": 2.48,
        "sametoall": 1.58,
        "passing1": 2.17,
        "passing2": 1.73,
        "passing3": 1.23
      }
    }.to_json

Objective 2: Reimplement the algorithm if discrepancies arise in the reputation web server's Hamer values.

As established before, the values returned by the reputation server do not match the expected values, so we concluded that the PeerLogic web service implements the algorithm incorrectly. In this phase, we implemented the algorithm in Ruby as a method in the controller file /app/controllers/reputation_web_service_controller.rb

Changes made in implementation

- Coded the algorithm in Ruby as a controller method.

- Included a way for the algorithm to handle nil values.
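One detail worth watching when skipping nil marks is index alignment: dropping the nils before indexing shifts every later mark one column to the left. The snippet below is an illustrative sketch with made-up numbers (the `marks` and `grades` arrays are assumptions) that contrasts the two approaches.

```ruby
marks  = [nil, 6, 8]       # the reviewer skipped the first submission
grades = [5.0, 6.0, 7.0]   # hypothetical per-submission averages

# Index-preserving: pair each mark with its own column, then drop the nils
aligned = marks.each_with_index
               .reject { |mark, _| mark.nil? }
               .map { |mark, index| (mark - grades[index])**2 }
# aligned => [0.0, 1.0]

# compact first: the 6 is now compared against grades[0] instead of grades[1]
shifted = marks.compact.each_with_index.map { |mark, index| (mark - grades[index])**2 }
# shifted => [1.0, 4.0]
```

The two results differ, so the squared differences are only meaningful when each mark keeps its original column index.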

Code Snippet

    # Method: calculate_reputation_score
    # Calculates a reputation weight for each reviewer from the marks they assigned.
    # It first averages the marks each submission received (equal reviewer weights),
    # then computes delta R, each reviewer's mean squared deviation from those averages,
    # then weight prime (the average delta R divided by the reviewer's delta R),
    # and finally dampens weight prime values above 2 logarithmically.
    #
    # Params
    # - reviews: an array with one row per reviewer and one column per submission;
    #   nil marks a submission the reviewer did not grade (this layout is assumed here)
    #
    # Returns
    # An array of reputation weights, one per reviewer, rounded to two decimals.
    def calculate_reputation_score(reviews)
      # Average grade per submission, skipping nil marks
      grades = reviews.transpose.map do |submission_marks|
        marks = submission_marks.compact
        marks.sum.to_f / marks.length
      end

      # delta R: mean squared difference between a reviewer's marks and the averages
      delta_r = reviews.map do |reviewer_marks|
        squared_diffs = []
        reviewer_marks.each_with_index do |grade, submission_index|
          # Skip nil marks but keep the column index so the alignment is not shifted
          next if grade.nil?
          squared_diffs << (grade - grades[submission_index])**2
        end
        squared_diffs.sum / squared_diffs.length
      end

      # weight prime: reviewers closer to the consensus get larger values
      average_delta_r = delta_r.sum / delta_r.length
      weight_prime = delta_r.map { |reviewer_delta_r| average_delta_r / reviewer_delta_r }

      # Reputation weight: dampen weight prime values above 2 logarithmically
      weight_prime.map do |reviewer_weight_prime|
        if reviewer_weight_prime <= 2
          reviewer_weight_prime.round(2)
        else
          (2 + Math.log(reviewer_weight_prime - 1)).round(2)
        end
      end
    end

Objective 3: Validate the accuracy of the newly implemented Hamer algorithm

We test the newly implemented Hamer algorithm function against our scenarios and verify that its output matches the expected values.

Test Code Snippet

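    describe ReputationWebServiceController do
      it "should calculate correct Hamer calculation" do
        expected = JSON.parse(EXPECTED)["Hamer"]
        # Use every reviewer listed in EXPECTED so both hashes have the same keys
        keys = expected.keys
        # Assumed layout: one row per reviewer, one column per submission (nil if not reviewed)
        submissions = JSON.parse(INPUTS_new).values
        reviews = keys.map { |reviewer| submissions.map { |marks| marks[reviewer] } }
        weights = ReputationWebServiceController.new.calculate_reputation_score(reviews)
        # EXPECTED holds two decimal places, so round to the same precision
        rounded_weights = weights.map { |w| w.round(2) }
        result_hash = keys.zip(rounded_weights).to_h
        expect(result_hash).to eq(expected)
      end
    end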

Results


The observed results indicate a tendency towards lower values, primarily due to our decision to include nil values and treat them as zeros in our analysis. This treatment has led to a skew in the scores, favoring lower values and potentially impacting the accuracy of our findings. To address this issue and improve the robustness of our analysis, it is advisable to explore alternative approaches such as using median or random values instead of treating nil values as zeros. However, we must also carefully consider how to handle incomplete reviews that contain nil values in our input dataset, as this can significantly influence the overall integrity and reliability of our results and conclusions.

Conclusion

In this project, we aimed to test the accuracy of the Hamer algorithm used for assessing the credibility of reviewers in a peer assessment system. We began by developing code testing scenarios to validate the Hamer algorithm and ensure the accuracy of its output values. These scenarios covered various review scenarios, including cases where reviewers provided extreme scores.

It was established that the original reputation web server was implemented incorrectly.

As a result, we proceeded to reimplement the Hamer algorithm in Ruby, incorporating adjustments to handle nil values appropriately. Subsequently, we validated the accuracy of the newly implemented algorithm using the same testing scenarios. While the results initially showed a skew towards lower values due to our treatment of nil values, we acknowledge the need for further refinement to handle these cases more effectively.

In conclusion, this project highlights the importance of rigorous testing and implementation adjustments in ensuring the reliability of algorithms used in peer assessment systems. Moving forward, we recommend further refinements and validations to enhance the accuracy and robustness of the Hamer algorithm.

Links

Link to Expertiza repository: here

Link to the forked repository: here

Link to pull request: here

Link to Github Project page: here

Link to Testing Video: here

References

1. Expertiza on GitHub (https://github.com/expertiza/expertiza)

2. The live Expertiza website (http://expertiza.ncsu.edu/)

3. Pluggable reputation systems for peer review: A web-service approach (https://doi.org/10.1109/FIE.2015.7344292)