CSC/ECE 517 Spring 2022 - E2212: Testing for hamer.rb

This page describes the changes made for the Spring 2022 OSS Project E2212: Testing for hamer.rb

Project Overview

Introduction

Using students' reviews of an assignment to arrive at a more accurate grade has become increasingly popular among professors and university courses. This method not only frees the professor and TAs from days of grading work, but also lets students learn more about an assignment by grading others' work. Unfortunately, many students may not take reviewing seriously and may simply give every submission 100 or 0. Since such reviews can skew a student's grade, student reviews are only trustworthy if there is a system to assess the correctness and credibility of each reviewer. The Hamer algorithm was created for this purpose: it returns a reputation weight for each reviewer. The instructor can then use these reputation weights either to judge the reliability of a reviewer or to compute a grade for a reviewer.

System Design

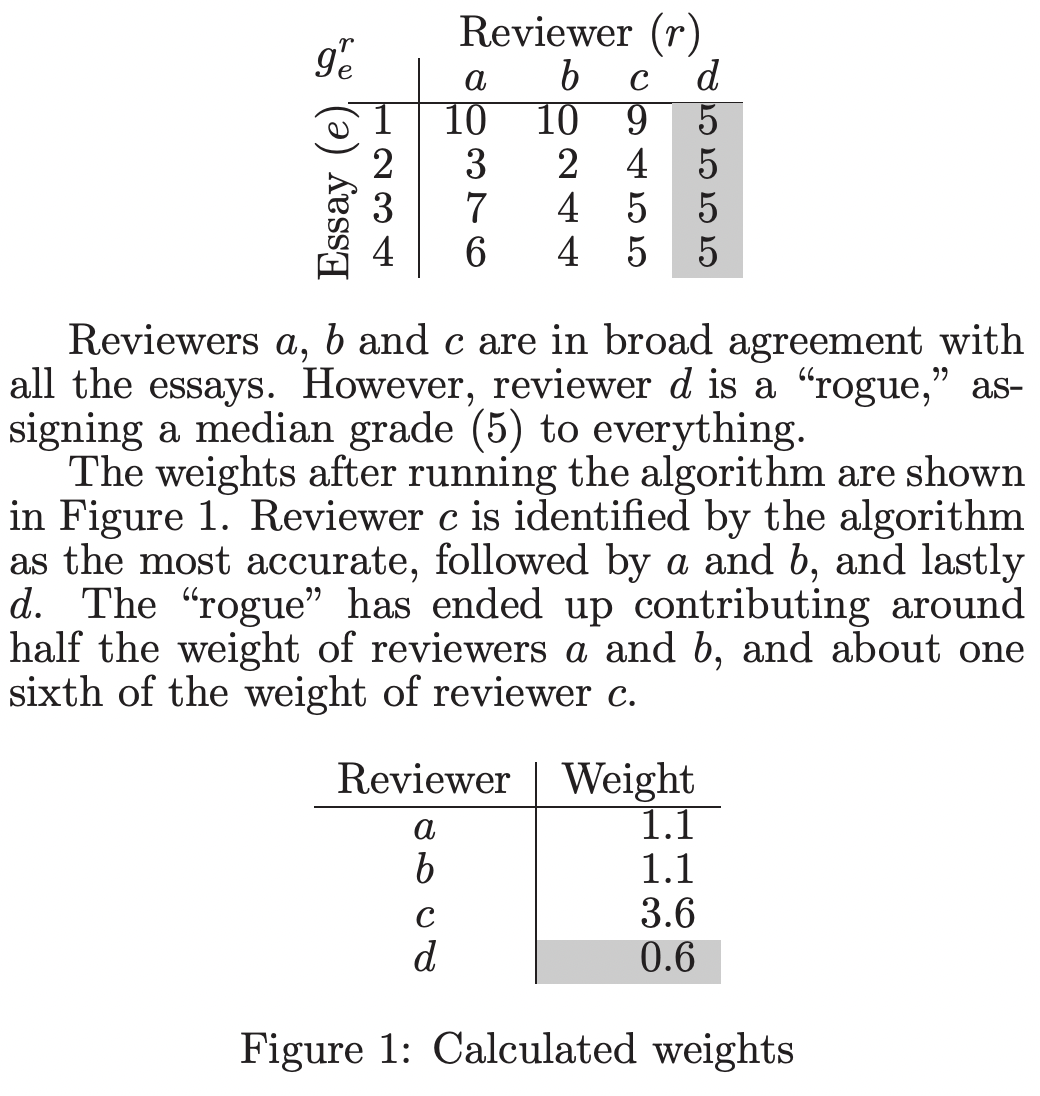

The Hamer algorithm takes the set of assignment grades given by each reviewer, plus any existing reputation weights for those reviewers (optional), and computes a reputation weight for each reviewer. These weights indicate how accurate and reliable each reviewer is. For example, a reviewer with a reputation weight of 3.0 is considered more accurate and reliable than a reviewer with a weight of 0.5. The following is an example from the paper [1] that describes the Hamer algorithm:

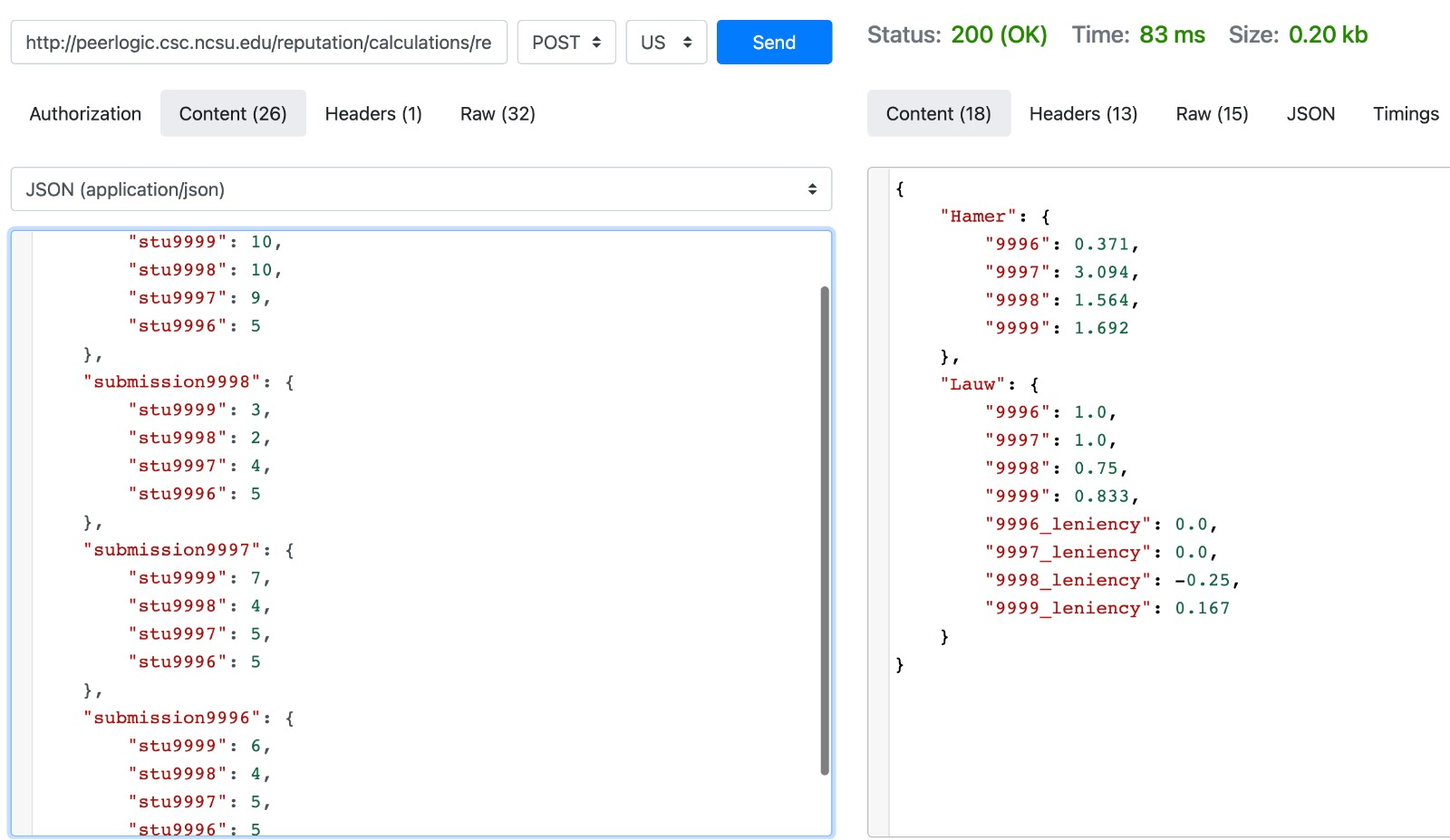

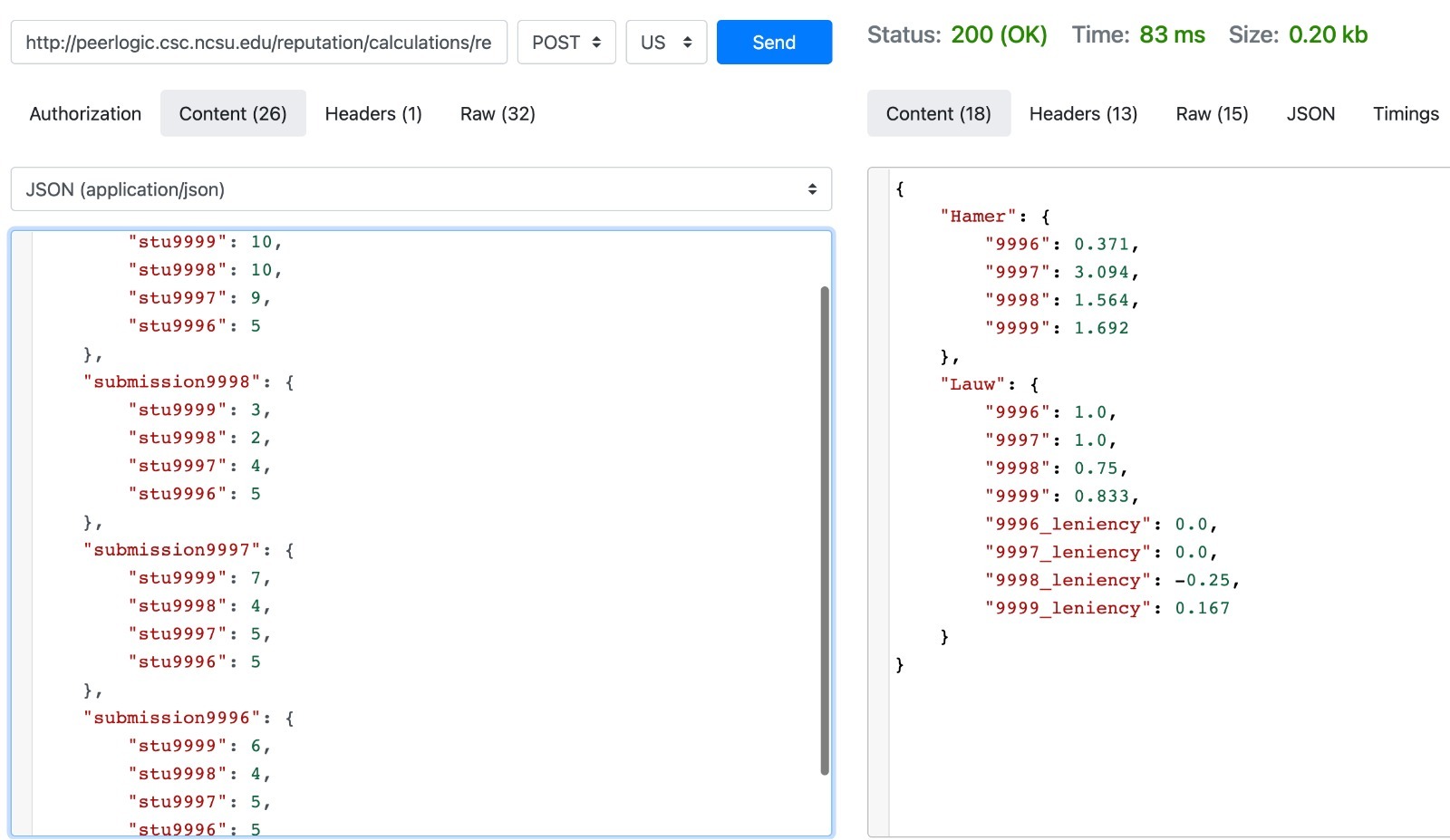
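As a hedged illustration of how an instructor might use these weights, the sketch below combines the scores one submission received into a reputation-weighted mean. The weighted mean, the function name `weighted_grade`, the reviewer IDs, and the default weight of 1 for reviewers without a reputation are assumptions for illustration, not Expertiza or Peerlogic code.

```python
def weighted_grade(scores, weights, default_weight=1.0):
    """Reputation-weighted mean of the peer scores one submission received.

    scores         -- {reviewer_id: score}
    weights        -- {reviewer_id: reputation weight}
    default_weight -- weight for reviewers with no reputation yet (assumed 1,
                      matching the initial weight used in our Python script)
    """
    weighted_sum = sum(weights.get(r, default_weight) * s for r, s in scores.items())
    weight_total = sum(weights.get(r, default_weight) for r in scores)
    return weighted_sum / weight_total


# Hypothetical reviewers: "alice" is reliable (3.0), "bob" is not (0.5).
print(weighted_grade({"alice": 10, "bob": 4}, {"alice": 3.0, "bob": 0.5}))
```

Here the combined grade is about 9.14, close to the reliable reviewer's 10, whereas the unweighted mean would be 7.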

This algorithm is currently deployed on the following web server: http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms. To use the algorithm, send a POST request to the Peerlogic URL with a JSON body listing each assignment, the grades it received, and the reviewer who gave each grade. The server then responds with JSON containing the corresponding Hamer reputation weights. The following is an example of a POST request to the Peerlogic URL that returns Hamer reputation values:
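The original example request is not reproduced in this text. As an illustration under stated assumptions, a request of this kind can be sketched in Python: `build_hamer_request` is a hypothetical helper, the payload layout follows the `INPUTS` hash in our RSpec tests, and the `"Hamer"` response key is taken from those tests. The request is only built here, not sent.

```python
import json
import urllib.request

PEERLOGIC_URL = "http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms"


def build_hamer_request(grades_by_submission, url=PEERLOGIC_URL):
    """Build (but do not send) a JSON POST request for the reputation service.

    grades_by_submission -- {submission_id: {reviewer_id: grade}}
    """
    body = json.dumps(grades_by_submission).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Two submissions, each scored by two reviewers (hypothetical IDs).
request = build_hamer_request({
    "submission1": {"stu1": 10, "stu2": 9},
    "submission2": {"stu1": 4, "stu2": 5},
})

# To actually call the service (requires network access):
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read())["Hamer"])
```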

Objectives

- Calculate reputation scores based on the paper "Pluggable reputation systems for peer review: A web-service approach"

- Verify the accuracy of the Hamer values returned by the reputation web server at http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms.

- Create a mock web server that returns the correct Hamer values, in case the reputation web server's Hamer algorithm returns incorrect values.

Files Involved

- reputation_web_server_hamer.rb

- reputation_mock_web_server_hamer.rb

Mentor

Ed Gehringer, efg@ncsu.edu

Team Members

- Joshua Lin (jlin36@ncsu.edu)

- Muhammet Mustafa Olmez (molmez@ncsu.edu)

- Soumyadeep Chatterjee (schatte5@ncsu.edu)

Algorithms

Reputation systems may take various factors into account:

- Does a reviewer assign scores that are similar to scores assigned by the instructor (on work that they both grade)?

- Does a reviewer assign scores that match those assigned by other reviewers?

- Does the reviewer assign different scores to different work?

- How competent has the reviewer been on other work done for the class?

Two algorithms are used: the Hamer-peer algorithm has the lowest maximum absolute bias, and the Lauw-peer algorithm has the lowest overall bias. From the instructor's perspective, this indicates that expert grading may not be necessary for further assignments of this kind. The article (https://ieeexplore.ieee.org/abstract/document/7344292) also reports a case where the overall bias is a little higher but the maximum absolute bias is very high (more than 20). This indicates that for future similar courses, the instructor can trust most students' peer grading but should be aware that students may give inflated grades, so spot-checking is necessary. In another case, the overall bias is low (negative): the students gave grades at least 16 points lower than the expert grades. This may be because more training is needed or because the review rubric is inadequate. These results suggest that for future courses of this kind, the instructor cannot trust the students' grades, and expert grades are still necessary.

The main difference between the Hamer-peer and Lauw-peer algorithms is that the Lauw-peer algorithm keeps track of each reviewer's leniency ("bias"), which can be either positive or negative. A positive leniency indicates that the reviewer tends to give higher scores than average. Additionally, Hamer's algorithm produces reputation values in the range (0,∞), while Lauw's algorithm produces values in [0,1].

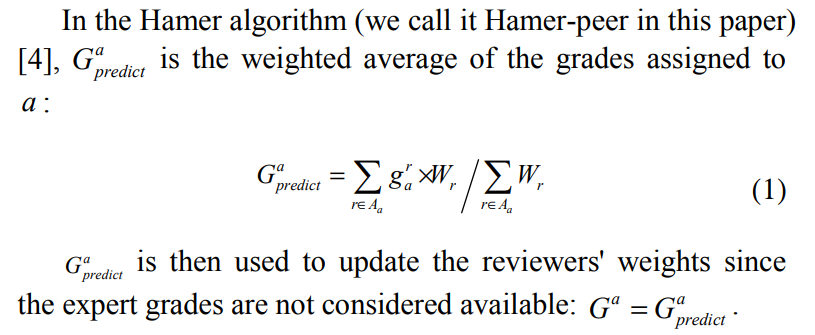

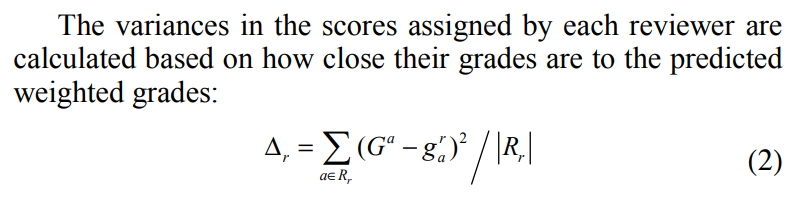

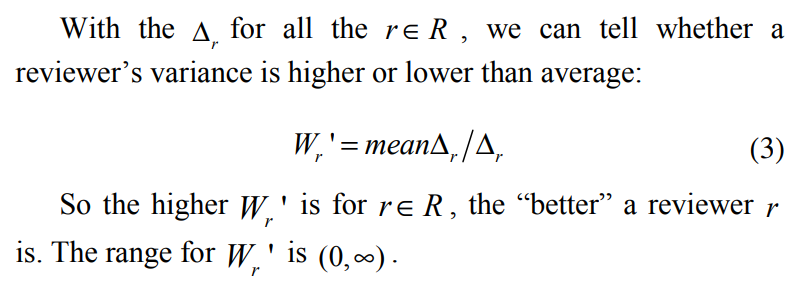

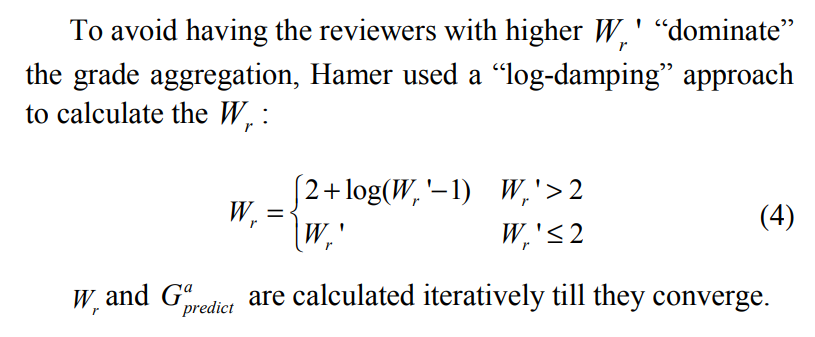
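To make the leniency distinction concrete, here is a simplified sketch. It is not the Lauw-peer formula: it only contrasts the squared deviation that our Hamer script uses (delta R) with a signed average deviation, where a positive value means a reviewer grades above the per-assignment mean. The function names are hypothetical.

```python
def assignment_means(reviews):
    """Plain mean grade of each assignment; reviews[i][j] is reviewer i's grade for assignment j."""
    num_reviewers = len(reviews)
    return [sum(row[j] for row in reviews) / num_reviewers for j in range(len(reviews[0]))]


def squared_deviation(reviews):
    """Mean squared distance from the assignment means (the delta R of our Hamer script)."""
    means = assignment_means(reviews)
    return [sum((row[j] - m) ** 2 for j, m in enumerate(means)) / len(means) for row in reviews]


def signed_leniency(reviews):
    """Mean signed distance from the assignment means: > 0 grades above average, < 0 below.

    Simplified illustration only; this is NOT the Lauw-peer leniency formula.
    """
    means = assignment_means(reviews)
    return [sum(row[j] - m for j, m in enumerate(means)) / len(means) for row in reviews]


# Grade matrix from the Initial Testing Plan
reviews = [[5, 4, 4, 3, 2], [5, 3, 4, 4, 2], [4, 3, 4, 3, 2]]
print(squared_deviation(reviews))
print(signed_leniency(reviews))
```

On this matrix all three reviewers have the same squared deviation (2/15), so a squared-error measure cannot tell them apart, while the signed measure places Reviewers 1 and 2 slightly above the average (+2/15) and Reviewer 3 below it (-4/15).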

Hamer Algorithm

We implemented the steps of this algorithm for our analytical validation, which is described in the section below.
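Written as formulas, the steps performed by our Python script in the Initial Testing Plan are as follows. Here m is the number of reviewers, n the number of assignments, and r_ij the grade reviewer i gave assignment j. Every reviewer starts from weight 1, so each assignment grade is a plain mean. This notation mirrors our script; the paper's own notation may differ.

```latex
\begin{aligned}
\bar{g}_j &= \frac{1}{m}\sum_{i=1}^{m} r_{ij} \\
\Delta_i &= \frac{1}{n}\sum_{j=1}^{n}\bigl(r_{ij} - \bar{g}_j\bigr)^2 \\
\bar{\Delta} &= \frac{1}{m}\sum_{i=1}^{m}\Delta_i \\
w'_i &= \frac{\bar{\Delta}}{\Delta_i} \\
w_i &= \begin{cases} w'_i, & w'_i \le 2 \\ 2 + \ln\left(w'_i - 1\right), & w'_i > 2 \end{cases}
\end{aligned}
```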

Test Plan - Initial Phase

In the initial phase, we were tasked with testing the reputation_web_service_controller. The work of a previous project team was hampered because the web service (Peerlogic) was unavailable at that time. We were able to access the Peerlogic server only at a late stage. Therefore, our plan at this point was to run a series of unit tests to check that the web service communicated correctly with Expertiza.

Initial Testing Plan

1. Since our focus in this phase was to conduct exploratory testing of the system, we wrote some conventional tests to examine Peerlogic functionality. At this stage, we realized that Peerlogic would only accept and respond with JSON data.

2. Therefore, a natural next step was to prepare input data that simulated a general input scenario for the system, comprising:

- a. Each reviewer has assigned scores to 3 reviewees (fellow students)

- b. There are a total of 3 reviewers, who have all graded each other in some fashion for 5 assignments

- c. Convert this scenario to JSON

- d. Write code to POST this to Peerlogic and receive a response

- e. Parse through this response to obtain the output values of the Hamer Algorithm, as calculated by Peerlogic.

- f. This output would be compared against the expected values we calculated from the research paper on the Hamer algorithm
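Step c can be sketched in Python as below. The sketch assumes the same {submission: {reviewer: grade}} layout as the `INPUTS` hash in our RSpec tests. `reviews_to_payload` and the `stuN`/`submissionN` IDs are hypothetical placeholders.

```python
import json


def reviews_to_payload(reviews, reviewer_ids, submission_ids):
    """Convert reviews[i][j] (reviewer i's grade for submission j) into a
    {submission: {reviewer: grade}} mapping ready to be serialized as JSON."""
    payload = {}
    for j, submission in enumerate(submission_ids):
        payload[submission] = {
            reviewer: reviews[i][j] for i, reviewer in enumerate(reviewer_ids)
        }
    return payload


# The 3-reviewer, 5-assignment scenario; the IDs are hypothetical placeholders.
reviews = [[5, 4, 4, 3, 2], [5, 3, 4, 4, 2], [4, 3, 4, 3, 2]]
reviewers = ["stu1", "stu2", "stu3"]
submissions = [f"submission{j + 1}" for j in range(5)]
print(json.dumps(reviews_to_payload(reviews, reviewers, submissions), indent=2))
```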

The code for the last step is shown below

```python
import math

# Parameters: reviews list
# reviews - a list of each reviewer's grades for each assignment.
# Each index of reviews is a reviewer; each index in reviews[i] is that
# reviewer's grade for one assignment.
#
# Example: reviews = [[5,4,4,3,2],[5,3,4,4,2],[4,3,4,3,2]] corresponds to:
#
# Essay        Reviewer1  Reviewer2  Reviewer3
# Assignment1  5          5          4
# Assignment2  4          3          3
# Assignment3  4          4          4
# Assignment4  3          4          3
# Assignment5  2          2          2

reviews = [[5, 4, 4, 3, 2], [5, 3, 4, 4, 2], [4, 3, 4, 3, 2]]

numReviewers = len(reviews)   # number of reviewers
numAssig = len(reviews[0])    # number of assignments

grades = []       # average grade for each assignment
deltaR = []       # mean squared deviation of each reviewer from the averages
weightPrime = []  # unscaled weight: average deltaR / reviewer's deltaR
weight = []       # reviewer's reputation weight

# Average grade per assignment (every reviewer starts with weight 1,
# so the weighted average reduces to a plain mean)
for numAssigIndex in range(numAssig):
    assignmentGradeAverage = 0
    for numReviewerIndex in range(numReviewers):
        assignmentGradeAverage += reviews[numReviewerIndex][numAssigIndex]
    grades.append(assignmentGradeAverage / numReviewers)
print("Average Grades:")
print(grades)

# delta R: mean squared difference between a reviewer's grades and the averages
for numReviewerIndex in range(numReviewers):
    reviewerDeltaR = 0
    for assignmentIndex, reviewGrade in enumerate(reviews[numReviewerIndex]):
        reviewerDeltaR += (reviewGrade - grades[assignmentIndex]) ** 2
    reviewerDeltaR /= numAssig
    deltaR.append(reviewerDeltaR)
print("deltaR:")
print(deltaR)

# Average delta R over all reviewers
averageDeltaR = sum(deltaR) / numReviewers
print("averageDeltaR:")
print(averageDeltaR)

# Weight prime. Note: this raises ZeroDivisionError if a reviewer's deltaR is 0,
# i.e. the reviewer's grades equal the averages on every assignment.
for reviewerDeltaR in deltaR:
    weightPrime.append(averageDeltaR / reviewerDeltaR)
print("weightPrime:")
print(weightPrime)

# Reputation weight: values above 2 are damped logarithmically
for reviewerWeightPrime in weightPrime:
    if reviewerWeightPrime <= 2:
        weight.append(reviewerWeightPrime)
    else:
        weight.append(2 + math.log(reviewerWeightPrime - 1))

print("reputation per reviewer:")
for i, reviewerWeight in enumerate(weight, start=1):
    print("Reputation of Reviewer", i, round(reviewerWeight, 1))
```

Output

```
Reputation of Reviewer 1 1.0
Reputation of Reviewer 2 1.0
Reputation of Reviewer 3 1.0
```

Initial Testing Conclusions

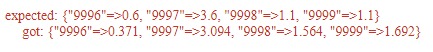

The results that are actually received from Peerlogic are presented below:

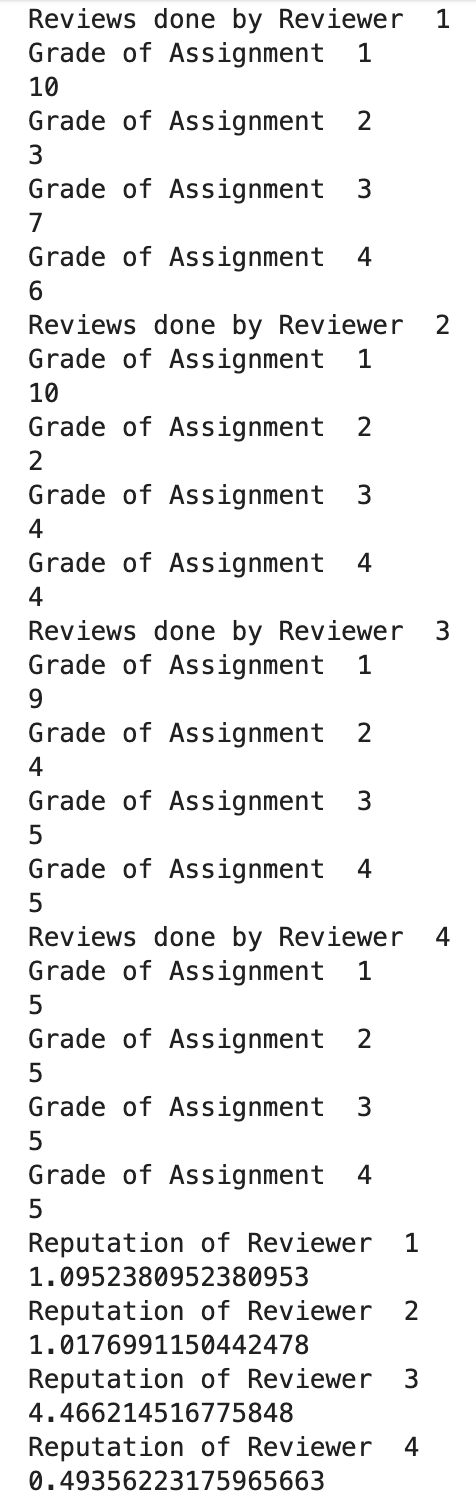

The results from our Python re-creation of the Hamer algorithm are as follows:

As you can see, the Peerlogic results do NOT match the expected results.

Therefore, our first conclusion is that the Peerlogic web service is implemented incorrectly.

This is also documented in the Conclusion section.

Changes to Project Scope

Test Plan - Second Phase

We followed the testing approach recommended by our project mentor, Dr. Gehringer: we used an external program to send requests to a simulated service and inspected the returned data. We chose this approach because the program under test was not running and could not be inspected directly. We also added test cases that mock a web service and assert the output, in two ways:

- a. In the first code snippet, we send JSON to a web service that returns the correct Hamer output, as Peerlogic should once it is fixed.

- b. As a WebMock stub inside an RSpec test, in the second snippet below.

The tests below send the same JSON payload to both Peerlogic (http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms) and the mock.

When we ran these tests against Peerlogic and the mock, the values returned by Peerlogic did not match the expected values, as can be seen in the picture, so the current web service is not correct.

The mock's output is the result this project aims for.

Proof of working:

Test Code Snippet:

```ruby
require "net/http"
require "json"

# Grades given by each reviewer (stuXXXX) to each submission
INPUTS = {
  "submission9999": { "stu9999": 10, "stu9998": 10, "stu9997": 9, "stu9996": 5 },
  "submission9998": { "stu9999": 3, "stu9998": 2, "stu9997": 4, "stu9996": 5 },
  "submission9997": { "stu9999": 7, "stu9998": 4, "stu9997": 5, "stu9996": 5 },
  "submission9996": { "stu9999": 6, "stu9998": 4, "stu9997": 5, "stu9996": 5 }
}.to_json

# Expected Hamer reputation weights, keyed by reviewer number
EXPECTED = {
  "Hamer": { "9996": 0.6, "9997": 3.6, "9998": 1.1, "9999": 1.1 }
}.to_json

describe "Expertiza" do
  # Sends INPUTS to the live Peerlogic service
  it "should return the correct Hamer calculation" do
    uri = URI("http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms")
    req = Net::HTTP::Post.new(uri)
    req.content_type = "application/json"
    req.body = INPUTS
    response = Net::HTTP.start(uri.hostname, uri.port) do |http|
      http.request(req)
    end
    expect(JSON.parse(response.body)["Hamer"]).to eq(JSON.parse(EXPECTED)["Hamer"])
  end
end

describe "Expertiza Web Service" do
  # Sends the same INPUTS to the Postman mock server, which returns the expected values
  it "should return the correct Hamer calculation" do
    uri = URI("https://4dfaead4-a747-4be4-8683-3b10d1d2e0c0.mock.pstmn.io/reputation_web_service/default")
    req = Net::HTTP::Post.new(uri)
    req.content_type = "application/json"
    req.body = INPUTS
    response = Net::HTTP.start(uri.hostname, uri.port, use_ssl: uri.scheme == "https") do |http|
      http.request(req)
    end
    expect(JSON.parse(response.body)["Hamer"]).to eq(JSON.parse(EXPECTED)["Hamer"])
  end
end
```
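To inspect the mismatch by code rather than only by eye, a small Python helper can list the reviewers whose returned weight differs from `EXPECTED`. `hamer_mismatches` and the 0.05 tolerance are assumptions, and the sample response below is made up for illustration; it is not Peerlogic output.

```python
import json


def hamer_mismatches(returned_json, expected_json, tolerance=0.05):
    """Return (reviewer, expected, returned) for every reviewer whose Hamer weight
    is missing or differs from the expected value by more than `tolerance`.

    Both arguments are JSON strings shaped like {"Hamer": {reviewer: weight}}.
    The 0.05 tolerance is an assumption based on values rounded to one decimal.
    """
    returned = json.loads(returned_json).get("Hamer", {})
    expected = json.loads(expected_json)["Hamer"]
    mismatches = []
    for reviewer, want in sorted(expected.items()):
        got = returned.get(reviewer)
        if got is None or abs(got - want) > tolerance:
            mismatches.append((reviewer, want, got))
    return mismatches


EXPECTED = '{"Hamer": {"9996": 0.6, "9997": 3.6, "9998": 1.1, "9999": 1.1}}'
# A made-up response, for illustration only: one wrong value, one missing reviewer.
returned = '{"Hamer": {"9996": 0.6, "9997": 2.0, "9998": 1.1}}'
for reviewer, want, got in hamer_mismatches(returned, EXPECTED):
    print(f"reviewer {reviewer}: expected {want}, got {got}")
```

Unlike the exact `eq` comparison in the RSpec tests, this reports which reviewers disagree, which makes a failing run easier to diagnose.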

In addition, this plan lets us test the current functionality by treating the system as a black box, and lets us draw conclusions about the accuracy of the implementation as a whole.

Therefore, the code below shows this plan in action, with the Peerlogic endpoint stubbed by WebMock. The values returned by the algorithm are to be inspected both by code and by hand.

```ruby
require "net/http"
require "json"
require "webmock/rspec" # gem install webmock -v 2.2.0

# Block all real HTTP connections except those to localhost
WebMock.disable_net_connect!(allow_localhost: true)

# Grades given by each reviewer (stuXXXX) to each submission
INPUTS = {
  "submission9999": { "stu9999": 10, "stu9998": 10, "stu9997": 9, "stu9996": 5 },
  "submission9998": { "stu9999": 3, "stu9998": 2, "stu9997": 4, "stu9996": 5 },
  "submission9997": { "stu9999": 7, "stu9998": 4, "stu9997": 5, "stu9996": 5 },
  "submission9996": { "stu9999": 6, "stu9998": 4, "stu9997": 5, "stu9996": 5 }
}.to_json

# Expected Hamer reputation weights, keyed by reviewer number
EXPECTED = {
  "Hamer": { "9996": 0.6, "9997": 3.6, "9998": 1.1, "9999": 1.1 }
}.to_json

describe "Expertiza" do
  before(:each) do
    # Any POST to the Peerlogic host returns EXPECTED instead of reaching the network
    stub_request(:post, /peerlogic.csc.ncsu.edu/).
      to_return(status: 200, body: EXPECTED, headers: {})
  end

  it "should return the correct Hamer calculation" do
    uri = URI("http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms")
    req = Net::HTTP::Post.new(uri)
    req.content_type = "application/json"
    req.body = INPUTS
    response = Net::HTTP.start(uri.hostname, uri.port, use_ssl: uri.scheme == "https") do |http|
      http.request(req)
    end
    expect(JSON.parse(response.body)["Hamer"]).to eq(JSON.parse(EXPECTED)["Hamer"])
  end
end
```
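For comparison, the same stubbing idea can be sketched in Python with `unittest.mock` from the standard library. Here `urllib.request.urlopen` is patched so that the request never leaves the process and the stub returns `EXPECTED`. `fetch_hamer` and `test_fetch_hamer_with_stub` are hypothetical names.

```python
import json
import urllib.request
from unittest import mock

PEERLOGIC_URL = "http://peerlogic.csc.ncsu.edu/reputation/calculations/reputation_algorithms"
EXPECTED = {"Hamer": {"9996": 0.6, "9997": 3.6, "9998": 1.1, "9999": 1.1}}


def fetch_hamer(payload, url=PEERLOGIC_URL):
    """POST grades as JSON and return the "Hamer" part of the JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["Hamer"]


def test_fetch_hamer_with_stub():
    # Replace urlopen so no network traffic happens (like WebMock's stub_request).
    with mock.patch("urllib.request.urlopen") as fake_urlopen:
        fake_response = fake_urlopen.return_value.__enter__.return_value
        fake_response.read.return_value = json.dumps(EXPECTED).encode("utf-8")
        result = fetch_hamer({"submission9999": {"stu9999": 10}})
    assert result == EXPECTED["Hamer"]
    # The stub also records the request that would have been sent.
    sent_request = fake_urlopen.call_args[0][0]
    assert sent_request.get_method() == "POST"
```

As with the WebMock test, this checks only the request and response plumbing: because the stub returns `EXPECTED`, it would pass even if the Hamer calculation on the real server were wrong.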

Edge Cases & Scenarios

We present these scenarios as possible test cases for an accurately working Peerlogic webservice.

1) Reviewer gives all max scores

2) Reviewer gives all min scores

3) Reviewer completes no review (alternative scenario: reviewer gives max scores even with no inputs)
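These scenarios can be turned into request bodies mechanically. The sketch below uses a hypothetical helper, `edge_case_inputs`, to derive all three from the `INPUTS` data used in the RSpec tests. The 0 to 10 score range is an assumption based on that data.

```python
import copy
import json


def edge_case_inputs(base, reviewer, max_score=10, min_score=0):
    """Derive the three edge-case inputs from a {submission: {reviewer: score}} mapping.

    max_score and min_score are assumptions; the sample INPUTS only show scores up to 10.
    """
    all_max, all_min, no_review = (copy.deepcopy(base) for _ in range(3))
    for submission, scores in base.items():
        if reviewer in scores:
            all_max[submission][reviewer] = max_score
            all_min[submission][reviewer] = min_score
            del no_review[submission][reviewer]
    return {"all_max": all_max, "all_min": all_min, "no_review": no_review}


# Same data as the INPUTS hash in the RSpec tests
INPUTS = {
    "submission9999": {"stu9999": 10, "stu9998": 10, "stu9997": 9, "stu9996": 5},
    "submission9998": {"stu9999": 3, "stu9998": 2, "stu9997": 4, "stu9996": 5},
    "submission9997": {"stu9999": 7, "stu9998": 4, "stu9997": 5, "stu9996": 5},
    "submission9996": {"stu9999": 6, "stu9998": 4, "stu9997": 5, "stu9996": 5},
}

cases = edge_case_inputs(INPUTS, "stu9996")
for name, payload in cases.items():
    print(name, json.dumps(payload))
```

Each payload could then be serialized with `json.dumps` and sent in place of `INPUTS`.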

These have not been implemented, since there is no point in testing a system further while its positive flows do not work. However, the code in the Initial Phase section can be used to calculate correct responses analytically for future assertions. We have provided outputs to these scenarios below:

Conclusion

As a team, we worked out the algorithms and their applications and wrote several test scenarios. However, we did not have the chance to work on the web service itself, because it fails with module errors: the error we encountered was an undefined method `strip` in the Reputation Web Service Controller. Although it sometimes works on the Expertiza team's side, we were never able to see the web service working. We also wrote Python code to simulate the algorithm.

In the code written to simulate the hamer.rb algorithm as described in "A Method of Automatic Grade Calibration in Peer Assessment" by John Hamer, Kenneth T.K. Ma, and Hugh H.F. Kwong (https://crpit.scem.westernsydney.edu.au/confpapers/CRPITV42Hamer.pdf), we take a list of reviewers and their grades for each reviewed assignment and compute each reviewer's reputation weight. Since the paper does not specify an initial weight for first-time reviewers, our code gives every first-time reviewer an initial weight of 1. The code does not yet account for reviewers who already have reputation weights; this will be added soon. We followed the algorithm in the paper to the letter, but the example output values given in the paper still did not match what we computed by hand and by code. Either we missed something, or the algorithm has been changed. Finally, when we tested Peerlogic and the mock, the values returned by Peerlogic did not match the expected values, as can be seen in the picture, so the current web service is not correct. The expected values are the result this project aims for.
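The paragraph above notes that existing reputation weights are not yet used. As a hedged sketch of how they could be added, `hamer_pass` (a hypothetical name) repeats the script's steps but computes each assignment grade as a weighted mean using prior weights. This weighting is our assumption, not taken from hamer.rb or verified against the paper. Like the script, it assumes every reviewer graded every assignment.

```python
import math


def hamer_pass(reviews, prior_weights=None):
    """One pass of the Hamer steps from our script, optionally seeded with existing weights.

    reviews       -- reviews[i][j] is reviewer i's grade for assignment j
                     (every reviewer is assumed to grade every assignment)
    prior_weights -- existing reputation weights, one per reviewer;
                     None gives every reviewer the initial weight of 1
    """
    num_reviewers = len(reviews)
    num_assignments = len(reviews[0])
    weights = [1.0] * num_reviewers if prior_weights is None else list(prior_weights)
    total_weight = sum(weights)

    # Weighted average grade per assignment (a plain mean when all weights are 1)
    grades = [
        sum(weights[i] * reviews[i][j] for i in range(num_reviewers)) / total_weight
        for j in range(num_assignments)
    ]
    # delta R: mean squared deviation of each reviewer from those grades
    delta = [
        sum((reviews[i][j] - grades[j]) ** 2 for j in range(num_assignments)) / num_assignments
        for i in range(num_reviewers)
    ]
    average_delta = sum(delta) / num_reviewers

    new_weights = []
    for d in delta:
        if d == 0:
            # Hypothetical handling: the original script would raise ZeroDivisionError here
            raise ValueError("reviewer matches the consensus exactly; weight is undefined")
        w_prime = average_delta / d
        # Damp weights above 2 logarithmically, as in the script
        new_weights.append(w_prime if w_prime <= 2 else 2 + math.log(w_prime - 1))
    return new_weights


reviews = [[5, 4, 4, 3, 2], [5, 3, 4, 4, 2], [4, 3, 4, 3, 2]]
print([round(w, 2) for w in hamer_pass(reviews)])
print([round(w, 2) for w in hamer_pass(reviews, prior_weights=[2.0, 1.0, 1.0])])
```

With no prior weights, all three reviewers get 1.0, matching the script's output. With `prior_weights=[2.0, 1.0, 1.0]`, the assignment grades move toward Reviewer 1, whose weight rises to about 1.89, while Reviewers 2 and 3 fall to about 0.81.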

GitHub Links

Link to Expertiza repository: here

Link to the forked repository: here

Link to pull request: here

References

1. Expertiza on GitHub (https://github.com/expertiza/expertiza)

2. The live Expertiza website (http://expertiza.ncsu.edu/)

3. Pluggable reputation systems for peer review: A web-service approach (https://doi.org/10.1109/FIE.2015.7344292)