CSC/ECE 517 Spring 2019 - Project E1931. Conflict notification: Difference between revisions

No edit summary |

|||

| (32 intermediate revisions by 3 users not shown) | |||

| Line 3: | Line 3: | ||

=Problem Statement= | =Problem Statement= | ||

Current conflict notification sends an email to the instructor whenever two reviews for the same submission differ | Current conflict notification sends an email to the instructor whenever two reviews for the same submission differ “significantly” on their scoring (the threshold is specified in the “Notification limit” on the Rubrics tab of assignment creation). Right now an email is sent at any such time one of these conflicts happen, and it links to the review or submission which initiated the notification. It gives the link in raw HTML, but it should give it as a link associated with text, such as the text “this new review” linking to the page that shows the review.The email send to the instructor should also link to page that shows data on each of the reviews done on this team. | ||

=Existing Feature Description= | =Existing Feature Description= | ||

| Line 11: | Line 11: | ||

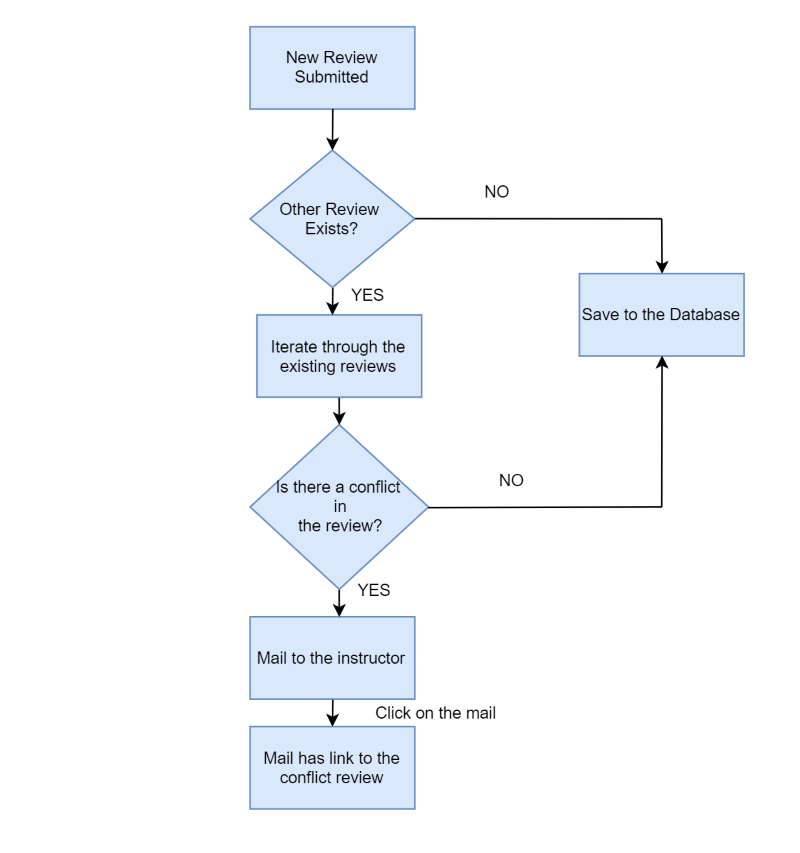

[[File:Flowwork.PNG|center]] | [[File:Flowwork.PNG|center]] | ||

Whenever a new review is submitted, it is compared with the average of the previously submitted reviews. If the difference between the new submission and existing average is more than the threshold limit set, then a mail is sent to the instructor. | Whenever a new review is submitted, it is compared with the average of the previously submitted reviews. If the difference between the new submission and existing average is more than the threshold limit set, then a mail is sent to the instructor.With every review submitted for an assignment of a particular student, the average is updated.The mail sent to the instructor contains the links to the conflicted review and a summary of the reviews. | ||

With every review submitted for an assignment of a particular student, the average is updated. | |||

==Flaws with the existing Implementation== | ==Flaws with the existing Implementation== | ||

===No view to analyze the | ===No view exists to analyze the conflict report=== | ||

Currently whenever the conflict happens,a summary link is sent to the instructor which contains the score of all the | Currently whenever the conflict happens,a summary link is sent to the instructor which contains the score of all the reviews but there is no view where the instructor can see all the conflicts and analyze them. | ||

===Incorrect email message links=== | ===Incorrect email message links=== | ||

| Line 24: | Line 23: | ||

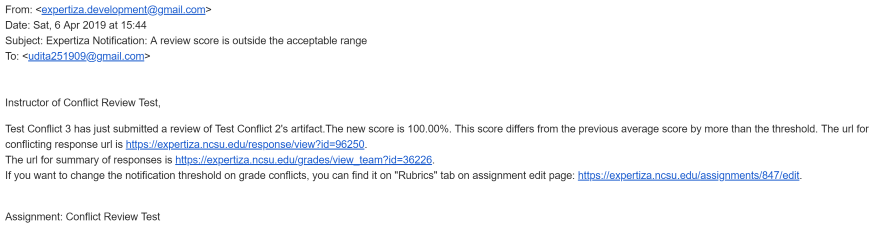

[[File:EMAIL.png|center]] | [[File:EMAIL.png|center]] | ||

*Conflicting Review which triggered the mail | |||

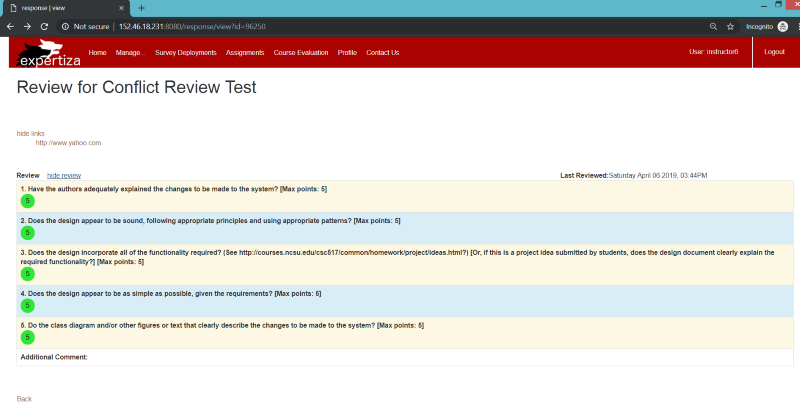

[[File:Score conflicting.png|center]] | |||

=What needs to be done= | |||

The scope of this project is to send an email notification to instructor which will contain links to the conflicting review, summary link, and a link to report which can be used for analyzing. The new report will have the information like the Team(having conflicts), the standard deviation for the team review score and pictorial representation of all the review scores. <br> | |||

The email sent to the instructor should link to a page that shows the data on each of the reviews done on this team: | |||

* The name of the team. | |||

* The members (user-IDs and/or names) | |||

* One line for each review received.In this line would be | |||

# The name of the person (or team) that submitted the review, which would be hyperlinked to the review itself | |||

# The score given for the review | |||

# A bar chart showing pictorial view | |||

* The average score received from reviewers. | |||

* The standard deviation of the score received from reviewers. | |||

* Review scores that are outside some “tolerance” limit should be highlighted, e.g., boldfaced or shown in a different color.Probably the tolerance should be, perhaps, one or two standard deviations from the mean. | |||

=Implementation= | |||

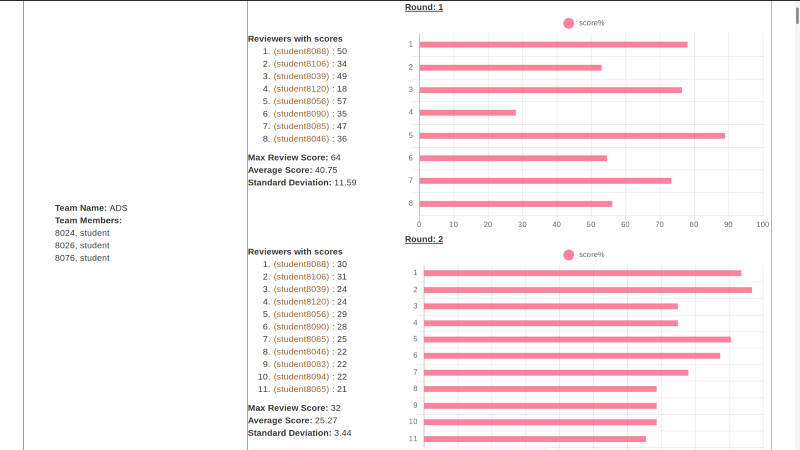

*'''Feature 1:''' New page to show the conflict report | |||

A new view page of the report which will have the following information:-<br> | |||

# The name of the team. | |||

# The members of the team. | |||

# The name of the reviewer which would be hyperlinked to the review itself. | |||

# The score given for the review. | |||

# A bar chart showing pictorial view of all the reviews for a particular team. | |||

# The average score received from reviewers. | |||

# The standard deviation of the score received from reviewers. | |||

# Conflicting reviewers are highlighted.These are those which are two standard deviations away from the mean. | |||

This view have a report of all the teams on one page.This report link is added to the mail that is already being sent whenever some conflict happens.This report page will be also accessible from the reports drop-down in the instructor view. | |||

*A new partial is created for this view - | |||

* | <pre> | ||

[[File: | <div style="color:green;text-align:adjust"> | ||

Note: <br> | |||

X- axis represents precentage score of the team<br> | |||

Y- axis represents the reviewers <br> | |||

Highlighted reviewers are outside the tolerance limit<br> | |||

</div><br><br> | |||

<table id="myTable" border="1px solid black" margin-top="-9px" width="100%" cellspacing='0' cellpadding='2' border='0'> | |||

<thead> | |||

<tr bgcolor='#CCCCCC'> | |||

<!-- class value decides whether the column should be sortable or not --> | |||

<th width="30%" class="sorter-false" border="1px black solid" style="text-align:center">Team <span></span></th> | |||

<th width="70%" class="sorter-false" border="1px black solid" style="text-align:center" >Reviewers/Metrics <span></span></th> | |||

</tr> | |||

</thead> | |||

<tbody> | |||

<% max_scores= max_assignment_review_score_per_round(@id) %> | |||

<%@reviewers.keys.each do |team_name| %> | |||

<tr> | |||

<td style="padding-left: 50px;" > | |||

<% members = team_members(team_name, @id)%> | |||

<strong>Team Name: </strong><%= team_name %><br> | |||

<strong>Team Members: </strong><br> | |||

<% members.each do |member|%> | |||

<%=User.find(member.user_id).fullname %><br> | |||

<% end %> | |||

</td> | |||

<td> | |||

<%= render 'review_conflict_metric' , team_name: team_name , max_scores: max_scores %> | |||

</td> | |||

</tr> | |||

<% end %> | |||

</tbody> | |||

</table> | |||

</pre> | |||

* Partial for the display for reviewers,scores and metrics | |||

<pre> | |||

<table width="100%" border="0" > | |||

<%question_answers = review_score_for_team(@id, team_name) %> | |||

<% i = 1 %> | |||

<% question_answers.each do |question_answer| %> | |||

<% average = average_of_round(question_answer) %> | |||

<% std = std_of_round(average,question_answer) %> | |||

<% upper_tolerance_limit = (average+(2*std)).round(2) %> | |||

<% lower_tolerance_limit = (average-(2*std)).round(2) %> | |||

<tr> | |||

<td width="30%"> | |||

<div> | |||

<strong>Reviewers with scores</strong><br> | |||

<ol> | |||

<% question_answer.each do |reviewer, answer| %> | |||

<% if answer > upper_tolerance_limit or answer < lower_tolerance_limit %> | |||

<li><% response_id = response_id_review_conflict(reviewer, @teams[team_name], @id) %> | |||

<mark style="background-color:#FFFF00"> | |||

<%= link_to "("+@reviewers[team_name.to_s][reviewer.to_s].to_s+")", :controller => 'popup', :action => 'team_users_popup', :id => @teams[team_name], :id2 => response_id, :assignment_id=>@id %> | |||

<%= list_review_submissions(reviewer, @teams[team_name], response_id) %> | |||

<%= ": " + answer.to_s %></mark> | |||

</li> | |||

<% else %> | |||

<li><% response_id = response_id_review_conflict(reviewer, @teams[team_name], @id) %> | |||

<%= link_to "("+@reviewers[team_name.to_s][reviewer.to_s].to_s+")", :controller => 'popup', :action => 'team_users_popup', :id => @teams[team_name], :id2 => response_id, :assignment_id=>@id %> | |||

<%= list_review_submissions(reviewer, @teams[team_name], response_id) %> | |||

<%= ": " + answer.to_s %> | |||

</li> | |||

<% end %> | |||

<% end %> | |||

</ol> | |||

</div> | |||

<strong>Max Review Score: </strong><%=max_scores[i-1]%><br> | |||

<strong>Average Score: </strong><%= average.to_s %><br> | |||

<strong>Standard Deviation: </strong><%= std.to_s %><br><br> | |||

</td> | |||

<td> | |||

<div> | |||

<strong><u><%= "Round: " + i.to_s %></u></strong> | |||

<%= generate_score_chart(max_scores[i-1], question_answer) %> | |||

</div> | |||

</td> | |||

</tr> | |||

<% i+=1 %> | |||

<% end %> | |||

</table> | |||

</pre> | |||

*Sample view of the new report - | |||

[[File: Oodd report.PNG|center]] | |||

<br> | |||

* Method to get all the answers(scores) for a reviewee on an assignment for a particular round | |||

<pre> | |||

def self.answers_by_round_for_reviewee(assignment_id, reviewee_id,round) | |||

#get all answers for a reviewee on an assignment for a particular round | |||

question_answers = Answer.select("answers.answer, response_maps.reviewer_id") | |||

.joins("join responses on responses.id = answers.response_id") | |||

.joins("join response_maps on responses.map_id = response_maps.id") | |||

.where("response_maps.reviewed_object_id = ? and | |||

response_maps.reviewee_id = ? and | |||

responses.round = ?", assignment_id, reviewee_id,round) | |||

question_answers | |||

end | |||

</pre> | |||

* Method to get the maximum score for an assignment and for a particular round | |||

<pre> | |||

def max_score_of_assignment_per_round(assignment,round) | |||

rubric = get_questions_by_assignment(assignment) | |||

rubric_questions_used = rubric[assignment.varying_rubrics_by_round? ? round : 0] | |||

assignment_max_score_per_round = 0 | |||

rubric_questions_used.each do |q| | |||

next if q.type.eql?("SectionHeader") | |||

q_max_score = get_max_score_for_question(q) | |||

assignment_max_score_per_round += q_max_score | |||

end | |||

assignment_max_score_per_round | |||

end | |||

def max_assignment_review_score_per_round(reviewed_object_id) | |||

assignment = Assignment.find(reviewed_object_id) | |||

total_rounds = assignment.rounds_of_reviews | |||

review_max_scores = Array.new(total_rounds) | |||

(0..total_rounds-1).each do |round| | |||

review_max_scores[round] = SummaryHelper::Summary.new.max_score_of_assignment_per_round(assignment, round) | |||

end | |||

review_max_scores | |||

end | |||

</pre> | |||

* Method to create the bar chart showing the review scores per round | |||

<pre> | |||

def generate_score_chart(review_max_score, question_answer) | |||

scores = Array.new | |||

question_answer.each do |reviewer,answer| | |||

scores << ((answer.to_f/review_max_score.to_f)*100).round(2) | |||

end | |||

labels = (1..scores.length).to_a | |||

data = { | |||

labels: labels, | |||

datasets: [ | |||

{ | |||

label: "score%", | |||

backgroundColor: "rgba(255,99,132,0.8)", | |||

borderWidth: 1, | |||

data: scores, | |||

hoverBackgroundColor: "orange", | |||

yAxisID: "bar-y-axis1" | |||

} | |||

] | |||

} | |||

options = { | |||

legend: { | |||

position: 'top', | |||

labels: { | |||

usePointStyle: true | |||

} | |||

}, | |||

width: "125", | |||

height: "75", | |||

scales: { | |||

yAxes: [{ | |||

stacked: true, | |||

id: "bar-y-axis1", | |||

barThickness: 10 | |||

}, { | |||

display: false, | |||

stacked: true, | |||

id: "bar-y-axis2", | |||

barThickness: 15, | |||

type: 'category', | |||

categoryPercentage: 0.8, | |||

barPercentage: 0.9, | |||

gridLines: { | |||

offsetGridLines: true | |||

} | |||

}], | |||

xAxes: [{ | |||

stacked: false, | |||

ticks: { | |||

beginAtZero: true, | |||

stepSize: 10, | |||

max: 100 | |||

} | |||

}] | |||

} | |||

} | |||

horizontal_bar_chart data, options | |||

end | |||

</pre> | |||

* Method to get the team members | |||

<pre> | |||

def team_members(team_name, assignment_id) | |||

team_id = Team.select(:id).where(name: team_name) | |||

members = TeamsUser.where(team_id: team_id) | |||

members | |||

end | |||

</pre> | |||

* Method to find the standard deviation | |||

<pre> | |||

def std_of_round(average,question_answer) | |||

accum=0.0 | |||

i=0 | |||

question_answer.each do |reviewer,answer| | |||

accum+=(answer-average)**2 | |||

i+=1 | |||

end | |||

if i != 0 | |||

accum=Math.sqrt(accum/i) | |||

end | |||

accum.round(2) | |||

end | |||

</pre> | |||

* Method to find the average score per round per team | |||

<pre> | |||

def average_of_round(question_answer) | |||

average=0.0 | |||

i=0 | |||

question_answer.each do |reviewer,answer| | |||

average+=answer | |||

i+=1 | |||

end | |||

if i != 0 | |||

average=average/i | |||

end | |||

average.round(2) | |||

end | |||

</pre> | |||

* Method to get the review score for each round of particular team | |||

<pre> | |||

def review_score_for_team(reviewed_object_id, team_name) | |||

question_answers=[] | |||

reviewee_id = Team.select(:id).where(name: team_name, parent_id: reviewed_object_id) | |||

reviewee_id.each do |reviewee| | |||

total_rounds = Assignment.find(reviewed_object_id).rounds_of_reviews | |||

question_answers = Array.new(total_rounds) | |||

(0..total_rounds-1).each do |round| | |||

temp_values = Answer.answers_by_round_for_reviewee(reviewed_object_id, reviewee,round+1) | |||

question_answers[round] = review_score_helper_for_team(temp_values) | |||

end | |||

end | |||

question_answers | |||

end | |||

def review_score_helper_for_team(temp_values) | |||

[[ | question_answers={} | ||

temp_values.each do |temp_value| | |||

if question_answers.key?(temp_value[:reviewer_id]) | |||

if temp_value[:answer].nil? | |||

question_answers[temp_value[:reviewer_id]] += 0 | |||

else | |||

question_answers[temp_value[:reviewer_id]] += temp_value[:answer] | |||

end | |||

else | |||

if temp_value[:answer].nil? | |||

question_answers[temp_value[:reviewer_id]] = 0 | |||

else | |||

question_answers[temp_value[:reviewer_id]] = temp_value[:answer] | |||

end | |||

end | |||

end | |||

question_answers | |||

end | |||

</pre> | |||

* Method to create a response map for the reviewers and reviewee as well as the teams and the reviewee_id | |||

<pre> | |||

def review_conflict_response_map(params, _session = nil) | |||

assign_basics(params) | |||

teams = Team.select(:id, :name).where(parent_id: @id).order(:name) | |||

@teams = ({}) | |||

@reviewers = ({}) | |||

teams.each do |reviewee| | |||

@reviewers[reviewee.name] = reviewers_name_id_by_reviewee_and_assignment(reviewee, @id) | |||

@teams[reviewee.name] = reviewee.id | |||

end | |||

end | |||

</pre> | |||

* Method to get the reviewers of a particular assignment and particular reviewee | |||

* | <pre> | ||

def reviewers_name_id_by_reviewee_and_assignment(reviewee, id) | |||

temp_reviewers = User.select(" DISTINCT participants.id, users.name") | |||

.joins("JOIN participants ON participants.user_id = users.id") | |||

.joins("JOIN response_maps ON response_maps.reviewer_id = participants.id") | |||

.where("response_maps.reviewee_id = ? and response_maps.reviewed_object_id = ?", reviewee.id, id) | |||

reviewers = ({}) | |||

temp_reviewers.each do |reviewer| | |||

reviewers[reviewer[:id].to_s] = reviewer[:name] | |||

end | |||

reviewers | |||

end | |||

</pre> | |||

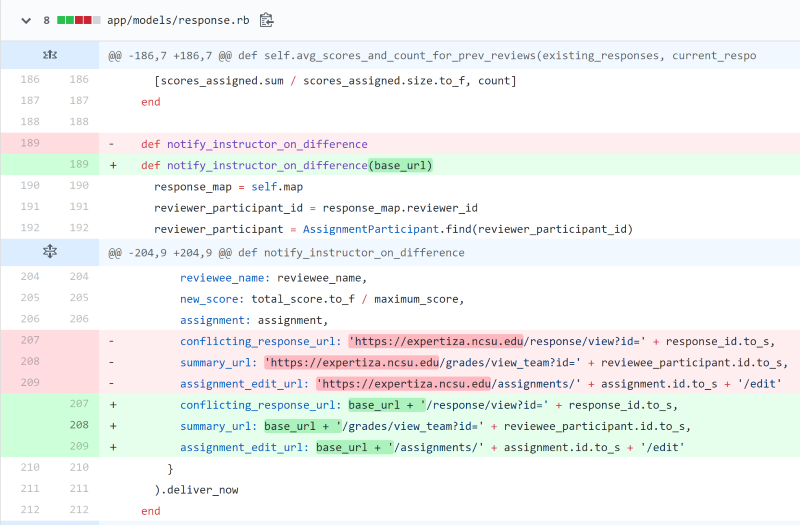

* | *'''Feature 2:''' No hardcoded URLs | ||

Hardcoded URLs are be managed by the config file in order to make these links functional on every server running Expertiza and raw URLs will be given some meaningful names. | |||

* Code changed for removing the hardcoded URLs | |||

[[File:Harcord link.PNG|center]] | |||

=Test Plan= | =Test Plan= | ||

To test code implementation and correctness of the modified feature, we | To test code implementation and correctness of the modified feature, we had done the following tests:- | ||

*Run and pass existing RSpec Tests | *Run and pass existing RSpec Tests | ||

*UI testing for the new functionality | |||

*Develop New RSpec Tests for the new code | *Develop New RSpec Tests for the new code | ||

==Rspec Testing== | ==Rspec Testing== | ||

[to | |||

All the existing related rspec tests have tested and they all passed wit 0 failures. | |||

Majority of the new functionality concerns with the new view,so we have mainly done the UI testing shown below.Some of the Rspec test added are shown below: | |||

*Rspec Tests for Answer Model | |||

<pre> | |||

let(:response_map) { create(:review_response_map, id: 1, reviewed_object_id: 1, reviewer_id: 1) } | |||

it "returns answers by reviewer for reviewee in round from db which is not empty" do | |||

expect(Answer.answers_by_round_for_reviewee(@assignment_id, @reviewee_id,@round)).not_to be_empty | |||

end | |||

</pre> | |||

*Rspec Tests for Report Formatter Helper methods | |||

<pre> | |||

describe 'review_conflict_response_map' do | |||

context 'when type is ReviewConflictResponseMap' do | |||

it 'renders response_report page with corresponding data' do | |||

allow(Team).to receive(:where).with(parent_id: '1').and_return([integer('id')]).ordered | |||

params = { | |||

id: 1, | |||

report: {type: 'ReviewResponseMap'}, | |||

user: 'no one' | |||

} | |||

get :response_report, params | |||

expect(response).to render_template(:response_report) | |||

end | |||

end | |||

end | |||

describe "reviewers_name_id_by_reviewee_and_assignment" do | |||

before(:each) do | |||

@assignment_id = 1 | |||

@reviewee_id = 1 | |||

end | |||

it "returns reviewers name from Answer by reviewee ans assignment id from db which is not empty" do | |||

expect(allow(User).to receive(:where).with(@reviewee_id, @assignment_id).and_return([integer('id')])).not_to be_empty | |||

end | |||

end | |||

</pre> | |||

==UI Testing== | ==UI Testing== | ||

[ | |||

We have created a [https://cl.ly/ab6b9c8bac36 Demo Video] showing the new functionality. | |||

=Files changed in this Project= | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/controllers/response_controller.rb Response Controller] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/report_formatter_helper.rb Report Formatter Helper] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/review_mapping_helper.rb Review Mapping Helper] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/summary_helper.rb Summary Helper] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/mailers/mailer.rb Mailer] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/models/answer.rb Answer model] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/models/response.rb Response Model] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/mailer/notify_grade_conflict_message.html.erb Notify Grade Conflict Message View] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_conflict_metric.html.erb Review Conflict Metric View] (New Created) | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_conflict_report.html.erb Review Conflict Report View] (New Created) | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_report.html.erb Review Report View] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_searchbox.html.erb Searchbox View] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/response_report.html.haml Response Report View] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/spec/controllers/reports_controller_spec.rb Reports Controller Spec] | |||

* [https://github.com/AryaAbhishek/expertiza/blob/beta/spec/models/answer_spec.rb Answer model spec] | |||

=Important Links= | |||

* [https://github.com/expertiza/expertiza/pull/1445 Pull Request] | |||

* [https://github.com/AryaAbhishek/expertiza Expertiza Github Repo] | |||

=References= | =References= | ||

* [https://github.com/expertiza/expertiza expertiza] | |||

* [http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_517_Fall_2018-_Project_E1865._Conflict_Notification Previous Work] | |||

* [https://github.com/expertiza/expertiza/pull/1329 Previous Pull Request] | |||

* [https://rubygems.org/gems/standard-deviation/versions/0.1.1 Standard-Deviation gem] | |||

* [https://github.com/jtescher/descriptive-statistics descriptive-statistics gem] | |||

=Team= | =Team= | ||

Latest revision as of 00:54, 27 April 2019

This wiki page describes the changes made for CSC/ECE 517 Spring 2019 Project E1931, Conflict Notification.

Problem Statement

The current conflict notification sends an email to the instructor whenever two reviews for the same submission differ “significantly” in their scoring (the threshold is specified in the “Notification limit” on the Rubrics tab of assignment creation). At present, an email is sent every time such a conflict occurs, and it links to the review or submission that initiated the notification. The email gives the link in raw HTML, but it should give it as a link associated with text, such as the text “this new review” linking to the page that shows the review. The email sent to the instructor should also link to a page that shows data on each of the reviews done on this team.

Existing Feature Description

Currently, this feature works as follows:

Whenever a new review is submitted, it is compared with the average of the previously submitted reviews. If the difference between the new submission and the existing average exceeds the threshold that has been set, an email is sent to the instructor. The average is updated with every review submitted for a particular student's assignment. The email sent to the instructor contains links to the conflicting review and to a summary of the reviews.
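The comparison described above can be sketched in plain Ruby. This is a minimal illustration, not the Expertiza implementation: the helper name conflict? and its parameters are hypothetical, and it assumes that scores and the notification limit are expressed in the same units.

```ruby
# Hypothetical helper (not Expertiza's API): returns true when a new review
# score differs from the average of the earlier scores by more than the
# notification limit.
def conflict?(new_score, previous_scores, notification_limit)
  # With no earlier reviews there is nothing to compare against.
  return false if previous_scores.empty?

  average = previous_scores.sum.to_f / previous_scores.size
  (new_score - average).abs > notification_limit
end

previous = [80, 85, 90]          # scores already on record, average 85.0
puts conflict?(60, previous, 15) # difference of 25 exceeds the limit: true
puts conflict?(88, previous, 15) # difference of 3 is within the limit: false
```

In this sketch the first review of a submission can never trigger a notification, because there is no earlier average to compare it with.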

Flaws with the existing Implementation

No view exists to analyze the conflict report

Currently, whenever a conflict happens, a summary link containing the scores of all the reviews is sent to the instructor, but there is no view where the instructor can see all the conflicts and analyze them.

Incorrect email message links

The existing email body uses hardcoded URLs, defined in the models/response.rb file in the method notify_instructor_on_difference. Because they are hardcoded, these links do not work on other servers where Expertiza is running. For example, if the setup is done on localhost, the links will not be functional.

- Conflicting Review which triggered the mail

What needs to be done

The scope of this project is to send the instructor an email notification that contains a link to the conflicting review, a summary link, and a link to a report that can be used for analysis. The new report will include information such as the team that has conflicts, the standard deviation of the team's review scores, and a pictorial representation of all the review scores.

The email sent to the instructor should link to a page that shows the data on each of the reviews done on this team:

- The name of the team.

- The members (user-IDs and/or names)

- One line for each review received, containing:

  - The name of the person (or team) that submitted the review, hyperlinked to the review itself

  - The score given for the review

  - A bar chart giving a pictorial view of the score

- The average score received from reviewers.

- The standard deviation of the score received from reviewers.

- Review scores that are outside some “tolerance” limit should be highlighted, e.g., boldfaced or shown in a different color. The tolerance should probably be one or two standard deviations from the mean.
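The tolerance rule in the last item can be illustrated with a small worked example. The sketch below is an assumption-laden illustration rather than project code: outliers is a hypothetical helper, the scores are made up, and it uses the population standard deviation (dividing by the number of scores).

```ruby
# Hypothetical helper (not part of Expertiza): returns the scores that lie
# more than k population standard deviations away from the mean.
def outliers(scores, k = 2)
  return [] if scores.empty?

  mean = scores.sum.to_f / scores.size
  variance = scores.sum { |s| (s - mean)**2 } / scores.size # divide by n
  std = Math.sqrt(variance)
  scores.select { |s| (s - mean).abs > k * std }
end

scores = [90, 92, 88, 91, 89, 40] # made-up review totals for one team
p outliers(scores)                # => [40]
```

One consequence of this choice is worth keeping in mind: with the population standard deviation, no score among n values can lie more than √(n−1) standard deviations from the mean, so a two-standard-deviation tolerance cannot highlight anything for a team with five or fewer reviews. A tolerance of one standard deviation may suit small teams better.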

Implementation

- Feature 1: New page to show the conflict report

A new view page for the report, containing the following information:

- The name of the team.

- The members of the team.

- The name of the reviewer which would be hyperlinked to the review itself.

- The score given for the review.

- A bar chart showing pictorial view of all the reviews for a particular team.

- The average score received from reviewers.

- The standard deviation of the score received from reviewers.

- Conflicting reviews are highlighted. These are the reviews whose scores are more than two standard deviations away from the mean.

This view shows a report of all the teams on one page. A link to this report is added to the email that is already sent whenever a conflict happens. The report page is also accessible from the reports drop-down in the instructor view.

- A new partial is created for this view:

<div style="color:green;text-align:adjust">

Note: <br>

X-axis represents the percentage score of the team<br>

Y-axis represents the reviewers<br>

Highlighted reviewers are outside the tolerance limit<br>

</div><br><br>

<table id="myTable" border="1px solid black" margin-top="-9px" width="100%" cellspacing='0' cellpadding='2' border='0'>

<thead>

<tr bgcolor='#CCCCCC'>

<!-- class value decides whether the column should be sortable or not -->

<th width="30%" class="sorter-false" border="1px black solid" style="text-align:center">Team <span></span></th>

<th width="70%" class="sorter-false" border="1px black solid" style="text-align:center" >Reviewers/Metrics <span></span></th>

</tr>

</thead>

<tbody>

<% max_scores= max_assignment_review_score_per_round(@id) %>

<%@reviewers.keys.each do |team_name| %>

<tr>

<td style="padding-left: 50px;" >

<% members = team_members(team_name, @id)%>

<strong>Team Name: </strong><%= team_name %><br>

<strong>Team Members: </strong><br>

<% members.each do |member|%>

<%=User.find(member.user_id).fullname %><br>

<% end %>

</td>

<td>

<%= render 'review_conflict_metric' , team_name: team_name , max_scores: max_scores %>

</td>

</tr>

<% end %>

</tbody>

</table>

- Partial that displays the reviewers, scores and metrics

<table width="100%" border="0" >

<%question_answers = review_score_for_team(@id, team_name) %>

<% i = 1 %>

<% question_answers.each do |question_answer| %>

<% average = average_of_round(question_answer) %>

<% std = std_of_round(average,question_answer) %>

<% upper_tolerance_limit = (average+(2*std)).round(2) %>

<% lower_tolerance_limit = (average-(2*std)).round(2) %>

<tr>

<td width="30%">

<div>

<strong>Reviewers with scores</strong><br>

<ol>

<% question_answer.each do |reviewer, answer| %>

<% if answer > upper_tolerance_limit or answer < lower_tolerance_limit %>

<li><% response_id = response_id_review_conflict(reviewer, @teams[team_name], @id) %>

<mark style="background-color:#FFFF00">

<%= link_to "("+@reviewers[team_name.to_s][reviewer.to_s].to_s+")", :controller => 'popup', :action => 'team_users_popup', :id => @teams[team_name], :id2 => response_id, :assignment_id=>@id %>

<%= list_review_submissions(reviewer, @teams[team_name], response_id) %>

<%= ": " + answer.to_s %></mark>

</li>

<% else %>

<li><% response_id = response_id_review_conflict(reviewer, @teams[team_name], @id) %>

<%= link_to "("+@reviewers[team_name.to_s][reviewer.to_s].to_s+")", :controller => 'popup', :action => 'team_users_popup', :id => @teams[team_name], :id2 => response_id, :assignment_id=>@id %>

<%= list_review_submissions(reviewer, @teams[team_name], response_id) %>

<%= ": " + answer.to_s %>

</li>

<% end %>

<% end %>

</ol>

</div>

<strong>Max Review Score: </strong><%=max_scores[i-1]%><br>

<strong>Average Score: </strong><%= average.to_s %><br>

<strong>Standard Deviation: </strong><%= std.to_s %><br><br>

</td>

<td>

<div>

<strong><u><%= "Round: " + i.to_s %></u></strong>

<%= generate_score_chart(max_scores[i-1], question_answer) %>

</div>

</td>

</tr>

<% i+=1 %>

<% end %>

</table>

- Sample view of the new report -

- Method to get all the answers(scores) for a reviewee on an assignment for a particular round

def self.answers_by_round_for_reviewee(assignment_id, reviewee_id,round)

#get all answers for a reviewee on an assignment for a particular round

question_answers = Answer.select("answers.answer, response_maps.reviewer_id")

.joins("join responses on responses.id = answers.response_id")

.joins("join response_maps on responses.map_id = response_maps.id")

.where("response_maps.reviewed_object_id = ? and

response_maps.reviewee_id = ? and

responses.round = ?", assignment_id, reviewee_id,round)

question_answers

end

- Method to get the maximum score for an assignment and for a particular round

def max_score_of_assignment_per_round(assignment,round)

rubric = get_questions_by_assignment(assignment)

rubric_questions_used = rubric[assignment.varying_rubrics_by_round? ? round : 0]

assignment_max_score_per_round = 0

rubric_questions_used.each do |q|

next if q.type.eql?("SectionHeader")

q_max_score = get_max_score_for_question(q)

assignment_max_score_per_round += q_max_score

end

assignment_max_score_per_round

end

def max_assignment_review_score_per_round(reviewed_object_id)

assignment = Assignment.find(reviewed_object_id)

total_rounds = assignment.rounds_of_reviews

review_max_scores = Array.new(total_rounds)

(0..total_rounds-1).each do |round|

review_max_scores[round] = SummaryHelper::Summary.new.max_score_of_assignment_per_round(assignment, round)

end

review_max_scores

end

- Method to create the bar chart showing the review scores per round

def generate_score_chart(review_max_score, question_answer)

scores = Array.new

question_answer.each do |reviewer,answer|

scores << ((answer.to_f/review_max_score.to_f)*100).round(2)

end

labels = (1..scores.length).to_a

data = {

labels: labels,

datasets: [

{

label: "score%",

backgroundColor: "rgba(255,99,132,0.8)",

borderWidth: 1,

data: scores,

hoverBackgroundColor: "orange",

yAxisID: "bar-y-axis1"

}

]

}

options = {

legend: {

position: 'top',

labels: {

usePointStyle: true

}

},

width: "125",

height: "75",

scales: {

yAxes: [{

stacked: true,

id: "bar-y-axis1",

barThickness: 10

}, {

display: false,

stacked: true,

id: "bar-y-axis2",

barThickness: 15,

type: 'category',

categoryPercentage: 0.8,

barPercentage: 0.9,

gridLines: {

offsetGridLines: true

}

}],

xAxes: [{

stacked: false,

ticks: {

beginAtZero: true,

stepSize: 10,

max: 100

}

}]

}

}

horizontal_bar_chart data, options

end
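The chart plots each reviewer's total as a percentage of the round's maximum score. The conversion can be sketched on its own in plain Ruby; score_percentages is a hypothetical helper name, the sample data is made up, and the guard against a non-positive maximum is an addition that the method above does not have.

```ruby
# Hypothetical helper: converts a { reviewer_id => total_score } hash into
# percentages of the maximum possible score, rounded to two decimals.
def score_percentages(question_answer, review_max_score)
  max = review_max_score.to_f
  raise ArgumentError, "maximum score must be positive" unless max.positive?

  question_answer.values.map { |score| (score / max * 100).round(2) }
end

round_scores = { 11 => 45, 12 => 30, 13 => 50 } # made-up totals per reviewer
p score_percentages(round_scores, 50)           # => [90.0, 60.0, 100.0]
```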

- Method to get the team members

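def team_members(team_name, assignment_id)
  # Restrict the lookup to this assignment so that a team with the same
  # name in another assignment is not matched.
  team_id = Team.select(:id).where(name: team_name, parent_id: assignment_id)
  TeamsUser.where(team_id: team_id)
end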

- Method to find the standard deviation

def std_of_round(average,question_answer)

accum=0.0

i=0

question_answer.each do |reviewer,answer|

accum+=(answer-average)**2

i+=1

end

if i != 0

accum=Math.sqrt(accum/i)

end

accum.round(2)

end

- Method to find the average score per round per team

def average_of_round(question_answer)
  average=0.0
  i=0
  question_answer.each do |reviewer,answer|
    average+=answer
    i+=1
  end
  if i != 0
    average=average/i
  end
  average.round(2)
end

- Method to get the review score for each round of particular team

def review_score_for_team(reviewed_object_id, team_name)

question_answers=[]

reviewee_id = Team.select(:id).where(name: team_name, parent_id: reviewed_object_id)

reviewee_id.each do |reviewee|

total_rounds = Assignment.find(reviewed_object_id).rounds_of_reviews

question_answers = Array.new(total_rounds)

(0..total_rounds-1).each do |round|

temp_values = Answer.answers_by_round_for_reviewee(reviewed_object_id, reviewee,round+1)

question_answers[round] = review_score_helper_for_team(temp_values)

end

end

question_answers

end

def review_score_helper_for_team(temp_values)

question_answers={}

temp_values.each do |temp_value|

if question_answers.key?(temp_value[:reviewer_id])

if temp_value[:answer].nil?

question_answers[temp_value[:reviewer_id]] += 0

else

question_answers[temp_value[:reviewer_id]] += temp_value[:answer]

end

else

if temp_value[:answer].nil?

question_answers[temp_value[:reviewer_id]] = 0

else

question_answers[temp_value[:reviewer_id]] = temp_value[:answer]

end

end

end

question_answers

end
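The helper above adds up each reviewer's answers and treats a missing answer as zero. The same idea can be sketched more compactly with a hash whose default value is 0. This is an illustrative alternative under stated assumptions: total_score_by_reviewer is a hypothetical name, and plain hashes stand in for the records returned by the query.

```ruby
# Hypothetical helper: sums the answers per reviewer, counting nil as 0.
def total_score_by_reviewer(rows)
  rows.each_with_object(Hash.new(0)) do |row, totals|
    totals[row[:reviewer_id]] += row[:answer].to_i # nil.to_i is 0
  end
end

# Made-up rows standing in for the query result.
rows = [
  { reviewer_id: 7, answer: 4 },
  { reviewer_id: 7, answer: nil },
  { reviewer_id: 9, answer: 5 },
  { reviewer_id: 7, answer: 3 }
]
totals = total_score_by_reviewer(rows)
puts totals[7] # reviewer 7: 4 + 0 + 3 = 7
puts totals[9] # reviewer 9: 5
```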

- Method to create a response map for the reviewers and reviewee as well as the teams and the reviewee_id

def review_conflict_response_map(params, _session = nil)

assign_basics(params)

teams = Team.select(:id, :name).where(parent_id: @id).order(:name)

@teams = ({})

@reviewers = ({})

teams.each do |reviewee|

@reviewers[reviewee.name] = reviewers_name_id_by_reviewee_and_assignment(reviewee, @id)

@teams[reviewee.name] = reviewee.id

end

end

- Method to get the reviewers of a particular assignment and particular reviewee

def reviewers_name_id_by_reviewee_and_assignment(reviewee, id)

temp_reviewers = User.select(" DISTINCT participants.id, users.name")

.joins("JOIN participants ON participants.user_id = users.id")

.joins("JOIN response_maps ON response_maps.reviewer_id = participants.id")

.where("response_maps.reviewee_id = ? and response_maps.reviewed_object_id = ?", reviewee.id, id)

reviewers = ({})

temp_reviewers.each do |reviewer|

reviewers[reviewer[:id].to_s] = reviewer[:name]

end

reviewers

end

- Feature 2: No hardcoded URLs

Hardcoded URLs are now managed through the config file so that the links work on every server running Expertiza, and raw URLs are given meaningful link text.
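As a rough illustration of the idea, and not the code that was actually changed, the sketch below builds a link from a configurable base URL and wraps it in descriptive text. Everything named here is an assumption: the environment variable EXPERTIZA_BASE_URL, the helper names, and the path /response/view are hypothetical stand-ins for whatever the project's config file provides.

```ruby
require "erb"

# Assumption: the server's base URL is read from configuration; an
# environment variable with a localhost fallback stands in for it here.
BASE_URL = ENV.fetch("EXPERTIZA_BASE_URL", "http://localhost:3000")

# Hypothetical helper: joins the base URL and a review path without
# producing a double slash. The path is illustrative only.
def review_url(base_url, response_id)
  "#{base_url.chomp('/')}/response/view?id=#{response_id}"
end

# Hypothetical helper: wraps a URL in an anchor tag with readable text.
def link_tag(text, url)
  %(<a href="#{ERB::Util.html_escape(url)}">#{ERB::Util.html_escape(text)}</a>)
end

puts link_tag("this new review", review_url(BASE_URL, 42))
```

Because the base URL is looked up rather than written into the message, the same email template would work on a local setup and on a deployed server.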

- Code changed for removing the hardcoded URLs

Test Plan

To test the implementation and correctness of the modified feature, we carried out the following tests:

- Run and pass existing RSpec Tests

- UI testing for the new functionality

- Develop New RSpec Tests for the new code

Rspec Testing

All existing related RSpec tests were run and passed with 0 failures. Most of the new functionality concerns the new view, so we relied mainly on the UI testing shown below. Some of the RSpec tests we added are shown below:

- Rspec Tests for Answer Model

let(:response_map) { create(:review_response_map, id: 1, reviewed_object_id: 1, reviewer_id: 1) }

it "returns answers by reviewer for reviewee in round from db which is not empty" do

expect(Answer.answers_by_round_for_reviewee(@assignment_id, @reviewee_id,@round)).not_to be_empty

end

- Rspec Tests for Report Formatter Helper methods

describe 'review_conflict_response_map' do

context 'when type is ReviewConflictResponseMap' do

it 'renders response_report page with corresponding data' do

allow(Team).to receive(:where).with(parent_id: '1').and_return([integer('id')]).ordered

params = {

id: 1,

report: {type: 'ReviewResponseMap'},

user: 'no one'

}

get :response_report, params

expect(response).to render_template(:response_report)

end

end

end

describe "reviewers_name_id_by_reviewee_and_assignment" do

before(:each) do

@assignment_id = 1

@reviewee_id = 1

end

it "returns reviewers name from Answer by reviewee and assignment id from db which is not empty" do

expect(allow(User).to receive(:where).with(@reviewee_id, @assignment_id).and_return([integer('id')])).not_to be_empty

end

end

UI Testing

We have created a Demo Video (https://cl.ly/ab6b9c8bac36) showing the new functionality.

Files changed in this Project

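- Response Controller (https://github.com/AryaAbhishek/expertiza/blob/beta/app/controllers/response_controller.rb)

- Report Formatter Helper (https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/report_formatter_helper.rb)

- Review Mapping Helper (https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/review_mapping_helper.rb)

- Summary Helper (https://github.com/AryaAbhishek/expertiza/blob/beta/app/helpers/summary_helper.rb)

- Mailer (https://github.com/AryaAbhishek/expertiza/blob/beta/app/mailers/mailer.rb)

- Answer Model (https://github.com/AryaAbhishek/expertiza/blob/beta/app/models/answer.rb)

- Response Model (https://github.com/AryaAbhishek/expertiza/blob/beta/app/models/response.rb)

- Notify Grade Conflict Message View (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/mailer/notify_grade_conflict_message.html.erb)

- Review Conflict Metric View, newly created (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_conflict_metric.html.erb)

- Review Conflict Report View, newly created (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_conflict_report.html.erb)

- Review Report View (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_review_report.html.erb)

- Searchbox View (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/_searchbox.html.erb)

- Response Report View (https://github.com/AryaAbhishek/expertiza/blob/beta/app/views/reports/response_report.html.haml)

- Reports Controller Spec (https://github.com/AryaAbhishek/expertiza/blob/beta/spec/controllers/reports_controller_spec.rb)

- Answer Model Spec (https://github.com/AryaAbhishek/expertiza/blob/beta/spec/models/answer_spec.rb)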

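Important Links

- Pull Request (https://github.com/expertiza/expertiza/pull/1445)

- Expertiza GitHub Repo (https://github.com/AryaAbhishek/expertiza)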


Team

Abhishek Arya

Bhargav Deshpande

Ramandeep Kaur

Udita Chattopadhyay