CSC/ECE 517 Spring 2018 - Project E1819: Improve self review, link self and peer review to derive grades

== Introduction and Problem Statement ==

Expertiza can require self-reviews on projects; however, these self-review scores have little functionality. In the current system, self-review scores are created and stored but are never used afterwards. This project seeks to improve the self-review system and extend its functionality. The main points of this project are as follows:

* Create a method to calculate a derived score from the self- and peer-reviews. The closer the self-review is to the peer-reviews, the higher the score. Display this score on the "View Scores" page.

* Allow instructors to decide whether peer-reviews should be visible before self-review completion, and display the correct review scores accordingly.

* Display the self-review scores on the "View Scores" page and in the heat map of peer-reviews, marking them as a different type of review.

== Implementation ==

; Allow instructors to decide whether students may view peer-reviews before completing their self-review.

: Currently, when instructors create a new assignment or edit an existing one, they have the option to require self-reviews.

: [[File:Allow Self Review.PNG]]

: This will be updated to ask instructors whether peer-reviews should be visible before self-reviews are completed.

: [[File:Allow Self Review Edited.PNG]]

: When the instructor enables this restriction, the system checks whether a student has completed a self-review when they access the scores view. If they have not, they see a message saying so; if they have, they see their peer-reviews as normal.

; Find or create a function that derives a score from self-reviews and peer-reviews.

: This score should reflect the difference between the student's self-review score and their peers' reviews. The purpose of this score is to teach students to become better at reviewing their own work: by providing a score that reflects how similar their self-reviews are to their peers' reviews, students receive feedback on their self-assessment abilities. More information on how the function was chosen can be found in the Score Formulas section below.

; Create the different displays for self-reviews

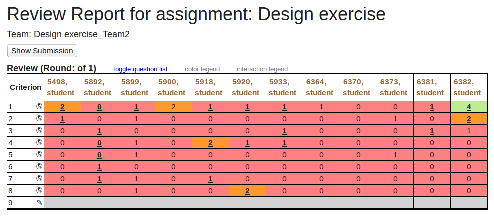

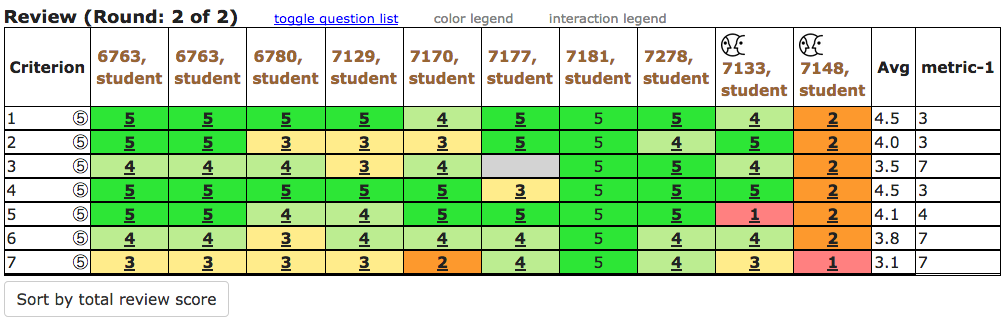

: Instructors and students both have a heat-map view of the scores from peer-reviews.

: [[File:Instructor Review Heatmap.png]]

: (Instructor view pictured) Students' self-review scores will be added to this view, but they need to be marked so that it is easy to see that a score comes from a self-review rather than a peer-review. One option is to use an icon indicating a self-review score.

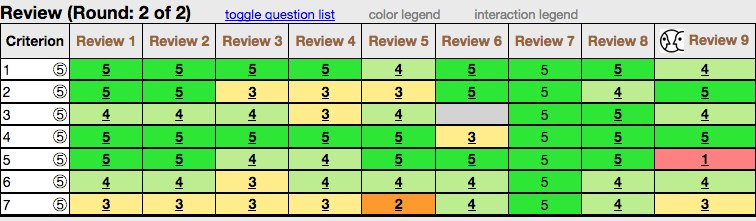

: [[File:HeatMapSelfie.png]]

: This makes it simple for users to see that a particular column holds the scores from a self-review. Other options are to title the column "Self-review" or to highlight or border the column with a particular color (e.g., blue); either denotes that the score comes from a self-review. Similar approaches can distinguish self-review scores from peer-review scores on the regular "View Scores" page.

: [[File:SelfHeatMapSelfie.png]]

: (Student view pictured) Students can see their self-review alongside the peer-reviews.

: [[File:LimitedSelfReview.png]]

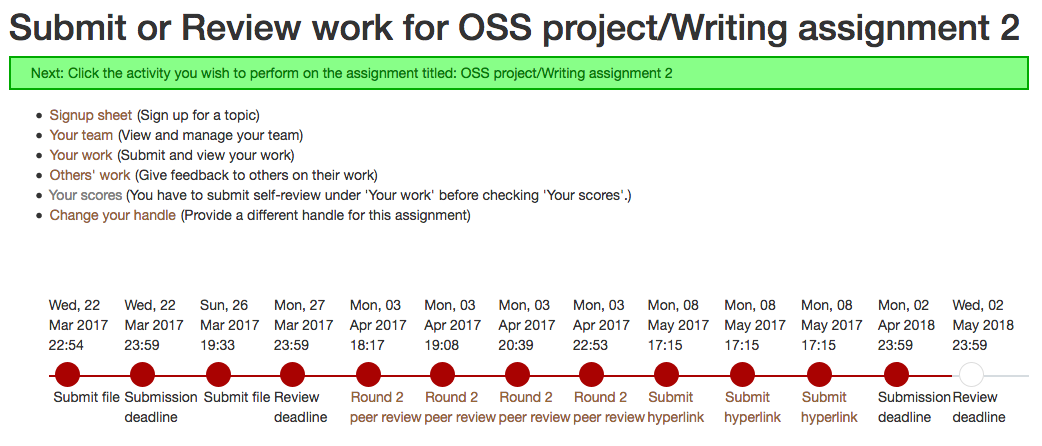

: As described previously, the instructor can limit students' access to peer-reviews until they have submitted a self-review. Until then, students will see "You have to submit self-review under 'Your work' before checking 'Your scores'." next to "Your scores".
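The visibility rule described above can be sketched in plain Ruby. This is a minimal sketch, not the Expertiza code: `AssignmentSettings`, `require_self_review`, `hide_peer_reviews_until_self_review`, `peer_reviews_visible?` and `scores_notice` are hypothetical names. Whether the student has submitted a self-review is also passed in as a boolean rather than looked up from response maps.

```ruby
# Hypothetical settings object; the real assignment model and column names may differ.
AssignmentSettings = Struct.new(:require_self_review, :hide_peer_reviews_until_self_review,
                                keyword_init: true)

SELF_REVIEW_NOTICE =
  "You have to submit self-review under 'Your work' before checking 'Your scores'."

# Peer-reviews stay hidden only when self-reviews are required, the instructor has
# enabled the restriction, and the student has not yet submitted a self-review.
def peer_reviews_visible?(settings, self_review_submitted)
  return true unless settings.require_self_review && settings.hide_peer_reviews_until_self_review

  self_review_submitted
end

# Message shown next to "Your scores", or nil when the scores can be viewed.
def scores_notice(settings, self_review_submitted)
  peer_reviews_visible?(settings, self_review_submitted) ? nil : SELF_REVIEW_NOTICE
end

restricted = AssignmentSettings.new(require_self_review: true,
                                    hide_peer_reviews_until_self_review: true)
peer_reviews_visible?(restricted, false) # => false
scores_notice(restricted, true)          # => nil
```

Keeping the rule in one predicate lets both the scores page and the "Your scores" notice share the same decision. This matches the first two test cases listed under Testing.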

== Score Formulas ==

There are many possible ways to derive a score from self- and peer-reviews. Each formula has the same goal: to derive a meaningful score that compares the self-review with the peer-reviews, giving students feedback on their self-reviewing and, ideally, improving their ability in this area. The candidate approaches have different pros and cons: the simpler ones use only the self- and peer-review scores, while the more complex ones may involve several other factors.

The simplest approach is to take the difference between the average peer-review score and the self-review score. This rewards students for good self-reviewing ability and penalizes overrating and underrating equally. A similar approach is used in several other systems: when a student's self-review score is within 5% of their average peer-review score, the student receives the greater of the two grades. This approach can be extended by applying a weight to the difference between the grades and letting instructors enter the weight for each assignment.

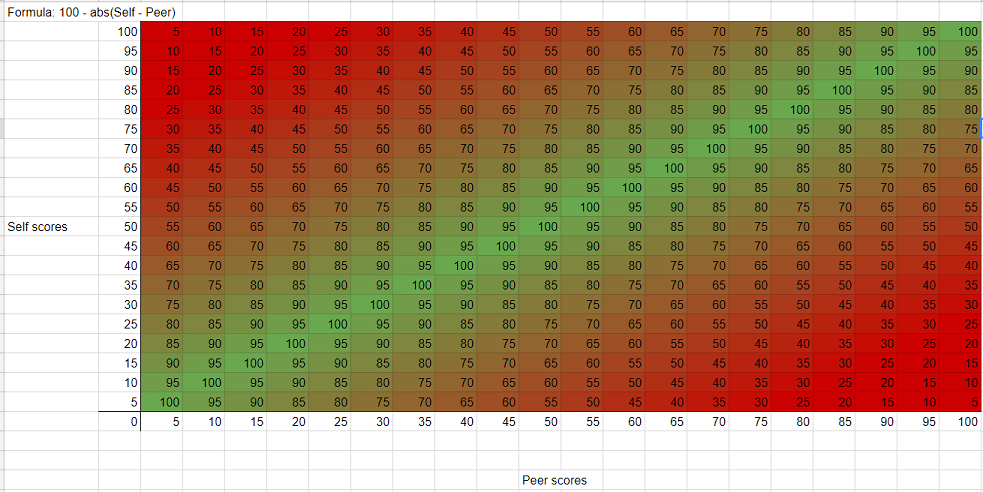

[[File:Design Doc Naive Formula.PNG]]
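The simple approach, including the optional per-assignment weight, can be sketched in Ruby as follows. This is a minimal illustration under stated assumptions, not the Expertiza implementation. `derived_score` is a hypothetical helper. Scores are assumed to share a 0..100 scale, and the result is clamped at zero. The 5% rule used by other systems is not modeled.

```ruby
# Hypothetical helper: a sketch of the "simple" derived score, not the Expertiza code.
# Scores are assumed to be on a 0..max_score scale (e.g. percentages).
def derived_score(self_score, peer_scores, max_score: 100.0, weight: 1.0)
  raise ArgumentError, "at least one peer-review score is required" if peer_scores.empty?

  avg_peer = peer_scores.sum.to_f / peer_scores.size
  # Over- and underrating are penalized equally because the difference is absolute.
  penalty = weight * (self_score - avg_peer).abs
  # Clamp at zero so a large weight cannot produce a negative grade.
  [max_score - penalty, 0.0].max
end

derived_score(85, [90, 80])              # => 100.0 (self-review matches the peer average)
derived_score(95, [80, 80])              # => 85.0
derived_score(95, [80, 80], weight: 2.0) # => 70.0
```

The `weight` keyword stands in for the instructor-entered, per-assignment weight. With the default weight of 1.0, this is the "maximum grade minus the difference" scheme the current implementation uses.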

A variation of this approach also takes the peer score itself into account: the higher your peers rate your work, the higher your score (again scaled by the difference between reviews). However, this and similar approaches should be avoided because they are potentially harmful. The chart below shows that students with poor performance are punished three times: in their peer-reviews, in their self- and peer-review derived grade, and finally in their instructor grade. The derived grade should reflect only the student's ability to self-review, not the quality of the project.

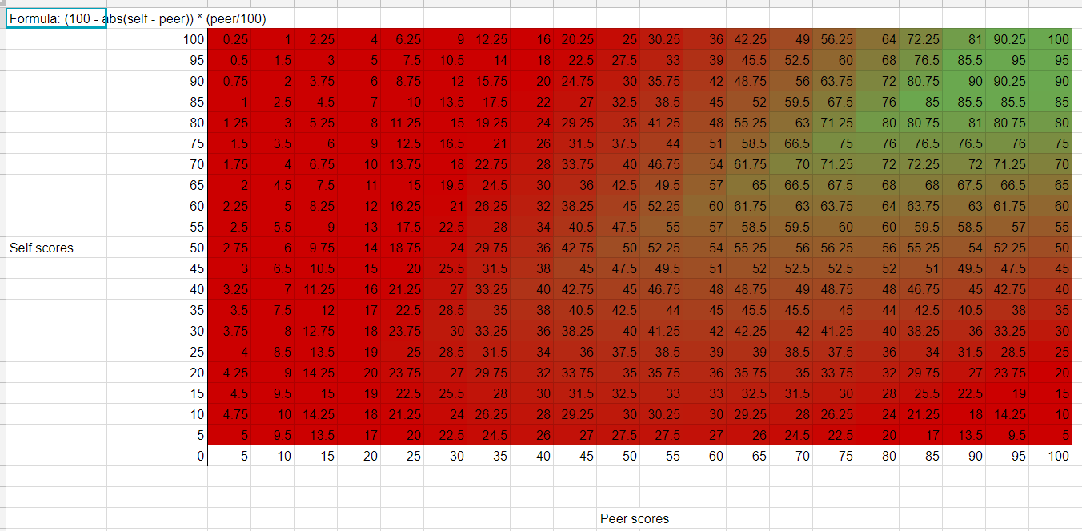

[[File:Design Doc Take Score Into Account.PNG]]
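The sketch below uses one possible form of such a formula to show why coupling the derived grade to the peer score is harmful. The formula is an assumption chosen for illustration, since the exact formula behind the chart is not given in the text. `peer_weighted_score` is a hypothetical name.

```ruby
# Hypothetical illustration only: the exact formula behind the chart is not given here.
# It multiplies the simple accuracy score by the peer average, which is the kind of
# coupling the text warns against.
def peer_weighted_score(self_score, avg_peer, max_score: 100.0)
  accuracy = [max_score - (self_score - avg_peer).abs, 0.0].max
  accuracy * avg_peer / max_score
end

# Both students misjudge their work by the same 5 points:
peer_weighted_score(95, 90) # => 85.5
peer_weighted_score(45, 40) # => 38.0
```

Both students are equally accurate, yet the weaker project loses far more of the derived grade. That is the double punishment described above.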

Another approach derives the grade from the percent difference between the self-review score and the peer-review scores. This produces a smooth gradient that does not punish students as harshly as the previous two approaches. However, at low scores, students will see larger swings in this derived grade.

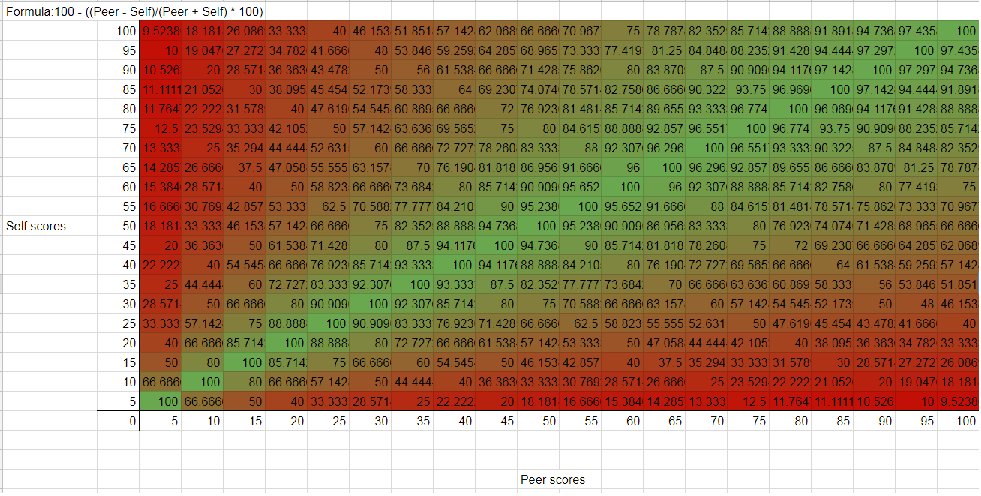

[[File:Design Doc Pecentage Diff.PNG]]
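A percent-difference score can be sketched as below. The sketch assumes the difference is measured relative to the average peer-review score. Other definitions, such as relative to the mean of both scores, are also common, and the chart may use a different one. `percent_difference_score` is a hypothetical name.

```ruby
# Hypothetical sketch: "percent difference" is assumed to be measured relative to the
# average peer-review score; other definitions (e.g. relative to the mean of both) exist.
def percent_difference_score(self_score, avg_peer, max_score: 100.0)
  if avg_peer.zero?
    # Avoid dividing by zero: full credit only when the self-review also says zero.
    return self_score.zero? ? max_score : 0.0
  end

  relative = (self_score - avg_peer).abs / avg_peer.to_f
  # Cap the relative difference at 100% so the grade never goes negative.
  max_score * (1.0 - [relative, 1.0].min)
end

percent_difference_score(90, 80) # => 87.5
percent_difference_score(30, 20) # => 50.0 (same 10-point gap, much lower grade)
```

The two calls show the larger swings at low scores: the same absolute gap costs far more when the peer average is small.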

The previous approaches use only the peer- and self-review scores and are the simple solutions to the problem. The following options are more complex but seek to solve the problem better.

The SPARK approach is discussed [https://www.ojs.unisa.edu.au/index.php/atna/article/viewFile/343/258 here]. It produces a score that should accurately reflect the relationship between self and peer scores. These scores need to be adjusted to match the grading scheme of the project: a score of 1 is equivalent to 100%, and any difference between 1 and the score represents a lower grade, regardless of whether the score is above or below 1. This method also includes a second score, SAPA (Self-Assessment to Peer-Assessment factor). SAPA requires teams to evaluate each team member on the same self-review rubric. Each team member's self-review score is then divided by their average teammate-review score to obtain the SAPA. A SAPA greater than 1.0 indicates that the student rated themselves higher than their teammates did; a SAPA less than 1.0 indicates that the student underrated themselves compared to their peers.

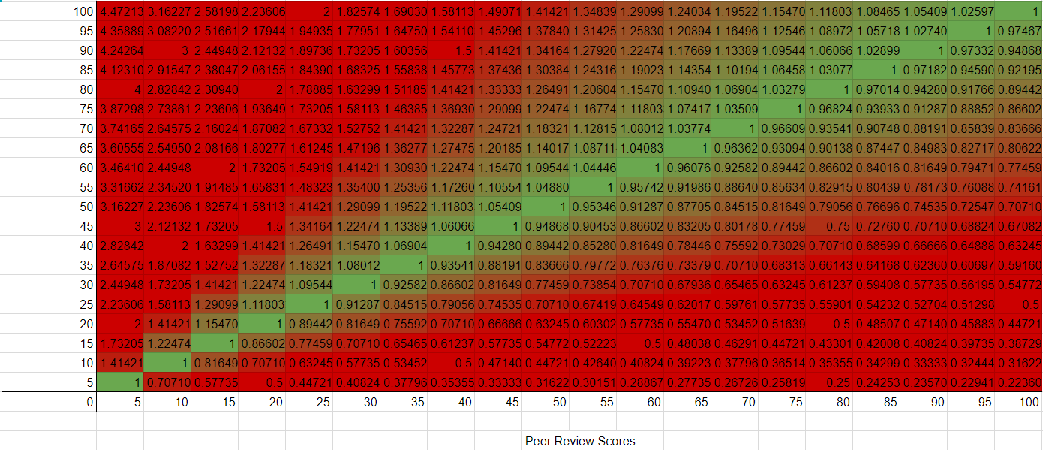

[[File:Design Doc Spark Formula.PNG]]
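The SAPA calculation and the described mapping to a grade can be sketched as follows. `sapa` and `sapa_grade` are hypothetical names. The SAPA follows the definition above. The linear drop in the grade as the factor moves away from 1.0 is an assumption, since the text only says that any deviation from 1 means a lower grade.

```ruby
# Hypothetical sketch of SAPA as described above: self-review score divided by the
# average teammate-review score on the same rubric.
def sapa(self_score, teammate_scores)
  raise ArgumentError, "at least one teammate review is required" if teammate_scores.empty?

  avg = teammate_scores.sum.to_f / teammate_scores.size
  raise ArgumentError, "average teammate score must be positive" unless avg.positive?

  self_score / avg
end

# Assumed mapping to a grade: 1.0 is 100%, and the grade drops linearly with the
# distance from 1.0 in either direction (the slope is an assumption).
def sapa_grade(factor, max_score: 100.0)
  [max_score * (1.0 - (1.0 - factor).abs), 0.0].max
end

sapa(4.0, [4.0, 5.0, 3.0])        # => 1.0
sapa_grade(sapa(4.5, [3.0, 3.0])) # => 50.0 (SAPA of 1.5: overrated)
```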

Two other approaches involving teammate participation are ranking and FCS (Fair Contribution Scoring).

In the ranking approach, students rank their team members, including themselves, from 1 to n (where n is the team size), with 1 the highest rank and n the lowest. Each student is then assessed on their average rank from teammates compared with the rank they gave themselves. This approach is simple, but it forgoes the rubric, which encourages deeper self-reflection in specific areas.
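The ranking comparison can be sketched as follows. The input shape is an assumption: a hash in which `ranks[rater][ratee]` is the rank a rater gave a teammate. `rank_gap` and `rank_score` are hypothetical names. The conversion of the gap into a 0..100 grade, dividing by the largest possible gap of n - 1, is also an assumption and not part of the approach as described.

```ruby
# Hypothetical sketch of the ranking approach. ranks[rater][ratee] is the rank
# (1 = highest, n = lowest) that rater gave ratee; everyone also ranks themselves.
def rank_gap(ranks, student)
  self_rank = ranks.fetch(student).fetch(student)
  teammate_ranks = ranks.reject { |rater, _| rater == student }
                        .values
                        .map { |given| given.fetch(student) }
  avg_teammate_rank = teammate_ranks.sum.to_f / teammate_ranks.size
  # Negative: the student ranked themselves higher than teammates did; positive: lower.
  self_rank - avg_teammate_rank
end

# Assumed conversion to a grade: the largest possible gap in a team of n is n - 1.
def rank_score(ranks, student, max_score: 100.0)
  n = ranks.size
  return max_score if n < 2

  max_score * (1.0 - rank_gap(ranks, student).abs / (n - 1))
end

ranks = {
  "alice" => { "alice" => 1, "bob" => 2, "carol" => 3 },
  "bob"   => { "alice" => 2, "bob" => 1, "carol" => 3 },
  "carol" => { "alice" => 3, "bob" => 1, "carol" => 2 }
}
rank_gap(ranks, "alice")   # => -1.5
rank_score(ranks, "alice") # => 25.0
```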

In the FCS approach, students are given a finite number of points to award to their teammates, including themselves. The more points a person receives, the greater their perceived contribution. At the end of the project, students are assessed on the average number of points they received compared with the points they awarded themselves. Although this approach is very similar to the ranking approach, there is an important difference: students must weigh each member's contribution as a share of the available points, rather than comparing teammates against each other overall (teammate A vs. teammate B).
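The FCS comparison can be sketched in the same style. The input shape `points[rater][ratee]` and the 100-point budget are assumptions, and `fcs_gap` is a hypothetical name. The sketch also checks that every rater spends exactly the budget, since the approach depends on a finite number of points.

```ruby
# Hypothetical sketch of Fair Contribution Scoring. points[rater][ratee] is how many of the
# rater's budget points went to ratee; each member also awards points to themselves.
def fcs_gap(points, student, budget: 100)
  points.each do |rater, given|
    next if given.values.sum == budget

    raise ArgumentError, "#{rater} must award exactly #{budget} points"
  end

  self_points = points.fetch(student).fetch(student)
  received = points.reject { |rater, _| rater == student }
                   .values
                   .map { |given| given.fetch(student) }
  avg_received = received.sum.to_f / received.size
  # Positive: the student credited themselves with more than teammates did; negative: less.
  self_points - avg_received
end

points = {
  "alice" => { "alice" => 50, "bob" => 30, "carol" => 20 },
  "bob"   => { "alice" => 30, "bob" => 40, "carol" => 30 },
  "carol" => { "alice" => 40, "bob" => 30, "carol" => 30 }
}
fcs_gap(points, "alice") # => 15.0
fcs_gap(points, "carol") # => 5.0
```

The gap could then be converted to a grade the same way as in the simple approach, for example by subtracting a weighted absolute gap from the maximum score.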

{| class="wikitable"
|+ Approach Pros and Cons
! Approach
! Pros
! Cons
|-
! Simple approaches
| Simple to implement, scoring is quick, direct feedback based only on student's self-review ability
| Does not take into account teammate assessments, has potential pitfalls at low peer-review scores
|-
! SPARK approach
| Simple to implement, includes SAPA which shows how self-reviews compare to teammate-reviews
| A separate metric must be calculated, stored, and displayed; teammate-reviews will slow the process
|-
! Ranking approach
| A very simple approach, can be implemented similarly to the bidding page
| Loss of some self-reflection without a rubric
|-
! FCS approach
| A slightly improved ranking approach, scores may be more accurate than ranking alone
| Loss of some self-reflection without a rubric
|}

The current implementation uses the simple approach: it finds the difference between the two review scores and subtracts it from the maximum possible grade. For future work, it is recommended that teams combine multiple approaches to gain the advantages of several score types. For example, one could combine a simple approach with the FCS approach by calculating both and using a percentage of each to create the final score. The prototype pattern can simplify the creation of these hybrid approaches.
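A weighted hybrid can be sketched in a few lines of Ruby. This is an illustrative design under assumptions, not an existing Expertiza class. `HybridScorer` and the stand-in scorers are hypothetical. Each scorer is any callable returning a 0..100 score, and copying a configured scorer with `dup` is only a lightweight nod to the prototype pattern mentioned above.

```ruby
# Hypothetical hybrid scorer: each entry pairs a callable that returns a 0..100 score
# for a student with the weight an instructor might assign to it.
HybridScorer = Struct.new(:weighted_scorers) do
  def score(student)
    total_weight = weighted_scorers.sum { |_scorer, weight| weight }
    raise ArgumentError, "weights must sum to a positive number" unless total_weight.positive?

    weighted = weighted_scorers.sum { |scorer, weight| scorer.call(student) * weight }
    weighted / total_weight.to_f
  end
end

# Stand-in scorers with fixed results, for illustration only.
simple_scorer = ->(_student) { 90.0 }
fcs_scorer    = ->(_student) { 70.0 }

hybrid = HybridScorer.new([[simple_scorer, 0.75], [fcs_scorer, 0.25]])
hybrid.score("alice") # => 85.0

# A variant for another assignment starts as a copy of an existing configuration,
# in the spirit of the prototype pattern.
equal_weights = hybrid.dup
equal_weights.weighted_scorers = hybrid.weighted_scorers.map { |scorer, _| [scorer, 1.0] }
equal_weights.score("alice") # => 80.0
```

Dividing by the total weight means instructors do not have to enter weights that sum to exactly 1.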

== Future Work ==

Future groups can build on this project by improving several areas or adding new functionality:

* Refactor the ResponseMap features. They are currently complicated: accessing scores from reviews is difficult and requires several method calls.

* Implement multiple scoring approaches, or a hybrid of them. A small addition to the instructor UI could let instructors select one approach from a list, or several approaches to combine, perhaps with a weight entered for each.

* Improve the response time of the grades page in the instructor view.

== Testing ==

Test cases are as follows:

* Peer-reviews cannot be viewed before a self-review is completed.

* Peer-reviews can be viewed immediately when self-reviews are not required.

* The derived scores can be saved to the database.

* Self-review scores are displayed with peer-review scores.

* Students can see only their own self-review.

== Files Changed ==

* app/views/assignments/edit/_review_strategy.html.erb

* db/migrate/20180429203828_add_columndisplaypeerreviewbeforeselfreviewcomplete.rb

* db/schema.rb

* app/models/self_review_response_map.rb

* app/views/grades/_view_heatgrid.html.erb

* app/views/grades/view_team.html.erb

* app/models/response.rb

* app/models/vm_question_response.rb

== References ==

* [https://github.com/YourSignificantOtter/expertiza Our repo link]

* [https://github.com/expertiza/expertiza/pull/1183 Our pull request link]

* [https://youtu.be/-u5MF9G3ViY Our demonstration video]

* Edward F. Gehringer, "Self-assessment to improve learning and evaluation," ASEE Annual Conference and Exposition, Columbus, OH, June 25–28, 2017.

Latest revision as of 18:15, 4 May 2018
