CSC/ECE 517 Fall 2017/E1792 OSS Visualizations for instructors

This wiki page describes the changes made under E1792 OSS Visualizations for instructors.

About Expertiza

Expertiza is an open source project based on the Ruby on Rails framework. Expertiza allows the instructor to create new assignments and customize new or existing assignments. It also allows the instructor to create a list of topics the students can sign up for. Students can form teams in Expertiza to work on various projects and assignments. Students can also peer review other students' submissions. Instructors can also use Expertiza for interactive views of class performance and reviews.

Introduction

This project aims to improve the visualizations on certain review- and feedback-related pages in the instructor's view of Expertiza. Graphs and tables would help instructors judge the outcomes of reviews and class performance on assignments, which in turn would make grading the reviews easier.

Problem Statement

- Issue 1: The color scale runs from blue to green, which is not intuitive. In addition, the colors change randomly each time the page loads. A scale from red to green would be better.

- Issue 2: Adjacent bars represent the responses in rounds 1 through k. This makes sense only if the same rubric is used in every review round. If the instructor uses the vary-rubric-by-round mechanism, this visualization is no longer meaningful.

- Issue 3: The table on the View Scores page is presorted by teams, but it should also be sortable alphabetically. The cell view is far too long and should be divided into partials.

- Issue 4: An interactive visualization or table that shows how a class performed on selected rubric criteria would be immensely helpful. It would show instructors where they need to focus more attention.

Solutions Implemented

Issue 1

Description: The color scale runs from blue to green, which is not intuitive. In addition, the colors change randomly each time the page loads. A scale from red to green would be better.

Approach: The current color coding runs from blue to green and changes randomly. We will use RGB color coding to make the scale run from red to green, choosing each color from the score's percentage of the scale. For example, a score between 0 and 20% is shown in red, and so on up the scale. This way, the color coding stays appropriate no matter which scoring scale is used.
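A minimal Ruby sketch of the percentage-bucket idea follows. The helper name `score_color`, the use of five 20% buckets, and the exact RGB values are illustrative assumptions, not Expertiza's actual code. Because the color depends only on the score and the bounds of its scale, the same score always gets the same color, and scores at the same percentage of different scales get the same color.

```ruby
# Hypothetical helper (not Expertiza's actual code): maps a score on any
# scale to a red-to-green RGB hex color using five 20% buckets.
BUCKET_COUNT = 5

def score_color(score, min_score, max_score)
  raise ArgumentError, "max_score must be greater than min_score" unless max_score > min_score

  # Normalize the score to a percentage of its scale, clamped to 0..100.
  percent = ((score - min_score) * 100.0 / (max_score - min_score)).clamp(0.0, 100.0)

  # Buckets: [0, 20) -> 0 (red), [20, 40) -> 1, ..., [80, 100] -> 4 (green).
  bucket = [(percent / (100.0 / BUCKET_COUNT)).floor, BUCKET_COUNT - 1].min

  # Interpolate linearly from pure red (first bucket) to pure green (last bucket).
  t = bucket.to_f / (BUCKET_COUNT - 1)
  red = (255 * (1 - t)).round
  green = (255 * t).round
  format("#%02x%02x00", red, green)
end
```

For example, `score_color(4, 0, 5)` and `score_color(80, 0, 100)` both land in the top bucket and return the same green, regardless of the scale used.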

Related screenshot

Issue 2

Description: Adjacent bars represent the responses in rounds 1 through k. This makes sense only if the same rubric is used in every review round. If the instructor uses the vary-rubric-by-round mechanism, this visualization is no longer meaningful.

Approach: The review responses are shown in a single grid that covers all reviews from round 1 to round n. This works when the rubric is the same for every round, but not when the rubric changes from round to round. We plan to create a separate grid for each review round, which works both when the rubric varies and when it stays the same.
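The per-round grid idea can be sketched in Ruby as below. The `Answer` struct and the `grids_by_round` helper are hypothetical stand-ins for Expertiza's response models. The point is only that answers are grouped by round first, so each round's grid is built from that round's own rubric questions and never mixes questions from different rubrics.

```ruby
# Hypothetical minimal model of one answer: its review round,
# the rubric question it answers, and the score given.
Answer = Struct.new(:round, :question, :score)

# Build one grid per review round, ordered by round number:
#   { round => { question => [scores, ...] } }
def grids_by_round(answers)
  answers.group_by(&:round).sort.to_h.transform_values do |round_answers|
    round_answers.group_by(&:question).transform_values { |as| as.map(&:score) }
  end
end
```

Usage: with answers from a round-1 rubric (questions "Q1", "Q2") and a different round-2 rubric ("Clarity"), the result has keys `1` and `2`, and each round's grid contains only that round's questions.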

Issue 3

Description: The table is presorted by teams, but it should also be sortable alphabetically. The cell view is far too long and should be divided into partials.

Approach: The table can be sorted alphabetically either by ordering the select query on the appropriate column, or on the client side by letting the user sort a dynamic table by any column. The overly long view can be addressed with pagination.
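Both ideas can be sketched in plain Ruby. In a Rails controller, the server-side option would typically be an ActiveRecord `order` call on the query; the sketch below instead shows the in-memory equivalent together with simple page slicing. The `sort_rows` and `paginate` helpers and the row hashes are hypothetical, and sorting assumes every value in the chosen column has the same type (mixing, say, strings and `nil` would raise an error).

```ruby
# Hypothetical helper: sort row hashes by a user-chosen column.
# Strings are compared case-insensitively so "alpha" and "Bravo" sort alphabetically.
def sort_rows(rows, column, direction = :asc)
  sorted = rows.sort_by do |row|
    value = row[column]
    value.is_a?(String) ? value.downcase : value
  end
  direction == :desc ? sorted.reverse : sorted
end

# Hypothetical helper: return the rows on a 1-based page, or [] past the end.
def paginate(rows, page, per_page)
  return [] if page < 1
  rows.each_slice(per_page).to_a[page - 1] || []
end
```

Keeping sorting and paging as separate steps means the same pagination works whichever column the instructor sorts by.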

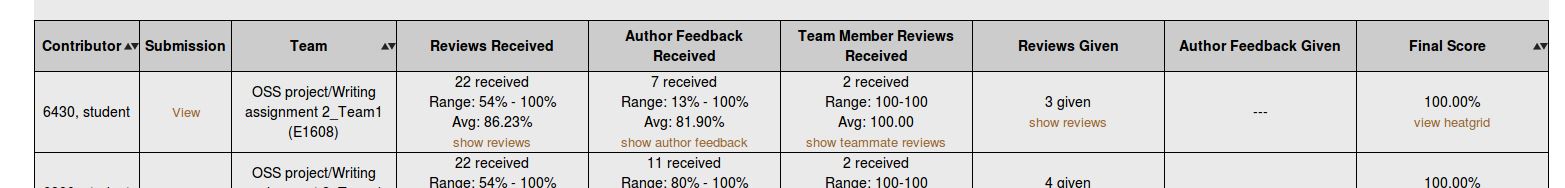

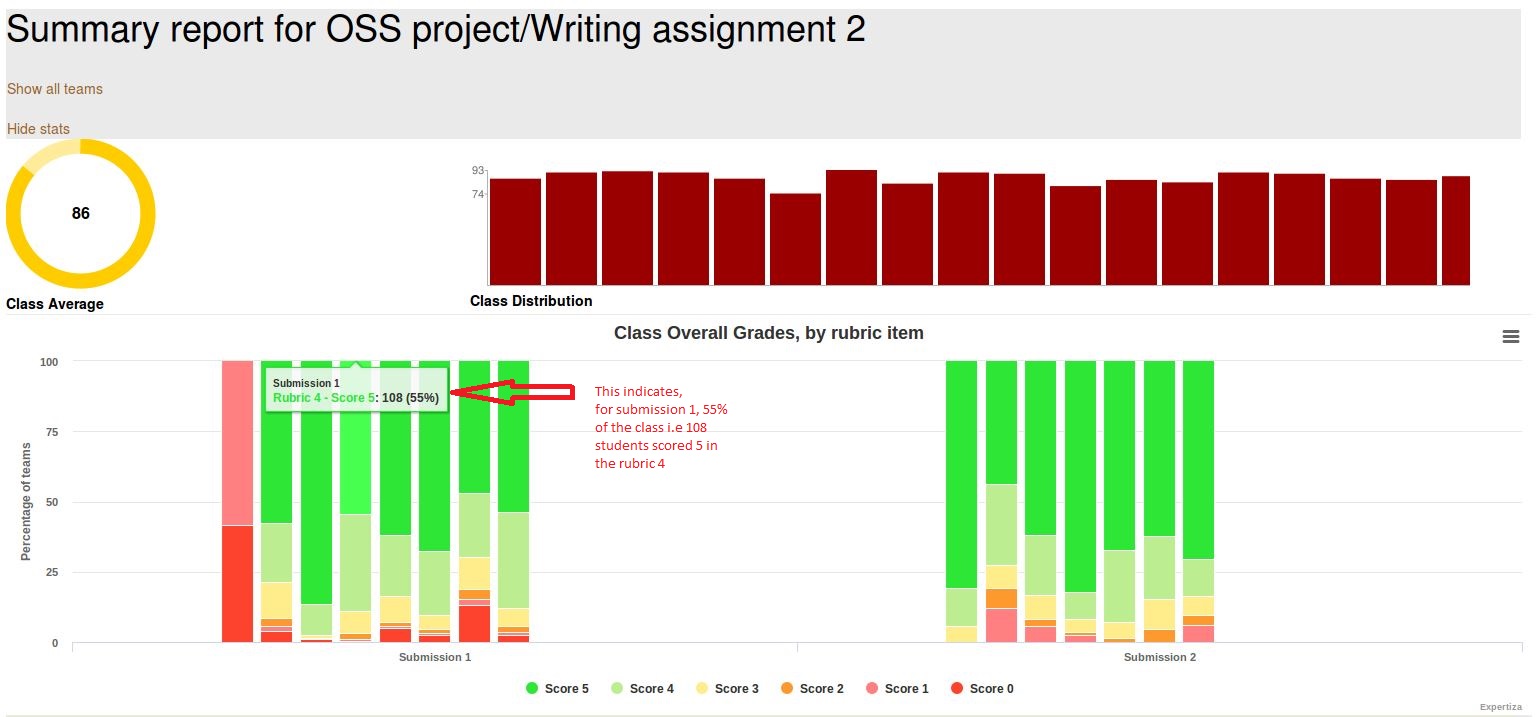

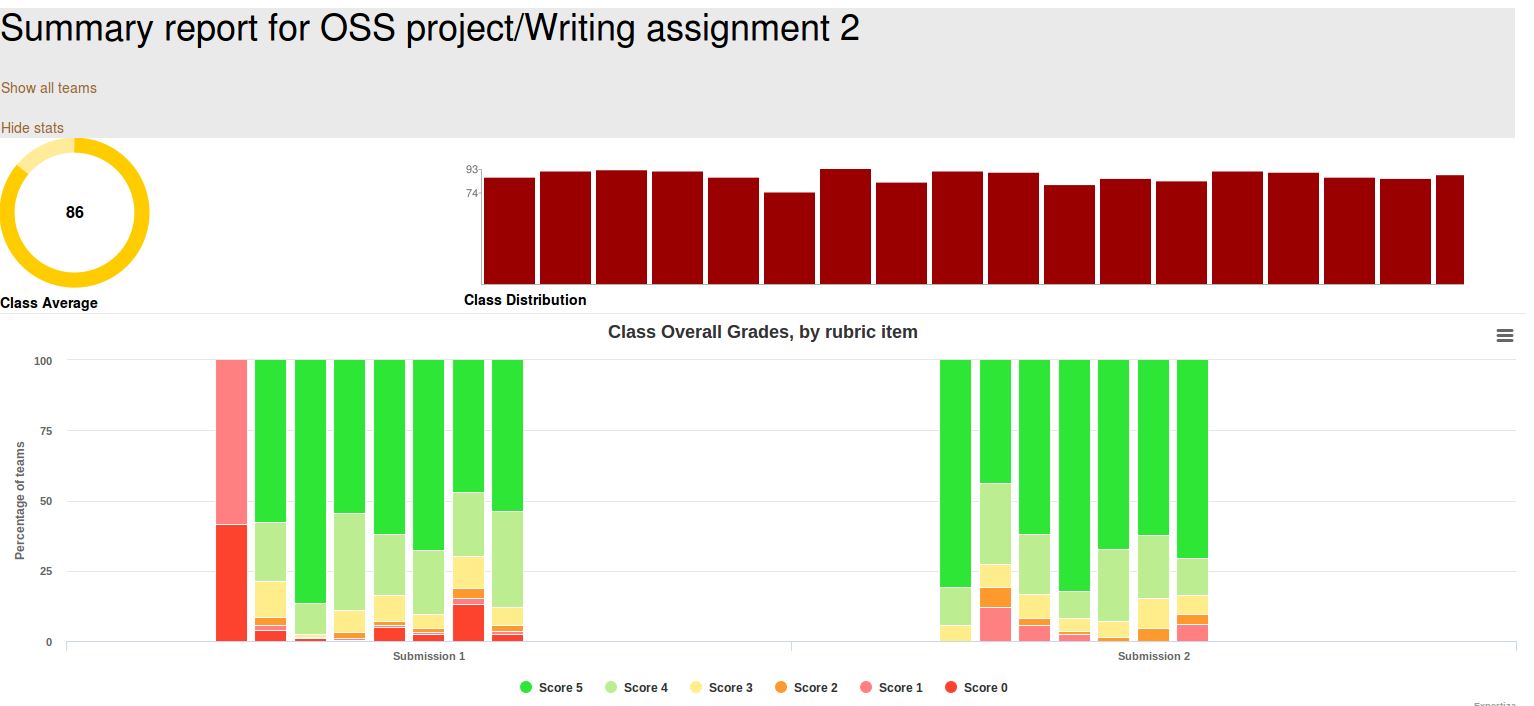

Issue 4

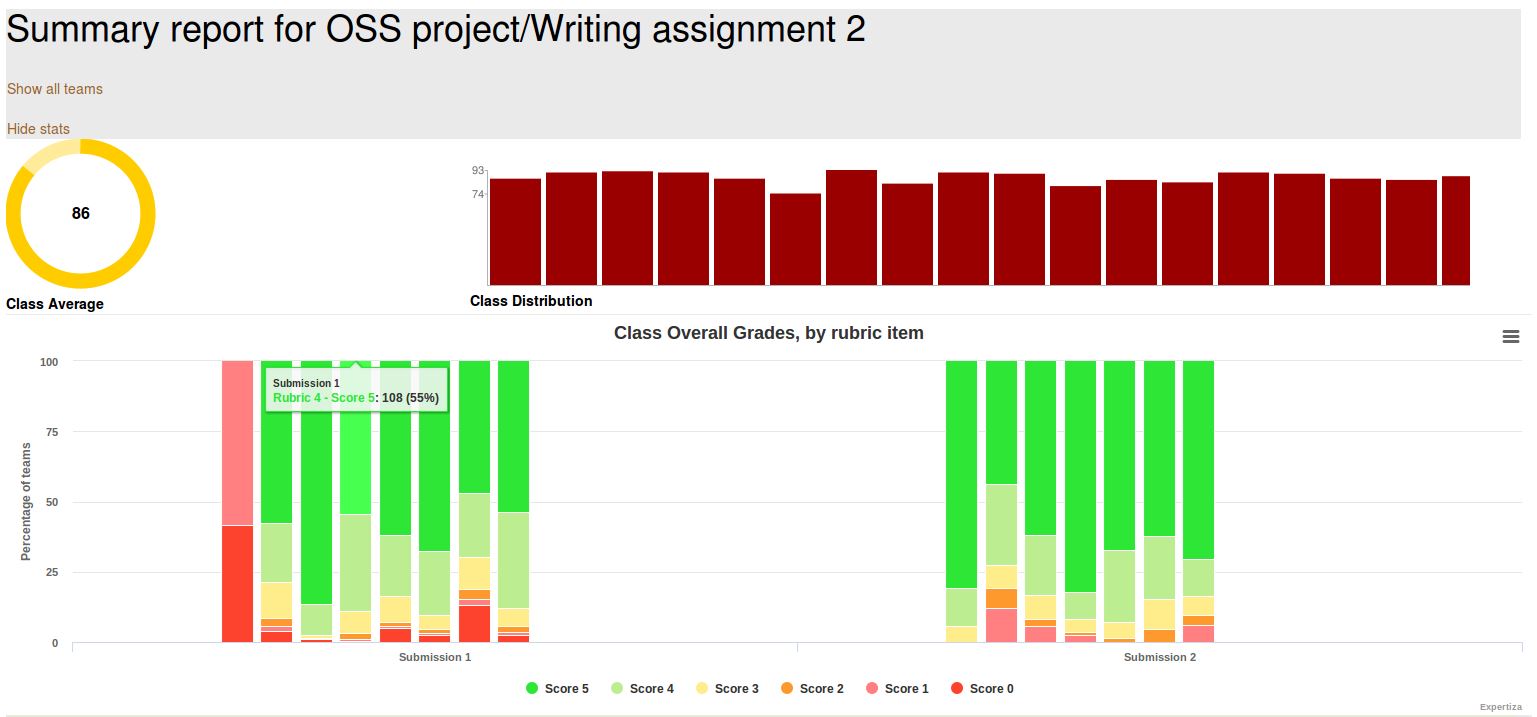

Description: An interactive visualization or table that shows how a class performed on selected rubric criteria would be immensely helpful. It would show the instructor what needs more attention.

Approach: This issue is about visualizing the performance of the entire class on the five rubric criteria. Scores will be color coded for each rubric criterion in each submission. When the instructor hovers over the graph, each score should show the number of students and the percentage of students who received that score on that criterion in that submission.
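The numbers behind each hover tooltip can be computed as sketched below. `score_distribution` and `class_performance` are hypothetical helpers, and the input format (each criterion name mapped to the list of scores the class received on it in one submission) is an assumption about how the data would be gathered.

```ruby
# Hypothetical helper: for one criterion in one submission, count how many
# students received each score and what percentage of the class that is.
#   { score => { count: n, percent: p } }, ordered by score
def score_distribution(scores)
  total = scores.size
  return {} if total.zero?

  counts = scores.group_by(&:itself).transform_values(&:size)
  counts.sort.to_h.transform_values do |count|
    { count: count, percent: (count * 100.0 / total).round(1) }
  end
end

# Hypothetical helper: apply score_distribution to every rubric criterion.
def class_performance(scores_by_criterion)
  scores_by_criterion.transform_values { |scores| score_distribution(scores) }
end
```

For a criterion where five students scored 5, 4, 5, 3 and 5, hovering over the score 5 would show 3 students (60.0%).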

Test Plan

Issue 1: Check the color coding when different scores are given on different scales.

- 1) Log in as an instructor.

- 2) Create a dummy assignment and some dummy reviews for it. Log out.

- 3) Log in as a student. Attempt the assignment. Log out.

- 4) Log in as another student and repeat step 3.

- 5) Log in as either student and complete the review. Log out.

- 6) Log in as the instructor. Go to the Review grades page and check the table. If the color coding ranges from red (for the lowest score) to green (for the highest score), the test passes.

Issue 2: Enter reviews in multiple rounds with different rubrics and check that a separate table/grid is shown for each review round.

- 1) Log in as an instructor.

- 2) Create an assignment with 2 review rounds, and select a different rubric for each round.

- 3) Log in as a student ("A") and submit the assignment. Repeat this for another student ("B").

- 4) Log in as student A and perform a review. Repeat this for student B.

- 5) Resubmit the assignment as students A and B.

- 6) Submit the second-round reviews as students A and B. This time the rubric is different from the previous round.

- 7) Log in as the instructor and view the review visualization. A separate graph should be shown for each review round.

Issue 3: Check that the table can be sorted alphabetically by the appropriate column.

- 1) Log in as an instructor.

- 2) Create a dummy assignment with some teams. Log out.

- 3) Log in as a student, attempt the assignment, and log out.

- 4) Repeat step 3 for all dummy teams.

- 5) Log in as the instructor.

- 6) Go to the View Scores page and check the grade table.

- 7) Click a column header and check that its data is sorted alphabetically. If so, the test passes.

Issue 4: Check the class performance graphs for the rubric criteria.

- 1) Log in as the instructor.

- 2) Click the button to compute the graphs.

- 3) Compare the bar graphs with the individual student scores in each assignment.
