CSC/ECE 517 Fall 2013/oss E815 saa

No edit summary |

No edit summary |

||

| (28 intermediate revisions by the same user not shown) | |||

| Line 5: | Line 5: | ||

=Introduction to WordNet= | =Introduction to WordNet= | ||

Expertiza is an application that aims at 'reusable learning objects through peer review'. It allows students to submit their work and review other's work. Our OSS project aims at refactoring and testing of the wordnet_based_similarity.rb. The file determines how similar concepts are, based on the WordNet database and is used in NLP analysis of reviews. | <b>Expertiza</b><ref>Expertiza: http://wikis.lib.ncsu.edu/index.php/Expertiza</ref> is an application that aims at 'reusable learning objects through peer review'. It allows students to submit their work and review other's work. Our OSS project aims at [http://en.wikipedia.org/wiki/Refactoring refactoring] and testing of the <b>wordnet_based_similarity.rb</b>. The file determines how similar concepts are, based on the WordNet database and is used in <b>[http://en.wikipedia.org/wiki/Natural_language_processing NLP]</b> analysis of reviews. | ||

WordNet is widely used resource for measuring similarity. It is a network of nouns,verbs,adjectives and adverbs which are grouped into synsets(synonymous words), and linked by lexical relations. WordNet is faster to query and involves no additional pre-processing despite having limitations in terms of domains it covers and lack of real world knowledge when compared to Wikipedia.It allows comparisons across different word forms. | WordNet is widely used resource for measuring similarity. It is a network of nouns,verbs,adjectives and adverbs which are grouped into <b>synsets</b>(synonymous words), and linked by [http://en.wikipedia.org/wiki/Lexical lexical] relations. WordNet is faster to query and involves no additional pre-processing despite having limitations in terms of domains it covers and lack of real world knowledge when compared to <b>Wikipedia</b>.It allows comparisons across different word forms. | ||

==WordNet relations-based semantic metric== | ==WordNet relations-based semantic metric== | ||

In order to identify similarity, a relations-based metric is used. Relatedness between two terms v and w, known as match(v, w) is one of those listed below. Each of these different types of matches is given a weight value based on the importance of the match that is found. Hence the matches are assigned values in the range of 0–6. A value of 6 is assigned when the best match (an exact match) occurs and a value of 0 is assigned when a distinct or non-match occurs. | In order to identify similarity, a <b>relations-based metric</b> is used. Relatedness between two terms <b>v</b> and <b>w</b>, known as match(v, w) is one of those listed below. Each of these different types of matches is given a weight value based on the importance of the match that is found. Hence the matches are assigned values in the range of <b>0–6</b>. A value of 6 is assigned when the best match (an exact match) occurs and a value of 0 is assigned when a distinct or non-match occurs.<ref>Lakshmi Ramachandran's Dissertation: http://repository.lib.ncsu.edu/ir/bitstream/1840.16/8813/1/etd.pdf</ref> | ||

The following are the possible matches that can be found : | The following are the possible matches that can be found : | ||

* If v and w are <b>exactly</b> the same. This match is given a weight value of 6. | * If v and w are <b>exactly</b> the same. This match is given a weight value of 6. | ||

* If v and w are | * If v and w are <b>[http://en.wikipedia.org/wiki/Synonyms synonyms]</b>. This match is given a weight of 5. | ||

* If v is a hypernym of w (i.e., v is more generic than the token w) or vice versa. Or v is a hyponym of w (i.e., v is a more specific form of w)or vice-versa. This match is given a weight of 4. | * If v is a <b>[http://en.wikipedia.org/wiki/Hypernym hypernym]</b> of w (i.e., v is more generic than the token w) or vice versa. Or v is a <b>hyponym</b> of w (i.e., v is a more specific form of w)or vice-versa. This match is given a weight of 4. | ||

* If v is a meronym of w (i.e., v is a sub-part of w) or vice versa. Or v is a holonym of w (i.e., v contains w as a sub-part) or vice-versa. For example, “leg” is a meronym of the token “body” and “body” is the holonym of the term “leg”. This type of match is given a weight of 3. | * If v is a <b>[http://en.wikipedia.org/wiki/Meronym meronym]</b> of w (i.e., v is a sub-part of w) or vice versa. Or v is a <b>[http://en.wikipedia.org/wiki/Holonym holonym]</b> of w (i.e., v contains w as a sub-part) or vice-versa. For example, “leg” is a meronym of the token “body” and “body” is the holonym of the term “leg”. This type of match is given a weight of 3. | ||

* If v and w have common parents( excluding generic parents),the normalized distance between the two tokens' sysnets is calculated or identified.(1-distance)gives the similarity between the two tokens. This similarity is then given a weight value between 0-6. If the scale value is greater than 0,a common parents match exists between v and w and is given a weight of 2. | * If v and w have <b>common parents</b>( excluding generic parents),the normalized distance between the two tokens' sysnets is calculated or identified.(1-distance)gives the similarity between the two tokens. This similarity is then given a weight value between 0-6. If the scale value is greater than 0,a common parents match exists between v and w and is given a weight of 2. | ||

*The match between the definition of a word, whose sense is to be determined and a word is already known is identified by Lesk. If a high degree of overlap is seen, then this indicates that the two tokens share the same sense.Context based similarity across token is determined by using overlapping definitions as a metric. We also find overlaps across examples of the words.IF two tokens have overlapping definitions or examples, then the match gets a weight value of 1. | *The match between the definition of a word, whose sense is to be determined and a word is already known is identified by <b>[http://en.wikipedia.org/wiki/Mike_Lesk Lesk]</b>. If a high degree of overlap is seen, then this indicates that the two tokens share the same sense.Context based similarity across token is determined by using overlapping definitions as a metric. We also find overlaps across examples of the words.IF two tokens have overlapping definitions or examples, then the match gets a weight value of 1. | ||

* If v and w contain distinct tokens or phrases .This distinct match is given a weight of 0. | * If v and w contain distinct tokens or phrases .This distinct match is given a weight of 0. | ||

A combination of token and its <b>[http://en.wikipedia.org/wiki/Part_of_speech POS]</b> information is used for comparison with another token.Such a match ensures that the right type of words are being compared as the POS of tokens are also taken into consideration. Appropriate set of synonyms,hypernyms etc are extracted with the help of the POS information for a particular token.Thus the meaning of the generated synonyms differs with the POS information of the token.Hence this metric determines relatedness using the POS values of the compared tokens. | |||

A combination of token and its POS information is used for comparison with another token.Such a match ensures that the right type of words are being compared as the POS of tokens are also taken into consideration. Appropriate set of synonyms,hypernyms etc are extracted with the help of the POS information for a particular token.Thus the meaning of the generated synonyms differs with the POS information of the token.Hence this metric determines relatedness using the POS values of the compared tokens. | |||

=Refactoring wordnet_based_similarity.rb= | =Refactoring wordnet_based_similarity.rb= | ||

The methods in this file are long and complex, and contain a lot of duplicated code. Refactoring of the code ensures that code is written in an optimized manner and follows the DRY principle.Since these methods are important,they need to be written transparently and tested thoroughly. | The methods in this file are long and complex, and contain a lot of duplicated code. Refactoring of the code ensures that code is written in an optimized manner and follows the <b>[http://en.wikipedia.org/wiki/Don%27t_repeat_yourself DRY]</b> principle.Since these methods are important,they need to be written transparently and tested thoroughly. | ||

==Case 1:== | ==Case 1:== | ||

In this case we have removed redundancy by converting a code that was repeating 4 times to a single function call. | In this case we have removed <b>redundancy</b> by converting a code that was repeating 4 times to a single function call. | ||

===Before Refactoring=== | ===<b>Before Refactoring</b>=== | ||

Each of the arrays for synonyms, hypernyms, hyponyms and antonyms were declared and then the code checked for each of these conditions and resulted in a changed array that was declared initially. | Each of the arrays for synonyms, hypernyms, hyponyms and antonyms were declared and then the code checked for each of these conditions and resulted in a changed array that was declared initially. | ||

| Line 42: | Line 42: | ||

The 4 functions are given below, other than the change in the parameter, they do the same functionality. | The 4 functions are given below, other than the change in the parameter, they do the same functionality. | ||

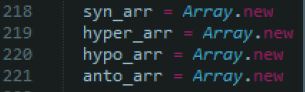

1) Synonyms | 1) <b>Synonyms</b> | ||

[[File:synonyms_func.jpg|alt=synonyms_func]] | [[File:synonyms_func.jpg|alt=synonyms_func]] | ||

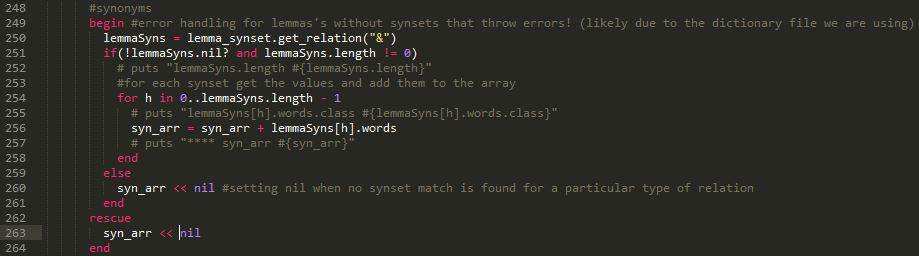

2) Hypernyms | 2) <b>Hypernyms</b> | ||

[[File:hypernyms_func.jpg|alt=hypernyms_func]] | [[File:hypernyms_func.jpg|alt=hypernyms_func]] | ||

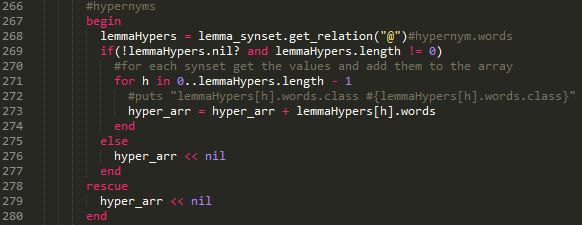

3) Hyponyms | 3) <b>Hyponyms</b> | ||

[[File:hyponyms_func.jpg|alt=hyponyms_func]] | [[File:hyponyms_func.jpg|alt=hyponyms_func]] | ||

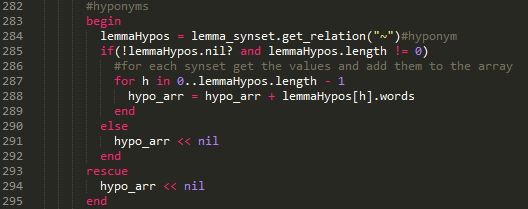

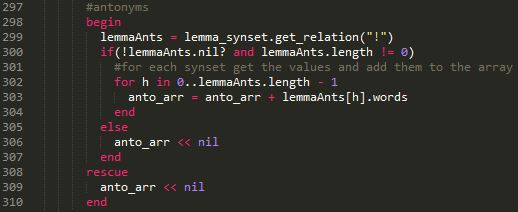

4) Antonyms | 4) <b>Antonyms</b> | ||

[[File:antonyms_func.jpg|alt=antonyms_func]] | [[File:antonyms_func.jpg|alt=antonyms_func]] | ||

===After Refactoring=== | ===<b>After Refactoring</b>=== | ||

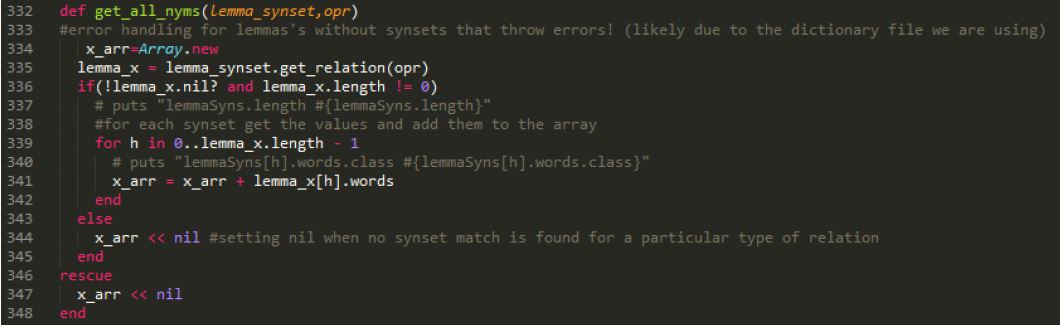

We implemented the below code which resulted in eliminating the redundant occurrences of the same code over and again. | We implemented the below code which resulted in eliminating the redundant occurrences of the same code over and again. | ||

[[File:get_all_nyms_func.jpg|alt=get_all_nyms_func]] | [[File:get_all_nyms_func.jpg|alt=get_all_nyms_func]] | ||

In the above function <b>‘get_all_nyms’</b> we use each of the arrays(syn_arr, hyper_arr, hypo_arr, anto_arr) locally within the function, checking within the function with respect to a particular parameter and returning the value to the respected array(1 of the 4). | |||

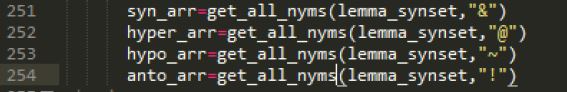

Note that the call to each of the arrays must be within the <b>‘for’</b> loop which is in-turn within an <b>‘if’</b> condition. The function calls are as follows: | |||

[[File:nyms_calls.jpg|alt=nyms_calls]] | |||

The <b>‘opr’</b> variable in the <b>‘get_all_nyms’</b> method holds values <b>“&”,”@”,”~”,”!”</b>, depending on which operation is getting executed. | |||

This refactor has helped us bring 64 lines of code down to 15 lines. | |||

==Case 2:== | ==Case 2:== | ||

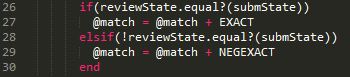

In this case we were able to prevent the same code from being written twice and have written it in form of a function. | |||

===<b>Before Refactoring</b>=== | |||

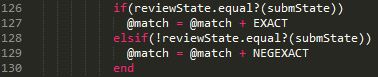

Previously, the two occurrences of the same code were in the below manner: | |||

[[File:redundant1.jpg|alt=redundant1]] | |||

and | |||

[[File:redundant2.jpg|alt=redundant2]] | |||

===<b>After Refactoring</b>=== | |||

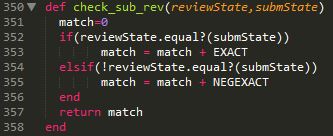

After <b>refactoring</b> this code we defined a function as follows: | |||

[[File:check_sub_rev_func.jpg|alt=check_sub_rev_func]] | |||

And the function call is as follows(the same function call is even at line 138 of the file): | |||

[[File:check_sub_rev_call.jpg|alt=check_sub_rev_call]] | |||

This refactor reduced the <b>code duplication</b>. | |||

==Case 3:== | ==Case 3:== | ||

Unlike the previous two cases, in case-3 we are giving an instance when although the code looks quite redundant, refactoring it might result in a less efficient code than it is in its present state. | |||

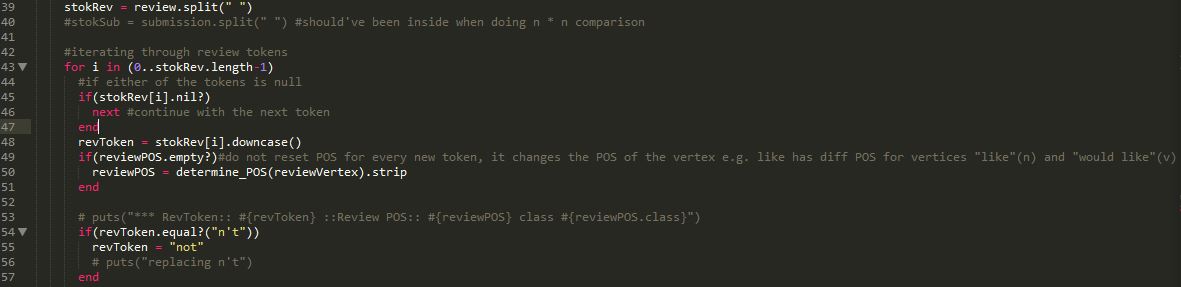

The code as present looks as follows: | |||

[[File:stokRev.jpg|alt=stokRev]] | |||

and | |||

[[File:stokSub.jpg|alt=stokSub]] | |||

Although the code looks quite similar, refactoring them into a single method is not advisable since the first set of statements is under a for loop (loop variable ‘i’) and the second set of statements are under a nested for loop(loop variable ‘j’) under the earlier for loop. Each of the pieces of code further use the iterator within them. Also, the variable within these pieces of code are further accessed for other functionality before that particular iteration has been completed. | |||

Therefore, even if we get a refactored code which converts these to a single function, the function will have to be called as many times as the iteration which results in inefficiency. | |||

=Testing= | |||

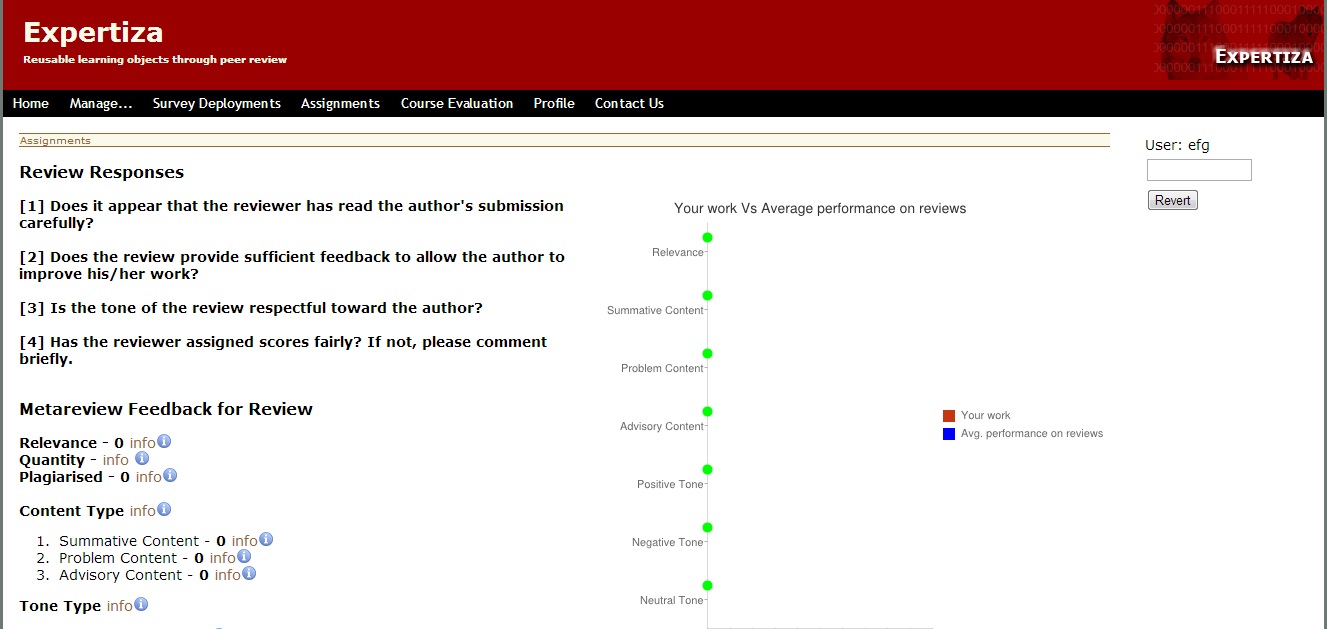

We ran the Expertiza system before and after refactoring the code on the same data.The automated meta review feature is invoked when a review is submitted which in turn renders and a view. Below is a screenshot regarding the same which will be helpful in illustrating the same. | |||

[[File:Testing.jpg|alt=testing]] | |||

=Future Work= | |||

The file wordnet_based_similarity.rb plays an important role in determining similarity between concepts by using tokens and their POS information.The future work after refactoring would be to still follow <b>DRY principle</b> while writing the code and keep the methods as <b>transparent</b> as possible similar to how refactoring has been done now. | |||

=Appendix= | |||

This appendix focuses on the setup issues that we came across while pursuing our <b>OSS</b>project. | |||

Our refactored code is functional on the following link: <b>http://152.46.19.59:3000</b> | |||

Also, it is available on the <b>repo: https://github.com/apek0729/expertiza</b> | |||

This section of the document is a walk-through our process of getting the ‘Expertiza’ project up and running: | |||

1) <b>Forked</b> the repository from <b>https://github.com/expertiza/expertiza</b>. | |||

2) We cloned the above repository and created a new branch. | |||

3) Opened the directory in <b>RubyMine</b> and ran <b>‘bundle install’</b> which installed the required gems. | |||

4) Created a <b>database.yml</b> file and changed add port: to <b>3307</b>. | |||

5) Ran <b>rake db:create:all</b> | |||

6) Downloaded the <b>test_db.sql</b> and opened it through MySQL command prompt through the pg_development database. | |||

7) Logged in using the credentials user:admin password:admin. | |||

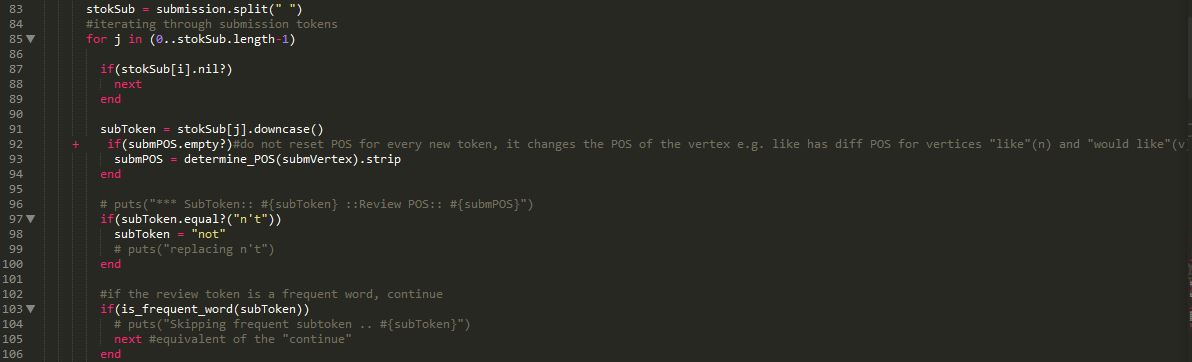

8) We went through our way debugging the source code wherever necessary until we could form reviews and metareviews (A summary of these errors | |||

can be found after the setup steps). | |||

9) Eventually, we changed our database to <b>scrubbed_db.sql</b>. This change helped us a lot since it has already existing assignments, rubrics and questionnaires. | |||

10) The new credentials are user:admin and password:password. | |||

==Debugging and Setting up== | |||

1) We added a lot of methods to the <b>routes.rb</b> file | |||

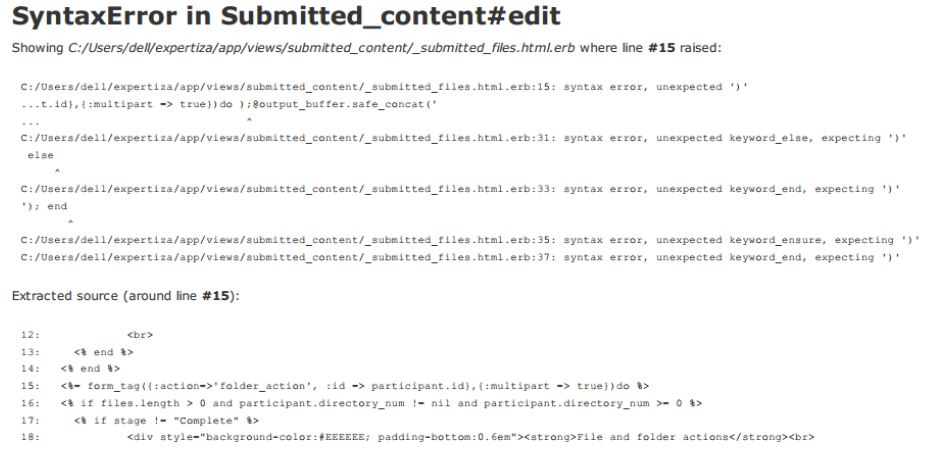

2) Error while trying to access the 'Your Work' link of a user: | |||

------ | |||

------ | |||

[[File:submitted_content.jpg|alt=submitted_content]] | |||

------ | |||

------ | |||

In the above error we removed the “=” in line 15 and got it working. | |||

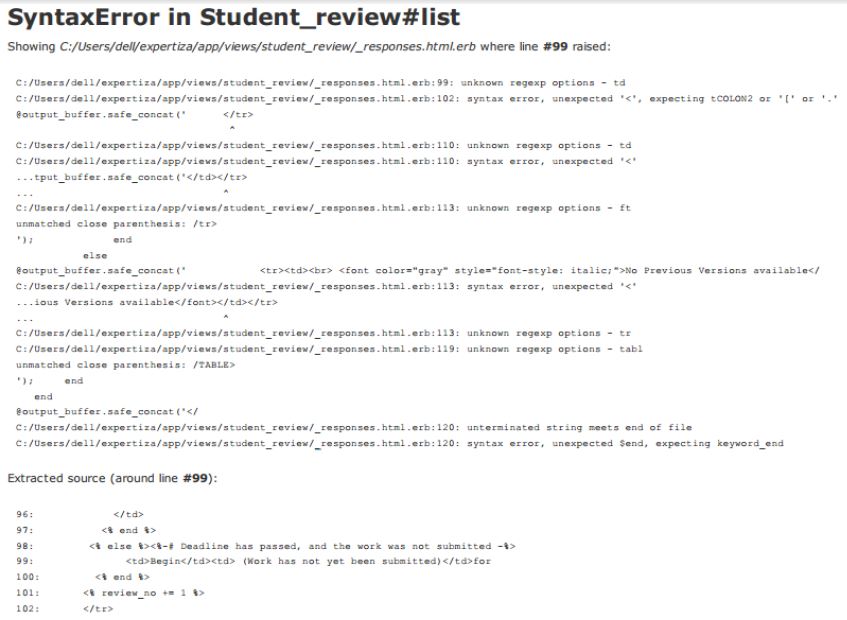

3) Error while trying to access 'Other’s Work' within a user: | |||

------ | |||

------ | |||

[[File:student_review.jpg|alt=student_review]] | |||

------ | |||

------ | |||

We could get past this error by removing the code after ‘<% else %>’ on line 98. | |||

4) | |||

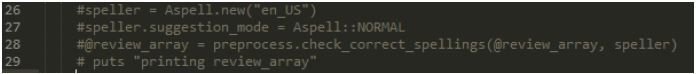

a) While trying to save review and metareview: | |||

------ | |||

------ | |||

[[File:autocontroller.jpg|alt=autocontroller]] | |||

------ | |||

------ | |||

We could get past this error by commenting out the following lines from automated_metreview.rb: | |||

------ | |||

------ | |||

[[File:autocontrollercontrolled.jpg|alt=autocontrollercontrolled]] | |||

------ | |||

------ | |||

b) Undefined method <b>link_to_remote_redbox</b>: | |||

To work our way around this method we changed all the 'link_to_remote_redbox' to 'link_to' in the <b>list.html.erb</b> file in the view of automated_metareview. | |||

=References= | |||

<references/> | |||

Latest revision as of 01:29, 31 October 2013

Refactoring and Testing of wordnet_based_similarity.rb

Introduction to WordNet

Expertiza<ref>Expertiza: http://wikis.lib.ncsu.edu/index.php/Expertiza</ref> is an application that aims at 'reusable learning objects through peer review'. It allows students to submit their work and review others' work. Our OSS project aims at refactoring and testing wordnet_based_similarity.rb. This file determines how similar two concepts are, based on the WordNet database, and is used in the NLP (natural language processing) analysis of reviews.

WordNet is a widely used resource for measuring similarity. It is a network of nouns, verbs, adjectives and adverbs that are grouped into synsets (sets of synonymous words) and linked by lexical relations. Compared with Wikipedia, WordNet is faster to query and requires no additional pre-processing, although it covers fewer domains and lacks real-world knowledge. It also allows comparisons across different word forms.

WordNet relations-based semantic metric

To identify similarity, a relations-based metric is used. The relatedness between two terms v and w, denoted match(v, w), is one of the match types listed below. Each match type is given a weight according to the importance of the match found, so matches take values in the range 0–6: a value of 6 is assigned to the best match (an exact match), and a value of 0 to a distinct match (no match).<ref>Lakshmi Ramachandran's Dissertation: http://repository.lib.ncsu.edu/ir/bitstream/1840.16/8813/1/etd.pdf</ref>

The possible matches are:

- v and w are exactly the same. This match is given a weight of 6.

- v and w are synonyms. This match is given a weight of 5.

- v is a hypernym of w (i.e., v is more generic than w) or vice versa, or v is a hyponym of w (i.e., v is a more specific form of w) or vice versa. This match is given a weight of 4.

- v is a meronym of w (i.e., v is a sub-part of w) or vice versa, or v is a holonym of w (i.e., v contains w as a sub-part) or vice versa. For example, “leg” is a meronym of “body”, and “body” is a holonym of “leg”. This match is given a weight of 3.

- v and w have common parents (excluding generic parents). The normalized distance between the two tokens' synsets is calculated, and (1 - distance) gives the similarity between the two tokens; this similarity is then scaled to a value between 0 and 6. If the scaled value is greater than 0, a common-parents match exists between v and w, and it is given a weight of 2.

- Following Lesk, the definition of a word whose sense is to be determined is compared with the definition of a word whose sense is already known; a high degree of overlap indicates that the two tokens share the same sense. Context-based similarity between tokens is therefore measured by the overlap of their definitions, and overlaps across the words' usage examples are also considered. If two tokens have overlapping definitions or examples, the match is given a weight of 1.

- v and w are distinct tokens or phrases. This distinct match is given a weight of 0.
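The ordering of the match types above can be sketched in Ruby. This is a minimal illustration, not the code in wordnet_based_similarity.rb: the toy LEXICON hash stands in for WordNet, its entries are invented, and match_weight, related? and the other helper names are hypothetical. Two simplifications are assumed: a shared non-generic hypernym replaces the normalized-distance test for common parents, and the Lesk-style check only looks for any shared content word in the two definitions.

```ruby
# Weights of the relations-based metric: 6 = exact match, 0 = distinct.
WEIGHTS = {
  exact: 6, synonym: 5, hypernym_hyponym: 4, meronym_holonym: 3,
  common_parents: 2, definition_overlap: 1, distinct: 0
}.freeze

GENERIC_PARENTS = %w[object entity].freeze # parents too generic to count
STOPWORDS = %w[a an the of with that into for or on to].freeze

# Builds a toy lexicon entry; relations default to empty lists.
def lex(gloss, **relations)
  { syn: [], hyper: [], hypo: [], mero: [], holo: [] }.merge(relations).merge(gloss: gloss)
end

# Hypothetical stand-in for WordNet: invented entries, for illustration only.
LEXICON = {
  "car"     => lex("a motor vehicle with four wheels",
                   syn: %w[automobile], hyper: %w[vehicle], hypo: %w[sedan]),
  "truck"   => lex("a large motor vehicle for carrying goods", hyper: %w[vehicle]),
  "vehicle" => lex("a conveyance that transports people or goods",
                   hyper: %w[object], hypo: %w[car truck]),
  "engine"  => lex("a motor that converts energy into motion", hyper: %w[machine]),
  "banana"  => lex("an elongated yellow fruit", hyper: %w[fruit]),
  "leg"     => lex("a limb used for walking", hyper: %w[limb], holo: %w[body]),
  "body"    => lex("the physical structure of a person", hyper: %w[object], mero: %w[leg])
}.freeze

EMPTY_ENTRY = lex("").freeze

def entry(word)
  LEXICON.fetch(word, EMPTY_ENTRY)
end

# True if, for any of the given relations, w is listed under v or v under w.
def related?(v, w, *relations)
  relations.any? { |r| entry(v)[r].include?(w) || entry(w)[r].include?(v) }
end

# Content words of a word's definition (used for the Lesk-style overlap check).
def gloss_words(word)
  entry(word)[:gloss].downcase.split - STOPWORDS
end

# Returns the weight of the strongest relation found between tokens v and w.
def match_weight(v, w)
  return WEIGHTS[:exact] if v == w
  return WEIGHTS[:synonym] if related?(v, w, :syn)
  return WEIGHTS[:hypernym_hyponym] if related?(v, w, :hyper, :hypo)
  return WEIGHTS[:meronym_holonym] if related?(v, w, :mero, :holo)

  # Simplified common-parents test: a shared, non-generic hypernym.
  shared_parents = (entry(v)[:hyper] & entry(w)[:hyper]) - GENERIC_PARENTS
  return WEIGHTS[:common_parents] unless shared_parents.empty?
  return WEIGHTS[:definition_overlap] unless (gloss_words(v) & gloss_words(w)).empty?

  WEIGHTS[:distinct]
end

pairs = [%w[car car], %w[car automobile], %w[vehicle car], %w[leg body],
         %w[car truck], %w[car engine], %w[car banana]]
pairs.each { |v, w| puts "match(#{v}, #{w}) = #{match_weight(v, w)}" }
# prints the weights 6, 5, 4, 3, 2, 1 and 0, in that order
```

Because the checks run from the strongest relation to the weakest, each pair receives the highest weight that applies: “car” and “truck” share the parent “vehicle” and score 2, even though their definitions also overlap.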

Each token is compared together with its POS (part-of-speech) information. This ensures that the right types of words are compared, since the POS of both tokens is taken into account. The POS of a token is used to extract the appropriate set of synonyms, hypernyms and so on, so the generated synonyms can differ in meaning depending on the token's POS. Hence this metric determines relatedness using the POS values of the compared tokens.
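To show why POS information matters, here is a small hypothetical Ruby sketch in which synonyms are looked up by a (token, POS) pair. The SYNONYMS_BY_POS table and the synonyms and pos_synonyms? helpers are assumptions for illustration; the entries are invented, not taken from WordNet.

```ruby
# Hypothetical synonym table keyed by [token, part of speech].
SYNONYMS_BY_POS = {
  ["book", :noun]       => %w[volume publication],
  ["book", :verb]       => %w[reserve schedule],
  ["light", :noun]      => %w[illumination lamp],
  ["light", :adjective] => %w[weightless airy]
}.freeze

# Synonyms depend on the POS: the same token can yield different sets.
def synonyms(token, pos)
  SYNONYMS_BY_POS.fetch([token, pos], [])
end

# Two tokens are treated as synonyms only when their POS tags agree and one
# appears in the other's POS-specific synonym list.
def pos_synonyms?(v, v_pos, w, w_pos)
  return false unless v_pos == w_pos

  synonyms(v, v_pos).include?(w) || synonyms(w, w_pos).include?(v)
end

p synonyms("book", :noun)                        # => ["volume", "publication"]
p pos_synonyms?("book", :verb, "reserve", :verb) # => true
p pos_synonyms?("book", :noun, "reserve", :noun) # => false
```

“book” as a verb matches “reserve”, while “book” as a noun does not, which is the effect the POS-aware comparison is meant to capture.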

Refactoring wordnet_based_similarity.rb

The methods in this file are long and complex and contain a lot of duplicated code. Refactoring restructures the code without changing its behavior, removing this duplication in line with the DRY (Don't Repeat Yourself) principle. Since these methods are central to the analysis of reviews, they need to be written transparently and tested thoroughly.

Case 1:

In this case we removed redundancy by replacing code that was repeated four times with a single function call.

Before Refactoring

Separate arrays were declared for synonyms, hypernyms, hyponyms and antonyms, and for each of these relations a separate block of code checked the corresponding condition and filled the array that had been declared initially.

The four functions are given below; apart from the change in one parameter, they are functionally identical.

1) Synonyms

2) Hypernyms

3) Hyponyms

4) Antonyms

After Refactoring

We implemented the following function, which eliminates the repeated occurrences of the same code.

In the function ‘get_all_nyms’, each of the arrays (syn_arr, hyper_arr, hypo_arr, anto_arr) is handled locally: the function checks the relation selected by a single parameter and returns the result to the corresponding array (one of the four).

Note that each call that fills one of the arrays must be made inside the ‘for’ loop, which is in turn inside an ‘if’ condition. The function calls are as follows:

The ‘opr’ variable in the ‘get_all_nyms’ method holds one of the values “&”, “@”, “~” or “!”, depending on which operation is being executed. This refactoring reduced 64 lines of code to 15.
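The idea behind ‘get_all_nyms’ can be sketched in Ruby as one method that takes the relation symbol as a parameter, instead of four copies that differ only in that symbol. This is a minimal sketch, not the project's code: the Synset struct, its pointers layout (pairs of a symbol and a list of words) and the all_nyms helper are hypothetical. The four symbols are the ones listed above for ‘opr’; in WordNet's own data files they mark the “similar to”, hypernym, hyponym and antonym pointers.

```ruby
# Hypothetical synset: its words plus [pointer_symbol, target_words] pairs.
Synset = Struct.new(:words, :pointers)

# The four 'opr' values described in the text and the array each one fills.
RELATIONS = { "&" => :synonyms, "@" => :hypernyms, "~" => :hyponyms, "!" => :antonyms }.freeze

# Generic replacement for the four near-identical methods: collects the
# target words of every pointer whose symbol equals opr, without duplicates.
def get_all_nyms(synsets, opr)
  synsets.flat_map do |synset|
    synset.pointers.select { |symbol, _words| symbol == opr }
                   .flat_map { |_symbol, words| words }
  end.uniq
end

# One call per relation replaces the four separate blocks.
def all_nyms(synsets)
  RELATIONS.each_with_object({}) do |(opr, name), result|
    result[name] = get_all_nyms(synsets, opr)
  end
end

good = [
  Synset.new(%w[good], [["&", %w[fine satisfactory]], ["!", %w[bad]]]),
  Synset.new(%w[good], [["&", %w[fine]], ["!", %w[evil]]])
]
p get_all_nyms(good, "&") # => ["fine", "satisfactory"]
p get_all_nyms(good, "!") # => ["bad", "evil"]
p all_nyms(good)
```

Adding a fifth relation would then only mean adding one entry to RELATIONS rather than another copy of the loop.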

Case 2:

In this case the same code had been written twice; we extracted it into a single function.

Before Refactoring

Previously, the two occurrences of the same code looked as follows:

and

After Refactoring

After refactoring, we defined the following function:

The function is called as follows (the same call also appears at line 138 of the file):

This refactor reduced the code duplication.
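Case 2 is an instance of the Extract Method refactoring. The Ruby sketch below shows only its general shape: the duplicated block is assumed, purely for illustration, to turn a text into its content words, and the names content_tokens, shared_terms and STOP_WORDS are hypothetical. The actual duplicated code in wordnet_based_similarity.rb is not reproduced here.

```ruby
STOP_WORDS = %w[the a an is of and].freeze

# Extracted helper: logic that used to be written out twice now lives in one
# place. In this sketch it turns a text into its lowercase content words.
def content_tokens(text)
  text.downcase.scan(/[a-z']+/) - STOP_WORDS
end

# Both former copies (one for the submission, one for the review) become calls.
def shared_terms(submission, review)
  content_tokens(submission) & content_tokens(review)
end

p shared_terms("The design is modular.", "A modular design, well tested")
# => ["design", "modular"]
```

A fix to the extracted helper now applies to both call sites at once, which is the main benefit of removing the duplicate.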

Case 3:

Unlike the previous two cases, Case 3 is an instance where the code looks redundant, but refactoring it might produce less efficient code than its present form. The code currently looks as follows:

and

Although the two pieces of code look very similar, merging them into a single method is not advisable: the first set of statements is inside a for loop (loop variable ‘i’), and the second is inside another for loop (loop variable ‘j’) nested within the first. Each piece of code uses its own loop variable, and the variables computed in these pieces are accessed for other purposes before the current iteration completes.

Therefore, even if the two pieces were converted into a single function, that function would have to be called on every iteration, which would make the code less efficient.

Testing

We ran the Expertiza system on the same data before and after refactoring the code. The automated metareview feature is invoked when a review is submitted, and it in turn renders a view. The screenshot below illustrates this.
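The before-and-after comparison above was done by running the system. As a possible complement, the same idea can be automated as a characterization test: run the old and new implementations on identical inputs and report any input whose output changed. In this hypothetical Ruby sketch, old_nyms and new_nyms are simplified stand-ins for pre- and post-refactoring code (only two relations are shown), and regressions is an illustrative helper, not part of Expertiza.

```ruby
# Returns the inputs on which the two implementations disagree; an empty
# array means no behavioral change was observed on these inputs.
def regressions(inputs, old_impl, new_impl)
  inputs.reject { |args| old_impl.call(*args) == new_impl.call(*args) }
end

# Stand-in for the old style: one hand-written branch per relation.
def old_nyms(pointers, relation)
  result = []
  case relation
  when :hypernyms
    pointers.each { |symbol, words| result.concat(words) if symbol == "@" }
  when :antonyms
    pointers.each { |symbol, words| result.concat(words) if symbol == "!" }
  end
  result.uniq
end

# Stand-in for the refactored style: one parameterized lookup.
SYMBOL_FOR = { hypernyms: "@", antonyms: "!" }.freeze

def new_nyms(pointers, relation)
  pointers.select { |symbol, _words| symbol == SYMBOL_FOR[relation] }
          .flat_map { |_symbol, words| words }
          .uniq
end

pointers = [["@", %w[vehicle]], ["!", %w[bad]], ["@", %w[vehicle conveyance]]]
inputs = [[pointers, :hypernyms], [pointers, :antonyms]]

p regressions(inputs, method(:old_nyms), method(:new_nyms)) # => []
```

Such a check only covers the inputs it is given, so it supplements rather than replaces running the full system on real review data.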

Future Work

The file wordnet_based_similarity.rb plays an important role in determining the similarity between concepts using tokens and their POS information. Future work should continue to follow the DRY principle and keep the methods as transparent as they are after this refactoring.

Appendix

This appendix describes the setup issues we ran into while working on our OSS project.

Our refactored code is running at the following link: http://152.46.19.59:3000

It is also available in the repository: https://github.com/apek0729/expertiza

The following is a walk-through of our process of getting the ‘Expertiza’ project up and running:

1) Forked the repository from https://github.com/expertiza/expertiza.

2) Cloned the forked repository and created a new branch.

3) Opened the directory in RubyMine and ran ‘bundle install’, which installed the required gems.

4) Created a database.yml file and set its port: entry to 3307.

5) Ran rake db:create:all

6) Downloaded test_db.sql and loaded it into the pg_development database from the MySQL command prompt.

7) Logged in using the credentials user:admin password:admin.

8) We debugged the source code wherever necessary until we could create reviews and metareviews (a summary of these errors follows the setup steps).

9) Eventually, we switched our database to scrubbed_db.sql. This helped a lot, since it already contains assignments, rubrics and questionnaires.

10) The new credentials are user:admin and password:password.

Debugging and Setting up

1) We added a lot of methods to the routes.rb file

2) Error while trying to access a user's 'Your Work' link:

We fixed this error by removing the “=” on line 15.

3) Error while trying to access 'Other’s Work' as a user:

We got past this error by removing the code after ‘<% else %>’ on line 98.

4)

a) Error while trying to save a review and a metareview:

We got past this error by commenting out the following lines in automated_metreview.rb:

b) Undefined method link_to_remote_redbox:

To work around this method, we changed every 'link_to_remote_redbox' to 'link_to' in the list.html.erb view of automated_metareview.

References

<references/>