CSC/ECE 517 Fall 2013/ch1 1w31 vm


TDD vs. AMDD

- TDD shortens the programming feedback loop, whereas AMDD shortens the modeling feedback loop.

- TDD provides detailed specification (tests), whereas AMDD is better for thinking through bigger issues.

- TDD promotes the development of high-quality code, whereas AMDD promotes high-quality communication with your stakeholders and other developers.

- TDD provides concrete evidence that your software works, whereas AMDD supports your team, including stakeholders, in working toward a common understanding.

- TDD “speaks” to programmers, whereas AMDD speaks to business analysts, stakeholders, and data professionals.

- TDD provides very fine-grained concrete feedback on the order of minutes, whereas AMDD enables verbal feedback on the order of minutes (concrete feedback requires developers to follow the practice Prove It With Code and thus becomes dependent on non-AM techniques).

- TDD helps to ensure that your design is clean by focusing on the creation of operations that are callable and testable, whereas AMDD provides an opportunity to think through larger design/architectural issues before you code.

- TDD is non-visually oriented, whereas AMDD is visually oriented.

- Both techniques are new to traditional developers and therefore may be threatening to them.

- Both techniques support evolutionary development.

Revision as of 11:19, 7 October 2013

Test Driven Development (Under Construction)

Test-driven development (TDD) is a software development process that relies on the repetition of a very short development cycle. First the developer writes an (initially failing) automated test case that defines a desired improvement or new function. Then the developer produces the minimum amount of code to pass that test, and finally refactors the new code to acceptable standards. Kent Beck, who is credited with having developed or 'rediscovered' the technique, stated in 2003 that TDD encourages simple designs and inspires confidence. Test-driven development is related to the test-first programming concepts of Extreme Programming, begun in 1999, but has more recently generated more general interest in its own right. Programmers also apply the concept to improving and debugging legacy code developed with older techniques.

Rules of Thumb

- Design with organization

- Write your own tests

- Rapid Response

- High Cohesion

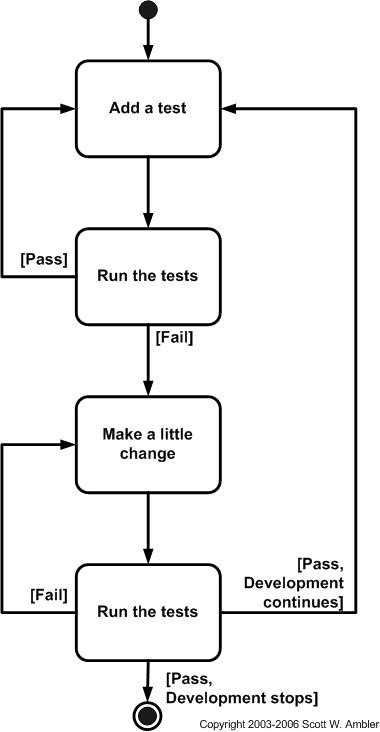

Steps performed in TDD

The following sequence is based on the book Test-Driven Development by Example.[1]

Quickly add a test

In test-driven development, each new feature begins with writing a test. This test must inevitably fail because it is written before the feature has been implemented. (If it does not fail, then either the proposed "new" feature already exists or the test is defective.) To write a test, the developer must clearly understand the feature's specification and requirements. The developer can accomplish this through use cases and user stories to cover the requirements and exception conditions, and can write the test in whatever testing framework is appropriate to the software environment. This could also be a modification of an existing test. This is a differentiating feature of test-driven development versus writing unit tests after the code is written: it makes the developer focus on the requirements before writing the code, a subtle but important difference.

Run all tests and see the new one fail

This validates that the test harness is working correctly and that the new test does not mistakenly pass without requiring any new code. This step also tests the test itself, in the negative: it rules out the possibility that the new test always passes and is therefore worthless. The new test should also fail for the expected reason. This increases confidence (though it does not guarantee) that the test is checking the right thing and passes only in the intended cases.

Make a little change

The next step is to write some code that causes the test to pass. The new code written at this stage is not perfect, and may, for example, pass the test in an inelegant way. That is acceptable because later steps improve and hone it. At this point, the only purpose of the written code is to pass the test; no further (and therefore untested) functionality should be predicted and 'allowed for' at any stage.
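In the same hypothetical Dollar sketch, the simplest change that makes the test pass is to return a hard-coded value. Beck calls this kind of move "Fake It ('Til You Make It)". The duplicated 10 is deliberately left in place for the refactoring step:

```python
import unittest


class Dollar:
    def __init__(self, amount):
        self.amount = amount

    def times(self, multiplier):
        # Inelegant but sufficient: the constant 10 simply mirrors the
        # expected value in the test (5 * 2). Refactoring will remove it.
        return Dollar(10)


class DollarTest(unittest.TestCase):
    def test_multiplication(self):
        product = Dollar(5).times(2)
        self.assertEqual(10, product.amount)


# Run all tests; every one should now pass.
result = unittest.TestResult()
unittest.defaultTestLoader.loadTestsFromTestCase(DollarTest).run(result)
print("all passed:", result.wasSuccessful())
```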

Run all tests and see them all succeed

If all test cases now pass, the programmer can be confident that the code meets all the tested requirements. This is a good point from which to begin the final step of the cycle.

Refactor to remove duplication

Now the code should be cleaned up as necessary. Move code from where it was convenient for passing the test to where it logically belongs. Remove any duplication you can find. Make sure that variable and method names represent their current use. Clarify any constructs that might be misinterpreted. Use Kent Beck's four rules of simple design[5][6] to guide you, as well as anything else you know about writing clean code. By re-running the test cases, the developer can be confident that refactoring is not damaging any existing functionality. Removing duplication is an important aspect of any software design. In this case, however, it also applies to removing any duplication between the test code and the production code, for example magic numbers or strings that were repeated in both to make the test pass in step 3 ("Make a little change").
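Refactoring the hypothetical Dollar sketch removes the duplication between the test code and the production code. The hard-coded 10 was really 5 * 2, so times() can compute the result from the object's own state. Re-running the unchanged test then confirms that the behavior is preserved:

```python
import unittest


class Dollar:
    def __init__(self, amount):
        self.amount = amount

    def times(self, multiplier):
        # The magic number 10 is gone; the result is derived from the data.
        return Dollar(self.amount * multiplier)


class DollarTest(unittest.TestCase):
    def test_multiplication(self):
        product = Dollar(5).times(2)
        self.assertEqual(10, product.amount)


# Re-run the same tests after refactoring to check nothing broke.
result = unittest.TestResult()
unittest.defaultTestLoader.loadTestsFromTestCase(DollarTest).run(result)
print("all passed:", result.wasSuccessful())
```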

Levels of Test Driven Development

There are two levels of TDD:

- Acceptance TDD (ATDD): with ATDD, developers write a single acceptance test (or behavioral specification, depending on preferred terminology) and then just enough production functionality/code to fulfill that test. The goal of ATDD is to specify detailed, executable requirements for the solution on a just-in-time (JIT) basis. ATDD is also called Behavior Driven Development (BDD).

- Developer TDD: with developer TDD, a single developer test is written (sometimes inaccurately referred to as a unit test), followed by just enough production code to fulfill that test. The goal of developer TDD is to specify a detailed, executable design for the solution on a JIT basis. Developer TDD is often simply called TDD.

See more at: http://www.agiledata.org/essays/tdd.html#sthash.11uJfvXR.dpuf
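The following minimal sketch illustrates the two levels using a hypothetical shopping cart; the names ShoppingCart and line_total are illustrative only. The acceptance test describes externally visible behavior in Given/When/Then terms. The developer tests pin down the design of one small helper. Both levels are shown after the production code has been written to satisfy them, and both use plain unittest:

```python
import unittest


def line_total(unit_price_cents, quantity):
    """Hypothetical helper: price of one cart line, in cents."""
    if quantity < 0:
        raise ValueError("quantity must be non-negative")
    return unit_price_cents * quantity


class ShoppingCart:
    """Hypothetical feature under test."""

    def __init__(self):
        self._lines = []

    def add(self, unit_price_cents, quantity=1):
        self._lines.append((unit_price_cents, quantity))

    def total(self):
        return sum(line_total(price, qty) for price, qty in self._lines)


class CheckoutAcceptanceTest(unittest.TestCase):
    """Acceptance level: a behavior a stakeholder could read and agree to."""

    def test_total_reflects_every_item_in_the_cart(self):
        # Given a cart holding two $2.50 items and one $4.00 item
        cart = ShoppingCart()
        cart.add(250, quantity=2)
        cart.add(400)
        # When the customer asks for the total
        total = cart.total()
        # Then it is $9.00
        self.assertEqual(900, total)


class LineTotalDeveloperTest(unittest.TestCase):
    """Developer level: the detailed design of a single unit."""

    def test_multiplies_price_by_quantity(self):
        self.assertEqual(750, line_total(250, 3))

    def test_rejects_negative_quantity(self):
        with self.assertRaises(ValueError):
            line_total(250, -1)
```

Prices are kept in integer cents so that the expected totals are exact.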

- Tests are typically written and run with an xUnit-family testing framework.
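Python's standard unittest module is one member of the xUnit family, so it can illustrate the common xUnit shape:

- a test-case class;
- a fixture built in setUp and released in tearDown;
- one behavior per test method;
- assertion methods that report failures.

The Stack class below is a hypothetical example, not code from the source:

```python
import unittest


class Stack:
    """Hypothetical class under test."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def is_empty(self):
        return not self._items


class StackTest(unittest.TestCase):
    def setUp(self):
        # Fixture: a fresh stack for every test, so tests stay independent.
        self.stack = Stack()

    def tearDown(self):
        # Release the fixture (nothing else to clean up for an in-memory stack).
        self.stack = None

    def test_new_stack_is_empty(self):
        self.assertTrue(self.stack.is_empty())

    def test_pop_returns_last_pushed_item(self):
        self.stack.push(1)
        self.stack.push(2)
        self.assertEqual(2, self.stack.pop())

    def test_pop_on_empty_stack_raises(self):
        with self.assertRaises(IndexError):
            self.stack.pop()
```

If the file is saved as test_stack.py, the suite can be run with `python -m unittest test_stack`. The framework's runner, not the tests themselves, collects the test methods and reports the results.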

Characteristics of Good Tests

For developers, the implication is that they need to learn how to write effective unit tests. Beck’s experience is that good unit tests:

Traditional Testing

TDD is primarily a specification technique, with the side effect of ensuring that your source code is thoroughly tested at a confirmatory level. However, there is more to testing than this. Particularly at scale, you will still need to consider other agile testing techniques such as pre-production integration testing and investigative testing. Much of this testing can also be done early in your project if you choose to do so (and you should). With traditional testing, a successful test finds one or more defects. It is the same with TDD: when a test fails you have made progress, because you now know that you need to resolve the problem. More importantly, you have a clear measure of success when the test no longer fails. TDD increases your confidence that your system meets the requirements defined for it and actually works, so you can proceed with confidence. As with traditional testing, the greater the risk profile of the system, the more thorough your tests need to be. With both traditional testing and TDD you aren't striving for perfection; instead you test in proportion to the importance of the system. To paraphrase Agile Modeling (AM), you should "test with a purpose": know why you are testing something and to what level it needs to be tested. An interesting side effect of TDD is that, because no production code is written except to make a failing test pass, you achieve, in principle, 100% test coverage (every line of code is exercised by at least one test). Traditional testing doesn’t guarantee this, although it does recommend it. In general I think it’s fairly safe to say that although TDD is a specification technique, a valuable side effect is that it results in significantly better code testing than traditional techniques do.


Documentation

Like it or not, most programmers don’t read the written documentation for a system; instead they prefer to work with the code. And there’s nothing wrong with this. When trying to understand a class or operation, most programmers will first look for sample code that already invokes it. Well-written unit tests do exactly this: they provide a working specification of your functional code, and as a result unit tests effectively become a significant portion of your technical documentation. The implication is that the expectations of the pro-documentation crowd need to reflect this reality. Similarly, acceptance tests can form an important part of your requirements documentation. This makes a lot of sense when you stop and think about it. Your acceptance tests define exactly what your stakeholders expect of your system, so they specify your critical requirements. Your regression test suite, particularly with a test-first approach, effectively becomes a detailed executable specification.
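Python's standard doctest module takes this idea literally. It executes the usage examples written in a docstring and compares the results against the output shown, so this part of the documentation cannot silently drift from the code. Here is a minimal sketch with a hypothetical apply_discount function:

```python
import doctest


def apply_discount(price_cents, percent):
    """Return the price after deducting ``percent`` percent, in whole cents.

    These examples are executable documentation: doctest runs them and
    checks that the actual results match the ones shown here.

    >>> apply_discount(1000, 10)
    900
    >>> apply_discount(999, 0)
    999
    >>> apply_discount(1000, 150)
    Traceback (most recent call last):
        ...
    ValueError: percent must be between 0 and 100
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


if __name__ == "__main__":
    doctest.testmod()  # prints nothing when every example passes
```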

Scaling TDD via Agile Model-Driven Development (AMDD)

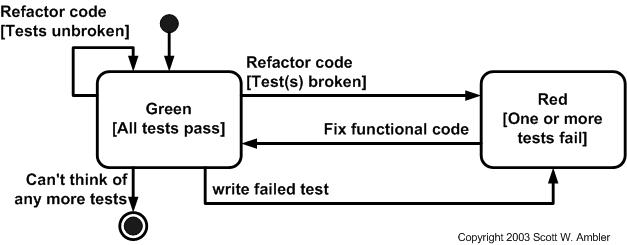

TDD is very good at detailed specification and validation, but not so good at thinking through bigger issues such as the overall design, how people will use the system, or the UI design (for example). Modeling, or more to the point agile model-driven development (AMDD) (the lifecycle for which is captured in Figure 4) is better suited for this. AMDD addresses the agile scaling issues that TDD does not.

- Agile Model Driven Development (AMDD) Lifecycle