CSC/ECE 506 Spring 2013/4a ss: Difference between revisions

(Created page with "300px|thumb|left|SCD's IBM SP system blackforest, a distributed shared memory ('''DSM''') system == SAS programming on distributed-memory machines == [http://en...") |

No edit summary |

||

| (25 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

== SAS programming on distributed-memory machines == | == SAS programming on distributed-memory machines == | ||

[http://en.wikipedia.org/wiki/Shared_memory '''Shared Address Space'''] (SAS) programming on distributed memory machines is a programming abstraction that provides less development effort than that of the traditional method of [http://en.wikipedia.org/wiki/Message_passing '''Message Passing'''] (MP) on distributed memory machines | [http://en.wikipedia.org/wiki/Shared_memory '''Shared Address Space'''] (SAS) programming on distributed memory machines is a programming abstraction that provides less development effort than that of the traditional method of [http://en.wikipedia.org/wiki/Message_passing '''Message Passing'''] (MP) on distributed memory machines. Example of SAS programming is clusters of servers. Distributed systems are groups of computers that communicate through a network and share a common work goal. Distributed systems typically do not physically share the same memory (are not [http://en.wikipedia.org/wiki/Coupling_%28computer_programming%29 '''tightly coupled''']) but rather each processor or group of processors must depend on mechanisms other than direct memory access in order to communicate. Some of the issues include [http://en.wikipedia.org/wiki/Memory_coherence '''memory coherence'''], types of memory access, data and process [http://en.wikipedia.org/wiki/Synchronization_%28computer_science%29 '''synchronization'''], and performance. | ||

=== Origins === | === Origins === | ||

Distributed memory systems | Distributed memory systems started to flourish in the 80s. The increasing performance in processors and network connectivity offered the perfect environment for parallel processing over a network of computers. This was a cheap way to put together massive computing power. The main drawback was going from sequential programs made for local memory to parallel programming in shared memory. This was where SAS provided the means to simplify programming by hiding the mechanisms to access distant memory located in other computers of the cluster. | ||

In 1985, Cheriton in his article [http://doi.acm.org.prox.lib.ncsu.edu/10.1145/858336.858338 "Preliminary thoughts on problem-oriented shared memory: a decentralized approach to distributed systems"] introduced ideas for the application of shared memory techniques in Distributed memory systems. Cheriton envisioned a system of nodes with a pool of shared memory with a common file namespace that could "decentralize the implementation of a service." | |||

=== Background === | === Background === | ||

[[File:Dsmd.jpg|400px|thumb|right|Distributed Shared Memory]] | [[File:Dsmd.jpg|400px|thumb|right|Distributed Shared Memory]] | ||

Early distributed computer systems relied almost exclusively on message passing in order to communicate with one another, and this technique is still widely used today. In a message passing model, each processor's local memory can be considered as isolated from that of the rest of the system. Processes or objects can send or receive messages in order to communicate and this can occur in a synchronous or asynchronous manner. In distributed systems, | Early distributed computer systems relied almost exclusively on message passing in order to communicate with one another, and this technique is still widely used today. In a message passing model, each processor's local memory can be considered as isolated from that of the rest of the system. Processes or objects can send or receive messages in order to communicate and this can occur in a synchronous or asynchronous manner. In distributed systems, particularly with certain types of programs, the message passing model can become overly burdensome to the programmer as tracking data movement and maintaining data integrity can become quite challenging with many control threads. A shared address or shared-memory system, however, can provide a programming model that simplifies data sharing via uniform mechanisms of data structure reads and writes on common memory. Current distributed systems seek to take advantage both SAS and MP programming model principles in hybrid systems. | ||

=== Distributed Shared Memory (DSM) === | === Distributed Shared Memory (DSM) === | ||

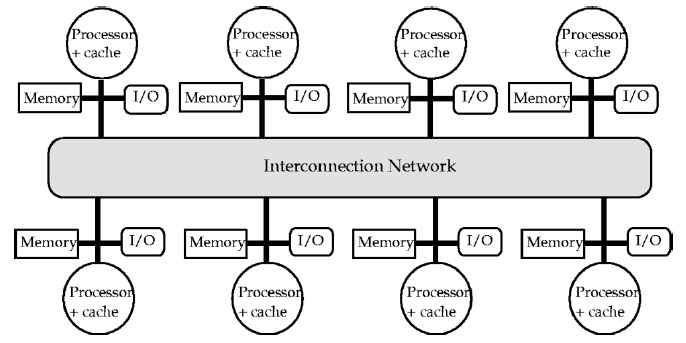

Distributed shared memory is an architectural approach designed to overcome the scaling limitations of symmetric shared memory multiprocessors while retaining a shared memory model for communication and programming. A distributed system consists of a set of nodes connected by an interconnection network. Nodes may be comprised of individual processors or a multiprocessor system (e.g [http://en.wikipedia.org/wiki/Symmetric_multiprocessing '''Symmetric Multiprocessor'''] (SMP)), the latter typically sharing a system bus. Each node contains a local memory, which maps partially to the distributed address space. | |||

Regardless of the system topology(bus,ring,mesh), a specific interconnection in each cluster must connect it to the system. Information about states and current locations of particular data blocks usually resides in a system table or directory. Directory organization varies from full map storage to different dynamic organizations. | Regardless of the system topology(bus,ring,mesh), a specific interconnection in each cluster must connect it to the system. Information about states and current locations of particular data blocks usually resides in a system table or directory. Directory organization varies from full map storage to different dynamic organizations. | ||

There are 3 issues while accessing the data in the DSM address space while keeping the data consistent | There are 3 issues while accessing the data in the DSM address space while keeping the data consistent | ||

a)Which DSM algorithm to use to access data | a)Which DSM algorithm to use to access data? | ||

Commonly used strategies are replication and migration. Replication allows multiple copies of same data items to reside in different local memories. Migration implies that only a single copy of a data item exists at any one time, so the data item must be moved to the requesting site for exclusive use. | Commonly used strategies are replication and migration. Replication allows multiple copies of same data items to reside in different local memories. Migration implies that only a single copy of a data item exists at any one time, so the data item must be moved to the requesting site for exclusive use. | ||

b)Implementation level of the DSM | b)Implementation level of the DSM - A look up for data should first determine if the requested data is in the local memory, if not the system must bring the data to local memory. This can be executed in software or hardware or both. Choice of implementations depends on the price/performance trade offs. | ||

c)Memory consistency model | c)Memory consistency model - The behavior of the memory with respect to read and write operations from multiple processors has to be dealt with appropriate memory consistency models. | ||

=== DSM Implementations === | |||

From an architectural point of view, DSMs are composed of several nodes connected via a network. Each of the nodes can be an individual machine or a cluster of machines. Each system has local memory modules that are in part or entirely part of the shared memory. There are many characteristics that can be used to classify DSM implementations. One of them is obvious and is based on the nature of the implementation as demarcation: Software, Hardware, and Hybrid. This historical classification has been extracted from [http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=494605&isnumber=10721 Distributed shared memory: concepts and systems]. | |||

Software DSM implementations refer to the DSM implemented by using user-level software, OS, programming language, or combination of all or some of them. | |||

===== | {| {{table}} | ||

| align="center" style="background:#f0f0f0;"|'''Implementation''' | |||

| align="center" style="background:#f0f0f0;"|'''Type of Implementation / Cluster configuration''' | |||

| align="center" style="background:#f0f0f0;"|'''Network''' | |||

| align="center" style="background:#f0f0f0;"|'''Type of Algorithm''' | |||

| align="center" style="background:#f0f0f0;"|'''Consistency Model''' | |||

| align="center" style="background:#f0f0f0;"|'''Granularity Unit''' | |||

| align="center" style="background:#f0f0f0;"|'''Coherence Policy''' | |||

| align="center" style="background:#f0f0f0;"|'''SW/HW/Hybrid''' | |||

|- | |||

| [http://www.cs.uwaterloo.ca/~brecht/courses/702/Possible-Readings/vm-and-gc/ivy-shared-virtual-memory-li-icpp-1988.pdf IVY]||User-level library + OS modification || style="padding-left: 2em" |- ||MRSW ||Sequential ||1 Kbyte ||Invalidate ||SW | |||

|- | |||

| [http://doi.acm.org.prox.lib.ncsu.edu/10.1145/121133.121159 Munin]||Runtime system + linker + library + preprocessor + OS modifications ||style="padding-left: 2em" | - ||Type-specific (SRSW, MRSW, MRMW) ||Release ||Variable size objects ||Type-specific (delayed update, invalidate) ||SW | |||

|- | |||

| [http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=485843&tag=1 TreadMarks]||User-level || style="padding-left: 2em" |- ||MRMW ||Lazy release ||4 Kbytes ||Update, Invalidate ||SW | |||

|- | |||

| [http://doi.acm.org.prox.lib.ncsu.edu/10.1145/74851.74871 Mirage]||OS kernel ||style="padding-left: 2em" | - ||MRSW ||Sequential ||512 bytes ||Invalidate ||SW | |||

|- | |||

| [http://onlinelibrary.wiley.com.prox.lib.ncsu.edu/doi/10.1002/spe.4380210503/pdf Clouds]||OS, out of kernel || style="padding-left: 2em" |- ||MRSW ||Inconsistent, sequential ||8 Kbytes ||Discard segment when unlocked ||SW | |||

|- | |||

| [http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=1663305&tag=1 Linda]||Language || style="padding-left: 2em" |- ||MRSW ||Sequential ||Variable (tuple size) ||Implementation- dependent ||SW | |||

|- | |||

| [http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=93183 Memnet]||Single processor, Memnet device||Token ring||MRSW||Sequential||32 bytes||Invalidate||HW | |||

|- | |||

| [http://en.wikipedia.org/wiki/Scalable_Coherent_Interface SCI]||Arbitrary||Arbitrary||MRSW||Sequential||16 bytes||Invalidate||HW | |||

|- | |||

| [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8026&rep=rep1&type=pdf KSR1]||64-bit custom PE, I+D caches, 32M local memory||Ring-based||MRSW||Sequential||128 bytes||Invalidate||HW | |||

|- | |||

| [http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=766965&isnumber=16621 RMS]||1-4 processors, caches, 256M local memory||RM bus||MRMW||Processor||4 bytes||Update||HW | |||

|- | |||

| [http://en.wikipedia.org/wiki/Alewife_(multiprocessor) Alewife]||Sparcle PE, 64K cache, 4M local memory, CMMU|| mesh||MRSW||Sequential||16 Kbytes||Invalidate||Hybrid | |||

|- | |||

| [http://dl.acm.org/citation.cfm?id=192056 Flash]||MIPS T5, I +D caches, Magic controller|| mesh||MRSW||Release||128 Kbytes||Invalidate||Hybrid | |||

|- | |||

| [http://www.cs.utexas.edu/users/dburger/teaching/cs395t-s08/papers/10_tempest.pdf Typhoon]||SuperSparc, 2-L caches|| NP controller||MRSW||Custom||32 Kbytes||Invalidate custom||Hybrid | |||

|- | |||

| [http://shrimp.cs.princeton.edu/ Shrimp]||16 Pentium PC nodes|| Intel Paragon routing network||MRMW||AURC, scope||4 Kbytes||Update/Invalidate||Hybrid | |||

|- | |||

|} | |||

Below is an explanation of the main characteristics listed in the DSM classification. | |||

There are three types of DSM algorithm: | |||

* '''Single Reader/ Single Writer''' (SRSW) | |||

** central server algorithm - produces long network delays | |||

** migration algorithm - produces thrashing and false sharing | |||

* '''Multiple Readers/ Single Writer''' (MRSW) - read replication algorithm. It uses write invalidate. MRSW is the most adopted algorithm. | |||

* '''Multiple Readers/Multiple Writers''' (MRMW) - full replication algorithm. It has full concurrency and uses atomic updates. | |||

The consistency model plays a fundamental role in DSM systems. Due to the nature of the distributed systems, memory accesses are constrained in the different consistency models. "A memory consistency model defines the legal ordering of memory references issued by some processor, as observed by other processors." The stricter the consistency model, the higher the access times, but programming is more simplified. Some of the consistency models types are: | |||

* Sequential consistency - all processors see the same ordering of memory references, and these are issued in sequences by the individual processors. | |||

* Processor consistency - the order of writes is observed by every individual processor in the system, but the order of reads does not need to be in sequence. | |||

* Weak consistency - consistency is required only on synchronization accesses. | |||

* Release consistency - divides the synchronization accesses into acquire and release. Normal read and writes for a particular node can be done only after all acquires for that node are finished. Similarly, releases can only be done after all writes and reads are finished. | |||

* Lazy Release consistency - extends the Release consistency, by propagating modifications to the shared data only on the acquire, and of those, only the ones related to critical sections. | |||

* Entry consistency - synchronization is performed at variable level. This increases programming labor but helps with lowering latency and traffic exchanges as only the specific variable needs to be synchronized. | |||

Granularity refers to the unit of data blocks that are managed by the coherence protocols. The unit differs between hardware and software systems, as hardware systems tend to use smaller size blocks than the virtual layer that manages the data in the software systems. The problem with larger size blocks is that the probability for contingency is higher, even when the different processors involved are not accessing the exact same piece of memory, just a part contained in the block size. This is known as false sharing and creates thrashing (memory blocks keep being requested by processors and processors keep waiting for the same memory blocks). | |||

Coherence policy regulates data replication. The coherence policy dictates if the data that is being written at a site should be invalidated or updated at the remote sites. Usually, systems with fine-grain coherence (byte/word) impose the update policy, whereas the systems based on coarse-grain (page) coherence utilize the invalidate policy. This is also known in other parts of the literature as coherence protocol. And the two types of protocols are known as write-invalidate and write-update. The write-invalidate protocol invalidates all the copies except one before writing to it. In contrast, write-update maintains all copies updated. | |||

=== Cache-Coherent DSM === | |||

Cache coherence, arises when different processors cache and update values of the same memory location. Early DSM systems implemented a shared address space where the amount of time required to access a piece of data was | |||

related to its location. These systems became known as [http://en.wikipedia.org/wiki/Non-Uniform_Memory_Access '''Non-Uniform Memory Access'''] (NUMA), whereas an SMP type system is known as [http://en.wikipedia.org/wiki/Uniform_Memory_Access '''Uniform Memory Access'''] (UMA) architecture. NUMA architectures were difficult to program in due to potentially significant differences in data access times. SMP architectures dealt with this problem through caching. | |||

Protocols were established that ensured prior to writing a location, all other copies of the location (in other caches) were invalidated.Thus, the system allows multiple copies of a memory location to exist when it is being read, but only one copy when it is being written. | |||

Cache-coherent DSM architectures rely on a directory-based [http://en.wikipedia.org/wiki/Cache_coherency '''cache coherence'''] where an extra directory structure keeps track of all blocks that have been cached by each processor. Since the directory tracks which caches have copies of any given memory block, a coherence protocol can use the directory to maintain a consistent view of memory. A coherence protocol can then establish a consistent view of memory by maintaining state and other information about each cached block. A simple cache coherence protocol can operate with three states for each cache block. These state are Invalid, Shared, and Exclusive. Furthermore, in a cache-coherent DSM machine, the directory is distributed in memory to associate with the cache block it describes in the physical local memory. | |||

=== Configurable Shared Virtual Memory === | |||

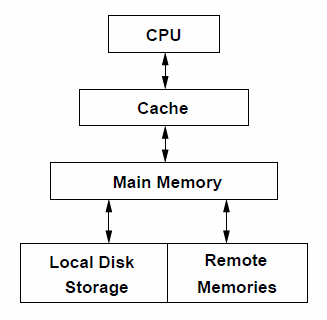

As described by [http://www.cs.utexas.edu/ftp/techreports/tr94-21.pdf Yoon, et al.] in 1994 the communication paradigm | As described by [http://www.cs.utexas.edu/ftp/techreports/tr94-21.pdf Yoon, et al.] in 1994 the communication paradigm | ||

for their DSM node network relies on a memory hierarchy for each node that places remote memories at the same hierarchy as | for their DSM node network relies on a memory hierarchy for each node that places remote memories at the same hierarchy as | ||

| Line 106: | Line 137: | ||

which acts as protection to control access to relevant memory locations. When received at the appropriate member | which acts as protection to control access to relevant memory locations. When received at the appropriate member | ||

node, the virtual address is translated to a local physical address. | node, the virtual address is translated to a local physical address. | ||

=====Improvements in communication===== | =====Improvements in communication===== | ||

Early SAS programming models in DSM environments suffered from poor performance because protection schemes demanded | Early SAS programming models in DSM environments suffered from poor performance because protection schemes demanded | ||

applications to access the network via system calls, significantly increasing latency. Later software | applications to access the network via system calls, significantly increasing latency. Later software | ||

| Line 124: | Line 156: | ||

request and will update data sent previously to an imported receive buffer. This transfer occurs directly without receiver | request and will update data sent previously to an imported receive buffer. This transfer occurs directly without receiver | ||

CPU interruption. | CPU interruption. | ||

=== Implementation of Page Management in Mome, a User-Level DSM === | |||

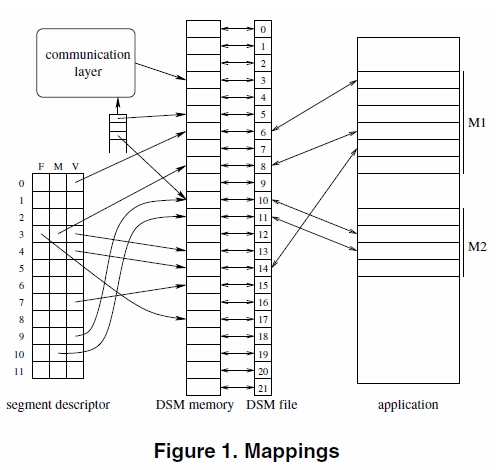

[[File:Untitled_Project.jpg|350px|thumb|left|Memory Mapping in Mome]] | |||

[http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=1199404 Mome] is described as a user-level distributed shared memory. Mome DSM allows heterogeneous processes running on distributed memory architectures and clusters to share data through the memory mapping of segments into their address space. Each parallel process can freely select the consistency model which must | |||

be applied to its own view of the shared data. The behavior of Mome has been evaluated in various configurations: high performance computing using the SPMDexecution model, code coupling through segment sharing on clusters, shared data repository or dynamic coupling of parallel codes. Parallel programs share data using the Mome DSM through the mapping of Mome segments on their private address space. A Fortran interface as well as a C interface are available in the current implementation. | |||

===== Mome Segment creation ===== | |||

Each segment of Mome is referenced using the same unique identifier on all processing nodes supporting the DSM. A new segment is created through a call to the MomeCreateSegment(size)routine. The routine returns a valid segment identifier which can be used for mapping requests by all the computation nodes. A process requests for a mapping between a local memory section and a Mome segment section using the call MomeMMap(Addr, Lg, Prot, Flags, Seg, Offset), which returns the starting address of the mapped region. Each mapping request made by a process is independent and the addresses of the mappings may or may not be consistent on all nodes. If mappings are consistent between processes, however, then pointers may be shared by them. Mome supports strong and weak consistency models, and for any particular page each node is able to dynamically manage its consistency during program execution. | |||

===== Page Management in Mome ===== | |||

Mome manages [http://en.wikipedia.org/wiki/Page_%28computer_memory%29 '''pages'''] in a directory based scheme where each page directory maintains the status of six characteristics per page on each node. The page manager acts upon collections of nodes according to these characteristics for each page: | |||

V nodes posses the current version, M nodes have a modified version, S nodes want strong consistency, I nodes are | |||

invalidated, F nodes have initiated a modification merge and H nodes are a special type of hidden page. A new version of | |||

a page is created prior to a constraint violation and before modifications are integrated as a result of a consistency | |||

request. | |||

===== Memory mapping in Mome ===== | |||

[[File:Mem hierarchy.png|200px|thumb|right|Memory hierarchy of node]] | |||

The Mome memory mapping figure to the left shows a possible DSM memory organization on a single node. The DSM memory size | |||

shown is 22 pages. When a new segment is created on a node a segment descriptor is created on that node. In this case the | |||

segment descriptor is 12 pages, with each segment descriptor block corresponding to one page. Each block also contains | |||

three DSM memory references for current, modified and next version of pages. The memory organization state shows an | |||

application with two mappings, M1 and M2, with segment offsets at 0 and 8. The six pages of M1 are managed by segment | |||

descriptor blocks 0 to 5. The descriptor blocks (and application memory) show that pages 1,2 and 5 have no associated | |||

memory, while M1 page 0 is mapped to block 6 as a current version and M1 page 3 is mapped to block 13 as a current version, | |||

block 8 as a modified version, and has initiated a modifications merge as indicated by the block 17 pointer. The communication | |||

layer manages incoming messages from other nodes. | |||

=== Programming Environment === | |||

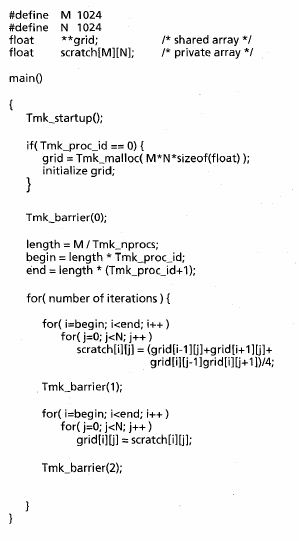

[[File:Shortest_path_pseudocode.jpg|300px|thumb|right|Shortest path pseudocode using TreadMarks API]] | [[File:Shortest_path_pseudocode.jpg|300px|thumb|right|Shortest path pseudocode using TreadMarks API]] | ||

The globally shared memory abstraction provided through virtual memory or some other DSM mechanism allows programmers | The globally shared memory abstraction provided through virtual memory or some other DSM mechanism allows programmers | ||

to focus on algorithms instead of processor communication and data tracking. Many programming environments have been | to focus on algorithms instead of processor communication and data tracking. Many programming environments have been | ||

| Line 133: | Line 200: | ||

C, C++ or Fortran and then compiled and linked with the TreadMarks library. | C, C++ or Fortran and then compiled and linked with the TreadMarks library. | ||

Shown at | [[File:Jacobi_code.jpg|300px|thumb|left|Jacobi method pseudocode using TreadMarks API]] | ||

Shown at right is a pseudocode example of using the TreadMarks API to implement the Jacobi method, a type of partial | |||

differential equation solver. The code iterates over a 2D array and updates each element to the average of its four | differential equation solver. The code iterates over a 2D array and updates each element to the average of its four | ||

nearest neighbors. All processors are assigned an approximately equivalent number of rows and neighboring processes | nearest neighbors. All processors are assigned an approximately equivalent number of rows and neighboring processes | ||

| Line 143: | Line 211: | ||

guarantees all current iteration values are written before any next iteration computation begins. | guarantees all current iteration values are written before any next iteration computation begins. | ||

To the | |||

To the left is shown a short pseudocode program exemplifying another SAS synchronization technique which uses [http://en.wikipedia.org/wiki/Lock_%28computer_science%29 '''locks''']. This program calculates the shortest path in a grouping of nodes that starts at any designated start node, visits each | |||

other node once and returns to the origin node. The shortest route identified thus far is stored in the shared ''Shortest_length'' | other node once and returns to the origin node. The shortest route identified thus far is stored in the shared ''Shortest_length'' | ||

and investigated routes are kept in a queue, most promising at the front, and expanded one node at a time. A process | and investigated routes are kept in a queue, most promising at the front, and expanded one node at a time. A process | ||

| Line 152: | Line 221: | ||

increasing the ''Shortest_path'' a lock is acquired to ensure [http://en.wikipedia.org/wiki/Mutual_exclusion '''mutual exclusion'''] to update this shared data as well. | increasing the ''Shortest_path'' a lock is acquired to ensure [http://en.wikipedia.org/wiki/Mutual_exclusion '''mutual exclusion'''] to update this shared data as well. | ||

=== MSA: Multiphase Specifically Shared Arrays === | |||

It is often argued that shared address space (SAS) is an easier method of programming. Although quantitative empirical support for such a statement is lacking, there probably is an intuitive basis for this belief among a section of researchers. Jayant DeSouza, et al.[http://charm.cs.illinois.edu/newPapers/04-10/paper.pdf] indicate that there are distinct programming situations where SAS is an easier programming model whereas there are equally distinct situations where it is not. E.g. when there are data races, shared memory paradigm, which allows for a much larger number of distinguishable interleavings of executions of multiple threads,tends to be a difficult paradigm. In contrast, in a computation such as matrix multiply, where the data in input matrices is only read, and data in the output matrix is only written, is relatively easier to express in SAS. Further, since SAS views all data as uniformly accessible, it does not lend itself to locality-conscious programming, which is needed for efficiency. | |||

They suggest that the problems with SAS are due to trying to do everything (i.e. all kinds of information exchange between processes) with SAS. It may be more productive to incorporate SAS as a part of a programming model that also allows private data for each thread, and mechanisms for synchronization and data-exchange such as message-passing or method-invocations. This frees us to support only those SAS access modes that can be efficiently and scalably supported on distributed memory machines including clusters, without being encumbered by having to support a “complete” programming model. | |||

Which access modes can be efficiently supported? read-only accesses, write-many accesses (where each location is updated by at most one thread), and accumulate accesses (where multiple threads may update a single location, but only via a well-defined commutative associative operation) seem to be the obvious candidates. Another observation stemming from our application studies is that the access | |||

pattern for the same data changes distinctly between computation phases. For example, a matrix multiply operation (C = AxB) may calculate a C matrix in Phase I of the computation (where A and B matrices are accessed in readonly manner, and C is written-only or accumulated), whereas in the phase II, C matrix may be used in a read-only manner while A and B may be updated. These phases may then iterate. This suggests the idea of multi-phase shared arrays. | |||

For each multi-phase shared array(MSA), the programmer specifies its access mode, and may change it between phases. The phases may be separated by array-specific synchronizations such as barrier (as in release consistency). The restricted set of operations simplifies the consistency protocol and traffic associated with that: no invalidations are needed, all writes can be buffered, etc. One of the costs of DSM systems is the long latency on “misses”. Processor virtualization techniques that have been explored in Charm++ and Adaptive MPI (AMPI) allow many user-level (lightweight) threads per processor, which help tolerate such latencies. The MSA abstraction has been implemented as a library in Charm++ and AMPI, and as a language-level feature in a compiler-supported parallel language called Jade. Compiler support simplifies syntax and automates optimizations which have to be done manually by MSA users (such as inserting prefetches). | |||

'''MSA Description''' | |||

Conceptually, an MSA is an array of data elements that can be globally accessed in an MIMD program in one of several dynamically chosen global access modes and in units of a user-defined page size. Internally, the MSA array is split up into “pages” of a user-defined size. The page size is user specified for each MSA at the time the MSA is created. The page size is specified as a number of elements and can range from one element to the total number of elements in the array. The access modes are not expected to be fixed for the lifetime of an array, but for a phase of execution of the program. MSA’s are implemented as a templated, object-oriented C++ class. Currently 1D and 2D MSA arrays are supported. The elements of an MSA can be one of the standard C++ built-in types, or a user-defined class with certain operations. The number of elements in the MSA is specified in the constructor when the MSA is created. Currently, an MSA cannot be resized once created. For complicated element types (such as linked lists), a pup() method must be defined by the user for the element type: this is used to pack and unpack the element when pages are shipped around. This allows each element to be a linked list or other variable sized data structure. | |||

=== Performance === | === Performance === | ||

There are numerous studies of the performance of shared memory applications in distributed systems. The vast majority of them use a collection of programs named [http://dl.acm.org/citation.cfm?id=223990 SPLASH and SPLASH-2.] | There are numerous studies of the performance of shared memory applications in distributed systems. The vast majority of them use a collection of programs named [http://dl.acm.org/citation.cfm?id=223990 SPLASH and SPLASH-2.] | ||

===== SPLASH and SPLASH-2 ===== | ===== SPLASH and SPLASH-2 ===== | ||

The '''Stanford ParalleL Applications for | The '''Stanford ParalleL Applications for Shared memory''' (SPLASH) is a collection of parallel programs engineered for the evaluation of shared address space machines. These programs have been used by research studies to provide measurements and analysis of different aspects of the emerging DSM architectures at the time. A subsequent suite of programs (SPLASH-2) evolved from the necessity of improving on the SPLASH programs limitations. SPLASH-2 covers a more ample domain of scientific programs, makes use of improved algorithms, and pays more attention to the architecture of the underlying systems. | ||

Selected applications in the SPLASH-2 collections include: | Selected applications in the SPLASH-2 collections include: | ||

| Line 231: | Line 253: | ||

===== Case studies ===== | ===== Case studies ===== | ||

In 2001, [http://escholarship.org/uc/item/76p9b40g#page-1 Shan et al.] presented a comparison of the performance and programming effort of MP versus SAS running on clusters of '''Symmetric Memory Processors''' (SMPs). They highlighted the "automatic management and coherent replication" of the SAS programming model which facilitates the programming tasks in these types of clusters. This study uses MPI/Pro protocol for the MP programming model and GeNIMA SVM protocol (a page-based shared virtual memory protocol) for SAS on a 32 processors system (using a cluster of 8 machines with 4-way SMPs each). The subset of applications used involves regularly structured applications as FFT, Ocean, and LU contrasting with irregular ones as for example RADIX sort, among others. | In 2001, [http://escholarship.org/uc/item/76p9b40g#page-1 Shan et al.] presented a comparison of the performance and programming effort of MP versus SAS running on clusters of '''Symmetric Memory Processors''' (SMPs). They highlighted the "automatic management and coherent replication" of the SAS programming model which facilitates the programming tasks in these types of clusters. This study uses MPI/Pro protocol for the MP programming model and GeNIMA SVM protocol (a page-based shared virtual memory protocol) for SAS on a 32 processors system (using a cluster of 8 machines with 4-way SMPs each). The subset of applications used involves regularly structured applications as FFT, Ocean, and LU contrasting with irregular ones as for example RADIX sort, among others. | ||

| Line 330: | Line 353: | ||

The overall conclusion is that using multiple threads per node and optimized locking mechanisms (CVM-type) provides the best performance. | The overall conclusion is that using multiple threads per node and optimized locking mechanisms (CVM-type) provides the best performance. | ||

=== Evolution === | |||

=== Evolution Over Time === | |||

A more recent version of a distributed shared memory system is vNUMA. [http://www.usenix.org/event/usenix09/tech/full_papers/chapman/chapman_html/index.html Chapman and Heiser], describe vNUMA (where v is for virtual and NUMA is for [http://en.wikipedia.org/wiki/Non-Uniform_Memory_Access Non Uniform Memory Access]) as "a virtual machine that presents a cluster as a virtual shared-memory multiprocessor." The virtualization in vNUMA is a layer between the real CPUs that form the distributed shared memory system and the OS that runs on top it, usually Linux. The DSM in vNUMA is part of the hypervisor, which is the part of a virtual system that maps guest virtual addresses into real physical ones. The guest virtual addresses get then mapped into virtual memory addresses through the guest OS. | A more recent version of a distributed shared memory system is vNUMA. [http://www.usenix.org/event/usenix09/tech/full_papers/chapman/chapman_html/index.html Chapman and Heiser], describe vNUMA (where v is for virtual and NUMA is for [http://en.wikipedia.org/wiki/Non-Uniform_Memory_Access Non Uniform Memory Access]) as "a virtual machine that presents a cluster as a virtual shared-memory multiprocessor." The virtualization in vNUMA is a layer between the real CPUs that form the distributed shared memory system and the OS that runs on top it, usually Linux. The DSM in vNUMA is part of the hypervisor, which is the part of a virtual system that maps guest virtual addresses into real physical ones. The guest virtual addresses get then mapped into virtual memory addresses through the guest OS. | ||

| Line 361: | Line 386: | ||

*David R. Cheriton. 1985. [http://doi.acm.org.prox.lib.ncsu.edu/10.1145/858336.858338 Preliminary thoughts on problem-oriented shared memory: a decentralized approach to distributed systems.] SIGOPS Oper. Syst. Rev. 19, 4 (October 1985), 26-33. | *David R. Cheriton. 1985. [http://doi.acm.org.prox.lib.ncsu.edu/10.1145/858336.858338 Preliminary thoughts on problem-oriented shared memory: a decentralized approach to distributed systems.] SIGOPS Oper. Syst. Rev. 19, 4 (October 1985), 26-33. | ||

*Matthew Chapman and Gernot Heiser. 2009. [http://www.usenix.org/event/usenix09/tech/full_papers/chapman/chapman_html/index.html vNUMA: a virtual shared-memory multiprocessor.] In Proceedings of the 2009 conference on USENIX Annual technical conference (USENIX'09). USENIX Association, Berkeley, CA, USA, 2-2. | *Matthew Chapman and Gernot Heiser. 2009. [http://www.usenix.org/event/usenix09/tech/full_papers/chapman/chapman_html/index.html vNUMA: a virtual shared-memory multiprocessor.] In Proceedings of the 2009 conference on USENIX Annual technical conference (USENIX'09). USENIX Association, Berkeley, CA, USA, 2-2. | ||

*Jayant DeSouza and Laxmikant V. Kal´e. [http://charm.cs.illinois.edu/newPapers/04-10/paper.pdf] | |||

== Quiz == | == Quiz == | ||

Latest revision as of 02:58, 14 February 2013

SAS programming on distributed-memory machines

Shared Address Space (SAS) programming on distributed memory machines is a programming abstraction that provides less development effort than that of the traditional method of Message Passing (MP) on distributed memory machines. Example of SAS programming is clusters of servers. Distributed systems are groups of computers that communicate through a network and share a common work goal. Distributed systems typically do not physically share the same memory (are not tightly coupled) but rather each processor or group of processors must depend on mechanisms other than direct memory access in order to communicate. Some of the issues include memory coherence, types of memory access, data and process synchronization, and performance.

Origins

Distributed memory systems started to flourish in the 80s. The increasing performance in processors and network connectivity offered the perfect environment for parallel processing over a network of computers. This was a cheap way to put together massive computing power. The main drawback was going from sequential programs made for local memory to parallel programming in shared memory. This was where SAS provided the means to simplify programming by hiding the mechanisms to access distant memory located in other computers of the cluster.

In 1985, Cheriton in his article "Preliminary thoughts on problem-oriented shared memory: a decentralized approach to distributed systems" introduced ideas for the application of shared memory techniques in Distributed memory systems. Cheriton envisioned a system of nodes with a pool of shared memory with a common file namespace that could "decentralize the implementation of a service."

Background

Early distributed computer systems relied almost exclusively on message passing in order to communicate with one another, and this technique is still widely used today. In a message passing model, each processor's local memory can be considered as isolated from that of the rest of the system. Processes or objects can send or receive messages in order to communicate and this can occur in a synchronous or asynchronous manner. In distributed systems, particularly with certain types of programs, the message passing model can become overly burdensome to the programmer as tracking data movement and maintaining data integrity can become quite challenging with many control threads. A shared address or shared-memory system, however, can provide a programming model that simplifies data sharing via uniform mechanisms of data structure reads and writes on common memory. Current distributed systems seek to take advantage both SAS and MP programming model principles in hybrid systems.

Distributed shared memory is an architectural approach designed to overcome the scaling limitations of symmetric shared memory multiprocessors while retaining a shared memory model for communication and programming. A distributed system consists of a set of nodes connected by an interconnection network. Nodes may be comprised of individual processors or a multiprocessor system (e.g Symmetric Multiprocessor (SMP)), the latter typically sharing a system bus. Each node contains a local memory, which maps partially to the distributed address space. Regardless of the system topology(bus,ring,mesh), a specific interconnection in each cluster must connect it to the system. Information about states and current locations of particular data blocks usually resides in a system table or directory. Directory organization varies from full map storage to different dynamic organizations. There are 3 issues while accessing the data in the DSM address space while keeping the data consistent a)Which DSM algorithm to use to access data? Commonly used strategies are replication and migration. Replication allows multiple copies of same data items to reside in different local memories. Migration implies that only a single copy of a data item exists at any one time, so the data item must be moved to the requesting site for exclusive use. b)Implementation level of the DSM - A look up for data should first determine if the requested data is in the local memory, if not the system must bring the data to local memory. This can be executed in software or hardware or both. Choice of implementations depends on the price/performance trade offs. c)Memory consistency model - The behavior of the memory with respect to read and write operations from multiple processors has to be dealt with appropriate memory consistency models.

DSM Implementations

From an architectural point of view, DSMs are composed of several nodes connected via a network. Each of the nodes can be an individual machine or a cluster of machines. Each system has local memory modules that are in part or entirely part of the shared memory. There are many characteristics that can be used to classify DSM implementations. One of them is obvious and is based on the nature of the implementation as demarcation: Software, Hardware, and Hybrid. This historical classification has been extracted from Distributed shared memory: concepts and systems.

Software DSM implementations refer to the DSM implemented by using user-level software, OS, programming language, or combination of all or some of them.

| Implementation | Type of Implementation / Cluster configuration | Network | Type of Algorithm | Consistency Model | Granularity Unit | Coherence Policy | SW/HW/Hybrid |

| IVY | User-level library + OS modification | - | MRSW | Sequential | 1 Kbyte | Invalidate | SW |

| Munin | Runtime system + linker + library + preprocessor + OS modifications | - | Type-specific (SRSW, MRSW, MRMW) | Release | Variable size objects | Type-specific (delayed update, invalidate) | SW |

| TreadMarks | User-level | - | MRMW | Lazy release | 4 Kbytes | Update, Invalidate | SW |

| Mirage | OS kernel | - | MRSW | Sequential | 512 bytes | Invalidate | SW |

| Clouds | OS, out of kernel | - | MRSW | Inconsistent, sequential | 8 Kbytes | Discard segment when unlocked | SW |

| Linda | Language | - | MRSW | Sequential | Variable (tuple size) | Implementation- dependent | SW |

| Memnet | Single processor, Memnet device | Token ring | MRSW | Sequential | 32 bytes | Invalidate | HW |

| SCI | Arbitrary | Arbitrary | MRSW | Sequential | 16 bytes | Invalidate | HW |

| KSR1 | 64-bit custom PE, I+D caches, 32M local memory | Ring-based | MRSW | Sequential | 128 bytes | Invalidate | HW |

| RMS | 1-4 processors, caches, 256M local memory | RM bus | MRMW | Processor | 4 bytes | Update | HW |

| Alewife | Sparcle PE, 64K cache, 4M local memory, CMMU | mesh | MRSW | Sequential | 16 Kbytes | Invalidate | Hybrid |

| Flash | MIPS T5, I +D caches, Magic controller | mesh | MRSW | Release | 128 Kbytes | Invalidate | Hybrid |

| Typhoon | SuperSparc, 2-L caches | NP controller | MRSW | Custom | 32 Kbytes | Invalidate custom | Hybrid |

| Shrimp | 16 Pentium PC nodes | Intel Paragon routing network | MRMW | AURC, scope | 4 Kbytes | Update/Invalidate | Hybrid |

Below is an explanation of the main characteristics listed in the DSM classification.

There are three types of DSM algorithm:

- Single Reader/ Single Writer (SRSW)

- central server algorithm - produces long network delays

- migration algorithm - produces thrashing and false sharing

- Multiple Readers/ Single Writer (MRSW) - read replication algorithm. It uses write invalidate. MRSW is the most adopted algorithm.

- Multiple Readers/Multiple Writers (MRMW) - full replication algorithm. It has full concurrency and uses atomic updates.

The consistency model plays a fundamental role in DSM systems. Due to the nature of the distributed systems, memory accesses are constrained in the different consistency models. "A memory consistency model defines the legal ordering of memory references issued by some processor, as observed by other processors." The stricter the consistency model, the higher the access times, but programming is more simplified. Some of the consistency models types are:

- Sequential consistency - all processors see the same ordering of memory references, and these are issued in sequences by the individual processors.

- Processor consistency - the order of writes is observed by every individual processor in the system, but the order of reads does not need to be in sequence.

- Weak consistency - consistency is required only on synchronization accesses.

- Release consistency - divides the synchronization accesses into acquire and release. Normal read and writes for a particular node can be done only after all acquires for that node are finished. Similarly, releases can only be done after all writes and reads are finished.

- Lazy Release consistency - extends the Release consistency, by propagating modifications to the shared data only on the acquire, and of those, only the ones related to critical sections.

- Entry consistency - synchronization is performed at variable level. This increases programming labor but helps with lowering latency and traffic exchanges as only the specific variable needs to be synchronized.

Granularity refers to the unit of data blocks that are managed by the coherence protocols. The unit differs between hardware and software systems, as hardware systems tend to use smaller size blocks than the virtual layer that manages the data in the software systems. The problem with larger size blocks is that the probability for contingency is higher, even when the different processors involved are not accessing the exact same piece of memory, just a part contained in the block size. This is known as false sharing and creates thrashing (memory blocks keep being requested by processors and processors keep waiting for the same memory blocks).

Coherence policy regulates data replication. The coherence policy dictates if the data that is being written at a site should be invalidated or updated at the remote sites. Usually, systems with fine-grain coherence (byte/word) impose the update policy, whereas the systems based on coarse-grain (page) coherence utilize the invalidate policy. This is also known in other parts of the literature as coherence protocol. And the two types of protocols are known as write-invalidate and write-update. The write-invalidate protocol invalidates all the copies except one before writing to it. In contrast, write-update maintains all copies updated.

Cache-Coherent DSM

Cache coherence, arises when different processors cache and update values of the same memory location. Early DSM systems implemented a shared address space where the amount of time required to access a piece of data was related to its location. These systems became known as Non-Uniform Memory Access (NUMA), whereas an SMP type system is known as Uniform Memory Access (UMA) architecture. NUMA architectures were difficult to program in due to potentially significant differences in data access times. SMP architectures dealt with this problem through caching. Protocols were established that ensured prior to writing a location, all other copies of the location (in other caches) were invalidated.Thus, the system allows multiple copies of a memory location to exist when it is being read, but only one copy when it is being written.

Cache-coherent DSM architectures rely on a directory-based cache coherence where an extra directory structure keeps track of all blocks that have been cached by each processor. Since the directory tracks which caches have copies of any given memory block, a coherence protocol can use the directory to maintain a consistent view of memory. A coherence protocol can then establish a consistent view of memory by maintaining state and other information about each cached block. A simple cache coherence protocol can operate with three states for each cache block. These state are Invalid, Shared, and Exclusive. Furthermore, in a cache-coherent DSM machine, the directory is distributed in memory to associate with the cache block it describes in the physical local memory.

As described by Yoon, et al. in 1994 the communication paradigm for their DSM node network relies on a memory hierarchy for each node that places remote memories at the same hierarchy as its own local disk storage. Page faults within a given node that can be resolved within disk storage are handled normally while those that cannot are resolved between node main memory and memory of other nodes. Point to point communication at the node level is supported through message passing, and the specific mechanism for communication is agreed to by all nodes.

Yoon describes a DSM system that generates a shared virtual memory on a per job basis. A configurable shared virtual address space (CSVS) is readied for when a member node receives a job, generates a job identification number and creates an information table in its memory:

JOB_INFORMATION {

status;

number_of_tasks;

number_of_completed_tasks;

*member_list; /*pointer to first member*/

number_of_members;

IO_server;

}

The status refers to the creation of the CSVS and number_of_members and member_list are established through a task distribution process during address space assignment. All tasks associated with the program are tagged with the job_id and requester_id and, following address space assignment, are distributed across the system. The actual CSVS creation occurs when the first task of a job is initiated by a member, who requests the generation of the new CSVS to all other members. Subspace assignment for the SAS model ensues under the specific job_id.

The operating system (OS) or memory management unit (MMU) of each member maintains a copy of the JOB_INFORMATION table which is consulted to identify the default manager when a page fault occurs. When a page fault does occur, the MMU locates the default manager and handles the fault normally. If the page requested is out of its subspace then the virtual address, job_id, and default manager identification are sent to the control unit (CU) to construct a message requesting a page copy. All messages sent through the CSVS must include a virtual address and the job_id, which acts as protection to control access to relevant memory locations. When received at the appropriate member node, the virtual address is translated to a local physical address.

Improvements in communication

Early SAS programming models in DSM environments suffered from poor performance because protection schemes demanded applications to access the network via system calls, significantly increasing latency. Later software systems and network interfaces arose that were able to ensure safety without incurring the time cost of the system calls. Addressing this and other latency sources on both ends of communication were an important goal for projects such as the Virtual memory- mapped communication (VMMC) model that was developed as part of the Shrimp Project.

Protection is achieved in VMMC because the receiver must grant permission before the sender is allowed to transfer data to a receiver defined area of its address space. In this communication scheme, the receiver process exports areas of its address space that will act as receive buffers and sending processes must import the destinations. There is no explicit receive operation in VMMC. Receivers are able to define which senders can import specific buffers and VMMC ensures only receiver buffer space is overwritten. Imported receive buffers are mapped to a destination proxy space which can be implemented as part of the sender's virtual address space and can be translated by VMMC to a receiver, process and memory address. VMMC supports a deliberate update request and will update data sent previously to an imported receive buffer. This transfer occurs directly without receiver CPU interruption.

Implementation of Page Management in Mome, a User-Level DSM

Mome is described as a user-level distributed shared memory. Mome DSM allows heterogeneous processes running on distributed memory architectures and clusters to share data through the memory mapping of segments into their address space. Each parallel process can freely select the consistency model which must be applied to its own view of the shared data. The behavior of Mome has been evaluated in various configurations: high performance computing using the SPMDexecution model, code coupling through segment sharing on clusters, shared data repository or dynamic coupling of parallel codes. Parallel programs share data using the Mome DSM through the mapping of Mome segments on their private address space. A Fortran interface as well as a C interface are available in the current implementation.

Mome Segment creation

Each segment of Mome is referenced using the same unique identifier on all processing nodes supporting the DSM. A new segment is created through a call to the MomeCreateSegment(size)routine. The routine returns a valid segment identifier which can be used for mapping requests by all the computation nodes. A process requests for a mapping between a local memory section and a Mome segment section using the call MomeMMap(Addr, Lg, Prot, Flags, Seg, Offset), which returns the starting address of the mapped region. Each mapping request made by a process is independent and the addresses of the mappings may or may not be consistent on all nodes. If mappings are consistent between processes, however, then pointers may be shared by them. Mome supports strong and weak consistency models, and for any particular page each node is able to dynamically manage its consistency during program execution.

Page Management in Mome

Mome manages pages in a directory based scheme where each page directory maintains the status of six characteristics per page on each node. The page manager acts upon collections of nodes according to these characteristics for each page: V nodes posses the current version, M nodes have a modified version, S nodes want strong consistency, I nodes are invalidated, F nodes have initiated a modification merge and H nodes are a special type of hidden page. A new version of a page is created prior to a constraint violation and before modifications are integrated as a result of a consistency request.

Memory mapping in Mome

The Mome memory mapping figure to the left shows a possible DSM memory organization on a single node. The DSM memory size shown is 22 pages. When a new segment is created on a node a segment descriptor is created on that node. In this case the segment descriptor is 12 pages, with each segment descriptor block corresponding to one page. Each block also contains three DSM memory references for current, modified and next version of pages. The memory organization state shows an application with two mappings, M1 and M2, with segment offsets at 0 and 8. The six pages of M1 are managed by segment descriptor blocks 0 to 5. The descriptor blocks (and application memory) show that pages 1,2 and 5 have no associated memory, while M1 page 0 is mapped to block 6 as a current version and M1 page 3 is mapped to block 13 as a current version, block 8 as a modified version, and has initiated a modifications merge as indicated by the block 17 pointer. The communication layer manages incoming messages from other nodes.

Programming Environment

The globally shared memory abstraction provided through virtual memory or some other DSM mechanism allows programmers to focus on algorithms instead of processor communication and data tracking. Many programming environments have been developed for DSM systems including Rice University's TreadMarks in the 1990s. TreadMarks was a user-level library that ran on top of Unix. Programs were written in C, C++ or Fortran and then compiled and linked with the TreadMarks library.

Shown at right is a pseudocode example of using the TreadMarks API to implement the Jacobi method, a type of partial differential equation solver. The code iterates over a 2D array and updates each element to the average of its four nearest neighbors. All processors are assigned an approximately equivalent number of rows and neighboring processes share boundary rows as is necessary for the calculation. This example shows TreadMarks use of barriers, a technique used for process synchronization. Barriers prevent race conditions. void Tmk_startup(int argc, char **argv) initializes TreadMarks and starts the remote processes. The void Tmk_barrier(unsigned id) call blocks the calling process until every other process arrives at the barrier. In this example, Tmk_barrier(0) guarantees that process 0 completes initialization before any process proceeds, Tmk_barrier(1) guarantees all previous iteration values are read before any current iteration values are written, and Tmk_barrier(2) guarantees all current iteration values are written before any next iteration computation begins.

To the left is shown a short pseudocode program exemplifying another SAS synchronization technique which uses locks. This program calculates the shortest path in a grouping of nodes that starts at any designated start node, visits each

other node once and returns to the origin node. The shortest route identified thus far is stored in the shared Shortest_length

and investigated routes are kept in a queue, most promising at the front, and expanded one node at a time. A process

compares its resulting shortest partial path with Shortest_length, updating if necessary and returns to the queue

to continue its search. Process 0 allocates the shared queue and minimum length. Exclusive access must be established

and maintained to ensure correctness and this is achieved through a lock on the queue and Shortest_length. Each

process acquires the queue lock to identify a promising partial path and releases it upon finding one. When

increasing the Shortest_path a lock is acquired to ensure mutual exclusion to update this shared data as well.

It is often argued that shared address space (SAS) is an easier method of programming. Although quantitative empirical support for such a statement is lacking, there probably is an intuitive basis for this belief among a section of researchers. Jayant DeSouza, et al.[1] indicate that there are distinct programming situations where SAS is an easier programming model whereas there are equally distinct situations where it is not. E.g. when there are data races, shared memory paradigm, which allows for a much larger number of distinguishable interleavings of executions of multiple threads,tends to be a difficult paradigm. In contrast, in a computation such as matrix multiply, where the data in input matrices is only read, and data in the output matrix is only written, is relatively easier to express in SAS. Further, since SAS views all data as uniformly accessible, it does not lend itself to locality-conscious programming, which is needed for efficiency. They suggest that the problems with SAS are due to trying to do everything (i.e. all kinds of information exchange between processes) with SAS. It may be more productive to incorporate SAS as a part of a programming model that also allows private data for each thread, and mechanisms for synchronization and data-exchange such as message-passing or method-invocations. This frees us to support only those SAS access modes that can be efficiently and scalably supported on distributed memory machines including clusters, without being encumbered by having to support a “complete” programming model. Which access modes can be efficiently supported? read-only accesses, write-many accesses (where each location is updated by at most one thread), and accumulate accesses (where multiple threads may update a single location, but only via a well-defined commutative associative operation) seem to be the obvious candidates. Another observation stemming from our application studies is that the access pattern for the same data changes distinctly between computation phases. For example, a matrix multiply operation (C = AxB) may calculate a C matrix in Phase I of the computation (where A and B matrices are accessed in readonly manner, and C is written-only or accumulated), whereas in the phase II, C matrix may be used in a read-only manner while A and B may be updated. These phases may then iterate. This suggests the idea of multi-phase shared arrays.

For each multi-phase shared array(MSA), the programmer specifies its access mode, and may change it between phases. The phases may be separated by array-specific synchronizations such as barrier (as in release consistency). The restricted set of operations simplifies the consistency protocol and traffic associated with that: no invalidations are needed, all writes can be buffered, etc. One of the costs of DSM systems is the long latency on “misses”. Processor virtualization techniques that have been explored in Charm++ and Adaptive MPI (AMPI) allow many user-level (lightweight) threads per processor, which help tolerate such latencies. The MSA abstraction has been implemented as a library in Charm++ and AMPI, and as a language-level feature in a compiler-supported parallel language called Jade. Compiler support simplifies syntax and automates optimizations which have to be done manually by MSA users (such as inserting prefetches).

MSA Description

Conceptually, an MSA is an array of data elements that can be globally accessed in an MIMD program in one of several dynamically chosen global access modes and in units of a user-defined page size. Internally, the MSA array is split up into “pages” of a user-defined size. The page size is user specified for each MSA at the time the MSA is created. The page size is specified as a number of elements and can range from one element to the total number of elements in the array. The access modes are not expected to be fixed for the lifetime of an array, but for a phase of execution of the program. MSA’s are implemented as a templated, object-oriented C++ class. Currently 1D and 2D MSA arrays are supported. The elements of an MSA can be one of the standard C++ built-in types, or a user-defined class with certain operations. The number of elements in the MSA is specified in the constructor when the MSA is created. Currently, an MSA cannot be resized once created. For complicated element types (such as linked lists), a pup() method must be defined by the user for the element type: this is used to pack and unpack the element when pages are shipped around. This allows each element to be a linked list or other variable sized data structure.

Performance

There are numerous studies of the performance of shared memory applications in distributed systems. The vast majority of them use a collection of programs named SPLASH and SPLASH-2.

SPLASH and SPLASH-2

The Stanford ParalleL Applications for Shared memory (SPLASH) is a collection of parallel programs engineered for the evaluation of shared address space machines. These programs have been used by research studies to provide measurements and analysis of different aspects of the emerging DSM architectures at the time. A subsequent suite of programs (SPLASH-2) evolved from the necessity of improving on the SPLASH programs limitations. SPLASH-2 covers a more ample domain of scientific programs, makes use of improved algorithms, and pays more attention to the architecture of the underlying systems.

Selected applications in the SPLASH-2 collections include:

- FFT: a Fast Fourier Transform implementation, in which the data is organized in source and destination matrices so that processors have stored in their local memory a contiguous set of rows. In this application all processors involved communicate among them, sending data to each other, to evaluate a matrix transposition.

- Ocean: calculations of large scale ocean movements simulating eddy currents. For the purpose of calculations, it shows nearest-neighbors accessing patterns in multi-grid formation as opposed to using a single grid.

- LU: matrix decomposition in the product of an upper triangular and lower triangular matrices. LU exhibits a "one-to-many non-personalized communication".

- Barnes: simulates the interaction of a group of particles over time steps.

- Radix sort: integer sorting algorithm. This algorithm implementation displays an example of communication among all the processors involved, and the nature of this communication presents irregular patterns.

Case studies

In 2001, Shan et al. presented a comparison of the performance and programming effort of MP versus SAS running on clusters of Symmetric Memory Processors (SMPs). They highlighted the "automatic management and coherent replication" of the SAS programming model which facilitates the programming tasks in these types of clusters. This study uses MPI/Pro protocol for the MP programming model and GeNIMA SVM protocol (a page-based shared virtual memory protocol) for SAS on a 32 processors system (using a cluster of 8 machines with 4-way SMPs each). The subset of applications used involves regularly structured applications as FFT, Ocean, and LU contrasting with irregular ones as for example RADIX sort, among others.

The complexity of development is represented by the number of code lines per application as shown in the table below this lines. It is observed that SAS complexity is significantly lower than that of MP and this difference increases as applications are more irregular and dynamic in nature (almost doubles for Radix).

| Appl. | FFT | OCEAN | LU | RADIX | SAMPLE | N-BODY |

|---|---|---|---|---|---|---|

| MPI | 222 | 4320 | 470 | 384 | 479 | 1371 |

| SAS | 210 | 2878 | 309 | 201 | 450 | 950 |

The results performance-wise indicated that SAS was only half efficiently dealing with parallelism for most of the applications in this study. The only application that showed similar performance for both methods (MP and SAS) was the LU application. The authors concluded that the overhead of the SVM protocol for maintaining page coherence and synchronization were the handicap of the easier SAS programming model.

In 2004, Iosevich and Schuster performed a study on the choice of memory consistency model in a DSM. The two types of models under study were the sequential consistency (SC) model and the relaxed consistency model, in particular the home-based lazy release consistency (HLRC) protocol. The SC provides a less complex programming model, whereas the HLRC improves on running performance as it allows parallel memory access. Memory consistency models provide a specific set of rules for interfacing with memory.

The authors used a multiview technique to ensure an efficient implementation of SC with fine-grain access to memory. The main advantage of this technique is that by mapping one physical region to several virtual regions, the system avoids fetching the whole content of the physical memory when there is a fault accessing a specific variable located in one of the virtual regions. One step further is the mechanism proposed by Niv and Shuster to dynamically change the granularity during runtime. For this SC(with multiview MV), only all page replicas need to be tracked, whereas for HLRC the tracking needed is much more complex. The advantage of HLRC is that write faults on read only pages are local, therefore there is a lower cost for these operations.

This table summarizes the characteristics of the benchmark applications used for the measurements. In the Synch column, B represents barriers and L locks.

| Application | Input data set | Shared memory | Sharing granularity | Synch | Allocation pattern |

|---|---|---|---|---|---|

| Water-nsq | 8000 molecules | 5.35MB | a molecule (672B) | B, L | fine |

| Water-sp | 8000 molecules | 10.15MB | a molecule (680B) | B, L | fine |

| LU | 3072 × 3072 | 72.10MB | block (coarse) | B | coarse |

| FFT | 2^20 numbers | 48.25MB | a row segment | B | coarse |

| TSP | A graph of 32 cities | 27.86MB | a tour (276B) | L | fine |

| SOR | 2066 × 10240 | 80.73MB | a row (coarse) | B | coarse |

| Barnes-sp | 32768 bodies | 41.21MB | body fields (4-32B) | B, L | fine |

| Radix | 10240000 keys | 82.73MB | an integer (4B) | B, L | coarse |

| Volrend | a file -head.den- | 29.34MB | a 4 × 4 box (4B) | B, L | fine |

| Ocean | a 514 × 514 grid | 94.75MB | grid point (8B) | B, L | coarse |

| NBody | 32768 bodies | 2.00MB | a body (64B) | B | fine |

| NBodyW | 32768 bodies | 2.00MB | a body (64B) | B | fine |

The authors found that the average speedup of the HLRC protocol is 5.97, and the average speedup of the SC(with MV) protocol is 4.5 if non-optimized allocation is used, and 6.2 if the granularity is changed dynamically.

In 2008, Roy and Chaudhary compared the communication requirements of three different page-based DSM systems (CVM, Quarks, and Strings) that use virtual memory mechanisms to trap accesses to shared areas. Their study was also based on the SPLASH-2 suite of programs.

In their experiments, Quarks was running tasks on separate processes (it does not support more than one application thread per process) while CVM and Strings were run with multiple application threads.

| Program | CVM | Quarks | Strings |

|---|---|---|---|

| FFT | 1290 | 2419 | 1894 |

| LU-c | 135 | - | 485 |

| LU-n | 385 | 2873 | 407 |

| OCEAN-c | 1955 | 15475 | 6676 |

| WATER-n2 | 2253 | 38438 | 10032 |

| WATER-sp | 905 | 7568 | 1998 |

| MATMULT | 290 | 1307 | 645 |

| SOR | 247 | 7236 | 934 |

The results indicate that Quarks generates a large quantity of messages in comparison with the other two systems. This can be observed in the table above. LU-c (contiguous version of LU) does not have a result for Quarks, as it was not possible to obtain results for the number of tasks (16) used in the experiment. This was due to the excessive number of collisions. In general, due to the lock related traffic, the performance of Quarks is quite low when compared to the other two systems for many of the application programs tested. CVM improves on the lock management by allowing out of order access through a centralized lock manager. This makes the locking times for CVM much smaller than for others. Nevertheless, the best performer is Strings. It wins in overall performance over the two other system compared, but there is room for improvement here too, as it was observed that the locking times in Strings are an elevated percentage of the overall computation.

The overall conclusion is that using multiple threads per node and optimized locking mechanisms (CVM-type) provides the best performance.

Evolution Over Time

A more recent version of a distributed shared memory system is vNUMA. Chapman and Heiser, describe vNUMA (where v is for virtual and NUMA is for Non Uniform Memory Access) as "a virtual machine that presents a cluster as a virtual shared-memory multiprocessor." The virtualization in vNUMA is a layer between the real CPUs that form the distributed shared memory system and the OS that runs on top it, usually Linux. The DSM in vNUMA is part of the hypervisor, which is the part of a virtual system that maps guest virtual addresses into real physical ones. The guest virtual addresses get then mapped into virtual memory addresses through the guest OS.

The difference with other virtual machines is that vNUMA runs one OS on top of several physical machines, whereas virtual machines often run several guest OS on top of one host OS that runs on one machine. And it uses DSM software techniques to present all the virtualized memory as a whole.

The DSM in vNUMA is a single-writer/multiple-reader write-invalidate protocol with sequential coherence. It is based on the IVY DSM, but it introduces several improvements to increase performance. The owner of a page can determine if the page needs to be sent by looking at the copyset (contains information of the set of nodes that maintain a copy of the page), avoiding several page faults and the manager becomes the owner of the copyset as soon as it is part of it. There are a couple other improvements: incremental deterministic merging and write-update-plus (WU+). Incremental deterministic merging uses sequence numbers to ensure that a location gets updated with the latest value and not intermediate, out of order writes. Write-update-plus (WU+) enforces single-writer for pages where atomic operations are done. vNUMA dynamically changes from multiple-writer to single-writer when atomic operations are detected.

In vNUMA what it is and why it matters, VMware presents vNUMA as part of vSphere, a virtualization platform oriented to build cloud computing frameworks.

See also

- Performance and Productivity Breakthroughs with Very Large Coherent Shared Memory: The SGI® UV Architecture SGI white paper.

- Versatile SMP (vSMP) Architecture

- Adrian Moga, Michel Dubois, "A comparative evaluation of hybrid distributed shared-memory systems," Journal of Systems Architecture, Volume 55, Issue 1, January 2009, Pages 43-52

- Jinbing Peng; Xiang Long; Limin Xiao; , "DVMM: A Distributed VMM for Supporting Single System Image on Clusters," Young Computer Scientists, 2008. ICYCS 2008. The 9th International Conference for , vol., no., pp.183-188, 18-21 Nov. 2008

References

- Shan, H.; Singh, J.P.; Oliker, L.; Biswas, R.; , "Message passing vs. shared address space on a cluster of SMPs," Parallel and Distributed Processing Symposium., Proceedings 15th International , vol., no., pp.8 pp., Apr 2001

- Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996

- Chandola, V. , "Design Issues in Implementation of Distributed Shared Memory in User Space,"

- Nitzberg, B.; Lo, V. , "Distributed Shared Memory: A Survey of Issues and Algorithms"

- Steven Cameron Woo , Moriyoshi Ohara , Evan Torrie , Jaswinder Pal Singh , Anoop Gupta, "The SPLASH-2 programs: characterization and methodological considerations," Proceedings of the 22nd annual international symposium on Computer architecture, p.24-36, June 22-24, 1995, S. Margherita Ligure, Italy

- Jegou, Y. , Implementation of page management in Mome, a user-level DSM Cluster Computing and the Grid, 2003. Proceedings. CCGrid 2003. 3rd IEEE/ACM International Symposium on, p.479-486, 21 May 2003, IRISA/INRIA, France

- Hennessy, J.; Heinrich, M.; Gupta, A.; , "Cache-Coherent Distributed Shared Memory: Perspectives on Its Development and Future Chanllenges," Proceedings of the IEEE, Volume: 87 Issue:3, pp.418 - 429, Mar 1999, Comput. Syst. Lab., Stanford Univ., CA

- J. Protic, M. Tomasevic, and V. Milutinovic, An Overview of Distributed Shared Memory

- Yoon, M.; Malek, M.; , "Configurable Shared Virtual Memory for Parallel Computing" University of Texas Technical Report tr94-21, July 15 1994, Department of Electrical and Computer Engineering, The University of Texas at Austin

- Dubnicki, C.; Iftode, L.; Felten, E.W.; Kai Li; , "Software Support for Virtual Memory-Mapped Communication" Parallel Processing Symposium, 1996., Proceedings of IPPS '96, The 10th International, pp.372 - 381, 15-19 Apr 1996, Dept. of Comput. Sci., Princeton Univ., NJ

- Dubnicki, C.; Bilas, A.; Li, K.; Philbin, J.; , "Design and Implementation of Virtual Memory-Mapped Communication on Myrinet" Parallel Processing Symposium, 1997. Proceedings., 11th International, pp.388 - 396, 1-5 Apr 1997, Princeton Univ., NJ

- Kranz, D.; Johnson, K.; Agarwal, A.; Kubiatowicz, J.; Lim, B.; , "Integrating Message-Passing and Shared-Memory: Early Experience" PPOPP '93 Proceedings of the fourth ACM SIGPLAN symposium on Principles and practice of parallel programming, pp.54 - 63

- Amza, C.; Cox, A.L.; Dwarkadas, S.; Keleher, P.; Honghui Lu; Rajamony, R.; Weimin Yu; Zwaenepoel, W.; , "TreadMarks: shared memory computing on networks of workstations" Computer, Volume: 29 Issue:2, pp. 18 - 28, Feb 1996, Dept. of Comput. Sci., Rice Univ., Houston, TX

- David R. Cheriton. 1985. Preliminary thoughts on problem-oriented shared memory: a decentralized approach to distributed systems. SIGOPS Oper. Syst. Rev. 19, 4 (October 1985), 26-33.

- Matthew Chapman and Gernot Heiser. 2009. vNUMA: a virtual shared-memory multiprocessor. In Proceedings of the 2009 conference on USENIX Annual technical conference (USENIX'09). USENIX Association, Berkeley, CA, USA, 2-2.

- Jayant DeSouza and Laxmikant V. Kal´e. [2]

Quiz

The memory hierarchy described for the CSVS system places remote memories:

- Between main memory and local disk storage

- Same hierarchy as local disk storage

- Below local disk storage

When messages are sent by the OS to retrieve non-local data the virtual address of the retrieved data is translated to physical:

- At the origin of the message, i.e. where the page fault occurs

- By the DSM system default manager

- At the location where the desired page resides

DSM nodes

- partially map variable amounts of their memory to the distributed address space

- are configured to supply a contiguous and fixed amount of memory to the distributed address space

- utilize I/O to access the entirely non-local distributed address space

The SAS programming model: