CSC/ECE 506 Spring 2013/10a os: Difference between revisions

No edit summary |

No edit summary |

||

| Line 7: | Line 7: | ||

== '''Prefetching'''== | == '''Prefetching'''== | ||

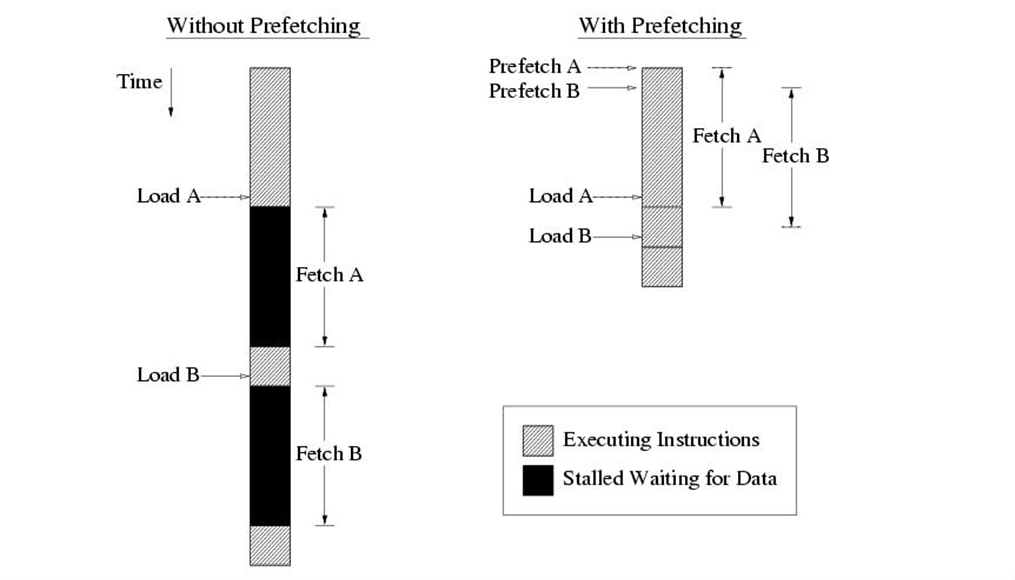

Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based. | Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based. | ||

[[ | [[Image:Prefetching.png]] | ||

=References= | =References= | ||

<references/> | <references/> | ||

Revision as of 00:29, 2 April 2013

Prefetching and Memory Consistency Models

Cache Misses

Cache misses can be classified into four categories: conflict, compulsory, capacity [3], and coherence. Conflict misses are misses that would not occur if the cache were fully associative with LRU replacement. Compulsory misses are misses that occur in any cache organization because they are the first references to an instruction or piece of data. Capacity misses occur when the cache is too small to hold data between successive references to it. Coherence misses are misses caused by invalidations issued to preserve multiprocessor cache consistency. The number of compulsory and capacity misses can be reduced by prefetching, for example by increasing the cache line size or by fetching blocks ahead of time.
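The first three categories can be illustrated with a small trace-driven sketch. It is not taken from the course material: the function name `classify_misses`, the choice of a direct-mapped cache as the "real" cache, and the block-number trace format are all assumptions made for illustration. Coherence misses are left out because the sketch models a single processor. A miss is counted as compulsory on the first reference to a block, as conflict if a fully associative LRU cache of the same size would have hit, and as capacity otherwise.

```python
from collections import OrderedDict


def classify_misses(block_trace, num_lines):
    """Classify misses of a hypothetical direct-mapped cache with num_lines lines.

    block_trace is a sequence of integer block numbers (an assumed format).
    """
    seen = set()                 # blocks referenced at least once so far
    fa_lru = OrderedDict()       # reference cache: fully associative, LRU, same size
    direct = [None] * num_lines  # modelled cache: direct-mapped, one block per line
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}

    for block in block_trace:
        # Look the block up in the fully associative LRU reference cache.
        fa_hit = block in fa_lru
        if fa_hit:
            fa_lru.move_to_end(block)       # mark as most recently used
        else:
            if len(fa_lru) >= num_lines:
                fa_lru.popitem(last=False)  # evict the least recently used block
            fa_lru[block] = True

        # Look the block up in the direct-mapped cache.
        index = block % num_lines
        dm_hit = direct[index] == block
        direct[index] = block

        if dm_hit:
            counts["hit"] += 1
        elif block not in seen:
            counts["compulsory"] += 1   # first reference to this block
        elif fa_hit:
            counts["conflict"] += 1     # full associativity would have avoided it
        else:
            counts["capacity"] += 1     # even a fully associative cache is too small
        seen.add(block)

    return counts
```

For example, with four lines the trace `[0, 4, 0, 4]` maps both blocks to the same line, so the two repeat references are conflict misses, while in `[0, 1, 2, 3, 4, 0]` the final reference to block 0 is a capacity miss because five distinct blocks do not fit in four lines.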

Prefetching

Prefetching is a common technique to reduce the read miss penalty. It relies on predicting which blocks currently missing from the cache will be read in the future, and on bringing these blocks into the cache before the reference that would otherwise trigger the miss. Prefetching approaches proposed in the literature are either software-based or hardware-based.
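The effect of predicting and fetching ahead can be sketched with a toy simulation. The predictor used here (on a miss to block b, also fetch block b + 1) is only one simple possibility chosen for illustration, not a scheme described in this article. The function name `count_misses` and the fully associative LRU cache are likewise assumptions. The sketch also assumes every prefetch completes before the block is needed, which real systems cannot guarantee.

```python
from collections import OrderedDict


def count_misses(block_trace, num_lines, prefetch_next=False):
    """Count demand misses in a hypothetical fully associative LRU cache.

    If prefetch_next is True, each demand miss on block b also brings in
    block b + 1 (an assumed, deliberately naive predictor).
    """
    cache = OrderedDict()  # keys are resident block numbers, ordered by recency

    def install(block):
        # Bring a block into the cache, evicting the LRU block if the cache is full.
        if block in cache:
            cache.move_to_end(block)
            return
        if len(cache) >= num_lines:
            cache.popitem(last=False)
        cache[block] = True

    misses = 0
    for block in block_trace:
        if block in cache:
            cache.move_to_end(block)   # hit: refresh recency
        else:
            misses += 1                # demand miss
            install(block)
            if prefetch_next:
                install(block + 1)     # prefetch the predicted next block
    return misses
```

On a purely sequential trace such as `range(8)` with four lines, every reference misses without prefetching, while next-block prefetching turns every second reference into a hit. On a trace with no sequential pattern, the same predictor fetches blocks that are never used and can evict useful ones, which is why prediction accuracy matters.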

References

<references/>